Inattentive Driving Detection Using Body-Worn Sensors: Feasibility Study

Abstract

1. Introduction

2. Overview of the Proposed System

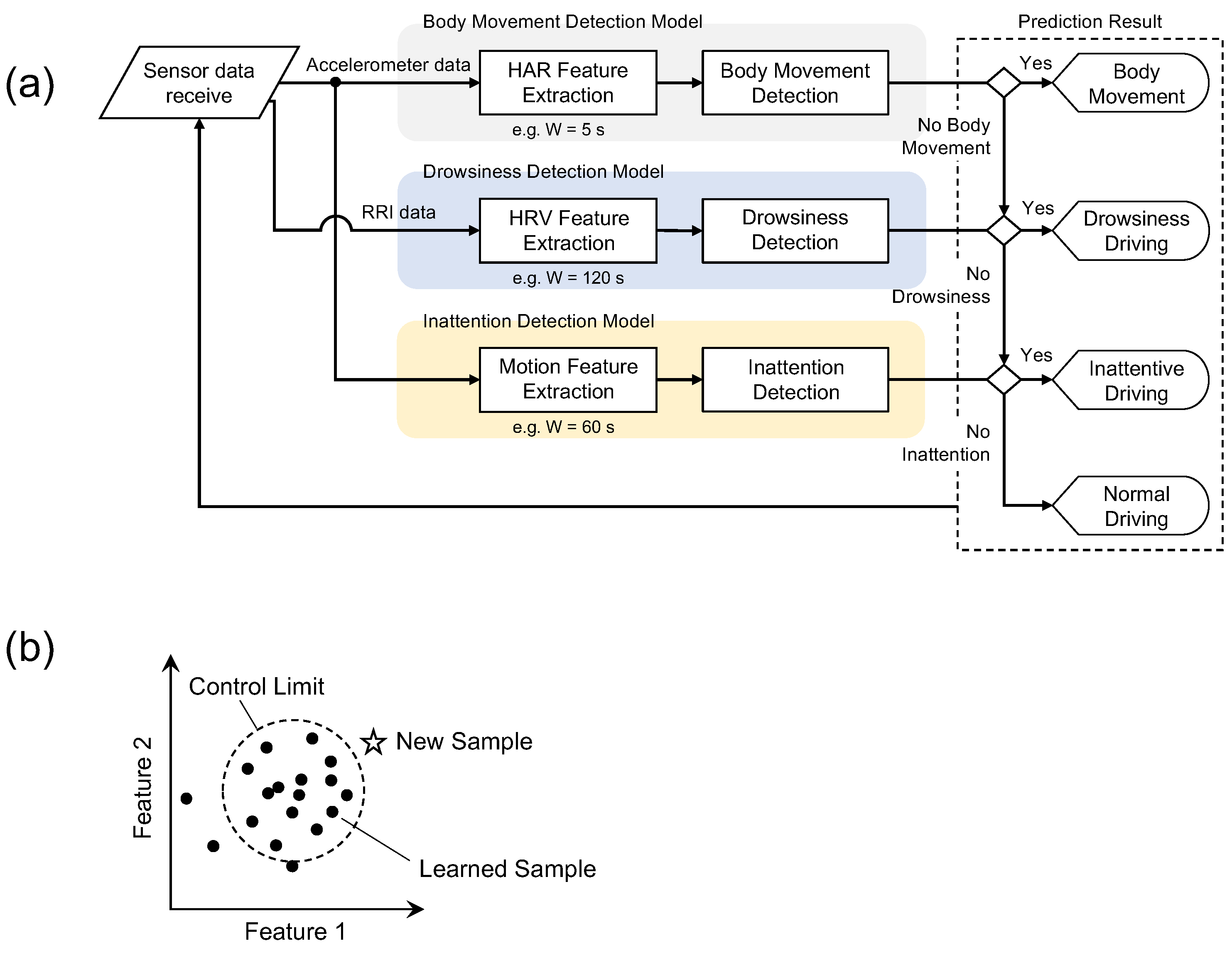

2.1. Detection Algorithm

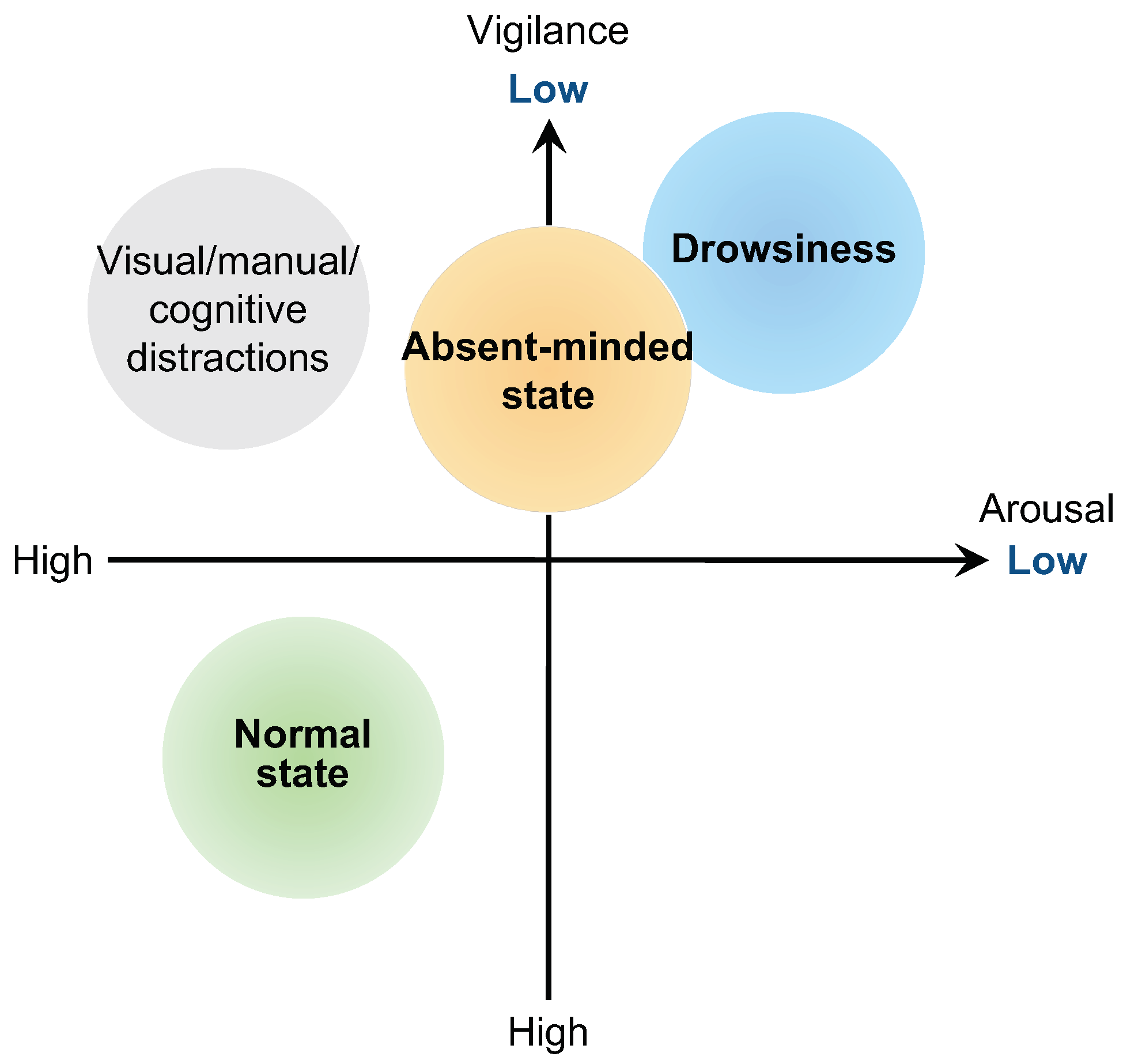

- Body Movement Detection: Based on the anomaly detection approach, this model classifies wrist movements during monotonous steering as non-anomalous (i.e., “no body movement”) and other, larger body movements, such as changing hand position or releasing the steering wheel, as anomalous. Wrist-movement features such as the mean and variance of acceleration, which are widely used in Human Activity Recognition (HAR) [29,30,31], are computed from accelerometer data and used as input to the model. If the result is “no body movement”, the drowsiness detection model is applied next.

- Drowsiness Detection: Based on the anomaly detection approach, this model classifies a state with a normal arousal level as non-anomalous (i.e., “no drowsiness”) and a decrease in arousal from the normal state as anomalous. Heart rate variability (HRV) features, widely used as indicators of autonomic nervous system activity, are computed from RR interval (RRI) data and used as input to the model. The RR interval is the time between successive R waves, the positive peaks of the ECG waveform, as shown in Figure 1. If the result is “no drowsiness”, the inattention detection model is applied next.

- Inattention Detection: Based on the anomaly detection approach, this model classifies wrist movements during monotonous steering as non-anomalous (i.e., “no inattention”) and wrist movements during monotonous steering in a state of reduced vigilance as anomalous. The motion features described in Section 2.2.2 are computed from accelerometer data to capture fine-grained changes in wrist movement and are used as input to the model. If the result is “no inattention”, the driver is classified as being in the “normal state”.
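The three-stage cascade described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the detector callables (`is_body_movement`, `is_drowsy`, `is_inattentive`) are hypothetical stand-ins for the three anomaly-detection models, and the thresholds in the usage example are arbitrary.

```python
from enum import Enum
from typing import Callable, Sequence


class DriverState(Enum):
    BODY_MOVEMENT = "body movement"
    DROWSINESS = "drowsiness"
    INATTENTION = "inattention"
    NORMAL = "normal"


# A detector returns True when its feature vector is judged anomalous.
# All three detectors passed in below are hypothetical placeholders.
Detector = Callable[[Sequence[float]], bool]


def classify_window(
    body_features: Sequence[float],
    hrv_features: Sequence[float],
    motion_features: Sequence[float],
    is_body_movement: Detector,
    is_drowsy: Detector,
    is_inattentive: Detector,
) -> DriverState:
    """Run the three models in order; each later model is consulted only
    when every earlier model reports 'no anomaly'."""
    if is_body_movement(body_features):
        return DriverState.BODY_MOVEMENT
    if is_drowsy(hrv_features):
        return DriverState.DROWSINESS
    if is_inattentive(motion_features):
        return DriverState.INATTENTION
    return DriverState.NORMAL


if __name__ == "__main__":
    # Toy threshold detectors with arbitrary, illustrative thresholds.
    body = lambda f: f[1] > 0.5     # e.g., acceleration variance
    drowsy = lambda f: f[0] > 1.1   # e.g., mean RRI in seconds
    inatt = lambda f: f[2] > 4.0    # e.g., kurtosis of sub-window differences
    state = classify_window([0.0, 0.1], [0.9], [0.0, 0.0, 5.0], body, drowsy, inatt)
    print(state.value)  # inattention
```

Ordering the checks this way means a large body movement short-circuits the HRV and fine-motion checks, which matches the description that each later model runs only after the previous one reports no anomaly.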

2.2. Feature Extraction

2.2.1. Heart Rate Variability Features
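Section 2.1 states that HRV features are computed from RRI data. As a hedged illustration only, the sketch below derives RR intervals from R-peak times and computes three standard time-domain HRV indices (mean RRI, SDNN, RMSSD); whether these particular indices belong to the feature set used in this study is an assumption. The function names and the use of the sample standard deviation (n - 1) for SDNN are our own choices.

```python
import math
from typing import Sequence


def rr_intervals(r_peak_times_s: Sequence[float]) -> list[float]:
    """RR intervals in seconds: the time between successive R-wave peaks."""
    return [t1 - t0 for t0, t1 in zip(r_peak_times_s, r_peak_times_s[1:])]


def time_domain_hrv(rri_s: Sequence[float]) -> dict[str, float]:
    """Common time-domain HRV indices computed from a window of RR intervals."""
    n = len(rri_s)
    if n < 2:
        raise ValueError("need at least two RR intervals")
    mean_rri = sum(rri_s) / n
    # SDNN: standard deviation of the RR intervals (sample estimate, n - 1).
    sdnn = math.sqrt(sum((x - mean_rri) ** 2 for x in rri_s) / (n - 1))
    # RMSSD: root mean square of successive RR-interval differences.
    diffs = [b - a for a, b in zip(rri_s, rri_s[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return {
        "mean_rri_s": mean_rri,
        "sdnn_s": sdnn,
        "rmssd_s": rmssd,
        "mean_hr_bpm": 60.0 / mean_rri,
    }


if __name__ == "__main__":
    peaks = [0.0, 0.8, 1.6, 2.5, 3.3]  # toy R-peak times in seconds
    rri = rr_intervals(peaks)          # approximately [0.8, 0.8, 0.9, 0.8]
    print(time_domain_hrv(rri))
```

In practice such indices would be recomputed over a moving window of RR intervals so that each window yields one feature vector for the drowsiness model.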

2.2.2. Motion Features

- Consider a subsequence extracted by a sliding window [34] of length $W$ [s] from the acceleration signal of the $j$th axis.

- Divide the subsequence into $N$ sub-windows and compute the difference in average amplitude between adjacent sub-windows:
$$d_i = \bar{a}_{i+1} - \bar{a}_i, \quad i = 1, \dots, N-1,$$
where $\bar{a}_i$ is the average amplitude of the acceleration signal in the $i$th sub-window.

- Compute a histogram of the differences $d_i$ and use its statistics as the motion features. In this study, we use three statistics to describe the shape of the distribution: variance, skewness, and kurtosis. These three motion features (the variance, skewness, and kurtosis of the difference values within the subsequence) were chosen experimentally because this combination provided the best overall estimation performance.
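The motion-feature steps above can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the authors' code: the sampling rate `fs`, the window step `step_s`, and the number of sub-windows `n_sub` are hypothetical parameters whose values are not given in this section; "average amplitude" is taken to be the arithmetic mean of the samples in each sub-window; the differences are taken as later-minus-earlier sub-window means; and kurtosis is reported as excess kurtosis (0 for a normal distribution), using population (1/n) moments throughout.

```python
import math
from typing import Sequence


def sliding_windows(signal: Sequence[float], fs: float, window_s: float,
                    step_s: float) -> list[list[float]]:
    """Cut one acceleration axis into windows of window_s seconds (W),
    advancing by step_s seconds; fs is the sampling rate in Hz."""
    w = int(round(window_s * fs))
    step = int(round(step_s * fs))
    if w <= 0 or step <= 0:
        raise ValueError("window and step must span at least one sample")
    return [list(signal[s:s + w]) for s in range(0, len(signal) - w + 1, step)]


def subwindow_differences(window: Sequence[float], n_sub: int) -> list[float]:
    """Differences between the means of adjacent sub-windows (later minus earlier).
    Trailing samples that do not fill a whole sub-window are dropped."""
    size = len(window) // n_sub
    if n_sub < 2 or size == 0:
        raise ValueError("need at least two non-empty sub-windows")
    means = [sum(window[k * size:(k + 1) * size]) / size for k in range(n_sub)]
    return [b - a for a, b in zip(means, means[1:])]


def shape_statistics(values: Sequence[float]) -> tuple[float, float, float]:
    """Variance, skewness and excess kurtosis from population moments."""
    n = len(values)
    mu = sum(values) / n
    m2 = sum((v - mu) ** 2 for v in values) / n
    m3 = sum((v - mu) ** 3 for v in values) / n
    m4 = sum((v - mu) ** 4 for v in values) / n
    if m2 == 0:
        return 0.0, math.nan, math.nan  # shape is undefined for constant input
    return m2, m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def motion_features(window: Sequence[float], n_sub: int) -> tuple[float, float, float]:
    """Motion features of one window: shape statistics of the sub-window differences."""
    return shape_statistics(subwindow_differences(window, n_sub))


if __name__ == "__main__":
    # Toy 10-sample window split into 5 sub-windows of 2 samples each.
    window = [0, 0, 1, 1, 3, 3, 6, 6, 10, 10]
    print(subwindow_differences(window, 5))  # [1.0, 2.0, 3.0, 4.0]
    print(motion_features(window, 5))
```

Only the sign of the skewness depends on the difference convention; variance and kurtosis are unchanged if the differences are taken the other way round.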

2.3. Multivariate Statistical Process Control Model
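Section 2.1 describes each model as an anomaly detector built from the normal state, and this section names multivariate statistical process control (MSPC) as the modeling framework. As a hedged, simplified illustration of that idea (not the model specified in this paper), the sketch below fits a Hotelling $T^2$ monitor to feature vectors from the normal state and flags a new vector as anomalous when its $T^2$ exceeds a control limit. Two simplifications are assumptions: the control limit is the empirical 99th percentile of the training $T^2$ values, and only $T^2$ is monitored, whereas PCA-based MSPC commonly also monitors the $Q$ (squared prediction error) statistic. The class and method names are hypothetical.

```python
import math
from typing import Sequence


def _solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


class HotellingT2Monitor:
    """Anomaly detector fitted on feature vectors from the normal state only."""

    def __init__(self, normal: Sequence[Sequence[float]], quantile: float = 0.99):
        n, p = len(normal), len(normal[0])
        self.mean = [sum(x[j] for x in normal) / n for j in range(p)]
        # Sample covariance matrix of the normal-state features.
        self.cov = [[sum((x[i] - self.mean[i]) * (x[j] - self.mean[j]) for x in normal) / (n - 1)
                     for j in range(p)] for i in range(p)]
        # Control limit: empirical quantile of the training T^2 values (assumption).
        scores = sorted(self.t2(x) for x in normal)
        self.limit = scores[min(n - 1, math.ceil(quantile * n) - 1)]

    def t2(self, x: Sequence[float]) -> float:
        """Hotelling T^2 = (x - mean)^T S^{-1} (x - mean)."""
        d = [xi - mi for xi, mi in zip(x, self.mean)]
        return sum(di * si for di, si in zip(d, _solve(self.cov, d)))

    def is_anomaly(self, x: Sequence[float]) -> bool:
        return self.t2(x) > self.limit


if __name__ == "__main__":
    normal = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0],
              [0.5, 0.5], [0.2, 0.8], [0.8, 0.2], [0.5, 0.1]]
    monitor = HotellingT2Monitor(normal)
    print(monitor.is_anomaly([0.5, 0.5]), monitor.is_anomaly([10.0, 10.0]))  # False True
```

Because the detector is fitted on normal-state data only, no labeled examples of drowsiness or inattention are needed to train it, which is the main appeal of the anomaly detection approach described in Section 2.1.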

3. Experiment

3.1. Participants

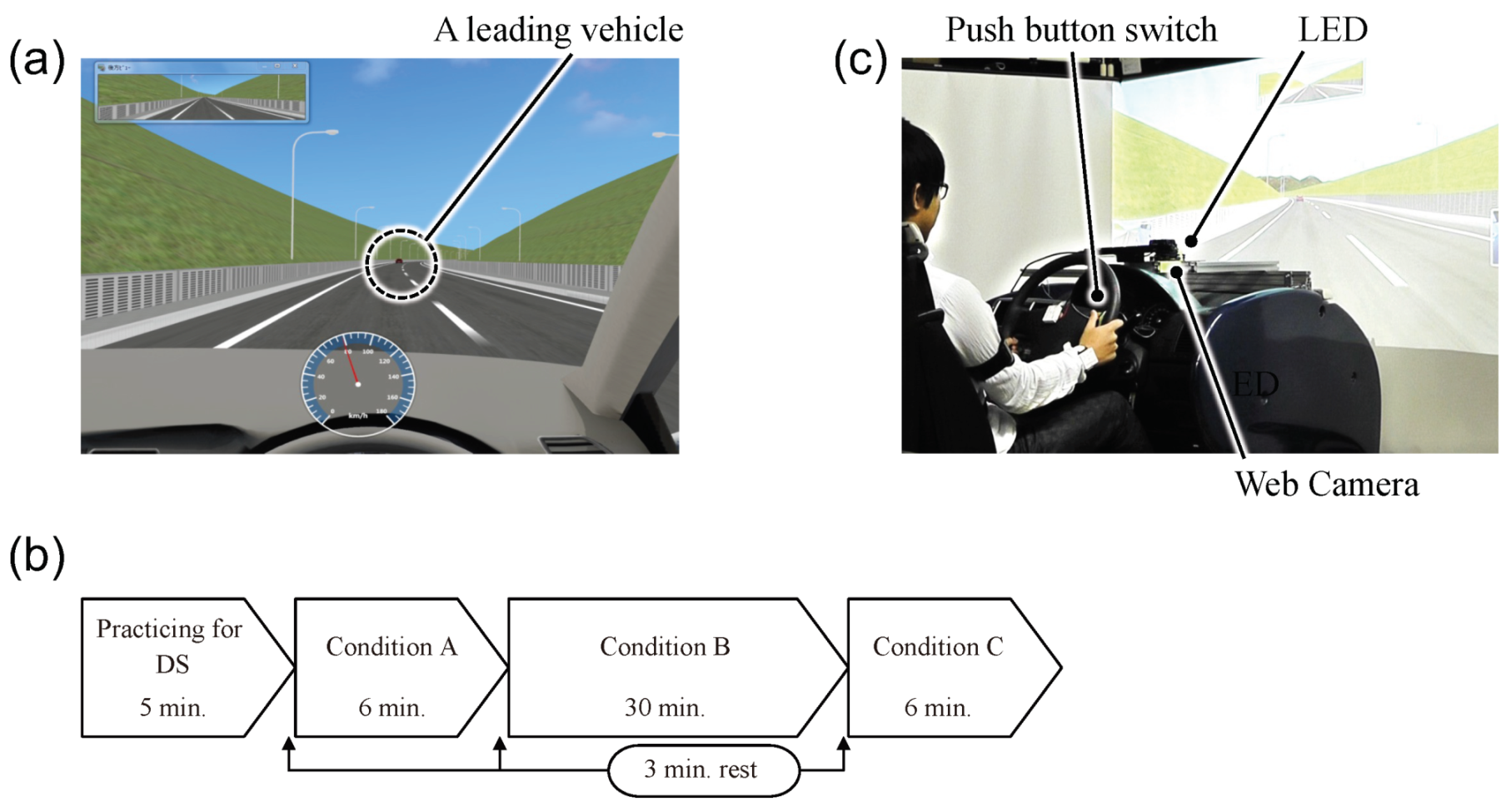

3.2. Experimental Design and Procedures

3.3. Annotation of Driver State

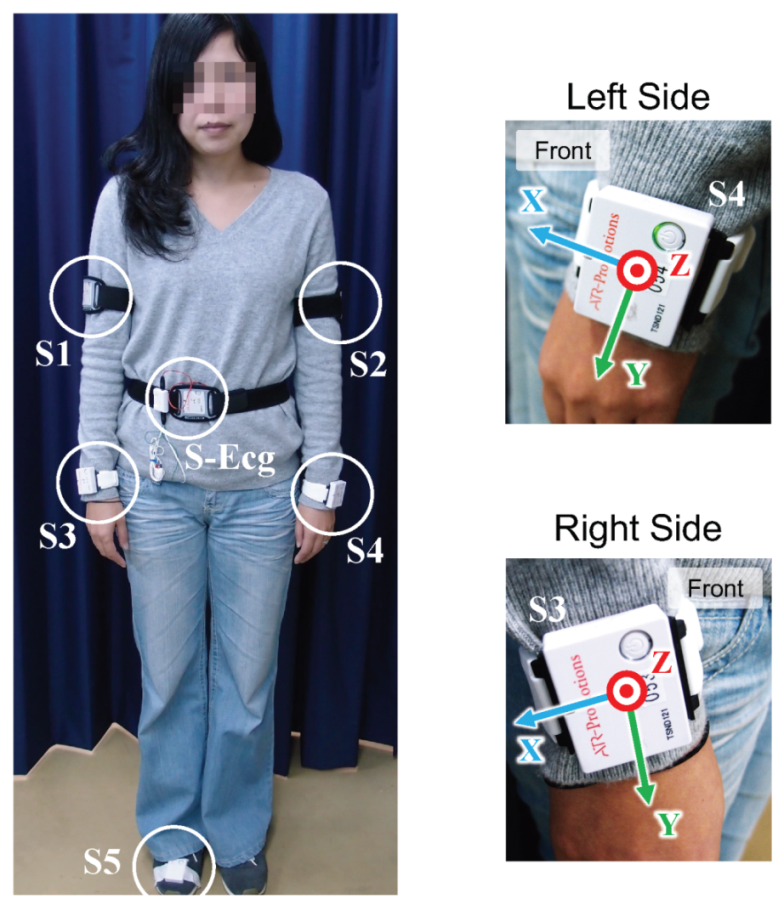

3.4. Data Acquisition

4. Results and Discussions

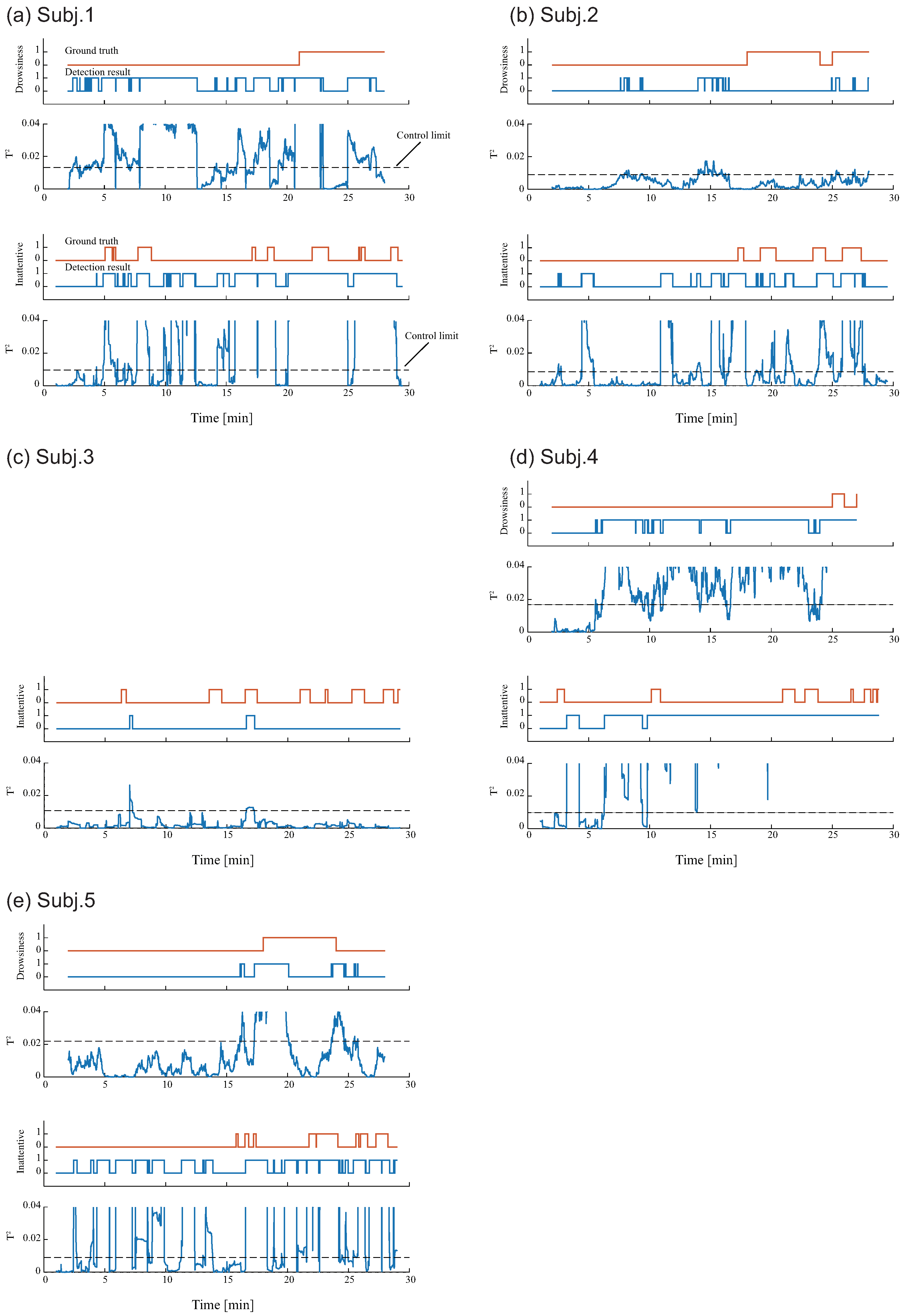

4.1. Drowsiness Detection Model

4.2. Inattention Detection Model

4.3. Detection Results

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Japanese National Police Agency. Report of Traffic Accident Statistics. Available online: https://www.e-stat.go.jp/stat-search/files?page=1&layout=datalist&lid=000001202708 (accessed on 18 October 2021). (In Japanese).

2. Boer, E.R.; Liu, A. Behavioral Entropy as an Index of Workload. In Proceedings of the 44th Annual Meeting of the Human Factors and Ergonomics Society, San Diego, CA, USA, 30 July–4 August 2000; Volume 44, pp. 125–128.

3. Kume, T.; Naito, T.; Ishida, K.; Kawai, S.; Matsunaga, S.; Nishii, K.; Kitajima, H. Development of Absentminded State Detection and Resolution Methods Using Vehicle Equipments. Trans. Soc. Automot. Eng. Jpn. 2014, 45, 567–572.

4. Saito, Y.; Itoh, M.; Inagaki, T. Driver Assistance System with a Dual Control Scheme: Effectiveness of Identifying Driver Drowsiness and Preventing Lane Departure Accidents. IEEE Trans. Human-Mach. Syst. 2016, 46, 660–671.

5. Arefnezhad, S.; Samiee, S.; Eichberger, A.; Nahvi, A. Driver Drowsiness Detection Based on Steering Wheel Data Applying Adaptive Neuro-Fuzzy Feature Selection. Sensors 2019, 19, 943.

6. Akhtar, Z.U.A.; Wang, H. WiFi-Based Driver’s Activity Monitoring with Efficient Computation of Radio-Image Features. Sensors 2020, 20, 1381.

7. Jeon, Y.; Kim, B.; Baek, Y. Ensemble CNN to Detect Drowsy Driving with In-Vehicle Sensor Data. Sensors 2021, 21, 2372.

8. Omi, T. Detecting Drowsiness with the Driver Status Monitor’s Visual Sensing. Denso Tech. Rev. 2016, 21, 93–102.

9. Bergasa, L.M.; Nuevo, J.; Sotelo, M.A.; Barea, R.; Lopez, M.E. Real-Time System for Monitoring Driver Vigilance. IEEE Trans. Intell. Transp. Syst. 2006, 7, 63–77.

10. Dinges, D.F.; Grace, R. PERCLOS: A Valid Psychophysiological Measure of Alertness as Assessed by Psychomotor Vigilance; FHWA-MCRT-98-006; Federal Highway Administration: Washington, DC, USA, 1998.

11. Mbouna, R.O.; Kong, S.G.; Chun, M.G. Visual Analysis of Eye State and Head Pose for Driver Alertness Monitoring. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1462–1469.

12. Naurois, C.J.; Bourdin, C.; Stratulat, A.; Diaz, E.; Vercher, J.L. Detection and Prediction of Driver Drowsiness Using Artificial Neural Network Models. Accid. Anal. Prev. 2019, 126, 95–104.

13. Zhang, X.; Wang, X.; Yang, X.; Xue, C.; Zhu, X.; Wei, J. Driver Drowsiness Detection Using Mixed-effect Ordered Logit Model Considering Time Cumulative Effect. Anal. Methods Accid. Res. 2020, 26, 100114.

14. Tamanani, R.; Muresan, R.; Al-Dweik, A. Estimation of Driver Vigilance Status Using Real-Time Facial Expression and Deep Learning. IEEE Sens. Lett. 2021, 5, 6000904.

15. Abe, E.; Fujiwara, K.; Hiraoka, T.; Yamakawa, T.; Kano, M. Development of Drowsiness Detection Method by Integrating Heart Rate Variability Analysis and Multivariate Statistical Process Control. SICE J. Control Meas. Syst. Integr. 2016, 9, 10–17.

16. Fujiwara, K.; Abe, E.; Kamata, K.; Nakayama, C.; Suzuki, Y.; Yamakawa, T.; Hiraoka, T.; Kano, M.; Sumi, Y.; Masuda, F.; et al. Heart Rate Variability-based Driver Drowsiness Detection and Its Validation with EEG. IEEE Trans. Biomed. Eng. 2019, 66, 1769–1778.

17. Iwamoto, H.; Hori, K.; Fujiwara, K.; Kano, M. Real-driving-implementable Drowsy Driving Detection Method Using Heart Rate Variability Based on Long Short-term Memory and Autoencoder. IFAC-PapersOnLine 2021, 54, 526–531.

18. Guo, Z.; Pan, Y.; Zhao, G.; Cao, S.; Zhang, J. Detection of Driver Vigilance Level Using EEG Signals and Driving Contexts. IEEE Trans. Reliab. 2018, 67, 370–380.

19. Arif, S.; Arif, M.; Munawar, S.; Ayaz, Y.; Khan, M.J.; Naseer, N. EEG Spectral Comparison Between Occipital and Prefrontal Cortices for Early Detection of Driver Drowsiness. In Proceedings of the 2021 International Conference on Artificial Intelligence and Mechatronics Systems (AIMS), Jakarta, Indonesia, 28–30 April 2021; pp. 1–6.

20. Roge, J.; Pebayle, T.; Muzet, A. Variations of the Level of Vigilance and of Behavioural Activities During Simulated Automobile Driving. Accid. Anal. Prev. 2001, 33, 181–186.

21. Matsuo, H.; Abdelaziz, K. The Measurement, Observation and Evaluation of the Vehicle Driver Drowsiness. IEICE Trans. Inf. Syst. 2015, J98-D, 700–708.

22. Sunagawa, M.; Shikii, S.; Nakai, W.; Mochizuki, M.; Kusukame, K.; Kitajima, H. Comprehensive Drowsiness Level Detection Model Combining Multimodal Information. IEEE Sens. J. 2020, 20, 3709–3717.

23. Lee, B.G.; Lee, B.L.; Chung, W. Wristband-Type Driver Vigilance Monitoring System Using Smartwatch. IEEE Sens. J. 2015, 15, 5624–5633.

24. Jiang, L.; Lin, X.; Liu, X.; Bi, C.; Xing, G. SafeDrive: Detecting Distracted Driving Behaviors Using Wrist-Worn Devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 144.

25. Tanaka, R.; Akiduki, T.; Takahashi, H. Detection of Driver Workload Using Wrist-Worn Wearable Sensors: A Feasibility Study. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 1723–1730.

26. Sun, W.; Si, Y.; Guo, M.; Li, S. Driver Distraction Recognition Using Wearable IMU Sensor Data. Sustainability 2021, 13, 1342.

27. Tsubowa, K.; Akiduki, T.; Zhang, Z.; Takahashi, H.; Omae, Y. A Study of Effects of Driver’s Sleepiness on Driver’s Subsidiary Behaviors. Int. J. Innov. Comput. Inf. Control. 2021, 17, 1791–1799.

28. Nagasawa, J.; Akiduki, T.; Zhang, Z.; Miyake, T.; Takahashi, H. A Study of Detection Method of Aimless Driving State by Using Body-Worn Sensors. In Proceedings of the JSME Annual Conference on Robotics and Mechatronics, Yokohama, Japan, 8–11 June 2016; p. 1P1-12a5.

29. Bulling, A.; Blanke, U.; Schiele, B. A Tutorial on Human Activity Recognition Using Body-worn Inertial Sensors. ACM Comput. Surv. 2014, 46, 33.

30. Suto, J.; Oniga, S.; Pop, P.C. Feature Analysis to Human Activity Recognition. Int. J. Comput. Commun. Control. 2017, 12, 116–130.

31. Suto, J.; Oniga, S.; Pop, P.C. Comparison of Wrapper and Filter Feature Selection Algorithms on Human Activity Recognition. In Proceedings of the IEEE International Conference on Computers, Communications and Control 2016, Oradea, Romania, 10–14 May 2016; pp. 124–129.

32. Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology. Heart Rate Variability: Standards of Measurement, Physiological Interpretation, and Clinical Use. Eur. Heart J. 1996, 17, 354–381.

33. Matsunaga, S.; Naito, T.; Kato, T.; Oguri, K. Driver Condition Estimation Technology Using Vehicle Signal and Heart Rate. In Proceedings of the 2011 JSAE Annual Congress, Yokohama, Japan, 18–20 May 2011; Volume 122, pp. 13–16.

34. Ravi, N.; Dandekar, N.; Mysore, P.; Littman, M. Activity Recognition from Accelerometer Data. AAAI 2005, 5, 1541–1546.

35. Kano, M.; Hasebe, S.; Hashimoto, I.; Ohno, H. A New Multivariate Statistical Process Monitoring Method Using Principal Component Analysis. Comput. Chem. Eng. 2001, 25, 1103–1113.

36. Fujiwara, K.; Miyajima, M.; Yamakawa, T.; Abe, E.; Suzuki, Y.; Sawada, Y.; Kano, M.; Maehara, T.; Ohta, K.; Sasai-Sakuma, T.; et al. Epileptic Seizure Prediction Based on Multivariate Statistical Process Control of Heart Rate Variability Features. IEEE Trans. Biomed. Eng. 2016, 63, 1321–1332.

37. Kitajima, H.; Numata, N.; Yamamoto, K.; Goi, Y. Prediction of Automobile Driver Sleepiness (1st Report, Rating of Sleepiness Based on Facial Expression and Examination of Effective Predictor Indexes of Sleepiness). Trans. Jpn. Soc. Mech. Eng. C 1997, 63, 3059–3066.

38. ATR-Promotions, Inc. Compact Wireless Multifunction Sensor TSND121 and Its Amplifier Module TS-EMG01. Available online: https://www.atr-p.com/products/TSND121.html (accessed on 18 October 2020). (In Japanese).

39. Arakawa, T. A Review of Heartbeat Detection Systems for Automotive Applications. Sensors 2021, 21, 6112.

40. Lee, H.; Lee, J.; Shin, M. Using Wearable ECG/PPG Sensors for Driver Drowsiness Detection Based on Distinguishable Pattern of Recurrence Plots. Electronics 2019, 8, 192.

41. NHTSA. Visual-manual NHTSA Driver Distraction Guidelines for In-vehicle Electronic Devices. Fed. Regist. 2013, 78, 24817–24890.

| Subj. | Drowsiness Sensitivity | Drowsiness Specificity | Inattention Sensitivity | Inattention Specificity |
|---|---|---|---|---|
| 1 | 0.59 | 0.33 | 0.94 | 0.47 |
| 2 | 0.06 | 0.86 | 0.73 | 0.75 |
| 3 | - | - | 0.12 | 0.99 |
| 4 | 1.00 | 0.26 | 0.87 | 0.17 |
| 5 | 0.41 | 0.91 | 0.91 | 0.49 |
| Avg. | 0.52 | 0.59 | 0.71 | 0.58 |

| Study | Category | Measuring Method | Participants (Male:Female, Age) | Scenario | Platform (DS: driving simulator) | Ground Truth |
|---|---|---|---|---|---|---|
| Abe et al. (2016) [15] | Drowsiness | Wearable RRI telemetry | 27 (17:10, 20s to 40s) | Driving on a highway loop line at night for two hours | DS | Facial expression rating by human referees |
| Lee et al. (2019) [40] | Drowsiness | Wristwatch-type PPG and chest-belt-type ECG sensors | 6 (n/a, 20 to 35) | n/a | DS | Visual evaluation of facial and body movement |
| Iwamoto et al. (2021) [17] | Drowsiness | ECG with chest electrode | 25 (17:8, mean ) | A monotonous driving task in a dark room for three hours | DS | Labeled based on sleep specialist’s score |
| Lee et al. (2015) [23] | Drowsiness | Wristwatch-type PPG and wrist-worn IMU sensors | 12 (9:3, 21 to 45) | Highway driving simulation | DS | Karolinska sleepiness scale (KSS) every 2 min |
| Jiang et al. (2018) [24] | Manual distraction | Wrist-worn IMU sensor (on the right wrist) | 20 (10:10, 25 to 35) | Participants perform five different hand gestures, such as smartphone use | Real | Manually labeled |
| Tanaka et al. (2020) [25] | Cognitive distraction | Wrist-worn IMU sensors | 7 (7:0, mean ) | A monotonous driving task with a cognitive task called the N-back task | DS | The task level, that is, N in the N-back task |
| Sun et al. (2021) [26] | Manual distraction | Wrist-worn IMU sensor (on the right wrist) | 20 (14:6, 21 to 35) | Participants perform four types of gestures: three manual distractions and one regular driving motion | Real | Manually labeled by a passenger |
| Kume et al. (2014) [3] | Drowsiness and absentminded state | Steering wheel angles and vehicle speed | 34 (16:18, 20s to 60s) | Driving for 1.5 h on the specified highway section | Real | Subjective evaluation on a 5-point scale every 3 min |
| This study | Drowsiness and absentminded state | Wrist-worn IMU sensors and ECG with chest electrode | 5 (2:3, 20 to 45) | A monotonous driving task for approximately one hour | DS | Facial expression rating and reaction time (see Section 3.3) |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Akiduki, T.; Nagasawa, J.; Zhang, Z.; Omae, Y.; Arakawa, T.; Takahashi, H. Inattentive Driving Detection Using Body-Worn Sensors: Feasibility Study. Sensors 2022, 22, 352. https://doi.org/10.3390/s22010352
