Abstract

This paper presents the construction of a new objective method for estimating perceived visual quality. The proposal assesses image quality without the need for a reference image or a specific distortion assumption. Two main processes have been used to build our models. The first uses deep learning with a convolutional neural network, without any preprocessing. The second computes the objective visual quality by pooling several image features extracted from different concepts: the natural scene statistic in the spatial domain, the gradient magnitude, the Laplacian of Gaussian, and the spectral and spatial entropies. The features extracted from the image are used as the input of machine learning techniques to build the models that estimate the visual quality level of any image. For the machine learning training phase, two main processes are proposed. The first is a direct learning process that uses all the selected features in a single training phase, named direct learning blind visual quality assessment (DLBQA). The second is an indirect learning process that consists of two training phases, named indirect learning blind visual quality assessment (ILBQA). This second process includes an additional phase that constructs the intermediary metrics used to build the prediction model. The produced models are evaluated on several benchmark image databases: TID2013, LIVE, and the LIVE in the wild image quality challenge. The experimental results show that the proposed models achieve better visual perception quality prediction than the state-of-the-art models they are compared with. The proposed models have been implemented on an FPGA platform to demonstrate the feasibility of integrating the proposed solution on an image sensor.

1. Introduction

Digital images are increasingly used in several vision application domains of everyday life, such as medical imaging [1,2], object recognition in images [3], autonomous vehicles [4], Internet of Things (IoT) [5], computer-aided diagnosis [6], and mapping [7]. In all these applications, the produced images are subject to a wide variety of distortions during acquisition, compression, transmission, storage, and display. These distortions lead to a degradation of visual quality [8]. The increasing demand for images in a wide variety of applications calls for continual improvement of the quality of the images used. Because each domain has its own thresholds in terms of required visual perception and fault tolerance, the importance of visual perception quality assessment varies from one domain to another.

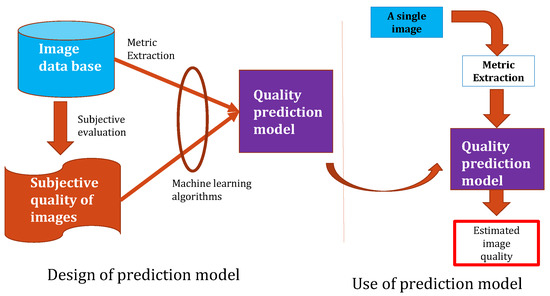

As human beings are the final users and interpreters of image processing, subjective methods based on the human ranking score are the best processes to evaluate image quality. The ranking consists of asking several people to watch images and rate their quality. In practice, subjective methods are generally too expensive, time-consuming, and not usable in real-time applications [8,9]. Thus, many research works have focused on objective image quality assessment methods aiming to develop quantitative measures that automatically predict image quality [2,8,10]. The objective process is illustrated in Figure 1. This process has been introduced in the paper [11].

Figure 1.

Objective image quality assessment process.

In digital image processing, objective IQA can serve several roles, such as dynamically monitoring and adjusting image quality, benchmarking and optimizing image processing algorithms, and setting the parameters of image processing systems [8,12]. Many research investigations explore the use of machine learning (ML) algorithms to build objective IQA models in agreement with human visual perception. Recent methods include artificial neural network (ANN), support vector machine (SVM), nonlinear regression (NLR), decision tree (DT), clustering, and fuzzy logic (FL) [13]. After the rise of deep learning techniques in 2012 [14], researchers also became interested in applying these techniques to image quality assessment. Thus, in 2014, the first studies emerged on the use of convolutional neural networks in IQA [15]. Many other works have followed, and the literature now offers increasingly efficient models [16,17,18].

The IQA evaluation methods can be classified into three categories according to whether or not they require a reference image: full-reference (FR), reduced-reference (RR), and no-reference (NR) approaches. Full-reference image quality assessment (FR-IQA) needs a complete reference image in order to be computed. Among the most popular FR-IQA metrics, we can cite the peak signal-to-noise ratio (PSNR), the structural similarity index metric (SSIM) [8,19], and visual information fidelity (VIF) [20]. In reduced-reference image quality assessment (RR-IQA), the reference image is only partially available, in the form of a set of extracted features that help to evaluate the distorted image quality; this is the case of reduced reference entropic differencing (RRED) [21]. In many real-life applications, the reference image is unfortunately not available. These applications therefore need no-reference image quality assessment (NR-IQA) methods, also called blind image quality assessment (BIQA) methods, which automatically predict the perceived quality of distorted images without any knowledge of the reference image. Some NR-IQA methods assume that the type of distortion is known in advance [22,23]; these objective assessment techniques are called distortion-specific (DS) NR-IQA. They can be used to assess the quality of images distorted by some particular distortion types. For example, the algorithms in [22] and [23] each target images compressed with a specific codec, while the one in [24] detects blur distortion. However, in most practical applications, information about the type of distortion is not available. Therefore, it is more relevant to design non-distortion-specific (NDS) NR-IQA methods that examine an image without prior knowledge of specific distortions [25]. Many existing metrics are the base units used in such methods, including DIIVINE [26], BRISQUE [28], GMLOGQA [29], SSEQ [30], DIQA [32], and DIQa-NR [33], as well as the metrics in [27] and [31].

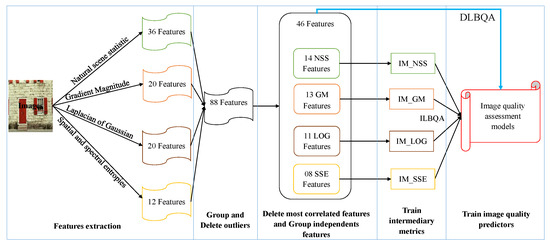

The proposal of this paper is a non-distortion-specific NR-IQA approach, where the extracted features are based on a combination of the natural scene statistic in the spatial domain [28], the gradient magnitude [29], the Laplacian of Gaussian [29], and the spatial and spectral entropies [30]. These features are used to train machine learning methods that construct the models predicting the perceived image quality. The process we propose for designing the no-reference perceived image quality models is described in Figure 2.

Figure 2.

Design of prediction models for the objective no-reference IQA process.

The process consists of

- extracting the features from images taken in the databases,

- removing the superfluous features according to the correlation between the extracted features,

- grouping the linearly independent features to construct some intermediate metrics, and

- using the produced metrics to construct the estimator model for perceived image quality assessment.

Finally, we compare the designed models with state-of-the-art models based on feature extraction, and also with models based on deep learning with convolutional neural networks, as shown in Section 4.1.

To evaluate the performances of the produced models, we measure the correlation between objective and subjective quality scores using three correlation coefficients:

- (1) Pearson’s linear correlation coefficient (PLCC), which measures the degree of linear relationship between two variables.

- (2) Spearman’s rank order correlation coefficient (SROCC), which measures the prediction monotonicity and the degree of association between two variables.

- (3) Brownian distance, which measures the statistical dependence between two random variables or two random vectors of arbitrary, not necessarily equal, dimension.
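The three coefficients listed above can be sketched with NumPy alone. This is a minimal illustration, not the evaluation code used in the paper: the function names `plcc`, `srocc`, and `distance_correlation` are hypothetical, and the Brownian distance is assumed here to be the usual sample distance correlation.

```python
import numpy as np

def plcc(x, y):
    """Pearson linear correlation between two 1-D score vectors."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))

def _ranks(v):
    """Ranks starting at 1; tied values share the mean of their ranks."""
    v = np.asarray(v, float)
    order = np.argsort(v, kind="mergesort")
    ranks = np.empty(len(v))
    ranks[order] = np.arange(1, len(v) + 1)
    for value in np.unique(v):
        tied = v == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(_ranks(x), _ranks(y))

def distance_correlation(x, y):
    """Sample distance correlation; rows are samples, columns are dimensions."""
    x = np.asarray(x, float).reshape(len(x), -1)
    y = np.asarray(y, float).reshape(len(y), -1)

    def centered(a):
        # Pairwise Euclidean distances, then double centering.
        d = np.linalg.norm(a[:, None, :] - a[None, :, :], axis=2)
        return d - d.mean(axis=0) - d.mean(axis=1)[:, None] + d.mean()

    a, b = centered(x), centered(y)
    dcov2 = (a * b).mean()
    return float(np.sqrt(dcov2 / np.sqrt((a * a).mean() * (b * b).mean())))
```

Because `distance_correlation` accepts matrices with different column counts, it can compare a group of several objective metrics with a single column of subjective scores.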

The paper is organized as follows. Section 2 presents the feature extraction methods. Section 3 explains the feature selection technique based on feature independence analysis. The construction of intermediate metrics is also presented in this section. Section 4 explains the experimental results and their comparison. Section 5 presents the implementation architectures and results. Finally, Section 6 draws a conclusion and perspectives for future investigations.

2. Feature Extraction

Feature extraction in this paper is based on four principal axes: natural scene statistic in the spatial domain, gradient magnitude, Laplacian of Gaussian, and finally spatial and spectral entropies.

2.1. Natural Scene Statistic in the Spatial Domain

The extraction of the NSS-based features in the spatial domain starts by normalizing the image, represented by $I(i,j)$, to remove local mean displacements from zero log-contrast and to normalize the local variance of the log contrast, as observed in [34]. Equation (1) presents the normalization of the initial image.

where $i \in \{1, \dots, M\}$ and $j \in \{1, \dots, N\}$ are the spatial indices, and M and N are the image dimensions. The local mean $\mu(i,j)$ is given by (2), and $\sigma(i,j)$, which estimates the local contrast, is given by (3).

where $k = -K, \dots, K$ and $l = -L, \dots, L$. The weights $w = \{w_{k,l}\}$ form a 2D circularly-symmetric Gaussian weighting function sampled out to 3 standard deviations ($K = L = 3$) and rescaled to unit volume [28].

The model produced in (1) gives the mean-subtracted contrast normalized (MSCN) coefficients. In [28], the authors hypothesize that the MSCN coefficients have characteristic statistical properties that are changed by the presence of distortion. Quantifying these changes helps predict the type of distortion affecting an image as well as its perceptual quality. They also found that a Generalized Gaussian Distribution (GGD) could be used to effectively capture a broader spectrum of distorted image statistics, where the GGD with zero mean is given by (4).
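As an illustration of the normalization described above, the following sketch computes mean-subtracted contrast normalized coefficients with NumPy. It assumes the standard formulation of [28]; the stabilizing constant `c = 1.0`, the Gaussian standard deviation `7/6`, the symmetric edge padding, and all function names are assumptions made for this example.

```python
import numpy as np

def gaussian_window(half_size=3, sigma=7 / 6):
    """(2*half_size+1)^2 circularly symmetric Gaussian, rescaled to unit volume."""
    ax = np.arange(-half_size, half_size + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2 * sigma ** 2))
    return g / g.sum()

def local_filter(img, window):
    """Weighted local sum of img under the window (symmetric edge padding)."""
    k = window.shape[0] // 2
    padded = np.pad(img, k, mode="symmetric")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(window.shape[0]):
        for dx in range(window.shape[1]):
            out += window[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def mscn(img, c=1.0):
    """Subtract the local mean and divide by the local contrast plus c."""
    img = np.asarray(img, float)
    w = gaussian_window()
    mu = local_filter(img, w)
    var = local_filter(img * img, w) - mu * mu   # weighted local variance
    sigma = np.sqrt(np.maximum(var, 0.0))        # clip tiny negative round-off
    return (img - mu) / (sigma + c)
```

On a perfectly flat image the coefficients are all (numerically) zero, since both the numerator and the local contrast vanish.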

where $\beta$ is given by (5) and the gamma function $\Gamma(\cdot)$ is given by (6).

The parameter $\alpha$ controls the shape of the distribution, while $\sigma^2$ controls the variance.
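A common way to estimate the two parameters of a zero-mean generalized Gaussian distribution is moment matching, sketched below. This is an assumed estimation procedure in the spirit of [28,36], not necessarily the one used by the authors; the search grid and the function name `estimate_ggd` are hypothetical.

```python
import math
import numpy as np

def estimate_ggd(x):
    """Moment-matching estimate of (shape, variance) for zero-mean GGD samples."""
    x = np.asarray(x, float).ravel()
    shapes = np.arange(0.2, 10.0, 0.001)
    # For a zero-mean GGD with shape a:
    #   E[|x|]^2 / E[x^2] = Gamma(2/a)^2 / (Gamma(1/a) * Gamma(3/a))
    ratio = np.array([
        math.gamma(2 / a) ** 2 / (math.gamma(1 / a) * math.gamma(3 / a))
        for a in shapes
    ])
    observed = np.mean(np.abs(x)) ** 2 / np.mean(x ** 2)
    shape = shapes[np.argmin(np.abs(ratio - observed))]
    variance = np.mean(x ** 2)
    return float(shape), float(variance)
```

For Gaussian samples the estimated shape is close to 2, and for Laplacian samples it is close to 1, which gives a quick sanity check of the estimator.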

In [28], the authors also model the statistical relationships between neighboring pixels along four orientations: horizontal, vertical, main diagonal, and secondary diagonal. These relationships are fitted with an asymmetric density function, for which a practical choice is the general asymmetric generalized Gaussian distribution (AGGD) model [35]. Equation (7) gives the AGGD with zero mode.

where $\beta_a$ (with $a = l$ or $r$) is given by (8).

The parameter $\nu$ controls the shape of the distribution, while $\sigma_l^2$ and $\sigma_r^2$ are scale parameters that control the spread on each side of the mode. The fourth asymmetric parameter, $\eta$, is given by (9).

Finally, the estimated parameters are composed of the symmetric parameters ($\alpha$ and $\sigma^2$) and the asymmetric parameters ($\eta$, $\nu$, $\sigma_l^2$, and $\sigma_r^2$), where the asymmetric parameters are computed for the four orientations, as shown in Table 1. All the estimated parameters are also computed for two scales, yielding 36 features (2 scales × [2 symmetric parameters + 4 asymmetric parameters × 4 orientations]). More details about the estimation of these parameters are given in [28,36].

Table 1.

Extracted features based on NSS in spatial domain.

2.2. Gradient Magnitude and Laplacian of Gaussian

The second feature extraction method is based on the joint statistics of the Gradient Magnitude (GM) and the Laplacian of Gaussian (LOG) contrast. GM and LOG are usually used to capture the semantic structure of an image. In [29], the authors also use them as features to predict local image quality.

For an image I(i,j), its GM is given by (10).

where ⊗ is the linear convolution operator, and $h_d$ is the Gaussian partial derivative filter applied along the direction $d \in \{x, y\}$, given by (11).

To produce the features, the first step is to normalize the GM and LOG feature maps as in (14).

where $\epsilon$ is a small positive constant, used to avoid instabilities when $N_I$ is small, and $N_I$ is given by (15).

where ; and is given by (16).

Then (17) and (18) give the final statistic features.

where $m = 1, \dots, M$ and $n = 1, \dots, N$, and $K_{m,n}$ is the empirical probability function of G and L [37,38]; it is given by (19).

In [29], the authors also found that the best results are obtained by setting $M = N = 10$; thus, 40 statistical features are produced, as shown in Table 2, with 10 dimensions for each of the four statistical feature vectors.
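The pipeline of this subsection (gradient magnitude, Laplacian of Gaussian, joint normalization, then marginal and dependency statistics over 10 bins each) can be sketched as follows. This is a simplified illustration assuming the general approach of [29]: the filter scale `sigma = 0.5`, the constant `eps = 0.2`, the normalization window of scale `2 * sigma`, the equal-width binning, and all function names are assumptions, not values taken from this paper.

```python
import numpy as np

def filter2d(img, kernel):
    """Correlate img with a square odd-sized kernel (symmetric edge padding)."""
    k = kernel.shape[0] // 2
    padded = np.pad(img, k, mode="symmetric")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def gaussian_grid(sigma):
    """Coordinate grids and a sampled 2-D Gaussian out to 3 sigma."""
    r = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-r:r + 1, -r:r + 1].astype(float)
    g = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2)) / (2 * np.pi * sigma ** 2)
    return x, y, g

def gm_log_features(img, sigma=0.5, eps=0.2, bins=10):
    img = np.asarray(img, float)
    x, y, g = gaussian_grid(sigma)
    hx, hy = -x / sigma ** 2 * g, -y / sigma ** 2 * g        # Gaussian derivatives
    hlog = (x ** 2 + y ** 2 - 2 * sigma ** 2) / sigma ** 4 * g
    # Correlation instead of convolution only flips the sign of the odd
    # derivative responses, which the squares below remove.
    gm = np.sqrt(filter2d(img, hx) ** 2 + filter2d(img, hy) ** 2)
    log = filter2d(img, hlog)
    # Joint adaptive normalization by the locally weighted energy of both maps.
    _, _, wn = gaussian_grid(2 * sigma)
    wn = wn / wn.sum()
    norm = np.sqrt(filter2d(gm ** 2 + log ** 2, wn))
    gm_n, log_n = gm / (norm + eps), log / (norm + eps)
    # Empirical joint probability over bins x bins cells.
    joint, _, _ = np.histogram2d(gm_n.ravel(), log_n.ravel(), bins=bins)
    joint /= joint.sum()
    p_g, p_l = joint.sum(axis=1), joint.sum(axis=0)          # marginals
    tiny = np.finfo(float).tiny
    q_g = np.mean(joint / np.maximum(p_l[None, :], tiny), axis=1)  # mean P(G|L)
    q_l = np.mean(joint / np.maximum(p_g[:, None], tiny), axis=0)  # mean P(L|G)
    return np.concatenate([p_g, p_l, q_g, q_l])
```

With `bins=10` the function returns 4 vectors of 10 values each, matching the count of 40 features stated above.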

Table 2.

Extracted features based on GM and LoG.

2.3. Spatial and Spectral Entropies

Spatial entropy is a function of the probability distribution of the local pixel values, while spectral entropy is a function of the probability distribution of the local discrete cosine transform (DCT) coefficient values. The process of extracting the spatial and spectral entropies (SSE) features from images in [30] consists of three steps:

- The first step is to decompose the image into 3 scales, using bi-cubic interpolation: low, middle, and high.

- The second step is to partition each scale into blocks and to compute the spatial and spectral entropies of each block.

- The third step is to evaluate the mean and skew of the block entropies within each scale.

At the end of the three steps, 12 features are extracted from the images, as seen in Table 3. These features represent the mean and skew of the spectral and spatial entropies on 3 scales (2 statistics × 2 entropies × 3 scales = 12 features).
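For the spatial part, the block entropies and their mean and skew can be sketched as below. The block size of 8 pixels, the 256 gray levels, and the function names are assumptions made for this example; the spectral entropy would follow the same pattern on the DCT coefficients of each block.

```python
import numpy as np

def block_spatial_entropies(img, block=8, levels=256):
    """Shannon entropy (in bits) of the pixel values in each full block."""
    img = np.asarray(img)
    h = (img.shape[0] // block) * block
    w = (img.shape[1] // block) * block
    entropies = []
    for r in range(0, h, block):
        for c in range(0, w, block):
            patch = img[r:r + block, c:c + block].ravel()
            counts = np.bincount(patch.astype(np.int64), minlength=levels)
            p = counts[counts > 0] / patch.size
            entropies.append(-(p * np.log2(p)).sum())
    return np.array(entropies)

def mean_and_skew(values):
    """Mean and (population) skewness of a set of block entropies."""
    v = np.asarray(values, float)
    m, s = v.mean(), v.std()
    skew = 0.0 if s == 0 else float(((v - m) ** 3).mean() / s ** 3)
    return float(m), skew
```

Applying `mean_and_skew` to the spatial and spectral block entropies of each of the 3 scales yields the 12 values counted above.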

Table 3.

Extracted features based on SSE.

2.4. Convolutional Neural Network for NR-IQA

In this paper, we explore the possibility of using deep learning with a convolutional neural network (CNN) to build the model that evaluates the quality of the image. In this process, the extraction of features is performed by the convolution matrices, which are learned during the deep learning training process.

In CNNs, three main characteristics of convolutional layers can distinguish them from fully connected linear layers in the vision field. In the convolutional layer,

- each neuron receives an image as inputs and produces an image as its output (instead of a scalar);

- each synapse learns a small array of weights, which is the size of the convolutional window; and

- each pixel in the output image is created by the sum of the convolutions between all synapse weights and the corresponding images.

The convolutional layer takes as input an image with several channels, and the output value of each pixel (the pixel at row r and column c of neuron n in layer l) is computed by (23).

In (23), the kernel dimensions are those of the convolution kernel of layer l, and each weight is indexed by its row i and column j in the convolution matrix of the synapse s connected to the input of neuron n in layer l. In practice, a convolution is simply an element-wise multiplication of two matrices followed by a sum. Therefore, the 2D convolution takes two matrices of the same dimensions, multiplies them element by element, and sums the products. To close the convolution process in a convolutional layer, the results are then passed through an activation function.
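The output of one neuron of a convolutional layer, as described above, can be sketched as follows. The array layout (one input map and one kernel per synapse), the absence of padding, the optional `bias` term, and the choice of ReLU as activation function are assumptions made for this illustration.

```python
import numpy as np

def conv_neuron(inputs, kernels, bias=0.0):
    """Output map of one neuron.

    inputs:  array (S, H, W), one input image per synapse.
    kernels: array (S, kh, kw), one weight matrix per synapse.
    """
    _, h, w = inputs.shape
    _, kh, kw = kernels.shape
    out = np.full((h - kh + 1, w - kw + 1), bias, dtype=float)
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            window = inputs[:, r:r + kh, c:c + kw]
            # Element-wise multiplication followed by a sum, over all synapses.
            out[r, c] += np.sum(window * kernels)
    return np.maximum(out, 0.0)  # ReLU activation (assumed)
```

For example, two 4 × 4 input maps of ones with two 3 × 3 kernels of ones give a 2 × 2 output in which every pixel equals 18.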

A limitation of the feature maps produced by convolutional layers is that they record the precise position of features in the input. This means that small movements in the position of a feature in the input image will result in a different feature map. This can happen with cropping, rotating, shifting, and other minor changes to the input image [15]. In CNNs, the common approach to solving this problem is down-sampling using pooling layers [39]. Down-sampling reduces the resolution of an input signal while preserving its important structural elements, without the fine details that are not very useful for the task. The pooling layers reduce the dimensions of the feature maps, and thus the number of parameters to learn and the amount of computation performed in the network. A pooling layer summarizes the features present in a region of the feature map generated by a convolution layer. Further operations are therefore performed on summarized features instead of the precisely positioned features generated by the convolution layer, which makes the model more robust to variations in the position of the features in the input image.

In CNNs, the most used pooling function is Max Pooling, which calculates the maximum value for each patch of the feature map. Other pooling functions exist, such as Average Pooling, which calculates the average value for each patch of the feature map.
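Both pooling functions can be sketched in a few lines of NumPy. The non-overlapping square patches and the function name `pool2d` are assumptions made for this example.

```python
import numpy as np

def pool2d(fmap, size=2, mode="max"):
    """Pool a 2-D feature map over non-overlapping size x size patches."""
    h = (fmap.shape[0] // size) * size
    w = (fmap.shape[1] // size) * size
    # Axes 1 and 3 index the rows and columns inside each patch.
    patches = fmap[:h, :w].reshape(h // size, size, w // size, size)
    if mode == "max":
        return patches.max(axis=(1, 3))
    return patches.mean(axis=(1, 3))
```

On the 4 × 4 map `np.arange(16).reshape(4, 4)`, max pooling gives `[[5, 7], [13, 15]]` and average pooling gives `[[2.5, 4.5], [10.5, 12.5]]`; in both cases the map is reduced to a quarter of its size.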

Our final CNN model has 10 layers: four convolutional layers with 128, 256, 128, and 64 channels, respectively; four max pooling layers; and two fully connected layers with 1024 and 512 neurons, respectively. Finally, an output layer with one neuron gives the final score of the image.

3. Construction of Prediction Models

In the feature extraction phase, 88 features were extracted from the images. These features are used to construct the prediction models that evaluate the quality of images. In our case, two processes have been proposed to evaluate the image quality:

- The Direct Learning Blind visual Quality Assessment method (DLBQA), in which machine learning methods are applied directly to the whole set of selected features for training, producing the final models.

- The Indirect Learning Blind visual Quality Assessment method (ILBQA), in which ML methods are applied to the four produced intermediary metrics to construct the final model. The process requires two training phases: the first merges the independent features of each class in order to generate the adequate intermediary metrics, while the second derives the final model. The inputs of the produced model are the four intermediary metrics, and the output is the estimated quality score of the input image.

3.1. Remove Superfluous Features

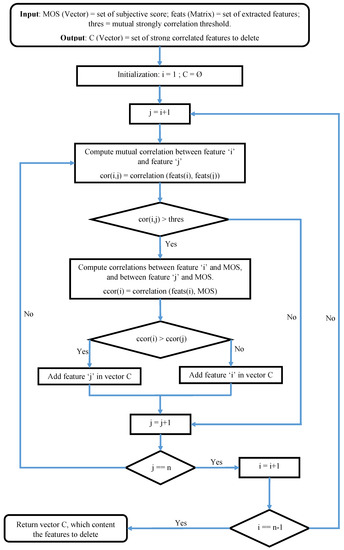

Having extracted the 88 features from the images, the next step consists of removing the superfluous features, which do not add any useful information for the construction of the final models. The main type of superfluous features consists of strongly correlated features.

Strongly correlated features give approximately the same information. This redundancy may cause overfitting when estimating the model parameters, and can also produce needlessly complex final models. To avoid these problems, this paper removes the strongly correlated features. The removal is based on the following idea: “if there are two strongly correlated features in the set of extracted features, with a mutual correlation greater than a predefined threshold, we remove the one that has the least correlation with the subjective score without significant loss of information”. This reduction process is described in Figure 3; it takes as input the set of extracted features and produces the set of superfluous features.

Figure 3.

Flow diagram for selection of strongly correlated features to remove.

The features contained in the output vector of this algorithm are removed from the list of features used in the rest of the work. In our use case, a fixed threshold is applied to the mutual correlation between two features: whenever the mutual correlation between two features is higher than this threshold, one of the two features must be removed. With this threshold, the output vector contains 42 superfluous features to remove from the initial list of extracted features, which leaves 46 features for the rest of the study.
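The selection rule described above can be sketched as follows. This is one possible reading of the procedure, not a transcription of Figure 3: the pair scanning order, the use of absolute Pearson correlation, the default `threshold=0.9`, and the function name are assumptions.

```python
import numpy as np

def superfluous_features(features, mos, threshold=0.9):
    """Return the sorted column indices of the features to remove.

    features: array (n_images, n_features); mos: array (n_images,).
    """
    features = np.asarray(features, float)
    n = features.shape[1]
    mutual = np.abs(np.corrcoef(features, rowvar=False))
    relevance = np.array(
        [abs(np.corrcoef(features[:, j], mos)[0, 1]) for j in range(n)]
    )
    removed = set()
    for a in range(n):
        for b in range(a + 1, n):
            if a in removed or b in removed:
                continue
            if mutual[a, b] > threshold:
                # Drop the feature that is less correlated with the
                # subjective score.
                removed.add(a if relevance[a] < relevance[b] else b)
    return sorted(removed)
```

The columns that are not returned form the reduced feature set passed to the training phases.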

3.2. Direct Process (DLBQA)

In the direct process, the whole set of 46 selected features is used in a single training phase to produce the final quality assessment model. The produced models are called Direct Learning Blind visual Quality Assessment index based on Machine Learning (DLBQA-ML). Different machine learning methods have been used to construct the assessment models. These include methods based on artificial neural networks (DLBQA-ANN), polynomial Nonlinear Regression (DLBQA-NLR), Support Vector Machine (DLBQA-SVM), Decision Tree (DLBQA-DT), and Fuzzy Logic (DLBQA-FL). These machine learning methods have been presented in [11,40].

Table 4 summarizes the correlation scores obtained on the test data by the DLBQA models produced using different machine learning methods, on the TID2013 [41,42], LIVE [8,43,44], and LIVE challenge [45,46] image databases.

Table 4.

Correlation score of MOS vs. estimated image quality of different image databases using different ML methods for DLBQA.

These results show that the DLBQA models based on the support vector machine (SVM) and decision tree (DT) methods provide the best image quality predictions on the different image quality databases.

3.3. Indirect Process (ILBQA)

As the number of selected features is large (46), the indirect process divides the training phase into three steps:

- The first step, as mentioned in the previous section, is to distribute the features into independent classes, depending on the axes of the extracted features.

- The next step uses machine learning methods to merge the features of each class in order to generate an appropriate intermediary metric. The pooling has been carried out using different ML methods to create one intermediary metric per class.

- The third step consists of using the produced intermediary metrics to construct the final model and to derive the quality score estimators.

3.3.1. Construction of Intermediate Models

Having removed superfluous features, the next step consists of grouping the selected features into a set of classes. The produced classes are based on four principal axes:

- Natural Scene Statistic in the spatial domain (NSS) with 14 extracted features;

- Gradient Magnitude (GM) with 13 extracted features;

- Laplacian of Gaussian (LOG) with 11 extracted features;

- Spatial and Spectral Entropies (SSE) with 8 extracted features.

Each produced class is used to construct one intermediary metric. In each produced class, the features have been merged to generate the appropriate intermediary metrics. The pool process has been carried out, using different machine learning methods.
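The two training phases of the indirect process can be sketched structurally as follows. Ordinary least squares stands in for the machine learning methods used in the paper, purely to keep the example dependency-free, and the column ordering of the 46 features by class is an assumption; the names `CLASS_SIZES`, `fit_indirect`, and `predict_indirect` are hypothetical.

```python
import numpy as np

# Class sizes as listed above (14 + 13 + 11 + 8 = 46 features).
CLASS_SIZES = {"NSS": 14, "GM": 13, "LOG": 11, "SSE": 8}

def fit_linear(x, y):
    """Least-squares fit with intercept (stand-in for any ML regressor)."""
    xb = np.column_stack([x, np.ones(len(x))])
    coef, *_ = np.linalg.lstsq(xb, y, rcond=None)
    return coef

def predict_linear(coef, x):
    return np.column_stack([x, np.ones(len(x))]) @ coef

def split_classes(features):
    """Slice the feature matrix into one block of columns per class."""
    groups, start = {}, 0
    for name, size in CLASS_SIZES.items():
        groups[name] = features[:, start:start + size]
        start += size
    return groups

def intermediary_metrics(stage1, features):
    groups = split_classes(features)
    return np.column_stack(
        [predict_linear(stage1[name], groups[name]) for name in CLASS_SIZES]
    )

def fit_indirect(features, mos):
    # Phase 1: one model per class, producing one intermediary metric each.
    groups = split_classes(features)
    stage1 = {name: fit_linear(x, mos) for name, x in groups.items()}
    # Phase 2: final model taking the four intermediary metrics as inputs.
    stage2 = fit_linear(intermediary_metrics(stage1, features), mos)
    return stage1, stage2

def predict_indirect(model, features):
    stage1, stage2 = model
    return predict_linear(stage2, intermediary_metrics(stage1, features))
```

The final model only sees 4 inputs instead of 46, which is the point of the intermediate phase.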

Table 5 compares the results of applying four ML methods (fuzzy logic, support vector machine, decision tree, and artificial neural network) to build the intermediary metrics from the feature classes. The comparison is expressed by Spearman’s correlation score between the produced intermediary metrics and the subjective scores given in the different image databases.

Table 5.

Spearman’s correlation between intermediary metrics and MOS for different image databases.

The selection of the adequate ML method is determined by studying Brownian correlations between the produced intermediary metrics and the subjective scores in multivariate space as shown in Table 6.

Table 6.

Brownian’s distance between the group of intermediary metrics and MOS.

The main observation drawn from Table 6 is that the decision tree (DT) ML method produces the best results on all the studied image databases. Thus, the decision tree method has been used as the machine learning method to learn the intermediary metrics.

3.3.2. Construction of Final Indirect Prediction Model

Once the intermediary metrics are built, the next step in the indirect process is the construction of final prediction model using these intermediary metrics. The final evaluation index constructed consists of several models: four models of construction of the intermediary metrics, and a model of evaluation of the final score using the intermediary metrics. As the intermediary metrics have been constructed in the previous section, all that remains is the construction of the final prediction model, taking as inputs these intermediary metrics previously evaluated. These models are built using different machine learning methods.

Table 7 summarizes the prediction performance of the ILBQA image quality models trained on the TID2013, LIVE, and LIVE challenge image databases. The design of the models consists here of two training steps. The first step uses the decision tree approach to merge the quality features into four intermediary metrics. The second training step then uses different ML approaches to predict the quality score from the corresponding set of intermediary metrics.

Table 7.

Correlation score of MOS vs. estimated image quality of different image databases using different ML methods for ILBQA.

These results show that the ILBQA models based on the decision tree (DT) method provide the best image quality predictions on the different image quality databases.

4. Experimental Results

Having extracted the features and removed the superfluous ones, the next step is to construct machine learning models that automatically predict the perceived quality of an input image without the need for a reference image. The construction process of the objective NR-IQA block essentially consists of training and validation phases. During the training phase, machine learning algorithms are applied to create an appropriate mapping model that relates the features extracted from an image to the subjective score provided in the image database. The validation phase is used to estimate how well the trained ML models predict the perceived image quality, and to compare the accuracy and robustness of the different ML methods.

The Monte Carlo Cross-Validation (MCCV) method [47] is also applied to compare and confirm the accuracy of the obtained results, as follows:

- First, split the dataset with a fixed fraction into a training set and a validation set: the samples of the training set are randomly selected and used to design a model, and the remaining samples are used to assess the predictive accuracy of the model.

- Then, the first step is repeated k times.

- Finally, the correlation scores are averaged over k.

In this paper, and .
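The cross-validation loop described above can be sketched as follows. The default values `train_fraction=0.8` and `k=100` are placeholders chosen for this example, not the values used in the paper; the least-squares model is only a stand-in for the ML methods studied, and the Pearson correlation is used as the score.

```python
import numpy as np

def fit_least_squares(x, y):
    """Stand-in model: linear least squares with intercept."""
    xb = np.column_stack([x, np.ones(len(x))])
    coef, *_ = np.linalg.lstsq(xb, y, rcond=None)
    return coef

def predict_least_squares(coef, x):
    return np.column_stack([x, np.ones(len(x))]) @ coef

def monte_carlo_cv(features, mos, fit, predict,
                   train_fraction=0.8, k=100, seed=0):
    """Average validation correlation over k random train/validation splits."""
    rng = np.random.default_rng(seed)
    n = len(mos)
    n_train = int(round(train_fraction * n))
    scores = []
    for _ in range(k):
        perm = rng.permutation(n)                 # new random split each round
        train, valid = perm[:n_train], perm[n_train:]
        model = fit(features[train], mos[train])
        pred = predict(model, features[valid])
        scores.append(np.corrcoef(pred, mos[valid])[0, 1])
    return float(np.mean(scores)), float(np.std(scores))
```

Returning the standard deviation alongside the mean gives the spread that a box-plot of the k scores would show.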

4.1. Results Comparison

Having built our evaluation models, the next step is to validate them and to compare their results with those produced by the state-of-the-art models. The validation process is done using Monte Carlo Cross-Validation (MCCV) [47].

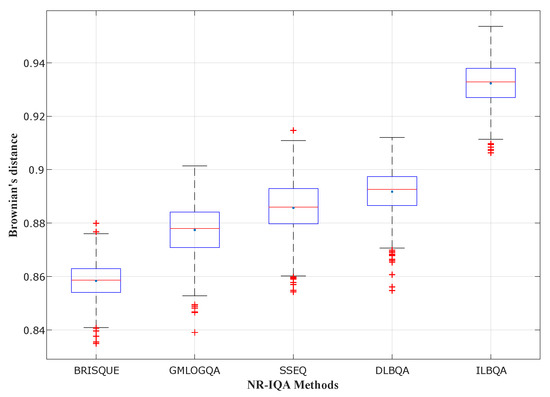

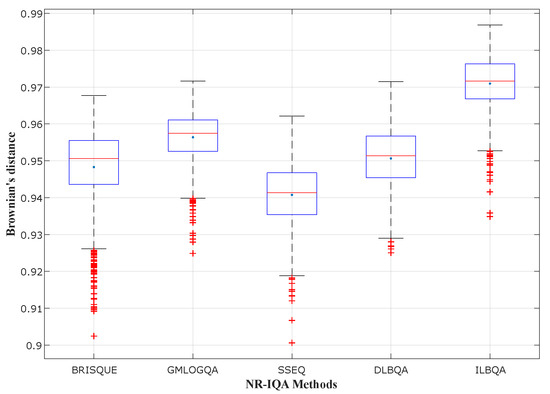

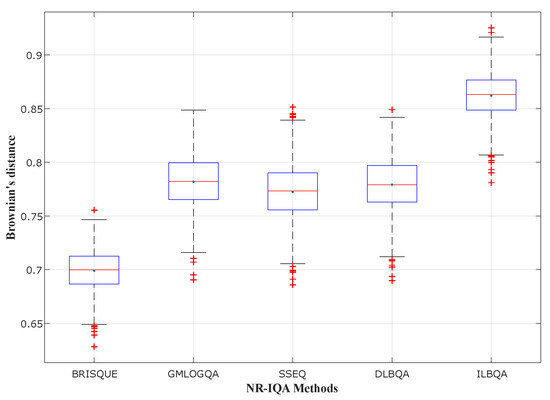

In Figure 4, Figure 5 and Figure 6, different state-of-the-art no-reference IQA methods are compared with the two models designed in this paper (DLBQA and ILBQA). These blind IQA methods consist of BRISQUE [28], which uses NSS in the spatial domain; GMLOGQA [29], which uses the gradient magnitude (GM) and the Laplacian of Gaussian (LOG) to build a predictor of image quality; and SSEQ [30], which is based on the spatial and spectral entropies. These figures depict the box-plots of Brownian’s distance in the MCCV phase for the different no-reference IQA methods, trained on the TID2013, LIVE, and LIVE Challenge image databases, respectively. They also show that the model with the best prediction accuracy (the highest mean and the smallest standard deviation) is the one designed by the proposed ILBQA method with two training steps.

Figure 4.

Performance comparison for different NR-IQA methods trained on TID2013.

Figure 5.

Performance comparison for different NR-IQA methods trained on LIVE.

Figure 6.

Performance comparison for different NR-IQA methods trained on LIVE Challenge.

We compare the proposed models (DLBQA, ILBQA, and the CNN-based model) with six feature-extraction-based BIQA methods and five CNN-based methods on three popular benchmark databases. The results are listed in Table 8, where the best two SROCC and PLCC values are highlighted in bold. The performances are the correlations between the subjective scores (MOS/DMOS) taken from the image databases and the objective scores given by the different NR-IQA methods in the cross-validation phase. The main observation is that, for every image database (TID2013, LIVE, and LIVE Challenge), the proposed DLBQA and ILBQA methods achieve the best results.

Table 8.

Performance comparison on three benchmark databases.

4.2. Computational Complexity

In many image quality assessment applications, the computational complexity is an important factor when evaluating the produced BIQA models. In this paper, the feature extraction is based on four principal axes. Table 9 presents the percentage of time spent on each feature extraction axis.

Table 9.

Percentage of time consumed by each feature extraction axis.

The run-time of the produced model is compared to the run-times of five other well-performing NR-IQA indexes: BLIINDS-II [50], DIIVINE [26], BRISQUE [28,36], GMLOGQA [29], and SSEQ [30]. Table 10 presents the estimated run-time of each method, in seconds, for the evaluation of one image on a CPU, using MATLAB on a computer with an Intel Xeon dual-core CPU. We observe that the run-time of the proposed method (ILBQA) is significantly lower than that of some of these methods, approximately equal to that of another, and higher than that of the rest; the per-method values are given in Table 10.

Table 10.

Comparison of time complexity for different approaches.

5. Implementation Architectures and Results

After the construction of the no-reference image quality evaluation models with MATLAB, the next step in our process is the implementation of the produced models on an FPGA platform. The produced IP-core can be integrated at the output of an image sensor to evaluate the quality of the produced images. The implemented index is the indirect process (ILBQA). The implementation is done with Xilinx Vivado and Vivado HLS and targets the Xilinx Virtex 7 and Zynq 7000 platforms.

The work in [11] introduces this implementation phase of our proposed objective no-reference image quality assessment process. The general implementation process consists of the following steps.

- HLS implementation of the block IP, allowing the encapsulation of the designed models, through the Vivado HLS tools, in C/C++ codes.

- Integration of the produced IP-cores in the overall design at RTL, through the Vivado tool, in VHDL/Verilog codes.

- Integration on Xilinx’s Virtex 7 and Zynq 7000 FPGA boards, and evaluation of the obtained results on several test images.

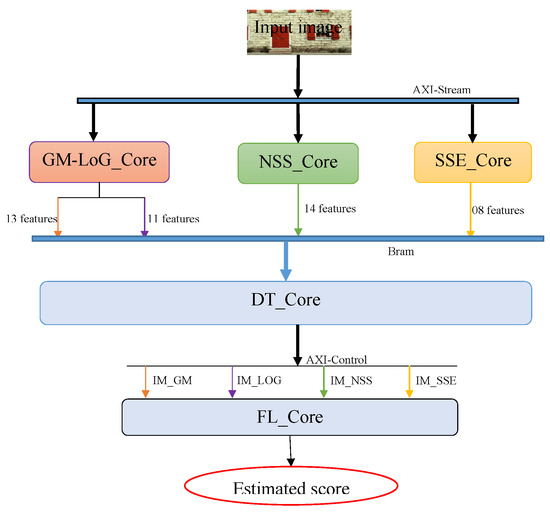

5.1. HLS Implementation of the IP-Cores

The implementation of the models in HLS enabled the construction of five IP-cores. These IP-cores are divided into three groups, leading to the evaluation of the objective score of an image taken at the input of the process. The IP-cores in each group can run in parallel, but the inputs of each group depend on the outputs of the previous group. The three groups consist of feature extraction, construction of the intermediate metrics based on decision tree models, and evaluation of the objective score by the fuzzy logic model, which takes the intermediate metrics as input. The layered architecture of the produced IP-cores is presented in Figure 7.

Figure 7.

Implementation architecture of the ILBQA on an FPGA platform.

- Feature extraction: the inputs of this group are the image whose quality is to be evaluated, as an AXI-stream, and the dimensions of this input image (row and column sizes as integers). It contains three IP-cores: NSS, which extracts 14 features from the image; GM-LOG, which extracts 13 and 11 features on the GM and LOG axes, respectively; and SSE, which extracts 8 features.

- Production of the intermediary metrics: this group contains only one IP-core, the decision tree (DT) IP-core. The DT IP-core uses the features extracted from the input image and generates the intermediary metrics in floating-point format.

- Production of the estimated score of the image using the intermediary metrics: this group also has only one IP-core, the fuzzy logic (FL) IP-core. This IP-core takes as input the four intermediary metrics produced by the DT IP-core and produces the estimated quality score of the input image, in floating-point format.

Table 11 presents the performance and utilization estimates at the synthesis level, mapped to the Xilinx Virtex 7 FPGA platform, for the different produced IP-cores and for the global process. These results are obtained for a single input image resolution and a clock period of 25 ns (a frequency of 40 MHz).

Table 11.

Device performance utilization estimates in HLS synthesis phase.

5.2. SDK Implementation and Results

The global design containing our models has been implemented on an board. A program running on the microcontroller (MicroBlaze) then evaluates the quality of each received image through the model implemented on , and returns the objective score evaluated by the implemented models. The images are retrieved in “AXI-Stream” format and transmitted to the board through an extension using the protocol. The program is written in C/C++ and loaded onto the microcontroller via the interface of the board, using the Xilinx SDK tool.

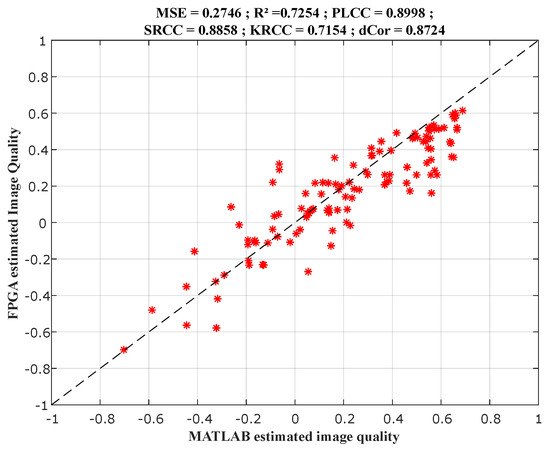

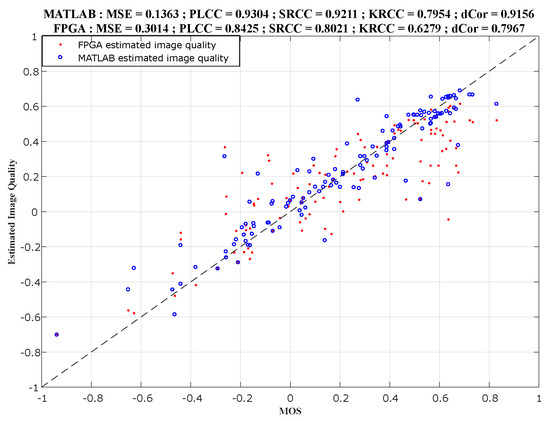

A total of 120 images distorted by 24 types of distortion, from the image database [41,42], were evaluated by the produced models on the platform. The implementation results were compared to the experimental results under and also to the subjective scores taken from the image database.

Figure 8 presents the comparison between results on the X-axis and implementation results on the Y-axis, for the index, while Figure 9 presents the comparison between the subjective scores on the X-axis, and results (in blue) and implementation results (in red) on the Y-axis. Each graph also reports the correlation between the scores on the X-axis and the produced objective scores on the Y-axis.
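As an illustration of how such correlation scores between two sets of scores can be computed, the sketch below implements the Pearson linear correlation and the Spearman rank correlation in plain Python. The text does not state which correlation measures are reported in the figures, so the choice of these two is an assumption, and the sample scores are made up for the example.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient between two score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """Average ranks (1-based), so tied values share the same rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Made-up scores standing in for simulated and implemented results.
simulated = [1.0, 2.0, 3.0, 4.0, 5.0]
implemented = [1.1, 1.9, 3.2, 3.9, 5.3]
print(pearson(simulated, implemented), spearman(simulated, implemented))
```

The Pearson coefficient measures how close the points lie to a straight line, whereas the Spearman coefficient only checks that the two sets of scores order the images in the same way.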

Figure 8.

Comparison between and implemented scores.

Figure 9.

Comparison between MOS, , and implemented scores.

The difference between the image quality scores estimated under and those estimated on is caused by several factors, including the following.

- Propagation of 32-bit floating-point truncation errors through the system;

- The difference between the results of the basic functions of the MATLAB and Vivado HLS tools. For example, the grayscale conversion functions, or the resizing functions using bi-cubic interpolation, give slightly different results in MATLAB and Vivado HLS. These small errors are propagated throughout the evaluations and finally cause the differences between the estimated scores shown in the previous figures.
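The first factor can be illustrated with a small experiment. The sketch below accumulates the same values once in 64-bit and once in emulated 32-bit floating point, rounding to single precision after every operation. It is a generic demonstration of how rounding errors build up, not a reproduction of the actual computations in the IP-cores, and the input values are arbitrary.

```python
import struct


def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(values, single_precision):
    """Sum the values, optionally rounding to 32 bits after each addition."""
    total = 0.0
    for v in values:
        if single_precision:
            total = to_float32(total + to_float32(v))
        else:
            total += v
    return total


values = [0.1] * 10000  # arbitrary inputs that are not exact in binary
sum64 = accumulate(values, single_precision=False)
sum32 = accumulate(values, single_precision=True)
print(sum64, sum32, abs(sum64 - sum32))
```

Each individual rounding is tiny, but the gap between the two sums grows with the number of operations, which is the same mechanism by which small per-step errors end up as visible score differences.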

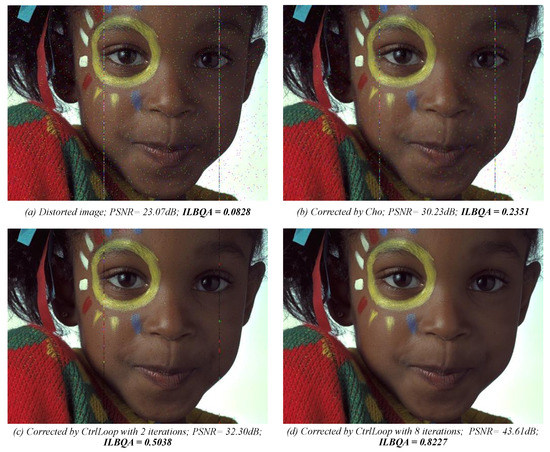

5.3. Visual Assessment

Our NR-IQA process has been used in a control loop process [51] to assess the quality of an image and decide whether that image needs correction. Gaussian noise is inserted in a reference image, resulting in the distorted image illustrated in Figure 10a, which obtains a very low score, as shown in the figure. Figure 10b–d shows the versions of the distorted image in panel (a) corrected by the control loop process based on the image quality assessment. Each panel also presents the between the reference image and the corrected image, as well as the blind image quality () score of each image. Panels (b) and (c) of Figure 10 show the intermediary corrected images, while panel (d) presents the best corrected image produced by the process.
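The general idea of a quality-driven control loop can be sketched as follows. This is a toy illustration and not the process of [51]: the quality proxy (`roughness_score`), the correction step (`smooth`), the target value, and the one-dimensional test signal are all hypothetical stand-ins chosen to keep the example self-contained.

```python
import random


def roughness_score(signal):
    """Hypothetical no-reference proxy: smoother signals score closer to 1."""
    diffs = [abs(b - a) for a, b in zip(signal, signal[1:])]
    return 1.0 / (1.0 + sum(diffs) / len(diffs))


def smooth(signal):
    """One correction step: 3-tap moving average with edge replication."""
    padded = [signal[0]] + list(signal) + [signal[-1]]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) / 3.0
            for i in range(1, len(padded) - 1)]


def control_loop(signal, assess, correct, target, max_iters=20):
    """Correct repeatedly until the score reaches target or stops improving."""
    best, best_score = signal, assess(signal)
    history = [best_score]
    for _ in range(max_iters):
        if best_score >= target:
            break
        candidate = correct(best)
        score = assess(candidate)
        if score <= best_score:
            break  # the last correction did not help; keep the previous result
        best, best_score = candidate, score
        history.append(score)
    return best, best_score, history


rng = random.Random(0)
# Flat signal plus Gaussian noise, standing in for a noisy image.
noisy = [0.5 + rng.gauss(0.0, 0.2) for _ in range(64)]
corrected, final, history = control_loop(noisy, roughness_score, smooth, target=0.95)
print(len(history) - 1, history[0], final)
```

The entries of `history` after the first play the role of the intermediary corrected images, and the returned signal corresponds to the best corrected result. The loop only needs a score for the current image, which is why a no-reference index fits this use.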

Figure 10.

Example of using index in a control loop process.

6. Conclusions

This paper has presented the construction of models for objective assessment of perceived visual quality. The proposed models use non-distortion-specific, no-reference image quality assessment methods. The objective is computed by combining the most significant image features extracted from natural scene statistics () in the spatial domain, gradient magnitude (), Laplacian of Gaussian (), and spectral and spatial entropies (). In our proposal, the training phase has been performed using and process. The index evaluates the image quality using all the selected features in one training phase, while the index uses two training phases. The first phase consists of training the intermediary metrics using the feature classes based on four extraction axes, and the second training phase evaluates the image quality using the intermediary metrics. Different machine learning methods were used to assess image quality, and their performance was compared. The proposed methods, consisting of artificial neural network (), nonlinear polynomial regression (), decision tree (), support vector machine (), and fuzzy logic (), are discussed and compared. Both the stability and the robustness of the designed models are evaluated using a variant of Monte Carlo cross-validation () with 1000 randomly chosen validation image subsets. The accuracy of the produced models has been compared to the results produced by other state-of-the-art - methods. Implementation results on and on the platform demonstrated the best performances for proposed in this paper, which uses two training phases in the modeling process. Future work based on this article includes presenting how to use our image quality assessment indexes in the control loop process for self-healing of the image sensor, as well as implementation on an ASIC and integration of the generated IP-cores in image sensors.

Author Contributions

Conceptualization, G.T.T. and E.S.; methodology, G.T.T. and E.S.; software, G.T.T.; validation, G.T.T. and E.S.; formal analysis, G.T.T. and E.S.; investigation, G.T.T. and E.S.; resources, G.T.T. and E.S.; data curation, G.T.T. and E.S.; writing—original draft preparation, G.T.T.; writing—review and editing, G.T.T. and E.S.; visualization, G.T.T. and E.S.; supervision, E.S.; project administration, E.S.; funding acquisition, E.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lu, L.; Wang, X.; Carneiro, G.; Yang, L. Deep Learning and Convolutional Neural Networks for Medical Imaging and Clinical Informatics; Springer: Berlin/Heidelberg, Germany, 2019.

- Leonardi, M.; Napoletano, P.; Schettini, R.; Rozza, A. No Reference, Opinion Unaware Image Quality Assessment by Anomaly Detection. Sensors 2021, 21, 994.

- Geirhos, R.; Janssen, D.H.; Schütt, H.H.; Rauber, J.; Bethge, M.; Wichmann, F.A. Comparing deep neural networks against humans: Object recognition when the signal gets weaker. arXiv 2017, arXiv:1706.06969.

- Plosz, S.; Varga, P. Security and safety risk analysis of vision guided autonomous vehicles. In Proceedings of the 2018 IEEE Industrial Cyber-Physical Systems (ICPS), Saint Petersburg, Russia, 15–18 May 2018; pp. 193–198.

- Aydin, I.; Othman, N.A. A new IoT combined face detection of people by using computer vision for security application. In Proceedings of the 2017 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 16–17 September 2017; pp. 1–6.

- Song, H.; Nguyen, A.D.; Gong, M.; Lee, S. A review of computer vision methods for purpose on computer-aided diagnosis. J. Int. Soc. Simul. Surg 2016, 3, 2383–5389.

- Kanellakis, C.; Nikolakopoulos, G. Survey on computer vision for UAVs: Current developments and trends. J. Intell. Robot. Syst. 2017, 87, 141–168.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Ponomarenko, N.; Ieremeiev, O.; Lukin, V.; Jin, L.; Egiazarian, K.; Astola, J.; Vozel, B.; Chehdi, K.; Carli, M.; Battisti, F.; et al. A new color image database TID2013: Innovations and results. In International Conference on Advanced Concepts for Intelligent Vision Systems; Springer: Berlin/Heidelberg, Germany, 2013; pp. 402–413.

- Tsai, S.Y.; Li, C.H.; Jeng, C.C.; Cheng, C.W. Quality Assessment during Incubation Using Image Processing. Sensors 2020, 20, 5951.

- Tchendjou, G.T.; Simeu, E.; Lebowsky, F. FPGA implementation of machine learning based image quality assessment. In Proceedings of the 2017 29th International Conference on Microelectronics (ICM), Beirut, Lebanon, 10–13 December 2017; pp. 1–4.

- Wang, Z.; Bovik, A.C.; Lu, L. Why is image quality assessment so difficult? In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Orlando, FL, USA, 13–17 May 2002; Volume 4, p. IV-3313.

- Tchendjou, G.T.; Alhakim, R.; Simeu, E. Fuzzy logic modeling for objective image quality assessment. In Proceedings of the 2016 Conference on Design and Architectures for Signal and Image Processing (DASIP), Rennes, France, 12–14 October 2016; pp. 98–105.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Kang, L.; Ye, P.; Li, Y.; Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1733–1740.

- Ma, J.; Wu, J.; Li, L.; Dong, W.; Xie, X.; Shi, G.; Lin, W. Blind Image Quality Assessment With Active Inference. IEEE Trans. Image Process. 2021, 30, 3650–3663.

- Zhang, W.; Ma, K.; Zhai, G.; Yang, X. Uncertainty-aware blind image quality assessment in the laboratory and wild. IEEE Trans. Image Process. 2021, 30, 3474–3486.

- Ullah, H.; Irfan, M.; Han, K.; Lee, J.W. DLNR-SIQA: Deep Learning-Based No-Reference Stitched Image Quality Assessment. Sensors 2020, 20, 6457.

- Li, C.; Bovik, A.C. Content-partitioned structural similarity index for image quality assessment. Signal Process. Image Commun. 2010, 25, 517–526.

- Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

- Soundararajan, R.; Bovik, A.C. RRED indices: Reduced reference entropic differencing for image quality assessment. IEEE Trans. Image Process. 2012, 21, 517–526.

- Brandão, T.; Queluz, M.P. No-reference image quality assessment based on DCT domain statistics. Signal Process. 2008, 88, 822–833.

- Meesters, L.; Martens, J.B. A single-ended blockiness measure for JPEG-coded images. Signal Process. 2002, 82, 369–387.

- Caviedes, J.; Gurbuz, S. No-reference sharpness metric based on local edge kurtosis. In Proceedings of the International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002; Volume 3, p. III.

- Ye, P.; Doermann, D. No-reference image quality assessment using visual codebooks. IEEE Trans. Image Process. 2012, 21, 3129–3138.

- Moorthy, A.K.; Bovik, A.C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Trans. Image Process. 2011, 20, 3350–3364.

- Zhang, L.; Zhang, L.; Bovik, A.C. A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process. 2015, 24, 2579–2591.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Xue, W.; Mou, X.; Zhang, L.; Bovik, A.C.; Feng, X. Blind image quality assessment using joint statistics of gradient magnitude and Laplacian features. IEEE Trans. Image Process. 2014, 23, 4850–4862.

- Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014, 29, 856–863.

- Ghadiyaram, D.; Bovik, A.C. Perceptual quality prediction on authentically distorted images using a bag of features approach. J. Vis. 2017, 17, 32.

- Kim, J.; Nguyen, A.D.; Lee, S. Deep CNN-based blind image quality predictor. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 11–24.

- Bosse, S.; Maniry, D.; Müller, K.R.; Wiegand, T.; Samek, W. Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans. Image Process. 2017, 27, 206–219.

- Ruderman, D.L. The statistics of natural images. Netw. Comput. Neural Syst. 1994, 5, 517–548.

- Lasmar, N.E.; Stitou, Y.; Berthoumieu, Y. Multiscale skewed heavy tailed model for texture analysis. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 2281–2284.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. Making image quality assessment robust. In Proceedings of the 2012 Conference Record of the Forty Sixth Asilomar Conference on Signals, Systems and Computers (ASILOMAR), Pacific Grove, CA, USA, 4–7 November 2012; pp. 1718–1722.

- Wainwright, M.J.; Schwartz, O. 10 Natural Image Statistics and Divisive. In Probabilistic Models of the Brain: Perception and Neural Function; The MIT Press: Cambridge, MA, USA, 2002; p. 203.

- Lyu, S.; Simoncelli, E.P. Nonlinear image representation using divisive normalization. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Jiang, T.; Hu, X.J.; Yao, X.H.; Tu, L.P.; Huang, J.B.; Ma, X.X.; Cui, J.; Wu, Q.F.; Xu, J.T. Tongue image quality assessment based on a deep convolutional neural network. BMC Med. Inform. Decis. Mak. 2021, 21, 147.

- Tchendjou, G.T.; Simeu, E.; Alhakim, R. Fuzzy logic based objective image quality assessment with FPGA implementation. J. Syst. Archit. 2018, 82, 24–36.

- Ponomarenko, N.; Ieremeiev, O.; Lukin, V.; Egiazarian, K.; Jin, L.; Astola, J.; Vozel, B.; Chehdi, K.; Carli, M.; Battisti, F.; et al. Color image database TID2013: Peculiarities and preliminary results. In Proceedings of the European Workshop on Visual Information Processing (EUVIP), Paris, France, 10–12 June 2013; pp. 106–111.

- Ponomarenko, N.; Ieremeiev, O.; Lukin, V.; Egiazarian, K.; Jin, L.; Astola, J.; Vozel, B.; Chehdi, K.; Carli, M.; Battisti, F.; et al. TID2013 Database. 2013. Available online: http://www.ponomarenko.info/tid2013.htm (accessed on 20 November 2021).

- Sheikh, H.R.; Wang, Z.; Huang, H.; Bovik, A.C. LIVE Image Quality Assessment Database Release 2. 2005. Available online: http://live.ece.utexas.edu/research/quality (accessed on 20 November 2021).

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Ghadiyaram, D.; Bovik, A.C. Massive online crowdsourced study of subjective and objective picture quality. IEEE Trans. Image Process. 2016, 25, 372–387.

- Ghadiyaram, D.; Bovik, A. Live in the Wild Image Quality Challenge Database. 2015. Available online: http://live.ece.utexas.edu/research/ChallengeDB/index.html (accessed on 20 November 2021).

- Xu, Q.S.; Liang, Y.Z. Monte Carlo cross validation. Chemom. Intell. Lab. Syst. 2001, 56, 1–11.

- Kim, J.; Lee, S. Fully deep blind image quality predictor. IEEE J. Sel. Top. Signal Process. 2016, 11, 206–220.

- Xu, J.; Ye, P.; Li, Q.; Du, H.; Liu, Y.; Doermann, D. Blind image quality assessment based on high order statistics aggregation. IEEE Trans. Image Process. 2016, 25, 4444–4457.

- Saad, M.A.; Bovik, A.C.; Charrier, C. Blind image quality assessment: A natural scene statistics approach in the DCT domain. IEEE Trans. Image Process. 2012, 21, 3339–3352.

- Tchendjou, G.T.; Simeu, E. Self-Healing Image Sensor Using Defective Pixel Correction Loop. In Proceedings of the 2019 International Conference on Control, Automation and Diagnosis (ICCAD), Grenoble, France, 2–4 July 2019; pp. 1–6.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).