Hand Gesture Recognition Using EGaIn-Silicone Soft Sensors

Abstract

1. Introduction

- Integration of multiple sensory points to detect multiple joints of a finger; generally, hand gestures involving finger movements require two separate sensors to measure the degree of bending (DoB) of a finger.

- High stretchability; owing to the material properties of soft silicone, the proposed soft sensor has higher stretchability and robustness than the piezoelectric film widely used as the sensor of data gloves in hand gesture recognition applications.

- Capability to measure two properties, pressure and strain; the proposed EGaIn-silicone microchannel sensor responds both to applied pressure and to stretching.

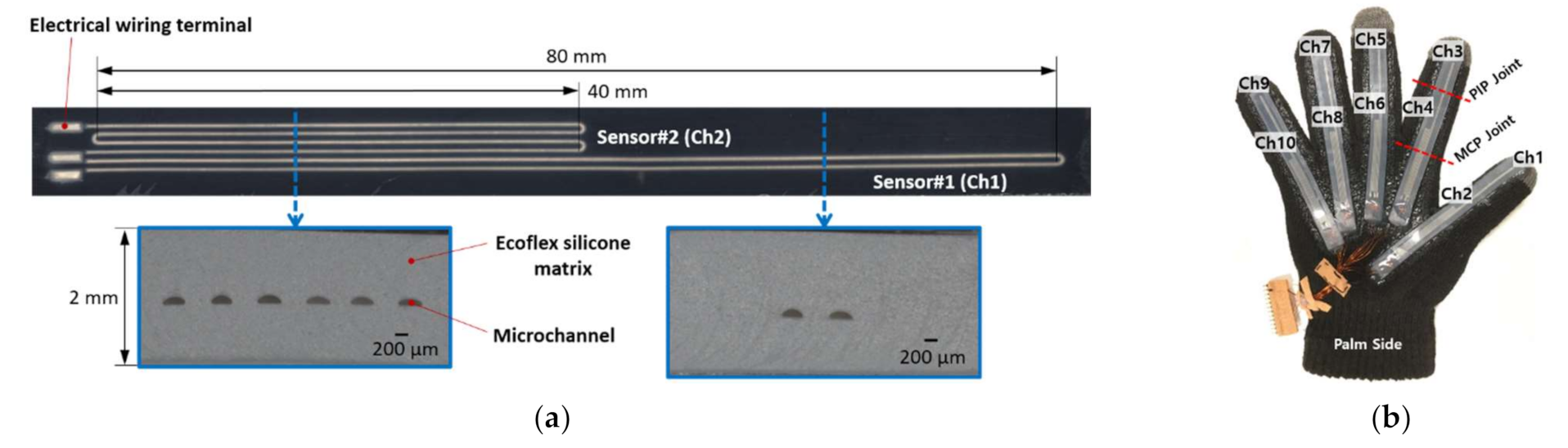

- This study developed an EGaIn-silicone-based soft sensor for building a data glove. The proposed sensor was designed for (1) the integration of multiple sensory points, (2) high stretchability, and (3) the capability to measure two properties. Hand gestures involving finger movements generally require at least two separate sensory points to measure the DoB of a finger. With an integrated sensor design, the sensors may be easier to install on a data glove; consequently, the complexity and the defect rate of the data glove manufacturing process may decrease.

- The performance of the proposed soft sensor (integrated into the data glove) was evaluated in a real application: hand gesture classification. We collected a hand gesture dataset from human subjects and evaluated the performance of the data glove with six traditional classification algorithms. By interpreting the results, we discuss the functionality of the proposed sensor for hand gesture recognition.

2. Materials and Methods

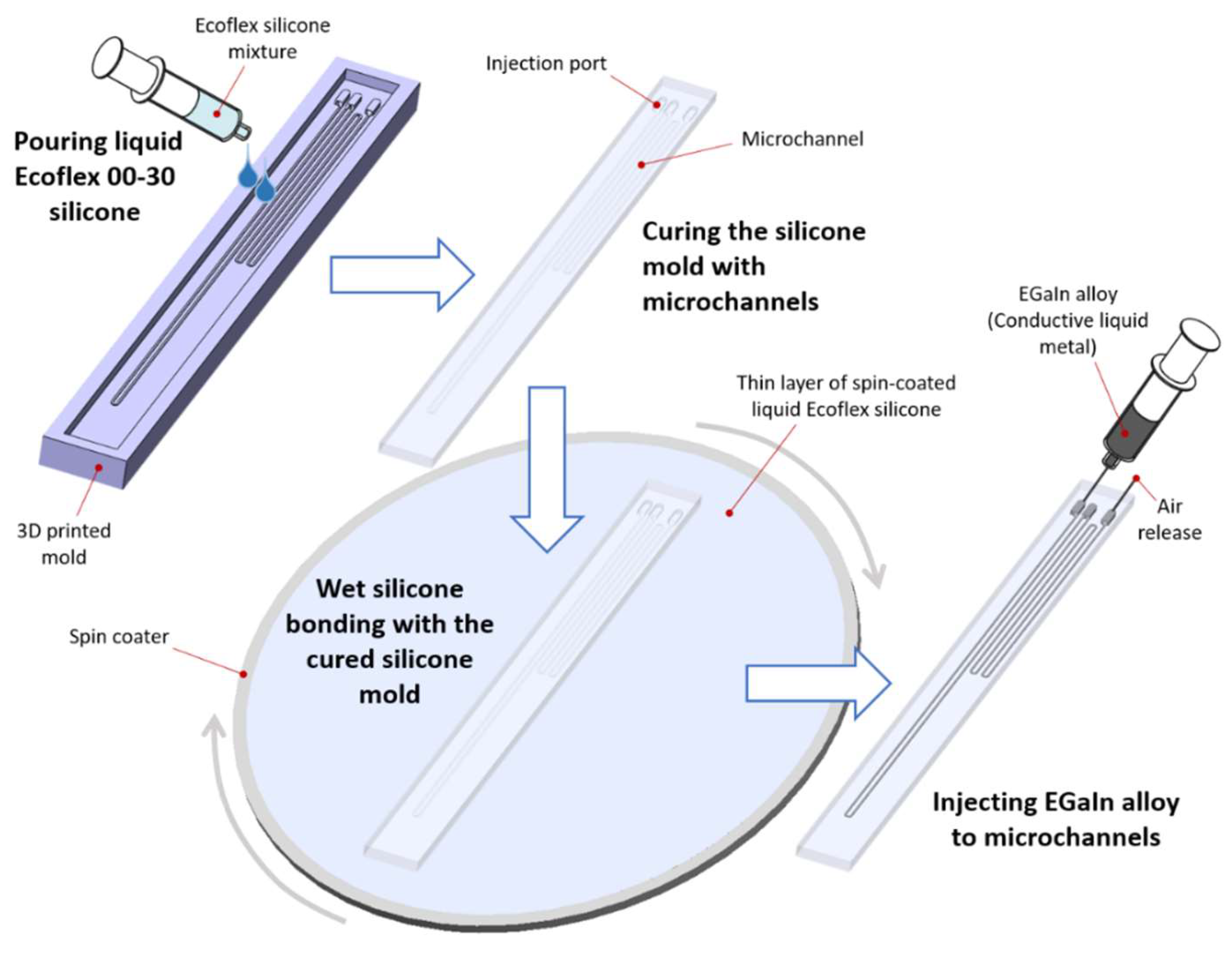

2.1. EGaIn-Silicone Sensor Fabrication Process

2.2. Resistance Change Measurement

2.3. Data Acquisition of Hand Gestures

1. Start at Gesture #1 (Rest).

2. Hold Gesture #1 for 7 s.

3. Return to Gesture #1 for rest.

4. Prepare Gesture #2 (Hand Close).

5. Perform Gesture #2.

6. Hold Gesture #2 for 7 s.

7. Return to Gesture #1 for rest.

8. Repeat steps 4 to 7 for Gestures #3 to #11.

9. Prepare Gesture #12 (Num 9).

10. Perform Gesture #12.

11. Hold Gesture #12 for 7 s.

12. Return to Gesture #1 for rest.

13. Rest for a while (one session is complete).
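To make the repetition of steps 4 to 7 explicit, the sketch below expands one session into its ordered steps. It is a hypothetical Python illustration: the function name `session_schedule` and the step labels are ours, and only the 7 s hold duration comes from the protocol; the durations of the other actions are not stated and are left as `None`.

```python
# Hypothetical sketch: expand one data-acquisition session into ordered
# (action, gesture_number, duration_s) steps. Only the 7 s hold comes from
# the protocol; other durations are unknown and left as None.

HOLD_S = 7  # hold duration stated in the protocol


def session_schedule(n_gestures=12):
    """Return the ordered steps of one session; Gesture #1 is Rest."""
    steps = [
        ("start", 1, None),   # step 1: start at Gesture #1 (Rest)
        ("hold", 1, HOLD_S),  # step 2: hold Gesture #1
        ("return", 1, None),  # step 3: return to Gesture #1 for rest
    ]
    # Steps 4-7 for Gesture #2, repeated for #3-#11 (step 8),
    # and once more for Gesture #12 (steps 9-12).
    for g in range(2, n_gestures + 1):
        steps += [
            ("prepare", g, None),
            ("perform", g, None),
            ("hold", g, HOLD_S),
            ("return", 1, None),  # back to Gesture #1 (Rest)
        ]
    steps.append(("rest", None, None))  # step 13: rest after the session
    return steps


if __name__ == "__main__":
    holds = [s for s in session_schedule() if s[0] == "hold"]
    print(len(holds), sum(d for _, _, d in holds))
```

One session therefore contains 12 holds, or 84 s of held posture in total.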

2.4. Analysis of Hand Gesture Recognition

- (1) Preprocessing

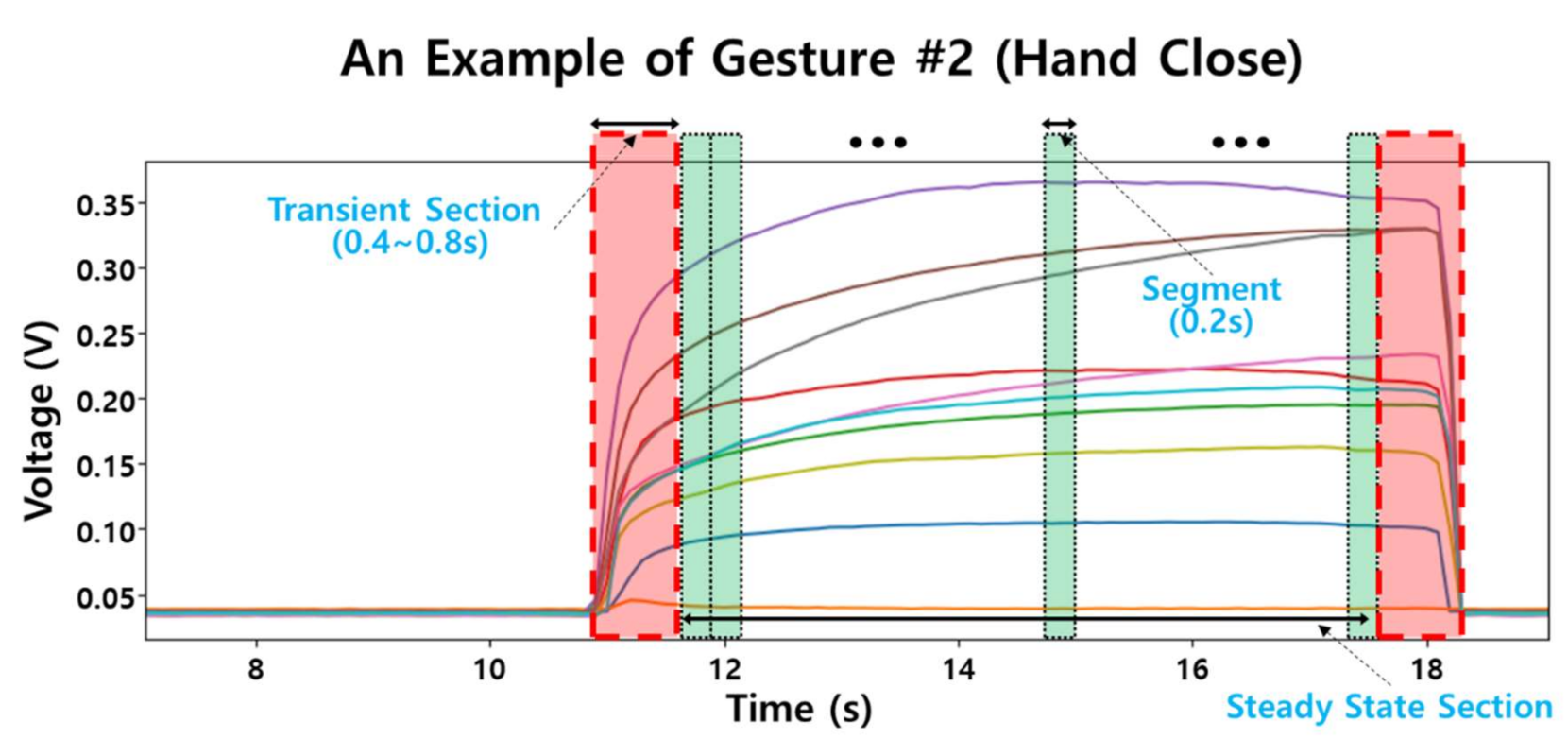

- The preprocessing procedure included two tasks: removing the start and end transient sections from the analysis, and segmenting the steady-state section with a fixed window length for feature extraction. Each transient section lasted about 0.4 s to 0.8 s; it was caused mainly by the motion transition from Gesture #1 (Rest) to a specific gesture and from that gesture back to Gesture #1 (Rest). The transient sections, identified by visual inspection by a trained investigator, were removed from the analysis. The investigator visually inspected the voltage signals of the 10 channels to (1) find the onset and end of each gesture trial, and (2) determine the transient sections, about 0.4–0.8 s long, after the onset and before the end, as shown in Figure 6.
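Because the transient sections were marked by visual inspection, the trimming itself reduces to a slice once the onset and end of a trial are known. The following Python sketch assumes those sample indices are already available and, as a simplification, removes a fixed 0.8 s (the upper end of the reported range) from each side; the helper `steady_state` and the fixed trim are hypothetical, whereas the investigator chose each boundary per trial.

```python
FS_HZ = 1000  # sampling rate stated in the text (1 kHz)


def steady_state(signal, onset, end, trim_s=0.8, fs=FS_HZ):
    """Return the steady-state part of one channel of one gesture trial.

    onset, end: sample indices of the trial (found by visual inspection).
    trim_s: transient length removed after onset and before end; a fixed
            value here is a simplifying assumption (hypothetical).
    """
    trim = int(round(trim_s * fs))  # seconds -> samples
    start, stop = onset + trim, end - trim
    if start >= stop:
        raise ValueError("trial too short for the requested trim")
    return signal[start:stop]


if __name__ == "__main__":
    trial = list(range(7000))  # a 7,000-sample (7 s) trial of dummy values
    print(len(steady_state(trial, 0, 7000)))
```

For a 7,000-sample trial at 1 kHz, trimming 0.8 s from each end leaves 5,400 samples (5.4 s) of steady state.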

- (2) Segmentation

- For segmentation, the steady-state section was divided into non-overlapping windows of 200 milliseconds (ms). The 200 ms window length was selected empirically, by trial and error, to balance reasonable accuracy against the train/test sample size [48].
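The non-overlapping 200 ms windowing can be sketched as below. This is an illustrative Python helper (`window_bounds` is a hypothetical name); how a leftover segment shorter than 200 ms was handled is not stated, so dropping it here is an assumption.

```python
def window_bounds(n_samples, win_ms=200, fs=1000):
    """Return (start, stop) sample indices of non-overlapping windows.

    win_ms and fs default to the 200 ms window and 1 kHz rate in the text.
    A trailing partial window is dropped (assumption, not from the source).
    """
    win = win_ms * fs // 1000  # 200 ms at 1 kHz -> 200 samples
    return [(s, s + win) for s in range(0, n_samples - win + 1, win)]


if __name__ == "__main__":
    print(len(window_bounds(5000)), len(window_bounds(5100)))
```

With this rule a 5 s steady-state section gives 25 windows, and a 5.1 s section also gives 25, because the trailing 100 ms is discarded.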

- (3) Feature extraction

- For feature extraction, the mean value of each segment was calculated as a feature; each segment contained 200 samples, given the 200 ms window length and the 1 kHz sampling rate. For example, a hand gesture with a 5 s steady-state section yielded 25 segments per channel, so this gesture generated a 25 × 10 feature matrix: 25 samples with 10 feature dimensions, one per sensor channel. Because this averaging already smooths the signal, no filtering was applied to the raw voltage data during preprocessing. The 15 subjects generated 36,323 samples in total. The dataset included 10 feature columns of floating-point voltage values (V). There were 12 classes in total, corresponding to the 12 static hand gestures investigated in this study.
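The mean-value feature and the 25 × 10 example can be checked with a short Python sketch. It assumes each trial is stored as one list of samples per channel (10 channels) and uses the 200-sample window from the text; `mean_features` is a hypothetical helper, and dropping a trailing partial window is an assumption.

```python
def mean_features(trial, win=200):
    """Compute window-mean features for one gesture trial.

    trial: list of channels, each a list of voltage samples at 1 kHz.
    win:   samples per window (200 ms at 1 kHz).
    Returns a list of rows (one per window), each with one mean per channel.
    """
    n = min(len(ch) for ch in trial)  # common length across channels
    n_win = n // win                  # trailing partial window dropped
    return [
        [sum(ch[w * win:(w + 1) * win]) / win for ch in trial]
        for w in range(n_win)
    ]


if __name__ == "__main__":
    # Ten constant channels of 5,000 samples (5 s) of dummy data
    trial = [[float(c)] * 5000 for c in range(10)]
    feats = mean_features(trial)
    print(len(feats), len(feats[0]))
```

Feeding ten constant channels of 5,000 samples returns 25 rows of 10 means, matching the 25 × 10 feature matrix in the example above.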

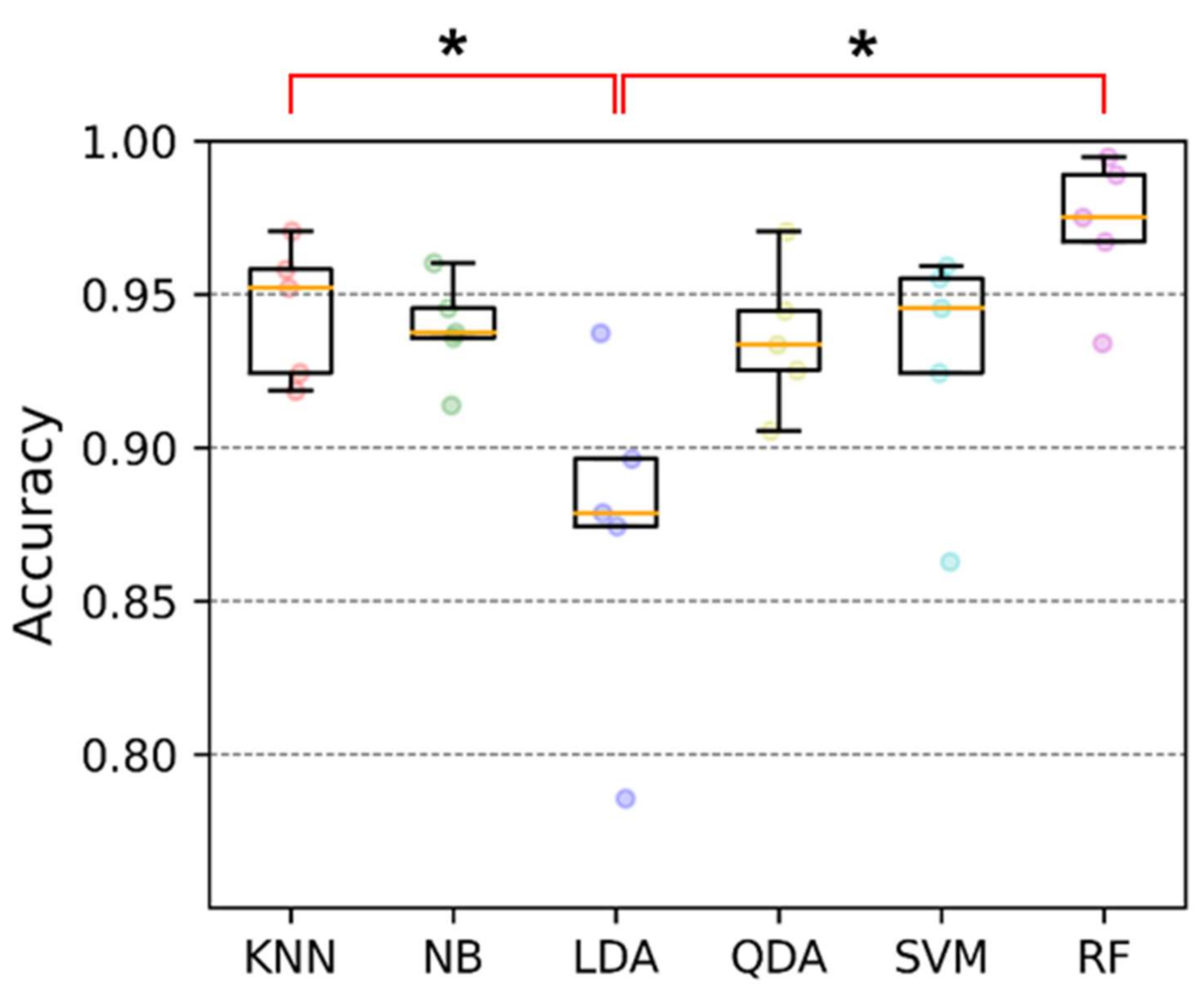

- (4) Classification

- We chose six traditional machine learning techniques: K-Nearest Neighbors (KNN) [49], Support Vector Machine (SVM) [50], Linear Discriminant Analysis (LDA) [51], Quadratic Discriminant Analysis (QDA) [51], Random Forest (RF) [52], and Naïve Bayes (NB) [53]. We investigated these traditional classifiers because we wanted to interpret the results by reasoning about the behavior of each classifier, in a white-box (interpretable-model) manner. For this reason, we did not include black-box classifiers such as artificial neural networks (ANNs) [50] and deep learning architectures [54] in this study. The model parameters of the classifiers were estimated by grid search. The estimated parameters were: KNN: ‘k’ = 2, where ‘k’ is the number of neighbors; LDA: ‘n_components’ = 1, where ‘n_components’ is the number of components; QDA: ‘reg_param’ = 0.001, where ‘reg_param’ regularizes the per-class covariance estimates; SVM: ‘C’ = 107, ‘gamma’ = 0.001, and kernel = radial basis function, where ‘C’ is the regularization parameter and ‘gamma’ is the kernel coefficient; RF: ‘n_estimators’ = 1500, where ‘n_estimators’ is the number of trees in the forest. To train and test the classifiers, we split the data 80%/20% at the subject level: the samples from 12 subjects were used for training and the samples from the remaining three subjects for testing, with five-fold cross-validation. We assumed that five-fold cross-validation with this subject-level separation divides the samples appropriately for training and reliable testing of the classifiers, while also allowing us to assess the effect of inter-subject variation on hand gesture classification.
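The subject-level five-fold scheme (12 training subjects and 3 test subjects per fold) can be sketched in Python as follows. The round-robin assignment of subjects to folds is an assumption, since the text does not report which subjects were grouped together; `subject_folds` is a hypothetical helper.

```python
def subject_folds(subject_ids, n_folds=5):
    """Split sample indices into folds whose test subjects are disjoint.

    subject_ids: subject label of each sample (e.g., 1..15).
    Subjects are assigned to folds round-robin in sorted order, an
    assumption; the actual grouping is not reported in the text.
    Returns a list of (train_indices, test_indices) pairs.
    """
    subjects = sorted(set(subject_ids))
    groups = [subjects[i::n_folds] for i in range(n_folds)]  # 15 -> 5 x 3
    folds = []
    for test_subjects in groups:
        test_set = set(test_subjects)
        train = [i for i, s in enumerate(subject_ids) if s not in test_set]
        test = [i for i, s in enumerate(subject_ids) if s in test_set]
        folds.append((train, test))
    return folds


if __name__ == "__main__":
    ids = [s for s in range(1, 16) for _ in range(4)]  # 15 subjects, 4 samples each
    for train, test in subject_folds(ids):
        print(sorted({ids[i] for i in test}))
```

In scikit-learn, `GroupKFold(n_splits=5)` from `sklearn.model_selection` produces the same kind of subject-disjoint split when the subject IDs are passed as `groups`; whether the authors used it is not stated.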

- (5) Performance evaluation

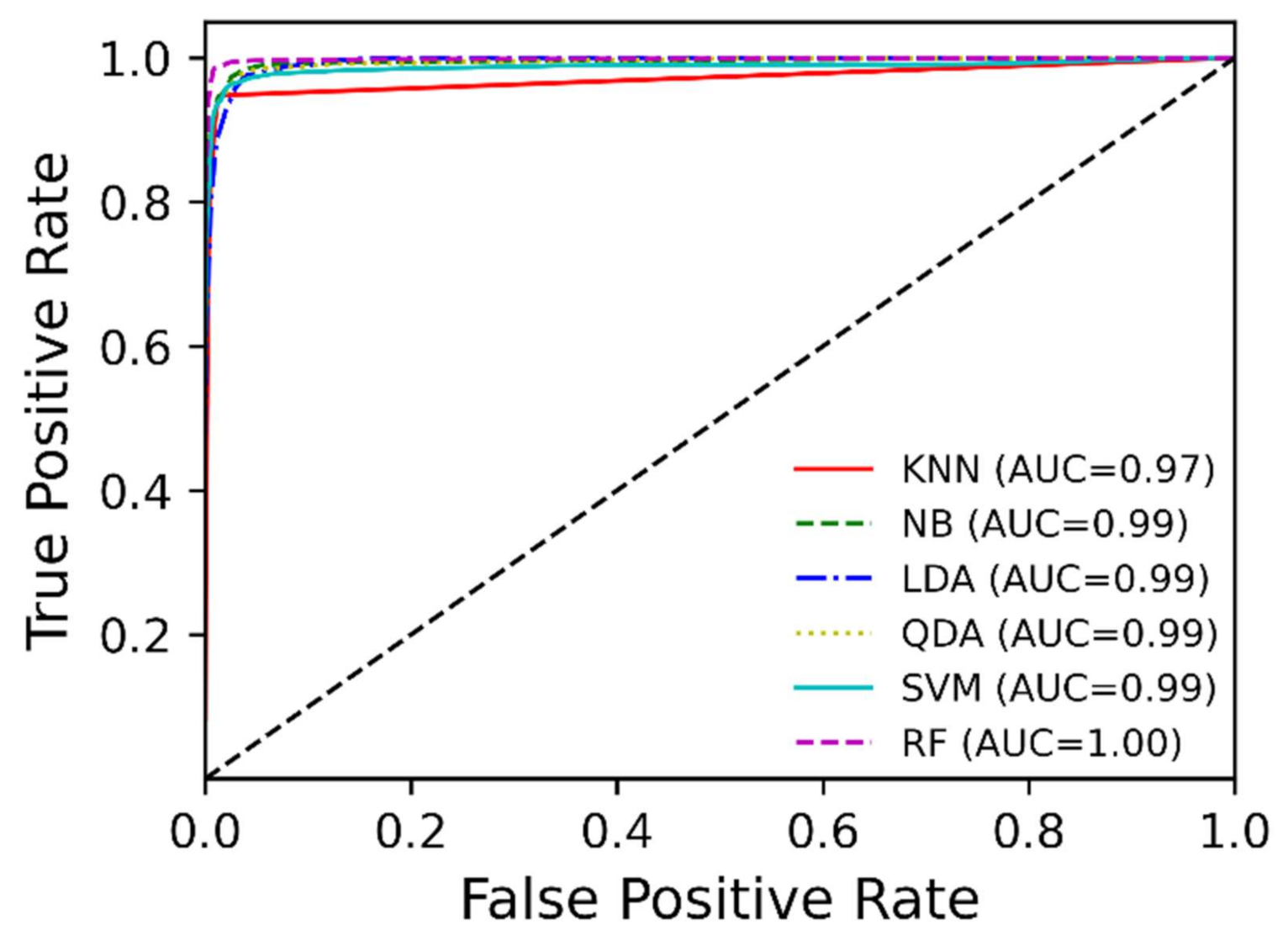

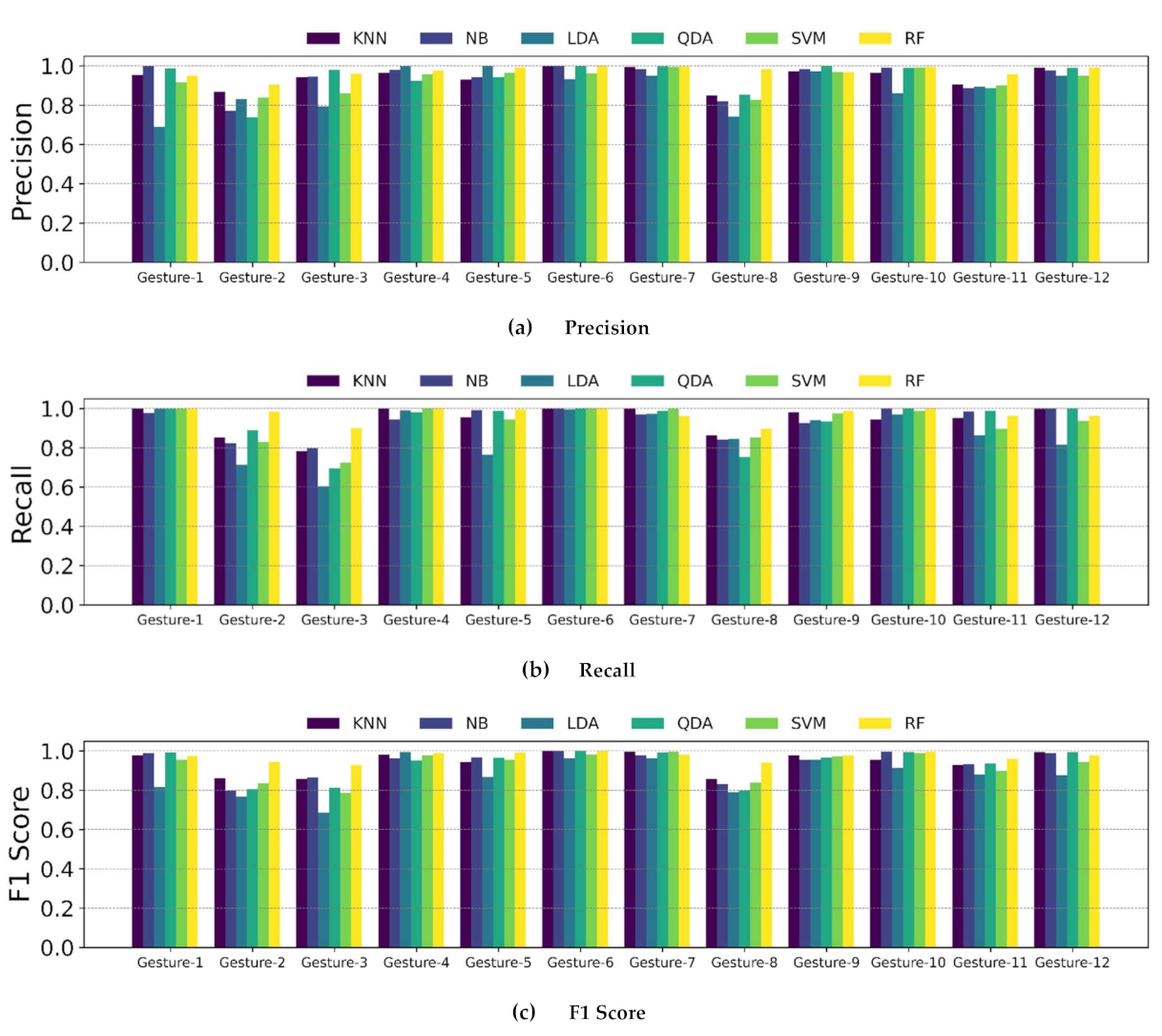

- For the performance evaluation, we calculated the accuracy, recall, precision, and F1 score of each classifier. Confusion matrices and ROC curves were also analyzed.
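The metrics can be computed from a confusion matrix as in the Python sketch below. The text does not state how the per-class scores were averaged over the 12 classes; the sketch assumes support-weighted averaging, which is consistent with the results table, where recall equals accuracy for every classifier (support-weighted recall always reduces to accuracy). `metrics_from_confusion` is a hypothetical helper.

```python
def metrics_from_confusion(cm):
    """Accuracy and support-weighted precision, recall, and F1.

    cm[i][j] = number of samples of true class i predicted as class j.
    Support-weighted averaging is an assumption, not stated in the text.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    support = [sum(cm[i]) for i in range(k)]                       # row sums
    predicted = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums
    acc = sum(cm[i][i] for i in range(k)) / total
    prec = rec = f1 = 0.0
    for c in range(k):
        tp = cm[c][c]
        p = tp / predicted[c] if predicted[c] else 0.0
        r = tp / support[c] if support[c] else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        w = support[c] / total  # weight = class share of all samples
        prec += w * p
        rec += w * r
        f1 += w * f
    return {"accuracy": acc, "precision": prec, "recall": rec, "f1": f1}


if __name__ == "__main__":
    toy = [[8, 2, 0], [1, 9, 0], [0, 3, 7]]  # toy data, not study data
    print(metrics_from_confusion(toy))
```

For example, the toy matrix `[[8, 2, 0], [1, 9, 0], [0, 3, 7]]` gives an accuracy of 0.8 and a weighted recall of 0.8 (up to floating-point rounding).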

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Prieur, J.; Barbu, S.; Blois-Heulin, C.; Lemasson, A. The origins of gestures and language: History, current advances and proposed theories. Biol. Rev. 2020, 95, 531–554.

2. Cheok, M.J.; Omar, Z.; Jaward, M.H. A review of hand gesture and sign language recognition techniques. Int. J. Mach. Learn. Cybern. 2019, 10, 131–153.

3. Hu, B.; Wang, J. Deep Learning Based Hand Gesture Recognition and UAV Flight Controls. Int. J. Autom. Comput. 2020, 17, 17–29.

4. Shin, S.; Tafreshi, R.; Langari, R. EMG and IMU based real-time HCI using dynamic hand gestures for a multiple-DoF robot arm. J. Intell. Fuzzy Syst. 2018, 35, 861–876.

5. Kim, M.; Choi, S.H.; Park, K.-B.; Lee, J.Y. User Interactions for Augmented Reality Smart Glasses: A Comparative Evaluation of Visual Contexts and Interaction Gestures. Appl. Sci. 2019, 9, 3171.

6. Rautaray, S.S.; Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 2015, 43, 1–54.

7. Al-Shamayleh, A.S.; Ahmad, R.; Abushariah, M.A.M.; Alam, K.A.; Jomhari, N. A systematic literature review on vision based gesture recognition techniques. Multimed. Tools Appl. 2018, 77, 28121–28184.

8. Chen, W.; Yu, C.; Tu, C.; Lyu, Z.; Tang, J.; Ou, S.; Fu, Y.; Xue, Z. A Survey on Hand Pose Estimation with Wearable Sensors and Computer-Vision-Based Methods. Sensors 2020, 20, 1074.

9. Ahmed, M.A.; Zaidan, B.B.; Zaidan, A.A.; Salih, M.M.; Lakulu, M.M.B. A Review on Systems-Based Sensory Gloves for Sign Language Recognition State of the Art between 2007 and 2017. Sensors 2018, 18, 2208.

10. Fang, B.; Lv, Q.; Shan, J.; Sun, F.; Liu, H.; Guo, D.; Zhao, Y. Dynamic Gesture Recognition Using Inertial Sensors-based Data Gloves. In Proceedings of the 2019 IEEE 4th International Conference on Advanced Robotics and Mechatronics (ICARM), Toyonaka, Japan, 3–5 July 2019; pp. 390–395.

11. Jaramillo-Yánez, A.; Benalcázar, M.E.; Mena-Maldonado, E. Real-Time Hand Gesture Recognition Using Surface Electromyography and Machine Learning: A Systematic Literature Review. Sensors 2020, 20, 2467.

12. Su, H.; Ovur, S.E.; Zhou, X.; Qi, W.; Ferrigno, G.; De Momi, E. Depth vision guided hand gesture recognition using electromyographic signals. Adv. Robot. 2020, 34, 985–997.

13. Kisa, D.H.; Ozdemir, M.A.; Guren, O.; Akan, A. EMG based Hand Gesture Classification using Empirical Mode Decomposition Time-Series and Deep Learning. In Proceedings of the 2020 Medical Technologies Congress (TIPTEKNO), Online, 19–20 November 2020; pp. 1–4.

14. Zhang, Y.; Chen, Y.; Yu, H.; Yang, X.; Lu, W. Learning Effective Spatial–Temporal Features for sEMG Armband-Based Gesture Recognition. IEEE Internet Things J. 2020, 7, 6979–6992.

15. Chossat, J.-B.; Tao, Y.; Duchaine, V.; Park, Y.-L. Wearable soft artificial skin for hand motion detection with embedded microfluidic strain sensing. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 30 May 2015; pp. 2568–2573.

16. Xu, S.; Vogt, D.M.; Hsu, W.-H.; Osborne, J.; Walsh, T.; Foster, J.R.; Sullivan, S.K.; Smith, V.C.; Rousing, A.W.; Goldfield, E.C.; et al. Biocompatible Soft Fluidic Strain and Force Sensors for Wearable Devices. Adv. Funct. Mater. 2019, 29, 1807058.

17. Shin, H.-S.; Ryu, J.; Majidi, C.; Park, Y.-L. Enhanced performance of microfluidic soft pressure sensors with embedded solid microspheres. J. Micromech. Microeng. 2016, 26, 025011.

18. Dickey, M.D. Stretchable and Soft Electronics using Liquid Metals. Adv. Mater. 2017, 29, 1606425.

19. Choi, D.Y.; Kim, M.H.; Oh, Y.S.; Jung, S.-H.; Jung, J.H.; Sung, H.J.; Lee, H.W.; Lee, H.M. Highly Stretchable, Hysteresis-Free Ionic Liquid-Based Strain Sensor for Precise Human Motion Monitoring. ACS Appl. Mater. Interfaces 2017, 9, 1770–1780.

20. Park, Y.L.; Chen, B.R.; Wood, R.J. Design and fabrication of soft artificial skin using embedded microchannels and liquid conductors. IEEE Sens. J. 2012, 12, 2711–2718.

21. Zhang, S.-H.; Wang, F.-X.; Li, J.-J.; Peng, H.-D.; Yan, J.-H.; Pan, G.-B. Wearable Wide-Range Strain Sensors Based on Ionic Liquids and Monitoring of Human Activities. Sensors 2017, 17, 2621.

22. Zhang, Y.; Huang, Y.; Sun, X.; Zhao, Y.; Guo, X.; Liu, P.; Liu, C.; Zhang, Y. Static and Dynamic Human Arm/Hand Gesture Capturing and Recognition via Multiinformation Fusion of Flexible Strain Sensors. IEEE Sens. J. 2020, 20, 6450–6459.

23. Dickey, M.D.; Chiechi, R.C.; Larsen, R.J.; Weiss, E.A.; Weitz, D.A.; Whitesides, G.M. Eutectic Gallium-Indium (EGaIn): A Liquid Metal Alloy for the Formation of Stable Structures in Microchannels at Room Temperature. Adv. Funct. Mater. 2008, 18, 1097–1104.

24. Paik, J.K.; Kramer, R.K.; Wood, R.J. Stretchable circuits and sensors for robotic origami. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 414–420.

25. Boley, J.W.; White, E.L.; Chiu, G.T.C.; Kramer, R.K. Direct Writing of Gallium-Indium Alloy for Stretchable Electronics. Adv. Funct. Mater. 2014, 24, 3501–3507.

26. So, J.-H.; Thelen, J.; Qusba, A.; Hayes, G.J.; Lazzi, G.; Dickey, M.D. Reversibly Deformable and Mechanically Tunable Fluidic Antennas. Adv. Funct. Mater. 2009, 19, 3632–3637.

27. Rashid, A.; Hasan, O. Wearable technologies for hand joints monitoring for rehabilitation: A survey. Microelectron. J. 2019, 88, 173–183.

28. Zheng, Y.; Peng, Y.; Wang, G.; Liu, X.; Dong, X.; Wang, J. Development and evaluation of a sensor glove for hand function assessment and preliminary attempts at assessing hand coordination. Measurement 2016, 93, 1–12.

29. Shen, Z.; Yi, J.; Li, X.; Mark, L.H.P.; Hu, Y.; Wang, Z. A soft stretchable bending sensor and data glove applications. In Proceedings of the 2016 IEEE International Conference on Real-time Computing and Robotics (RCAR), Angkor Wat, Cambodia, 6–9 June 2016; pp. 88–93.

30. Ciotti, S.; Battaglia, E.; Carbonaro, N.; Bicchi, A.; Tognetti, A.; Bianchi, M. A Synergy-Based Optimally Designed Sensing Glove for Functional Grasp Recognition. Sensors 2016, 16, 811.

31. Saggio, G. A novel array of flex sensors for a goniometric glove. Sens. Actuators A Phys. 2014, 205, 119–125.

32. Bianchi, M.; Haschke, R.; Büscher, G.; Ciotti, S.; Carbonaro, N.; Tognetti, A. A Multi-Modal Sensing Glove for Human Manual-Interaction Studies. Electronics 2016, 5, 42.

33. Michaud, H.O.; Dejace, L.; de Mulatier, S.; Lacour, S.P. Design and functional evaluation of an epidermal strain sensing system for hand tracking. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 3186–3191.

34. Park, W.; Ro, K.; Kim, S.; Bae, J. A Soft Sensor-Based Three-Dimensional (3-D) Finger Motion Measurement System. Sensors 2017, 17, 420.

35. Atalay, A.; Sanchez, V.; Atalay, O.; Vogt, D.M.; Haufe, F.; Wood, R.J.; Walsh, C.J. Batch Fabrication of Customizable Silicone-Textile Composite Capacitive Strain Sensors for Human Motion Tracking. Adv. Mater. Technol. 2017, 2, 1700136.

36. Ryu, H.; Park, S.; Park, J.-J.; Bae, J. A knitted glove sensing system with compression strain for finger movements. Smart Mater. Struct. 2018, 27, 055016.

37. Glauser, O.; Panozzo, D.; Hilliges, O.; Sorkine-Hornung, O. Deformation Capture via Soft and Stretchable Sensor Arrays. ACM Trans. Graph. 2019, 38, 1–16.

38. Yang, C.-C.; Hsu, Y.-L. A Review of Accelerometry-Based Wearable Motion Detectors for Physical Activity Monitoring. Sensors 2010, 10, 7772–7788.

39. Hsiao, P.C.; Yang, S.Y.; Lin, B.S.; Lee, I.J.; Chou, W. Data glove embedded with 9-axis IMU and force sensing sensors for evaluation of hand function. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS), Milan, Italy, 25–29 August 2015; pp. 4631–4634.

40. Wu, J.; Huang, J.; Wang, Y.; Xing, K. RLSESN-based PID adaptive control for a novel wearable rehabilitation robotic hand driven by PM-TS actuators. Int. J. Intell. Comput. Cybern. 2012, 5, 91–110.

41. Chen, K.-Y.; Patel, S.N.; Keller, S. Finexus: Tracking Precise Motions of Multiple Fingertips Using Magnetic Sensing. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, Santa Clara, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016; pp. 1504–1514.

42. Yeo, J.C.; Yap, H.K.; Xi, W.; Wang, Z.; Yeow, C.-H.; Lim, C.T. Flexible and Stretchable Strain Sensing Actuator for Wearable Soft Robotic Applications. Adv. Mater. Technol. 2016, 1, 1600018.

43. Kim, S.; Jeong, D.; Oh, J.; Park, W.; Bae, J. A Novel All-in-One Manufacturing Process for a Soft Sensor System and its Application to a Soft Sensing Glove. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7004–7009.

44. Nassour, J.; Amirabadi, H.G.; Weheabby, S.; Al Ali, A.; Lang, H.; Hamker, F. A Robust Data-Driven Soft Sensory Glove for Human Hand Motions Identification and Replication. IEEE Sens. J. 2020, 20, 12972–12979.

45. Gallium-Indium eutectic. Available online: https://pubchem.ncbi.nlm.nih.gov/substance/24872973 (accessed on 2 February 2021).

46. Stratasys. Available online: https://www.stratasys.com/3d-printers/objet-350-500-connex3 (accessed on 2 February 2021).

47. Park, Y.-L.; Tepayotl-Ramirez, D.; Wood, R.J.; Majidi, C. Influence of cross-sectional geometry on the sensitivity and hysteresis of liquid-phase electronic pressure sensors. Appl. Phys. Lett. 2012, 101, 191904.

48. Oskoei, M.A.; Hu, H. Support vector machine-based classification scheme for myoelectric control applied to upper limb. IEEE Trans. Biomed. Eng. 2008, 55, 1956–1965.

49. Fix, E.; Hodges, J.L. Discriminatory Analysis. Nonparametric Discrimination: Consistency Properties. Int. Stat. Rev./Rev. Int. Stat. 1989, 57, 238.

50. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006; ISBN 978-0-387-31073-2.

51. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Series in Statistics; Springer: New York, NY, USA, 2009; ISBN 978-0-387-84858-7.

52. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

53. Zhang, H. Exploring conditions for the optimality of naive Bayes. Int. J. Pattern Recognit. Artif. Intell. 2005, 19, 183–198.

54. Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2021, 151, 107398.

55. Shukor, A.Z.; Miskon, M.F.; Jamaluddin, M.H.; bin Ali@Ibrahim, F.; Asyraf, M.F.; Bahar, M.B.B. A New Data Glove Approach for Malaysian Sign Language Detection. Procedia Comput. Sci. 2015, 76, 60–67.

56. Saggio, G.; Cavallo, P.; Ricci, M.; Errico, V.; Zea, J.; Benalcázar, M.E. Sign Language Recognition Using Wearable Electronics: Implementing k-Nearest Neighbors with Dynamic Time Warping and Convolutional Neural Network Algorithms. Sensors 2020, 20, 3879.

57. Pezzuoli, F.; Corona, D.; Corradini, M.L. Recognition and Classification of Dynamic Hand Gestures by a Wearable Data-Glove. SN Comput. Sci. 2021, 2, 5.

58. Huang, X.; Wang, Q.; Zang, S.; Wan, J.; Yang, G.; Huang, Y.; Ren, X. Tracing the Motion of Finger Joints for Gesture Recognition via Sewing RGO-Coated Fibers Onto a Textile Glove. IEEE Sens. J. 2019, 19, 9504–9511.

59. Mummadi, C.; Leo, F.; Verma, K.; Kasireddy, S.; Scholl, P.; Kempfle, J.; Laerhoven, K. Real-Time and Embedded Detection of Hand Gestures with an IMU-Based Glove. Informatics 2018, 5, 28.

60. Wong, W.K.; Juwono, F.H.; Khoo, B.T.T. Multi-Features Capacitive Hand Gesture Recognition Sensor: A Machine Learning Approach. IEEE Sens. J. 2021, 21, 8441–8450.

61. James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning; Springer Texts in Statistics; Springer: New York, NY, USA, 2013; Volume 103, ISBN 978-1-4614-7137-0.

| Age [yr] | Gender (M: Male, F: Female) | Height [cm] | Weight [kg] |
|---|---|---|---|
| 32 ± 7 | M: 13, F: 2 | 172.0 ± 7.6 | 71.4 ± 11.5 |

| Classifier | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| KNN | 94.5% ± 2.2% | 95.0% ± 2.0% | 94.5% ± 2.2% | 94.4% ± 2.3% |
| NB | 93.9% ± 1.7% | 94.4% ± 1.7% | 93.9% ± 1.7% | 93.8% ± 1.7% |
| LDA | 87.4% ± 5.6% | 89.5% ± 4.1% | 87.4% ± 5.6% | 87.1% ± 5.7% |
| QDA | 93.6% ± 2.4% | 94.3% ± 2.1% | 93.6% ± 2.4% | 93.4% ± 2.5% |
| SVM | 92.9% ± 4.0% | 93.5% ± 3.3% | 92.9% ± 4.0% | 92.7% ± 4.0% |
| RF | 97.3% ± 2.4% | 97.6% ± 1.9% | 97.3% ± 2.4% | 97.2% ± 2.4% |

| Reference | Sensor | Raw Data (Channels) | # Gestures | # Users | Accuracy |
|---|---|---|---|---|---|
| Shukor et al. [55] | Tilt | 10 (tilt) | 9 | 4 | 89% |
| Saggio et al. [56] | Flex (glove) + IMU (arm) | 10 (flex) + 6 (IMU) | 10 | 7 | 98% |
| Pezzuoli et al. [57] | Flex (glove) + IMU (arm) | 10 (flex) + 2 (IMU) | 27 | 5 | 99% |
| Huang et al. [58] | Reduced Graphene Oxide (RGO)-coated fibers | 10 (flex) | 10 | 4 | 99% |
| Nassour et al. [44] | Potassium Iodide (KI)-Glycerol (Gly) + Conductive Liquid | 14 (flex) | 15 | 1 | 89% |
| Ciotti et al. [30] | Knitted Piezoresistive Fabrics | 5 (stretch) | 8 | 5 | 98% |
| Mummadi et al. [59] | IMU | 5 (IMU) | 22 | 57 | 92% |
| Wong et al. [60] | Capacitive | 5 (capacitive) | 26 | 10 | 99% |
| This Study | EGaIn Microchannels | 10 (stretch) | 12 | 15 | 97% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shin, S.; Yoon, H.U.; Yoo, B. Hand Gesture Recognition Using EGaIn-Silicone Soft Sensors. Sensors 2021, 21, 3204. https://doi.org/10.3390/s21093204