Can Markerless Pose Estimation Algorithms Estimate 3D Mass Centre Positions and Velocities during Linear Sprinting Activities?

Abstract

1. Introduction

2. Materials and Methods

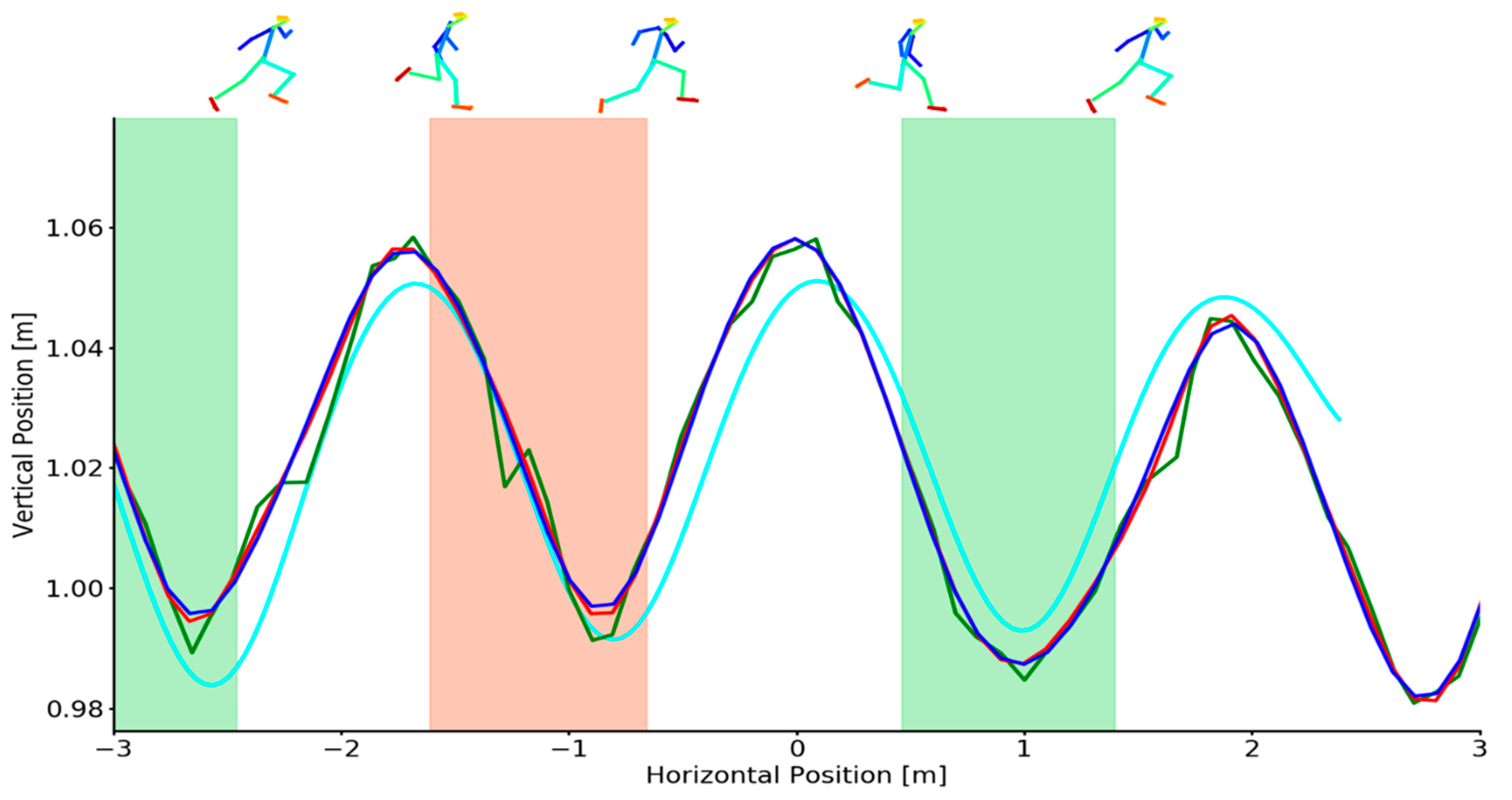

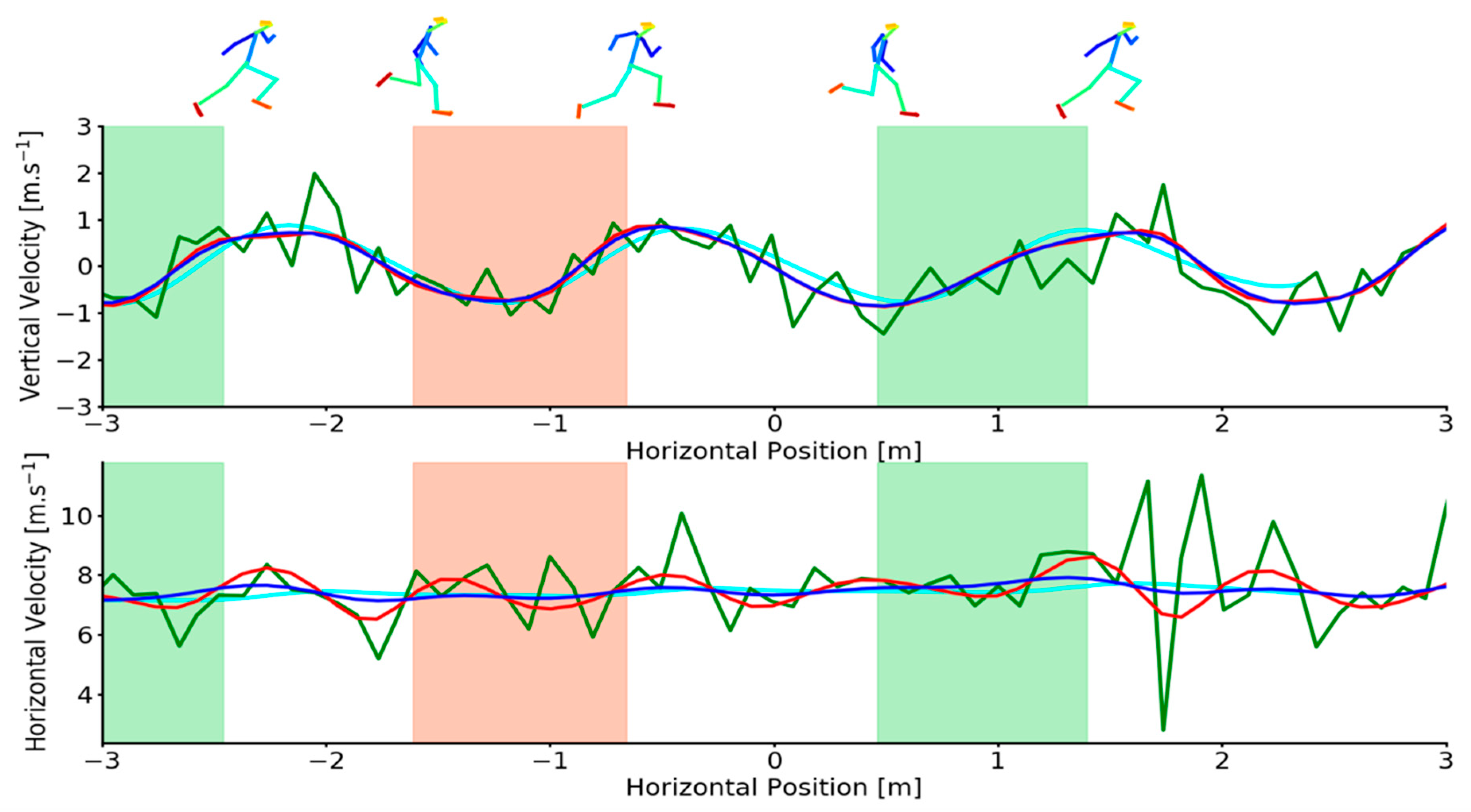

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Keypoint Number | Body Part | Dataset |
|---|---|---|
| 0 | Nose | COCO |
| 1 | Neck | COCO |
| 2 | Right Shoulder | COCO |
| 3 | Right Elbow | COCO |
| 4 | Right Wrist | COCO |
| 5 | Left Shoulder | COCO |
| 6 | Left Elbow | COCO |
| 7 | Left Wrist | COCO |
| 8 | Mid Hip | COCO |
| 9 | Right Hip | COCO |
| 10 | Right Knee | COCO |
| 11 | Right Ankle | COCO |
| 12 | Left Hip | COCO |
| 13 | Left Knee | COCO |
| 14 | Left Ankle | COCO |
| 15 | Right Eye | COCO |
| 16 | Left Eye | COCO |
| 17 | Right Ear | COCO |
| 18 | Left Ear | COCO |
| 19 | Right Big Toe | Human Foot Keypoint Dataset |
| 20 | Right Small Toe | Human Foot Keypoint Dataset |
| 21 | Right Heel | Human Foot Keypoint Dataset |
| 22 | Left Big Toe | Human Foot Keypoint Dataset |
| 23 | Left Small Toe | Human Foot Keypoint Dataset |
| 24 | Left Heel | Human Foot Keypoint Dataset |
| 25 | Background | COCO |
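
The keypoint numbering in the table above can be held in code as a simple lookup. The following is a minimal sketch, not code from the study: the names `Keypoint`, `FOOT_KEYPOINTS`, and `source_dataset` are hypothetical and chosen only for illustration, while the indices and dataset labels are copied from the table.

```python
from enum import IntEnum


class Keypoint(IntEnum):
    """Keypoint indices as listed in the Appendix A table (hypothetical class name)."""
    NOSE = 0
    NECK = 1
    RIGHT_SHOULDER = 2
    RIGHT_ELBOW = 3
    RIGHT_WRIST = 4
    LEFT_SHOULDER = 5
    LEFT_ELBOW = 6
    LEFT_WRIST = 7
    MID_HIP = 8
    RIGHT_HIP = 9
    RIGHT_KNEE = 10
    RIGHT_ANKLE = 11
    LEFT_HIP = 12
    LEFT_KNEE = 13
    LEFT_ANKLE = 14
    RIGHT_EYE = 15
    LEFT_EYE = 16
    RIGHT_EAR = 17
    LEFT_EAR = 18
    RIGHT_BIG_TOE = 19
    RIGHT_SMALL_TOE = 20
    RIGHT_HEEL = 21
    LEFT_BIG_TOE = 22
    LEFT_SMALL_TOE = 23
    LEFT_HEEL = 24
    BACKGROUND = 25


# Indices 19 to 24 are the rows the table attributes to the foot dataset.
FOOT_KEYPOINTS = frozenset(range(19, 25))


def source_dataset(kp: Keypoint) -> str:
    """Return the dataset label the table gives for a keypoint."""
    if kp in FOOT_KEYPOINTS:
        return "Human Foot Keypoint Dataset"
    return "COCO"


if __name__ == "__main__":
    for kp in Keypoint:
        print(int(kp), kp.name, source_dataset(kp))
```

Using an `IntEnum` keeps the integer index usable for array indexing while giving each row a readable name.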


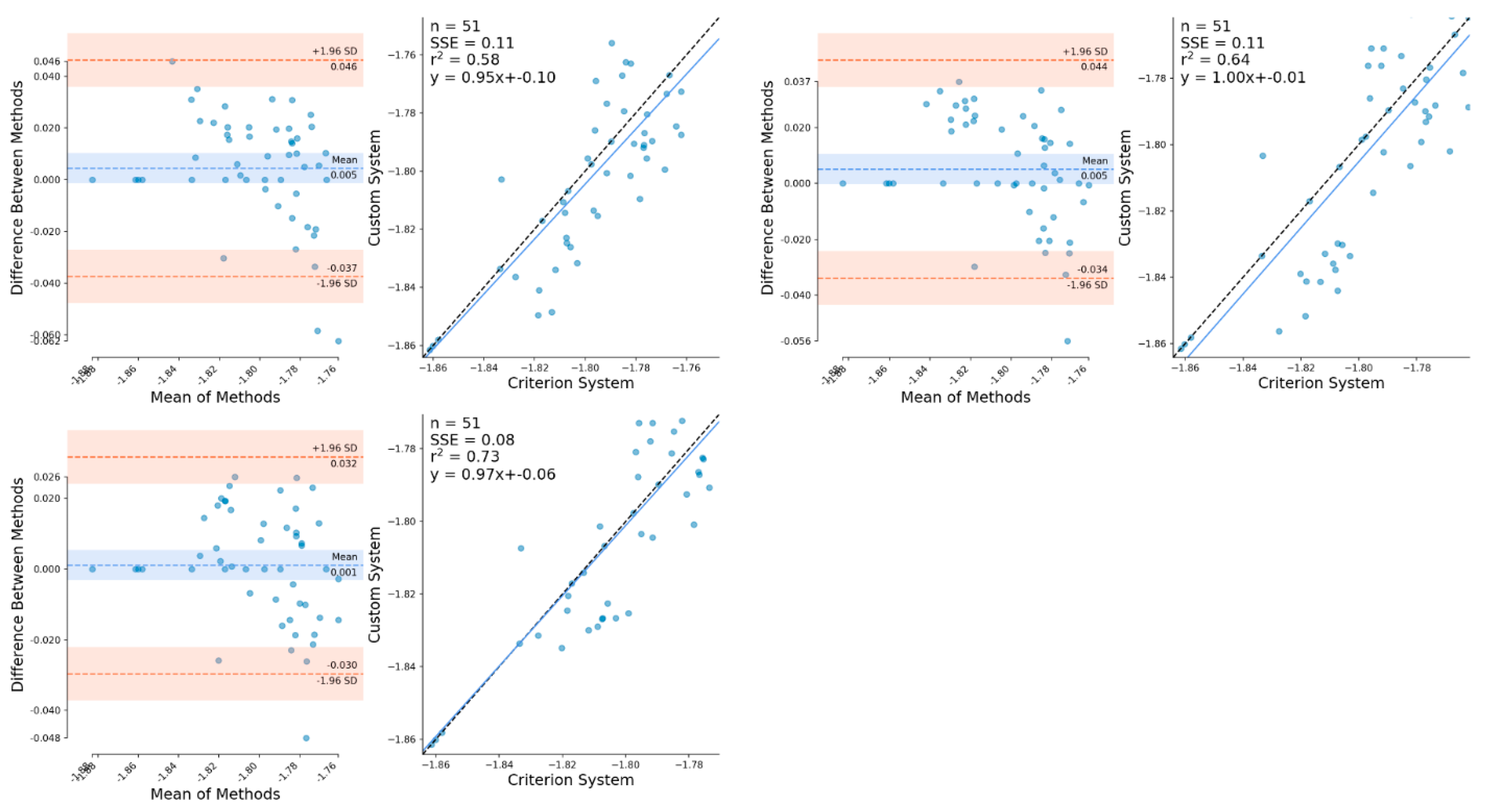

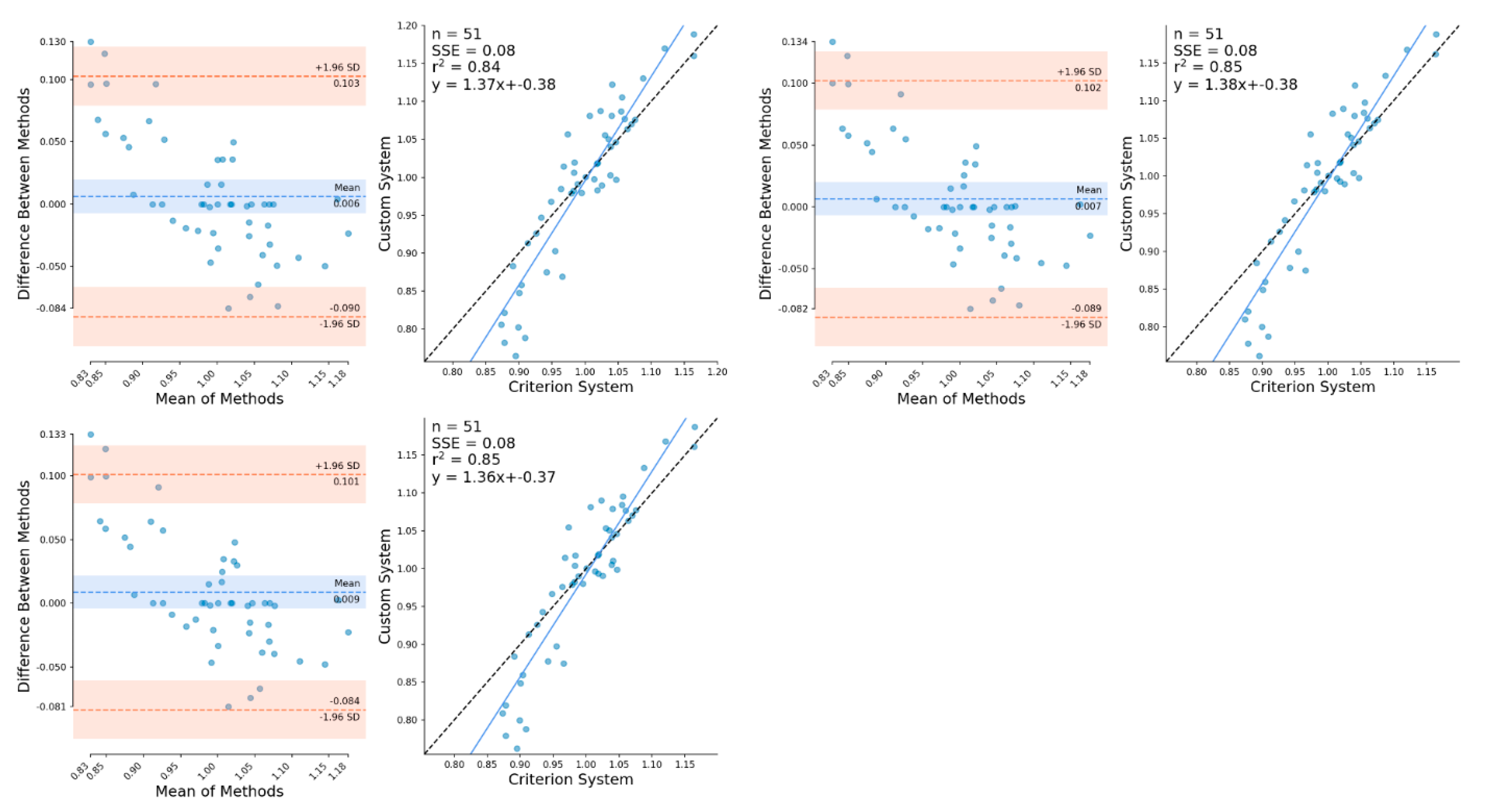

| CoM Position | Component | Mean Difference (Bias) (m) | ±SD | 95% LoA | R² |
|---|---|---|---|---|---|
| Unfiltered | Horizontal | 0.005 | 0.021 | −0.037 to 0.046 | 0.58 |
| Unfiltered | Vertical | 0.006 | 0.028 | −0.090 to 0.103 | 0.84 |
| Low-Pass | Horizontal | 0.005 | 0.020 | −0.034 to 0.044 | 0.64 |
| Low-Pass | Vertical | 0.007 | 0.027 | −0.089 to 0.102 | 0.85 |
| Kalman | Horizontal | 0.001 | 0.016 | −0.030 to 0.032 | 0.73 |
| Kalman | Vertical | 0.009 | 0.032 | −0.084 to 0.101 | 0.85 |
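
For readers unfamiliar with the columns above, bias and 95% limits of agreement (LoA) are commonly obtained from paired differences between a test system and a reference system. The sketch below shows one standard Bland-Altman style calculation, assuming bias is the mean difference and the limits are bias ± 1.96 SD; whether the study used exactly this formulation (for example, with a repeated-measures adjustment) is not stated here, and the function name `bland_altman` and the sample values are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(test, reference):
    """Return (bias, sd, lower_loa, upper_loa) for paired samples.

    bias is the mean of (test - reference); the limits of agreement are
    bias +/- 1.96 * SD of the differences (sample SD, n - 1 denominator).
    """
    if len(test) != len(reference):
        raise ValueError("paired samples must have equal length")
    diffs = [t - r for t, r in zip(test, reference)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, sd, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical CoM positions in metres; these are NOT data from the study.
markerless = [1.02, 2.01, 2.97, 4.03]
reference = [1.00, 2.00, 3.00, 4.00]

bias, sd, lower, upper = bland_altman(markerless, reference)

if __name__ == "__main__":
    print(f"bias={bias:.4f} m, SD={sd:.4f} m, 95% LoA={lower:.4f} to {upper:.4f} m")
```

A narrow LoA interval centred near zero indicates that the two systems agree closely for most paired samples.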

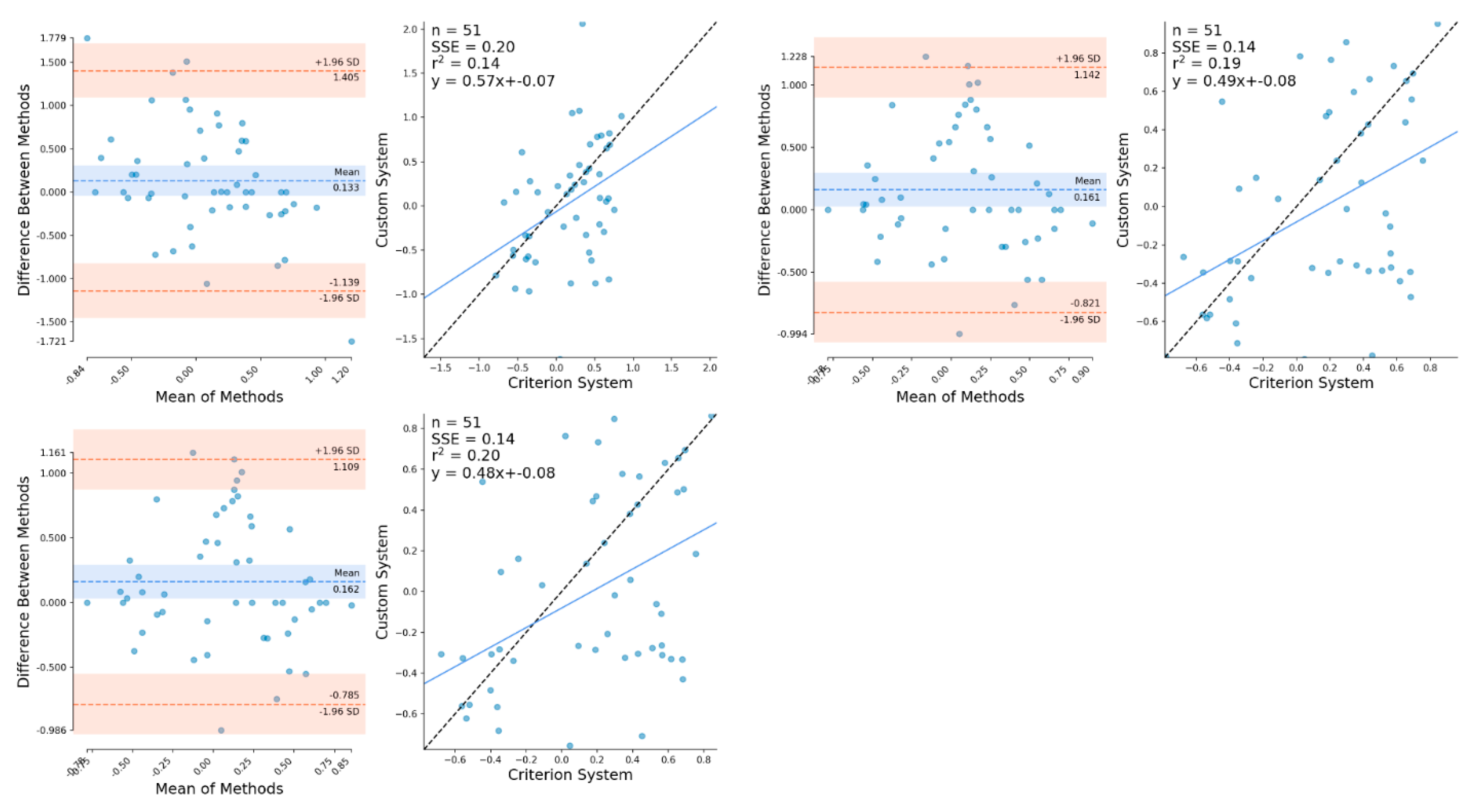

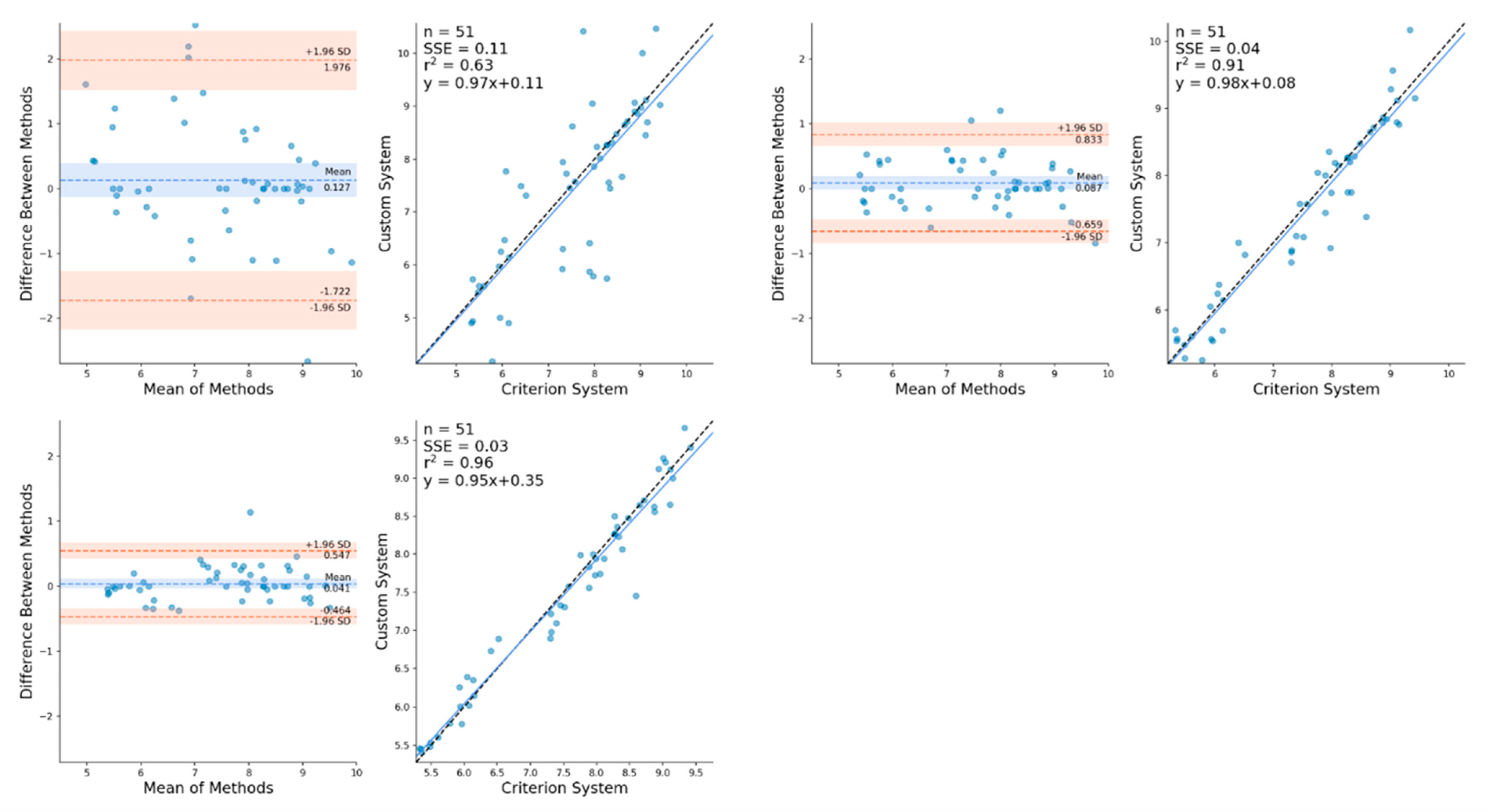

| CoM Velocity | Component | Mean Difference (Bias) (m·s⁻¹) | ±SD | 95% LoA | R² |
|---|---|---|---|---|---|
| Unfiltered | Horizontal | 0.127 | 0.943 | −1.722 to 1.974 | 0.63 |
| Unfiltered | Vertical | 0.133 | 0.648 | −1.139 to 1.405 | 0.14 |
| Low-Pass | Horizontal | 0.087 | 0.381 | −0.659 to 0.833 | 0.91 |
| Low-Pass | Vertical | 0.161 | 0.501 | −0.821 to 1.142 | 0.19 |
| Kalman | Horizontal | 0.041 | 0.257 | −0.464 to 0.547 | 0.96 |
| Kalman | Vertical | 0.162 | 0.483 | −0.785 to 1.109 | 0.20 |

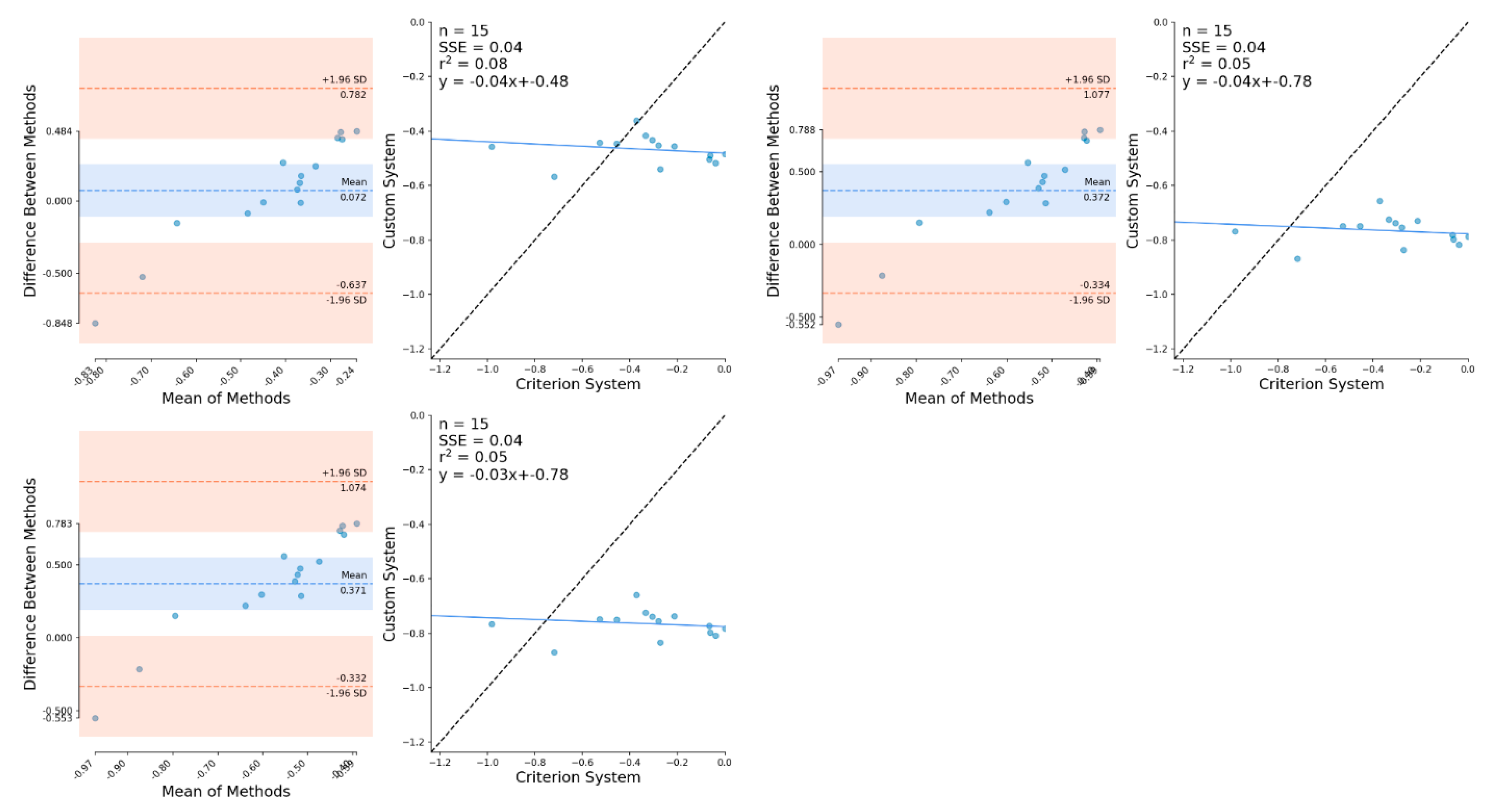

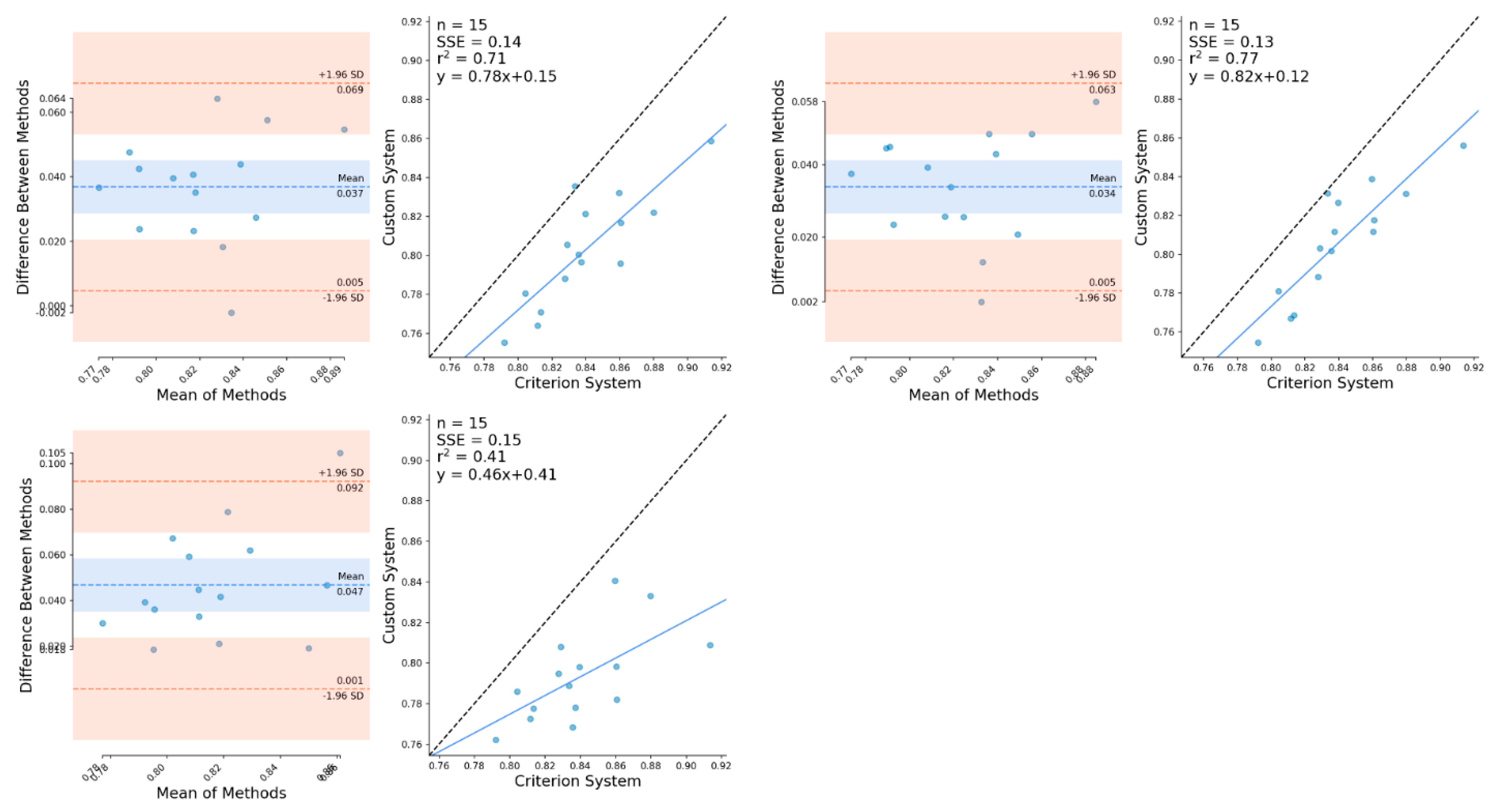

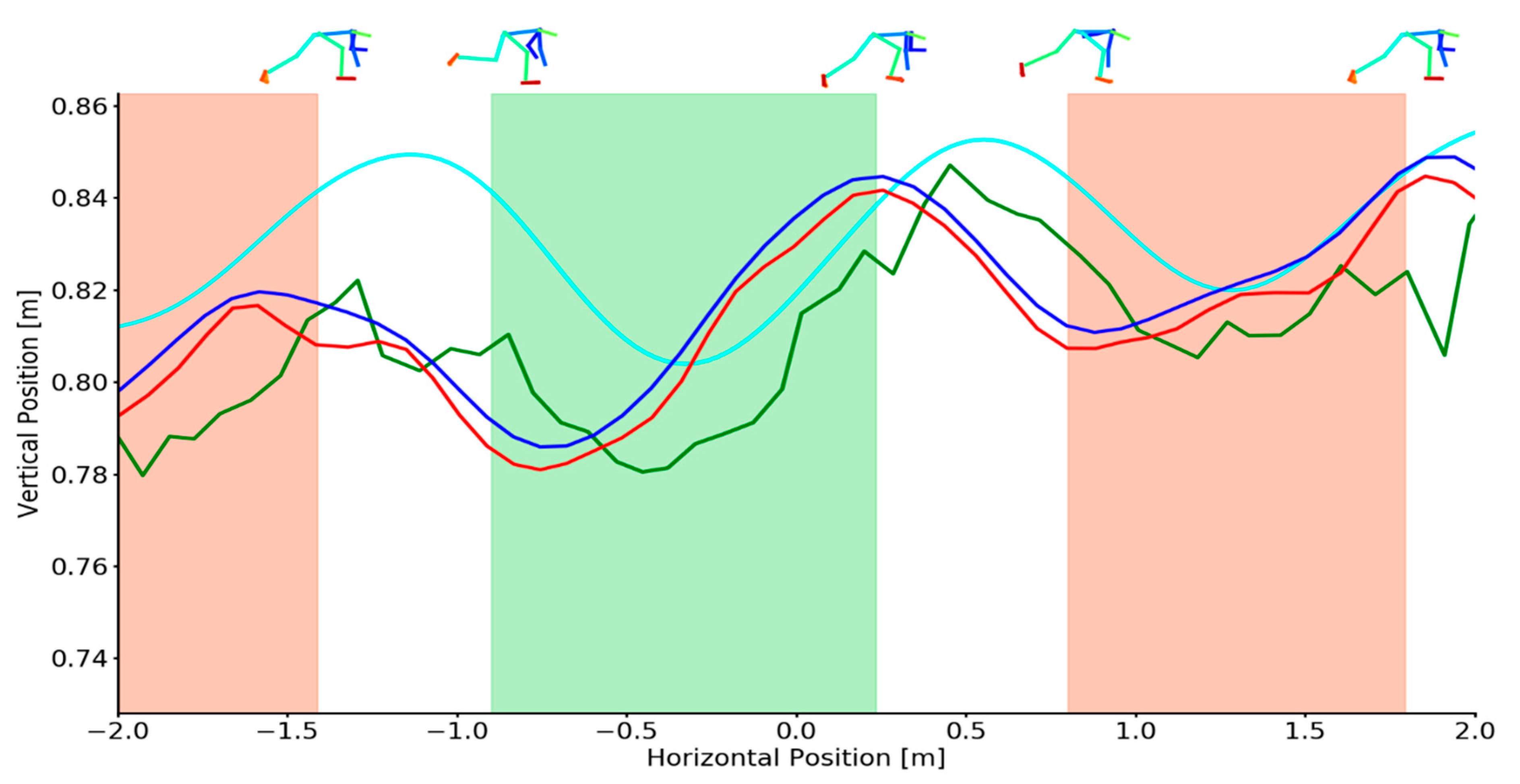

| CoM Position | Component | Mean Difference (Bias) (m) | ±SD | 95% LoA | R² |
|---|---|---|---|---|---|
| Unfiltered | Horizontal | 0.072 | 1.549 | −0.637 to 0.786 | 0.08 |
| Unfiltered | Vertical | 0.037 | 0.016 | 0.005 to 0.069 | 0.71 |
| Low-Pass | Horizontal | 0.372 | 0.391 | −0.334 to 1.077 | 0.05 |
| Low-Pass | Vertical | 0.034 | 0.014 | 0.005 to 0.063 | 0.77 |
| Kalman | Horizontal | 0.371 | 0.370 | −0.332 to 1.074 | 0.05 |
| Kalman | Vertical | 0.047 | 0.023 | 0.001 to 0.092 | 0.41 |

| CoM Velocity | Component | Mean Difference (Bias) (m·s⁻¹) | ±SD | 95% LoA | R² |
|---|---|---|---|---|---|
| Unfiltered | Horizontal | −0.197 | 1.549 | −3.235 to 2.841 | 0.01 |
| Unfiltered | Vertical | −0.136 | 0.798 | −1.702 to 1.429 | 0.35 |
| Low-Pass | Horizontal | 0.075 | 0.391 | −0.692 to 0.842 | 0.40 |
| Low-Pass | Vertical | 0.027 | 0.369 | −0.697 to 0.752 | 0.46 |
| Kalman | Horizontal | 0.020 | 0.370 | −0.706 to 0.746 | 0.46 |
| Kalman | Vertical | 0.020 | 0.235 | −0.421 to 0.461 | 0.58 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Needham, L.; Evans, M.; Cosker, D.P.; Colyer, S.L. Can Markerless Pose Estimation Algorithms Estimate 3D Mass Centre Positions and Velocities during Linear Sprinting Activities? Sensors 2021, 21, 2889. https://doi.org/10.3390/s21082889
