Abstract

In this paper, a novel method to modify color images for the protanopia and deuteranopia color vision deficiencies is proposed. The method addresses two key criteria, namely preserving image naturalness and enhancing color contrast. Four modules are employed in the process. First, fuzzy clustering-based color segmentation extracts the key colors (i.e., the cluster centers) of the input image. Second, the key colors are mapped onto the CIE 1931 chromaticity diagram; then, using the concept of confusion lines (i.e., loci of colors confused by the color-blind), key colors lying on the same confusion line are translated (i.e., moved) to different confusion lines so that they can be discriminated by the color-blind. In the third module, the key colors are further adapted by optimizing a regularized objective function that combines the aforementioned criteria. Fourth, the recolored image is obtained by color transfer that involves the adapted key colors and the associated fuzzy clusters. The proposed method is compared with three related methods using two performance indices, in experiments over 195 natural images and six digitized art paintings. The main outcomes of the comparative analysis are as follows. (a) Quantitative evaluation, based on nonparametric statistical analysis, compares the proposed method with each of the other three methods for protanopia and deuteranopia and for each index. In most of the comparisons, the Bonferroni-adjusted p-values are <0.015, supporting the superiority of the proposed method. (b) Qualitative evaluation verifies the aesthetic appearance of the recolored images. (c) Subjective evaluation supports the above results.

1. Introduction

Human trichromatic color vision originates from comparing the rates at which photons are absorbed by three types of photoreceptor cone cells, namely the L-, M-, and S-cones [1,2]. In practice, these cone types define the three channels of the LMS color space, and their stimulation corresponds only approximately to red, green, and blue, respectively. Color vision deficiency (CVD), or color-blindness, is the inability of the human eye to correctly match and perceive colors; it affects at least 8% of males and 0.8% of females [2,3]. It is caused by genetic mutations that lead either to the absence or to the dysfunction of one or two types of cones [2,4,5]. The impact of CVD on human vision is reflected at various levels, such as color discrimination, object recognition, color appearance, and color naming [1,6].

There are three categories of CVD [1,2,3,5]. The most severe and rarest is achromatopsia, caused by the absence of two types of cones. The second is dichromacy, where one type of cone is missing. Dichromacy includes three subcategories, protanopia, deuteranopia, and tritanopia, depending on whether the L-, M-, or S-cones are missing, respectively. Protanopia and deuteranopia have similar effects and belong to the so-called red–green color vision deficiency. The third is anomalous trichromacy, where no cone type is missing but at least one of them malfunctions. It also comprises three subcategories, namely protanomaly, deuteranomaly, and tritanomaly, depending on whether the L-, M-, or S-cones are affected, respectively.

This paper considers protanopia and deuteranopia. Since protanopes and deuteranopes lack one primary type of cone, they match the full color spectrum using the remaining two. Thus, they confuse only those colors whose discrimination relies on the missing cone type [3].

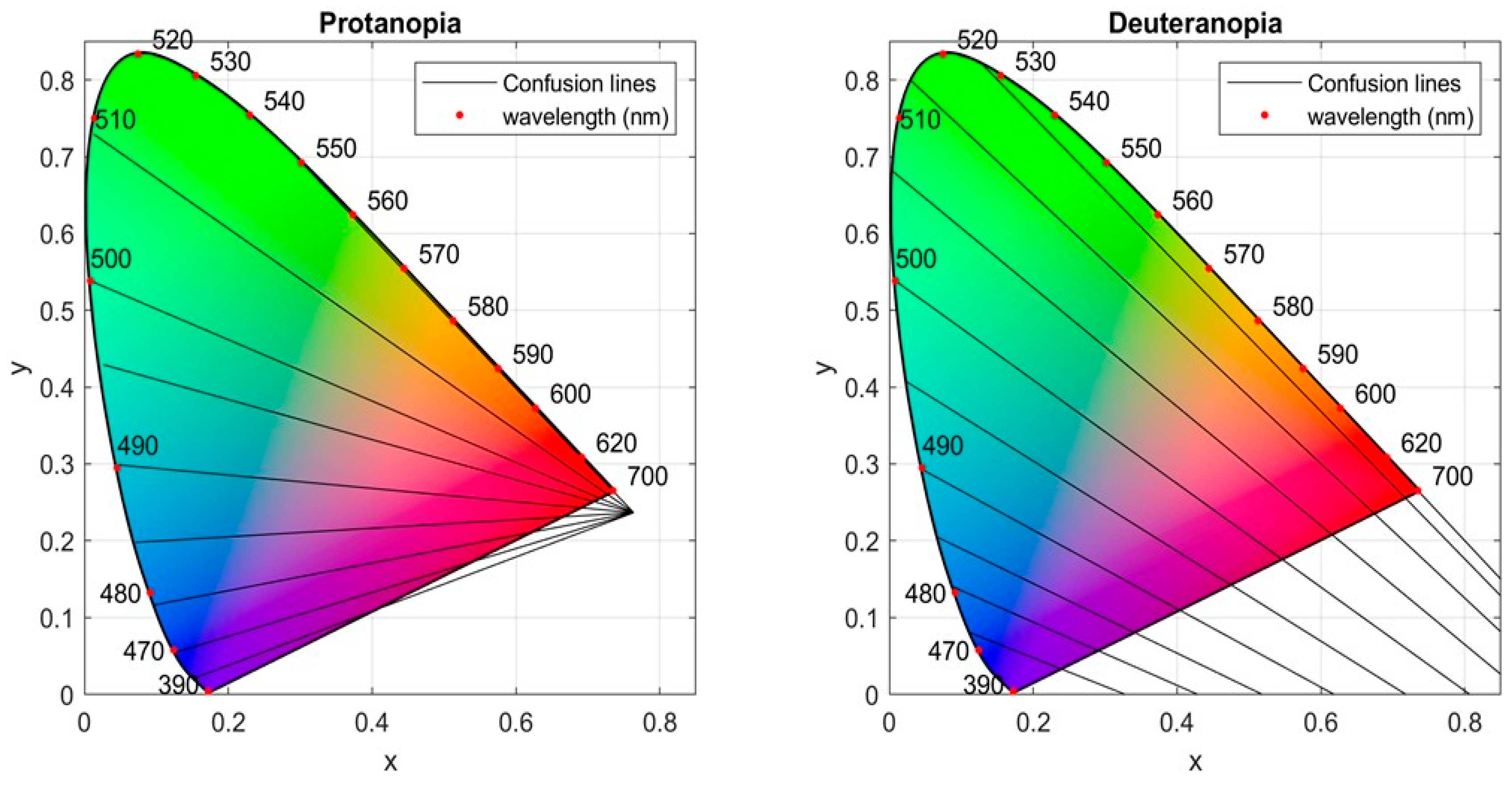

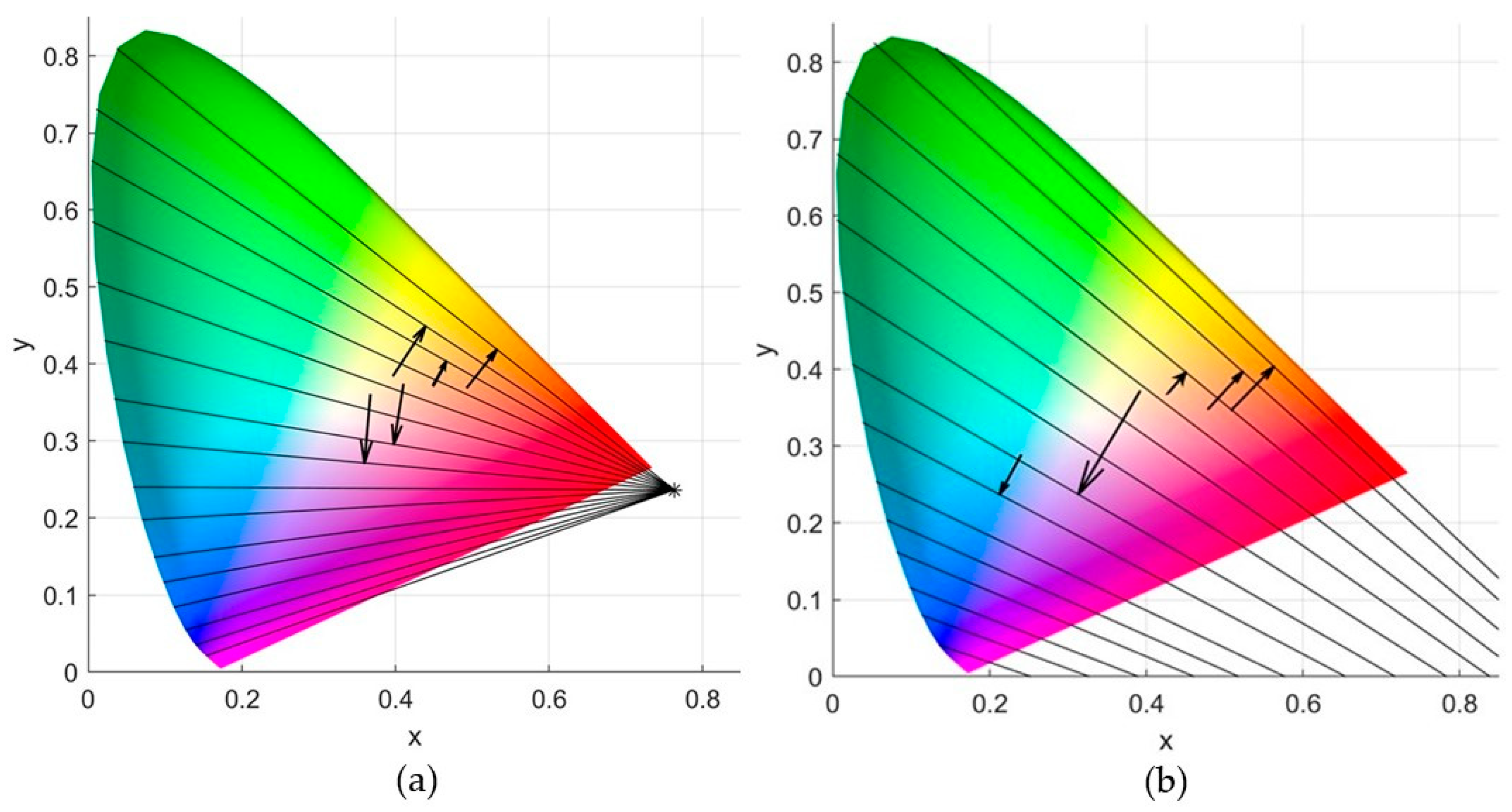

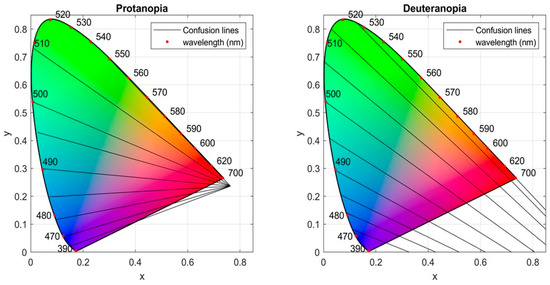

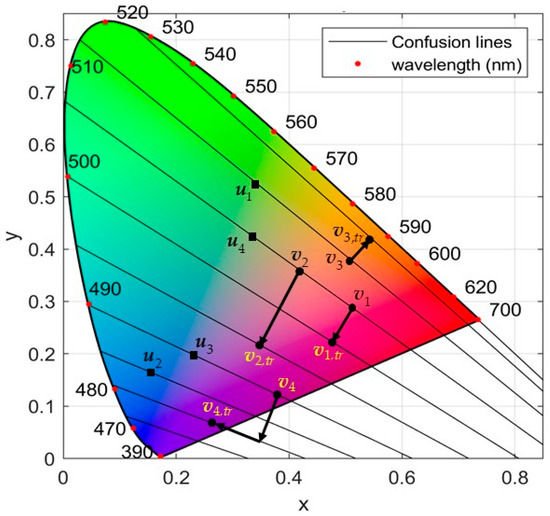

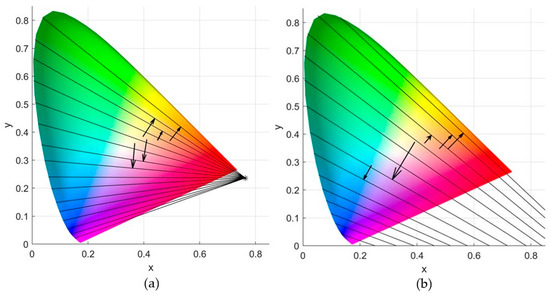

An effective tool for describing this color confusion process is the set of confusion lines defined on the CIE 1931 chromaticity diagram [4,5]. A confusion line is the locus of the colors confused by the protanope or deuteranope. All confusion lines intersect at a point outside the chromaticity diagram, called the copunctal point; it is an imaginary stimulus that corresponds to the missing primary [3,6]. The color-blind confuses all colors belonging to the same line, and therefore the entire line appears achromatic to them [4]. In essence, the color-blind perceives those colors with the same colorfulness, which comprises hue and saturation, but they become identical only at the appropriate intensity [6,7,8]. Figure 1 shows some confusion lines for protanopia and deuteranopia taken from Judd's revised chromaticity diagram [3,9].

Figure 1.

Confusion lines for protanopia (left diagram) and deuteranopia (right diagram), each converging at its copunctal point.

To assist color-blind viewers, image recoloring algorithms have been developed following different approaches. In any case, the design of an image recoloring algorithm must fulfill certain requirements, the most important of which are the preservation of color naturalness and the preservation or enhancement of color contrast [10]. Naturalness refers to keeping the perceptual color difference between the original and the recolored image small; it therefore reflects the similarity of their color distributions and aesthetics. Contrast is important for object recognition and color discrimination, especially when two different objects are perceived by the color-blind as a single object.

So far, a wide range of recoloring methods has been developed, based on optimization of specially designed objective functions [11,12,13,14,15,16] or of regularized objective functions that uniformly combine the naturalness and contrast criteria [17,18,19], pixel-based classification [20], spectral filtering [21], cluster analysis [22], gradient-domain recoloring [23,24], confusion lines [7,8,25], color transformation and rotation/translation [26,27,28,29], neural networks [30], image retrieval [31], and deep learning [32].

In this study, a novel approach to image recoloring for protanopes and deuteranopes is developed. The proposed method encompasses four modules: (a) key color extraction, (b) key color translation, (c) key color optimization, and (d) cluster-to-cluster color transfer. The first module performs color segmentation of the input image using fuzzy clustering to extract a number of cluster centers, called key colors. In the second module, the key colors are mapped onto the CIE 1931 chromaticity diagram. Colors confused by the color-blind (called here confusing key colors) are ranked according to the cardinalities of the associated clusters. Then, an iterative process is set up, where in each iteration the highest-ranked confusing key color is translated (moved) to its closest confusion line not occupied by other key colors. In the third module, only the luminance channel of the translated confusing key colors is further optimized with respect to a regularized objective function that uniformly quantifies the contrast and naturalness criteria. Finally, the fourth module uses a color transfer mechanism to complete the recoloring of the image's pixels.

2. State of the Art and the Current Contribution

2.1. Image Recoloring for the Color-Blind: State of the Art

One of the most challenging problems in color science is the identification of dichromatic color appearance, so that normal trichromats can understand how color-blind people experience colors. In their seminal papers, Vienot et al. [33,34] developed an algorithmic framework to simulate dichromatic color vision. Their findings suggested that protanopia and deuteranopia are reduced forms of normal trichromatic vision. In particular, they showed that the dichromat color gamut is a plane in the three-dimensional RGB space. Once the dichromat simulation of an image has been generated with this method, recoloring processes can be applied to adapt the image's colors in order to enhance color appearance, color discrimination, and object recognition for the color-blind [10,13,17].

So far, several approaches have been developed to perform image recoloring. Kuhn et al. [13] transformed the red–green–blue (RGB) dichromatic simulated gamut into the CIELab color space and generated a set of key colors. They minimized an objective function that included distances between key colors and their projections on the Lb plane. Finally, the Lb plane was rotated to match the desired colors. While the method increases contrast, the rotation changes the colors significantly and naturalness deteriorates. In [17], the CIELab color space was used to perform image recoloring: confusing colors were rotated in the ab plane, with rotation angles calculated by minimizing a regularized objective function that involved color contrast and naturalness criteria. However, this minimization might compromise either the contrast or the naturalness requirement. Han et al. [7] also employed the CIELab space; they segmented the original image into regions and extracted representative colors. Confusing colors belonging to the same confusion line were relocated so that all regions could be discriminated by the color-blind. However, the selected number of confusion lines was very large, exceeding the number of wavelengths the color-blind can discriminate. Therefore, colors belonging to two or more different confusion lines may still be confused, compromising the contrast of the recolored image. Huang et al. [25] applied mixture modeling in the CIELab space to partition the colors into clusters. The distance between pairs of cluster centers was generalized to quantify the dissimilarity between pairs of distributions, and based on these dissimilarities an objective function was minimized to obtain an efficient recoloring of the original image. This method increases contrast, but there is no control over hue changes for the confusing colors, so color naturalness might be compromised.

Meng and Tanaka [16] modified the lightness in the CIELab space without changing the hue of the original image. The modification was carried out by solving an optimization problem whose objective function included color differences. Although this strategy seems to maintain naturalness effectively, the unchanged hue combined with the changed lightness may negatively affect the contrast of the recolored image. Kang et al. [15] calculated a set of key colors in the CIELab space. The authors estimated the differences between pairs of them and projected the resulting difference vectors onto the color subspace seen by dichromats, which is a plane. Then, they minimized an objective function that included these projections, aiming to improve both the local contrast between color regions of the image and the image's global contrast. While the optimization improves contrast, there is no mechanism to control naturalness, which might be compromised.

Apart from CIELab, a commonly used color space is Hue-Saturation-Value (HSV). For example, Wong and Bishop [27] showed that effective results can be obtained if saturation and brightness remain as in the original image, while the hue value is shifted by a nonlinear mapping that involves a power of the hue value. This process makes confused hue ranges distinguishable from each other. The hue remapping improves contrast, while the power hue shift retains the aesthetics of the original image and thus its naturalness. Similarly, Chin and Sabudin [28] transformed the original image into the HSV color space, where the hue value was appropriately rotated while saturation and brightness remained unchanged. Specifically, the ranges of reds and greens were shifted towards the ranges of blues and yellows, which protanopes and deuteranopes can distinguish. With this strategy, contrast appears to be enhanced, but naturalness is compromised.
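To make the idea of hue rotation in HSV concrete, the following minimal sketch uses Python's standard `colorsys` module. The rotation amount `shift_deg` is a hypothetical parameter; neither the specific rotation rules of [28] nor the power mapping of [27] is reproduced here. The sketch only illustrates that rotating the hue leaves saturation and value (brightness) untouched.

```python
import colorsys

def rotate_hue(rgb, shift_deg):
    """Rotate the hue of an 8-bit RGB color, keeping saturation and value unchanged."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + shift_deg / 360.0) % 1.0  # colorsys stores hue in [0, 1)
    r, g, b = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(c * 255) for c in (r, g, b))

# Pure red rotated by 240 degrees becomes pure blue.
print(rotate_hue((255, 0, 0), 240))
```

Because only `h` changes, the largest and smallest channels of the output match those of the input, which is exactly why such methods keep brightness but may drift away from the original hues.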

The RGB color space has also been considered. Ma et al. [30] applied a self-organizing map (SOM) to transform the RGB space into the dichromatic space while preserving the distance ratios between homologous colors. The resulting code vectors are mapped onto a rectangular color palette with black and white in two opposite corners and blue and yellow in the other two, yielding the final recolored image. Although the method enhances color contrast, naturalness may be compromised because the palette uses only blue, yellow, and their variants. In [32], a recoloring method for art paintings was proposed. First, a deep learning network was used to perform transfer learning from natural images to art paintings; then, a semantic segmentation approach was set up to generate annotated object recognition in art paintings. The recoloring was carried out by optimizing an objective function that involved only the colors that differed significantly from their simulated counterparts. Since only colors associated with the annotated objects are recolored, naturalness is improved, but contrast might be compromised. In [23], the RGB image was represented as a vector-valued function. Then, in the gradient domain, a global transformation followed by a multi-scale reintegration was applied to obtain the recolored image. However, this approach tends to produce visible halo artifacts near strong chromatic edges, which might negatively affect both naturalness and contrast.

Finally, in [26] the original and the simulated images were transformed into the XYZ space with normalized luminance values. For the other two channels, the errors between homologous colors of the original and the simulated images were derived and rotated. The rotation angle increases as the selected colors lie farther from the dichromat plane, and vice versa. In any case, the colors do not change much, even distant ones, so naturalness is preserved. However, this rotation strategy does not favor contrast enhancement.

2.2. The Current Contribution

The method proposed in this paper uses four algorithmic modules (as mentioned in Section 1) and builds on a novel perspective on image recoloring for the color-blind. To enhance color discrimination and color appearance, the method makes the following contributions:

- The first contribution concerns the number of colors to be modified. In contrast to other approaches that adapt all colors of the input image [26,27,28,30], our approach modifies only the colors confused by the color-blind. Since not all image colors are modified, the recolored image is expected to maintain naturalness.

- The second contribution is that the adaptation of confusing colors is driven by a confusion-line-based approach. Confusion lines are the product of extensive experimentation [3,6,9]; as such, they accurately reflect the way a dichromat perceives colors. In contrast to other approaches that perform the recoloring only through optimization [15,16,23,25,32], this paper introduces a mechanism that moves specific confusing colors to specific confusion lines, thus enhancing contrast. Since each color is moved to its closest non-occupied confusion line, naturalness is also expected to be preserved.

- The third contribution concerns the need to further optimize both naturalness and contrast. Unlike other approaches that use color or plane rotation mechanisms [13,17,27,28], herein we manipulate the luminance channel to minimize a regularized objective that uniformly combines the naturalness and contrast criteria.

In summary, the main idea of the current contribution is to build a four-module approach, where the naturalness and contrast are gradually improved from one module to the next, taking into account specific requirements.

3. The Proposed Method

3.1. Preliminaries

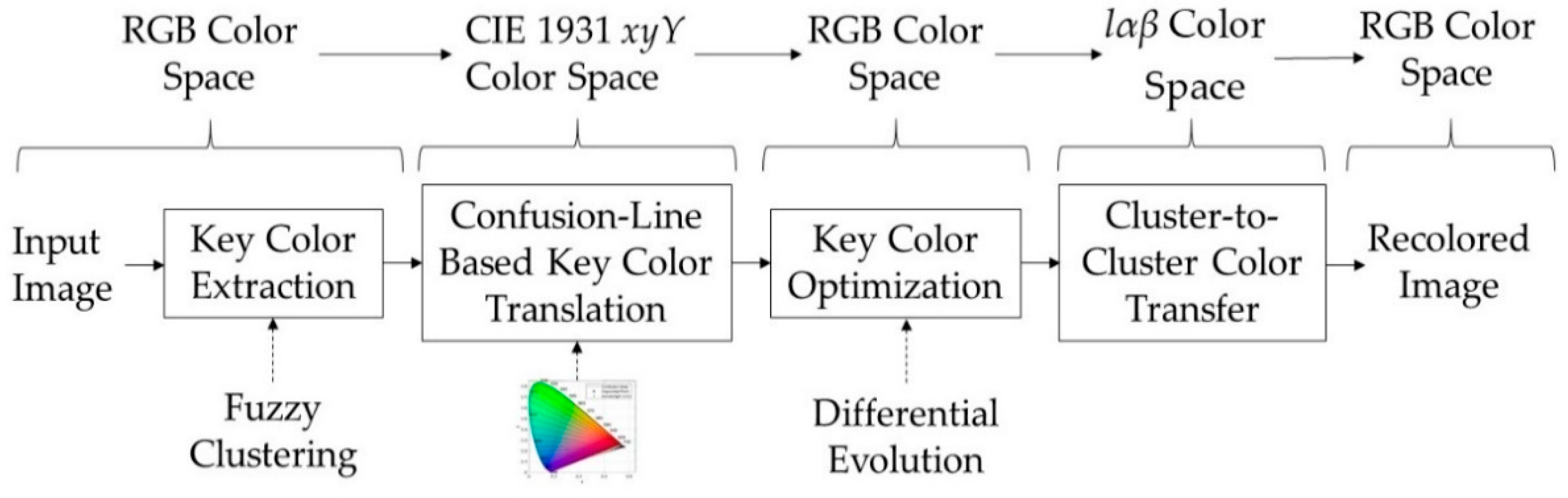

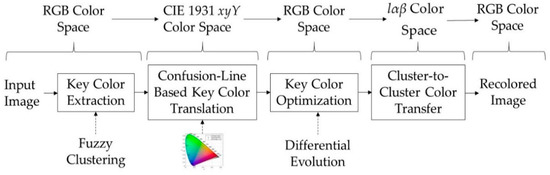

The processing of the proposed method is depicted in Figure 2. Four operational modules are involved. (a) Fuzzy clustering [35] is applied to the input image in the RGB color space to extract a set of key colors, which are the resulting cluster centers. A subset of key colors corresponds to confusing image colors, called here confusing key colors, while the rest correspond to non-confusing colors, called here non-confusing key colors. (b) The confusing key colors are ranked in decreasing order according to the cardinalities of the associated clusters. Then, all key colors are mapped onto the CIE 1931 chromaticity diagram defined on the xyY color space. An iterative process is carried out, where in each iteration the highest-ranked confusing key color is, if necessary, translated (moved) to a different confusion line so that it becomes distinguishable by the color-blind. (c) The modified key colors are transformed back to the RGB space. To avoid suboptimal results, an objective function that combines the naturalness and contrast criteria is minimized by the differential evolution algorithm [36]. This module yields the final recolored key colors. (d) All pixels of the input image that are not discriminated by the color-blind, along with the recolored key colors, are mapped into the lαβ space [37]. Then, each pixel is recolored by a cluster-to-cluster color transfer approach, which involves the key colors and the associated clusters. Finally, the recolored pixels are mapped back into the RGB space, yielding the recolored image. The color space pipeline is depicted at the top of Figure 2.

Figure 2.

The basic structure of the proposed recoloring method.

Two color space mappings are used: from RGB to xyY and from RGB to lαβ. In both transformations, all colors were gamma-corrected, and the computations were carried out for the CIE Standard Illuminant D65 [1,38]. The analytical description of the former can be found in [1,38], and of the latter in [39,40].

The lαβ space is a transformation of the LMS model and was introduced by Ruderman et al. [37]. We use this space because it reduces the correlation between the three channels, which eliminates undesirable cross-channel effects [39,40]. Moreover, because of its logarithmic nature, uniform changes in channel intensity tend to be equally detectable, which is expected to lead to an effective pixel-based recoloring.
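The two mappings can be sketched as follows. This is a minimal sketch under stated assumptions: 8-bit sRGB input, the standard sRGB transfer curve for gamma correction, and the D65 sRGB-to-XYZ matrix. For lαβ we assume the RGB-to-LMS matrix popularized by Reinhard et al.'s color-transfer work, applied to linearized RGB, together with base-10 logarithms; whether [39,40] use exactly these constants should be checked against those references. The function names are illustrative.

```python
import math

def srgb_to_linear(c8):
    """Undo the sRGB gamma for one 8-bit channel."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

# Linear sRGB -> CIE XYZ for the D65 white point.
SRGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)

# Assumed RGB -> LMS matrix (Reinhard et al. color transfer).
RGB_TO_LMS = (
    (0.3811, 0.5783, 0.0402),
    (0.1967, 0.7244, 0.0782),
    (0.0241, 0.1288, 0.8444),
)

def mat_vec(m, v):
    return tuple(sum(a * b for a, b in zip(row, v)) for row in m)

def rgb_to_xyY(rgb):
    """8-bit sRGB -> (x, y, Y) with the relative luminance Y in [0, 100]."""
    X, Y, Z = mat_vec(SRGB_TO_XYZ, [srgb_to_linear(c) for c in rgb])
    s = X + Y + Z
    if s == 0.0:  # black: chromaticity undefined, fall back to the D65 white point
        return (0.3127, 0.3290, 0.0)
    return (X / s, Y / s, 100.0 * Y)

def rgb_to_lalphabeta(rgb, eps=1e-6):
    """8-bit sRGB -> Ruderman's l-alpha-beta via log10 LMS."""
    lms = mat_vec(RGB_TO_LMS, [srgb_to_linear(c) for c in rgb])
    L, M, S = (math.log10(max(v, eps)) for v in lms)  # eps avoids log(0) for black
    l = (L + M + S) / math.sqrt(3)
    alpha = (L + M - 2 * S) / math.sqrt(6)
    beta = (L - M) / math.sqrt(2)
    return (l, alpha, beta)
```

For neutral grays the three LMS responses are nearly equal, so both chromatic channels α and β stay close to zero; this decorrelation is what makes per-channel color transfer in lαβ practical.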

To obtain the dichromat simulation of a color, we use the algorithm developed by Vienot et al. [33,34]. This algorithm shows that the color space perceived by people with dichromacy is a plane in the RGB space, referred to here as the dichromat plane of D, where D denotes either protanopia or deuteranopia. This plane is therefore a proper subspace of the RGB space. The dichromat simulation is a mapping from the RGB space onto the dichromat plane that assigns to each color its simulated counterpart. An RGB color has three coordinates, one for each channel of the RGB model; its simulated counterpart belongs to the dichromat plane and hence, as shown above, also to the RGB space, so it too has three RGB channel values. A detailed description of the calculations involved in this transformation is given in [33,34].
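The following is a minimal sketch of a Vienot-style simulation, not a reproduction of [33,34]. It assumes sRGB gamma and uses LMS coefficients as they are commonly reproduced from Vienot, Brettel, and Mollon; these values should be verified against [33,34]. The gamut-compression pre-step of the original procedure is omitted, and the final clipping to [0, 1] means that out-of-gamut results no longer lie exactly on the plane.

```python
def srgb_to_linear(c8):
    """8-bit sRGB channel -> linear value in [0, 1]."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c):
    """Linear value -> 8-bit sRGB channel, clipped to the displayable range."""
    c = min(max(c, 0.0), 1.0)
    v = 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055
    return round(255 * v)

def mat_vec(m, v):
    return tuple(sum(a * b for a, b in zip(row, v)) for row in m)

def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return (
        ((e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det),
        ((f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det),
        ((d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det),
    )

# Linear RGB -> LMS (assumed coefficients, see lead-in).
RGB_TO_LMS = (
    (17.8824, 43.5161, 4.11935),
    (3.45565, 27.1554, 3.86714),
    (0.0299566, 0.184309, 1.46709),
)
LMS_TO_RGB = inv3(RGB_TO_LMS)

# The missing cone response is rebuilt from the two remaining ones; this
# rank-2 map collapses LMS (and hence linear RGB) onto a plane.
DICHROMAT = {
    "protanopia": ((0.0, 2.02344, -2.52581), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)),
    "deuteranopia": ((1.0, 0.0, 0.0), (0.494207, 0.0, 1.24827), (0.0, 0.0, 1.0)),
}

def simulate(rgb, kind="protanopia"):
    """Dichromat simulation of one 8-bit sRGB color."""
    lms = mat_vec(RGB_TO_LMS, [srgb_to_linear(c) for c in rgb])
    lms_d = mat_vec(DICHROMAT[kind], lms)
    return tuple(linear_to_srgb(c) for c in mat_vec(LMS_TO_RGB, lms_d))
```

With these coefficients, white and the blue primary are (up to rounding) left unchanged by both projections, which is consistent with a dichromat plane anchored on achromatic and blue stimuli.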

3.2. Module 1: Key Color Extraction

Consider an input image whose pixels are RGB colors. To reduce the computational complexity, the image pixels are grouped into m color bins of a common radius. The radius should be neither very small (because the computational time would increase) nor large (because the resulting color matching would be inefficient); through experimentation, we determined a credible interval for it. For each bin, the representative color is the mean of all pixels belonging to that bin. The representative colors form the set C, which has m elements, and the dichromat simulation of C is obtained by simulating each of its elements.
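The text does not specify how the bins are formed, so the following is a hypothetical greedy sphere-binning sketch, not the authors' procedure. Each pixel joins the first bin whose seed pixel lies within `radius` (Euclidean distance in RGB units); otherwise, it seeds a new bin. The representative color of each bin is the mean of its pixels, as stated above.

```python
import math

def bin_colors(pixels, radius):
    """Greedy sphere binning (hypothetical stand-in for the binning step).

    Returns (representatives, members): one mean color per bin and the
    list of pixels assigned to each bin.
    """
    seeds, members = [], []
    for p in pixels:
        for k, s in enumerate(seeds):
            if math.dist(p, s) <= radius:
                members[k].append(p)
                break
        else:  # no existing bin is close enough: open a new one
            seeds.append(p)
            members.append([p])
    reps = [tuple(sum(ch) / len(m) for ch in zip(*m)) for m in members]
    return reps, members
```

The loop costs O(N·m) distance evaluations for N pixels and m bins, which reflects the trade-off described above: a smaller radius yields more bins and a longer run time.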

If the dichromat simulation of a color of C lies within a sphere of appropriately selected radius centered at that color, then the color looks the same to a protanope or deuteranope as to a normal trichromat, meaning that it is not confused; otherwise, it is confused by the color-blind. Thus, the set C is divided into two subsets, one containing the confusing colors and one containing the non-confusing colors (Equation (1)).

This radius decides whether the distance between a color and its dichromat simulation is small enough. If it is, the two colors belong to the same color region in the RGB color space, and therefore the color-blind perceives the color correctly. Otherwise, they belong to different color regions, meaning that the color-blind confuses the color with other colors. The parameter takes values between 0 and 255, but it should be neither very large nor very small. Through extensive experimentation on the Flowers and Fruits data sets, which were taken from McGill's calibrated color image database [41] and contain 195 calibrated color images, we determined a credible interval for the parameter in Equation (1).
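The split of C can be sketched as a single threshold test on the distance between each color and its simulation. In this minimal sketch, `simulate` is any dichromat simulation function passed in by the caller and `eps` stands for the radius threshold; both names are illustrative. The toy simulation in the usage line is hypothetical and only serves to make the example self-contained.

```python
import math

def split_by_confusion(colors, simulate, eps):
    """Split colors into (confusing, non_confusing) by the distance to their simulation."""
    confusing, non_confusing = [], []
    for c in colors:
        target = non_confusing if math.dist(c, simulate(c)) <= eps else confusing
        target.append(c)
    return confusing, non_confusing

# Hypothetical toy "simulation" that copies G into R, for illustration only.
conf, nonconf = split_by_confusion([(100, 100, 50), (200, 20, 50)],
                                   lambda c: (c[1], c[1], c[2]), eps=10)
```

In practice, `simulate` would be the Vienot-type projection of Section 3.1, and `eps` would be chosen from the credible interval mentioned above.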

Next, the well-known fuzzy c-means algorithm [35] is applied separately to the set of confusing colors and to the set of non-confusing colors, partitioning their elements into their respective prescribed numbers of fuzzy clusters. There are several reasons for choosing fuzzy c-means. First, it is relatively insensitive to random initialization. Second, it involves soft competition between cluster centers, so all clusters can move and attract data, which avoids the creation of underutilized small clusters. Finally, it can generate compact and well-separated clusters, thus effectively revealing the underlying data structure.
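A plain fuzzy c-means iteration, as commonly described in the literature, can be sketched as follows. The fuzzifier `m=2`, the iteration cap, the tolerance, and the seeded random initialization are illustrative choices, not values taken from the paper.

```python
import math
import random

def fuzzy_c_means(data, c, m=2.0, iters=100, tol=1e-6, seed=0):
    """Plain fuzzy c-means on a list of equal-length tuples.

    Returns (centers, U), where U[i][k] is the membership of point i in cluster k.
    """
    rng = random.Random(seed)
    n, dim = len(data), len(data[0])
    # Random initial memberships, each row normalized to sum to 1.
    U = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        s = sum(row)
        U.append([u / s for u in row])
    centers = []
    for _ in range(iters):
        # Center update: mean of the data weighted by u^m.
        centers = []
        for k in range(c):
            w = [U[i][k] ** m for i in range(n)]
            sw = sum(w)
            centers.append(tuple(sum(w[i] * data[i][d] for i in range(n)) / sw
                                 for d in range(dim)))
        # Membership update: u_ik = 1 / sum_j (d_ik / d_ij)^(2 / (m - 1)).
        max_change = 0.0
        for i in range(n):
            dists = [max(math.dist(data[i], ck), 1e-12) for ck in centers]
            for k in range(c):
                new = 1.0 / sum((dists[k] / dj) ** (2.0 / (m - 1.0)) for dj in dists)
                max_change = max(max_change, abs(new - U[i][k]))
                U[i][k] = new
        if max_change < tol:
            break
    return centers, U
```

Every point keeps a nonzero membership in every cluster, which is the "soft competition" mentioned above; a crisp assignment, when needed, is taken from the maximum membership of each point.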

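The resulting cluster centers form two sets (Equation (3)): the centers of the clusters of confusing colors and the centers of the clusters of non-confusing colors.

For the confusing colors only, the clusters themselves are also explicitly denoted, because their cardinalities are needed later.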

Definition 1.

A color of the set C belongs to a cluster of confusing colors if it also belongs to the set of confusing colors and has its maximum membership degree in that cluster. Then, each pixel of the input image belonging to the corresponding bin also belongs to that cluster. The cardinality of a cluster is defined as the number of pixels of the input image that belong to it.

The elements of the first set are the key colors of the confusing image colors, called confusing key colors, and the elements of the second set are the key colors of the non-confusing image colors, called non-confusing key colors. The problem is how to appropriately recolor the confusing key colors to obtain the set of recolored key colors (Equation (4)), where the label rec stands for recoloring.

The next two modules (presented in Sections 3.3 and 3.4) describe this task in detail.

3.3. Module 2: Key Color Translation

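The sets of confusing and non-confusing key colors are mapped onto the xyY space, where each key color is represented by its coordinates (x, y, Y).

Here, x and y are the chromaticity coordinates, which jointly encode hue and saturation, and Y is the relative luminance, which takes values in [0, 100]. Note that, in view of Equations (4), (5), and (7), the problem is to recolor the xyY representations of the confusing key colors and map them back to the RGB space.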

The CIE 1931 chromaticity diagram is the projection of the xyY color space onto the xy-plane [1,9,38]. Thus, the key colors in Equations (7) and (8) are projected as points on the xy-plane.

These points carry only the chromaticity coordinates, which encode hue and saturation. Let V denote the set of projected confusing key colors and U the set of projected non-confusing key colors. There is a bijective correspondence between V and the confusing key colors in the xyY space (and thus between V and the confusing key colors in the RGB space), and likewise between U and the non-confusing key colors.

To recolor the confusing key colors, we must first recolor the elements of V. To this end, we developed a confusion-line-based algorithm, which is described in the following paragraphs.

The colors of V are ranked in decreasing order according to the cardinalities of their associated clusters (Definition 1).

The reason for using the above ranking function is that a key color corresponding to a cluster with large cardinality is associated with a large image area, and its contribution to the final result should be more important.
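Definition 1 and the ranking can be sketched together. In this sketch, `U` holds the fuzzy memberships of the binned confusing colors and `bin_sizes` the number of pixels in each bin; both names are illustrative. A bin joins the cluster of maximum membership and contributes all of its pixels to that cluster's cardinality. We assume that rank 1 denotes the largest cluster.

```python
def cluster_cardinalities(U, bin_sizes):
    """Definition 1: each bin joins its maximum-membership cluster with all its pixels."""
    card = [0] * len(U[0])
    for memberships, size in zip(U, bin_sizes):
        k = max(range(len(memberships)), key=memberships.__getitem__)
        card[k] += size
    return card

def rank_by_cardinality(card):
    """Cluster indices sorted by decreasing cardinality (highest rank first)."""
    return sorted(range(len(card)), key=lambda k: card[k], reverse=True)

card = cluster_cardinalities([[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]], [10, 50, 5])
order = rank_by_cardinality(card)
```

Counting pixels rather than bins is what ties the rank to image area: a cluster built from few but heavily populated bins still ranks high.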

Proper subsets of the confusion lines of Judd's revised chromaticity diagram [3,9] for protanopia and deuteranopia are employed; the total number of confusion lines used depends on D, which refers to either protanopia or deuteranopia. Colors belonging to the same confusion line cannot be discriminated from one another and are perceived as a single color by the color-blind. Using the standard point-to-line distance, each color of V and U is assigned to its closest confusion line. The distance of a point to the confusion line that passes through the copunctal point and a given point of the chromaticity diagram is computed with the standard formula for the distance between a point and a line defined by two points (Equation (12)).
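The standard point-to-line distance and the assignment of each chromaticity point to its closest confusion line can be sketched as below. Each line is described by the copunctal point `p` and one further point `q`; the coordinates in the usage example are hypothetical placeholders, not Judd's actual confusion lines.

```python
import math

def point_line_distance(v, p, q):
    """Distance from point v to the line through the copunctal point p and point q."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    # |cross(q - p, v - p)| / |q - p|
    return abs(dx * (p[1] - v[1]) - (p[0] - v[0]) * dy) / math.hypot(dx, dy)

def assign_to_lines(points, copunctal, line_points):
    """Index of the closest confusion line for each chromaticity point."""
    return [min(range(len(line_points)),
                key=lambda k: point_line_distance(v, copunctal, line_points[k]))
            for v in points]

# Hypothetical geometry: copunctal point at the origin, two lines along the axes.
labels = assign_to_lines([(0.5, 0.1), (0.1, 0.5)], (0.0, 0.0), [(1.0, 0.0), (0.0, 1.0)])
```

Because all confusion lines share the copunctal point, only the second point changes from line to line, which keeps the per-line cost to a single cross product and norm.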

Each confusion line is labeled "occupied" if at least one color of V or U has been assigned to it, and "non-occupied" otherwise. The non-occupied confusion lines form a separate set, whose cardinality is the number of lines available for translation.

Next, we identify the colors of V that must be translated to different confusion lines. For an occupied confusion line, the following cases can occur:

- Case 1: A confusion line contains at least one color from the set U. If it also contains colors from the set V, then all these colors are going to be translated to separate confusion lines.

- Case 2: A confusion line does not contain colors from the set U, but it contains at least two colors from the set V. In this case, the color with the lowest rank remains on the confusion line, while the rest of the colors are translated to different confusion lines.

- Case 3: A confusion line contains only one color, which belongs to the set V. In this case, no color is going to be translated.
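The three cases can be sketched as a grouping of the colors of V by their assigned confusion line. In this sketch, lines are identified by integer indices, and we assume that rank 1 denotes the highest-ranked (largest) cluster, so "the color with the lowest rank" in Case 2 is the one with the largest rank number. The function names are illustrative.

```python
def colors_to_translate(v_lines, u_lines, ranks):
    """Apply Cases 1-3 and return the sorted indices of V colors that must move.

    v_lines[i]: confusion line assigned to the i-th color of V
    u_lines:    confusion lines assigned to the colors of U
    ranks[i]:   rank of the i-th color of V (1 = highest rank)
    """
    occupied_by_u = set(u_lines)
    by_line = {}
    for i, line in enumerate(v_lines):
        by_line.setdefault(line, []).append(i)
    to_move = []
    for line, idx in by_line.items():
        if line in occupied_by_u:              # Case 1: every V color on the line moves
            to_move.extend(idx)
        elif len(idx) >= 2:                    # Case 2: the lowest-ranked color stays
            stay = max(idx, key=lambda i: ranks[i])
            to_move.extend(i for i in idx if i != stay)
        # Case 3: a single V color alone on its line stays where it is
    return sorted(to_move)

def non_occupied_lines(n_lines, v_lines, u_lines):
    """Indices of confusion lines that hold no color of V or U."""
    occupied = set(v_lines) | set(u_lines)
    return [k for k in range(n_lines) if k not in occupied]
```

For example, with `v_lines = [3, 3, 5, 7, 7, 7]`, `u_lines = [5]`, and ranks 1 to 6, colors 0, 2, 3, and 4 move, while colors 1 and 5 stay as the lowest-ranked colors on their lines.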

The identified colors to be translated form a subset of V. The point-to-line distances (Equation (12)) between these colors, which are points on the chromaticity diagram, and the non-occupied confusion lines are calculated. In addition, the standard orthogonal projection of each such point onto each non-occupied line is calculated.

Then, an iterative algorithm takes place. Each iteration involves the following steps.

- Step 1: The algorithm identifies the color with the highest rank among the colors still to be translated.

- Step 2: The non-occupied confusion line closest to that color is determined.

- Step 3: The color is translated to its projection point on that line.

- Step 4: Since the color has been moved, it is deleted from the set of colors to be translated; likewise, the selected line is now occupied and is deleted from the set of non-occupied confusion lines.

Using this iterative process, all colors to be translated are recolored. Finally, the recoloring of V is obtained by combining the translated colors with the colors of V that were not translated.

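Algorithm 1 summarizes the above steps.

| Algorithm 1: Translation process of the colors belonging to the set V |
| --- |
| Inputs: the colors of V to be translated, the set of non-occupied confusion lines, and the ranks of the colors; Output: the recoloring of V |
| Initialize working copies of the set of colors to be translated and of the set of non-occupied confusion lines |
| While both working sets are non-empty do |
| Step 1: select the color with the highest rank |
| Step 2: determine the non-occupied confusion line closest to that color |
| Step 3: translate the color to its projection point on that line |
| Step 4: delete the color and the line from their working sets |
| End While |
| Combine the translated colors with the colors of V that were not translated |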
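The greedy loop of Algorithm 1 can be sketched as follows, assuming the confusion lines are given by the copunctal point and one further point each, and that rank 1 denotes the highest rank. The rare case discussed below, in which a projection falls outside the chromaticity diagram, is not handled in this sketch; all names are illustrative.

```python
import math

def project_on_line(v, p, q):
    """Orthogonal projection of point v onto the line through p and q."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    t = ((v[0] - p[0]) * dx + (v[1] - p[1]) * dy) / (dx * dx + dy * dy)
    return (p[0] + t * dx, p[1] + t * dy)

def distance_to_line(v, p, q):
    return math.dist(v, project_on_line(v, p, q))

def translate_colors(points, ranks, to_move, free_lines, copunctal, line_points):
    """Greedy translation: highest-ranked color first, onto its closest free line.

    points: chromaticities of V; ranks: 1 = highest; to_move: indices to translate;
    free_lines: indices of non-occupied lines. Returns the recolored points of V.
    """
    result = list(points)
    pending = sorted(to_move, key=lambda i: ranks[i])  # highest rank first
    free = list(free_lines)
    while pending and free:
        i = pending.pop(0)                                    # pick the highest-ranked color
        best = min(free, key=lambda k: distance_to_line(points[i], copunctal, line_points[k]))
        result[i] = project_on_line(points[i], copunctal, line_points[best])
        free.remove(best)                                     # the line is now occupied
    return result

# Hypothetical geometry: lines through the origin and (1, 0), (1, 1), (0, 1).
res = translate_colors([(1.0, 0.05), (0.9, 0.1)], [2, 1], [0, 1], [1, 2],
                       (0.0, 0.0), [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)])
```

In the example, the higher-ranked color (index 1) claims the nearby diagonal line, and the lower-ranked color is pushed to the remaining, more distant line, which is the behavior discussed in Remark 2.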

Remark 1.

Regarding Algorithm 1, we note the following.

1. Two or more colors may move to distant confusion lines. Although this increases contrast, naturalness is compromised.

2. It is recommended that the number of non-occupied confusion lines be at least equal to the number of colors to be translated, so that all of these colors move to different confusion lines. We performed extensive experiments on the Flowers and Fruits data sets, which contain 195 calibrated color images taken from McGill's calibrated color image database [41], and found that this condition is effective for the color segmentation of the input image. However, depending on the designer's choice, if the condition does not hold, the non-occupied confusion lines may be exhausted and some of the key colors to be translated will not be moved. In this case, naturalness will be enhanced and contrast will be reduced.

Remark 2.

In Steps 1–3, the key color with the highest rank is translated first, and the key color with the lowest rank last. Given that a higher-ranked key color corresponds to a larger image area, the reasons behind this choice are as follows:

1. Consider an occupied confusion line that falls under Case 1. The line contains key colors from both U and V, so all key colors of V lying on it must be translated. Translating the highest-ranked key color first moves it to its closest non-occupied confusion line, so its final color stays close to the original one, whereas a low-ranked key color may be moved to a distant non-occupied confusion line. With this strategy, large image areas are recolored with colors similar to the original ones, and only small image areas receive substantially different colors, which helps the recolored image satisfy the naturalness criterion. Conversely, if the low-ranked key colors were moved first, the opposite effect would occur and the naturalness of the recolored image would be seriously compromised.

2. Consider an occupied confusion line that falls under Case 2. The line contains only key colors from V, so all but one of them must be translated. If the low-ranked key colors were moved first, the non-occupied confusion lines closest to this line would be exhausted, and the higher-ranked key colors would be forced onto distant confusion lines. Large image areas would then be recolored with colors very different from the original ones, seriously damaging naturalness. In that alternative, the highest-ranked key color would remain unchanged; however, there is no guarantee that this counterbalancing effect would be strong enough to restore naturalness.

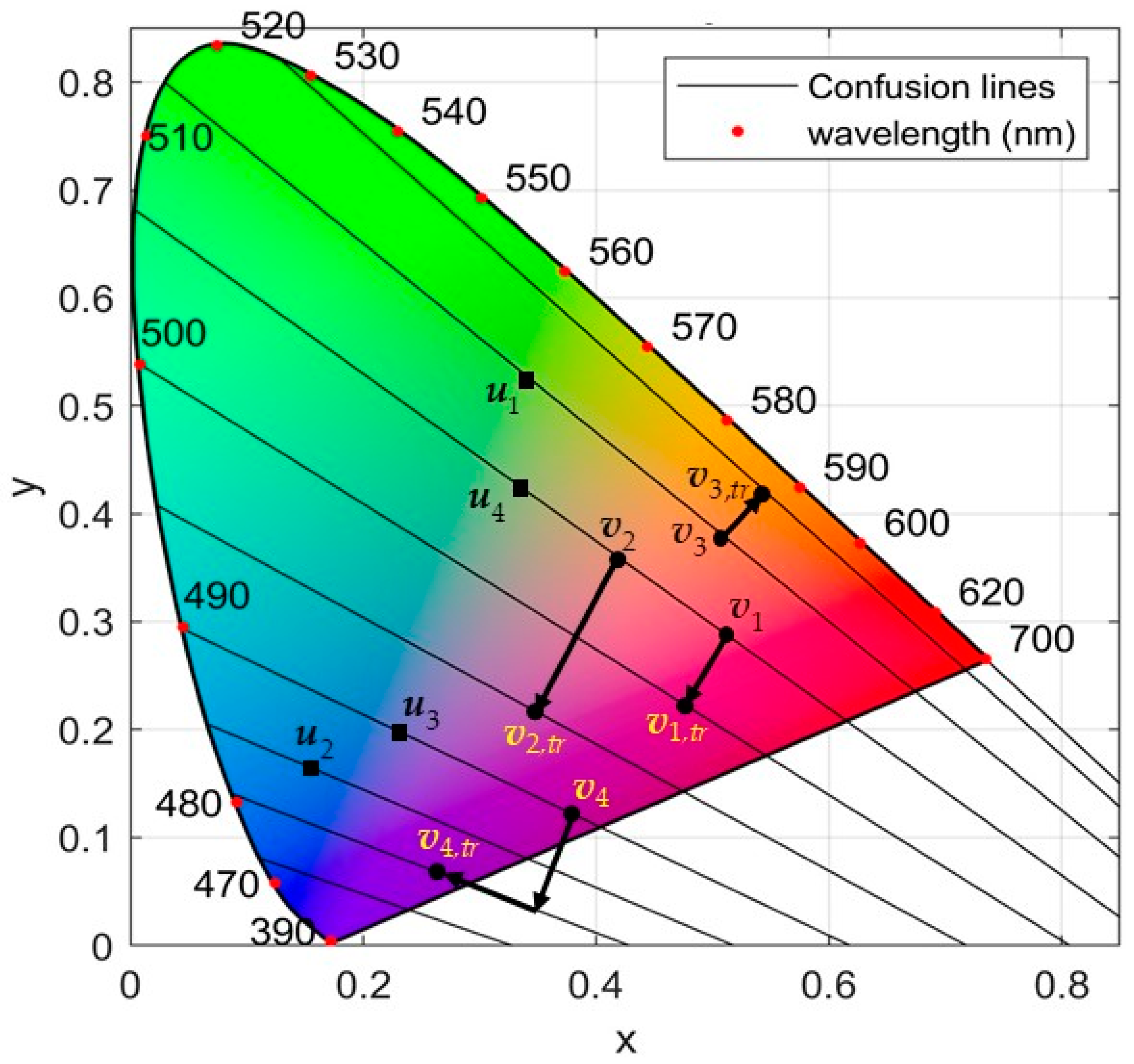

Figure 3 illustrates the mechanism of Algorithm 1 using four color translations. Note that the projection of one of the colors lies outside the chromaticity diagram. In this rare situation, the final position of the color is selected on the same confusion line, at a very small distance above the diagram's lower boundary, called the "line of purples", which contains non-spectral colors. Since the color still moves along the same confusion line, the result is the same for the color-blind.

Figure 3.

The key color translation process.

So far, the translation process has focused on the chromaticity coordinates x and y of the colors (see Equations (7) and (9)); the luminance coordinates have not yet been considered. A simple approach would be to leave the luminance values unchanged. However, in view of the difficulties reported in Remarks 1 and 2, keeping the input image's luminance might lead to suboptimal results with respect to the naturalness and contrast criteria. Therefore, we further optimize the adapted key colors by manipulating their luminance values only, which is the task of the next module. For now, we complete the analysis of the current module. Based on Equations (9) and (18), each recolored confusing key color takes the chromaticity coordinates of its recolored point in V (Equation (20)).

Using Equations (5), (7), (18), and (20), the recoloring of the confusing key colors in the xyY space therefore combines these recolored chromaticity coordinates with new luminance values that are still to be determined.

These recolored luminance values will be determined in the next section through an optimization procedure.

3.4. Module 3: Key Color Optimization

Having estimated the recolored chromaticity coordinates of the confusing key colors, the respective luminance values should be defined as functions of the input image's luminance values. If the recolored luminance values are much larger than the original ones, the image becomes much brighter; if they are much smaller, it becomes much darker. Imposing such aggressive changes on the input image's luminance would upset the balance between naturalness and contrast. To resolve this problem, we restrict the recolored luminance values to the domain given in Equation (23).

In general, this relation means that the recolored luminance lies within an interval centered at the original luminance Y, with a fixed radius, while never leaving the interval [0, 100]. The radius should be neither large nor small; through trial and error, we determined a credible value for it.
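Written out with our own symbols (δ for the radius of the interval and Y^rec for the recolored luminance; neither symbol is taken from the original equation), the verbal description above amounts to the following bound, which clips the symmetric interval around Y to [0, 100]:

$$
\max\{0,\; Y - \delta\} \;\le\; Y^{\mathrm{rec}} \;\le\; \min\{100,\; Y + \delta\}
$$

For example, with Y = 95 and δ = 10, the admissible range is [85, 100] rather than [85, 105].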

The set is mapped from the xyY space into the RGB space as , where, based on Equations (4), (20), and (21), each element of is,

Note that the above inverse mapping defines the as a function of the corresponding luminance . The dichromat simulation of is,

Five sets are involved in the optimization, namely given in Equation (3), reported in Equation (4) and its dichromat simulation in Equation (25), and in Equation (3) and its dichromat simulation . Recalling that and respectively include the key colors that correspond to the clusters of the image's confusing and non-confusing colors, the color differences between the elements of and as perceived by a normal trichromat are determined as . After the recoloring, the same differences as seen by a dichromat are defined between the elements of the sets and as . To enable a dichromat to perceive color differences in the same way as a normal trichromat does, and thus to enhance the contrast of the recolored image, these two sets of distances should be as similar as possible [32],

Following the same reasoning between the elements of and [32],

Thus, and quantify the contrast enhancement of the recolored image [32]. To preserve naturalness, the following error function is employed [32],

Based on the above analysis, the overall optimization approach is defined as,

with respect to the luminance variables .
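The structure of the regularized objective can be sketched under explicit assumptions: Euclidean distances between key colors, squared mismatches for the two contrast terms, a squared-distance naturalness term, and the regularization factor `lam` placed on the naturalness term purely for illustration. The exact forms of Equations (26)–(28) follow [32] and may differ (for example, in the color space or normalization used); all function and argument names are hypothetical.

```python
import numpy as np


def pairwise_distances(A, B):
    """Euclidean distance between every row of A (m x 3) and every row of B (n x 3)."""
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)


def recoloring_cost(conf, nonconf, recolored, sim_recolored, sim_nonconf, lam):
    """Hypothetical contrast-plus-naturalness cost.

    conf          -- original confusing key colors (as seen by a normal trichromat)
    nonconf       -- original non-confusing key colors
    recolored     -- recolored confusing key colors
    sim_recolored -- dichromat simulation of `recolored`
    sim_nonconf   -- dichromat simulation of `nonconf`
    lam           -- positive regularization factor
    """
    # Contrast term 1: distances among confusing key colors, trichromat view before
    # recoloring vs. dichromat view after it (each pair is counted twice; harmless).
    j1 = np.sum((pairwise_distances(conf, conf)
                 - pairwise_distances(sim_recolored, sim_recolored)) ** 2)
    # Contrast term 2: the same comparison between confusing and non-confusing key colors.
    j2 = np.sum((pairwise_distances(conf, nonconf)
                 - pairwise_distances(sim_recolored, sim_nonconf)) ** 2)
    # Naturalness term: recolored key colors should stay close to the originals.
    j3 = np.sum((recolored - conf) ** 2)
    return j1 + j2 + lam * j3
```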

As indicated in Equation (23) . Note that Equation (24) defines the colors as functions of . The parameter is a positive regularization factor that balances contrast against naturalness.
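Because each recolored key color depends on its luminance through the xyY-to-RGB mapping, this mapping has to be re-evaluated for every candidate luminance. A minimal sketch, assuming the standard linear-sRGB (D65) matrix and the sRGB gamma encoding in place of the paper's Equation (4), with Y on the 0-100 scale used above:

```python
import numpy as np

# Linear-sRGB (D65) matrix from the sRGB standard; assumed here, since the
# paper's Equation (4) may use a different RGB space.
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])


def xyY_to_srgb(x, y, Y):
    """Map chromaticity (x, y) and luminance Y (0-100 scale) to sRGB values in [0, 1]."""
    Y = Y / 100.0
    X = x * Y / y                       # requires y > 0
    Z = (1.0 - x - y) * Y / y
    linear = XYZ_TO_LINEAR_SRGB @ np.array([X, Y, Z])
    linear = np.clip(linear, 0.0, 1.0)  # out-of-gamut colors are simply clipped
    # sRGB transfer function (gamma encoding).
    return np.where(linear <= 0.0031308,
                    12.92 * linear,
                    1.055 * np.power(linear, 1 / 2.4) - 0.055)
```

Clipping is the simplest way to handle out-of-gamut results; how the original method treats them is not stated in this section.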

To perform the above optimization, the well-known differential evolution (DE) algorithm is applied [36]. DE proceeds through three evolutionary phases, namely mutation, crossover, and selection. It also involves two predefined learning parameters: (a) the parameter that controls the population's evolving rate, denoted as , and (b) the parameter that controls the fraction of the feature values copied from one generation to the next, denoted as . The population consists of particles. Each particle encodes the luminance variables, . Thus, the dimension of the feature space in which DE searches for a solution is . The maximum number of iterations is .
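The three DE phases can be sketched in plain NumPy as the classic DE/rand/1/bin variant. Mapping `F` to the evolving-rate parameter and `CR` to the copy fraction, as well as the default values, are assumptions for illustration and not the settings of Table 1. In the recoloring problem, `cost` would evaluate the regularized objective for a vector of luminance values, and the bounds would be the admissible luminance intervals.

```python
import numpy as np


def differential_evolution(cost, lower, upper, q=30, F=0.5, CR=0.9, max_iter=200, seed=0):
    """Minimize `cost` over the box [lower, upper] with DE/rand/1/bin (needs q >= 4).

    q  -- population size (the "particles" of the text)
    F  -- differential weight scaling the mutation step
    CR -- crossover rate: probability of taking each feature from the mutant
    """
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pop = lower + rng.random((q, dim)) * (upper - lower)  # uniform initialization
    fit = np.array([cost(p) for p in pop])
    for _ in range(max_iter):
        for i in range(q):
            # Mutation: combine three distinct particles other than i.
            a, b, c = rng.choice([j for j in range(q) if j != i], size=3, replace=False)
            mutant = np.clip(pop[a] + F * (pop[b] - pop[c]), lower, upper)
            # Binomial crossover; force at least one feature from the mutant.
            cross = rng.random(dim) < CR
            cross[rng.integers(dim)] = True
            trial = np.where(cross, mutant, pop[i])
            # Greedy selection: keep the trial if it is no worse.
            f_trial = cost(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = int(np.argmin(fit))
    return pop[best], fit[best]
```

For example, minimizing the toy quadratic `lambda v: float(np.sum((v - 3.0) ** 2))` over [0, 10]³ should return a point close to (3, 3, 3).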

The result of the above-mentioned optimization is the final calculation of the recoloring set .

3.5. Module 4: Cluster-to-Cluster Color Transfer

This module produces the final recolored image. The key idea is to operate on the clusters , the respective cluster centers , and the recolored centers obtained in the previous module, with and .

According to Definition 1, each cluster includes pixels from the input image that correspond to confusing colors. These pixels, along with the cluster center and its recoloring, are mapped from RGB to the space as

and

Reinhard et al. [39,40] developed a technique for transferring color between images. In this paper, we adopt that technique to perform color transfer between clusters. A detailed analysis of the technique is given in [39,40].

Our goal is to recolor the pixels that belong to the cluster . To this end, we first calculate the standard deviation vector of the cluster in color space.

Assuming that is the center of a hypothetical cluster that has the same cardinality as and the same standard deviation , we use the mechanism of Reinhard et al. [39,40] to transfer the color of this hypothetical cluster to the original cluster and obtain the recoloring of each pixel as follows,

The colors are mapped back to the RGB space as,

Finally, the recolored image is generated by recoloring all pixels belonging to the clusters , while the rest of the pixels remain unchanged.
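The per-cluster transfer can be sketched with the mean and standard-deviation matching of Reinhard et al.: each channel is centered, scaled by the ratio of target to source standard deviation, and shifted to the target mean. Two assumptions are made: the pixels are already expressed in a decorrelated color space (Reinhard et al. use lαβ), and the cluster center plays the role of the source mean. When the hypothetical target cluster has the same standard deviation as the source, as assumed in the text, the scale factor is 1 and the transfer reduces to shifting every pixel by the difference between the recolored and the original center.

```python
import numpy as np


def cluster_color_transfer(pixels, center, new_center, target_std=None):
    """Reinhard-style statistics transfer applied to a single cluster.

    pixels     -- (n, 3) pixel colors of the cluster in a decorrelated color space
    center     -- (3,) cluster center, used here in place of the cluster mean
    new_center -- (3,) recolored center (mean of the hypothetical target cluster)
    target_std -- (3,) spread of the hypothetical target cluster; defaults to the
                  cluster's own standard deviation, as assumed in the text
    """
    pixels = np.asarray(pixels, dtype=float)
    source_std = pixels.std(axis=0)
    if target_std is None:
        target_std = source_std
    target_std = np.asarray(target_std, dtype=float)
    # Per-channel scale sigma_target / sigma_source (1 where a channel is constant).
    scale = np.divide(target_std, source_std,
                      out=np.ones_like(source_std), where=source_std > 0)
    return scale * (pixels - center) + new_center
```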

3.6. Computational Complexity Analysis

In this section, the computational complexity of the algorithm is evaluated in terms of distance calculations per iteration.

First, Module 1 is considered. Recalling that the size of the input image is , the number of distance calculations involved in the generation of the bins is less than . Also, it is easily verified that for the implementation of Equations (1) and (2), distance calculations are needed. On the other hand, it is well known that the complexity of the fuzzy c-means for c clusters and data is [35]. As indicated previously by Equation (3) and the corresponding analysis, the sets and , defined in Equations (1) and (2), are separately clustered by the fuzzy c-means into and fuzzy clusters, respectively. Thus, the computational complexity of this procedure is . By setting , and taking into account that it follows that . In total, for Module 1 we get . By considering the maximum of and it follows that . Thus, the computational complexity of Module 1 is .

In Module 2, the mappings and the ranking process take place only once, and their cost is negligible. Thus, there are only two dominant contributions: the first is related to the implementation of Equation (12) and the second to the implementation of Algorithm 1. Equation (12) is applied between the elements of the sets V and U and the confusion lines. The number of distance calculations is . In Algorithm 1, step 1 involves fewer than calculations, while step 2 involves fewer than . Since and , the number of calculations for Algorithm 1 is less than . Thus, the total number of calculations in Module 2 is less than , and since is fixed, the computational complexity of Module 2 is .

In Module 3, the estimations of , and (see Equations (26), (27), and (28)) involve , , and distance calculations, respectively. It can be easily shown that the sum of the above numbers is less than . In addition, the implementation of the differential evolution is carried out using q particles. Thus, the total number of distance calculations performed in this module is less than . Since q is fixed, the computational complexity of Module 3 is .

Regarding Module 4, the dominant cost is the implementation of Equation (33). The resulting number of calculations is , and the computational complexity of Module 4 is .

Thus, the overall computational complexity is . It can easily be shown that , which implies that the overall computational complexity reduces to .

4. Experimental Evaluation

The performance of the proposed method was evaluated and compared with that of three related methods. The first, called here Method 1, is the algorithmic scheme developed by Huang et al. [25]. The second, called here Method 2, was introduced by Wong and Bishop [27]. Finally, the third, called here Method 3, was developed by Ching and Sabudin [28]. The basic structures of the three methods are described in Section 2. It should be emphasized that Method 2 produces a single recolored image for both protanopia and deuteranopia [27]; only the dichromat simulations of that recolored image differ between the two cases. Therefore, the quantitative results obtained by this method are the same for protanopia and deuteranopia. Table 1 lists the parameter settings of the proposed method. For the other three methods, the parameter settings were the same as those reported in the respective papers.

Table 1.

Parameter setting for the proposed method.

Two data sets were used. The first includes the Flowers and Fruits data sets taken from McGill's calibrated color image database (http://tabby.vision.mcgill.ca (accessed on 5 January 2021)) [41]. The Flowers data set includes 143 natural images and the Fruits data set 52, for a total of 195 natural images. Figure 4 illustrates four of those images.

Figure 4.

A sample of four images included in the Flowers and Fruits data sets taken from the McGill’s calibrated color image database.

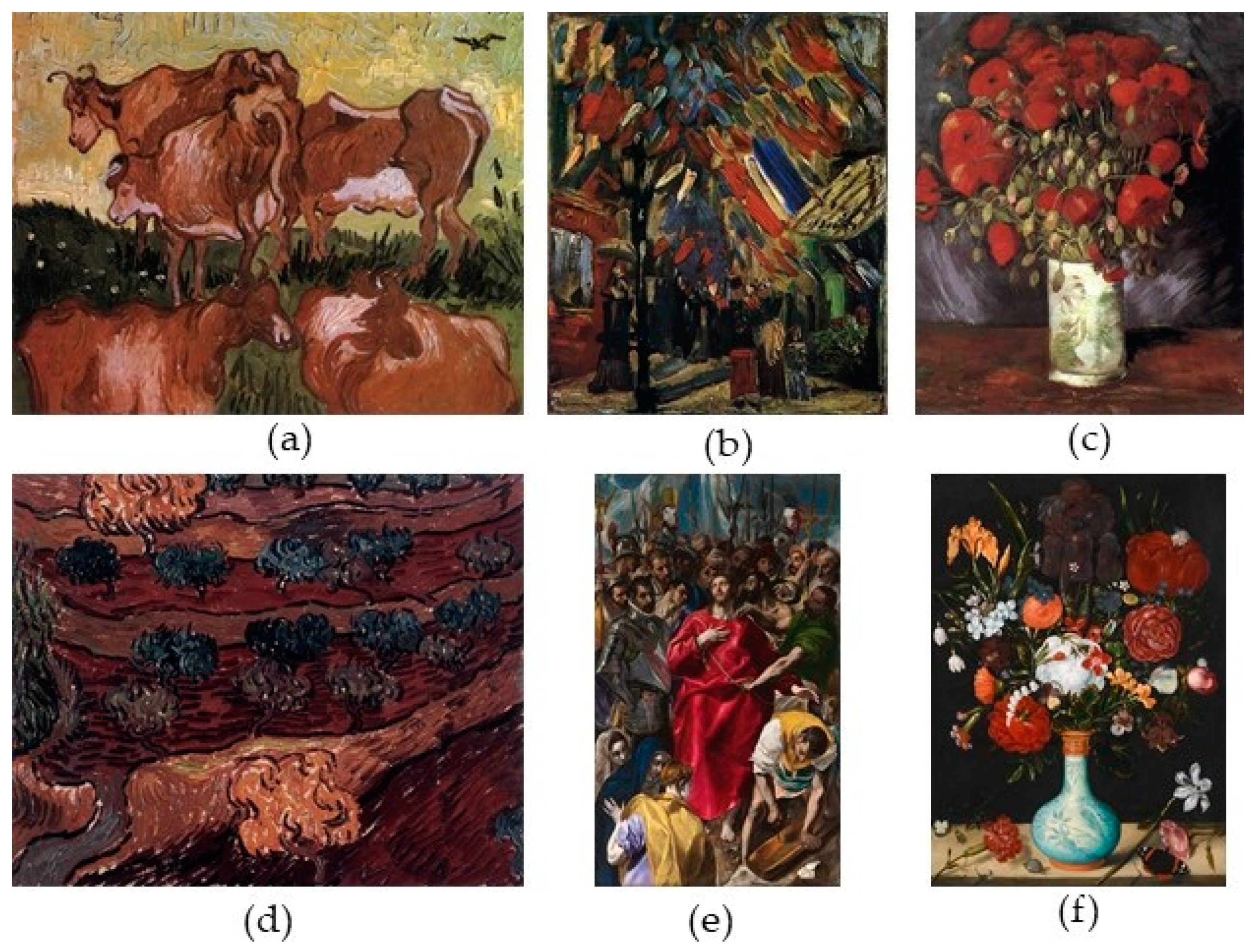

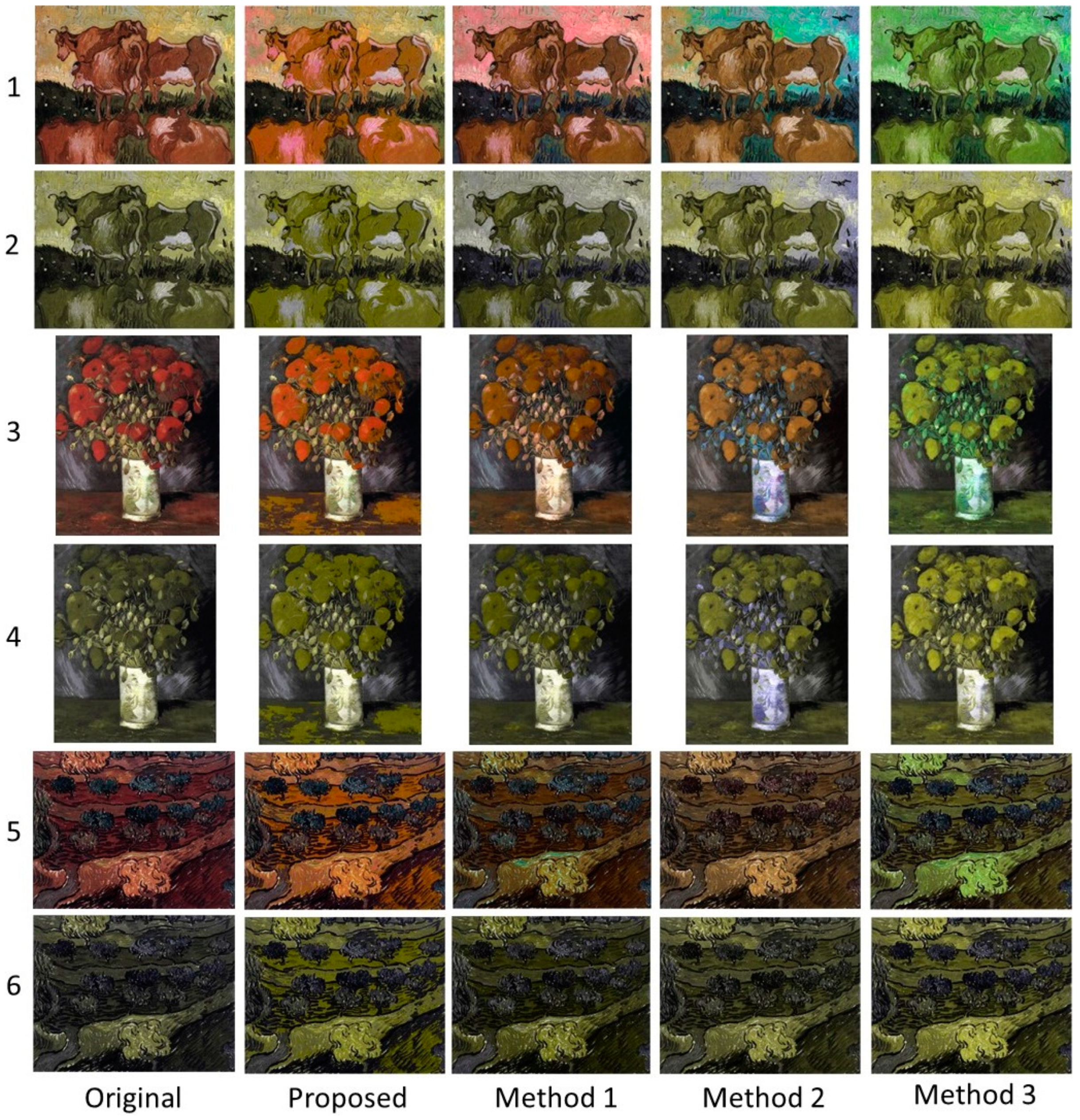

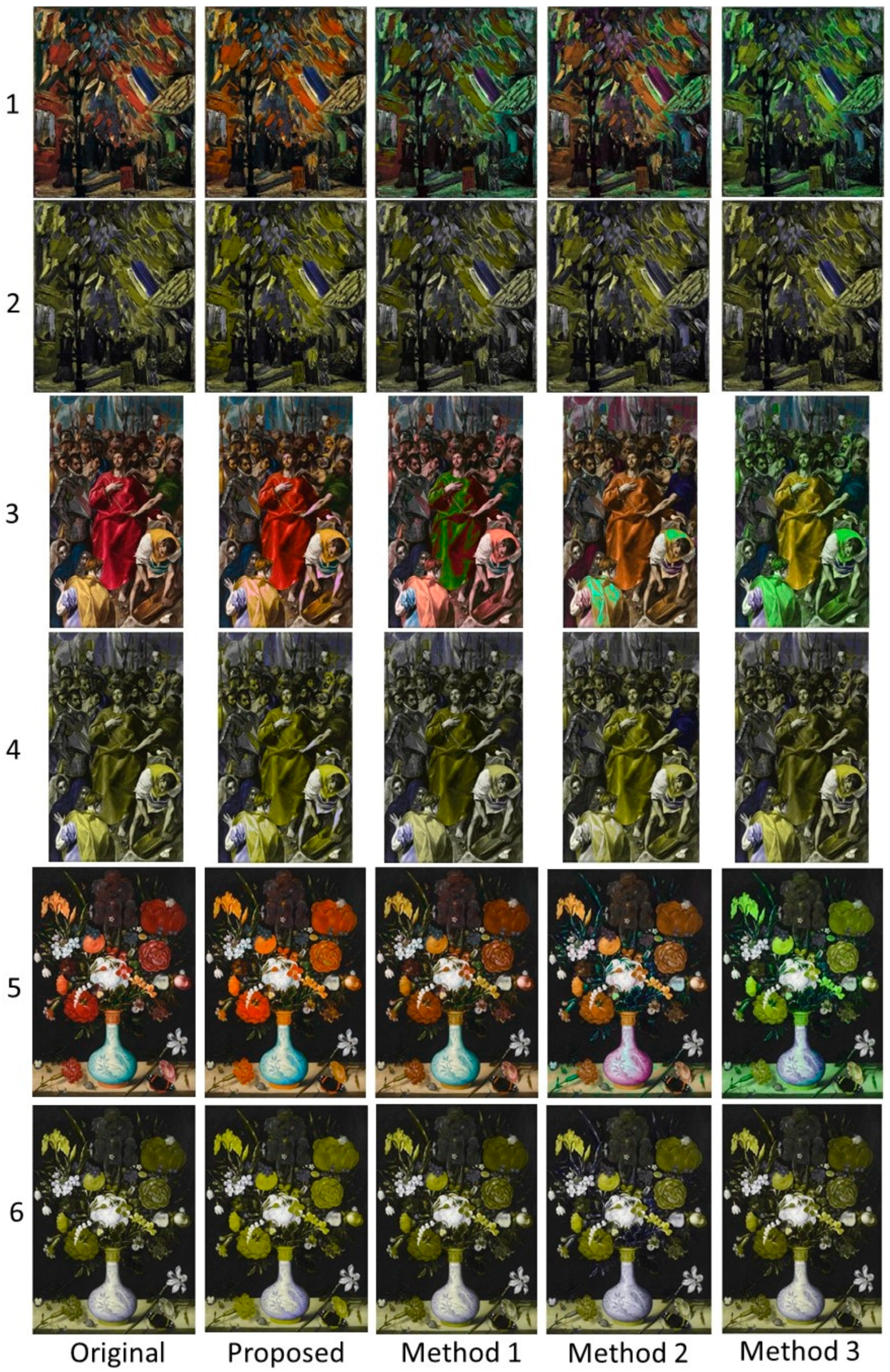

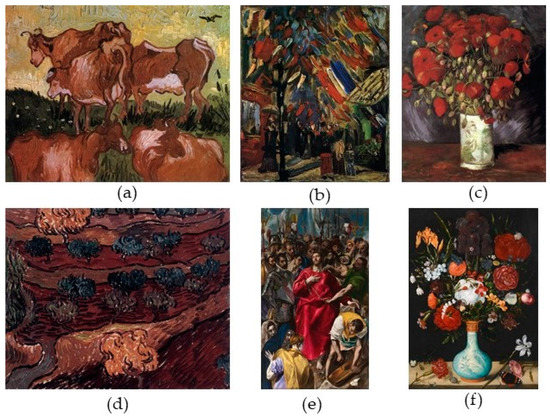

The second data set includes six paintings taken from the Web Gallery of Art (https://www.wga.hu (accessed on 28 September 2020)), which are depicted in Figure 5.

Figure 5.

(a) Painting 1, (b) Painting 2, (c) Painting 3, (d) Painting 4, (e) Painting 5, and (f) Painting 6. Paintings 1–4 were created by Vincent van Gogh, Painting 5 by El Greco, and Painting 6 by Ambrosius Bosschaert.

To perform the overall comparison, three kinds of experimental evaluation (quantitative, qualitative, and subjective) were conducted; they are described in the following subsections.

4.1. Quantitative Evaluation

To conduct the comparative quantitative evaluation of the four methods, two performance indices were employed. First, the naturalness index Jnat [17,22,32], for which lower values indicate better preservation of naturalness.

Second, the feature similarity index FSIMc developed in [42]. FSIMc takes values in [0, 1] and quantifies the chrominance information of the recolored image in relation to the original image; a higher value indicates that the chrominance information of the recolored image is closer to that of the original image. The interested reader may refer to [42] for a detailed description of the index, while a brief derivation of its basic structure is given in Appendix A.

4.1.1. Quantitative Evaluation Using the Data Set of the Art Paintings

In this evaluation, the six art paintings are used to compare the four methods. Specifically, the values of the two indices Jnat and FSIMc obtained by the four methods are directly compared, and the translation process is illustrated diagrammatically.

Table 2 presents the results for the naturalness index. Except for Painting 6 (for both protanopia and deuteranopia), the proposed method outperforms the other three methods, indicating a high-quality natural appearance of the recolored images when viewed by a normal trichromat. This is also supported by the subjective evaluation presented in Section 4.3.

Table 2.

Naturalness index (Jnat) values obtained by the four methods for protanopia and deuteranopia considering the paintings reported in Figure 5.

Table 3 reports the FSIMc index values obtained by the four methods for both dichromacy cases, and here the picture is more mixed. In the protanopia case, Method 1 appears very competitive with our proposed method. Similar results are obtained for deuteranopia, where, in addition, Method 3 gives the best index value for Painting 1. Overall, the proposed method performs competitively with the other methods, which indicates that the chrominance information of the recolored paintings remains close to that of the originals.

Table 3.

Feature similarity index (FSIMc) values obtained by the four methods for protanopia and deuteranopia considering the paintings reported in Figure 5.

Figure 6 depicts a sample of two key color translations as described in Algorithm 1. The first refers to Painting 1 and concerns the protanopia case, while the second refers to Painting 5 and concerns the deuteranopia case.

Figure 6.

Translation process (see Algorithm 1) for the confusing key colors: (a) Painting 1 for the protanopia case; (b) Painting 5 for the deuteranopia case.

4.1.2. Quantitative Evaluation Using the Data Set of Natural Images

Herein, the four methods are compared by means of statistical analysis using the two indices above, over the 195 calibrated natural images taken from McGill's color image database [41].

For both protanopia and deuteranopia, we explored differences between the four methods separately for the Jnat index and the FSIMc index, using nonparametric methods for statistical inference. For each CVD-type-by-index combination, we employed an overall 0.05 significance level. The Friedman test was used to assess differences in median between the four methods. Follow-up pairwise comparisons between our proposed method and each of the other three were conducted using the Wilcoxon signed-rank procedure. The confidence intervals and associated p-values of the follow-up comparisons reported in this paper are Bonferroni adjusted to ensure an overall 0.05 type-I error rate for each CVD-type-by-index combination. A detailed report of the results of the statistical analysis follows, separately for each CVD type.
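The omnibus test and the multiplicity adjustment can be sketched with the standard library alone. The code computes the classical Friedman statistic from within-image ranks (without the tie correction that, for example, `scipy.stats.friedmanchisquare` applies), its chi-square p-value for k = 4 methods (3 degrees of freedom), and Bonferroni-adjusted p-values for a set of follow-up comparisons. The Wilcoxon signed-rank tests themselves are available as `scipy.stats.wilcoxon` and are not reimplemented here; the function names below are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks 1..k of the values in one block (one image), averaging ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1  # mean of the tied 1-based positions
        start = end + 1
    return ranks


def friedman_statistic(table):
    """table[b][j]: index value of method j on image b (n images x k methods)."""
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(R * R for R in rank_sums) - 3.0 * n * (k + 1)


def chi2_sf_3df(x):
    """P(chi-square with 3 degrees of freedom > x); 3 = k - 1 for k = 4 methods."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


def bonferroni(p_values):
    """Bonferroni adjustment: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```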

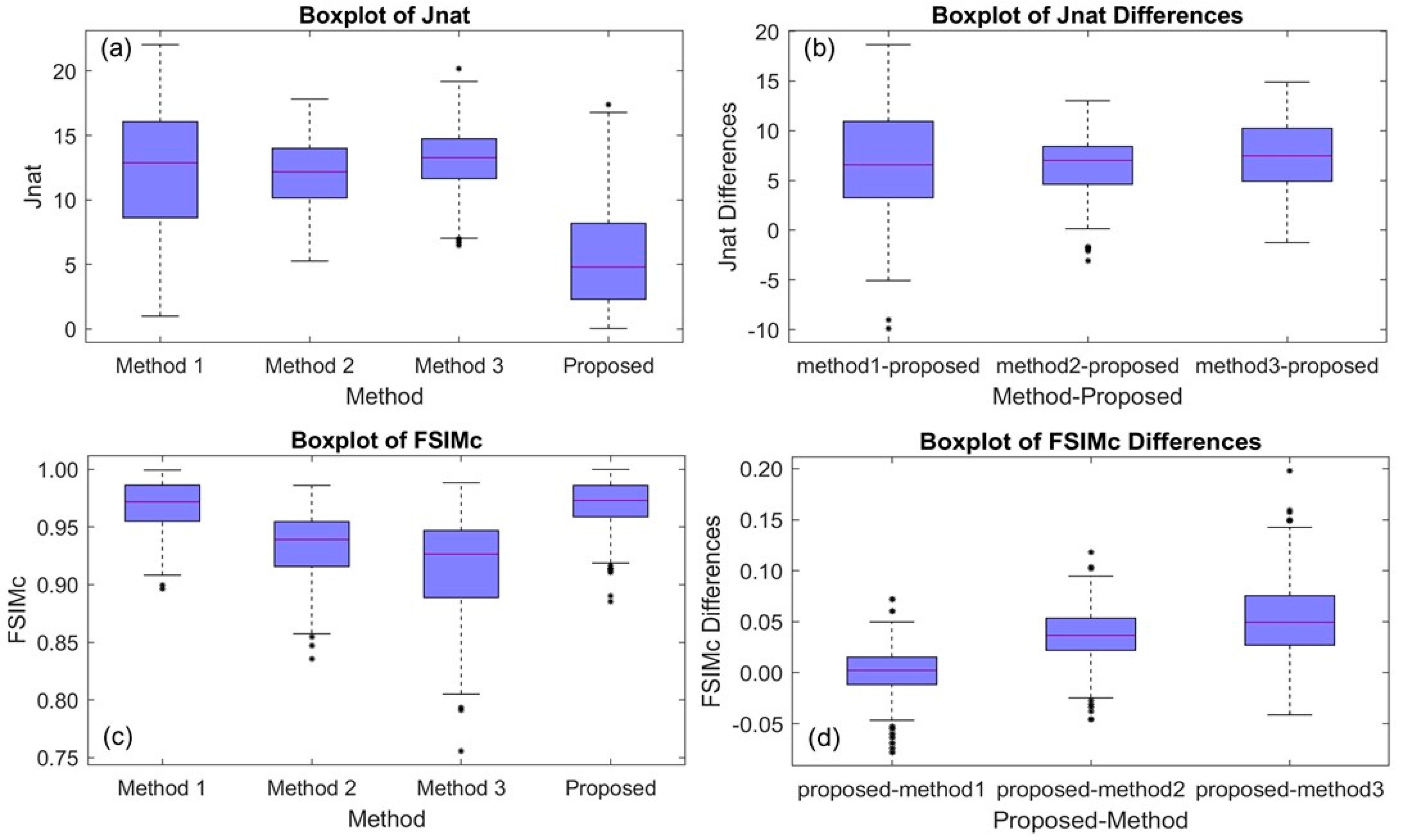

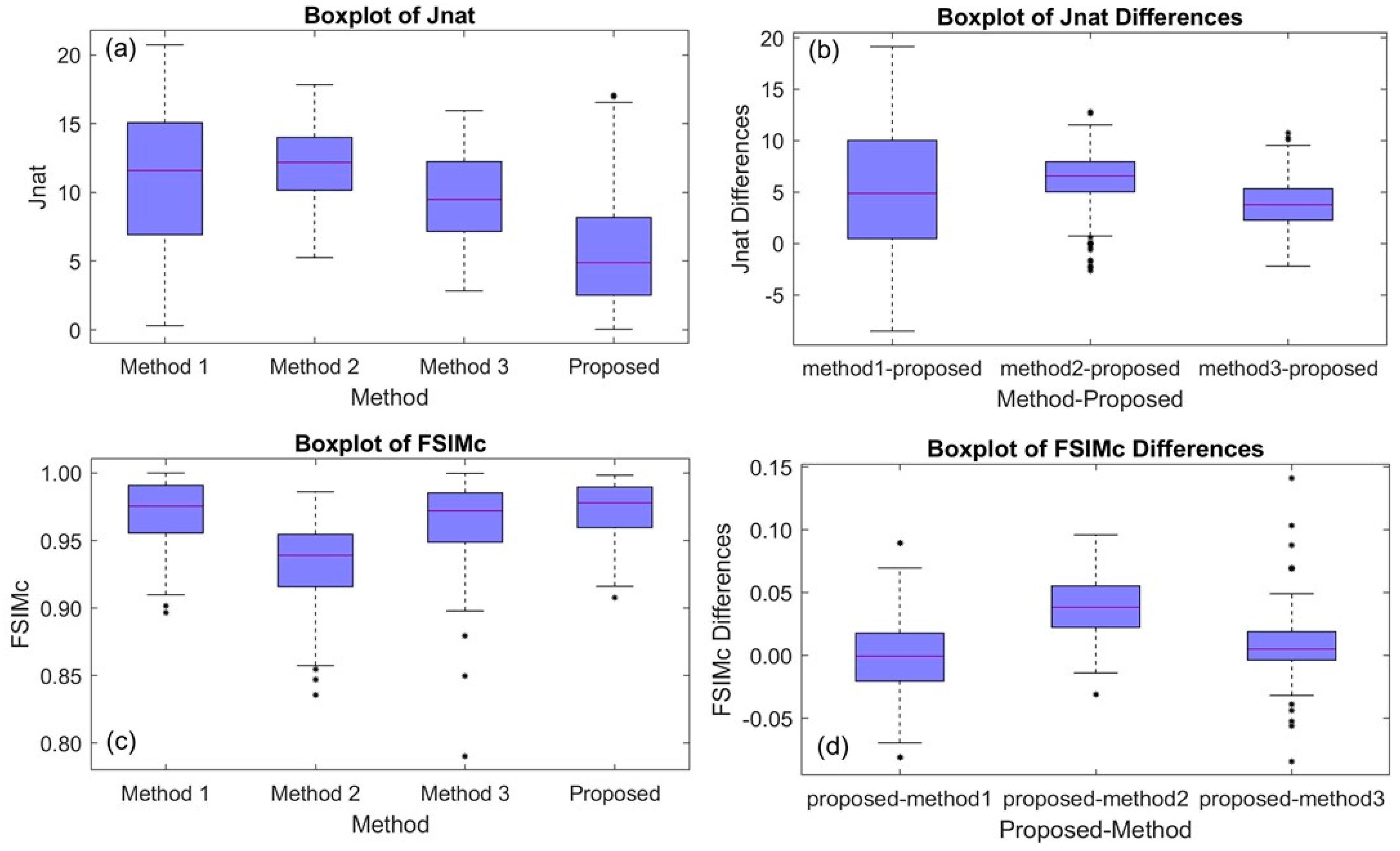

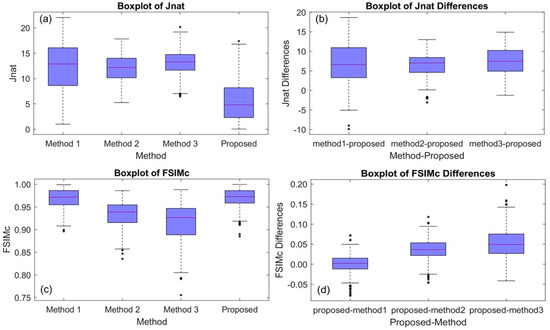

Table 4 and Figure 7a summarize the Jnat results for protanopia. There were statistically significant differences in median Jnat between the four methods. The overall p-value was <0.001 (based on the Friedman test).

Table 4.

Descriptive Statistics of the Jnat and FSIMc indices for the protanopia case considering the McGill’s Flowers and Fruits data sets (in total 195 images).

Figure 7.

Boxplots for protanopia case considering the McGill’s Flowers and Fruits data sets (in total 195 images): (a) the Jnat values (see Table 4), (b) Jnat differences between the three competing methods and the proposed method (see Table 5), (c) FSIMc values (see Table 4), and (d) FSIMc differences between the three competing methods and the proposed method (see Table 5).

Table 5 and Figure 7b provide a summary of the differences in Jnat (competing method value–proposed method value). For each competing method, the median difference was statistically significantly greater than zero. The p-values are Bonferroni adjusted and based on the Wilcoxon signed-rank testing procedure. We conclude that our proposed method was superior to the other three.

Table 5.

Descriptive statistics of the observed Jnat and FSIMc differences between the three competing methods and the proposed method for the protanopia case considering the McGill’s Flowers and Fruits data sets (in total 195 images).

Table 4 and Figure 7c summarize the FSIMc results for protanopia. There were statistically significant differences in median FSIMc between the four methods (p-value < 0.001).

Table 5 and Figure 7d provide a summary of the differences in FSIMc (proposed method value–competing method value). The p-values are Bonferroni adjusted and based on the Wilcoxon signed rank testing procedure. Our proposed method was superior to Method 2 and Method 3. On the other hand, the median difference in FSIMc between our method and Method 1 was not statistically significant.

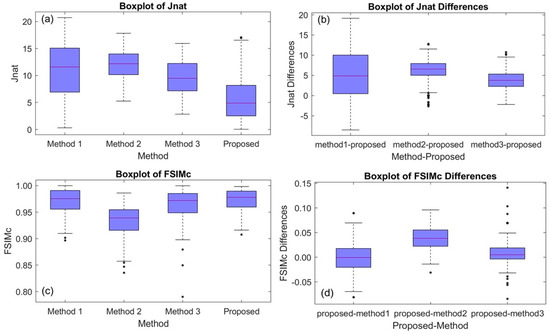

Table 6 and Figure 8a summarize the Jnat results for deuteranopia. There were statistically significant differences in median Jnat between the four methods. The overall p-value was <0.001 (based on the Friedman test).

Table 6.

Descriptive statistics of the Jnat and FSIMc indices for the deuteranopia case considering the McGill’s Flowers and Fruits data sets (in total 195 images).

Figure 8.

Boxplots for deuteranopia case considering the McGill’s Flowers and Fruits data sets (in total 195 images): (a) the Jnat values (see Table 6), (b) Jnat differences between the three competing methods and the proposed method (see Table 7), (c) FSIMc values (see Table 6), and (d) FSIMc differences between the three competing methods and the proposed method (see Table 7).

Table 7 and Figure 8b provide a summary of the differences in Jnat between our proposed method and the other three methods (competing method value–proposed method value). The median difference in Jnat between our method and any competing method was statistically significantly greater than zero. The p-values are Bonferroni adjusted and based on the Wilcoxon signed rank testing procedure. Our proposed method was superior to the other three methods.

Table 7.

Descriptive statistics of the observed Jnat and FSIMc differences between the three competing methods and the proposed method for the deuteranopia case considering the McGill’s Flowers and Fruits data sets (in total 195 images).

Table 6 and Figure 8c summarize the FSIMc results for deuteranopia. There were statistically significant differences in median FSIMc between the four methods (p-value < 0.001).

Finally, Table 7 and Figure 8d provide a summary of the differences in FSIMc (proposed method value–competing method value). The p-values are Bonferroni adjusted and based on the Wilcoxon signed rank testing procedure. We conclude that our proposed method was superior to Method 2 and Method 3. On the other hand, the median difference in FSIMc between our method and Method 1 was not statistically significant.

In summary, there were statistically significant differences between the four methods for all four CVD-type-by-index combinations. Follow-up testing revealed that: (a) our proposed method performed better than the other three methods in terms of Jnat, for both protanopia and deuteranopia; (b) our proposed method performed better than Method 2 and Method 3 in terms of FSIMc, for both protanopia and deuteranopia; and (c) there was no statistically significant difference in FSIMc between our proposed method and Method 1, for either protanopia or deuteranopia.

4.2. Qualitative Evaluation

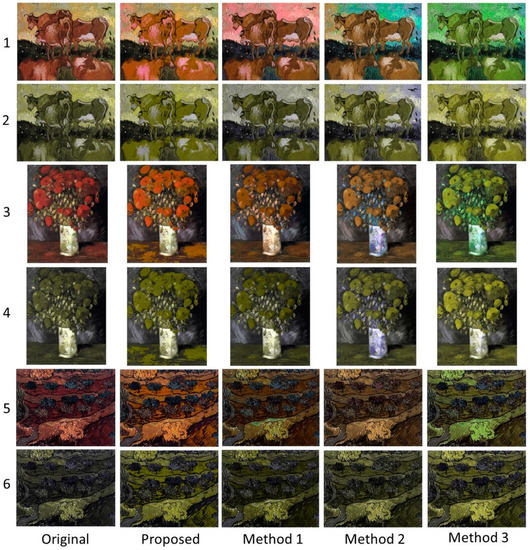

In this section, a visual comparison is conducted using the paintings in Figure 5. Figure 9 illustrates the results for protanopia using Paintings 1, 3, and 4. For Painting 1 (rows 1 and 2), the recolored image produced by the proposed method clearly enhances the contrast of the depicted cows, while only softly modifying the background and the grass, thus maintaining the overall naturalness of the painting. These properties carry over to the dichromat simulation of the recolored painting. In contrast, Methods 1 and 2 enhance the contrast mainly by modifying the background and the grass, a strategy that clearly degrades naturalness. Method 3 also changes the background, but less than Methods 1 and 2; to enhance contrast, it mainly uses pure green for the cows, resulting in an unnatural rendering of the painting.

Figure 9.

Qualitative comparison for protanopia using Paintings 1, 3, and 4. The original and the recolored paintings are given in rows 1, 3, and 5, while their respective protanope simulations are given in rows 2, 4, and 6.

In the case of Painting 3 (rows 3 and 4), the colors used by the proposed method are closer to those of the original painting. Moreover, the contrast (especially in the dichromat simulation) is enhanced compared with the other methods, as is evident at the edge separating the flowers from the background. Among the competing methods, Method 1 appears to be the most competitive with ours. In Method 2, the colors of the dichromat simulation look similar to those of the respective recolored painting. Although the same characteristic appears in the paintings obtained by Method 3, its contrast provides better object discrimination than Methods 1 and 2.

In Painting 4 (rows 5 and 6), the contrast enhancement achieved by the proposed method is evident. Specifically, the method chooses colors that enable the color-blind to easily discriminate between different areas of the painting, an effect that can be attributed to the luminance optimization process. In this respect, Methods 2 and 3 appear more competitive with our method, while Method 1 does not enhance the contrast significantly.

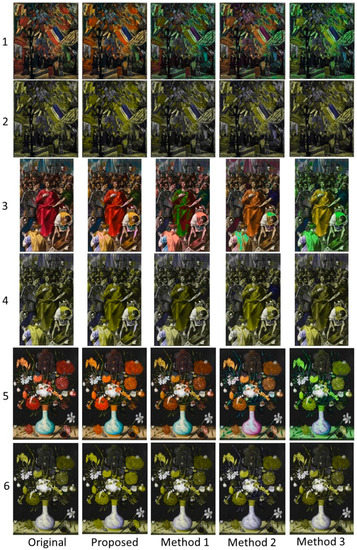

Figure 10 summarizes the results for deuteranopia using Paintings 2, 5, and 6. For Painting 2 (rows 1 and 2), Methods 1 and 3 perform the color adaptation using mainly green, while Method 2 differs slightly from these two. The colors adapted by the proposed method are closer to the original colors, retaining the painting's aesthetics and therefore its naturalness. Note that in the simulated painting of the proposed method, the different areas of the painting are clearly distinguishable from one another.

Figure 10.

Qualitative comparison for deuteranopia using Paintings 2, 5, and 6. The original and the recolored paintings are given in rows 1, 3, and 5, while their respective deuteranope simulations are given in rows 2, 4, and 6.

In the case of Painting 5 (rows 3 and 4), the proposed algorithm achieves the best tradeoff between naturalness and contrast among the four methods. For example, the color of the cloak in our recolored painting resembles that of the original, while the dichromat simulation shows a more pleasant contrast enhancement than those of the other three methods.

Finally, for Painting 6 (rows 5 and 6), the results support the above remarks. The proposed method clearly chooses more pleasant colors, retaining the overall aesthetics of the painting. Moreover, in the dichromat simulation, the contrast is effectively enhanced, giving the color-blind better discrimination.

4.3. Subjective Evaluation

The four methods were evaluated through pairwise comparisons performed by 28 volunteers. The responses were completely anonymous, and no personally identifiable information was captured. Each volunteer took the well-known Ishihara test [43], which identifies whether a person is a protanope, a deuteranope, or has normal color vision. The test indicated that eight volunteers had normal color vision, two were protanopes, seven protanomalous trichromats, three deuteranopes, and eight deuteranomalous trichromats.

Color confusions of protanopes and protanomalous trichromats are qualitatively similar, and the same holds for deuteranopes and deuteranomalous trichromats [3,44]. This justifies the terms "protan" for protanopia/protanomaly and "deutan" for deuteranopia/deuteranomaly [3,44]. Accordingly, three groups were formed: (a) Group 1, including the eight viewers with normal color vision; (b) Group 2, including the nine protan viewers; and (c) Group 3, including the 11 deutan viewers. The tested images were the six paintings shown in Figure 5. The evaluation used the following three questions. Q1: "Which image looks more similar to the original one?" Q2: "Which image has the most pleasant contrast?" Q3: "What is your overall preference?" The first question refers to naturalness, the second to contrast, and the third to the overall appearance of the recolored images.

For Group 1, two experiments took place. In the first, called experiment 1, viewers indicated their binary preferences for the above questions on the images recolored for protanopia (protan case). Given six images and eight viewers in Group 1, each pair of methods was compared using 48 binary choices. Since there are six method pairs, the total number of binary choices (called preference scores) was 288 per question. In the second experiment, called experiment 2, the viewers followed the same procedure on the images recolored for deuteranopia (deutan case), again yielding 288 preference scores per question.

For Groups 2 and 3, question Q1 was discarded, because protans and deutans do not perceive the original image correctly, so any attempt on their part to judge whether a recolored image is similar to the original would fail. Therefore, for Groups 2 and 3, only questions Q2 and Q3 were considered, for the protanopia and deuteranopia recolored paintings, respectively. Given six images and nine viewers in Group 2, each pair of methods was compared using 54 binary choices; with six method pairs, the total was 324 binary preferences per question. Following the same analysis for Group 3, which included 11 participants, we obtained 396 binary choices per question.
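The counts of binary choices quoted above follow from simple arithmetic: each viewer judges every painting once per method pair, and four methods give six pairs. A small helper (hypothetical name) reproduces them:

```python
from math import comb


def preference_counts(n_images, n_viewers, n_methods=4):
    """Binary choices per method pair and in total, for one question."""
    per_pair = n_images * n_viewers   # every viewer judges every image once per pair
    n_pairs = comb(n_methods, 2)      # 4 methods -> 6 pairs
    return per_pair, per_pair * n_pairs
```

With six paintings, 8, 9, and 11 viewers give (48, 288), (54, 324), and (66, 396), matching the totals reported for Groups 1, 2, and 3.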

The pairwise-comparison data were analyzed using Thurstone's law of comparative judgment, Case V [45]. Case V is a classical tool for ranking items from individuals' subjective binary choices between pairs of stimuli. In his seminal paper [45], Thurstone proposed calculating an average preference score for each item, which Mosteller later showed to be the solution of a least-squares optimization problem [46]. In this paper, the items correspond to the four methods, and the Thurstone–Mosteller model is used to estimate the average preference scores.
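A compact sketch of Case V scaling in Mosteller's least-squares form: the win proportions are converted into standard normal deviates and averaged per item. The clipping of unanimous proportions (`eps`) is a common practical safeguard assumed here, not a detail taken from the paper, and the confidence intervals shown in the figures are not computed by this sketch.

```python
from statistics import NormalDist


def thurstone_case_v(wins, eps=0.01):
    """Case V scale values (Mosteller's least-squares solution).

    wins[i][j] -- number of times item i was preferred over item j.
    Proportions are clipped to [eps, 1 - eps] so that unanimous pairs give finite z-scores.
    """
    k = len(wins)
    inv_cdf = NormalDist().inv_cdf
    scores = []
    for i in range(k):
        total = 0.0
        for j in range(k):
            if i == j:
                continue  # z_ii = 0: an item is tied with itself
            n = wins[i][j] + wins[j][i]
            p = wins[i][j] / n if n else 0.5
            total += inv_cdf(min(max(p, eps), 1 - eps))
        scores.append(total / k)  # average over all k items, including z_ii = 0
    return scores
```

Because z_ij = -z_ji, the resulting scores sum to zero, so only their differences are meaningful.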

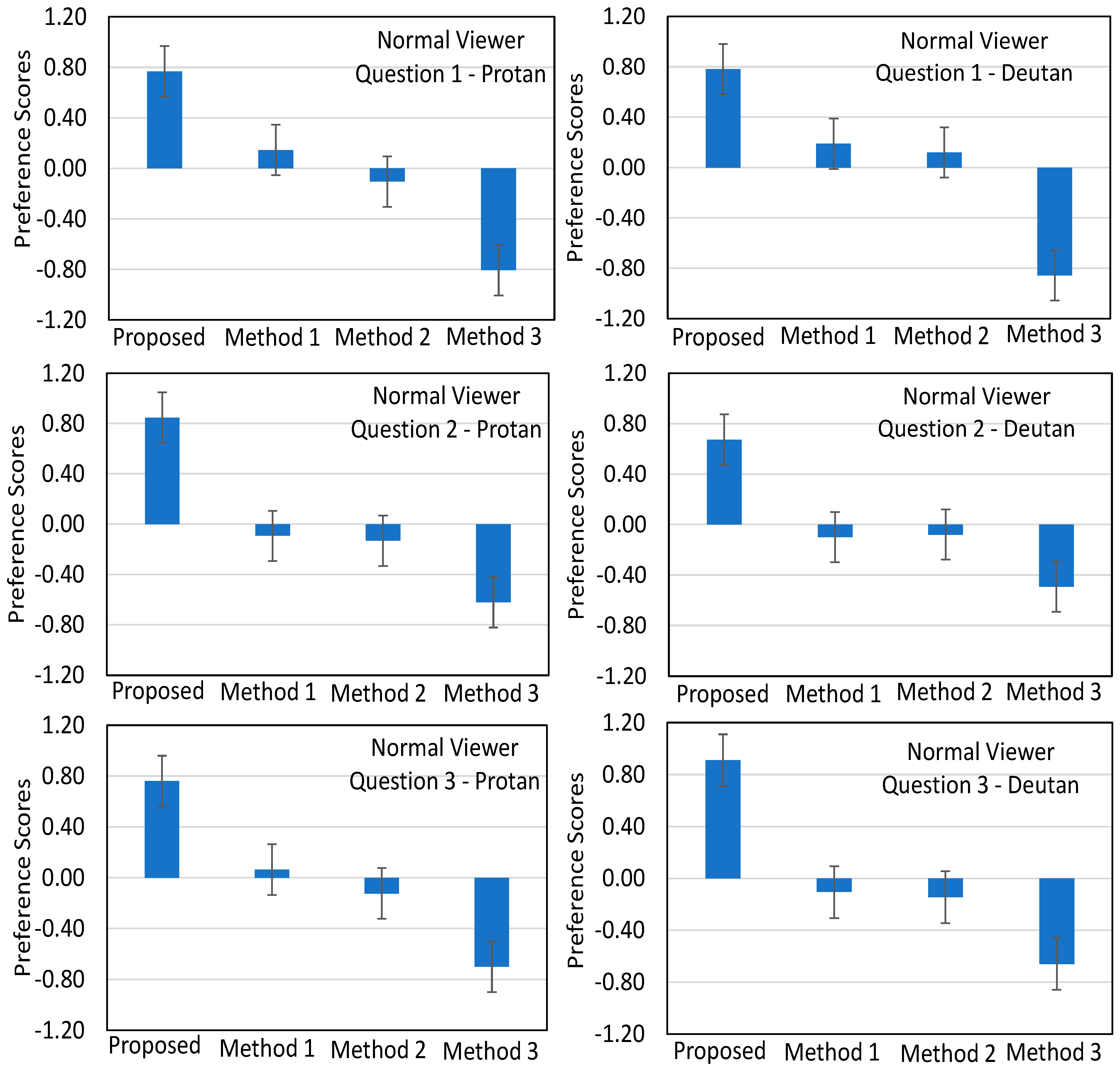

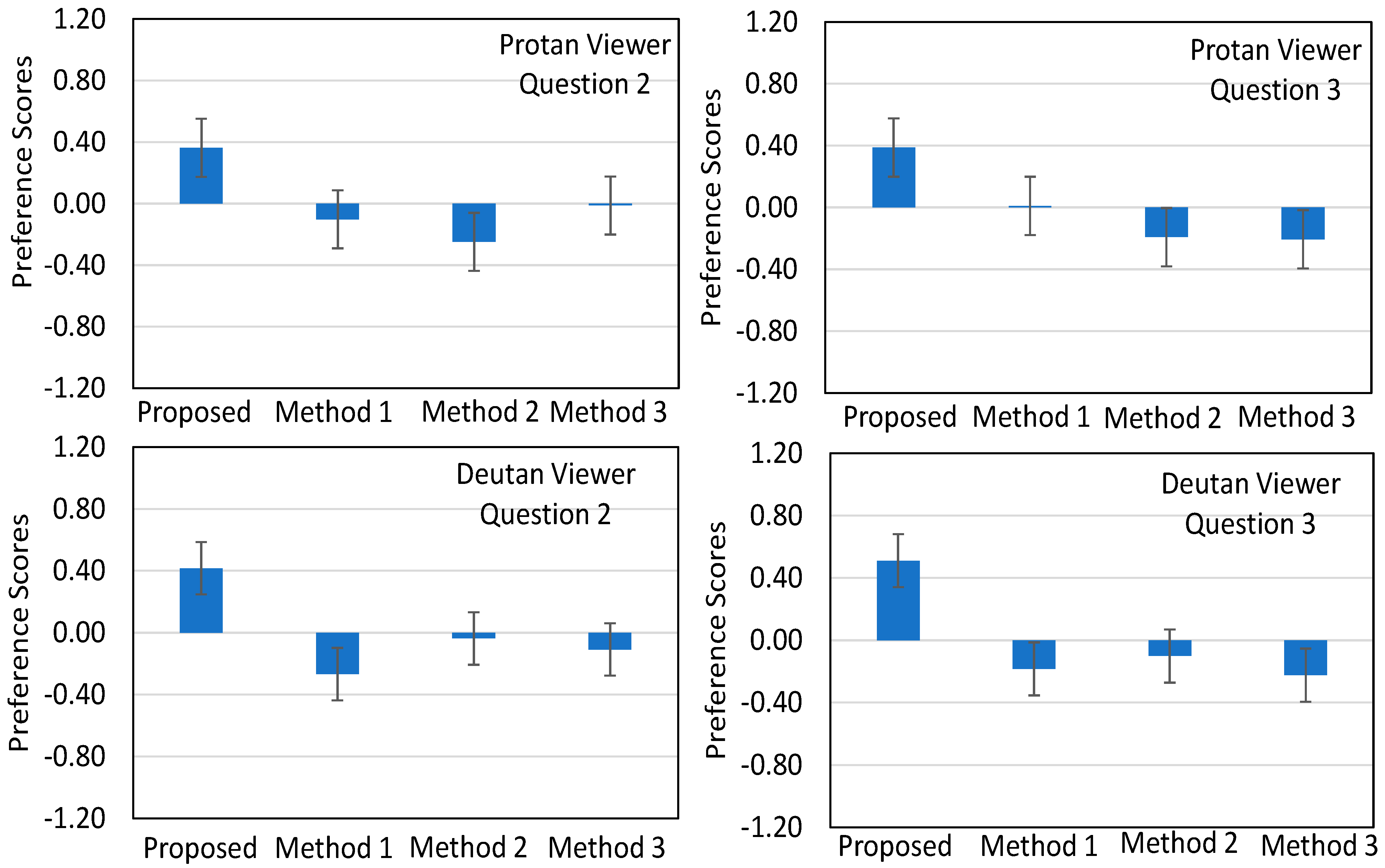

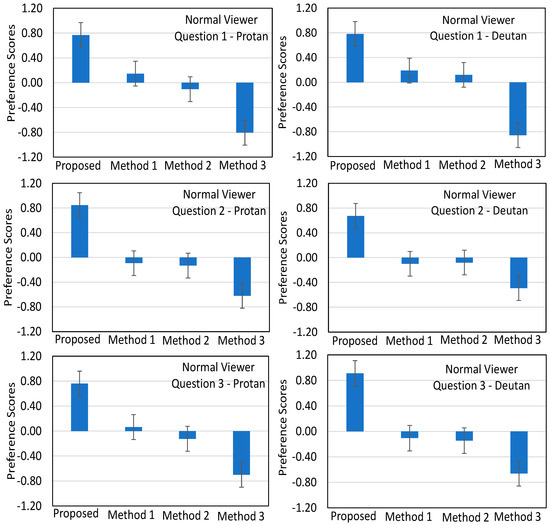

The results from the above analysis are depicted in Figure 11 for the Group 1, and in Figure 12 for the Groups 2 and 3.

Figure 11.

Average preference scores (blue column bars) and the corresponding 95% confidence intervals (error bars) of the pairwise comparisons for the participants belonging to Group 1: (left column) reports the results for experiment 1 (recolored paintings for protanopia) for the three questions, and (right column) reports the results for the experiment 2 (recolored paintings for deuteranopia) for the three questions.

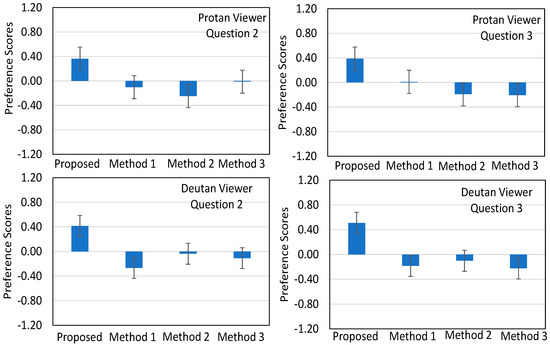

Figure 12.

Average preference scores (blue column bars) and the corresponding 95% confidence intervals (error bars) of the pairwise comparisons for the participants belonging to Groups 2 and 3: (first row) reports the results for the protan viewers regarding the protanopia recolored paintings and Questions 2 and 3, and (second row) reports the results for the deutan viewers regarding the deuteranopia recolored paintings and Questions 2 and 3.

Figure 11 illustrates the average preference scores and the corresponding 95% confidence intervals of the pairwise comparisons between the four methods for the participants with normal color vision, regarding the three questions Q1, Q2, and Q3. Recalling that Q1 refers to naturalness, Q2 to contrast, and Q3 to the overall appearance of the recolored paintings, the following observations can be made.

First, the analysis indicates that the viewers with normal color vision clearly preferred the proposed method for all questions and for both cases of recolored paintings (protan and deutan). In particular, the participants' preference for the proposed method is most evident in the Question 2 (protan), Question 2 (deutan), and Question 3 (deutan) cases. Second, the method most competitive with ours is Method 1 (Huang et al. [25]); except in the Question 2 (deutan) case, it outperformed the other two competing methods. Third, Method 2 (Wong and Bishop [27]) performs better than Method 3 (Ching and Sabudin [28]).

Figure 12 illustrates the average preference scores and the corresponding 95% confidence intervals of the pairwise comparisons between the four methods for Groups 2 and 3, regarding Questions 2 and 3. The results again indicate the superiority of the proposed method, in agreement with Figure 11. However, the margin between the proposed method and the other three is clearly smaller for both protans and deutans than in Figure 11. Among the other three methods, Method 2 outperforms the others in the two cases involving deutan viewers (second row in Figure 12), while Method 1 and Method 3 each do so in only one case.

In summary, the results of the subjective evaluations show that the proposed method improves the visual details, enhances the contrast, and provides a pleasant appearance for normal color vision viewers as well as for protans and deutans.

5. Discussion and Conclusions

This contribution presents a novel method to modify color images for the protanopia and deuteranopia color vision deficiencies. The method consists of four modules applied in sequence. The first module performs color segmentation of the input image and generates a number of key colors that may be confusing or non-confusing for the color-blind. The second module maps the key colors onto the CIE 1931 chromaticity diagram, where a sophisticated mechanism moves confusing key colors that lie on the same confusion line to different confusion lines so that the color-blind can discriminate them. Specifically, each confusing key color is translated to its closest non-occupied confusion line. Next, the modified key colors are further optimized in the third module through a regularized objective function. Finally, the fourth module obtains the recolored image by adapting, through a color transfer technique, the pixels of the input image that correspond to confusing key colors.

The method is designed around two basic criteria. The first is preserving the natural appearance of the recolored image relative to the original input image; the second is enhancing the contrast between different image areas. The former relates to preserving the aesthetics of the recolored image as perceived by a viewer with normal color vision, whereas the latter concerns the discrimination of image areas that include colors confused by the color-blind.

Both criteria are accounted for throughout the method, as the following remarks show. First, only the image pixels corresponding to confusing key colors are recolored. Thus, the part of the input image that corresponds to non-confusing colors remains unchanged, which favors the naturalness criterion. Second, in the key color translation process, key colors that lie on the same confusion line are moved to different confusion lines. This enhances the contrast, because colors that were initially confused can now be discriminated by the color-blind. Moreover, since each confusing key color is moved to its closest non-occupied confusion line, the recolored key colors remain close to the original ones, preserving naturalness. Finally, the optimization procedure of the third module takes both criteria into account jointly and yields an optimized recoloring of the input image.

To validate the proposed method, extensive experimental studies were conducted, which demonstrated improved performance compared with three other recoloring methods. Future research could proceed in the following directions: first, extending the methodology to cope with tritanopia and anomalous trichromacy; second, developing more effective machine learning approaches for the recoloring process; and third, implementing more sophisticated optimization procedures to maintain an optimal tradeoff between naturalness preservation and contrast enhancement.

Author Contributions

G.E.T. proposed the idea, formulated the method, and wrote the first draft of the paper; A.R. and S.C. developed the software and proposed substantial modifications regarding the method; J.T. and C.-N.A. performed the statistical analysis; K.K. and G.C. performed the experiments. All authors contributed to writing the final version of the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research is co-financed by Greece and the European Union (European Social Fund-ESF) through the Operational Programme “Human Resources Development, Education and Lifelong Learning 2014–2020” in the context of the project “Color perception enhancement in digitized art paintings for people with Color Vision Deficiency” (MIS 5047115).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank the anonymous reviewers for providing constructive comments for improving the overall manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

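In this Appendix, a brief description of the FSIMc index is given; further details can be found in reference [42]. The analysis involves the input image $f_1$ and its recolored image $f_2$, both consisting of $N$ pixels indexed by $k$, $1 \le k \le N$.

To obtain the FSIMc index, both images are transformed into the YIQ color space. For the $k$-th pixel, the transformation of $f_1$ is $(Y_1(k), I_1(k), Q_1(k))$ and, correspondingly, the transformation of $f_2$ is $(Y_2(k), I_2(k), Q_2(k))$. For the luminance channel Y only, we calculate for each pixel the phase congruencies (PC), denoted as $PC_1(k)$ and $PC_2(k)$. Likewise, for the luminance channel only, we calculate the respective image gradient magnitudes (G), denoted as $G_1(k)$ and $G_2(k)$.

The maximum of $PC_1(k)$ and $PC_2(k)$ is

$$PC_m(k) = \max\{PC_1(k),\, PC_2(k)\}$$

Next, the following similarity measures are estimated:

$$S_{PC}(k) = \frac{2\,PC_1(k)\,PC_2(k) + T_1}{PC_1^2(k) + PC_2^2(k) + T_1}, \qquad S_G(k) = \frac{2\,G_1(k)\,G_2(k) + T_2}{G_1^2(k) + G_2^2(k) + T_2}, \qquad S_L(k) = S_{PC}(k)\,S_G(k)$$

Similarly, for the chromatic channels I and Q, the following similarity measures are estimated:

$$S_I(k) = \frac{2\,I_1(k)\,I_2(k) + T_3}{I_1^2(k) + I_2^2(k) + T_3}, \qquad S_Q(k) = \frac{2\,Q_1(k)\,Q_2(k) + T_4}{Q_1^2(k) + Q_2^2(k) + T_4}$$

Finally, the FSIMc index is defined as follows:

$$\mathrm{FSIM}_c = \frac{\sum_{k=1}^{N} S_L(k)\,\left[S_I(k)\,S_Q(k)\right]^{\lambda}\,PC_m(k)}{\sum_{k=1}^{N} PC_m(k)}$$

The detailed calculation procedure of the phase congruencies and image gradient magnitudes, as well as the appropriate values for the positive constants $T_i$ ($1 \le i \le 4$) and the exponent $\lambda$, are given in reference [42].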
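To make the pooling in the FSIMc definition concrete, the following Python/NumPy sketch evaluates it for two RGB images. It is a simplified illustration, not the implementation of [42]. The phase-congruency maps `pc1` and `pc2` are assumed to be precomputed (in [42] they come from a log-Gabor filter bank, which is beyond a short example); the gradient magnitude uses NumPy central differences as a stand-in for the Scharr operator used in [42]; and the default constants ($T_1 = 0.85$, $T_2 = 160$, $T_3 = T_4 = 200$, $\lambda = 0.03$) are the values we recall from [42] for 8-bit images and should be checked against that reference. All function names are hypothetical.

```python
import numpy as np

# NTSC RGB -> YIQ matrix (rows: Y, I, Q).
RGB2YIQ = np.array([
    [0.299, 0.587, 0.114],
    [0.596, -0.274, -0.322],
    [0.211, -0.523, 0.312],
])


def rgb_to_yiq(img):
    """Split an H x W x 3 RGB array (0-255 range) into Y, I, Q planes."""
    yiq = img.astype(float) @ RGB2YIQ.T
    return yiq[..., 0], yiq[..., 1], yiq[..., 2]


def gradient_magnitude(y):
    """Simplified stand-in: central differences instead of the Scharr operator."""
    gy, gx = np.gradient(y)
    return np.hypot(gx, gy)


def similarity(a, b, t):
    """Element-wise FSIM similarity term (2ab + T) / (a^2 + b^2 + T)."""
    return (2.0 * a * b + t) / (a ** 2 + b ** 2 + t)


def fsimc(img1, img2, pc1, pc2, T1=0.85, T2=160.0, T3=200.0, T4=200.0, lam=0.03):
    """FSIMc pooling; pc1, pc2 are assumed precomputed phase-congruency maps of Y."""
    y1, i1, q1 = rgb_to_yiq(img1)
    y2, i2, q2 = rgb_to_yiq(img2)
    g1, g2 = gradient_magnitude(y1), gradient_magnitude(y2)

    pc_m = np.maximum(pc1, pc2)                                # PC_m(k)
    s_l = similarity(pc1, pc2, T1) * similarity(g1, g2, T2)    # S_L = S_PC * S_G
    s_c = similarity(i1, i2, T3) * similarity(q1, q2, T4)      # S_I * S_Q
    # S_I * S_Q can be negative, so the fractional power is taken in the
    # complex domain and only its real part is kept.
    s_c_lam = np.real(np.power(s_c.astype(complex), lam))
    return float(np.sum(s_l * s_c_lam * pc_m) / np.sum(pc_m))
```

For identical inputs every similarity term equals 1, so the function returns 1 up to rounding; differences in phase congruency, gradient, or chromaticity at pixels with nonzero $PC_m(k)$ pull the value below 1.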

References

1. Fairchild, M.D. Color Appearance Models; Wiley: West Sussex, UK, 2005.

2. Stockman, A.; Sharpe, L.T. The spectral sensitivities of the middle- and long-wavelength-sensitive cones derived from measurements in observers of known genotype. Vis. Res. 2000, 40, 1711–1737.

3. Smith, V.C.; Pokorny, J. Color matching and color discrimination. In The Science of Color; Elsevier BV: Oxford, UK, 2003; pp. 103–148.

4. Pridmore, R.W. Orthogonal relations and color constancy in dichromatic colorblindness. PLoS ONE 2014, 9, e107035.

5. Fry, G.A. Confusion lines of dichromats. Color Res. Appl. 1992, 17, 379–383.

6. Moreira, H.; Álvaro, L.; Melnikova, A.; Lillo, J. Colorimetry and dichromatic vision. In Colorimetry and Image Processing; IntechOpen: London, UK, 2018; pp. 2–21.

7. Han, D.; Yoo, S.J.; Kim, B. A novel confusion-line separation algorithm based on color segmentation for color vision deficiency. J. Imaging Sci. Technol. 2012, 56, 1–17.

8. Choi, J.; Lee, J.; Moon, H.; Yoo, S.J.; Han, D. Optimal color correction based on image analysis for color vision deficiency. IEEE Access 2019, 7, 154466–154479.

9. Judd, D.B. Standard response functions for protanopic and deuteranopic vision. J. Opt. Soc. Am. 1945, 35, 199–221.

10. Ribeiro, M.; Gomes, A.J. Recoloring algorithms for colorblind people: A survey. ACM Comput. Surv. 2019, 52, 1–37.

11. Wakita, K.; Shimamura, K. SmartColor: Disambiguation framework for the colorblind. In Proceedings of the 7th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’05), Baltimore, MD, USA, 9–12 October 2005; pp. 158–165.

12. Jefferson, L.; Harvey, R. Accommodating color blind computer users. In Proceedings of the 8th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’06), Portland, OR, USA, 23–25 October 2006; pp. 40–47.

13. Kuhn, G.R.; Oliveira, M.M.; Fernandes, L.A.F. An efficient naturalness-preserving image-recoloring method for dichromats. IEEE Trans. Vis. Comput. Graph. 2008, 14, 1747–1754.

14. Zhu, Z.; Toyoura, M.; Go, K.; Fujishiro, I.; Kashiwagi, K.; Mao, X. Naturalness- and information-preserving image recoloring for red–green dichromats. Signal Process. Image Commun. 2019, 76, 68–80.

15. Kang, S.-K.; Lee, C.; Kim, C.-S. Optimized color contrast enhancement for dichromats using local and global contrast. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 1048–1052.

16. Meng, M.; Tanaka, G. Lightness modification method considering visual characteristics of protanopia and deuteranopia. Opt. Rev. 2020, 27, 548–560.

17. Huang, J.-B.; Tseng, Y.-C.; Wu, S.-I.; Wang, S.-J. Information preserving color transformation for protanopia and deuteranopia. IEEE Signal Process. Lett. 2007, 14, 711–714.

18. Nakauchi, S.; Onouchi, T. Detection and modification of confusing color combinations for red-green dichromats to achieve a color universal design. Color Res. Appl. 2008, 33, 203–211.

19. Rigos, A.; Chatzistamatis, S.; Tsekouras, G.E. A systematic methodology to modify color images for dichromatic human color vision and its application in art paintings. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 5015–5025.

20. Bennett, M.; Quigley, A. A method for the automatic analysis of colour category pixel shifts during dichromatic vision. Lect. Notes Comput. Sci. 2006, 4292, 457–466.

21. Martínez-Domingo, M.Á.; Valero, E.M.; Gómez-Robledo, L.; Huertas, R.; Hernández-Andrés, J. Spectral filter selection for increasing chromatic diversity in CVD subjects. Sensors 2020, 20, 2023.

22. Jeong, J.-Y.; Kim, H.-J.; Wang, T.-S.; Yoon, Y.-J.; Ko, S.-J. An efficient re-coloring method with information preserving for the color-blind. IEEE Trans. Consum. Electron. 2011, 57, 1953–1960.

23. Simon-Liedtke, J.T.; Farup, I. Multiscale daltonization in the gradient domain. J. Percept. Imaging 2018, 1, 10503-1–10503-12.

24. Farup, I. Individualised Halo-Free Gradient-Domain Colour Image Daltonisation. J. Imaging 2020, 6, 116.

25. Huang, J.-B.; Chen, C.-S.; Jen, T.-S.; Wang, S.-J. Image recolorization for the color blind. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2009), Taipei, Taiwan, 19–24 April 2009; pp. 1161–1164.

26. Hassan, M.F.; Paramesran, R. Naturalness preserving image recoloring method for people with red–green deficiency. Signal Process. Image Commun. 2017, 57, 126–133.

27. Wong, A.; Bishop, W. Perceptually-adaptive color enhancement of still images for individuals with dichromacy. In Proceedings of the 2008 Canadian Conference on Electrical and Computer Engineering; Institute of Electrical and Electronics Engineers (IEEE), Vancouver, BC, Canada, 6–7 October 2008; pp. 002027–002032.

28. Ching, S.-L.; Sabudin, M. Website image colour transformation for the colour blind. In Proceedings of the 2nd International Conference on Computer Technology and Development; Institute of Electrical and Electronics Engineers (IEEE), Cairo, Egypt, 2–4 November 2010; pp. 255–259.

29. Lin, H.-Y.; Chen, L.-Q.; Wang, M.-L. Improving Discrimination in Color Vision Deficiency by Image Re-Coloring. Sensors 2019, 19, 2250.

30. Ma, Y.; Gu, X.; Wang, Y. Color discrimination enhancement for dichromats using self-organizing color transformation. Inf. Sci. 2009, 179, 830–843.

31. Li, J.; Feng, X.; Fan, H. Saliency Consistency-Based Image Re-Colorization for Color Blindness. IEEE Access 2020, 8, 88558–88574.

32. Chatzistamatis, S.; Rigos, A.; Tsekouras, G.E. Image Recoloring of Art Paintings for the Color Blind Guided by Semantic Segmentation. In Proceedings of the 21st International Conference on Engineering Applications of Neural Networks (EANN 2020), Halkidiki, Greece, 5–7 June 2020; pp. 261–273.

33. Viénot, F.; Brettel, H.; Ott, L.; Ben M’Barek, A.; Mollon, J.D. What do color-blind people see. Nature 1995, 376, 127–128.

34. Viénot, F.; Brettel, H.; Mollon, J.D. Digital Video Colourmaps for Checking the Legibility of Displays by Dichromats. Color Res. Appl. 1999, 24, 243–252.

35. Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 1981.

36. Price, K.V.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer: Berlin/Heidelberg, Germany, 2005.

37. Ruderman, D.L.; Cronin, T.W.; Chiao, C.-C. Statistics of cone responses to natural images: Implications for visual coding. J. Opt. Soc. Am. A 1998, 15, 2036–2045.

38. Poynton, C.A. Digital Video and HDTV: Algorithms and Interfaces; Morgan Kaufmann: San Francisco, CA, USA, 2003.

39. Reinhard, E.; Ashikhmin, M.; Gooch, B.; Shirley, P. Color transfer between images. IEEE Comput. Graph. Appl. 2001, 21, 34–41.

40. Reinhard, E.; Pouli, T. Colour spaces for colour transfer. Lect. Notes Comput. Sci. 2011, 6626, 1–15.

41. Olmos, A.; Kingdom, F.A.A. A Biologically Inspired Algorithm for the Recovery of Shading and Reflectance Images. Perception 2004, 33, 1463–1473.

42. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

43. Ishihara, S. Tests for Color Blindness. Am. J. Ophthalmol. 1918, 1, 376.

44. Farnsworth, D. The Farnsworth Dichotomous Test for Color Blindness: Panel D-15; Psychological Corporation: New York, NY, USA, 1947.

45. Thurstone, L.L. A law of comparative judgement. Psychol. Rev. 1927, 34, 273–286.

46. Mosteller, F. Remarks on the method of paired comparisons: I. The least squares solution assuming equal standard deviations and equal correlations. Psychometrika 1951, 16, 3–9.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).