Radar Recorded Child Vital Sign Public Dataset and Deep Learning-Based Age Group Classification Framework for Vehicular Application

Abstract

1. Introduction

- Raw FMCW radar signals reflected from each child's chest. These raw signals contain reflections not only from the chest but also from every other object within the radar's operating range. Apart from developing vital sign extraction algorithms, researchers may therefore use the data to develop or compare clutter removal techniques.

- Respiration and heartbeat signals from a clinically approved reference sensor, the BSM6501K (Nihon-Kohden, Tokyo, Japan). These reference signals were synchronized with the raw radar signals in the time domain.

- Details of the age, gender, height, and BMI of the participants.

- As a starting example, MATLAB code is included in the public repository to extract the breathing rate and heart rate from the raw radar signals. It contains all the basic building blocks required for FMCW radar-based vital sign extraction.
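The repository's MATLAB code is not reproduced here. As a rough illustration of the kind of processing chain described above, the Python/NumPy sketch below follows a common FMCW approach: a windowed range FFT per frame, static clutter removal by subtracting each range bin's slow-time mean, selection of the chest bin, phase unwrapping, and a spectral peak search in separate breathing and heartbeat bands. It is not the authors' implementation, and several details are assumptions: one complex (I/Q) chirp per frame, 256 samples per chirp, the band edges, and the hypothetical `simulate_beat_frames` helper, which synthesizes a moving chest plus a static seat reflector using the chirp parameters from the radar configuration table (3.75 GHz swept over 91.72 μs, 3 Msps, 20 frames/s).

```python
import numpy as np

FRAME_RATE_HZ = 20.0          # frames per second, from the radar configuration table
BREATH_BAND_HZ = (0.1, 1.0)   # assumed search band: 6-60 breaths/min
HEART_BAND_HZ = (1.0, 3.0)    # assumed search band: 60-180 beats/min


def range_profiles(beat_frames):
    """Hann-windowed range FFT of each frame: (n_frames, n_samples) -> (n_frames, n_bins)."""
    window = np.hanning(beat_frames.shape[1])
    return np.fft.fft(beat_frames * window, axis=1)


def select_chest_bin(profiles, min_bin=1):
    """Return the range bin with the largest slow-time variation.

    Subtracting each bin's slow-time mean removes static clutter (seat, cabin
    interior), so the moving chest dominates the remaining energy.
    """
    dynamic = profiles - profiles.mean(axis=0, keepdims=True)
    energy = np.sum(np.abs(dynamic) ** 2, axis=0)
    energy[:min_bin] = 0.0                     # ignore the DC bin
    return int(np.argmax(energy))


def peak_rate_per_min(signal, fs, band, nfft=8192):
    """Strongest spectral peak of `signal` inside `band` (Hz), in cycles per minute."""
    x = signal - signal.mean()
    n = max(nfft, x.size)                      # zero-pad for a finer frequency grid
    spectrum = np.abs(np.fft.rfft(x * np.hanning(x.size), n=n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[in_band][np.argmax(spectrum[in_band])]


def estimate_rates(beat_frames, fs=FRAME_RATE_HZ):
    """Breathing and heart rate (per minute) from raw beat frames."""
    profiles = range_profiles(beat_frames)
    chest_bin = select_chest_bin(profiles)
    # The unwrapped phase of the (unsubtracted) chest bin tracks chest displacement.
    phase = np.unwrap(np.angle(profiles[:, chest_bin]))
    return (peak_rate_per_min(phase, fs, BREATH_BAND_HZ),
            peak_rate_per_min(phase, fs, HEART_BAND_HZ))


def simulate_beat_frames(breath_hz=0.4, heart_hz=1.5, seconds=30.0, n_samples=256,
                         chest_m=0.6, seat_m=1.5, seed=0):
    """Hypothetical test signal: a moving chest plus a static seat reflector."""
    c = 3e8
    slope = 3.75e9 / 91.72e-6                  # Hz/s, bandwidth over chirp duration
    wavelength = c / 60.25e9
    t_fast = np.arange(n_samples) / 3e6        # 3 Msps ADC
    t_slow = np.arange(int(seconds * FRAME_RATE_HZ)) / FRAME_RATE_HZ
    # Illustrative chest motion: 4 mm breathing plus 0.2 mm heartbeat.
    disp = (4e-3 * np.sin(2 * np.pi * breath_hz * t_slow)
            + 2e-4 * np.sin(2 * np.pi * heart_hz * t_slow))
    rng = np.random.default_rng(seed)
    frames = np.empty((t_slow.size, n_samples), dtype=complex)
    for i, d in enumerate(disp):
        r = chest_m + d
        chest = np.exp(1j * (2 * np.pi * (2 * slope * r / c) * t_fast
                             + 4 * np.pi * r / wavelength))
        seat = 3.0 * np.exp(1j * 2 * np.pi * (2 * slope * seat_m / c) * t_fast)
        noise = 0.05 * (rng.standard_normal(n_samples) + 1j * rng.standard_normal(n_samples))
        frames[i] = chest + seat + noise
    return frames


breath_bpm, heart_bpm = estimate_rates(simulate_beat_frames())
print(f"breathing {breath_bpm:.1f} /min, heart {heart_bpm:.1f} /min")
```

The mean subtraction is used only to locate the chest; the phase is read from the unsubtracted profile, since removing the chest bin's own static component would distort its phase. On real recordings a breathing harmonic can fall inside the heartbeat band, which this sketch does not attempt to suppress.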

2. Materials and Methods

2.1. Participants

2.2. Data Collection Environment and Process

2.3. Reference Sensor

2.4. Radar Sensor

2.5. Radar Signal Processing for Vital Sign Extraction

2.6. Data Records

3. Experimental/Data Validation and Use-Case Application

3.1. Sensor Synchronization

3.2. Correlation Between the Clinical Sensor and FMCW Radar Datasets

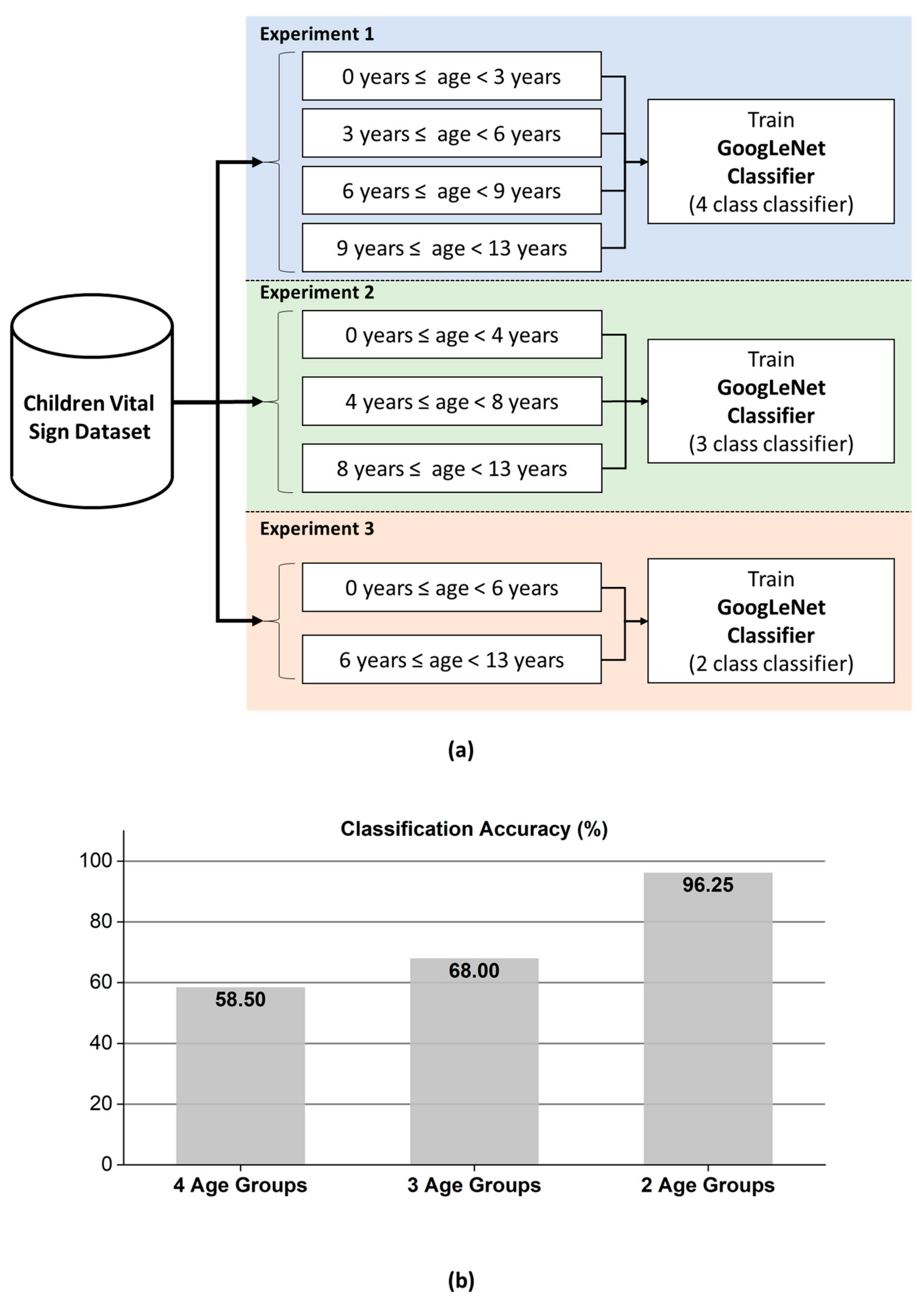

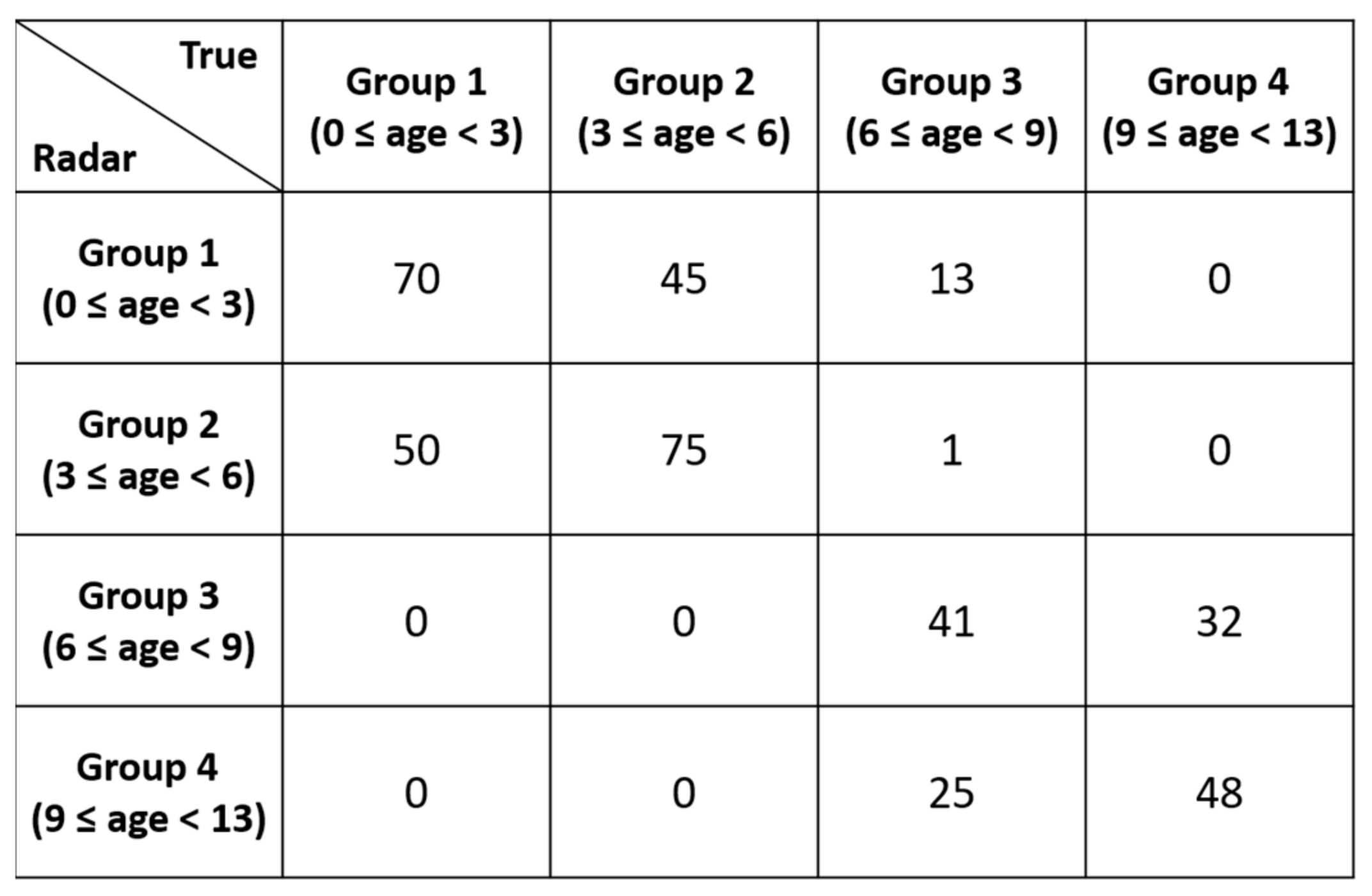

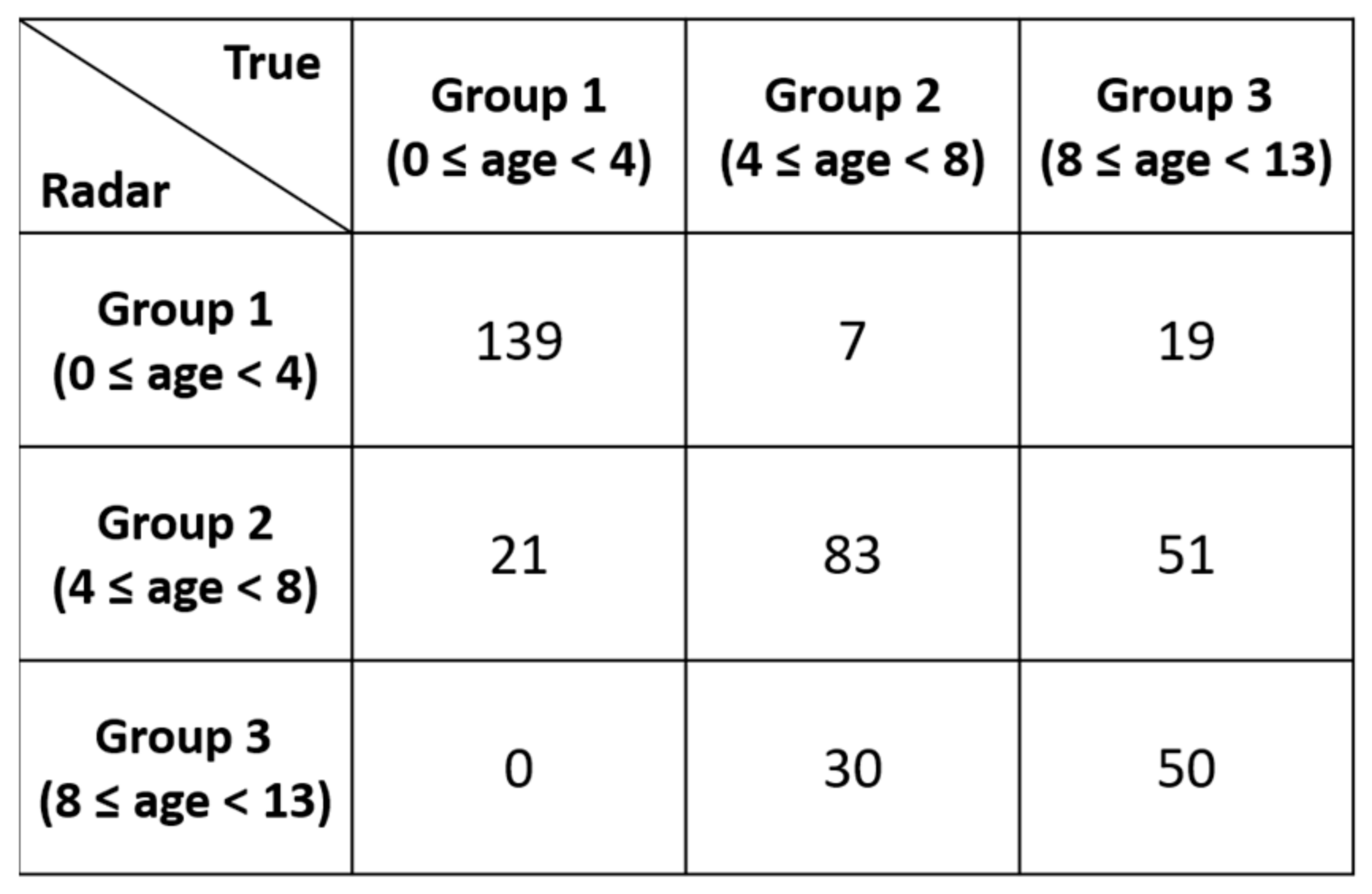

3.3. Demonstration of GoogLeNet-Based Age Group Classifier

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Human Data for 50 Children

| Index | Gender | Age (months) | Height (cm) | Weight (kg) | BMI (kg/m²) | Car Seat | Breathing Rate Range (bpm) | Heart Rate Range (bpm) |
|---|---|---|---|---|---|---|---|---|
| 1 | Female | 38 | 97.5 | 16.3 | 17.15 | Yes | 16–37 | 83–117 |
| 2 | Male | 44 | 101.1 | 16.2 | 15.85 | Yes | 19–44 | 96–125 |
| 3 | Female | 15 | 77.5 | 8.7 | 14.48 | Yes | 26–56 | 117–150 |
| 4 | Male | 54 | 102.0 | 16.6 | 15.96 | Yes | 18–26 | 102–124 |
| 5 | Female | 95 | 125.9 | 28.8 | 18.17 | No | 13–39 | 83–112 |
| 6 | Female | 60 | 102.3 | 14.7 | 14.05 | Yes | 15–29 | 86–119 |
| 7 | Male | 34 | 101.0 | 14.7 | 14.41 | Yes | 19–39 | 89–110 |
| 8 | Female | 25 | 91.3 | 13.2 | 15.84 | Yes | 21–38 | 95–131 |
| 9 | Male | 57 | 107.7 | 19.3 | 16.64 | Yes | 15–27 | 82–107 |
| 10 | Male | 82 | 127.0 | 22.7 | 14.07 | No | 20–31 | 80–100 |
| 11 | Male | 117 | 145.7 | 48.8 | 22.99 | No | 22–34 | 81–105 |
| 12 | Female | 82 | 116.8 | 17.4 | 12.75 | No | 19–28 | 76–92 |
| 13 | Female | 95 | 125.6 | 23.0 | 14.58 | No | 17–24 | 82–99 |
| 14 | Female | 116 | 137.1 | 36.2 | 19.26 | No | 14–23 | 71–83 |
| 15 | Female | 53 | 102.4 | 15.2 | 14.50 | Yes | 19–34 | 90–107 |
| 16 | Female | 95 | 131.9 | 33.1 | 19.03 | No | 9–25 | 76–91 |
| 17 | Female | 75 | 125.2 | 33.1 | 21.12 | No | 15–26 | 77–90 |
| 18 | Female | 22 | 88.2 | 12.7 | 16.33 | Yes | 18–35 | 101–130 |
| 19 | Male | 42 | 104.5 | 15.1 | 13.83 | Yes | 15–39 | 106–128 |
| 20 | Male | 69 | 115.7 | 19.9 | 14.87 | Yes | 17–25 | 91–117 |
| 21 | Male | 112 | 127.0 | 26.8 | 16.62 | No | 17–28 | 94–120 |
| 22 | Male | 82 | 126.4 | 35.0 | 21.91 | No | 21–42 | 103–117 |
| 23 | Female | 84 | 125.0 | 36.6 | 23.42 | No | 13–31 | 74–95 |
| 24 | Male | 75 | 121.0 | 23.0 | 15.71 | No | 15–31 | 72–92 |
| 25 | Male | 21 | 84.2 | 12.0 | 16.93 | Yes | 19–37 | 95–119 |
| 26 | Male | 31 | 89.1 | 11.5 | 14.49 | Yes | 14–35 | 89–110 |
| 27 | Male | 31 | 93.0 | 14.4 | 16.65 | Yes | 21–37 | 96–128 |
| 28 | Female | 32 | 92.4 | 14.5 | 16.98 | Yes | 16–28 | 100–120 |
| 29 | Male | 53 | 104.8 | 16.4 | 14.93 | Yes | 16–39 | 88–108 |
| 30 | Female | 35 | 90.5 | 13.9 | 16.97 | Yes | 19–27 | 87–111 |
| 31 | Female | 63 | 105.4 | 15.1 | 13.59 | Yes | 18–24 | 81–106 |
| 32 | Female | 66 | 116.2 | 18.3 | 13.55 | Yes | 13–26 | 83–109 |
| 33 | Female | 13 | 78.4 | 9.6 | 15.62 | Yes | 24–33 | 106–119 |
| 34 | Male | 9 | 73.1 | 9.6 | 17.97 | Yes | 19–47 | 96–124 |
| 35 | Male | 112 | 147.0 | 47.5 | 21.98 | No | 13–22 | 79–96 |
| 36 | Male | 112 | 148.5 | 31.9 | 14.47 | No | 11–28 | 80–101 |
| 37 | Male | 121 | 144.8 | 46.8 | 22.32 | No | 13–31 | 90–115 |
| 38 | Male | 109 | 132.6 | 29.1 | 16.55 | No | 12–31 | 87–102 |
| 39 | Male | 124 | 153.1 | 44.1 | 18.81 | No | 15–31 | 70–83 |
| 40 | Female | 29 | 90.8 | 13.5 | 16.37 | Yes | 27–36 | 95–116 |
| 41 | Female | 44 | 92.0 | 13.5 | 15.95 | Yes | 19–39 | 102–120 |
| 42 | Female | 47 | 102.3 | 16.5 | 15.77 | Yes | 20–27 | 102–135 |
| 43 | Female | 9 | 69.1 | 8.4 | 17.59 | Yes | 26–40 | 115–133 |
| 44 | Male | 40 | 100.0 | 14.5 | 14.50 | Yes | 13–24 | 93–116 |
| 45 | Female | 7 | 73.2 | 9.6 | 17.92 | Yes | 15–24 | 94–112 |
| 46 | Female | 42 | 95.4 | 13.2 | 14.50 | Yes | 13–26 | 81–111 |
| 47 | Female | 9 | 74.0 | 9.3 | 16.98 | Yes | 17–24 | 103–119 |
| 48 | Male | 25 | 92.1 | 11.2 | 13.20 | Yes | 12–24 | 94–115 |
| 49 | Female | 148 | 160.0 | 54.0 | 21.09 | No | 18–27 | 76–90 |
| 50 | Male | 103 | 144.4 | 30.4 | 14.58 | No | 16–24 | 66–93 |
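The BMI values in the table follow the usual definition, weight in kilograms divided by the square of height in metres, so their unit is kg/m². The short Python sketch below recomputes BMI for a few rows copied from the table and parses the en-dash rate ranges into integer pairs; the row selection and the helper names `bmi` and `parse_range` are illustrative only.

```python
# (index, height_cm, weight_kg, reported BMI, breathing range, heart-rate range), copied from the table
ROWS = [
    (1, 97.5, 16.3, 17.15, "16–37", "83–117"),
    (3, 77.5, 8.7, 14.48, "26–56", "117–150"),
    (11, 145.7, 48.8, 22.99, "22–34", "81–105"),
    (49, 160.0, 54.0, 21.09, "18–27", "76–90"),
]


def bmi(weight_kg: float, height_cm: float) -> float:
    """Body mass index in kg/m^2."""
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2


def parse_range(text: str) -> tuple[int, int]:
    """Split a range such as '16–37' (en dash) into a (low, high) integer pair."""
    low, high = text.replace("–", "-").split("-")
    return int(low), int(high)


for index, height, weight, reported, breath, heart in ROWS:
    print(index, round(bmi(weight, height), 2), reported, parse_range(breath), parse_range(heart))
```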

References

- Li, C.; Lubecke, V.M.; Boric-Lubecke, O.; Lin, J. A review on recent advances in Doppler radar sensors for noncontact healthcare monitoring. IEEE Trans. Microw. Theory Tech. 2013, 61, 2046–2060.

- Van, N.T.P.; Tang, L.; Demir, V.; Hasan, S.F.; Minh, N.D.; Mukhopadhyay, S. Review-microwave radar sensing systems for search and rescue purposes. Sensors 2019, 19, 2879.

- Lim, S.; Lee, S.; Jung, J.; Kim, S.-C. Detection and localization of people inside vehicle using impulse radio ultra-wideband radar sensor. IEEE Sens. J. 2020, 20, 3892–3901.

- Huang, M.-C.; Liu, J.J.; Xu, W.; Gu, C.; Li, C.; Sarrafzadeh, M. A self-calibrating radar sensor system for measuring vital signs. IEEE Trans. Biomed. Circuits Syst. 2015, 10, 352–363.

- Cardillo, E.; Caddemi, A. A review on biomedical MIMO radars for vital sign detection and human localization. Electronics 2020, 9, 1497.

- Lee, Y.; Park, J.-Y.; Choi, Y.-W.; Park, H.-K.; Cho, S.-H.; Cho, S.H.; Lim, Y.-H. A novel non-contact heart rate monitor using impulse-radio ultra-wideband (IR-UWB) radar technology. Sci. Rep. 2018, 8, 1–10.

- Park, J.-Y.; Lee, Y.; Choi, Y.-W.; Heo, R.; Park, H.-K.; Cho, S.-H.; Cho, S.H.; Lim, Y.-H. Preclinical evaluation of a noncontact simultaneous monitoring method for respiration and carotid pulsation using impulse-radio ultra-wideband radar. Sci. Rep. 2019, 9, 1–12.

- Alizadeh, M.; Shaker, G.; De Almeida, J.C.M.; Morita, P.P.; Safavi-Naeini, S. Remote monitoring of human vital signs using mm-wave FMCW radar. IEEE Access 2019, 7, 54958–54968.

- Da Cruz, S.D.; Beise, H.-P.; Schroder, U.; Karahasanovic, U. A theoretical investigation of the detection of vital signs in presence of car vibrations and radar-based passenger classification. IEEE Trans. Veh. Technol. 2019, 68, 3374–3385.

- Da Cruz, S.D.; Beise, H.-P.; Schröder, U.; Karahasanovic, U. Detection of vital signs in presence of car vibrations and RADAR-based passenger classification. In Proceedings of the 2018 19th International Radar Symposium (IRS), Bonn, Germany, 20–26 June 2018.

- Hwang, B.; You, J.; Vaessen, T.; Myin-Germeys, I.; Park, C.; Zhang, B.-T. Deep ECGNet: An optimal deep learning framework for monitoring mental stress using ultra short-term ECG signals. Telemed. e-Health 2018, 24, 753–772.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Riquelme, F.; Espinoza, C.; Rodenas, T.; Minonzio, J.-G.; Taramasco, C. eHomeSeniors Dataset: An infrared thermal sensor dataset for automatic fall detection research. Sensors 2019, 19, 4565.

- Geissinger, J.H.; Asbeck, A.T. Motion inference using sparse inertial sensors, self-supervised learning, and a new dataset of unscripted human motion. Sensors 2020, 20, 6330.

- Bhat, G.; Tran, N.; Shill, H.; Ogras, U.Y. w-HAR: An activity recognition dataset and framework using low-power wearable devices. Sensors 2020, 20, 5356.

- Sucerquia, A.; López, J.D.; Vargas-Bonilla, J.F. SisFall: A fall and movement dataset. Sensors 2017, 17, 198.

- Luna-Perejón, F.; Muñoz-Saavedra, L.; Civit-Masot, J.; Civit, A.; Domínguez-Morales, M. AnkFall—Falls, falling risks and daily-life activities dataset with an ankle-placed accelerometer and training using recurrent neural networks. Sensors 2021, 21, 1889.

- Schellenberger, S.; Shi, K.; Steigleder, T.; Malessa, A.; Michler, F.; Hameyer, L.; Neumann, N.; Lurz, F.; Weigel, R.; Ostgathe, C.; et al. A dataset of clinically recorded radar vital signs with synchronised reference sensor signals. Sci. Data 2020, 7, 1–11.

- Shi, K.; Schellenberger, S.; Will, C.; Steigleder, T.; Michler, F.; Fuchs, J.; Weigel, R.; Ostgathe, C.; Koelpin, A. A dataset of radar-recorded heart sounds and vital signs including synchronised reference sensor signals. Sci. Data 2020, 7, 1–12.

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. A SAR dataset of ship detection for deep learning under complex backgrounds. Remote Sens. 2019, 11, 765.

- Barnes, D.; Gadd, M.; Murcutt, P.; Newman, P.; Posner, I. The Oxford radar robotcar dataset: A radar extension to the Oxford robotcar dataset. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA); Institute of Electrical and Electronics Engineers (IEEE), Paris, France, 31 May–31 August 2020; pp. 6433–6438.

- Ahmed, S.; Kallu, K.D.; Ahmed, S.; Cho, S.H. Hand gestures recognition using radar sensors for human-computer-interaction: A review. Remote Sens. 2021, 13, 527.

- Yoo, S.; Ahmed, S.; Kang, S.; Hwang, D.; Lee, J.; Son, J.; Cho, S.H. Radar-Recorded Child Vital Sign Dataset and Deeplearning-Based Age Group Classification Framework for Vehicular Applications. 2021. Available online: https://figshare.com/s/936cf9f0dd25296495d3 (accessed on 29 March 2021).

- Wang, Y.; Wang, W.; Zhou, M.; Ren, A.; Tian, Z. Remote monitoring of human vital signs based on 77-GHz mm-wave FMCW radar. Sensors 2020, 20, 2999.

- Gambi, E.; Ciattaglia, G.; De Santis, A.; Senigagliesi, L. Millimeter wave radar data of people walking. Data Brief 2020, 31, 105996.

- Wang, Y.; Wang, S.; Zhou, M.; Jiang, Q.; Tian, Z. TS-I3D based hand gesture recognition method with radar sensor. IEEE Access 2019, 7, 22902–22913.

- Yanowitz, F.G. Introduction to ECG Interpretation; LDS Hospital and Intermountain Medical Center: Salt Lake City, UT, USA, 2012.

- Clifford, G.D.; Azuaje, F.; McSharry, P. Advanced Methods and Tools for ECG Data Analysis; Artech House: Boston, MA, USA, 2006.

- Brooker, G.M. Understanding millimetre wave FMCW radars. In Proceedings of the 1st International Conference on Sensing Technology, Karachi, Pakistan, 24–26 June 2005.

- Cicchetti, D.V. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol. Assess. 1994, 6, 284.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2015.

| Parameter | Value |
|---|---|
| Number of Transmit Antennas | 3 |
| Number of Receive Antennas | 4 |
| Starting Frequency | 60 GHz |
| Bandwidth | 4 GHz |
| Tx Power | 12 dBm |
| Rx Noise | 12 dB |

| Parameter | Value |
|---|---|
| Number of Transmit Antennas | 1 |
| Number of Receive Antennas | 4 |
| Starting Frequency | 60.25 GHz |
| Bandwidth | 3.75 GHz |
| ADC Sampling Rate | 3 Msps |
| Chirp Duration | 91.72 μs |
| Number of Chirps per Frame | 2 |
| Frames per Second | 20 |
| Range Resolution | 4 cm |
| Maximum Range | 11 m |
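The derived entries of the configuration table can be checked with the standard FMCW relations: range resolution ΔR = c/(2B), and, with chirp slope S = B/T_c, maximum range R_max = F_s·c/(2S) for complex (I/Q) IF sampling. The Python sketch below evaluates both; it assumes that the 3.75 GHz sweep spans the full 91.72 μs chirp and that complex sampling is used, neither of which is stated explicitly here. Under those assumptions the configured values give 4 cm and about 11 m, matching the table, while the 4 GHz device bandwidth would allow 3.75 cm. With real-valued IF sampling the same parameters would give about 5.5 m instead.

```python
C = 3e8  # speed of light (m/s), rounded

# Configured values from the table; assumes the sweep spans the full chirp duration.
BANDWIDTH_HZ = 3.75e9
CHIRP_S = 91.72e-6
ADC_RATE_HZ = 3e6


def range_resolution(bandwidth_hz):
    """Delta R = c / (2B)."""
    return C / (2 * bandwidth_hz)


def max_range_complex_if(adc_rate_hz, bandwidth_hz, chirp_s):
    """R_max = F_s * c / (2S) with S = B / T_c, assuming complex (I/Q) IF sampling."""
    slope = bandwidth_hz / chirp_s
    return adc_rate_hz * C / (2 * slope)


r_max = max_range_complex_if(ADC_RATE_HZ, BANDWIDTH_HZ, CHIRP_S)
print(f"configured resolution: {100 * range_resolution(BANDWIDTH_HZ):.2f} cm")
print(f"device limit (4 GHz):  {100 * range_resolution(4e9):.2f} cm")
print(f"max range, I/Q IF:     {r_max:.2f} m")
print(f"max range, real IF:    {r_max / 2:.2f} m")
```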

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yoo, S.; Ahmed, S.; Kang, S.; Hwang, D.; Lee, J.; Son, J.; Cho, S.H. Radar Recorded Child Vital Sign Public Dataset and Deep Learning-Based Age Group Classification Framework for Vehicular Application. Sensors 2021, 21, 2412. https://doi.org/10.3390/s21072412

Yoo S, Ahmed S, Kang S, Hwang D, Lee J, Son J, Cho SH. Radar Recorded Child Vital Sign Public Dataset and Deep Learning-Based Age Group Classification Framework for Vehicular Application. Sensors. 2021; 21(7):2412. https://doi.org/10.3390/s21072412

Chicago/Turabian Style: Yoo, Sungwon, Shahzad Ahmed, Sun Kang, Duhyun Hwang, Jungjun Lee, Jungduck Son, and Sung Ho Cho. 2021. "Radar Recorded Child Vital Sign Public Dataset and Deep Learning-Based Age Group Classification Framework for Vehicular Application" Sensors 21, no. 7: 2412. https://doi.org/10.3390/s21072412

APA Style: Yoo, S., Ahmed, S., Kang, S., Hwang, D., Lee, J., Son, J., & Cho, S. H. (2021). Radar Recorded Child Vital Sign Public Dataset and Deep Learning-Based Age Group Classification Framework for Vehicular Application. Sensors, 21(7), 2412. https://doi.org/10.3390/s21072412