Abstract

Reliable object tracking that is based on video data constitutes an important challenge in diverse areas, including, among others, assisted surgery. Particle filtering offers a state-of-the-art technology for this challenge. Becaise a particle filter is based on a probabilistic model, it provides explicit likelihood values; in theory, the question of whether an object is reliably tracked can be addressed based on these values, provided that the estimates are correct. In this contribution, we investigate the question of whether these likelihood values are suitable for deciding whether the tracked object has been lost. An immediate strategy uses a simple threshold value to reject settings with a likelihood that is too small. We show in an application from the medical domain—object tracking in assisted surgery in the domain of Robotic Osteotomies—that this simple threshold strategy does not provide a reliable reject option for object tracking, in particular if different settings are considered. However, it is possible to develop reliable and flexible machine learning models that predict a reject based on diverse quantities that are computed by the particle filter. Modeling the task in the form of a regression enables a flexible handling of different demands on the tracking accuracy; modeling the challenge as an ensemble of classification tasks yet surpasses the results, while offering the same flexibility.

1. Introduction

Novel technological developments have led to great advances in computer assisted surgery, whereby image-guided support of surgeons plays a particularly important role besides direct robotic assistance [1]. Some advances can be observed in recent years, including, among other approaches, dedicated open source platforms to advance and evaluate technical progress [2]. Assistive systems often rely on visual information, such as videos or depth images, and imaging sensors play a crucial role for a wide array of medical domains. One such domain with a potentially high benefit constitutes the field of osteotomy, where the exact position and pose of the bone has to be known precisely. In this context, current practice is to use markers, such as 3D-printed templates, to assist the surgeon [3]. However, the design and production of these templates constitutes a significant overhead that could be avoided by markerless tracking systems. Such intelligent tracking methods enable the reliable identification or segmentation of the images into semantically meaningful parts, e.g., relevant organs, bone structure, or malignant parts, and tracking these over time.

Several approaches address optical tracking in the medical domain, such as models that are based on optical flow [4], or probabilistic frameworks [5]. In the last decade, particle filtering became one of the most prominent methods for reliable positioning and tracking in the medical domain and beyond [6,7]. Particle filtering basically constitutes a technique for an efficient Monte Carlo simulation to solve filtering problems that arise when internal states of dynamic systems, such as the location of a specific constituent in an image, need to be estimated based on partial observations that are subject to sensor noise. Thereby, a crucial part of the model is the design of a suitable probability model that captures the true underlying probability but is sufficiently easy to compute. There exists extensive work regarding appropriate probabilistic models in continuous and discrete settings [8,9] as well as extensive research on the accuracy of the result and how to arrive at unbiased estimators [10,11,12,13]. Usually, real-time performance is required, such that computationally complex models are prohibited and considerable work addresses computation schemes on the edge [14].

Popular models can typically incorporate some form of generic measurement noise, yet major disturbances, such as occlusions, pose a challenge. Hence, it is a matter of ongoing research as to how to design robust tracking schemes that enable a re-detection of the object after it has been lost and realize a larger robustness and invariance to noise, as an example, by means of multiple correlation filters or robust representations, such as hidden layers of deep networks [15,16,17]. However, in realistic scenarios, it is unavoidable that tracked objects are lost in some settings, as an example, in the case of a total occlusion of the tracked object. In critical settings, such as assisted surgery, it is desirable to equip tracking algorithms with the notion of a reject option—meaning that tracking algorithms are capable of detecting on their own if the tracking is no longer reliable and a major discrepancy of the tracked object and the result of the tracking algorithm is to be expected. In such cases, an autonomous or assisted re-initialization of the tracking algorithm could be done and major harm due to a lost tracking be prevented. Work in this direction exists that focuses on rejecting outlier measurements [18], which uses non-parametric kernel density estimation. The latter is well known to be impractical already for a medium number of dimensions [19]. In contrast, the sum of likelihoods of updated particles is used for a decision on rejecting in [20]. The authors of [21] mitigate the effect of corrupted observations by updating only a subset of the particles, where an observation is considered to be corrupted if the largest particle likelihood is below a pre-defined threshold. We consider this detection mechanism, which is also investigated by Chow [22] in a more general setting, as a baseline in our evaluation. In this contribution, we investigate the challenge of how to equip a recent state-of-the-art particle filtering technology for object tracking, which is based on numerically stable representations of particle weights in the logarithmic domain [23], by efficient possibilities to equip the tracking result with a notion of reliability in the form of a reject option, which filters too large deviations of the tracked quantities from their true values. We investigate this, in particular, with respect to changing configuration settings of the particle filter.

The question of how to enhance models with a notion of their reliability and an according reject constitutes an open research challenge for more general models than particle filters, as considered in our approach. For example, in classification tasks, the notion of selective classification or classification with a reject option has already been introduced quite early on [22]. It has been shown that a simple threshold strategy yields an optimum reject in the sense of cost optimization, provided that the true underlying probability is known. The latter is usually not the case, and the models inferred from data provide approximations of the true values only, provided probabilistic, rather than frequentist, modeling takes place. As a consequence, it has been investigated, in how far deterministic models can be extended to incorporate a reject option by suitable loss modeling [24], how confidence values can be approximated by suitable alternatives that are provided by the given model [25], and how reject options can be learned in parallel to the task at hand [26]. Note that statistical frameworks exist—such as conformal prediction [27]—which compute confidence values from a given model and data in such ways that strong mathematical guarantees hold. Yet, these approaches are usually time consuming and they cannot be used in an online tracking task where real-time conditions hold.

In this contribution, we address the question of how to equip a state-of-the art particle filter for tracking by efficient schemes that implement a reliable reject option to detect cases where the tracking is invalid. Because particle filters are based on a probabilistic modeling and, hence, provide likelihood values, a natural baseline builds a reject option on a global threshold strategy: settings are rejected if the likelihood is too small, following the strategy, as investigated by Chow [22]. It turns out that the results of this threshold strategy are not satisfactory for a benchmark problem from the domain of assisted surgery. We propose modelling the problem as a machine learning task, and we propose two possible ways to formalize the problem: as a regression task that predicts the amount of displacement, or as an ensemble of classification tasks that detect settings for which the misplacement is larger than a given threshold. We will formalize these two approaches and evaluate those in a realistic benchmark and different settings of the particle filter.

2. Materials and Methods

2.1. Experimental Prerequisites

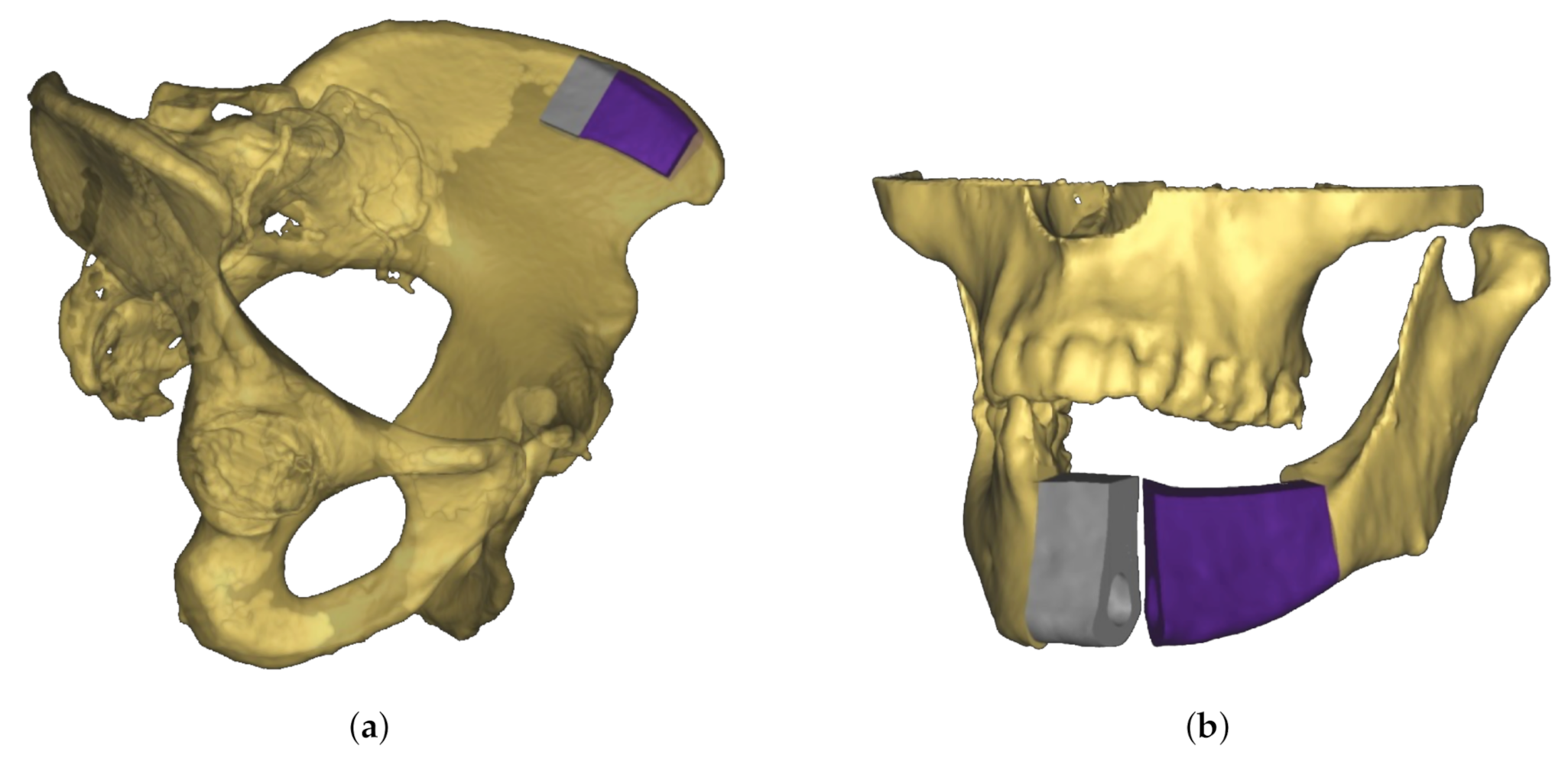

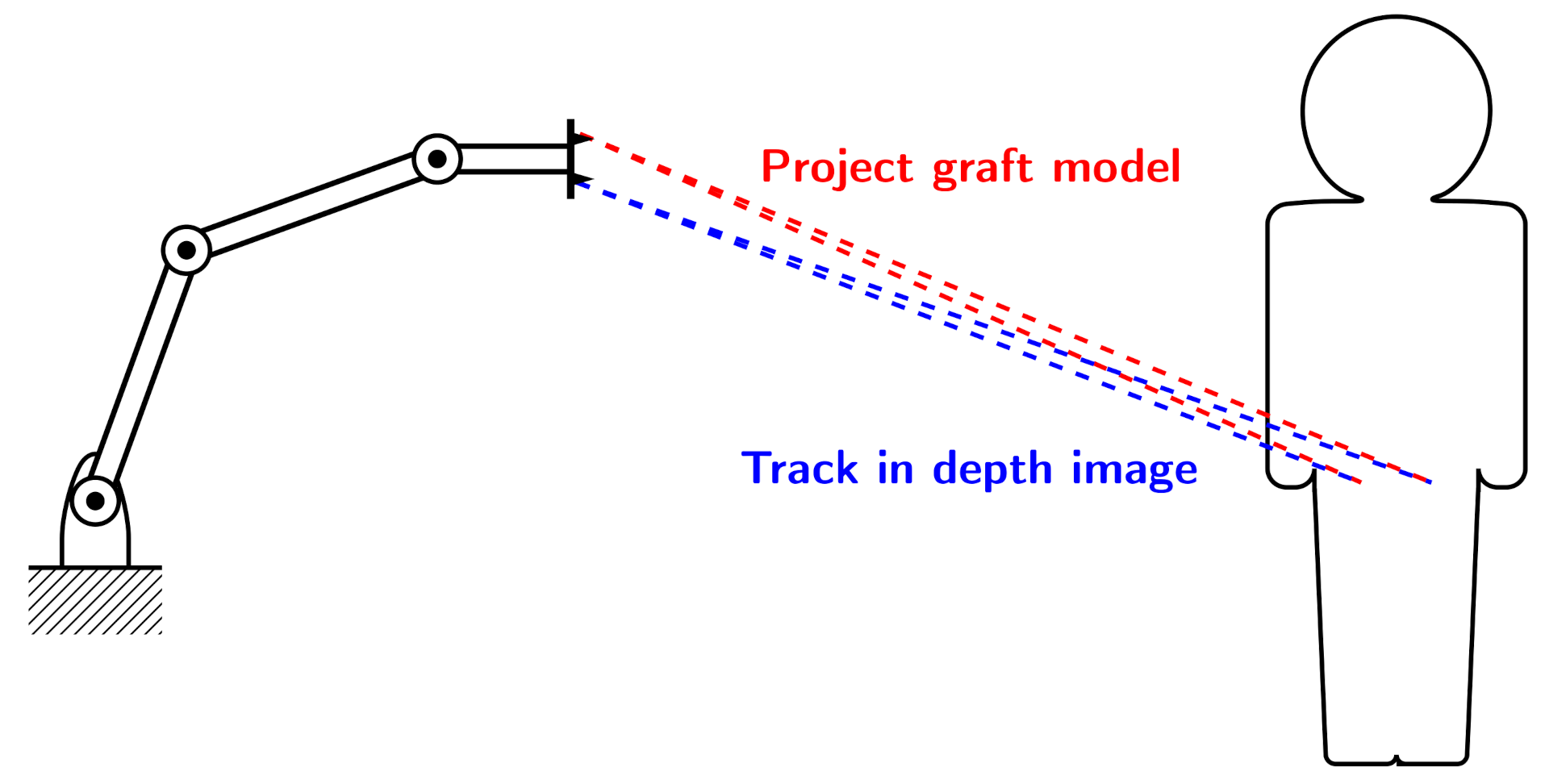

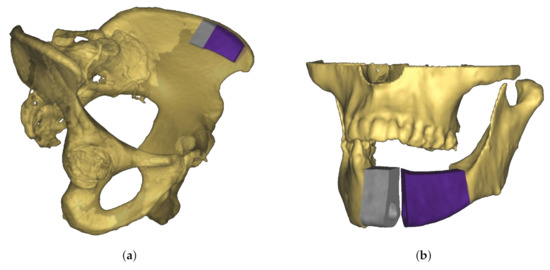

A novel approach to jaw reconstruction surgery utilizes bone cut from the patient’s pelvis (Figure 1a) to create a graft for the jaw (Figure 1b) with low risk of getting rejected by the body [28]. For the cutting procedure a custom 3D model is created as a template for the surgeon. So far, this model was printed and then placed on the bone, which is expensive and time consuming. In a new approach [29], a robotic arm is equipped with a depth camera to track a representation of the template in the depth image while using a particle filter [23], and a projector to display cutting outlines onto the bone (Figure 2) during surgery. However, this new approach leads to the risk that the projection is off when the tracking is inaccurate.

Figure 1.

Schematic view of material for jaw reconstruction surgery. (a) Three-dimensional (3D) model of graft fitted to patient’s pelvic bone. To reconstruct the geometry of the jaw, two separate transplants are necessary (colored in grey and purple). (b) Use of bone graft to reconstruct jaw. Because of the separation into two transplants the bend of the jaw bone can be rebuilt.

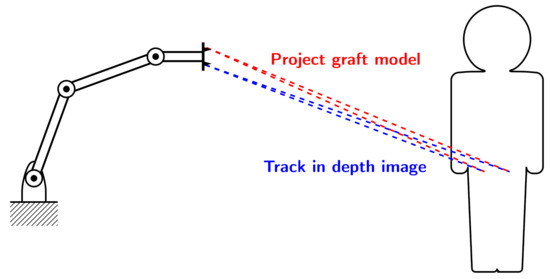

Figure 2.

Experimental Setup: 3D camera tracks model of bone graft in depth image of patient’s pelvis (blue). Projector displays cutting lines at tracked position for the surgeon (red).

The projection cannot be static, since displacements of the body are unavoidable during surgery. Hence, a tracking of the shape and an according adjustment of the projection is necessary. There exist several reasons why tracking can be wrong—as an example, a major challenge consists in the fact that the tracking target might be partially or fully occluded by the surrounding tissue or a person.

Our goal is to find a method that identifies when the track is inaccurate, so the surgeon can be notified to stop cutting, and a manual reset may be conducted, if needed. Thereby, we want to find a technology that works for different parameterizations of the particle filter that we will introduce in Section 2.2, such that an easy transfer in between different settings becomes possible. Because it might be specific to the situation, which deviation of the tracking from the ground truth is crucial, we also consider different degrees of inaccuracy that will be explained in Section 2.4. This way, our method can also be applied to other setups that utilize particle filter tracking.

For this purpose, we utilize the data and particle filter implementation from the study [29]. In the following, we first summarize the according particle filter methodology before we then provide more details on the used data sequences.

2.2. Particle Filter Tracking

Assume a depth image at time t is denoted

where m and n are the width and height of the depth image and the distance to the camera of the pixel at position . The basic objective of the particle filter tracking is to find the pose

consisting of position as 3D coordinates in the room, and orientation as a quaternion, for the 3D model of the graft that has the biggest pixel overlap to the depth image at time t. is the current translational velocity of the particle and the current rotational velocity. The tracking algorithm that has been implemented and evaluated in the approach [29] consists of the following steps: it is manually initialized at approximately the correct position. Subsequently, within an iterative loop, we predict K possible new poses (particles) by sampling according to a noise distribution

with the parameters for the translational noise , rotational noise , and a velocity factor . refers to the normal distribution. The new velocities are obtained by adding gaussian noise to the old ones. Additionally, the velocity is assumed to be decaying due to a loss of energy that is modeled by the multiplication of the velocity factor. Using the new velocities, the new position and orientation are calculated by applying the backward Euler integration method. Note that the new orientation is the Nlerp between our old orientation and the new orientational velocity. For each new particle, we generate an expected depth image that is based on simple geometric principles, and we calculate the weight

where is the log likelihood estimating how likely the observed pixel fits the expected pixel and it is calculated as

where are configurable as uniform, Gaussian, and exponential weight, and the camera intrinsic values as maximum and minimum depth value, as depth factor, and as the camera’s base noise. In the first case, the expected depth at this pixel is invalid, i.e., it belongs to the background, therefore any observation fits. In the second case, occlusion is a possible reason why the observation is closer than the expectation. This possibility is modeled with an additional exponential probability term as compared to the third case. In the case of an invalid observation, the uniform weight is taken as a random measurement (case 4). The tracking pose is then determined by the particle with the maximum log likelihood

See the reference [23] for details.

2.3. Tracking Scenario

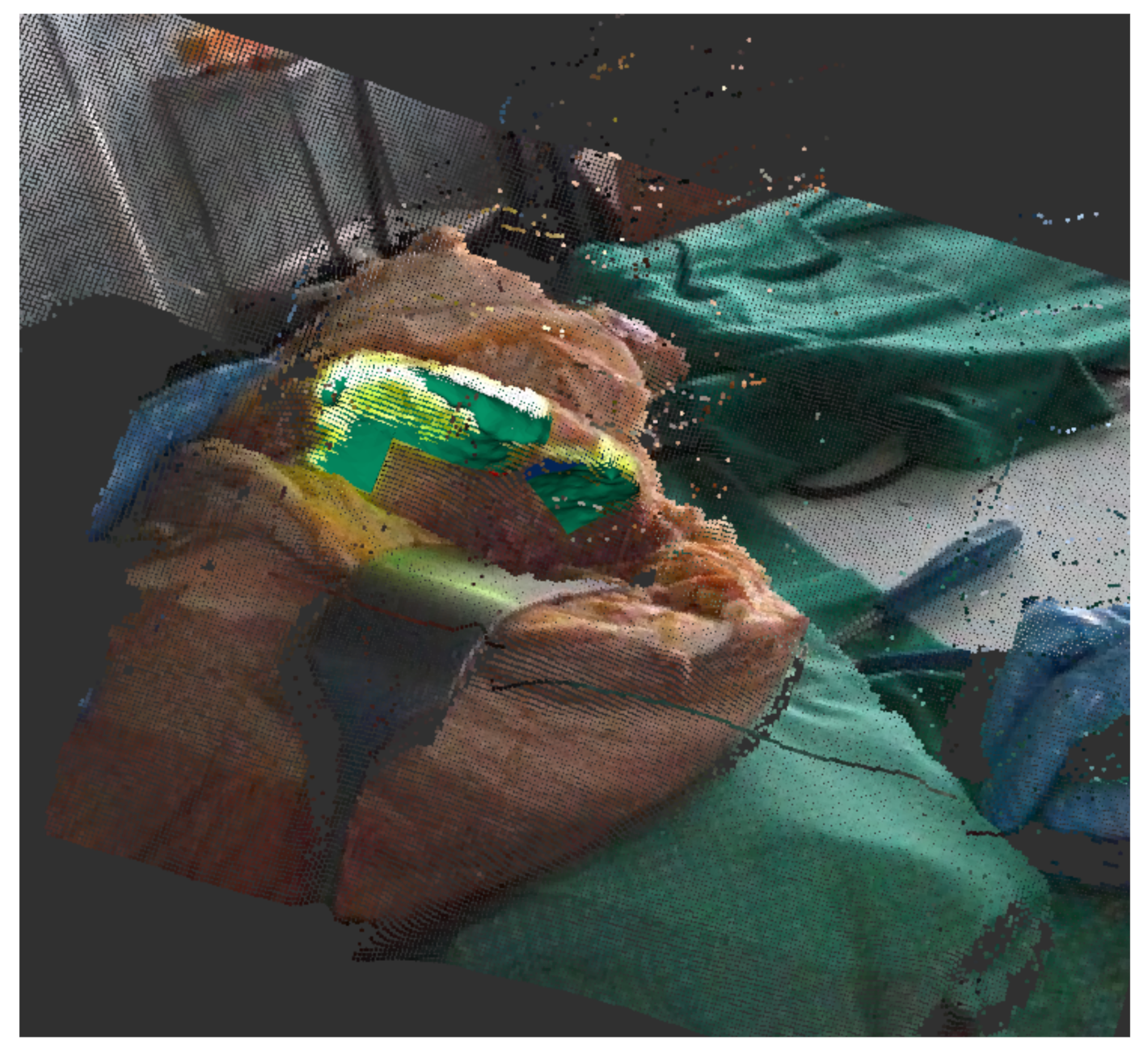

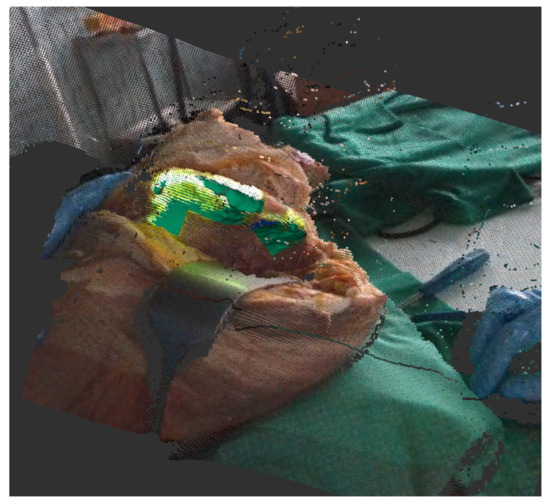

As a concrete tracking scenario that is representative for assisted surgery, we use a rosbag [30] that was recorded during a test surgery on a cadaver at University Hospital RWTH Aachen. This bag contains the depth image data during the whole surgery as a sensor_msgs/PointCloud2 [31] and a tracking output as geometry_msgs/Pose [32] (Figure 3). Because this output was under human supervision and manually reset and corrected, we can utilize it as our ground truth . This allows for us to rerun the tracking while replaying the bag to record different outputs of the tracking algorithm in different configurations.

Figure 3.

Recorded rosbag of test surgery on corpse shows depth image with a registered color image. The projection on the bone can be seen in light green, the current tracking output in green.

For each run, we save the parameter configuration which contains: translational noise (), rotational noise (), velocity factor (), exponential weight (), gaussian weight (), uniform weight (), and particle count (K). Table 1 shows the chosen value ranges.

Table 1.

Parameter configurations. would mean the values are chosen between a and b in steps of c.

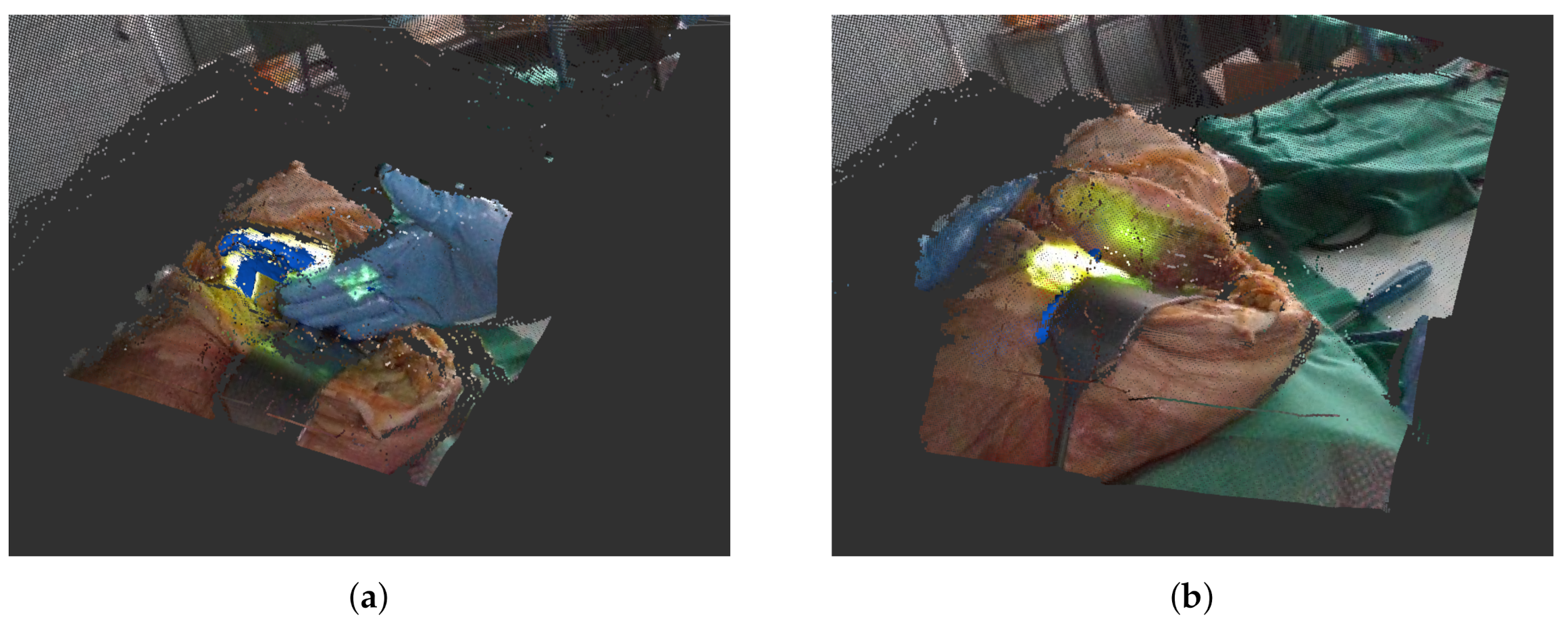

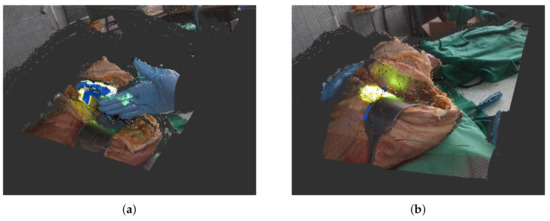

Additionally, we record the particle filter output that contains the maximum log likelihood the effective sample size where normalized weights are used for computation and the mean and variance of all weights. Lastly, we save the labels which contain the distance of our current track to the ground truth and the angular difference between two quaternions . The two most common problems during tracking are occlusions in the depth image (Figure 4a) by the surgeon and sudden displacements when the body’s position is readjusted for easier cutting (Figure 4b). For our data set, we chose two sequences where either an occlusion or displacement takes place that present considerable problems for the tracking algorithm. Both of the sequences are around 450 frames long. We reran the tracking with the different parameter configurations on both sequences by replaying the aforementioned rosbag. An overview of the recorded data set can be seen in Table 2.

Figure 4.

Example settings in which tracking by particle filters faces difficulties. (a) Partial occlusion of area to track. Here, a newly recorded track (in blue) is still stable. (b) Newly recorded track (in blue) is lost after body was relocated.

Table 2.

Overview of the recorded data set.

2.4. Formalization of an Inaccurate Track

We need to define when the tracking is too inaccurate and we consider the track lost in order to train our machine learning models. This is a priori unclear, since suitable choices depend on the current task. The track can be off in its position, which can be measured as the distance to the ground truth, and in its orientation measured as the angle difference to the ground truth. We choose 20 equidistant threshold pairs in order to evaluate different constellations for possible inaccurate tracking, thus enabling a larger flexibility for practical applications

where represents the tolerance value for the distance (in m) to the ground truth and the tolerance value for the according angular difference (in ). The pairs are ordered from very strict () to very tolerant (). Tracks that are off by more than in distance or in angle are considered to be lost in regards to the chosen threshold pair, i.e., one such pair defines the ground truth for the subsequent prediction tasks.

2.5. Reject Strategies Based on Machine Learning Methods

We investigate three different approaches for determining when the current track is lost.

Threshold strategy: because particle filters are based on probabilistic models, we can implement a simple thresholding based approach, as proposed by Chow [22] as a baseline. Intuitively the maximum log likelihood gives confidence for the current track. Accordingly, we utilize a threshold to predict a lost track for a chosen pair of parameters characterizing the desired accuracy:

could be chosen according to a desired likelihood, yet this requires a suitable scaling of the values and its relation to the geometric accuracy. In our approach, we optimize the threshold value that is based on the training data by evaluating a set of candidate values in the range .

Ensemble of binary classification tasks: we consider two approaches for framing the current problem of deciding whether the current track is lost (as defined by the selected pair ) as a machine learning task. Firstly, we model our problem as a binary classification task for each :

Here, the input to the machine learning model x is chosen as a vector of representative quantities of the particle filter. It is the four-dimensional vector of the particle filter outputs and the seven parameters , whereby the values are averaged over a 20 frame window, and

This way, a separate classifier is used for every desired accuracy regarding distance and angle difference. We train our classification models on the same distance and angle difference combinations as in our baseline evaluation and with a 10 frame overlap between windows. We use Support Vector Machine (SVM) [33] with a linear kernel and Random Forests [34] as the classification models. We also apply SMOTE sampling [35] to combat any imbalance in our data set. See [36] for the implementation that we used.

Note that SVM and Random Forests both provide a certainty value of the class by means of the distance to the decision boundary or the percent of trees that predict a certain class, respectively. By shifting the threshold from 0 to a different value, we can extend a model that is optimized for a specific threshold pair to neighboring ones. This gives rise to a sparse ensemble of models: instead of using the models for all pairs , we can rely on a small number of classifiers, which extend a model to a neighborhood of different thresholds by moving the classifier’s threshold . The range contains every for which a decision threshold exists, so that classifier still yields target values for precision and recall. We then find the minimum number of classifiers, so that their ranges contain every at least once. If a threshold is contained in multiple ranges, then the classifier that yields the highest combined precision and recall is chosen. This ensemble of classifiers can then be evaluated on the test set for each threshold combination.

Regression task: ss a second modeling approach, we train a regression model:

where x is the same as above and is a prediction of the distance and angle difference. In this case, only one model is trained for every choice of threshold pairs . Given a specific threshold pair , a decision is done that is based on the question whether and for being the predictions of the regression model on x. In the following, we evaluate such regression against the same set of distance and angle difference combinations. As candidate models for this regression task, we consider a Linear Regression [37], Support Vector Regression with Gaussian Kernel [38], and Random Forest Regression [39]. All of the models are implemented using the scikit learn library [40].

3. Results

All methods are evaluated in a 10-fold cross-validation scheme, adjusted for time series data in order to ensure time consistency: In each split, consecutive data samples amounting to of a time sequence are used for testing while all the preceding samples are used for training. In the next split, the old test samples are added to the training set and the successive of the time series are employed for the next test set, such that every data sample is used for testing at maximum once.

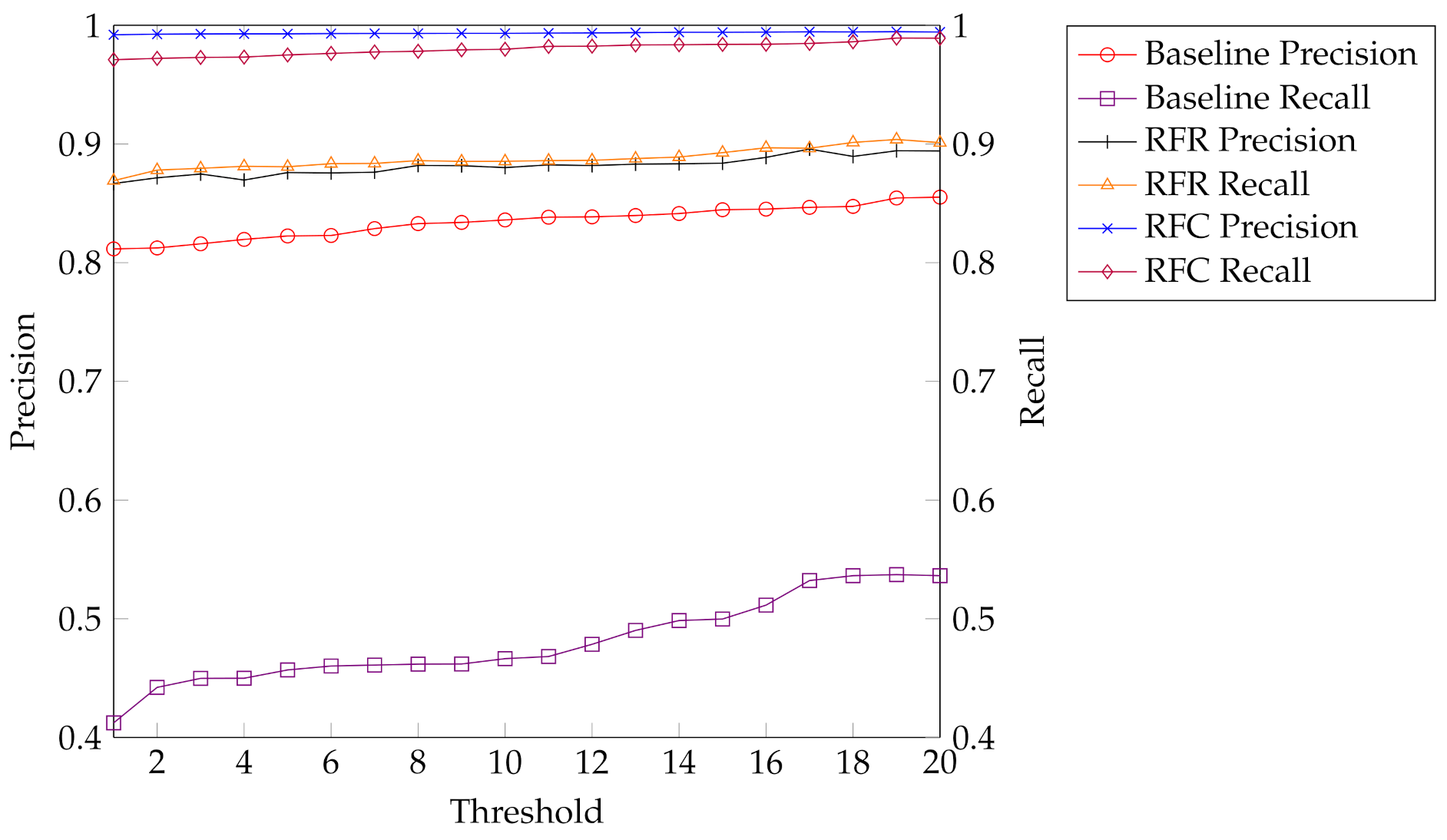

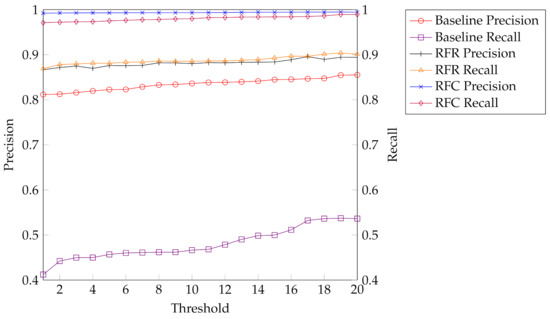

The direct threshold strategy yields a precision larger than 80% but a recall of close to 50% only (see Figure 5). Hence the baseline evaluation shows that the maximum log likelihood is not scaled in such a ways that it allows a robust reject option based on a threshold.

Figure 5.

Overview of our training results shows precision and recall for our baseline evaluation, the best performing regression model, Random Forest regression (RFR), and the best performing classification model, Random Forest classification (RFC).

As a comparison, the results obtained by modeling the task as a regression are significantly better. Here, Random Forest regression performs best. It yields both precision and recall close to 90%, which is an acceptable result and much higher than our baseline.

Provided that a separate classification model is designed for every pair of threshold values , we can further improve, i.e., using a Random Forest classification, we obtain a precision that is close to 1 and recall of around 98% for almost all values. An overview of all trained models can be seen in Table 3 and a comparison between the best performing regression and classification model against the baseline is shown in Figure 5.

Table 3.

This table shows the summarized results for the different instances of machine learning models.

The current classification models, as shown in Table 3, consist of an ensemble of different classifiers according to every pair of threshold values. Because this can become quite exhaustive, the question occurs as to whether an acceptable accuracy can be achieved by using fewer classification models of the same type with different threshold values of the classifier per instance . Therefore, we investigate how to minimize the number of different classifiers required in the ensemble given a desired quality of precision and recall, as described in Section 2.5. Table 4 and Table 5 show the results of this method. Interestingly, already a single Random Forest classifier yields precision and recall 0.9, and five models already cover the full space with precision and recalls of 0.98, yielding flexible as well as high performant models for a reject option for particle tracking. For SVM classification, more models are needed for the ensemble. The individual decision boundaries need to be modified, such that target recall values are met, thus resulting in actually lower precision values for a higher number of models.

Table 4.

This table shows the minimum amount of Random Forest classification models needed to still achieve given goals for precision and recall on the training set and their resulting performance on the test set.

Table 5.

This table shows the minimum amount of support vector classification models that are needed to still achieve given goals for precision and recall on the training set and their resulting performance on the test set.

4. Discussion

We have investigated efficient possibilities to enhance particle filtering models for tracking by a reject option, which enables practitioners to react to an alert as soon as the tracking precision is lower than a predefined threshold. Although particle filters provide a tracking probability, this quantity is not scaled in such a way that a simple threshold strategy provides satisfactory results. As a consequence, we have investigated the possibility to model reject options as a learning task, more precisely as regression or classification problem. It turns out that an ensemble of classifiers provides the best results, with an accuracy reaching 0.98, depending on the chosen setting.

The proposed approach provides an immediate possibility to enhance particle filters for tracking in assisted surgery by an alert strategy to prevent malfunctioning. Because of the generality of the proposed approach, we expect that the proposed modeling framework is of more general interest to enhance particle filtering from visual sensor data by a reject option.

Author Contributions

Conceptualization and methodology, J.K., A.S. and B.H.; software and validation, J.K.; resources, T.R., N.A., A.M. and D.A.; data curation, J.K., T.R., N.A. and A.M.; writing J.K., A.S. and B.H. All authors have read and agreed to the published version of the manuscript.

Funding

J.K. and B.H. gratefully acknowledge funding from the BMBF under grant number 01S18041A. T.R., A.M. and D.A.: Funded by the Excellence Initiative of the German federal and state governments.

Institutional Review Board Statement

The cadaver specimen originated from body donors of the body donation program of the RWTH Aachen University, Germany. This trial was performed in accordance with the Declaration of Helsinki (2013).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to ethical concerns since they were obtained in a clinical trial.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schmale, I.L.; Vandelaar, L.J.; Luong, A.U.; Citardi, M.J.; Yao, W.C. Image-Guided Surgery and Intraoperative Imaging in Rhinology: Clinical Update and Current State of the Art. Ear Nose Throat J. 2020. [Google Scholar] [CrossRef]

- Adair, D.S.P.; Gomes, K.S.; Kiss, Z.H.T.; Gobbi, D.G.; Starreveld, Y.P. Tactics: An open-source platform for planning, simulating and validating stereotactic surgery. Comput. Assist. Surg. 2020, 25, 1–14. [Google Scholar] [CrossRef] [PubMed]

- Tack, P.; Victor, J.; Gemmel, P.; Annemans, L. 3D-printing techniques in a medical setting: A systematic literature review. Biomed. Eng. Online 2016, 15, 1–21. [Google Scholar] [CrossRef] [PubMed]

- Lautissier, J.; Legrand, L.; Lalande, A.; Walker, P.; Brunotte, F. Object tracking in medical imaging using a 2D active mesh system. In Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE Cat. No.03CH37439), Cancun, Mexico, 17–21 September 2003; Volume 1, pp. 739–742. [Google Scholar] [CrossRef]

- Wang, Y.; Georgescu, B.; Chen, T.; Wu, W.; Wang, P.; Lu, X.; Ionasec, R.; Zheng, Y.; Comaniciu, D. Learning-Based Detection and Tracking in Medical Imaging: A Probabilistic Approach. In Deformation Models: Tracking, Animation and Applications; González Hidalgo, M., Mir Torres, A., Varona Gómez, J., Eds.; Springer: Dordrecht, The Netherlands, 2013; pp. 209–235. [Google Scholar] [CrossRef]

- de Bruijne, M.; Nielsen, M. Image segmentation by shape particle filtering. In Proceedings of the 17th International Conference on Pattern Recognition, (ICPR 2004), Cambridge, UK, 26 August 2004; Volume 3, pp. 722–725. [Google Scholar] [CrossRef]

- Gustafsson, F.; Gunnarsson, F.; Bergman, N.; Forssell, U.; Jansson, J.; Karlsson, R.; Nordlund, P. Particle filters for positioning, navigation, and tracking. IEEE Trans. Signal Process. 2002, 50, 425–437. [Google Scholar] [CrossRef]

- Wang, F. Particle Filters for Visual Tracking. In Advanced Research on Computer Science and Information Engineering; Shen, G., Huang, X., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 107–112. [Google Scholar]

- Ristic, B.; Beard, M.; Fantacci, C. An Overview of Particle Methods for Random Finite Set Models. arXiv 2016, arXiv:1602.03945. [Google Scholar] [CrossRef]

- Bourque, A.E.; Bedwani, S.; Carrier, J.-F.; Ménard, C.; Borman, P.; Bos, C.; Raaymakers, B.W.; Mickevicius, N.; Paulson, E.; Tijssen, R.H. Particle Filter-Based Target Tracking Algorithm for Magnetic Resonance-Guided Respiratory Compensation: Robustness and Accuracy Assessment. Int. J. Radiat. Oncol. Biol. Phys. 2018, 100, 325–334. [Google Scholar] [CrossRef]

- Wang, X.; Li, T.; Sun, S.; Corchado, J.M. A Survey of Recent Advances in Particle Filters and Remaining Challenges for Multitarget Tracking. Sensors 2017, 17, 2707. [Google Scholar] [CrossRef] [PubMed]

- Kudlicka, J.; Murray, L.M.; Schön, T.B.; Lindsten, F. Particle filter with rejection control and unbiased estimator of the marginal likelihood. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2020), Barcelona, Spain, 4–8 May 2020. [Google Scholar]

- Finke, A.; Doucet, A.; Johansen, A.M. Limit theorems for sequential MCMC methods. Adv. Appl. Probab. 2020, 52, 377–403. [Google Scholar] [CrossRef]

- Cho, J.U.; Jin, S.H.; Pham, X.D.; Jeon, J.W.; Byun, J.E.; Kang, H. A Real-Time Object Tracking System Using a Particle Filter. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 2822–2827. [Google Scholar] [CrossRef]

- Yuan, D.; Li, D.; He, Z.; Zhang, X. Particle Filter Re-detection for Visual Tracking via Correlation Filters. arXiv 2017, arXiv:1711.10069. [Google Scholar] [CrossRef]

- Zhang, T.; Xu, C.; Yang, M.H. Multi-Task Correlation Particle Filter for Robust Object Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Liu, T.; Chu, H.; Wang, K.; Xing, X. Target Tracking via Particle Filter and Convolutional Network. J. Electr. Comput. Eng. 2018. [Google Scholar] [CrossRef]

- Kumar, R.; Castañón, D.; Ermis, E.; Saligrama, V. A new algorithm for outlier rejection in particle filters. In Proceedings of the 2010 13th International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010; pp. 1–7. [Google Scholar] [CrossRef]

- Chen, Y.C. A Tutorial on Kernel Density Estimation and Recent Advances. arXiv 2017, arXiv:1704.03924. [Google Scholar] [CrossRef]

- Hu, X.L.; Schon, T.B.; Ljung, L. A basic convergence result for particle filtering. IEEE Trans. Signal Process. 2008, 56, 1337–1348. [Google Scholar] [CrossRef]

- Lee, J.S.; Chung, W.K. Robust particle filter localization by sampling from non-corrupted window with incomplete map. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 1133–1139. [Google Scholar]

- Chow, C. On optimum recognition error and reject tradeoff. IEEE Trans. Inf. Theory 1970, 16, 41–46. [Google Scholar] [CrossRef]

- Gentner, C.; Zhang, S.; Jost, T. Log-PF: Particle Filtering in Logarithm Domain. J. Electr. Comput. Eng. 2018. [Google Scholar] [CrossRef]

- Bartlett, P.L.; Wegkamp, M.H. Classification with a Reject Option using a Hinge Loss. J. Mach. Learn. Res. 2008, 9, 1823–1840. [Google Scholar]

- Fischer, L.; Hammer, B.; Wersing, H. Optimal local rejection for classifiers. Neurocomputing 2016, 214, 445–457. [Google Scholar] [CrossRef]

- Geifman, Y.; El-Yaniv, R. Selective Classification for Deep Neural Networks. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 4885–4894. [Google Scholar]

- Shafer, G.; Vovk, V. A Tutorial on Conformal Prediction. J. Mach. Learn. Res. 2008, 9, 371–421. [Google Scholar]

- Modabber, A.; Gerressen, M.; Ayoub, N.; Elvers, D.; Stromps, J.P.; Riediger, D.; Hölzle, F.; Ghassemi, A. Computer-assisted zygoma reconstruction with vascularized iliac crest bone graft. Int. J. Med. Robot. 2013, 9, 497–502. [Google Scholar] [CrossRef]

- Übelhör, T.; Gesenhues, J.; Ayoub, N.; Modabber, A.; Abel, D. 3D camera-based markerless navigation system for robotic osteotomies. at-Automatisierungstechnik 2020, 68, 863–879. [Google Scholar] [CrossRef]

- Ros Wiki: Rosbag. Available online: http://wiki.ros.org/rosbag (accessed on 25 January 2021).

- Ros Sensor Message: Pointcloud2. Available online: http://docs.ros.org/en/melodic/api/sensor_msgs/html/msg/PointCloud2.html (accessed on 28 January 2021).

- Ros Geometry Message: Pose. Available online: http://docs.ros.org/en/jade/api/geometry_msgs/html/msg/Pose.html (accessed on 28 January 2021).

- Scikit Learn: SVC. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html (accessed on 28 January 2021).

- Scikit Learn: Random Forest Classifier. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html (accessed on 28 January 2021).

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Lemaître, G.; Nogueira, F.; Aridas, C.K. Imbalanced-learn: A Python Toolbox to Tackle the Curse of Imbalanced Datasets in Machine Learning. J. Mach. Learn. Res. 2017, 18, 559–563. [Google Scholar]

- Scikit Learn: Linear Regressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html (accessed on 28 January 2021).

- Scikit Learn: SVR. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVR.html (accessed on 28 January 2021).

- Scikit Learn: Random Forest Regressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html (accessed on 28 January 2021).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).