Colour-Balanced Edge-Guided Digital Inpainting: Applications on Artworks

Abstract

1. Introduction

2. Related Work

2.1. Deep Learning Approaches for Image Inpainting

2.2. Deep Learning Approaches for Paintings Retouching

2.3. Research Gaps and Contributions

3. Method

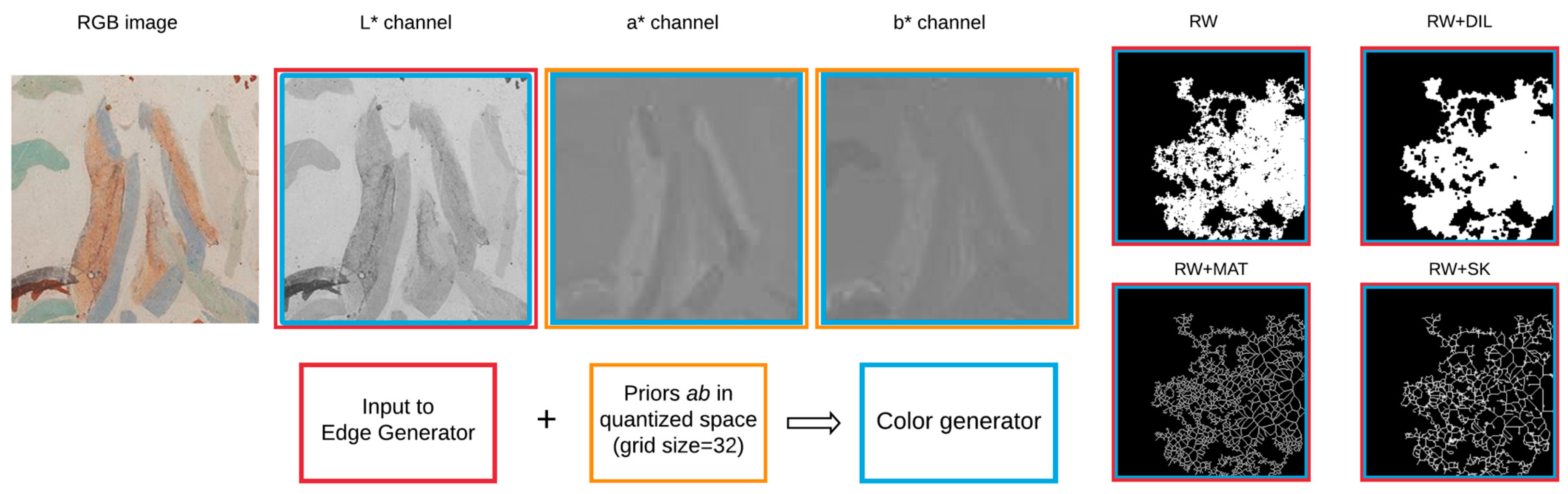

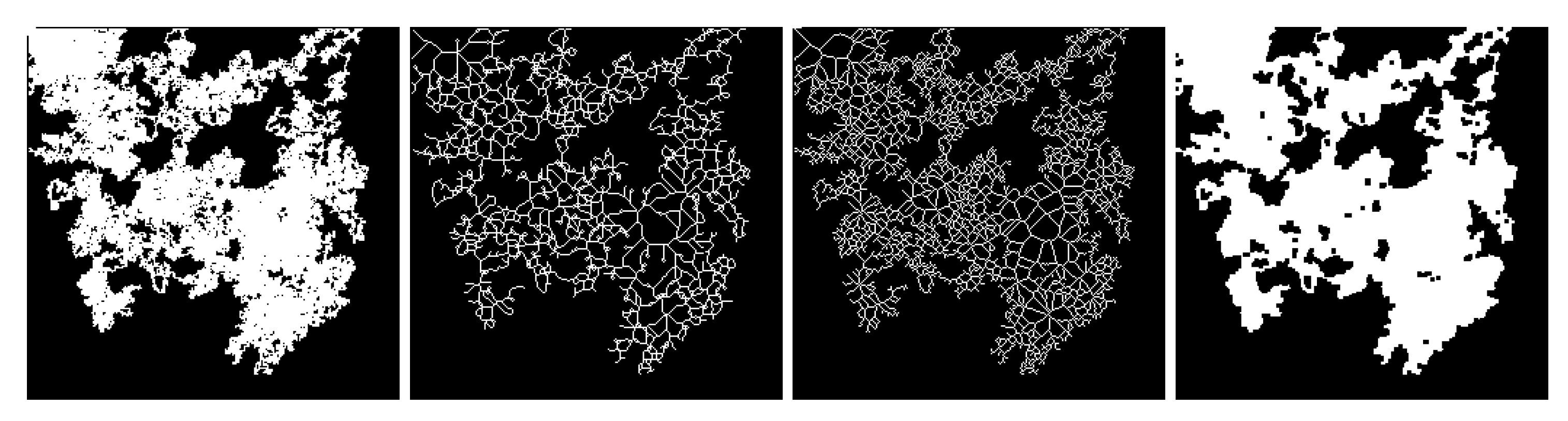

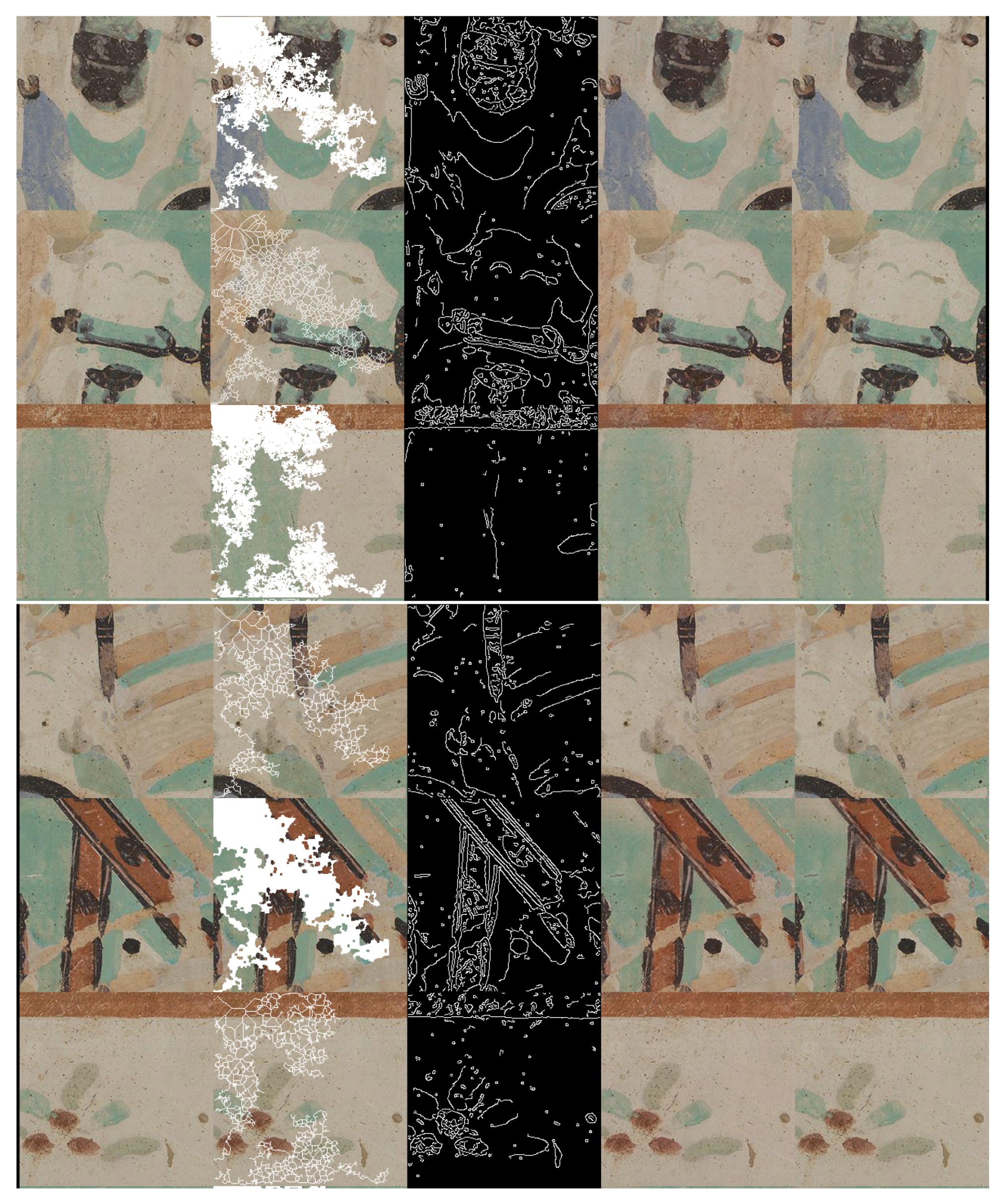

3.1. Masks

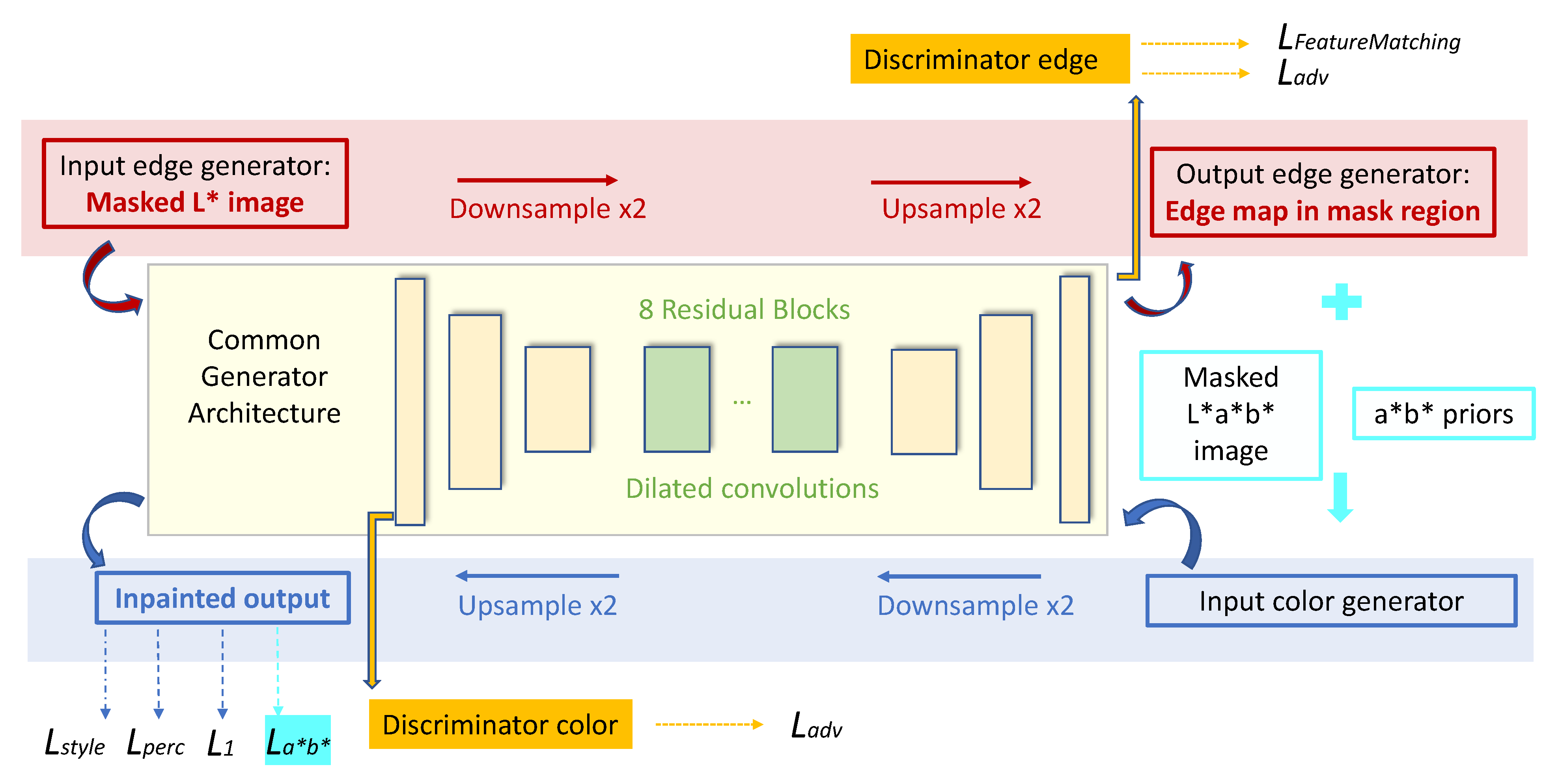
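The mask abbreviations used in this article (RW for Random Walk, DIL for Dilation) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `random_walk_mask`, `dilate`, and `missing_percentage`, the centre starting point, the 4-neighbourhood moves, and the cross-shaped structuring element are all assumptions made for illustration.

```python
import numpy as np

def random_walk_mask(height, width, steps, seed=0):
    """Return a boolean mask; True marks pixels visited by a random walk.

    Hypothetical helper: start position and step rule are assumptions.
    """
    rng = np.random.default_rng(seed)
    mask = np.zeros((height, width), dtype=bool)
    y, x = height // 2, width // 2  # start at the centre (arbitrary choice)
    moves = np.array([(-1, 0), (1, 0), (0, -1), (0, 1)])
    for _ in range(steps):
        mask[y, x] = True
        dy, dx = moves[rng.integers(len(moves))]
        # Clamp to the image borders so the walk never leaves the canvas.
        y = int(np.clip(y + dy, 0, height - 1))
        x = int(np.clip(x + dx, 0, width - 1))
    return mask

def dilate(mask, iterations=1):
    """Binary dilation with a 3x3 cross (4-neighbourhood) structuring element."""
    out = mask.copy()
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=False)
        out = (padded[1:-1, 1:-1]
               | padded[:-2, 1:-1] | padded[2:, 1:-1]
               | padded[1:-1, :-2] | padded[1:-1, 2:])
    return out

def missing_percentage(mask):
    """Share of masked (missing) pixels, in percent."""
    return 100.0 * mask.mean()

rw = random_walk_mask(64, 64, steps=500)
rw_dil = dilate(rw, iterations=2)
```

Dilating a random-walk mask thickens the damaged strokes, so `missing_percentage(rw_dil)` is larger than `missing_percentage(rw)`; the step count and number of dilation iterations here are arbitrary.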

3.2. Edge Inpainting Model

3.3. Color Inpainting Model

4. Results

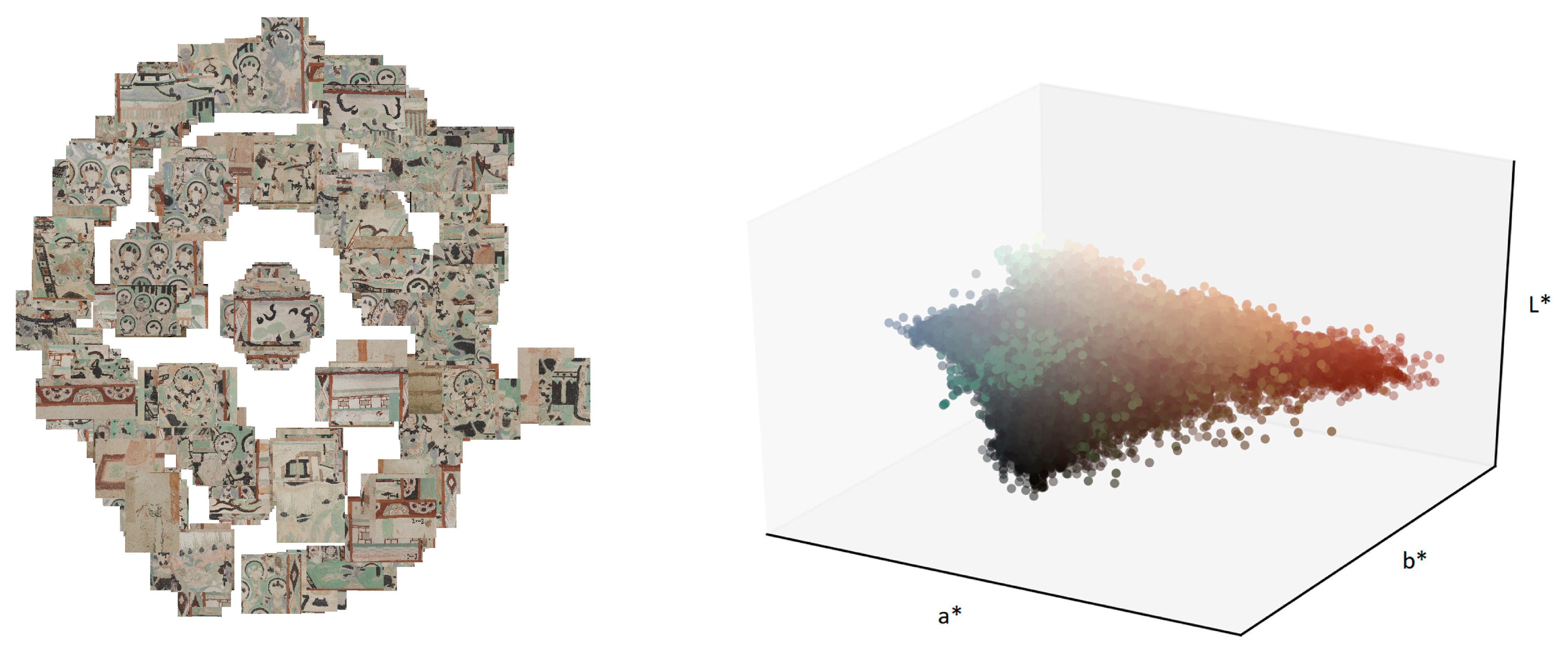

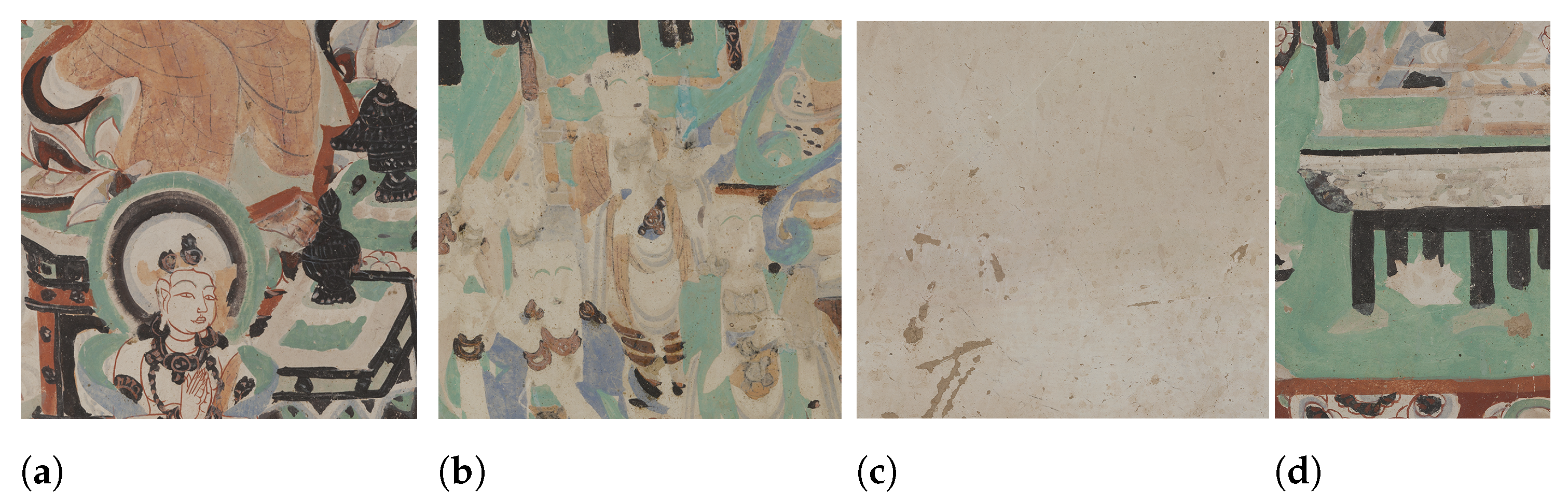

4.1. Dataset and Training Specifications

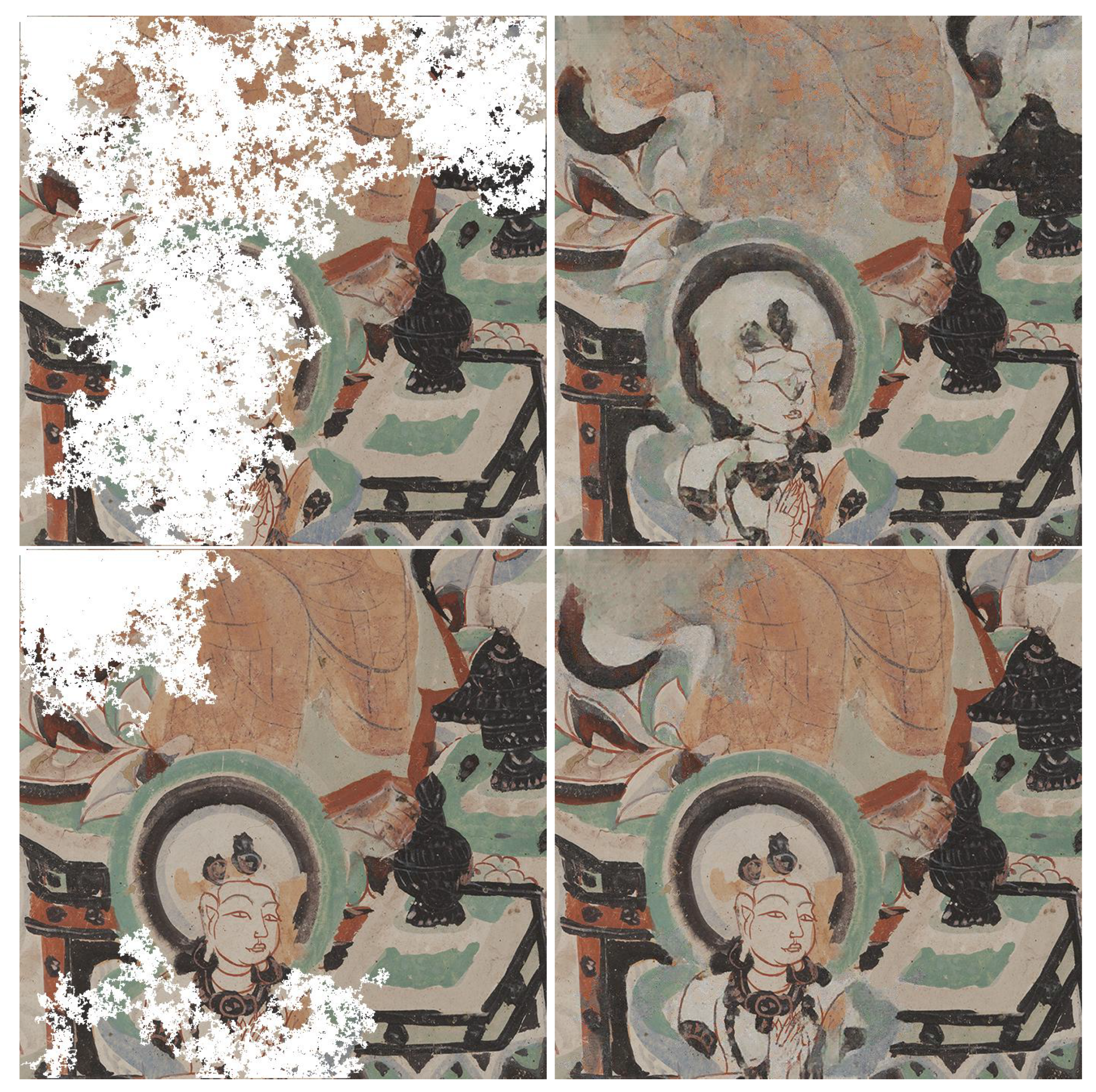

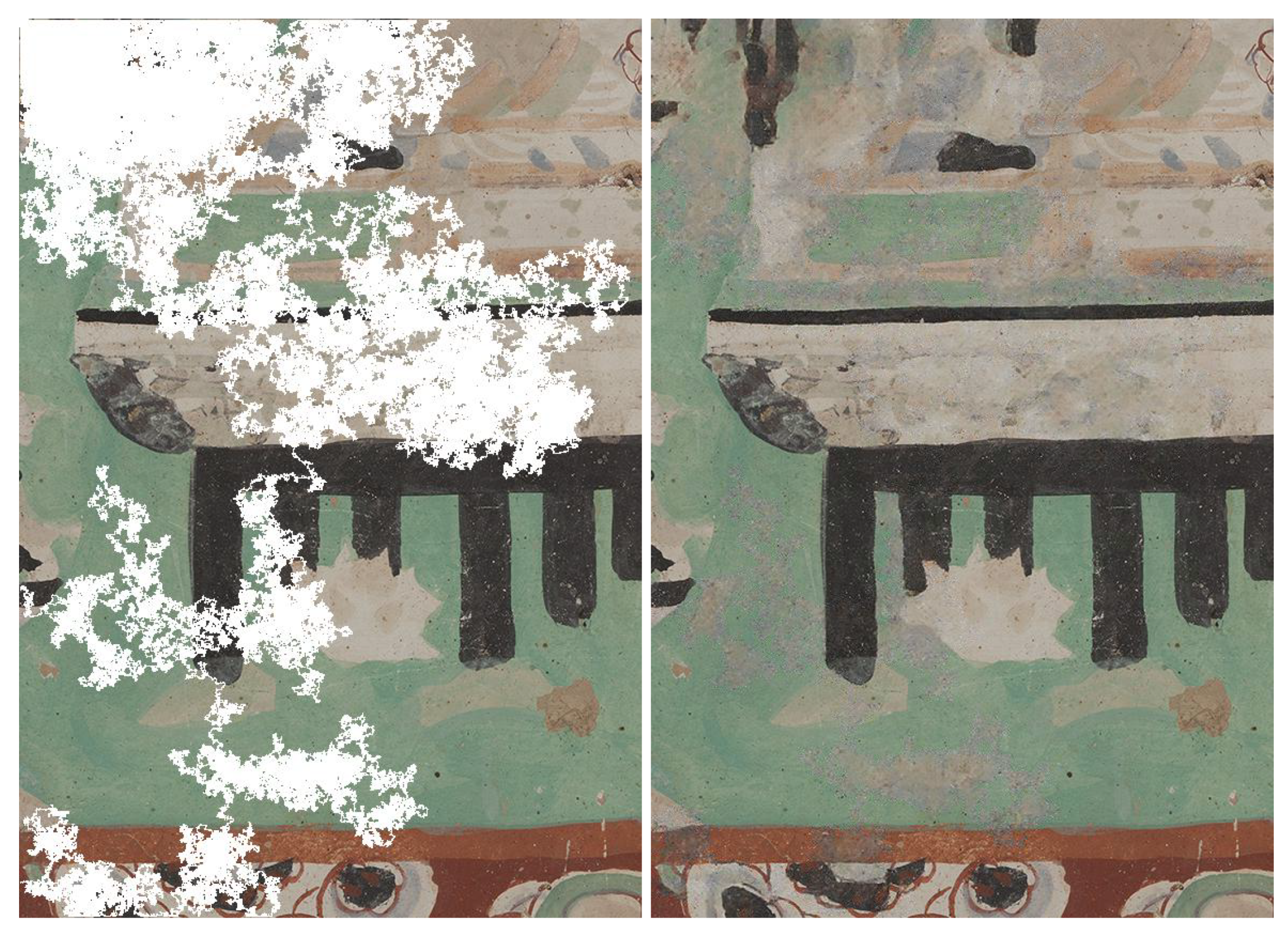

4.2. Qualitative and Quantitative Assessment

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| S-CIELAB | Spatial extension of the CIELAB colorimetric difference |
| JPEG | Joint Photographic Experts Group |
| PHOG | Pyramid Histogram of Oriented Gradients |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index Measure |
| CNN | Convolutional Neural Network |
| DIL | Dilation |
| DIP | Deep Image Prior |
| GAN | Generative Adversarial Network |
| IQM | Image Quality Metric |
| MAT | Medial Axis Transform |
| CH | Cultural Heritage |
| RW | Random Walk |
| SK | Skeletonization |

References

1. Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, July 2000; ACM Press/Addison-Wesley Publishing Co.: New York, NY, USA, 2000; pp. 417–424.

2. International Conference on Computer Vision. ICCV Workshop on E-Heritage 2019. 2019. Available online: http://www.eheritage-ws.org/ (accessed on 20 November 2020).

3. Yu, T.; Zhang, S.; Lin, C.; You, S. Dunhuang Grotto Painting Dataset and Benchmark. arXiv 2019, arXiv:1907.04589.

4. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

5. Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1452–1464.

6. Doersch, C.; Singh, S.; Gupta, A.; Sivic, J.; Efros, A. What makes Paris look like Paris? ACM Trans. Graph. 2012, 31, 1–9.

7. Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738.

8. van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

9. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

10. Pathak, D.; Krahenbuhl, P.; Donahue, J.; Darrell, T.; Efros, A.A. Context encoders: Feature learning by inpainting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2536–2544.

11. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

12. Iizuka, S.; Simo-Serra, E.; Ishikawa, H. Globally and locally consistent image completion. ACM Trans. Graph. (ToG) 2017, 36, 1–14.

13. Liu, G.; Reda, F.A.; Shih, K.J.; Wang, T.C.; Tao, A.; Catanzaro, B. Image inpainting for irregular holes using partial convolutions. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 85–100.

14. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

15. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

16. Zheng, C.; Cham, T.J.; Cai, J. Pluralistic image completion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 1438–1447.

17. Nazeri, K.; Ng, E.; Joseph, T.; Qureshi, F.; Ebrahimi, M. EdgeConnect: Structure guided image inpainting using edge prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

18. Cai, H.; Bai, C.; Tai, Y.W.; Tang, C.K. Deep video generation, prediction and completion of human action sequences. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 366–382.

19. Song, L.; Cao, J.; Song, L.; Hu, Y.; He, R. Geometry-aware face completion and editing. Proc. AAAI Conf. Artif. Intell. 2019, 33, 2506–2513.

20. Wu, X.; Li, R.L.; Zhang, F.L.; Liu, J.C.; Wang, J.; Shamir, A.; Hu, S.M. Deep Portrait Image Completion and Extrapolation. IEEE Trans. Image Process. 2020, 29, 2344–2355.

21. van Noord, N. Learning Visual Representations of Style. Ph.D. Thesis, Tilburg University, Tilburg, The Netherlands, 2018.

22. Yu, T.; Lin, C.; Zhang, S.; You, S.; Ding, X.; Wu, J.; Zhang, J. End-to-end partial convolutions neural networks for Dunhuang grottoes wall-painting restoration. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

23. Wang, N.; Wang, W.; Hu, W.; Fenster, A.; Li, S. Damage Sensitive and Original Restoration Driven Thanka Mural Inpainting. In Chinese Conference on Pattern Recognition and Computer Vision (PRCV); Springer: Cham, Switzerland, 2020; pp. 142–154.

24. Wang, H.L.; Han, P.H.; Chen, Y.M.; Chen, K.W.; Lin, X.; Lee, M.S.; Hung, Y.P. Dunhuang mural restoration using deep learning. In SIGGRAPH Asia 2018 Technical Briefs; Association for Computing Machinery: Tokyo, Japan, 2018; pp. 1–4.

25. Weber, T.; Hußmann, H.; Han, Z.; Matthes, S.; Liu, Y. Draw with me: Human-in-the-loop for image restoration. In Proceedings of the 25th International Conference on Intelligent User Interfaces, Cagliari, Italy, 17–20 March 2020; pp. 243–253.

26. Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9446–9454.

27. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875.

28. Zhang, R.; Isola, P.; Efros, A.A. Colorful Image Colorization. In Computer Vision–ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; pp. 649–666.

29. Cho, J.; Yun, S.; Mu Lee, K.; Young Choi, J. PaletteNet: Image recolorization with given color palette. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 62–70.

30. Köhler, R.; Schuler, C.; Schölkopf, B.; Harmeling, S. Mask-specific inpainting with deep neural networks. In German Conference on Pattern Recognition; Springer: Cham, Switzerland, 2014; pp. 523–534.

31. van Noord, N.; Postma, E. A learned representation of artist-specific colourisation. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2907–2915.

32. PyTorch. An Open Source Machine Learning Framework that Accelerates the Path from Research Prototyping to Production Deployment. 2020. Available online: https://pytorch.org/ (accessed on 20 November 2020).

33. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

34. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

35. Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 2005, 30, 21–30.

36. Zhang, X.; Wandell, B.A. A spatial extension of CIELAB for digital color image reproduction. In SID International Symposium Digest of Technical Papers; Citeseer Online Library: Princeton, NJ, USA, 1996; Volume 27, pp. 731–734.

37. Amirshahi, S.A.; Pedersen, M.; Yu, S.X. Image quality assessment by comparing CNN features between images. J. Imaging Sci. Technol. 2016, 60, 60410-1–60410-10.

38. Amirshahi, S.A.; Pedersen, M.; Beghdadi, A. Reviving traditional image quality metrics using CNNs. In Color and Imaging Conference; Society for Imaging Science and Technology: Springfield, VA, USA, 2018; Volume 2018, pp. 241–246.

39. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

40. Flachot, A.; Gegenfurtner, K.R. Processing of chromatic information in a deep convolutional neural network. J. Opt. Soc. Am. A 2018, 35, B334–B346.

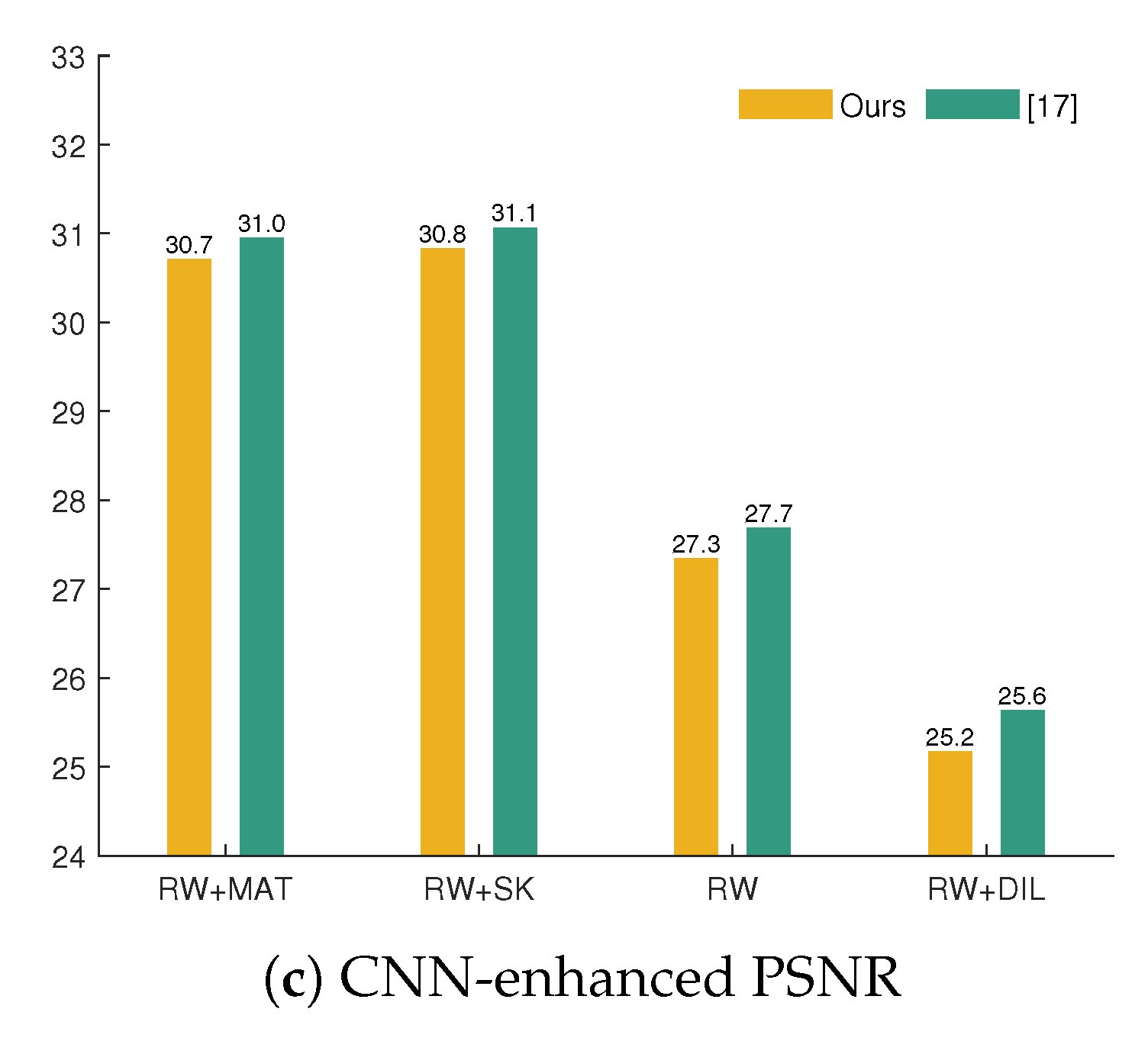

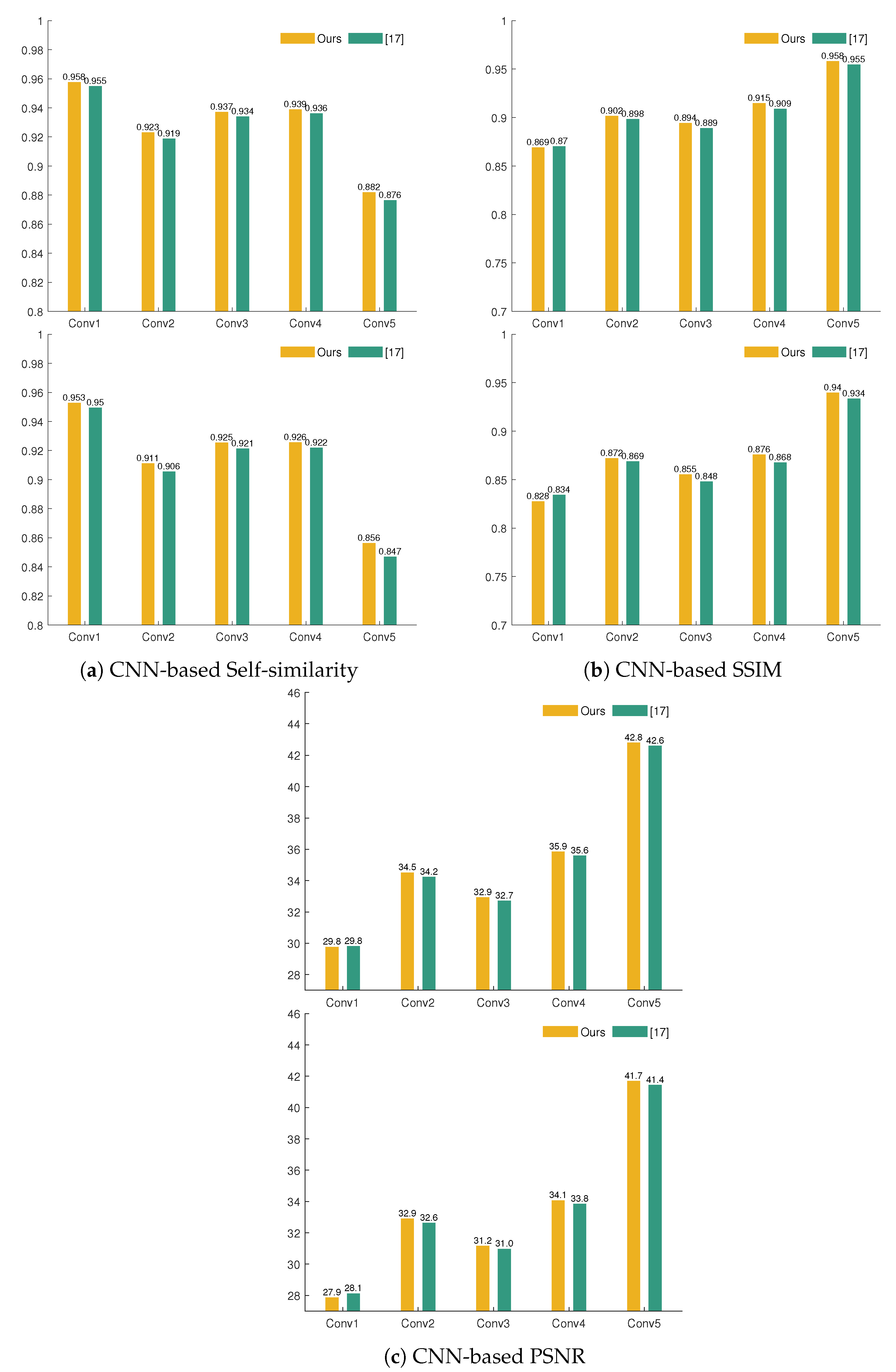

| Mask Type | Missing Pixels (%) | PSNR (Ours) | PSNR ([17]) | SSIM (Ours) | SSIM ([17]) | CIEDE2000 (Ours) | CIEDE2000 ([17]) | S-CIELAB (Ours) | S-CIELAB ([17]) |
|---|---|---|---|---|---|---|---|---|---|
| RW | 28.42 | 26.57 | 26.86 | 0.74 | 0.76 | 4.09 | 4.05 | 3.75 | 3.71 |
| RW + SK | 6.93 | 29.89 | 30.12 | 0.81 | 0.82 | 3.19 | 3.10 | 2.30 | 2.19 |
| RW + MAT | 7.60 | 29.81 | 30.04 | 0.81 | 0.81 | 3.21 | 3.12 | 2.38 | 2.26 |
| RW + DIL | 31.20 | 24.75 | 25.18 | 0.72 | 0.73 | 4.44 | 4.34 | 4.24 | 4.13 |
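As a reading aid for the PSNR values reported here, the following is a minimal sketch of the standard PSNR definition for 8-bit images, using NumPy. It is not taken from the authors' evaluation code; the function name `psnr` and the `max_value` default of 255 are assumptions.

```python
import numpy as np

def psnr(reference, restored, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two same-sized images.

    Assumes pixel values in [0, max_value]; returns inf for identical images.
    """
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    mse = np.mean((reference - restored) ** 2)  # mean squared error
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(max_value ** 2 / mse)

# Toy example: a flat grey image and a copy that is off by one grey level.
original = np.full((8, 8), 100.0)
inpainted = original + 1.0
score = psnr(original, inpainted)
```

Higher PSNR means the inpainted image is closer to the reference, whereas for the two colour-difference measures (CIEDE2000 and S-CIELAB) lower values indicate a closer match.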

| Approach | Dataset | Mask Type | Damage Levels | Nr. Images Evaluated Quantitatively | PSNR (Lowest–Highest) | SSIM (Lowest–Highest) |
|---|---|---|---|---|---|---|
| Ours | Dunhuang | RW, RW + DIL, RW + MAT, RW + SK | 4 | 200 | 24.75–29.89 | 0.72–0.81 |
| Yu et al. [22] | Dunhuang | Dusk-like (∼RW), Jelly-like (∼RW+DIL) | 2 | - | - | - |
| Weber et al. [25] | Dunhuang | RW | 1 | 10 | - | 0.78 |
| Wang et al. [23] | Thanka | Irregular lines and elliptical shapes | 4 | 1391 | 21.23–33.12 | 0.74–0.98 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ciortan, I.-M.; George, S.; Hardeberg, J.Y. Colour-Balanced Edge-Guided Digital Inpainting: Applications on Artworks. Sensors 2021, 21, 2091. https://doi.org/10.3390/s21062091
