A Method to Compensate for the Errors Caused by Temperature in Structured-Light 3D Cameras

Abstract

1. Introduction

- Two strategies to model the temperature-induced error in the depth maps obtained from RGB-D structured-light cameras.

- A method to compensate for the temperature-induced error of RGB-D structured-light cameras.

- An evaluation of the proposed method in both experimental and real scenarios.

2. Materials and Methods

2.1. Preliminary Study

2.1.1. Study Set-Up

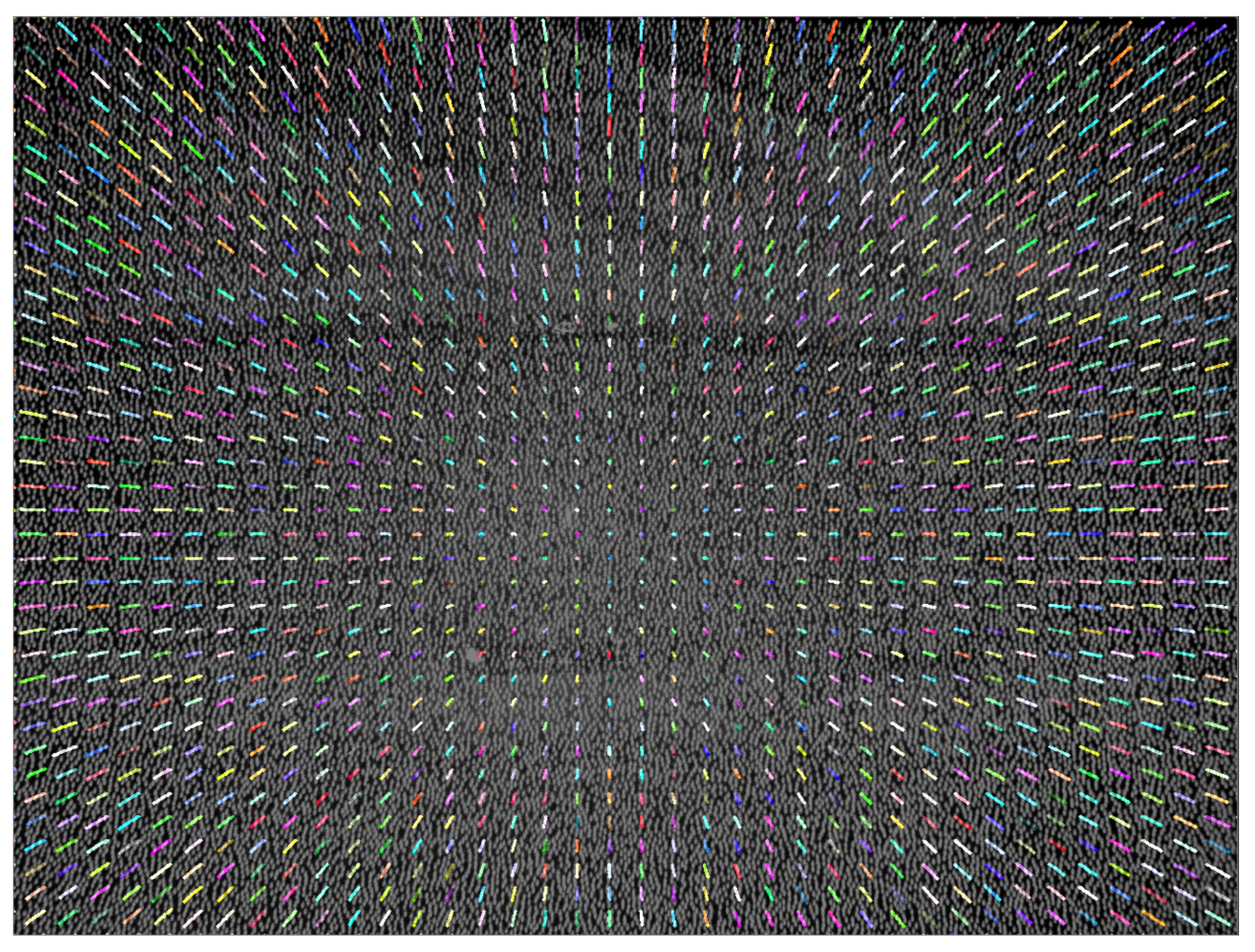

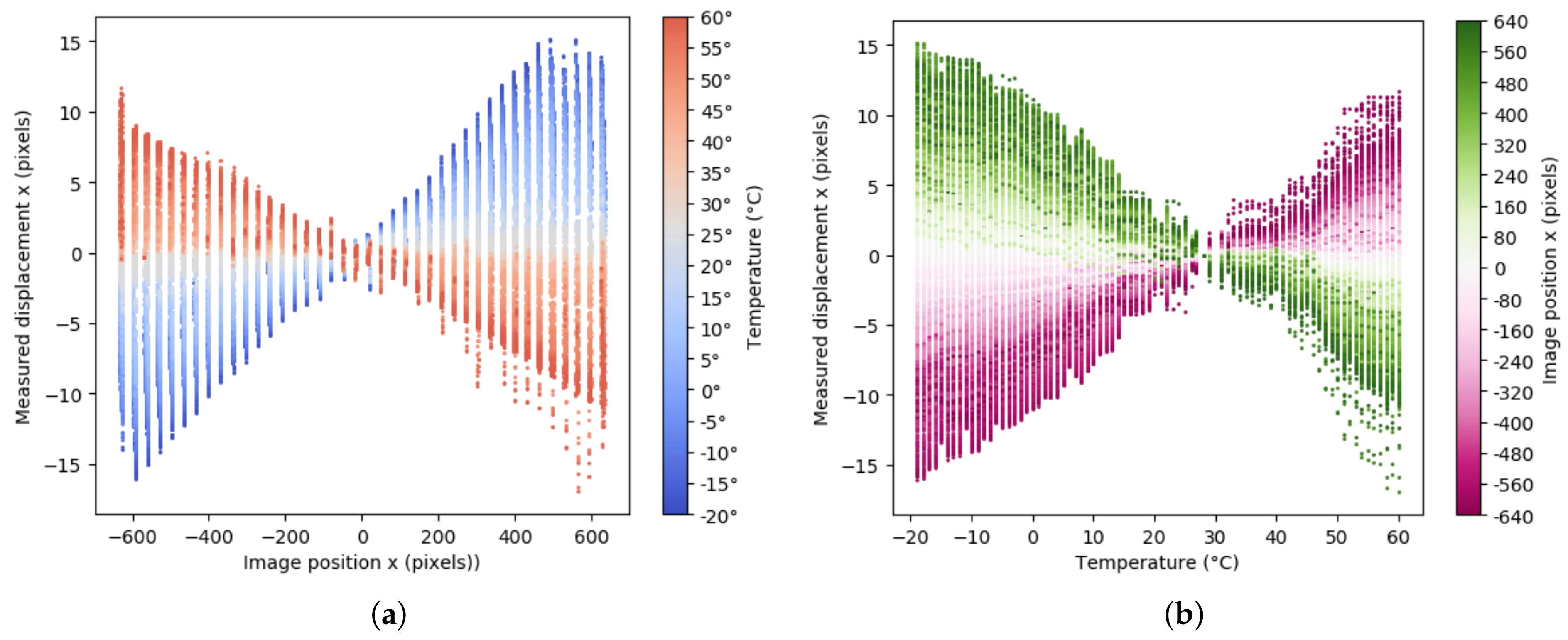

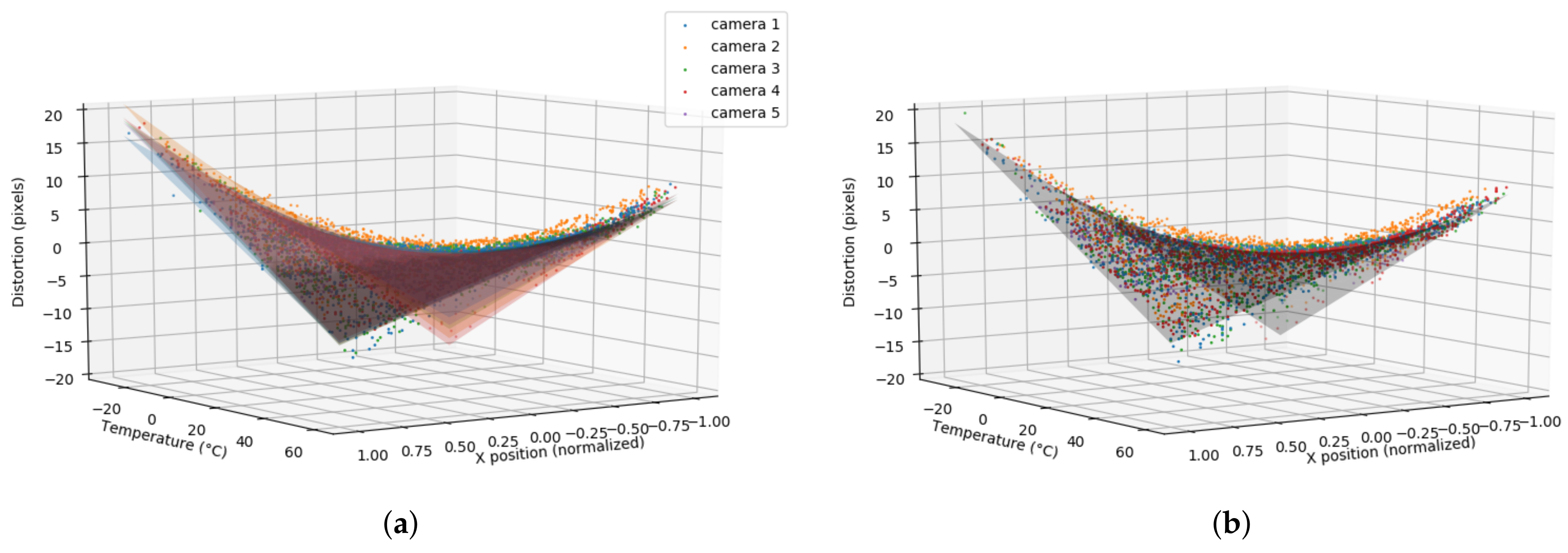

2.1.2. Modelling the Distortion via IR Images

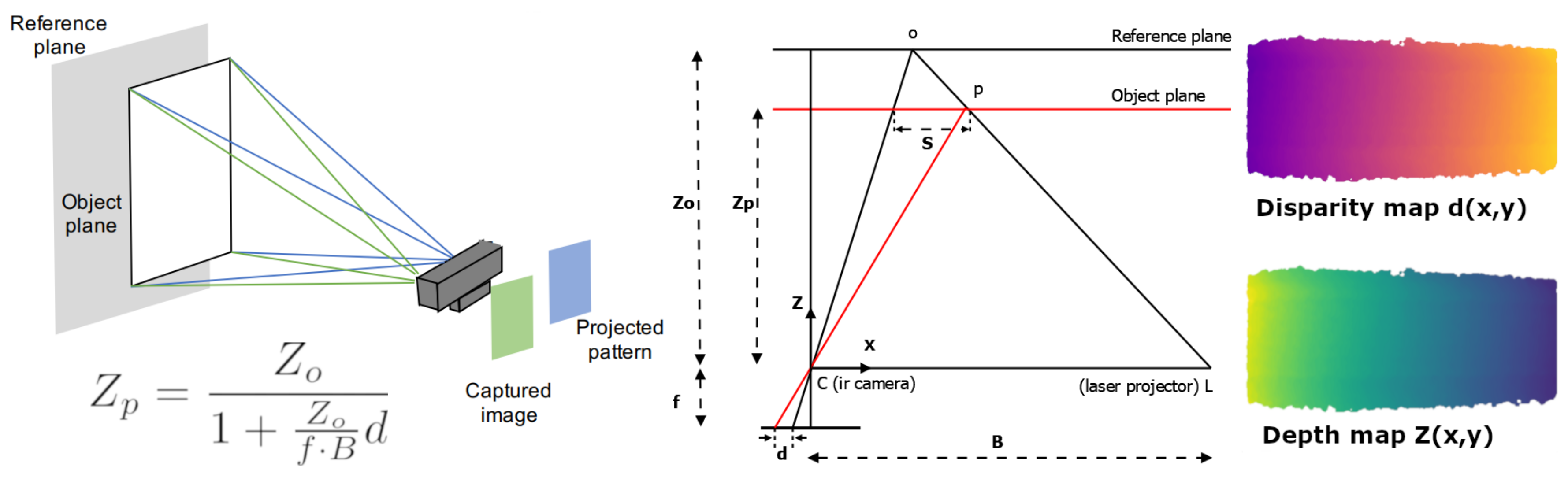

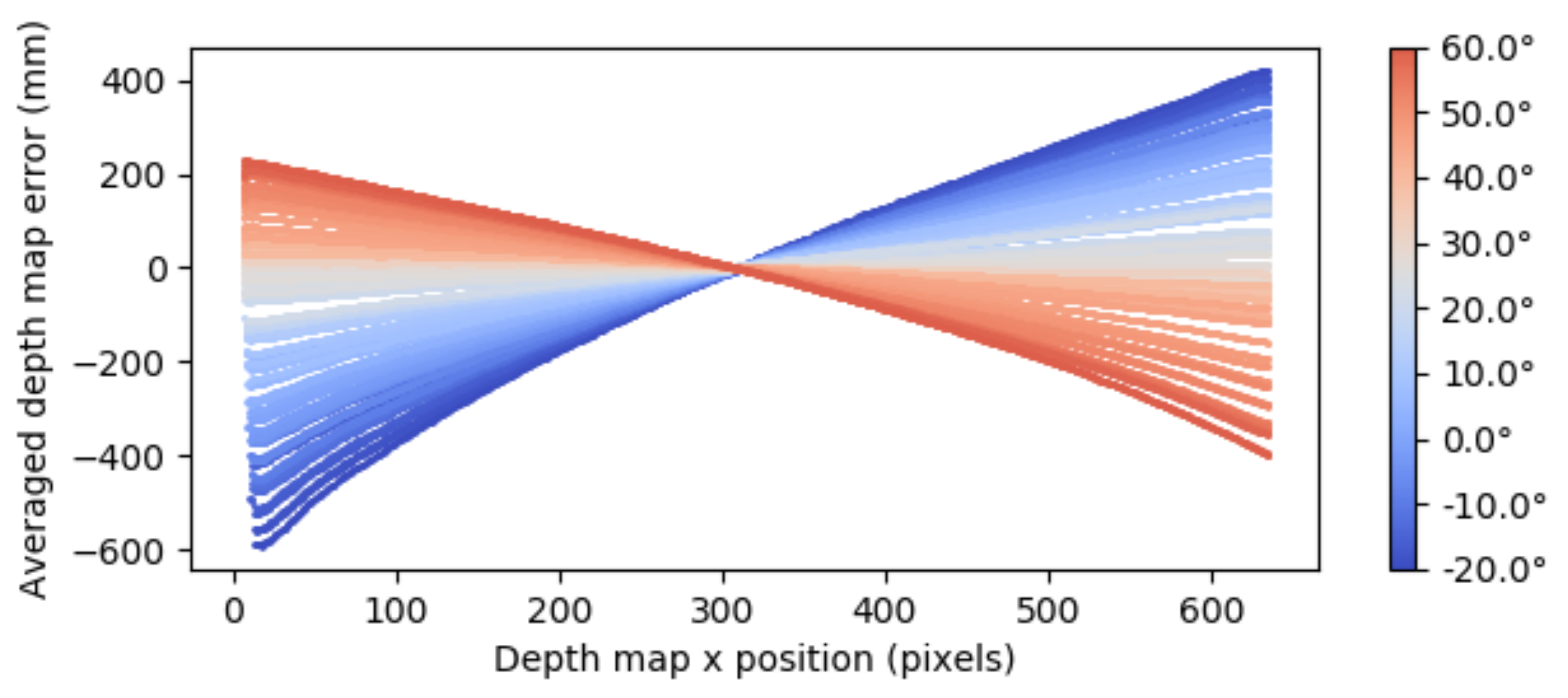

2.1.3. Relationship between Depth Error and the Disparity Error Obtained from the IR Images

2.2. The Thermal Error Compensation Method

2.2.1. Step 1. Obtain the Distortion Model of the Sensor

- Disparity error computation from IR images. This approach is based on the method described in Section 2.1.2, which requires access to the sequence of IR images and an algorithm to compute the IR image distortion, such as an optical flow algorithm. A limitation of this strategy is that it is only applicable when the user has access to the IR images, which is not always possible. Moreover, the algorithm used to compute the IR image distortion can introduce noise and errors into the final results. In addition, it is sensitive to lighting conditions and requires, among other things, fixed contrast, uniform lighting and sufficient texture to perform properly.

- Disparity error computation from the depth map. The disparity map can be obtained from the depth map using Equation (2). Given a captured depth map and a reference depth map, the disparity error is the difference between the disparities of the reference depth map and of the captured depth map, $\Delta d = d_{ref} - d$, which can then be expressed directly in terms of the two depth values. A limitation of this strategy is that it requires an external depth measurement to serve as the reference depth map. If this information is not available, any of the captured depth maps can be used as the reference map, yielding a biased model that can be corrected later by recomputing the c parameter as $c = -b\,T_{opt}$, where $T_{opt}$ is the optimal temperature of the sensor. $T_{opt}$ can be obtained experimentally by considering different sensors of the same model; for instance, for Orbbec Astra sensors it was set experimentally to a fixed value in degrees Celsius. In this way, by assuming a biased reference depth map, the spatial intercept point a, the temperature slope b and a biased temperature intercept point c are obtained. The c parameter is then recalculated using $T_{opt}$ to obtain the correct model. For more details, see Section 3.1.

This second approach was applied to the data obtained in our preliminary study. The resulting parameters were very close to those obtained with the IR image-based approach. Although both strategies are suitable for modelling the disparity error, the second approach is the one used in our experiments.
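As a rough illustration of the IR image-based strategy, the sketch below estimates how far the projected pattern has moved horizontally between a reference IR image and a later one, using an iterative Lucas-Kanade (Gauss-Newton) registration restricted to the horizontal direction. This is a simplified, swapped-in technique under stated assumptions, not the authors' implementation: it assumes a horizontal projector-camera baseline (so the thermal distortion shows up as a shift along rows) and estimates one shift for the whole input. The helper name `horizontal_shift_lk` and its parameters are hypothetical; applying it to small windows instead of the full image would give a coarse distortion map.

```python
import numpy as np


def horizontal_shift_lk(ref, cur, iters=20, margin=5, tol=1e-6):
    """Estimate a global horizontal shift u (pixels) such that cur(x) ~ ref(x - u).

    Hypothetical helper: 1D Lucas-Kanade (Gauss-Newton) registration along rows,
    assuming a horizontal projector-camera baseline.
    """
    ref = np.asarray(ref, dtype=float)
    cur = np.asarray(cur, dtype=float)
    cols = np.arange(ref.shape[1], dtype=float)
    inner = slice(margin, ref.shape[1] - margin)  # skip border columns (interp clamps there)
    u = 0.0
    for _ in range(iters):
        # Resample each row of the current image at x + u; ideally this matches ref.
        warped = np.array([np.interp(cols + u, cols, row) for row in cur])
        gx = np.gradient(warped, axis=1)[:, inner]  # horizontal image gradient
        diff = (warped - ref)[:, inner]
        denom = np.sum(gx * gx)
        if denom == 0.0:  # textureless input: the shift is not observable
            break
        du = -np.sum(gx * diff) / denom  # least-squares Gauss-Newton update
        u += du
        if abs(du) < tol:
            break
    return u


if __name__ == "__main__":
    x = np.arange(128, dtype=float)
    ref = np.tile(np.sin(2 * np.pi * x / 32.0), (8, 1))
    cur = np.tile(np.sin(2 * np.pi * (x - 1.5) / 32.0), (8, 1))  # pattern moved 1.5 px
    print(round(horizontal_shift_lk(ref, cur), 2))
```

In this synthetic example the pattern is shifted by 1.5 pixels, and the estimate should come out very close to that value. On real IR images, the sensitivity to contrast, lighting and texture noted above appears as a small or noisy gradient energy (`denom`), which is why the loop stops when that quantity is zero.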
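For the depth map-based strategy, the following is a minimal sketch of the three steps: per-pixel disparity error from a captured and a reference depth map, a fit of the distortion parameters, and the recomputation of c from the optimal temperature T_opt. Equation (2) is not reproduced in this section, so the code assumes the common structured-light relation d = k/Z, with k the product of focal length (pixels) and baseline; any constant offset in that relation cancels in the difference. The model form e(x, T) = (x - a)(bT + c) is also an assumption, chosen to match the parameter names: a is the column where the error is zero, and the temperature factor bT + c vanishes at T = -c/b. All function names are hypothetical.

```python
import numpy as np


def disparity_error(depth, depth_ref, k):
    """Per-pixel disparity error d_ref - d, ASSUMING disparity d = k / Z.

    k (focal length in pixels times baseline) is a hypothetical parameter.
    Pixels without depth (Z == 0) in either map are returned as NaN.
    """
    depth = np.asarray(depth, dtype=float)
    depth_ref = np.asarray(depth_ref, dtype=float)
    err = np.full(depth.shape, np.nan)
    valid = (depth > 0) & (depth_ref > 0)
    err[valid] = k / depth_ref[valid] - k / depth[valid]
    return err


def fit_distortion_model(error_maps, temps, x):
    """Fit the ASSUMED model e(x, T) = (x - a) * (b * T + c).

    error_maps: one 2D disparity-error map per frame; temps: sensor temperature
    of each frame; x: (normalized) coordinate of each image column.
    """
    slopes, intercepts = [], []
    for e in error_maps:
        col_err = np.nanmean(e, axis=0)   # mean error of each column
        s, t = np.polyfit(x, col_err, 1)  # col_err ~ s * x + t
        slopes.append(s)
        intercepts.append(t)
    s, t = np.array(slopes), np.array(intercepts)
    a = -np.dot(s, t) / np.dot(s, s)      # least squares for t = -a * s
    b, c = np.polyfit(temps, s, 1)        # s(T) ~ b * T + c
    return float(a), float(b), float(c)


def unbias_intercept(b, t_opt):
    """Recompute c so the modelled error vanishes at T_opt: c = -b * T_opt."""
    return -b * t_opt
```

If one of the captured frames, taken at temperature T_ref, is used as the reference, the measured error is zero at T_ref, so under this assumed model the fitted intercept is approximately -b * T_ref; `unbias_intercept` replaces it with -b * T_opt.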

2.2.2. Step 2. Correct the Sensor Measurements

2.2.3. Final Remarks

3. Results and Discussion

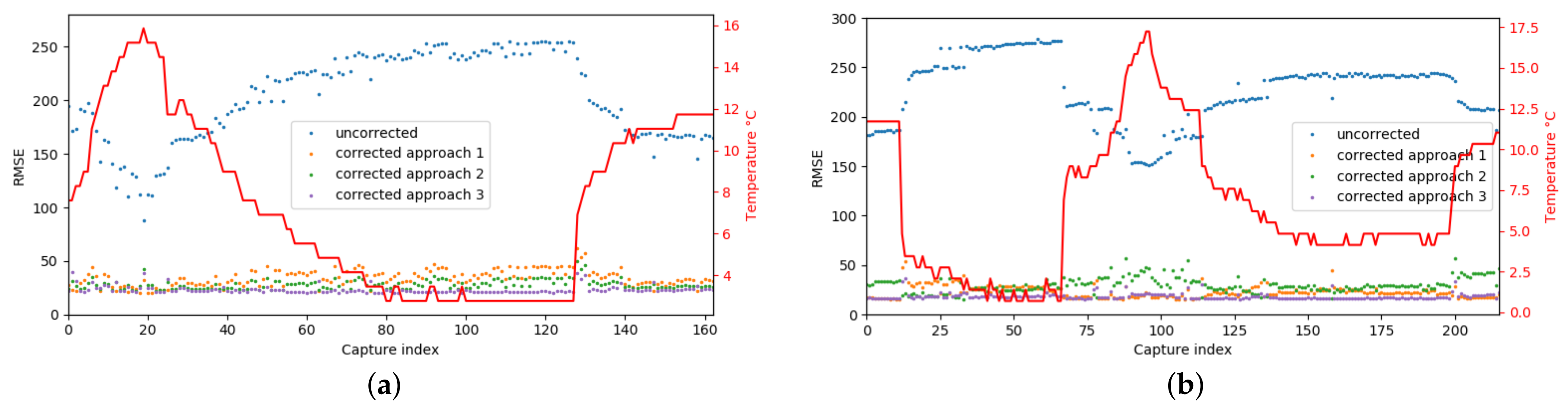

3.1. Evaluation Considering Different Cameras

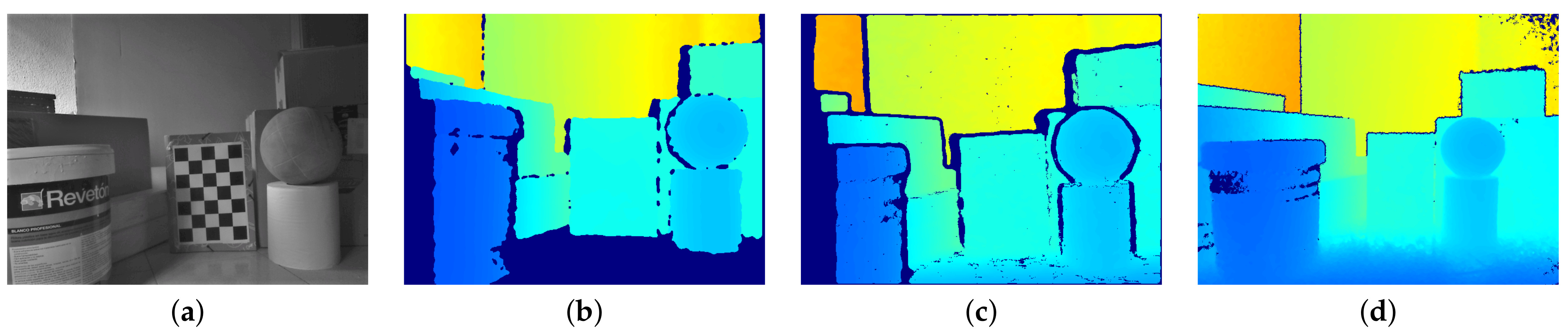

3.2. Evaluation in Real Environments

3.3. Comparison with the Kinect’s Method

3.4. Comparison with Non-Structured Light Cameras

3.5. Limitations

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Camera | a | b | c | Optimal Temperature (°C) |
|---|---|---|---|---|
| 1 | 0.03 | −0.27 | 7.60 | 28.13 |
| 2 | 0.06 | −0.31 | 10.32 | 32.75 |
| 3 | 0.04 | −0.30 | 8.90 | 30.05 |
| 4 | −0.02 | −0.31 | 9.94 | 31.97 |
| 5 | 0.05 | −0.29 | 8.70 | 30.40 |
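The table does not define the Optimal Temperature column, but it appears consistent with the temperature at which the temperature term bT + c vanishes, T_opt = -c/b. The sketch below checks this for each camera while allowing for b and c being printed with two decimals; this reading of the column is an inference from the numbers, not a statement from the article, and the names `TABLE`, `t_opt_bounds` and `check_table` are hypothetical.

```python
# Rows of the table above: camera -> (a, b, c, reported optimal temperature).
TABLE = {
    1: (0.03, -0.27, 7.60, 28.13),
    2: (0.06, -0.31, 10.32, 32.75),
    3: (0.04, -0.30, 8.90, 30.05),
    4: (-0.02, -0.31, 9.94, 31.97),
    5: (0.05, -0.29, 8.70, 30.40),
}
HALF_STEP = 0.005  # b and c are printed with two decimals


def t_opt_bounds(b, c):
    """Range of -c/b compatible with rounded b and c (assumes b < 0 < c)."""
    lo = (c - HALF_STEP) / (abs(b) + HALF_STEP)
    hi = (c + HALF_STEP) / (abs(b) - HALF_STEP)
    return lo, hi


def check_table():
    """Return camera -> (naive -c/b, reported value, reported value within bounds)."""
    results = {}
    for cam, (_a, b, c, reported) in TABLE.items():
        lo, hi = t_opt_bounds(b, c)
        results[cam] = (round(-c / b, 2), reported, lo <= reported <= hi)
    return results


if __name__ == "__main__":
    for cam, (naive, reported, ok) in check_table().items():
        print(f"camera {cam}: -c/b = {naive}, reported {reported}, consistent: {ok}")
```

Camera 2 shows the largest gap between the naive -c/b (about 33.29) and the reported 32.75, yet the reported value still falls inside the interval allowed by rounding, which suggests the column was computed from unrounded parameters.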

| Camera | RMSE (5.52°) | RMSE (11.72°) | RMSE (19.30°) |
|---|---|---|---|
| Kinect | 33.59 | 28.8 | 23.51 |
| Astra | 217.75 | 158.1 | 103.96 |
| Astra corrected | 32.72 | 22.50 | 27.42 |
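The relative RMSE reduction obtained by correcting the Astra measurements can be derived directly from the table. The short computation below does this per column; the column labels are copied as-is, since their meaning and the RMSE units are defined elsewhere in the article, and the helper name `relative_reduction` is hypothetical.

```python
# RMSE values from the table above, in the table's column order.
COLUMNS = ["5.52°", "11.72°", "19.30°"]
RMSE = {
    "Kinect": [33.59, 28.8, 23.51],
    "Astra": [217.75, 158.1, 103.96],
    "Astra corrected": [32.72, 22.50, 27.42],
}


def relative_reduction(before, after):
    """Fraction of the original RMSE removed by the correction, per column."""
    return [(b - a) / b for b, a in zip(before, after)]


if __name__ == "__main__":
    reductions = relative_reduction(RMSE["Astra"], RMSE["Astra corrected"])
    for col, r in zip(COLUMNS, reductions):
        print(f"{col}: {100 * r:.1f}% lower RMSE")
```

This gives reductions of roughly 85%, 86% and 74%. In the last column the corrected Astra remains above the Kinect (27.42 versus 23.51), while in the first two columns it is below it.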

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vila, O.; Boada, I.; Raba, D.; Farres, E. A Method to Compensate for the Errors Caused by Temperature in Structured-Light 3D Cameras. Sensors 2021, 21, 2073. https://doi.org/10.3390/s21062073
