A Fringe Phase Extraction Method Based on Neural Network

Abstract

1. Introduction

2. Method

2.1. Principle

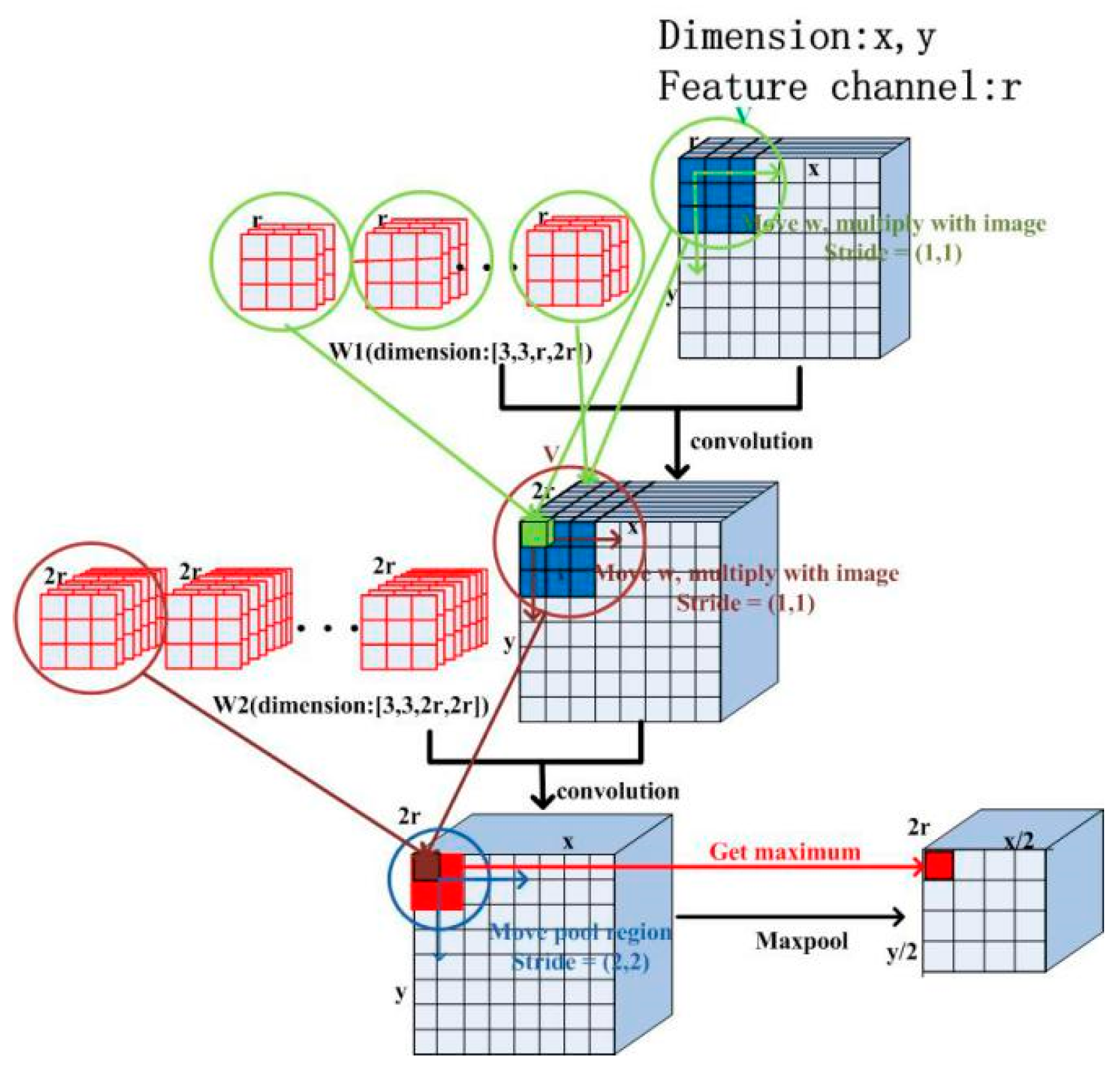

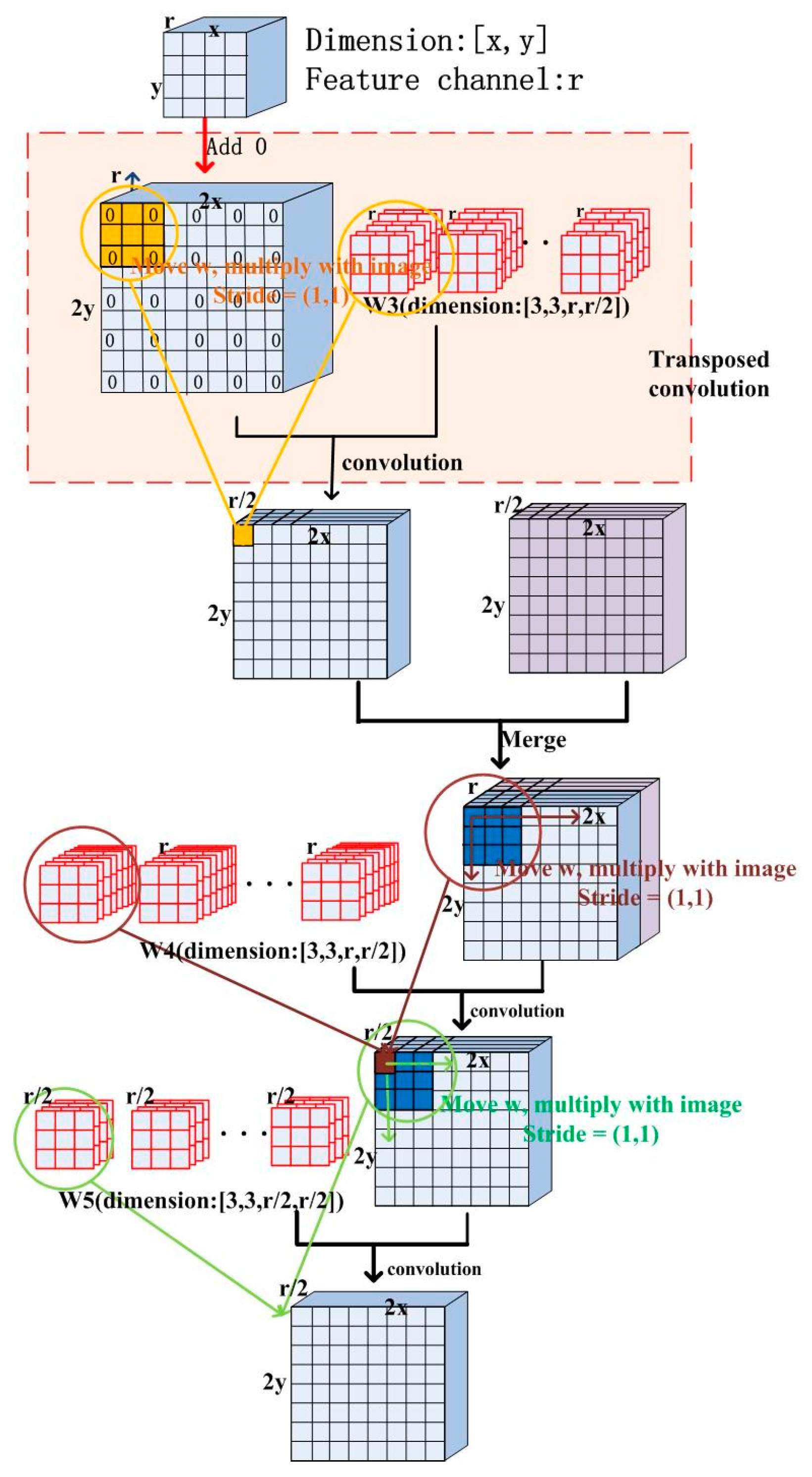

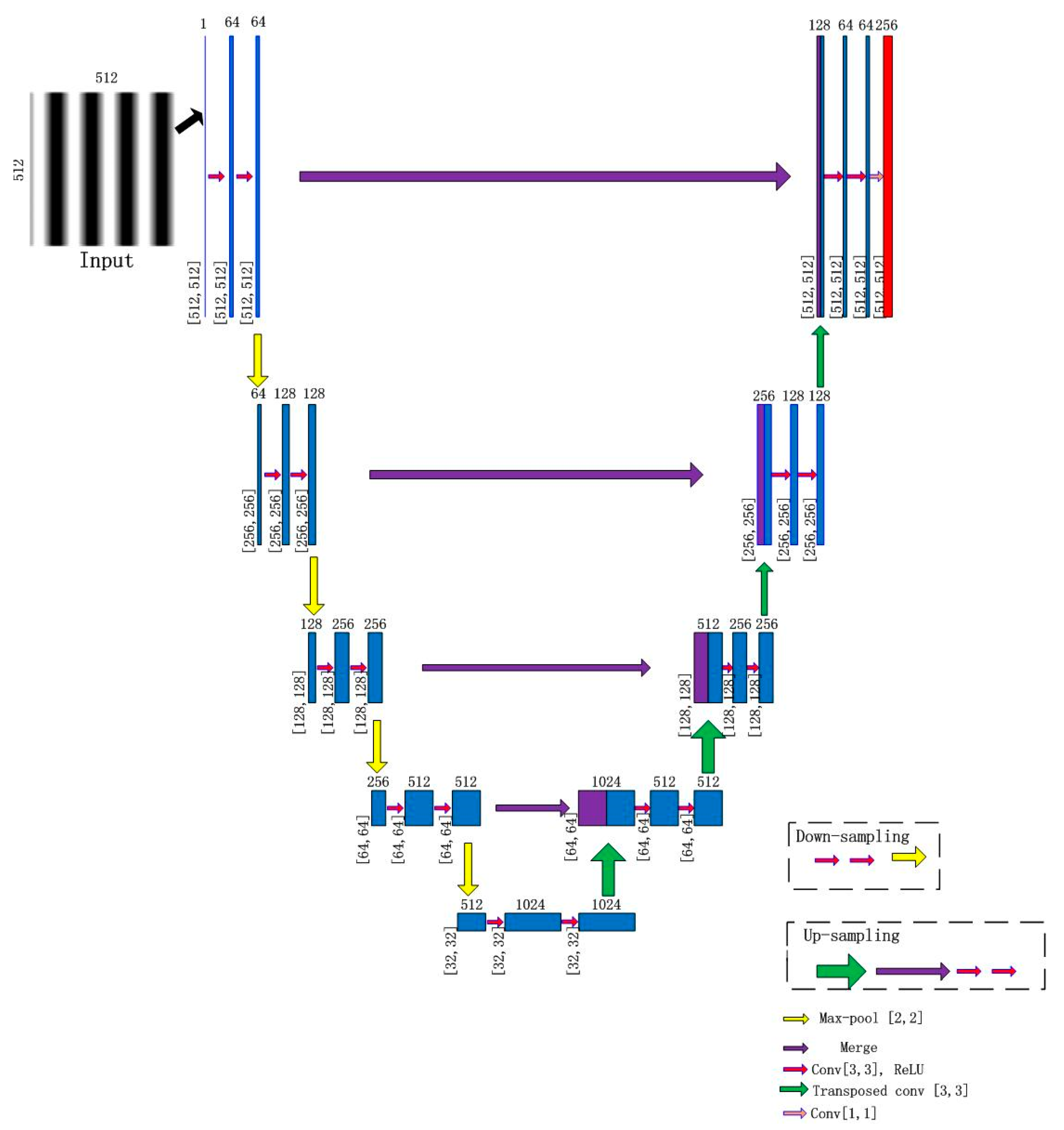

2.2. U-Net Neural Network

2.3. Network Training

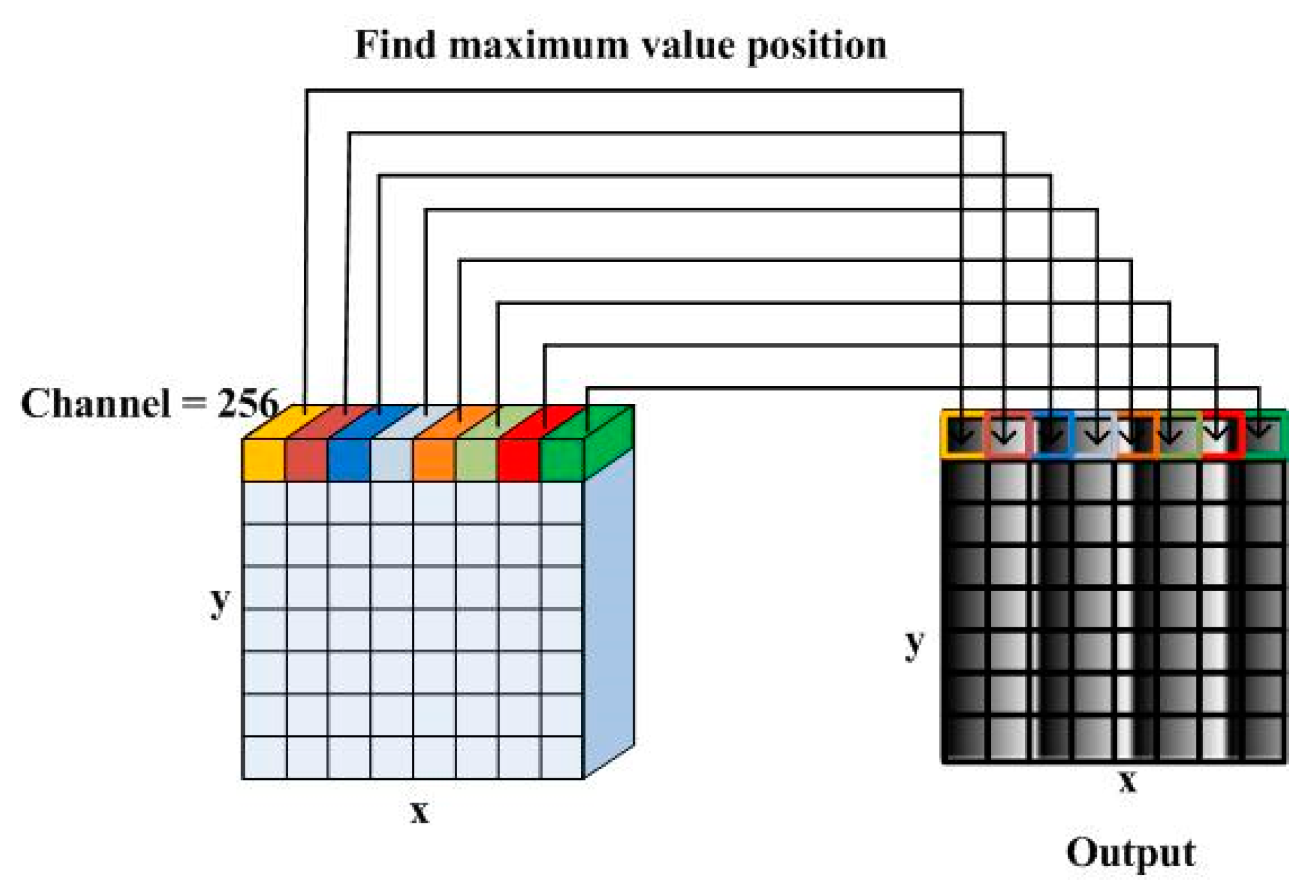

2.4. Output Principle

3. Verification of Method

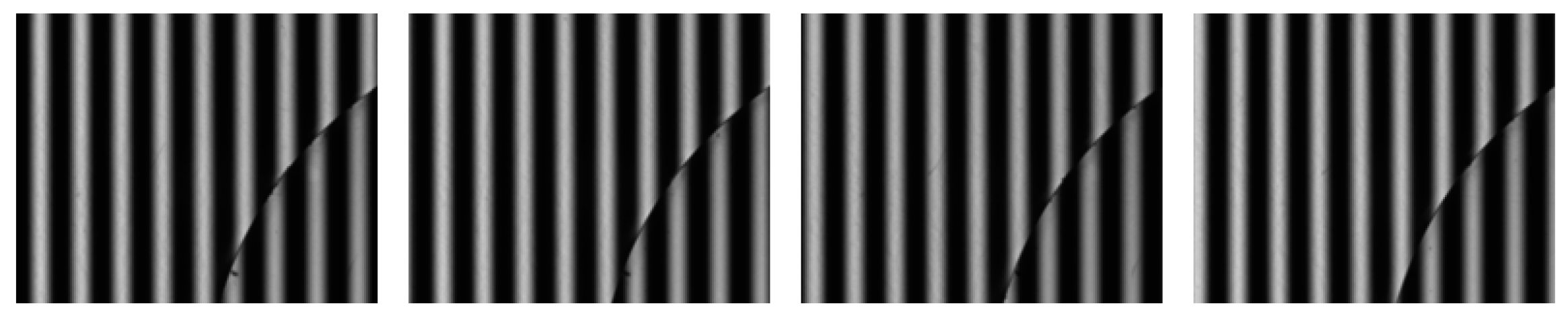

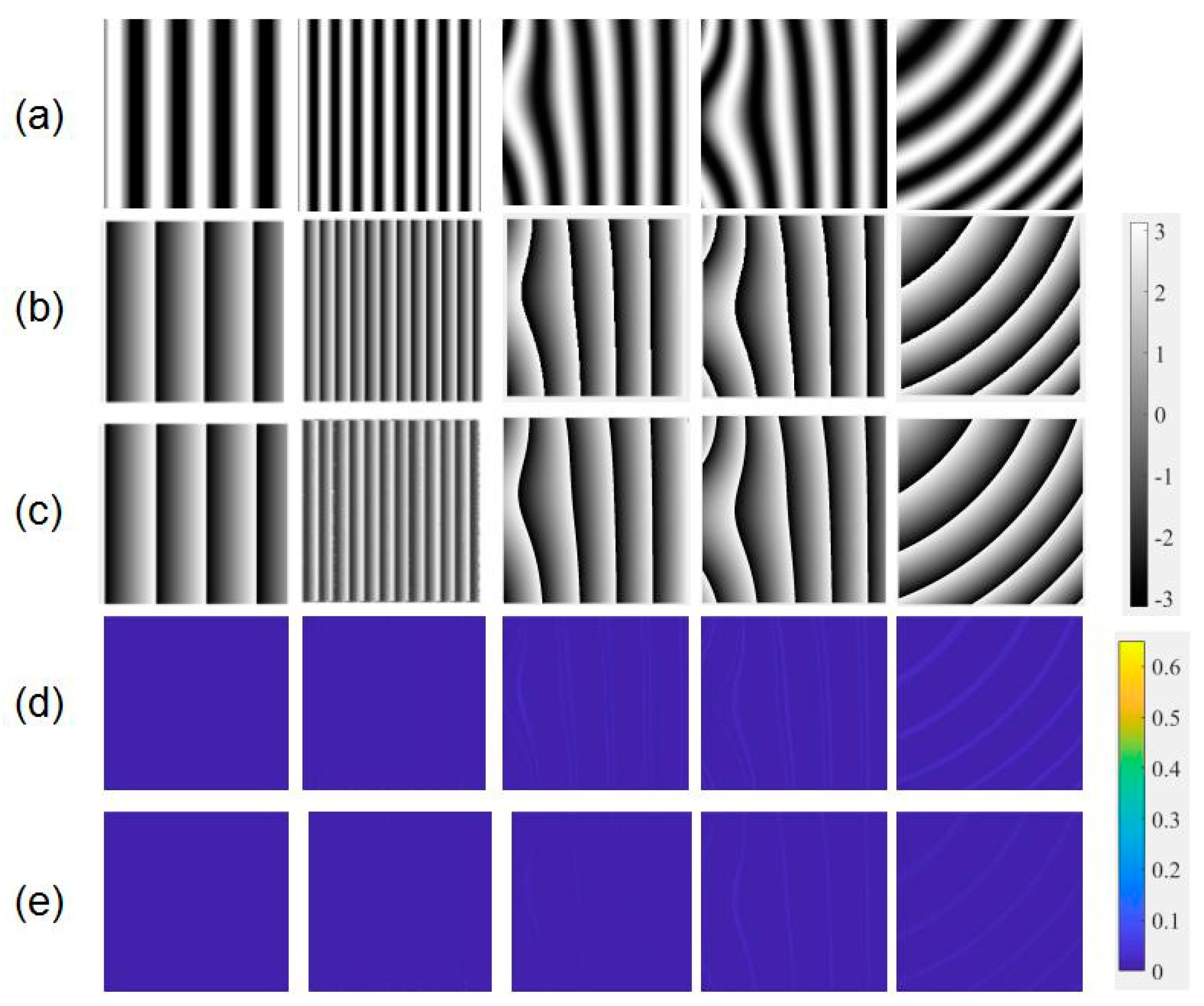

3.1. Simulation Image

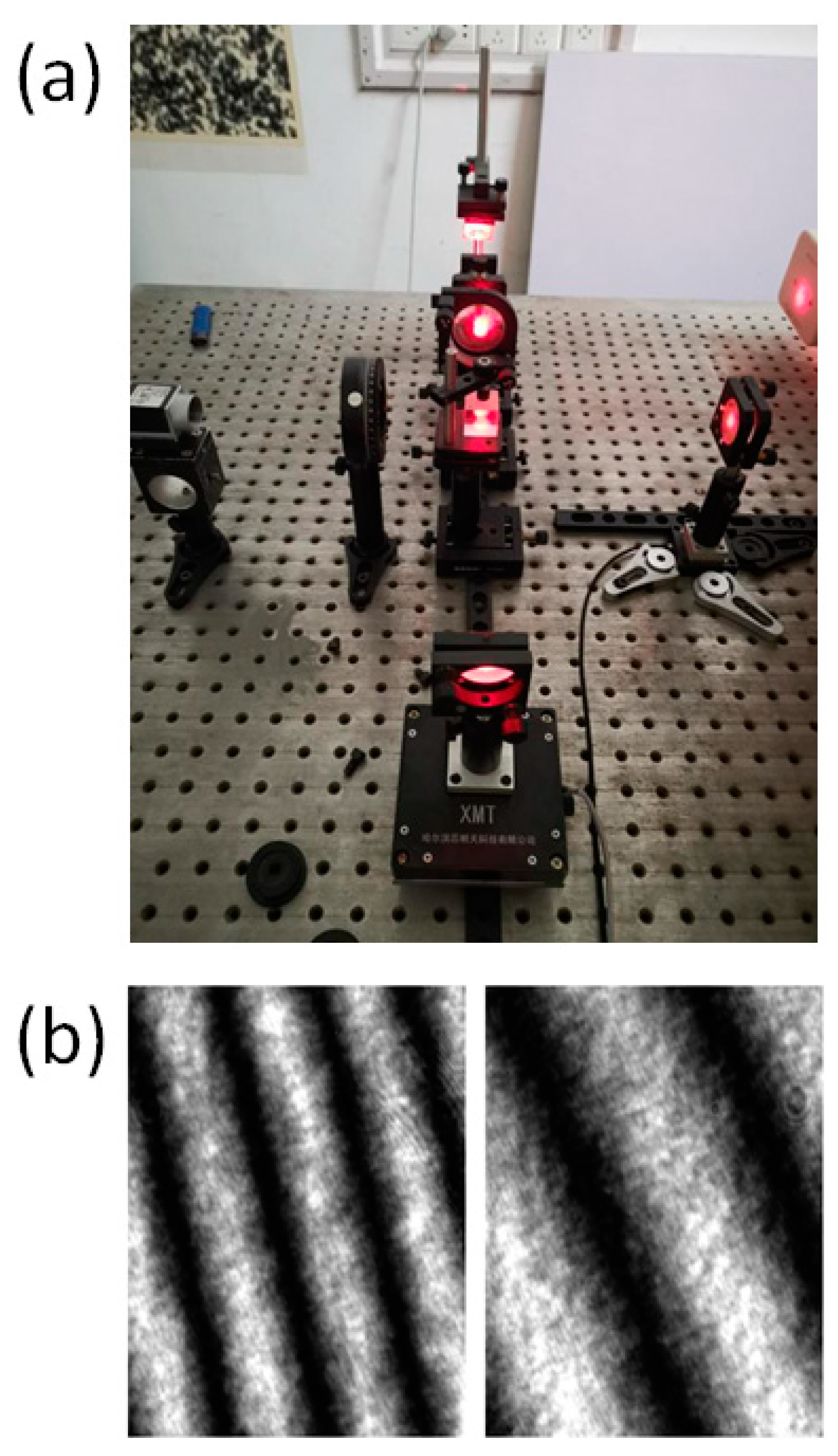

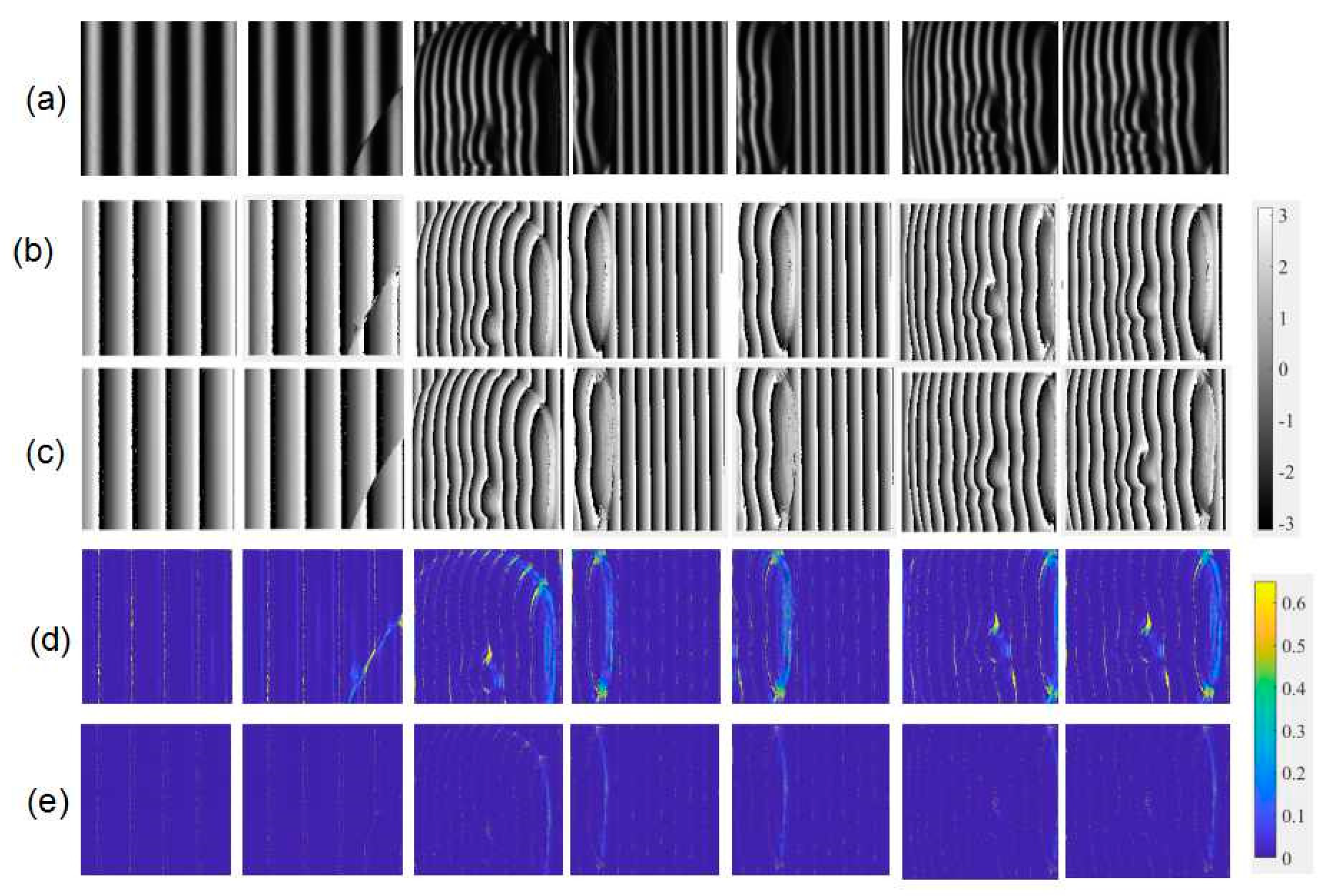

3.2. Experimental Image

4. Results and Discussion

4.1. Computation Accuracy

4.1.1. Results on Simulation Image

4.1.2. Results on Experimental Image

4.2. Computation Efficiency

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | E (rad): Simulation | E (rad): Fringe Projection | E (rad): Interferograms | S (rad): Simulation | S (rad): Fringe Projection | S (rad): Interferograms |
|---|---|---|---|---|---|---|
| The proposed method | 0.03 | 0.10 | 0.22 | 0.07 | 0.08 | 0.24 |
| Wavelet transform method | 0.05 | 0.15 | 0.24 | 0.08 | 0.14 | 0.29 |

| Time (s) | Simulation Patterns | Fringe Projection Patterns | Interferograms |
|---|---|---|---|
| The proposed method | 0.069 | 0.066 | 0.071 |
| Wavelet transform method | 1.154 | 3.152 | 2.850 |
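To make the timing comparison easier to read, the short sketch below computes how many times faster the proposed method is than the wavelet transform method for each pattern type. The timings are copied from the timing table; the dictionary layout and the `speedup` helper are illustrative names, not part of the original work.

```python
# Computation times in seconds, copied from the timing table.
# The dictionary layout and key names are illustrative assumptions.
times = {
    "Simulation Patterns": {"proposed": 0.069, "wavelet": 1.154},
    "Fringe Projection Patterns": {"proposed": 0.066, "wavelet": 3.152},
    "Interferograms": {"proposed": 0.071, "wavelet": 2.850},
}


def speedup(entry):
    """Return the ratio of wavelet-transform time to proposed-method time."""
    return entry["wavelet"] / entry["proposed"]


# Speed-up factor per pattern type.
speedups = {name: speedup(entry) for name, entry in times.items()}

for name, ratio in speedups.items():
    print(f"{name}: proposed method is about {ratio:.1f}x faster")
```

On the reported timings, the ratios come out at roughly 17x for the simulation patterns, 48x for the fringe projection patterns, and 40x for the interferograms.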

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hu, W.; Miao, H.; Yan, K.; Fu, Y. A Fringe Phase Extraction Method Based on Neural Network. Sensors 2021, 21, 1664. https://doi.org/10.3390/s21051664