1. Introduction

Heavy equipment is commonly employed at large construction sites such as mines and quarries. Its failure directly affects productivity, which can cause great losses to both customers and corporations. Therefore, identifying imminent failures in advance and minimizing downtime are essential for both manufacturers and customers. The advent of the Industry 4.0 era has also increased demand for diagnostics and prognostics using smart sensors. Under the slogan “Industry 4.0”, the development of intelligent applications is accelerating at various industrial sites [1]. Fault diagnostics for parts susceptible to damage, such as the sun, planetary, and ring gears that are major components of heavy machinery, rely on detecting and monitoring changes in the magnitude of the fault frequency. However, the complex kinematics of planetary gearboxes generate complex vibration signals, making it difficult to identify characteristic fault frequencies. A failure in a planetary gearbox can shut down the entire vehicle, resulting in major economic losses and even human casualties. Condition monitoring and early fault diagnosis aim to prevent accidents and save costs for planetary gearbox users.

Machine learning technology has had a number of successes in the field of fault diagnosis in recent years. Previous studies have tended to consider autoencoders (AEs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs) [2,3]. RNNs are a type of deep learning architecture designed for sequential data such as time series or language, in which the current output depends heavily on the data that preceded it. Generally, the output from the current time step forms all or part of the input for the next time step [4]. However, early RNNs quickly forgot the impact of previous data after only a few iterations (the vanishing gradient problem). Long Short-Term Memory (LSTM) networks were developed to solve this problem [5]. Hence, most modern RNNs are LSTM implementations and are extensively used to identify gradual and time-dependent machine faults [6,7]. In particular, fault diagnosis for rotating components, an essential aspect of various applications, has received a lot of attention in industry. For bearing failure diagnosis, a method was proposed that indirectly uses the starter current rather than data collected from the rotating body [8]. In addition, a feature extraction method based on pre-training, which can more robustly extract the fault features of a rotating body, has been successfully applied [9].

However, most existing studies are conducted under the assumption that the source and target data have similar distributions [10]. This means that training and testing data must be obtained using the same equipment under the same working conditions. Unfortunately, the operating speed or load of an actual rotating body changes constantly, producing abnormal vibration signals and fault frequencies with time-varying characteristics, which makes fault diagnosis more complicated. Therefore, this assumption is difficult to meet in practice, and variations in operating conditions create significant disparities in the distribution of the target domain data [11,12]. As a result, the learned fault diagnosis knowledge does not generalize well to the target domain because of domain shift. A method of solving these problems that has recently attracted attention is transfer learning, which transfers knowledge between different domains [13,14,15]. Transfer learning methods can be summarized into four main categories: (1) instance-based transfer learning, which rearranges the weights of the learning model through retraining with the target data; (2) feature-based transfer learning, which finds domain-invariant features by reducing the distribution mismatch between the source and target domains; (3) relationship-based transfer learning, which transfers mutual knowledge based on the similarity between the interactions of two domains; and (4) model-based transfer learning, in which parameters are transferred directly or fine-tuned by a classifier for the target domain.

In this paper, we propose a new domain transformation-based method for diagnosing cross-domain failures of rotating machinery. Labeled data collected under specific operating conditions and normal state data collected under different operating conditions are used for model training. To emphasize the spatiotemporal information of the input signal, the input signal is preprocessed using the short-time Fourier transform (STFT). To minimize the distribution mismatch between the source domain and the target domain, we propose a semantic transformation algorithm in the latent space.

For feature extraction, a deep convolutional neural network with an attention mechanism is adopted, and a domain shift algorithm is introduced to match the distribution of data across domains. The results indicate that the proposed method is an effective and promising tool for diagnosing cross-domain defects in gearboxes. The main contributions of this paper are as follows:

A domain transformation method is proposed for cross-domain fault diagnosis in rotating machines with significantly changing operating conditions.

A feature extraction method is proposed that can focus on features related to failures using STFT and attention mechanisms.

Results on a benchmark dataset and a real machine dataset demonstrate the generalization performance of the proposed method.

In the experiments, two types of dataset for a gearbox system, benchmark data and real machine data, are used for validation. Both datasets contain various types of failures collected under different working conditions.

The paper is organized as follows:

Section 2 describes related works.

Section 3 defines the sensing data and provides an overview of the learning model.

Section 4 proposes a deep learning diagnosis method to extract fault-relevant features, together with a domain space shifting method.

Section 5 demonstrates the validity and performance of the proposed approach in two cases: a widely used benchmark dataset and a real equipment dataset.

Section 6 concludes the paper.

2. Related Works

Among the various transfer learning methods, the domain adaptation technique has been widely adopted for fault diagnosis by assuming the same label space for the training and test data [16]. In general, domain adaptation approaches aim to extract domain-invariant features even when the domain shifts. In particular, deep learning-based domain adaptation methods that combine powerful feature learning with transfer learning capabilities have been successfully developed [17,18,19].

To diagnose defects in rolling bearings, a domain adaptation method was proposed that improved the generalized class distance [20]. In addition, a domain adversarial learning framework, the deep convolutional transfer learning network (DCTLN), was proposed, since adversarial training can learn generalized features across domains [21].

Some researchers minimize domain differences between different working conditions through the maximum mean discrepancy (MMD). MMD is a standard distance-based measure for minimizing discrepancies between two datasets [22,23]. In addition, multi-kernel MMD has been proposed [24,25]. Unlike traditional distance-based methods (e.g., Kullback–Leibler divergence), MMD estimates a nonparametric distance and does not require computing an intermediate density of the distributions [26]. An autoencoder has also been used to project data into a shared feature subspace, where the MMD distance is used to minimize the inter-domain distance; sufficient unlabeled target data passed through the autoencoder also contribute to feature learning [27].
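To make the MMD criterion concrete, the following is a minimal sketch of the biased empirical estimate of the squared MMD with a single Gaussian kernel. The function names, the kernel bandwidth `sigma`, and the plain-list data layout are assumptions made for illustration; they are not taken from the cited works, which typically operate on learned deep features.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def mmd_squared(source, target, sigma=1.0):
    """Biased estimate: E[k(s, s')] + E[k(t, t')] - 2 E[k(s, t)]."""

    def mean_kernel(group_a, group_b):
        total = sum(gaussian_kernel(a, b, sigma) for a in group_a for b in group_b)
        return total / (len(group_a) * len(group_b))

    return (
        mean_kernel(source, source)
        + mean_kernel(target, target)
        - 2.0 * mean_kernel(source, target)
    )
```

The estimate is zero when both sample sets coincide and grows as the two sets move apart, which is why it can serve as a training penalty on the features of the two domains.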

However, if the amount of data required for training is small or the data are difficult to obtain, training itself cannot proceed. Moreover, minimizing the MMD distance is not guaranteed to yield a common set of discriminative features for fault diagnosis. To overcome these deficiencies, domain adversarial neural networks (DANNs) have been proposed [28].

A DANN introduces a gradient reversal layer to extract features for which the target domain cannot be distinguished from the source domain. The Adversarial Discriminative Domain Adaptation (ADDA) method extends adversarial domain adaptation to provide a generalized view [29]. The Conditional Domain Adversarial Network (CDAN) method was inspired by the Conditional Generative Adversarial Network (CGAN) and uses multilinear conditioning to capture the cross-covariance between class predictions and feature representations [30]. Adversarial learning, which extracts domain-invariant feature representations through the adversarial training of feature extractors and domain classifiers, can achieve better adaptability than most MMD-based methods [31,32].

However, the issue of model stability in adversarial training remains [33,34]. In theory, adversarial training is expected to reach an equilibrium at which domain-invariant feature representations are extracted. However, if the data distribution varies significantly across domains, it is difficult for the domain adaptation model to converge. When diagnosing failures in rotating machines, the data distribution is greatly affected by the working conditions.

As described above, the distance-based approach and the adversarial learning approach share a common problem: both require sufficient data from the target domain. Therefore, we propose a domain shifting method that uses only the normal data of the target domain, which are relatively easy to collect, to reflect realistic conditions.

3. Preliminaries

Gearbox vibrations have a very complex structure.

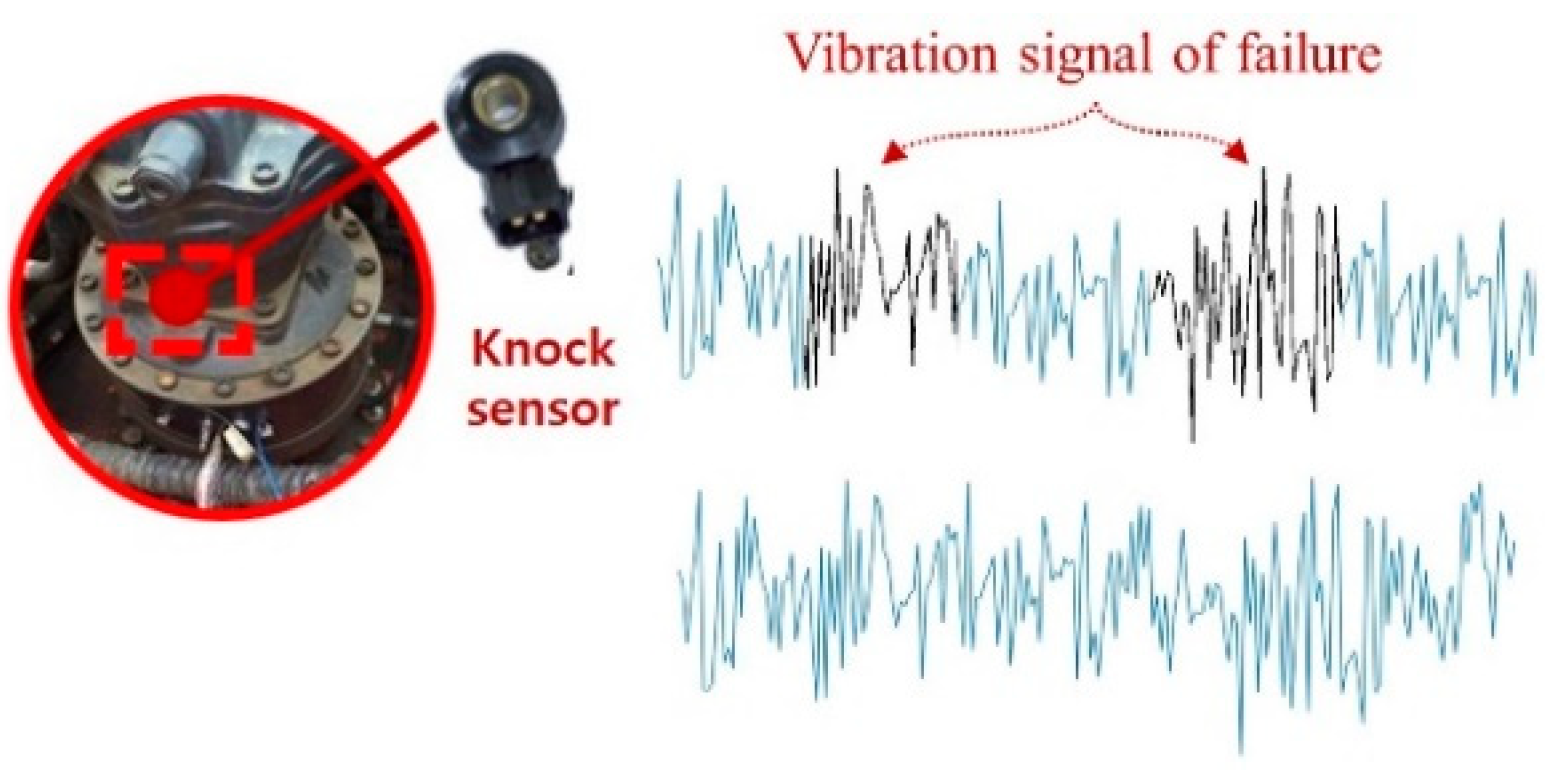

Figure 1 shows the location of a typical sensor used to measure vibrations in heavy equipment. The sensor is mounted outside the gearbox and collects not only gearbox vibration but also vibration (noise) from other equipment assets. We intentionally used a low-cost knock sensor commonly deployed in automotive engines. Such sensors have only a moderate measurement range and resolution, which may introduce noise or missing values. They are typically used in real equipment because they are much cheaper, though less capable, than those used in academic experimental environments. The specifications of the vibration sensor are described in Table 1. Vibration data were collected by the control unit with an analog-to-digital converter.

3.1. Measurement Mechanism for the Vibration Signal

For the dataset of the real equipment used in the experiment, the vibration signal was measured using a knock sensor at a 25 kHz sampling rate. Since there was no tachometer to measure the rotational speed, vibrations were measured at high frequencies. The data measured from the actual equipment were collected under the same conditions running in the field. To verify the failure type, the same type of failure was reproduced as that used for training.

Defect areas are difficult to determine, because defect signals are measured irregularly at various rotation speeds.

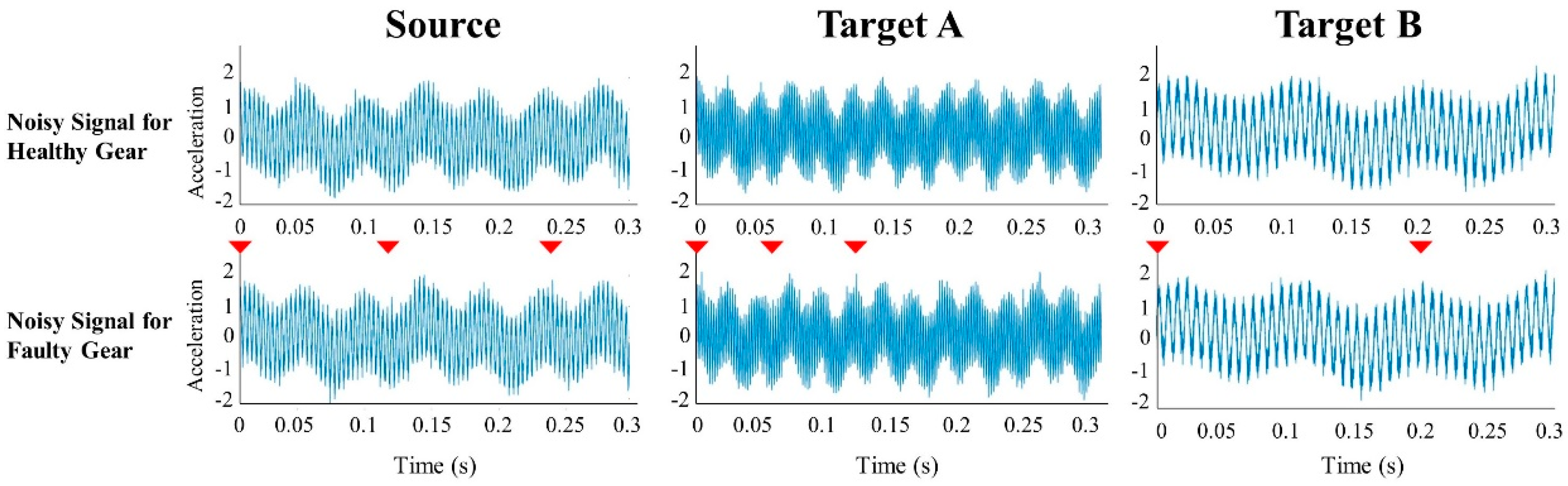

Figure 2 shows the measured vibration signals for each status. Depending on the operating condition, the pattern of the fault signal can change greatly; in the case of a specific fault, it has a subtle change like an impulse signal.

3.2. Overall Idea

We have a labeled dataset extracted from the source domain, normal state data from the target domain, and unlabeled target domain data.

Figure 3 conceptually describes the domain shifting problem. Domain shifting due to noise interference and fluctuations in the working conditions can significantly degrade the classification performance in the target domain.

Generally, applying a trained model to a new environment requires a way of generalizing to the information from the new domain. Recent machine learning research has actively studied distance-based learning between the available datasets to minimize the discrepancy with new data [35]. This distance-based discrepancy learning has been applied successfully in many research tasks such as human activity recognition and person re-identification [36,37].

All the above-mentioned studies assume that enough data for learning can be collected in the target domain. However, this may not be realistic in a real-world setting, because collecting fault data takes a lot of time and money. On the other hand, normal data can be collected more easily from other domains. Therefore, we propose solving the domain mismatch through a learning method that uses only normal data from the target domain. Inspired by the natural language processing technique called "word embedding", we propose a method of moving feature spaces between domains.

We applied a reconstruction-based stacked autoencoder model that represents the input signal in a low-dimensional shared space, so that the feature vectors of the input data can be moved within that space. An autoencoder is a deep learning architecture that can efficiently encode data. The latent space learned by an autoencoder is the one that best compresses and expresses the features of the data; we therefore address the domain adaptation problem by adjusting the latent space.

3.3. Short-Time Fourier Transform (STFT)

The frequency characteristics of a signal can be investigated based on the Fourier series, the Fourier transform, and the discrete Fourier transform (DFT). STFT is used in the frequency tracking of a tacholess system to represent the spectrogram of the signal. The vibration signals of mechanical systems are often non-stationary; hence, tools are needed for analyzing how the frequency content changes over time. The FFT and DFT analyze the entire signal at once, so time-specific information is lost. STFT computes multiple frequency spectra by performing successive DFTs on a windowed signal. It therefore adds a time dimension and, in its standard form, is defined by

$$X(\tau, f) = \int_{-\infty}^{\infty} x(t)\, w(t - \tau)\, e^{-j 2 \pi f t}\, dt$$

where $w(t)$ is the window that moves along the signal $x(t)$. In practice, STFT is used to compute the spectrogram of the signal, a time-frequency map in which the square of the amplitude $|X(\tau, f)|^2$ is plotted over frequency $f$ and time $\tau$. Even though it is a simple tool, there is a trade-off between the time and frequency resolution, which in the standard form of the uncertainty relation are bounded by

$$\Delta t \, \Delta f \geq \frac{1}{4\pi}$$

where $\Delta f$ and $\Delta t$ are the frequency and the time resolutions, respectively. Therefore, striving for a better time resolution reduces the frequency accuracy and vice versa. In this paper, the window size was set to 512 and the overlap size to 128.
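The windowed-DFT procedure described above can be sketched as follows. This is a dependency-free illustration, not the preprocessing code used in this work: the function names, the choice of a Hann window, and the naive DFT (a real pipeline would use an FFT routine) are assumptions. With the stated window of 512 samples and overlap of 128, the hop between frames is 384 samples; for a 25 kHz signal that would correspond to a bin spacing of about 48.8 Hz (25,000/512) and a frame step of about 15.4 ms (384/25,000).

```python
import cmath
import math


def hann_window(size):
    """Symmetric Hann window of the given length (size must be >= 2)."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (size - 1)) for i in range(size)]


def stft_spectrogram(signal, window_size=512, overlap=128):
    """Return a list of frames; each frame holds |X(tau, f)|^2 for the
    non-negative frequency bins 0 .. window_size // 2."""
    hop = window_size - overlap
    window = hann_window(window_size)
    n_bins = window_size // 2 + 1
    frames = []
    for start in range(0, len(signal) - window_size + 1, hop):
        segment = [signal[start + i] * window[i] for i in range(window_size)]
        power = []
        for k in range(n_bins):
            # Naive DFT of the windowed segment at frequency bin k.
            coefficient = sum(
                segment[i] * cmath.exp(-2j * math.pi * k * i / window_size)
                for i in range(window_size)
            )
            power.append(abs(coefficient) ** 2)
        frames.append(power)
    return frames
```

Calling `stft_spectrogram(samples)` with the default arguments uses the window and overlap sizes given in the text; smaller values are convenient for quick experiments because the naive DFT is slow for long signals.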

3.4. Attention Mechanism

An attention mechanism was originally proposed to learn the alignment between source and target tokens and thereby improve neural machine translation performance [38]. Attention mechanisms have mainly been used in the language and image fields to find the words and image regions on which to focus, and several studies have used basic and modified attention mechanisms for time series. An LSTM with an attention mechanism has been proposed for multivariate time series [39]. In its attention transition functions, the features extracted by the prediction model are collected in a matrix, α is the vector of attention weights for those features, and the output of the attentive neural network is the weighted feature vector; the functions also involve a vector of ones and the embedding dimension of the attention mechanism.

4. Proposed Model

We extracted time and space information from the original signal through STFT pre-processing and classified the fault type from the input signal using an autoencoder with an attention mechanism and a 1D CNN LSTM classifier. In addition, we used feature space transformation for domain adaptation to improve the cross-domain classification performance. Bearing vibration signals collected from a knock sensor are usually 1D, so a 1D CNN is well suited to processing them. In addition, most physical failure signals are periodic impulse signals generated by specific gears or bearings [40]. Therefore, the learning model is designed to localize the impulse signal with the 1D CNN and extract contextual features with the LSTM.

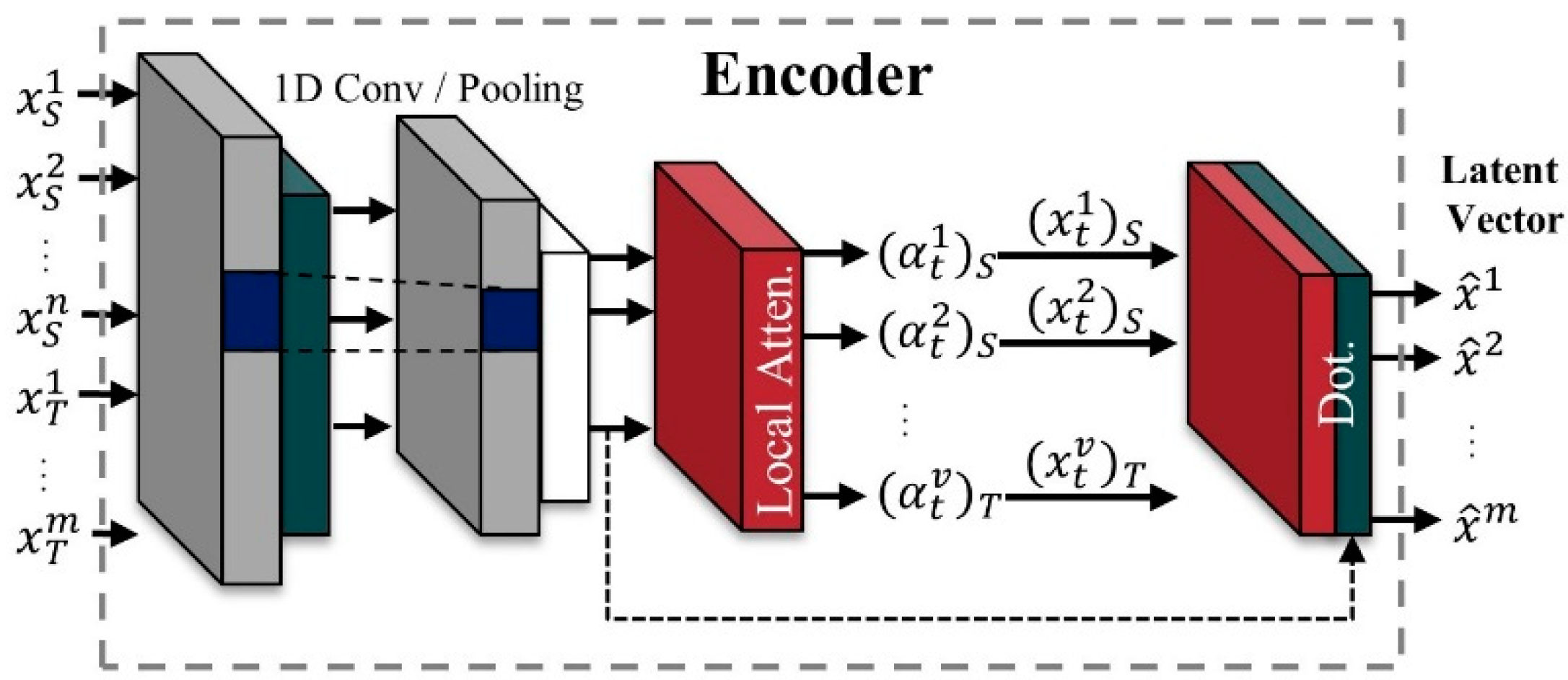

Figure 4 shows the architecture of the learning and domain transformation algorithm. The whole model consists of an attentional autoencoder for latent vector representation and a 1D CNN LSTM-based classifier for classifying failure types from latent vectors. In the inference stage, a latent vector shift stage is added to transform the input data.

4.1. Understanding Latent Space

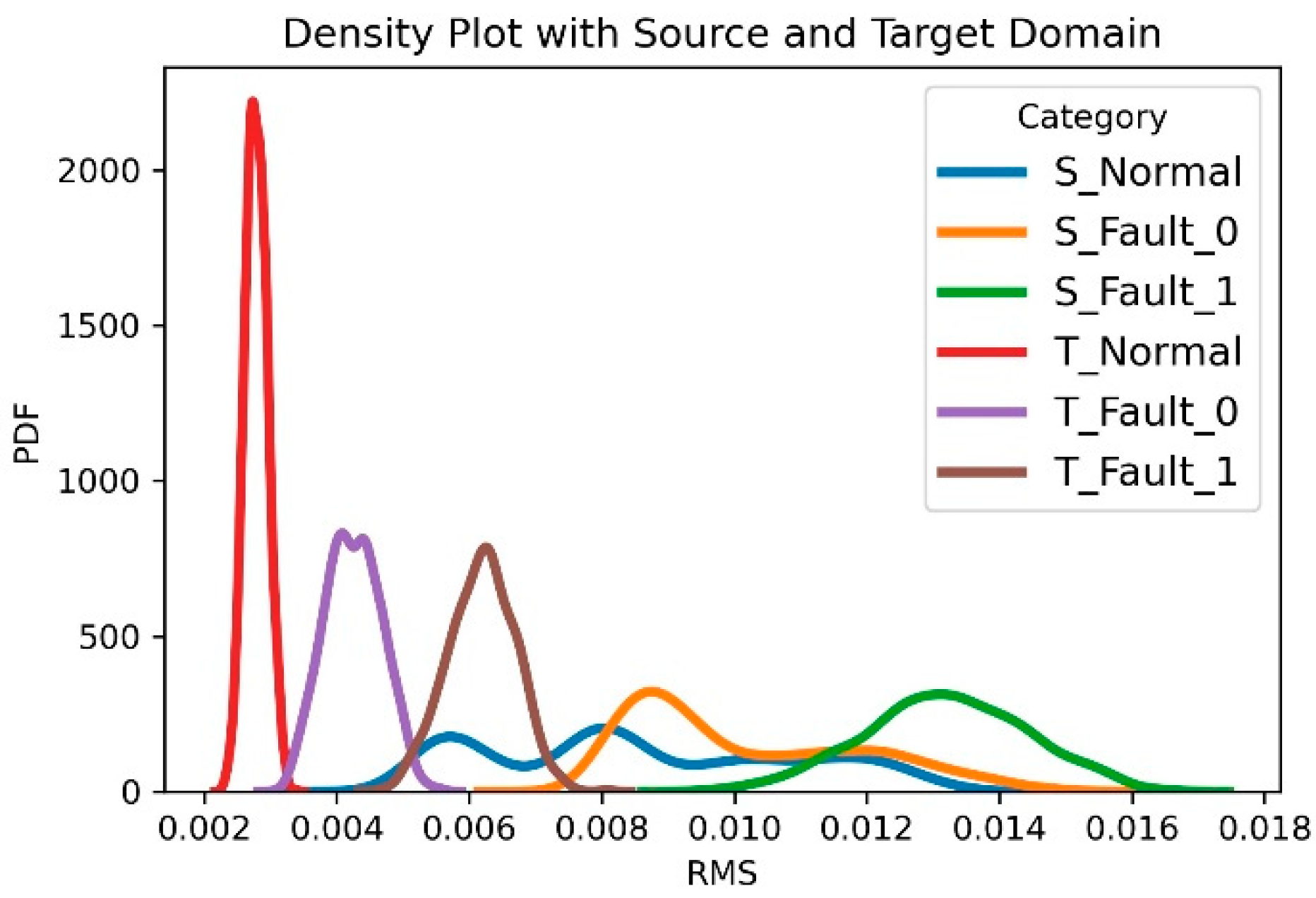

Figure 5 shows the data distribution of three statuses under two different working conditions. The distributions of the source domain and the target domain are significantly different. However, the arrangement of the per-category distributions is similar in both domains. This suggests that a model trained on the source domain data encodes the target domain data around the most similar features, so the arrangement of the target domain does not deviate from that of the source domain.

Accordingly, the weights of a deep neural network can be divided into two categories: base projection and specific projection. The weights of the base projection extract base features from the input data. Although the base features differ between domains, they are similar basic components of the data distribution. Conversely, the weights of the specific projection learn the representative features of each category. Because of the base projection weights, features extracted from newly collected data follow a similar shape. We try to make the feature-space distribution of newly collected data as similar as possible to that of the previously learned data. Therefore, by calculating from the normal data a specific projection weight that captures the difference between the domains, we can reduce the difference between the two domains by moving one onto the other [41].

4.2. Encoder with Spatial Attention

We propose using an autoencoder with an attention mechanism to represent, in the latent space, features that focus on failure-relevant signals. A spatial attention layer is inserted between each layer in the encoder, as shown in Figure 6. Each attention score is applied in the next layer using its dot product with the input vector. The autoencoder is an unsupervised model that learns a data representation by generating outputs that are similar to its inputs. Inputs are encoded into the latent representation by a dense layer.

Finally, the latent representation is passed to the decoder, which restores it to the same feature dimension as the original input. Autoencoder models are generally well known for their use in noise cancelling and data interpolation [42,43]. Therefore, by leveraging these two properties of an autoencoder, we devised a more powerful feature representation method that incorporates attention mechanisms.

Inspired by [44], average pooling and max pooling are performed along the channel dimension, and a 1 × 1 convolution is then applied to the pooled feature representation, followed by a softmax activation function. Here, the role of the local attention is to localize the fault signals in the raw signal. In addition, as described above, a context vector is the output obtained from the dot-product attention score function using the input signal. Using the proposed input attention mechanism, the encoder can selectively focus on certain input series instead of treating all input series equally.
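The pooling, 1 × 1 convolution, and softmax steps described above can be illustrated with a small dependency-free sketch. It is only one possible reading of the text: the function name, the scalar weights `w_avg`, `w_max`, and `bias` (standing in for a learned 1 × 1 convolution over the two pooled maps), and the list-of-lists layout are hypothetical.

```python
import math


def spatial_attention(features, w_avg=1.0, w_max=1.0, bias=0.0):
    """features: list of positions, each a list of channel values.
    Returns (attention weights over positions, re-weighted features)."""
    # Pool across the channel dimension at every position.
    avg_pool = [sum(channels) / len(channels) for channels in features]
    max_pool = [max(channels) for channels in features]
    # A 1 x 1 convolution over the two pooled maps is a per-position
    # linear combination of the pooled values.
    scores = [w_avg * a + w_max * m + bias for a, m in zip(avg_pool, max_pool)]
    # Softmax over positions (shifted by the maximum for numerical stability).
    peak = max(scores)
    exponentials = [math.exp(s - peak) for s in scores]
    total = sum(exponentials)
    weights = [e / total for e in exponentials]
    # Apply each position's score to the channel values at that position.
    attended = [[w * c for c in channels] for w, channels in zip(weights, features)]
    return weights, attended
```

In this sketch an impulse-like position with large channel values receives most of the attention mass, so the following layer sees that position emphasized relative to the background.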

The decoder reconstructs the latent vector into a signal similar to the input, as shown in Figure 7. The decoding process operates in the reverse order of the encoding. The attention layer is not included in the decoder, for the purpose of stable learning.

The reconstruction error between the input and the decoder output, Equation (6), is used as the training loss. The output of the dot product of the attention score and the input layer emphasizes the part on which the model should focus. In addition, Algorithm 1 describes the training procedure of the autoencoder.

| Algorithm 1 Autoencoder Training |
|---|
| Input: input data, number of epochs, learning rate, number of batches T; parameters of the autoencoder. |
| Output: trained model E, D; latent vector. |
| begin |
| Initialize parameters for E, D |
| for each epoch do |
| # extract latent vector and reconstruction at each timestep |
| for each batch in 1, ..., T do |
| Encode the batch with E to obtain the latent vector |
| Decode the latent vector with D to obtain the reconstruction |
| Compute the reconstruction loss according to (6) |
| Compute the gradient of the loss with respect to the parameters |
| for each parameter do |
| Update the parameter using its gradient and the learning rate |
| end for |
| end for |
| end for |
| end |
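The loop structure of Algorithm 1 (encode, decode, reconstruction loss, gradient, parameter update) can be sketched with a deliberately tiny stand-in model. The code below is an assumption-laden illustration, not the proposed network: it uses a linear encoder and decoder with a one-dimensional latent value, a mean squared reconstruction error, per-sample gradient descent, and a fixed initial weight of 0.1; the function name and default hyperparameters are hypothetical.

```python
def train_linear_autoencoder(data, epochs=200, lr=0.01):
    """Train a linear autoencoder with a scalar latent value.
    Returns (encoder weights, decoder weights, mean loss per epoch)."""
    dim = len(data[0])
    encoder = [0.1] * dim  # E: input vector -> scalar latent value
    decoder = [0.1] * dim  # D: scalar latent value -> reconstruction
    history = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x in data:
            latent = sum(w * xi for w, xi in zip(encoder, x))
            reconstruction = [w * latent for w in decoder]
            error = [r - xi for r, xi in zip(reconstruction, x)]
            epoch_loss += sum(e * e for e in error) / dim
            # Gradients of the mean squared reconstruction error.
            grad_output = [2.0 * e / dim for e in error]
            grad_latent = sum(g * w for g, w in zip(grad_output, decoder))
            decoder = [w - lr * g * latent for w, g in zip(decoder, grad_output)]
            encoder = [w - lr * grad_latent * xi for w, xi in zip(encoder, x)]
        history.append(epoch_loss / len(data))
    return encoder, decoder, history
```

The returned `history` makes it easy to check that the reconstruction loss falls over the epochs; replacing the linear maps with the attention encoder and the decoder would keep the same loop.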

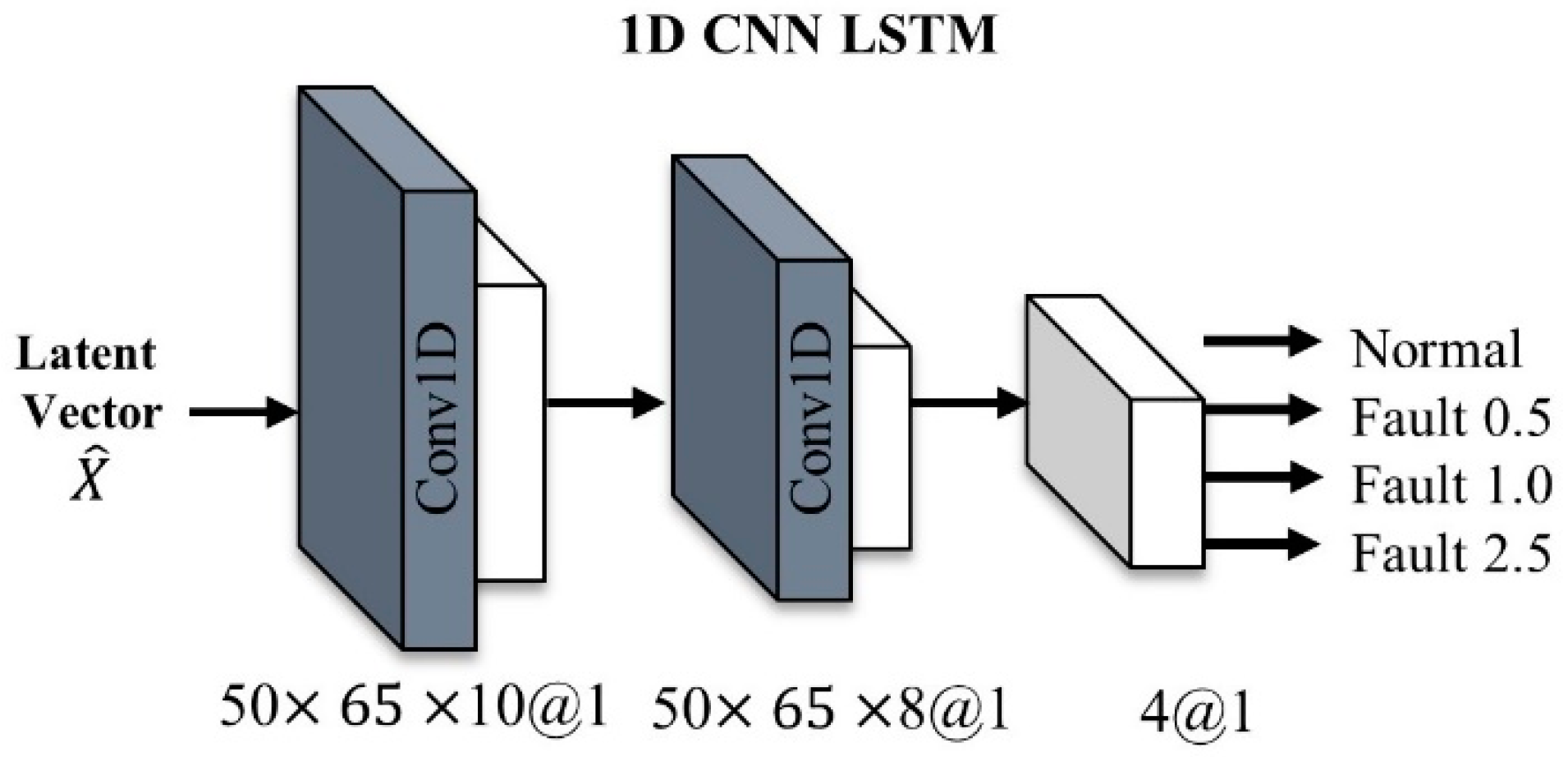

4.3. Classifier Based on 1D CNN LSTM

Figure 8 shows the conceptual architecture of the classifier. The classifier adopts the 1D CNN LSTM structure to learn contextual features. The architecture consists of 10 layers, including two convolutional layers, two 1D CNN LSTM layers with kernel sizes of 10 and 8, respectively, two batch normalization layers with dropout, and three dense layers.

The latent vector taken as input after data preprocessing has shape (None, 50, 65, 8), and the first layer changes the output shape to (None, 50, 65, 10). The second layer is the CNN LSTM layer; it has 64 neurons, and its output shape is (None, 50, 65, 64). The next layers consist of batch normalization and dropout, and the above layers are repeated twice. The main purpose of the dropout layer is to reduce over-fitting, and a softmax layer is used at the end for classification.

4.4. Domain Transformation on Latent Space for Inference

In this section, we describe how to use the Neural Linear Transformation (NLT) model to perform “domain transformation”. The goal of domain transformation is to transfer the target domain to the source domain distribution so that the trained model can be used successfully. In the word embedding area, vector representations are commonly used for word analogies, the best-known example being the arithmetic king − man + woman ≈ queen.

According to this vector operation, the vector representation of the word “queen” can be obtained if those of the words “king”, “woman”, and “man” are known.

Figure 9 shows the 2D representation of the semantic analogies.

Inspired by word inference, we propose an algorithm to allow for transformation between domains.

Here, the encoded vectors of the normal and abnormal states in the source domain and the encoded vectors of the normal and abnormal states in the target domain are all obtained through the proposed autoencoder and are therefore represented in the same latent space. "Abnormal" denotes the dataset covering all failure statuses, one for each failure case. To apply the concept of transformation by vector arithmetic, as in the word inference above, a feature expression method capable of expressing the source and target data in a specific space is required. Therefore, our proposal aims to move the data of the target domain by constructing a latent space containing the normal state data of the target domain through an attentional autoencoder structure.
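The analogy and its domain counterpart can be written as vector arithmetic. The block below is a sketch in assumed notation, since the symbols are not fixed by the surrounding text: $v$ denotes a word embedding, and $z_s^{n}$, $z_s^{a}$, $z_t^{n}$, $z_t^{a}$ denote the encoded normal ($n$) and abnormal ($a$) states in the source ($s$) and target ($t$) domains.

```latex
\begin{align*}
v_{\text{queen}} &\approx v_{\text{king}} - v_{\text{man}} + v_{\text{woman}} \\
z_s^{a} &\approx z_t^{a} - z_t^{n} + z_s^{n}
\end{align*}
```

Under this reading, the offset $z_s^{n} - z_t^{n}$ between the normal states of the two domains plays the role that the offset between "woman" and "man" plays in the word analogy.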

In Figure 9, the vector operation for moving according to the operating condition (domain) of the machine is given by Equation (8). However, since the abnormal-state vectors of the target domain are not known in advance, a domain shifting algorithm is required to transfer target domain data to the source domain, which is derived as shown in Equation (9).

The target domain data are treated as unlabeled because we do not know the state of the target domain. Moving the target domain data to the source domain area of the learned latent space is done through the operation in Equation (9), even though the input does not come from the source domain dataset. According to (9), the input is moved to the normal or the abnormal region of the source domain depending on whether it is normal or abnormal.

The domain shift function takes input data that follow the distribution of the target domain and returns transferred data that follow the distribution of the source domain. It is built from average values of the encoded data: the difference between the averages for the two domains is a vector representing the direction from the target domain to the source domain. Adding this vector shifts the input data from the target domain to the source domain, yielding the shifted latent vector that follows the distribution of the source domain.
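A minimal sketch of such a shift in latent space is given below. It assumes, as one reading of the text, that the direction vector is the difference between the mean encoded normal data of the source domain and the mean encoded normal data of the target domain; the function names and the plain-list representation of latent vectors are hypothetical.

```python
def mean_vector(vectors):
    """Element-wise average of a list of equal-length latent vectors."""
    count = len(vectors)
    return [sum(v[i] for v in vectors) / count for i in range(len(vectors[0]))]


def fit_shift(source_normal_latents, target_normal_latents):
    """Direction from the target domain to the source domain, estimated
    from the normal-state latent vectors of both domains (assumption)."""
    source_mean = mean_vector(source_normal_latents)
    target_mean = mean_vector(target_normal_latents)
    return [s - t for s, t in zip(source_mean, target_mean)]


def shift_to_source(latent, shift):
    """Move one target-domain latent vector toward the source domain."""
    return [z + d for z, d in zip(latent, shift)]
```

At inference time, `fit_shift` would be computed once from normal data, and `shift_to_source` would be applied to every encoded target sample before it is passed to the classifier trained on the source domain.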

5. Experiments

To verify the performance of the proposed technique, experiments were conducted with two fault diagnosis cases. The first case is an open benchmark data case for rolling element bearing diagnostics. The second was for the fault diagnosis of a rotating gearbox of heavy equipment.

We compared the proposed model with state-of-the-art classification and CNN architectures, including the Deep Convolutional Transfer Learning Network (DCTLN) [21], Deep Convolutional Neural Networks with Wide First-layer Kernels (WDCNN) [45], Domain-Adversarial Training of Neural Networks (DANN) [28], and Discriminative Adversarial Domain Adaptation (DADA) [46]. For DANN [28] and DADA [46], the data format is 2D, so the Case 2 data were modified for 2D convolution. The detailed parameters for each experiment are shown in Table 2. To verify the feasibility and practicality of the heavy equipment failure diagnosis, the experiment for Case 2 was set up under the actual operating conditions, including noise and other environmental factors. Table 3 shows the parameters of the encoder. We repeated each experiment five times and report the average and the standard deviation of the accuracy.

5.1. Case Study 1: CWRU Dataset

The Case Western Reserve University (CWRU) bearing dataset is a benchmark dataset collected under various operating conditions and was used to verify the performance of the proposed method.

In particular, the fault data of the 12 k drive-end bearing were selected as experimental data [47]. There are four bearing conditions: normal, ball fault, inner race fault, and outer race fault. Each fault type is available in three sizes (0.007, 0.014, and 0.021 inches), so there are 10 class labels in total. Each label covers three driving conditions with motor speeds of 1772, 1750, and 1730 RPM (1, 2, and 3 hp, respectively). Each sample was extracted from a single vibration signal, as shown in Figure 10.

We used 70% of the vibration signal as training samples and the rest as test samples. As shown in Table 4, each dataset was collected under a different operating condition, with loads of 1, 2, and 3 hp, respectively. Each dataset contained 12,540 training samples and 3140 test samples. As shown in Table 5, the CWRU dataset has a total of 10 classes, and each fault is categorized by its location and size. One dataset is defined as the source domain, and one of the other datasets is defined as the target domain.

The proposed model performed similarly to or better than the state-of-the-art methods in most cases, as shown in Table 6. The results also revealed an interesting pattern: when the model was trained on data from a device rotating at high speed, its performance on low-speed data was quite satisfactory. However, when the model was trained on low-speed data and evaluated on high-speed data, reliability was slightly lower.

5.2. Case Study 2: Real Machine Dataset

Unlike the CWRU dataset, the equipment data were collected from heavy equipment actually operating under various conditions (speed, load, etc.) and exposed to different noise environments, taking into consideration its complex and large structures. By doing so, we demonstrated that our model remains robust even in a wide range of speeds and in environments with high noise levels. The corresponding data does not contain accurate speed information (measured without using a tachometer), and the tests were carried out at the speeds of about 100%, 75%, 50%, and 25% based on user manipulation. In addition, data were collected for some classes but not for all classes.

Table 7 shows the operating conditions and fault classes of the collected data. One of each dataset is defined as the source data, and one of the other datasets is defined as the target domain.

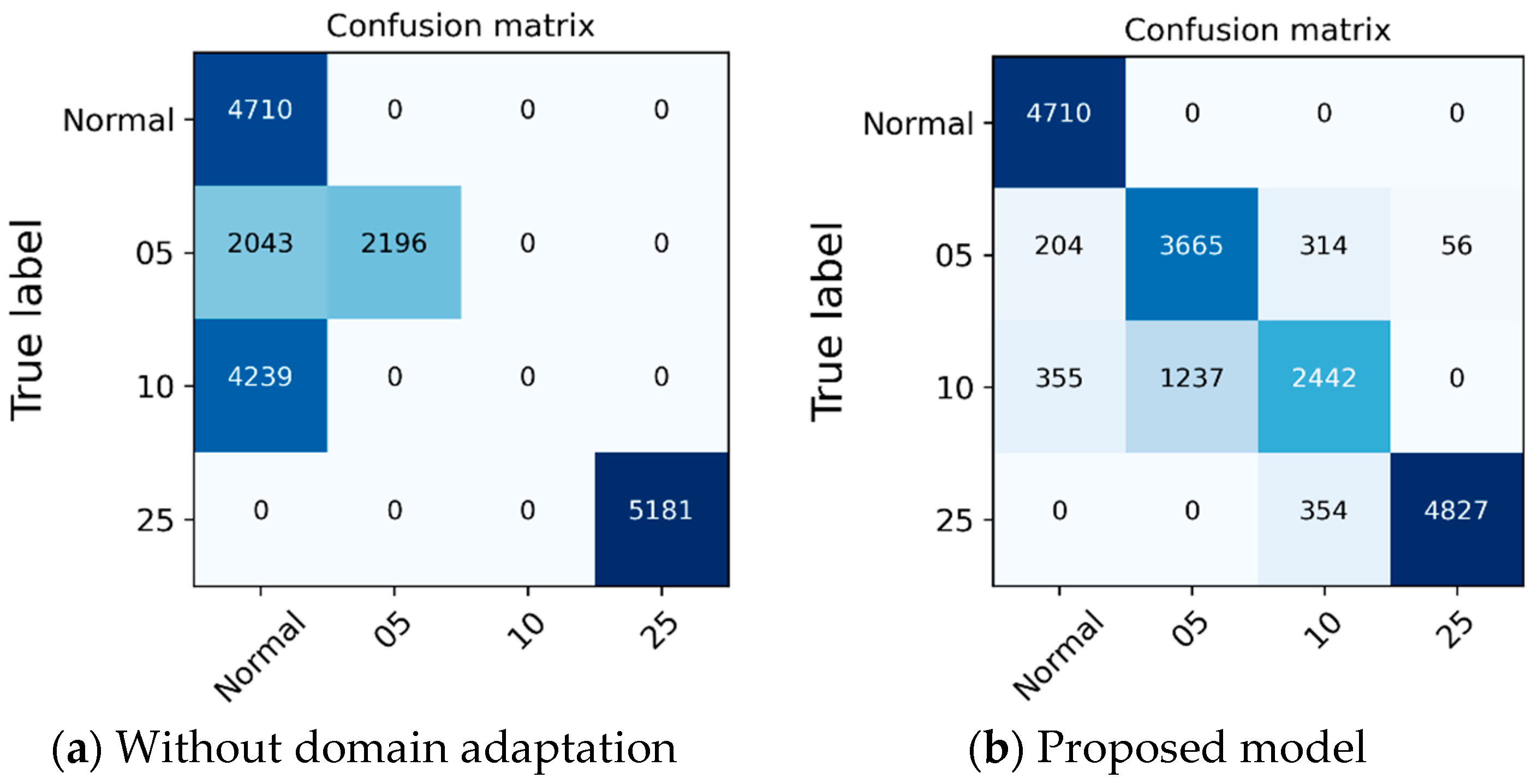

Data from the new working condition, measured on the actual machine, were used throughout the experiment. The proposed model performed better than the state-of-the-art methods in most cases, as shown in Table 8. The confusion matrix for the experimental results is shown in Figure 11. An accuracy of approximately 79% to 83% was achieved for the target domain data. Moreover, we confirmed that the smallest fault (Abnormal_0.5/0.5 mm) was recognized and that the abnormal states were well distinguished.

In all cases, the proposed model outperformed other models and proved to be an effective technique for diagnosing failures in a rotating body.

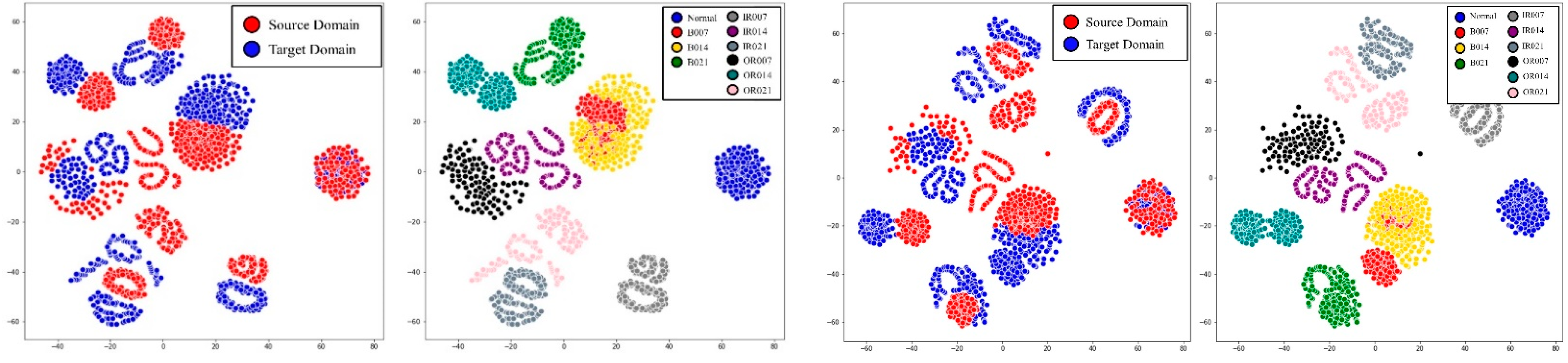

Figure 12 compares the effect of the proposed model through t-SNE results for the A→B transformation case of the CWRU dataset. Before the domain transformation is applied, the source domain and the target domain are clearly separated; after domain transformation, the failure data of the target domain, which were not used for learning, share the distribution area of the source domain. Figure 13 shows that the domain distributions also move close to each other for the actual equipment data in the A→B transformation case. However, for the green area in Figure 13b, the distance does not appear to be close. Considering that the fault classification performance is high, we judge that this class still lies in a region where the fault can be identified. In addition, since the CWRU data were acquired through limited experiments in a laboratory environment, the discrepancy between the source and target domain data is not large. In the real equipment case, however, the distances between the distributions are unpredictable and markedly different. Nevertheless, it is encouraging that the proposed model can achieve the desired performance.

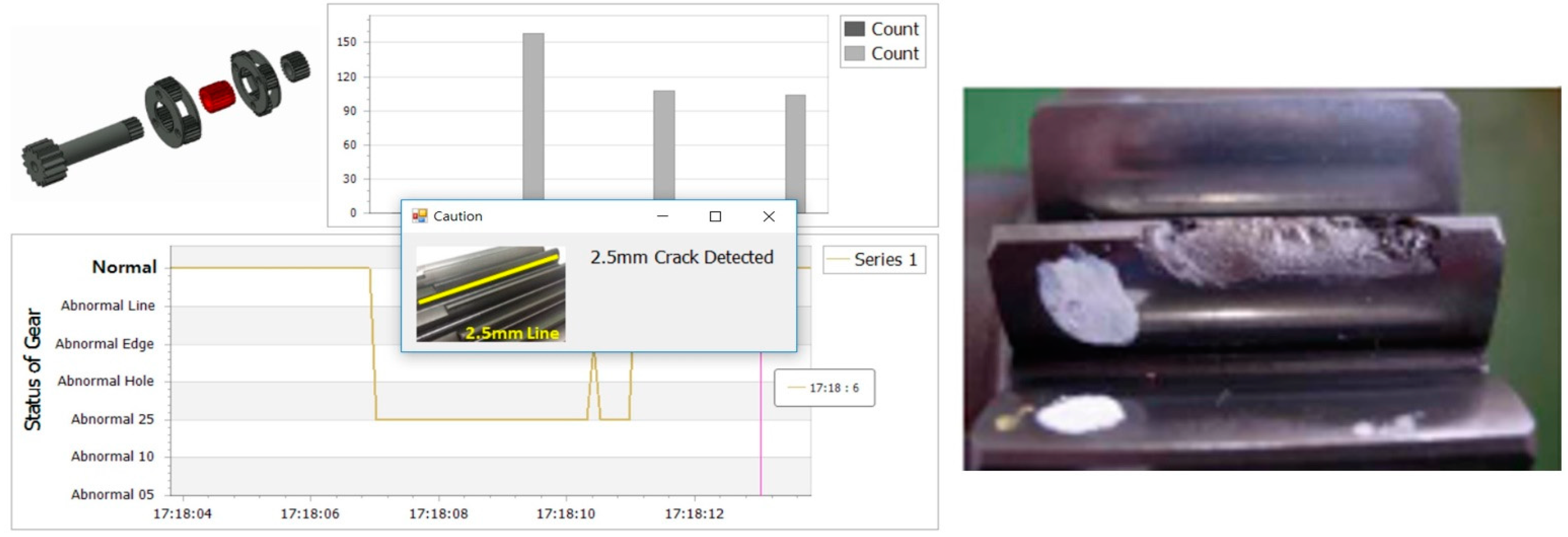

5.3. Evaluation on a Real Machine

We used data collected from the actual equipment to verify reliability of the proposed learning model. Since we did not know the real operating conditions, the raw data looked like unseen data that contained various trends.

Figure 14 shows a program developed to evaluate the data received at 1500 Hz in real time and to diagnose the most frequently occurring actual fault conditions. The system used occurrence count as a criterion to minimize false alarms.
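The occurrence-count criterion can be illustrated with a short sketch. The text does not give the window length, the threshold, or the label names, so the class name, `window`, `min_count`, and `normal_label` below are all assumed values chosen for illustration.

```python
from collections import Counter, deque


class OccurrenceCountAlarm:
    """Report a fault only after it has been predicted at least
    min_count times within the most recent window of predictions."""

    def __init__(self, window=30, min_count=20, normal_label="normal"):
        self.recent = deque(maxlen=window)
        self.min_count = min_count
        self.normal_label = normal_label

    def update(self, predicted_label):
        """Add one classifier output; return a fault label or None."""
        self.recent.append(predicted_label)
        fault_counts = Counter(
            label for label in self.recent if label != self.normal_label
        )
        if not fault_counts:
            return None
        label, count = fault_counts.most_common(1)[0]
        return label if count >= self.min_count else None
```

Because isolated misclassifications never reach the threshold, a rule of this kind trades a short detection delay for fewer false alarms.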

We physically disassembled the gearbox of the machine and compared the failure type estimated by the program with the actual failure type; the two matched. Therefore, the proposed system is suitable for practical applications and has great significance in the large-scale mining machinery industry.

6. Conclusions

This paper presents a domain transformation method for diagnosing faults in rotating machines operating under various operating conditions. The proposed algorithm performs domain transformation using only the normal data of the target domain, addressing real problems in which failure data are difficult to obtain under various conditions. An autoencoder with an attention mechanism was applied to extract features containing relevant fault information, and a new latent vector transformation method inspired by word embedding was proposed.

The proposed model was verified with widely used public data and data collected from real equipment and showed an accuracy above 83%, resulting in a significant performance improvement over the existing method. In addition, the proposed model was mounted on an embedded board and verified under actual equipment operation, demonstrating that it can effectively diagnose faults in a new environment. Attempts to apply the system to monitor actual excavator operation have been successful, and the system accurately identified existing defect cases without prior knowledge. Therefore, the proposed system is suitable for practical applications and has great significance in the large-scale mining machinery industry.