Abstract

Unmanned ground vehicles (UGVs) have great potential in the application of both civilian and military fields, and have become the focus of research in many countries. Environmental perception technology is the foundation of UGVs, which is of great significance to achieve a safer and more efficient performance. This article firstly introduces commonly used sensors for vehicle detection, lists their application scenarios and compares the strengths and weakness of different sensors. Secondly, related works about one of the most important aspects of environmental perception technology—vehicle detection—are reviewed and compared in detail in terms of different sensors. Thirdly, several simulation platforms related to UGVs are presented for facilitating simulation testing of vehicle detection algorithms. In addition, some datasets about UGVs are summarized to achieve the verification of vehicle detection algorithms in practical application. Finally, promising research topics in the future study of vehicle detection technology for UGVs are discussed in detail.

1. Introduction

The unmanned ground vehicle (UGV) is a comprehensive intelligent system that integrates environmental perception, location, navigation, path planning, decision-making and motion control [1]. It combines high technologies including computer science, data fusion, machine vision, deep learning, etc., to satisfy actual needs to achieve predetermined goals [2].

In the field of civil application, UGVs are mainly embodied in autonomous driving. High intelligent driver models can completely or partially replace the driver’s active control [3,4,5]. Moreover, UGVs with sensors can easily act as “probe vehicles” and perform traffic sensing to achieve better information sharing with other agents in intelligent transport systems [6]. Thus, it has great potential in reducing traffic accidents and alleviating traffic congestion. In the field of military application, it is competent in tasks such as acquiring intelligence, monitoring and reconnaissance, transportation and logistics, demining and placement of improvised explosive devices, providing fire support, communication transfer, and medical transfer on the battlefield [7], which can effectively assist troops in combat operations.

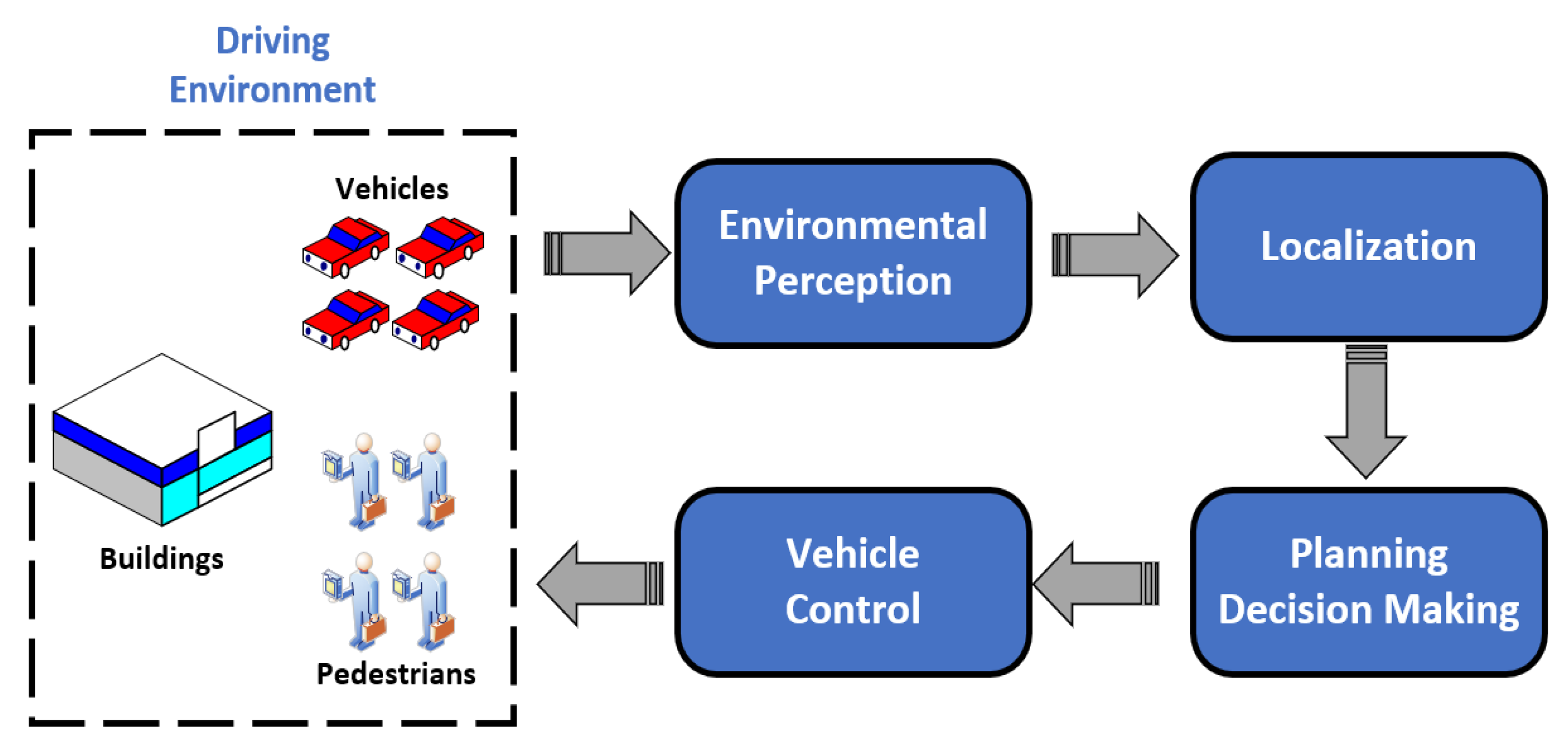

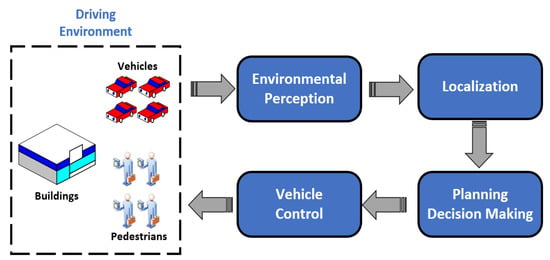

The overall technical framework for UGVs is shown in Figure 1. It is obvious that environmental perception is an extremely important technology for UGVs, including the perception of the external environment and the state estimation of the vehicle itself. An environmental perception system with high-precision is the basis for UGVs to drive safely and perform their duties efficiently. Environmental perception for UGVs requires various sensors such as Lidar, monocular camera and millimeter-wave radar to collect environmental information as input for planning, decision making and motion controlling system.

Figure 1.

Technical framework for UGVs.

Environment perception technology includes simultaneous localization and mapping (SLAM), semantic segmentation, vehicle detection, pedestrian detection, road detection and many other aspects. Among various technologies, as vehicles are the most numerous and diverse targets in the driving environment, how to correctly identify vehicles has become a research hotspot for UGVs [8]. In the civil field, the correct detection of road vehicles can reduce traffic accidents, build a more complete ADAS [9,10] and achieve better integration with driver model [11,12], while in the field of military, the correct detection of military vehicle targets is of great significance to the battlefield reconnaissance, threat assessment and accurate attack in modern warfare [13].

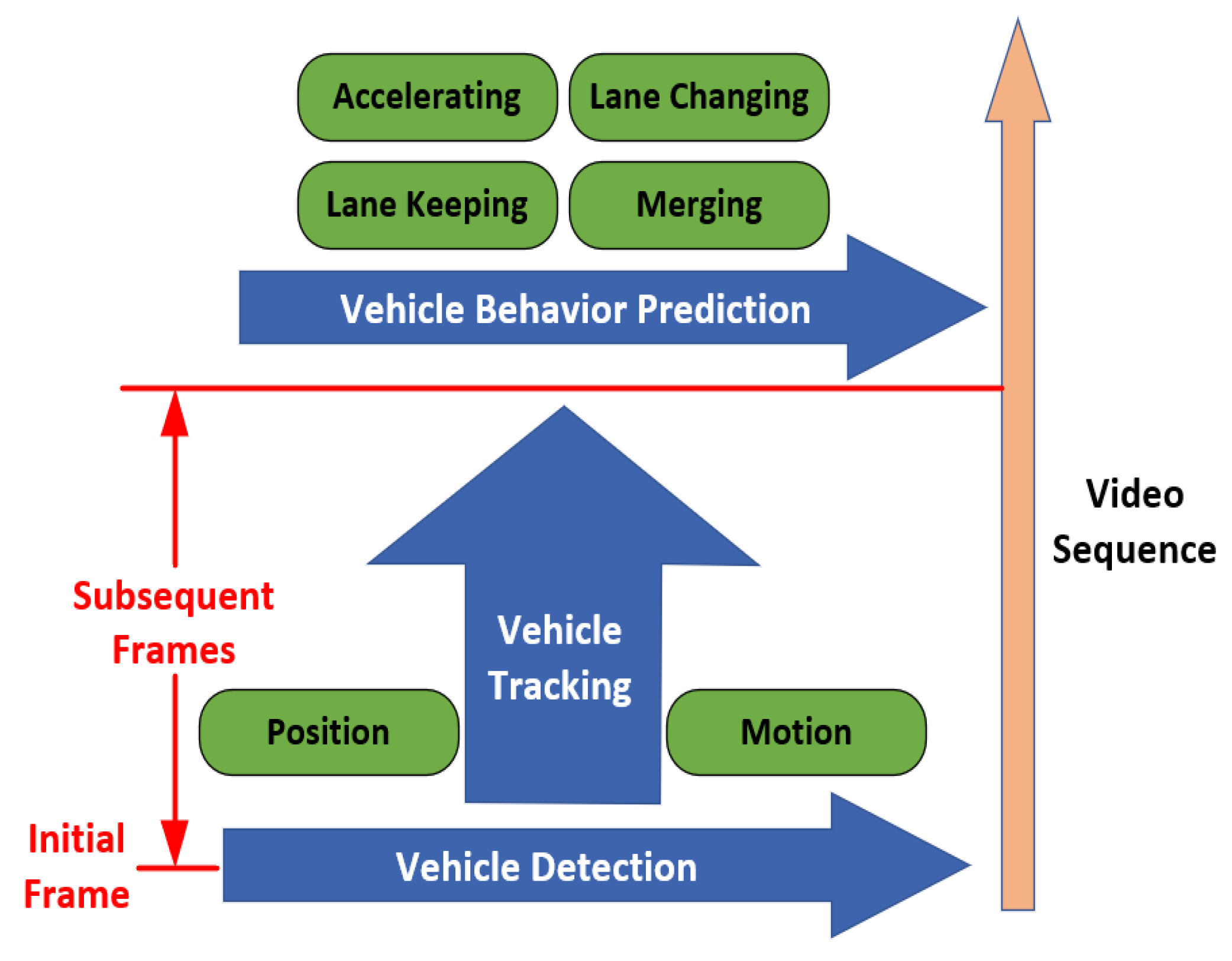

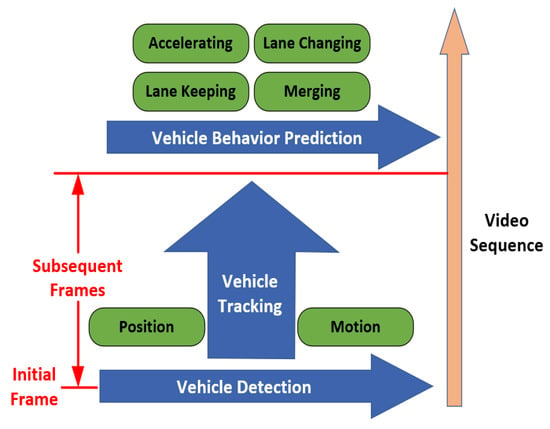

The complete framework of vehicle recognition in UGVs autonomous driving system is portrayed in Figure 2. Generally, vehicle detection is used to extract vehicle targets in a single frame of an image, vehicle tracking aims to reidentify positions of the vehicles in subsequent frames, vehicle behavior prediction refers to characterizing vehicles’ behavior basing on detection and tracking in order to make a better decision for ego vehicle [14]. For tracking technology, readers can refer to [15,16], while for vehicle behavior prediction, [17] presented a brief review on deep-learning-based methods. This review paper focuses on the vehicle detection component among the complete vehicle recognition process, summarizes and discusses related research on vehicle detection technology with sensors as the main line.

Figure 2.

The overall framework of vehicle recognition technology for unmanned ground vehicles (UGVs).

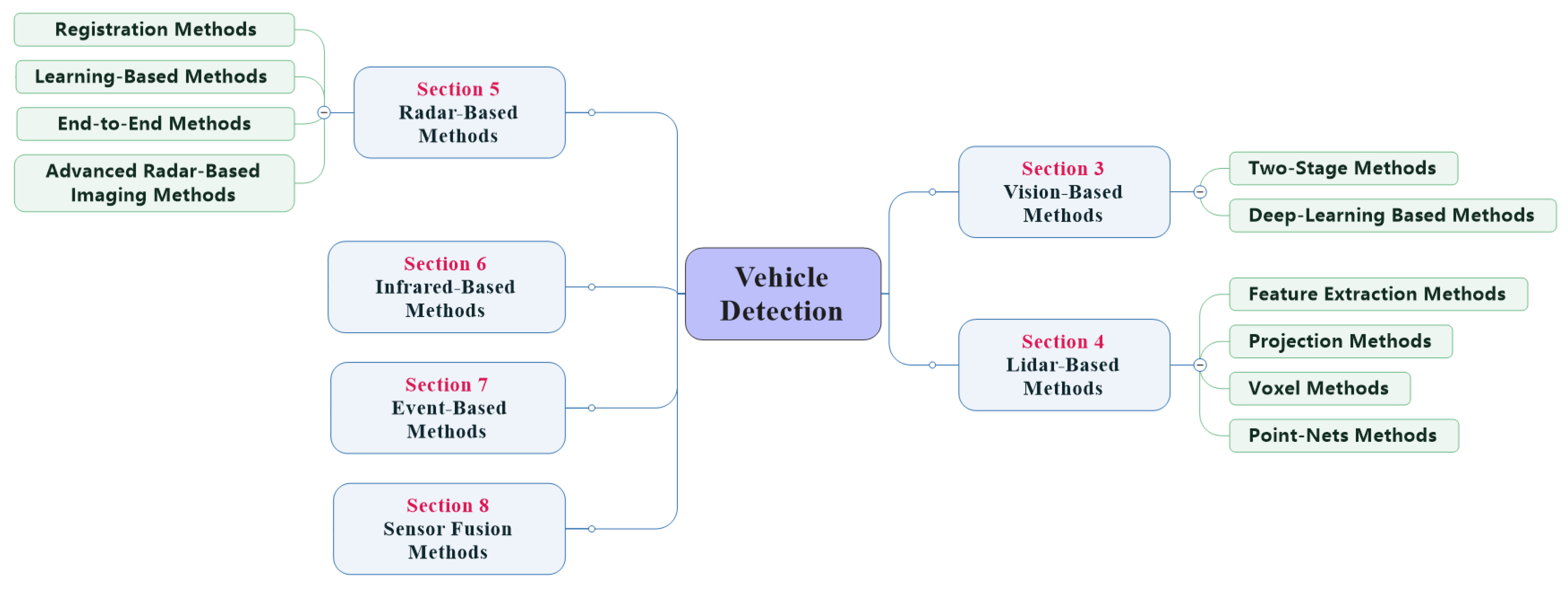

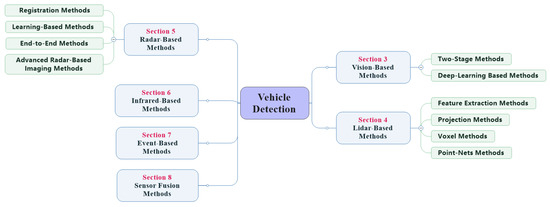

This article is organized as followed. Section 2 introduces the commonly used sensors on UGVs and compares the pros and cons of different sensors under different application scenarios. Section 3, Section 4, Section 5, Section 6, Section 7 and Section 8 systematically summarizes and compares the research works related to vehicle detection using different sensors, the structure of the vehicle detection overview is illustrated in Figure 3. Section 9 introduces the simulation platform related to UGVs, which is convenient for simulation tests of the vehicle detection algorithm. Section 10 introduces the datasets to verify the actual effect of the vehicle detection algorithm. Section 11 summarizes and looks forward to the research focus and direction of vehicle detection technology.

Figure 3.

Structure of vehicle detection algorithm overview in this survey.

2. Sensors for Vehicle Detection

The operation of UGVs requires a persistent collection of environmental information, and the efficient collection of environmental information relies on high-precision and high-reliability sensors. Therefore, sensors are crucial for the efficient work of UGVs. They can be divided into two categories: Exteroceptive Sensors (ESs) and Proprioceptive Sensors (PSs) according to the source of collected information.

ESs are mainly used to collect external environmental information, specifically vehicle detection, pedestrian detection, road detection, semantic segmentation, commonly used ESs include Lidar, millimeter-wave radar, cameras, ultrasonic. PSs are mainly used to collect real-time information about the platform itself, such as vehicle speed, acceleration, attitude angle, wheel speed, and position, to ensure real-time state estimation of UGV itself, common PSs include GNSS, and IMU.

Readers can refer to [18] for detailed information on different sensors. This section mainly introduces ESs that have the potential for vehicle detection. ESs can be further divided into two types: active sensors and passive sensors. The active sensors discussed in this section include Lidar, radar, and ultrasonic, while passive sensors include monocular cameras, stereo cameras, omni-direction cameras, event cameras and infrared cameras. Readers can refer to Table 1 for the comparison of different sensors.

Table 1.

Information for Different Exteroceptive Sensors.

2.1. Lidar

Lidar can obtain object position, orientation, and velocity information by transmitting and receiving laser beam and calculating time difference. The collected data type is a series of 3D point information called a point cloud, specifically the coordinates relative to the center of the Lidar coordinate system and echo intensity. Lidar can realize omni-directional detection, and can be divided into single line Lidar and multi-line Lidar according to the number of laser beams, the single line Lidar can only obtain two-dimensional information of the target, while the multi-line Lidar can obtain three-dimensional information.

Lidar is mainly used in SLAM [19], point cloud matching and localization [20], object detection, trajectory prediction and tracking [21]. Lidar has a long detection distance and a wide field of view, it has high data acquisition accuracy and can obtain target depth information, and it is not affected by light conditions. However, the size of Lidar is large with extremely expensive, it cannot collect the color and texture information of the target, the angular resolution is low, and the long-distance point cloud is sparsely distributed, which is easy to cause misdetection and missed detection, and it is easily affected by sediments in the environment (rain, snow, fog, sandstorms, etc.) [22], at the same time, Lidar is an active sensor, and the position of the sensor can be detected by the laser emitted by itself in the military field, and its concealment is poor.

2.2. Radar

Radar is widely used in the military and civilian fields with important strategic significance. The working principle of a radar sensor is like that of Lidar, but the emitted signal source is radio waves, which can detect the position and distance of the target.

Radars can be classified according to the different transmission bands, and the radars used by UGVs are mostly millimeter-wave radars, which are mainly used for object detection and tracking, blind-spot detection, lane change assistance, collision warning and other ADAS-related functions [18]. Millimeter-wave radars equipped on UGVs can be further divided into “FMCW radar 24-GHz” and “FMCW radar 77-GHz” according to their frequency range. Compared with long-range radar, “FMCW radar 77-GHz” has a shorter range but relatively high accuracy with very low cost, therefore almost every new car is equipped with one or several “FMCW radar 77-GHz” for its high cost- performance. More detailed information about radar data processing can refer to [23].

Compared with Lidar, radar has a longer detection range, smaller size, lower price, and is not easily affected by light and weather conditions. However, radar cannot collect information such as color and texture, the data acquisition accuracy is general, and there are many noise data, the filtering algorithm is often needed for preprocessing, at the same time, radar is an active sensor, which has poor concealment and is easy to interfere with other equipment [24].

2.3. Ultrasonic

Ultrasonic detects objects by emitting sound waves and is mainly used in the field of ships. In terms of UGVs, ultrasonic is mainly used for the detection of close targets [25], ADAS related functions such as automatic parking [26] and collision warning [27].

Ultrasonic is small in size, low in cost, and not affected by weather and light conditions, but its detection distance is short, the accuracy is low, it is prone to noise, and it is also easy to interfere with other equipment [28].

2.4. Monocular Camera

Monocular cameras store environmental information in the form of pixels by converting optical signals into electrical signals. The image collected by the monocular camera is basically the same as the environment perceived by the human eye. The monocular camera is one of the most popular sensors in UGV fields, which is strongly capable of many kinds of tasks for environmental perception.

Monocular cameras are mainly used in semantic segmentation [29], vehicle detection [30,31], pedestrian detection [32], road detection [33], traffic signal detection [34], traffic sign detection [35], etc. Compared with Lidar, radar, and ultrasonic, the most prominent advantage of monocular cameras is that they can generate high-resolution images containing environmental color and texture information, and as a passive sensor, it has good concealment. Moreover, the size of the monocular camera is small with low cost. Nevertheless, the monocular camera cannot obtain depth information, it is highly susceptible to illumination conditions and weather conditions, for the high-resolution images collected, longer calculation time is required for data processing, which challenges the real-time performance of the algorithm.

2.5. Stereo Camera

The working principle of the stereo camera and the monocular camera is the same, compared with the monocular camera, the stereo camera is equipped with an additional lens at a symmetrical position, and the depth information and movement of the environment can be obtained by taking two pictures at the same time through multiple viewing angles information. In addition, a stereo vision system can also be formed by installing two or more monocular cameras at different positions on the UGVs, but this will bring greater difficulties to camera calibration.

In the field of UGVs, stereo cameras are mainly used for SLAM [36], vehicle detection [37], road detection [38], traffic sign detection [39], ADAS [40], etc. Compared with Lidar, stereo cameras can collect more dense point cloud information [41], compared with monocular cameras, binocular cameras can obtain additional target depth information. However, it is also susceptible to weather and illumination conditions, in addition, the field of view is narrow, and additional calculation is required to process depth information [41].

2.6. Omni-Direction Camera

Compared with a monocular camera, an omni-direction camera has too large a view to collect a circular panoramic image centered on the camera. With the improvement of the hardware level, they are gradually applied in the field of UGVs. Current research work mainly includes integrated navigation combined with SLAM [42] and semantic segmentation [43].

The advantages of omni-direction camera are mainly reflected in its omni-directional detection field of view and its ability to collect color and texture information, however, the computational cost is high due to the increased collection of image point clouds.

2.7. Event Camera

An overview of event camera technology can be found in [44]. Compared with traditional cameras that capture images at a fixed frame rate, the working principle of event cameras is quite different. The event camera outputs a series of asynchronous signals by measuring the brightness change of each pixel in the image at the microsecond level. The signal data include position information, encoding time and brightness changes.

Event cameras have great application potential in high dynamic application scenarios for UGVs, such as SLAM [45], state estimation [46] and target tracking [47]. The advantages of the event camera are its high dynamic measurement range, sparse spatio-temporal data flow, short information transmission and processing time [48], but its image pixel size is small and the image resolution is low.

2.8. Infrared Camera

Infrared cameras collect environmental information by receiving signals of infrared radiation from objects. Infrared cameras can better complement traditional cameras, and are usually used in environments with peak illumination, such as vehicles driving out of a tunnel and facing the sun, or detection of hot bodies (mostly used in nighttime) [18]. Infrared cameras can be divided into infrared cameras that work in the near-infrared (NIR) area (emit infrared sources to increase the brightness of objects to achieve detection) and far-infrared cameras that work in the far-infrared area (to achieve detection based on the infrared characteristics of the object). Among them, the near-infrared camera is sensitive to the wavelength of 0.15–1.4 μm, while the far-infrared camera is sensitive to the wavelength of 6–15 μm. In practical applications, the corresponding infrared camera needs to be selected according to the wavelength of different detection targets.

In the field of UGVs, infrared cameras are mainly used for pedestrian detection at night [49,50] and vehicle detection [51]. The most prominent advantage of an infrared camera is its good performance at night, Moreover, it is small in size, low in cost, and not easily affected by illumination conditions. However, the images collected do not contain color, texture and depth information, and the resolution is relatively low.

3. Vehicle Detection: Vision-Based Methods

Vision-based vehicle detection can be divided into two-stage methods and one-stage methods according to the inspection process. These two methods will be discussed in detail in the following content.

3.1. Two-Stage Methods

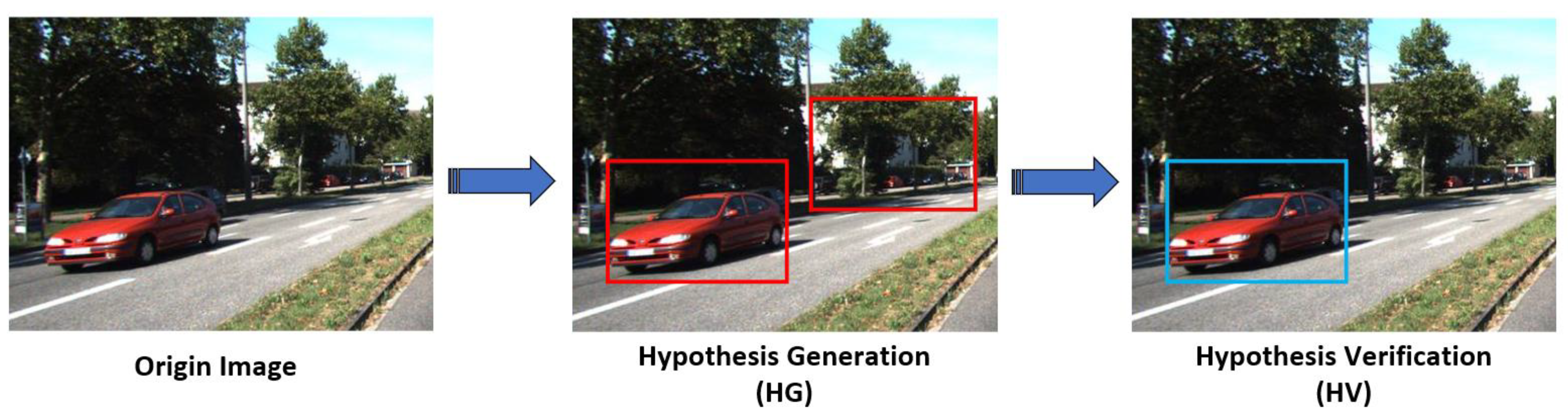

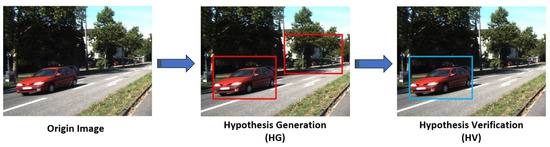

Vision-based two-stage vehicle detection method usually follows two steps: hypothetical generation (HG) and hypothetical verification (HV). The purpose of the HG step is to generate a candidate region that may contain vehicles in the captured image, represents the region of interests (ROIs), while the HV step aims to identify the presence of a vehicle in ROIs. The detection process of two-stage methods is described in Figure 4.

Figure 4.

Detection flowchart of two-stage methods. Schematic is taken from KITTI dataset [52].

3.1.1. Hypothetical Generation (HG)

Various HG methods with vision sensors can be divided into three categories: appearance-based methods, motion-based methods and stereo-based methods. Moreover, related works of appearance-based and motion-based methods are summarized in Table 2 related works of stereo-based methods are summarized in Table 3.

Table 2.

Summary of hypothetical generation (HG) methods for monocular vision.

Table 3.

Summary of HG methods for stereo vision.

- Appearance-based Methods

The appearance-based method depends on the prior knowledge of the vehicle to generate the ROIs in an image. Some important cues to extract vehicle features including color, edges, corners, symmetry, texture, shadow and vehicle lights are reviewed in the following content.

- (a)

- Color

Color provides rich information in an image, ensuring the great potential for scene understanding. In general, colors of vehicle body and lights are evenly distributed and have large discrimination from the road surface and image background, thus color information can be extracted to segment vehicles from background to generate ROIs.

In [53], a conventional RGB color space was used to generate ROIs. Firstly, all red areas in the image were extracted through the RGB color space, then prior knowledge that the vehicle brake lights have the same shape, size and symmetrical distribution was used to design a similar scale calculation model to extract the position of brake lights to be the final ROIs.

Since the RGB color space is sensitive to changes of illumination, in [54], ROIs were also generated by detecting brake lights, but the color space proposed is L*a*b, which is insensitive to the changes of illumination, Moreover, in [55], HSV color space was put forward to generate ROIs. In [56], RGB color space was combined with the background modeling method to reduce the impact of illumination, and the accuracy of the extracted ROIs was about 95%, but the real-time performance of the algorithm was therefore affected.

To achieve a better balance between accuracy and real-time performance, in [57], ROIs were extracted based on HSV color space with the “convex hull” operation to filter out noisy points in the image, then the boundary of ROIs was fitted to make it smooth, the accuracy of the algorithm was about 91.5% with running time about 76 ms/fps. In [58], ROIs were first extracted using HSV color space, and then RGB color space was utilized to further detect the vehicle lights in the ROIs to achieve the detection of emergency vehicles (vehicles with double flashes in an accident, ambulances, etc.). This work expanded the application scenarios and made a contribution to the early warning of vehicle behavior.

- (b)

- Edges

Different views of the vehicle (especially front and rear view) contain different types of edge features, for instance, the horizontal edge features of the bumper and the horizontal and vertical edge features of the windows show a strong ability to generate ROIs.

In general, the changes of gray value on sides of edges in the image is faster than in other areas. Thus, a possible solution is to calculate the sum of the gray value of each row and column in the image to form an “Edge Map”, and preliminarily judge the location of edges in the image (potential location of ROIs) according to the peak gray value [59,60].

In addition to the implementation of “Edge Map” methods, the Sobel operator is another choice to extract vehicle edge features. In [61], Sobel operator was used to extract the left and right edge features of the vehicle, then the grayscale of the image was analyzed to extract the shadow of the vehicle, both were finally fused with a designed filter to generate ROIs, the accuracy of this approach is about 70%.

Moreover, several approaches were carried out to optimize Sobel operator in edge extraction. In [62], the “Scharr-Sobel” operator was established to highlight image edge features and reduce the complexity of the algorithm with an accuracy of 82.2%. In [63], the Sobel operator was combined with Hough transform to extract vehicle edge features, and a Faster-RCNN was trained for verification. In [64], the Sobel operator and Perwitt operator were combined to extract the edge features of vehicles for detection. The accuracy is different under different traffic conditions, which fluctuated between 70% and 90%.

- (c)

- Corners

From the perspective of vehicle design, the shape of the vehicle can be generally regarded as a rectangle. The four corners that can form a rectangle in all corners detected in the image can be used as the basis for generating ROIs.

In [65], Harris corner detection model was proposed to extract corners in the image, and “corner mask” was designed to remove false-detected corners, then corners and color features were fused to generate ROIs, the detection accuracy of vehicles of different colors varied from 79.66% to 91.73%. In [66], a grayscale map was created to select appropriate threshold value to detect corners in the image, then, coordinate of all corners were calculated and paired between each other, and finally “Convex Hull algorithm” were carried out to generate ROIs.

- (d)

- Symmetry

The front view and the rear view of the vehicle have obvious symmetry with respect to the vertical centerline, thus the location of the vehicle is able to be evaluated by detecting the area with high symmetry characteristics in the image to generate ROIs. The symmetry detection methods need to calculate the symmetry measurement in the image to generate a symmetry axis or center point for the vehicle by adapting the image pixel characteristics (grayscale, color, feature point, etc.).

The detection of symmetry features usually requires the extraction of edge features in the image. In [67], the edge features of the image were firstly extracted and 15 horizontal scan lines were generated to select the candidate areas, then, a symmetry measurement function was designed basing on contour features to extract the vehicle symmetry axis, and the k-means clustering was used to extract the central point and generate ROIs. The detection accuracy was about 94.6% with a running time of 80 ms/fps. In [68], the symmetry measurement function was also based on the contour feature to extract the symmetry axis and the center point, compared with [67], the author transplanted the program package to the Android system, and the detection accuracy was about 92.38%, with a running time of 33 ms/fps. Limited by the computing performance of the Android system, although this algorithm meets the requirements of good detection accuracy and real-time performance, it can only be used for the detection of simple scenes, and the results did not have a strong reference. The Canny operator was used in [69] to extract edge features, and combined with the two-frame difference method, the extracted edge was “amplified” to enhance its feature strength, so as to improve the accuracy of symmetry axis extraction. This method had been tested to achieve a better performance for dynamic vehicle detection.

Apart from extract image edge features first, a linear regression model was also used to extract vehicle symmetry axis in [70], Haar features with Adaboost classifier were trained for verification using an active learning method, with an accuracy of about 87% and running time of 40 ms/fps.

- (e)

- Texture

The presence of the vehicle will cause the local intensity change in an image, and the rules of intensity change follow a certain texture form. Thus, the difference between the vehicle texture and the background environment can be used to extract ROIs from the image.

There were few studies on the algorithm of vehicle detection by extracting texture features. The main approaches include entropy [71], gray level co-occurrence matrix [72] and LBP [73]. In [71], the entropy value of each pixel was calculated in the image, and the area with high entropy value was regarded as the ROIs of possible vehicles. In [72], ROIs were extracted by calculating the gray co-occurrence matrix of images, compared with the simple calculation of image entropy, this method was a second-order statistic of pixels, with higher accuracy but larger computing cost. In [73], the texture feature of the image background (mainly referred to road area) was extracted by LBP method, and the location of the shadow was extracted by using the characteristic that the bottom shadow is similar to the texture feature of the road area, both were fused to generate ROIs. The texture features extracted by the LBP method were suitable for further classification by SVM.

- (f)

- Shadow

The shadow area underneath the vehicle in the image is darker than the area of the road surface. The feature of brightness difference can be used to extract ROIs by investigating image intensity.

The conventional shadow-based method is to select an appropriate threshold (lower bound of road intensity) to segment the shadow areas. In [74], road areas in the image were first extracted, and the shadow areas were defined according to the intensity that is less than the threshold “m-3σ” to generate ROIs, where m and σ are the average and standard deviation of the road pixel frequency distribution, after which ROIs were verified by SVM. The detection accuracy is about 92% with a running time of 76 ms/fps.

Since the intensity of the shadow area is sensitive to changes of illumination, selecting a fixed threshold to segment the shadow area cannot be applied to various scenes. Therefore, an adaptive threshold algorithm is carried out in [75], the pixel ratio of each point in the image was first calculated, then two parameters α and β between 0 and 1 were selected and the areas whose pixel ratio between α and β were defined as shadows. Although this method solved the limitation of the fixed threshold methods, the selection of parameters α and β requires constant iteration, which makes it difficult to obtain an optimal solution. Thus, in [76], a “three threshold” method was presented based on the RGB color space combined with the ViBe algorithm to extract the shadow areas in order to further improve the robustness.

Different from the aforementioned literature by determining the threshold value as the lower bound of the intensity of the road area in the image to segment the shadow area, in [77], the rough upper bound of the intensity of the undercarriage was determined based on the “binary mask function” constrained by saturation and intensity difference Value to reduce false detection rate.

- (g)

- Vehicle Lights

For nighttime vehicle detection, the performance of vision cameras is greatly affected due to the poorly illuminated conditions, therefore most cues summarized above are not reliable during nighttime detection. A salient feature of the vehicle is its headlights and taillights that can be extracted to represent ROIs of the vehicle.

One possible solution is to extract the features of vehicle lights from the image background by setting a specific threshold [78]. In [78], the threshold was selected as 30% of the maximum gray value in the threshold image to extract the position of the lights to generate ROIs. Since the gray value of the vehicle lights is various at different distances from the camera, and the roadside lighting equipment will also affect the environmental brightness, only setting a single threshold for detection is prone to false detection.

In order to solve this problem, in [79], the lower bound of the threshold value of lights was first calculated based on the grayscale image, then, the OTSU method was proposed to obtain the optimized threshold, the similarity measurement was finally calculated to match the extracted vehicle lights with Kalman filter for noisy reduction to generate ROIs. In [80], the “CenSurE” method was put forward based on the Laplacian of Gaussian (LOG) to detect areas with sharp intensity changes in the image to extract the features of the lights, and paired by detecting lights on the same horizontal line, this approach did not depend on a specific threshold and achieved a faster calculation speed than LOG.

Apart from the threshold method, some researchers used machine learning methods to extract features of vehicle lights. In [81,82], original images were first converted into grayscale images, then an Adaboost classifier with Haar features was trained to get the position of the vehicle lights, and finally, the similarity measurement was calculated to pair the lights.

The width lights and brake lights of vehicles are red, therefore the red areas can be detected according to the color-based method discussed above. In [83], RGB color space was used to extract features of vehicle lights, and then closing operation (one of the morphological operations) was performed to eliminate holes in the feature map. ln [84] HSV color space was proposed to extract features of car light, after which Gaussian filter was used for filtering and noise reduction, and non-maximum suppression (NMS) method was implemented to eliminate the overlapping area.

- (h)

- Multiple Features Fusion

Since using a single feature for vehicle hypothesis generation is limited in different application scenarios, ROIs can be generated by fusing multiple features to improve the robustness and reliability of the detection system, however, it will increase the complexity and calculation time of the system. There is no conventional method to select which features and which algorithm to use for fusion, some related works are listed in Table 4.

Table 4.

Summary of Fusion methods for HG.

- Motion-based Methods

The motion-based methods generate ROIs by extracting the changes of the moving vehicle relative to the background in the image sequence. Compared with the appearance-based method, it is able to achieve a more direct detection process without prior knowledge of the vehicle. Nevertheless, for a single frame image or a low-speed moving and a stationary vehicle in the image sequence, the method will fail. Related methods include the frame difference method, background modeling method and optical flow method.

- (a)

- Frame Difference

The frame difference methods first calculate the absolute value of the grayscale difference between adjacent frames of the image sequence and then select a threshold to distinguish the background and foreground in the image. If the absolute value satisfies the threshold condition, it can be judged as the ROIs of moving vehicles.

In [88], a conventional two-frame difference method was proposed for vehicle detection. Although the two-frame difference method achieved a low computing cost, it was pointed out that if the detected object had a relatively uniform grayscale, the overlapping part of the moving objects in the image will appear to be “blank” [89]. Therefore, the three-frame difference method was established to solve this problem in [89]. Later, some researchers made further improvements to the three-frame difference method to better solve the problem of “blank holes” in the image. The three-frame difference method was combined with the Gaussian model in [90], while it was combined with the image contrast enhancement algorithm and morphological filtering in [91]. In [92] a five-frame difference method was designed for vehicle detection in low-speed motion.

- (b)

- Background Modeling

This approach establishes a background model through the video sequence. It generates a hypothesis of moving vehicles through pixel changes with the assumption that the background is stationary. The main challenge background modeling needs to solve is the establishment and update of the background model.

The typical background modeling method is the Gaussian Mixture Model (GMM) proposed by [93]. The main idea of the method is to assume that all data points in the image are generated by a finite Gaussian distribution with unknown parameters. Due to the slow initialization of GMM and the inability to distinguish between moving objects and shadows [94], an adaptive GMM method was designed to solve this problem in [94], and adaptive GMM was combined with a vehicle underneath shadow features to improve the computational efficiency and robustness.

Another typical algorithm is the codebook algorithm [95], which is characterized by high calculation accuracy. An improved codebook algorithm based on the conventional codebook algorithm was designed in [96] to improve its computational efficiency in complex environments.

In addition, the ViBE algorithm was proposed in [97]. This approach first selected a pixel and extracted the pixel value in the neighborhood of this pixel at a current and previous time to form a point set, then the pixel value of the selected pixel was compared with the pixel value in point set to determine whether the pixel belonged to the background, in general, the ViBE algorithm was able to achieve strong real-time performance, and overall has a relatively good background detection effect. Moreover, in [98], an adaptive ViBE algorithm was designed based on the ViBE algorithm to improve the background update efficiency for scenes with changing illumination.

Machine learning methods have also been applied to background modeling by researchers. In [99], the feature input was the first to fourth order statistics of the grayscale of the image, and the output was the appropriate morphological parameters to dynamically adjust the extracted background, the author tested it under the condition of sudden illumination changes and result showed better robustness.

- (c)

- Optical Flow

The optical flow methods obtain the motion information of the object by matching the feature points between two adjacent frames in the image sequence or calculating the pixel changes, the return value is the optical flow vector of the object (describes the instantaneous velocity of a certain point in the image), and the optical flow at each point in the image constitutes an optical flow field to generate ROIs for moving objects.

Optical flow can be divided into dense optical flow and sparse optical flow. Dense optical flow is also called global optical flow, and it calculates the optical flow field of the whole image or a certain area, the registration n result is accurate but the computing cost is large. The typical methods are the Horn–Schunck (HS) optical flow method and its extension [100]. Sparse optical flow is also called local optical flow to calculate the optical flow field at some specific point, which improves the calculation efficiency but reduces the registration accuracy. The typical methods are the Lucas–Kanad (LK) optical flow method and its extension [101].

In [102], pyramid LK optical flow was proposed with the fusion of edge feature extraction, and k-means clustering was finally used to detect vehicles. In [103], the fusion of HS optical flow method and median filtering was proposed to achieve vehicle detection. In [104], ROIs were first extracted based on CNN, then, the Haar feature was utilized to extract feature points for ROIs, and finally, the LK optical flow method and k-means clustering were combined to achieve vehicle detection.

- Stereo-based Methods

It should be noted that the aforementioned appearance-based methods and motion-based methods can also be carried out in the images collected by stereo vision. Compared with monocular cameras, stereo cameras can obtain scene depth information, which enables more information for vehicle detection. Typical hypothesis generation methods using stereo camera include Inverse Perspective Mapping and disparity map.

- (a)

- Inverse Perspective Mapping

The Inverse Perspective Mapping (IPM) refers to transforming the image collected by the stereo camera from the camera coordinate system to the world coordinate system through the rotation and translation transformation, and the result is a top view image without disparity. Depth information of roads and objects can be obtained through IPM, providing intuitive information for vehicle detection.

In [105,106], IPM was used to convert the left and right images from stereo camera into two top view images respectively; then, pixel difference between top view images was calculated, the areas with a non-zero difference were regarded as possible vehicles, and the locations of the ROIs were finally determined by the polar coordinate histogram. In [107], IPM was fused with a background modeling method to detect vehicles in motion. Moreover, IPM can also be used to obtain more information about the vehicle to be detected. In [110], ROIs were extracted based on IPM and the distance between the vehicle and the camera center was obtained, while in [111], IPM was combined with CNN to obtain the location, size and attitude angle of vehicles.

Using IPM for vehicle detection is easy to implement and computationally efficient, but this approach needs to be assumed that the road surface is completely flat and the road area in the image is large, so it is not suitable for vehicle detection in complex or unstructured scenes. Since the geometric information of the road can be extracted intuitively from the top view, IPM can often implement for road detection [128,129,130], some researchers used IPM to detect road areas to assist vehicle detection.

In [108], the drivable areas were first generated through IPM as preliminary determined ROIs, then vehicle lights were extracted to generate precise ROIs. In [109], road edges were extracted through IPM to first generate ROIs, the left and right cameras were then transformed to top view images and pixel differences were compared to achieve vehicle detection.

- (b)

- Disparity Map

The difference between the corresponding pixels between left and right images is represented as disparity, calculating disparity of all the image points forms the disparity map, in addition, the disparity is negatively related to the distance between the image point and the camera. Planes in the image can be extracted by statistically analyzed the disparity distribution to generate areas contain an object with flat features (i.e., Side of the vehicle).

The derivation and calculation of the disparity map were reviewed in [131]. In order to make better use of the disparity map for object detection, some researchers have optimized the traditional disparity map to directly acquire scene information. For instance, the V-disparity map [112] that can be used to extract planes parallel to the camera horizontal plane (usually referred to as road areas), the UV-disparity map [113] that combined the U-disparity map on the basis of the V-disparity map to further extract planes perpendicular to the camera horizontal plane to realize 3D reconstruction of the environment.

In [114], a V-disparity map was combined with an optimized evolutionary algorithm (EA) for vehicle ROIs generation. In [115], the UV-disparity map and DCNN were combined to jointly extract vehicle ROIs. In [116], V-disparity maps with Hough transform were first utilized to extract road areas, and then a U-disparity map was used to generate ROIs, in addition, the distance of the vehicles was also derived based on depth information. In [118], a stereo camera was fused with millimeter-wave radar for vehicle detection, where stereo images were acquired to detect nearby vehicles through UV-disparity maps. In [119], ROIs were extracted based on a UV-disparity map and verified by Faster-RCNN.

Vehicle detection based on original disparity maps were also carried out by some researchers. One main approach is clustering point clouds data to extract vehicle information. In [120], a mean-shift algorithm was proposed based on a semi-dense disparity map to achieve vehicle detection and tracking. In [121], K-neighbor clustering with frame difference method and fast corner detection method was put forward to realize vehicle detection, while in [123], K-neighbor clustering was combined with optical flow, moreover, in [122], DBSCAN was used for ROIs generation. In [124], CNN was trained to generate semantic maps, then clustering based on the DFS method was performed to detect vehicles.

In addition, some researchers did not process the original disparity map for vehicle detection based on the clustering method. A typical method was designed in [117], the author first calculated the disparity map and combined the depth information to achieve 3D reconstruction, then, the RANSAC method was used to fit the road surface, and the areas above a certain threshold of the ground were regarded as ROIs, which were then matched with the predefined CAD wireframe model of the vehicle for verification.

- (c)

- Optical Flow

The application of the optical flow method in stereo vision is similar to that in monocular vision. In general, feature points of interest are usually extracted through a single camera, and the three-dimensional coordinate of the object to be detected is determined by combining the disparity map and depth map.

In [126], the optical flow was used to detect vehicles moving in opposite directions, while the Haar feature was extracted to detect vehicles moving in the same direction. In [125], the 3D optical flow was obtained by matching feature points and the motion state of ego vehicle generated by the visual odometer, then 3D optical flow was projected to the aerial view to realize vehicle detection. In [127], optical flow and vehicle motion estimation model were designed based on stereo vision, then optical flow generated by camera motion (COF) was estimated by using the motion information of vehicle and the depth information of scene, and mixed optical flow (MOF) of scene was estimated by using HS algorithm. Finally, MOF and COF were differential calculated with elimination of static objects in the background, and vehicle detection was achieved by morphological filtering.

3.1.2. Hypothetical Verification (HV)

The input of the HV stage is the set of hypothesis locations generated from the HG stage. During the HV stage, solutions are carried out to validate whether there is a true vehicle in ROIs. Various HV methods can be divided into two categories: template-based methods and classifier-based methods.

- Template-based Methods

The template-based methods need to establish the predefined vehicle feature template basing on different types of vehicle images, then the similarity measurement is put forward by calculating the correlation between the templates and ROIs.

Due to various types, shapes, and brands of vehicles, it is necessary to establish a generic template that can represent the common characteristics of the vehicle in order to make the template more widely used. Typical feature templates included as follows: a template that combines rear windows and license plates [132], a rectangular template with a fixed aspect ratio [133], and an “inverted U-shaped” template with one horizontal edge and two vertical edges [134].

In the same image, the vehicle could appear in different size and shape related to its distance and captured perspective from the camera [9], therefore, the traditional template matching method did not achieve good robustness, and the establishment of a dynamic template was significant to improve the efficiency of verification. In [135], a hybrid template library was established for matching, fusing four kinds of feature templates including vehicle wireframe model, texture model, image flatness and image color consistency. In [136] a deformable vehicle wireframe model was established with logistic regression to achieve vehicle detection, the model was composed of several discrete short line segments, and it could be dynamically adjusted to adapt to different capture perspectives and distance of vehicles in image, through the translation and rotation transformation of the short line segments.

A large number of modern vehicle datasets were collected in [137], vehicle appearances were analyzed through active learning methods, and a multi-view variable vehicle template library was then established. It should be noted that this approach was different from the conventional vehicle template mentioned above, this template library was composed of visual images of various vehicles from different perspectives, and each picture in the template library could be replaced according to driving condition, thereby expanding the application range of the template matching algorithm and optimizing the matching accuracy.

- Classifier-based Methods

The classifier-based methods establish an image classifier to distinguish vehicle targets versus non-vehicle targets in the candidate area. A large number of labeled positive (vehicle) and negative (non-vehicle) samples are used to train a classifier to learn the characteristic of the vehicle. This approach consists of two steps: feature extraction and object classification.

- (a)

- Feature Extraction

In general, feature extraction refers to the process of converting the training samples into a feature vector that satisfies the input of the classifier. In order to achieve better classification results, the design and selection of features are particularly important. A fine feature should include most of the appearance of the vehicle, and should be as simple as possible to improve training efficiency. Commonly used feature extraction methods include HOG feature, Gabor filter, PCA, Haar feature, SIFT and SURF feature.

HOG refers to Histogram of Oriented Gradient, which was first proposed in [138] for pedestrian detection, and then gradually used in the related work of vehicle detection. Most of the current research are devoted to optimizing conventional HOG in order to improve its calculation efficiency and detection accuracy.

In [139], the vehicle feature was extracted based on traditional HOG methods for classification training. In [140], the performance of three feature extraction methods of CR-HOG, H-HOG and V-HOG in vehicle detection were compared, and it was found that V-HOG has the best overall effect, compared with the conventional HOG method, the calculation efficiency was improved with the reducing of accuracy. The results of this literature were further verified in [141]. In [142], the accuracy of the V-HOG was optimized by constantly adjusting its parameters from experimental results. In [143], the calculation efficiency was improved by reducing the dimensions of the extracted feature vector based on the traditional HOG method. Since the traditional HOG method can only calculate the gradient features in both horizontal and vertical directions, in [144], Compass-HOG was designed to expand the direction dimension of image gradient calculation to reduce information loss and improve accuracy. In [145], 2D-HOG is designed to deal with the problem of resolution change of input image, and the accuracy was also improved compared with HOG.

The principle of the Gabor filter is to perform Fourier transform in a specific time window of the image, which can better extract the straight line and edge features of different directions and scales in the image. This method is very similar to the response of human vision to external stimuli, and can effectively extract image frequency-domain information, however, it has a high computing cost.

In [146], a parameter optimization method based on a genetic algorithm was designed for the Gabor filter to extract vehicle features. In [147,148], the Log–Gabor filter was designed to compensate for its amplitude attenuation in the process of processing natural language images [149] to achieve better image frequency-domain information extraction characteristics in vehicle detection. In [150], vehicle features in the night environment were extracted by the Gabor filter, and the filter parameters were adjusted through experiments.

PCA refers to Principal Component Analysis, converting the relevant high-dimensional indicators into low-dimensional indicators to reduce the computing cost with as less as possible loss of the original data.

In [151], PCA was used to extract vehicle features and SVM was trained to classify the generated ROIs, which can identify vehicles in front view and rear view at the same time. Since the traditional PCA extracted one-dimensional feature vectors of the image, the vector dimension is large with high computing cost, in [152], the vehicle feature extraction was realized by 2D-PCA combined with a genetic algorithm, nevertheless, the image pixel of the dataset used by the author is relatively low, optimizing computation efficiency by reducing the pixel was not representative. Thus in [153], the pixel of datasets was improved, 2D-PCA was combined with a genetic algorithm, fuzzy adaptive theory and self-organizing mapping for vehicle identification. In [154], features were extracted by HOG and dimensionality was reduced by PCA to reduce the amount of computation.

The Haar feature is based on the integral map method to find the sum of all the pixels in the image, which was first applied to face recognition in [155], Haar features include edge features, straight-line features, center features and diagonal features. The Haar feature is suitable for extracting edge features and symmetry features of vehicles, with a high computational efficiency to better meet the real-time requirements of vehicle detection.

In [156], the Haar feature was combined with a 2D triangular filter to achieve feature extraction. In [157,158], the Haar feature was introduced into LBP to realize vehicle detection through statistical image texture features, In [70], detection of frontal vehicles was based on the Haar feature, and active learning was then carried out to realize the detection of occluded vehicles. In [159], the Haar feature was used in infrared images combined with the maximum entropy threshold segmentation method to achieve vehicle detection.

SIFT refers to Scale-Invariant Feature Transform. It was proposed in [160], generating features by extracting key points in the image and attaching detailed information.

In [161], feature points were extracted based on SIFT, and feature vectors near feature points were extracted using the implicit structural model (ISM) to train SVM to detect vehicles. Due to the slow computing speed of the traditional SIFT method, in [162] vehicle feature was extracted by the Dense-SIFT method, so as to realize the detection of remote moving vehicles and improve the computing efficiency. In [163], the color invariant “CI-SIFT” was designed to enable it to have good characteristics when detecting vehicles of different colors. The author first recognized the body color through HSV color space, and then extracted the features through CI-SIFT, finally, vehicle detection was realized based on the matching algorithm.

SURF feature is the optimization and acceleration of SIFT feature. In [164,165], the symmetric points of vehicles were extracted based on SURF features to realize vehicle detection. By combining Haar features and SURF features, the real-time performance of this algorithm was improved by combining good robustness of SURF features and fast calculation speed of Haar features in [166]. In order to further improve computational efficiency, SURF characteristics and the BOVW model were combined to realize the detection of front and side vehicles in [167].

The comparison of different feature extraction methods is shown in Table 5.

Table 5.

Summary of feature extraction methods for hypothetical verification (HV) stage.

- (b)

- Object Classification

The purpose of object classification step is to choose or design a classifier according to the extracted features. The most commonly used classifiers for hypothesis verification are SVM and AdaBoost, related works are listed in Table 6.

Table 6.

Summary of related works for classifiers of HV stage.

3.2. Deep-Learning Based Methods

The aforementioned two-stage method includes two steps: HG and HV to form two-stage detector. In general, deep-learning based methods refer to designing a single-stage detector which does not need to extract ROIs from the image through training a neural network, but directly considers all regions in the image as region of interest, the entire image is taken as input, and each region is judged to verify whether it contains vehicles to be detected. Compared with the two-stage method, this method omits ROIs extraction and achieves a much faster processing speed, which is suitable for scenes with high real-time requirements, however, the detection accuracy is relatively low and the robustness is poor. In addition, there are also two-stage detectors designed based on deep learning methods, such research will be discussed together in this section.

3.2.1. Two-Stage Neural Network

Generally speaking, the two-stage neural network is composed of region proposal stage and region verification stage, where the region proposal stage aims to generate candidate regions, while the region verification stage is carried out to train a classifier based on features generated by the convolution process to determine whether there is a true vehicle in candidate regions. With the development of deep learning technology, it has been widely used in various fields for its highly nonlinear characteristic and good robustness. Classical and recent neural networks are summarized in Table 7 and Table 8.

Table 7.

Summary and characteristic of classical two-stage neural network.

Table 8.

Summary of related works for recent two-stage neural network for vehicle detection.

3.2.2. One-Stage Neural Network

Training a one-stage neural network to achieve vehicle detection have emerged in recent years, typical single-stage detectors include the YOLO series and SSD. YOLO was proposed by [180] and it was the first single-stage detector in the field of deep learning. The framework of YOLO was a deep convolutional neural network (DCNN) and full convolutional neural network (FCNN), where DCNN was used to extract image features and greatly reduce its resolution to improve computational efficiency, FCNN was adapted for classification. Although YOLO has fast detection speed, it sacrificed detection accuracy. Thus in [181], SSD was proposed to solve the limitations of YOLO, which increased the resolution of the input image before extracting image features, thereby improving the detection accuracy, and also allowing to detected objects with a different scale. Subsequent YOLO-based improved networks include YOLO9000 (YOLOv2) [182], YOLOv3 [183], and recently optimized detection efficiency of YOLOv4 [184] and YOLOv5 [185]. Typical one-stage networks are summarized in Table 9.

Table 9.

Summary and characteristic of classical one-stage neural network.

In addition to the typical networks mentioned above, there are also many scholars who have improved original networks and designed new networks on this basis. See Table 10 for related work.

Table 10.

Summary of related works for recent one-stage neural network for vehicle detection.

4. Vehicle Detection: Lidar-Based Vehicle Methods

Although vision-based vehicle detection methods are popular among UGVs, the lack of depth information makes it difficult to obtain vehicle position and attitude information. Therefore, three-dimensional detection methods are significant to be designed to achieve better scene understanding and communication with other modules such as planning and decision making, furthermore, they are also important for vehicle-to-everything (V2X) in ITS application [192]. Lidar is a good choice to effectively make up for the shortcomings of vision methods in vehicle detection, related approaches can be divided into four categories: classical feature extraction methods and learning-based approaches including projection methods, voxel methods and point-nets methods. Characteristics of each method and related learn-based methods are summarized in Table 11 and Table 12.

Table 11.

Research of vehicle detection with Lidar.

Table 12.

Summary of related works for learning-based vehicle detection using Lidar.

4.1. Feature Extraction Methods

Classical feature extraction methods for Lidar mainly refer to extracting various types of features by processing point clouds, such as lines extracted by Hough Transform, planes fitted by RANSAC. In the field of vehicle detection, vehicle geometric feature and vehicle motion feature are usually extracted from point clouds to achieve vehicle detection.

4.1.1. Vehicle Geometric Feature

Vehicles show various types of geometric features in point clouds, such as planar, shape, and profile. Therefore, vehicle detection can be realized by extracting geometric features in Lidar point clouds.

In [193], a 3D occupancy grid map was first constructed through octree, then a list of the grids whose states were inconsistent between the current and previous scan was maintained as potential areas of objects, finally, the shape ratio feature of potential areas was extracted to achieve vehicle detection. However, this extracted shape feature was not robust for occluded vehicles. In [194], a Bayesian approach for data reduction based on spatial filtering is proposed that enables detection of vehicles partly occluded by natural forest, the filtering approach was based on a combination of several geometric features including planar surface, local convex regions and rectangular shadows, finally features were combined into maximum likelihood classification scheme to achieve vehicle detection. In [195], profile features were first extracted under polar space as the input of the subsequent detection scheme, then an online unsupervised detection algorithm was designed based on Gaussian Mixture Model and Motion Compensation to achieve vehicle detection. In [196], vehicle shape features were extracted approximately through non-uniform rational B-splines (NURBS) surfaces to achieve vehicle detection and tracking. In [197], the shape features of vehicles were predefined by constructing a CAD point clouds model of vehicles, then point clouds registration was carried out to realize vehicle detection and tracking. Results showed very good performance in detecting and tracking single vehicles without occlusions.

4.1.2. Vehicle Motion Feature

The movement of vehicles in the environment will cause inconsistencies in Lidar point clouds of different frames. Thus, positions that may contain moving vehicles can be generated by extracting motion features in point clouds.

In [198], vehicle motion features were extracted from the continuous motion displacement, and are represented by rectangular geometric information on the 2D grid map. The algorithm was implemented on “Junior” which won second place in the Urban Grand Challenge in 2007. In [199], motion features were extracted by estimating Lidar flow from two consecutive point clouds, then FCN was trained to generate 3D motion vectors of moving vehicles to achieve vehicle detection as well as motion estimation.

Considering that it is a great challenge to detect vehicles that are far from Lidar because of the sparse point clouds. In [200], a dynamic vehicle detection scheme based on a likelihood-field-based model with coherent point drift (CPD) is proposed to achieve vehicle detection. Firstly, dynamic objects were detected through an adaptive threshold based on distance and grid angular resolution, then vehicle pose was estimated through CPD, finally, vehicle states were updated by Bayesian filter. Results showed that the proposed algorithm especially increased the accuracy in the distance of 40~80 m.

4.2. Projection Methods

Since vehicle detection in 2D vision images is a hot topic due to the various kinds of methods as well as the high availability of datasets, projection methods are put forward to transform Lidar point clouds into 2D images with depth and attitude information that can be processed via 2D detection methods. Related approaches can be divided into three categories including spherical projection, front-view projection and bird-eye projection on the basis of the representation of Lidar point clouds data.

4.2.1. Spherical Projection

Spherical projection refers to projecting point clouds to a spherical coordinate system. The information contained in each point includes azimuth, elevation and distance from the Lidar scanning center.

Related work mainly focused on deep learning methods after projecting point clouds to the spherical image. In [201], “SqueezeSeg” was trained based on CNN to achieve detection after completing the point cloud projection. In [202], “PointSeg” was trained also based on CNN.

4.2.2. Front-View Projection

Front-view projection refers to projecting the point clouds into the camera plane (similar to the depth image generated by a stereo camera). However, this kind of approach would generate numerous empty pixels at long-distance from Lidar due to the sparse distribution of point clouds. Thus in [203], a high-resolution image was constructed through a bilateral filter, results showed that the point cloud density of vehicles, pedestrians, etc., in the image had increased to a certain extent to optimize the overall resolution.

In [204], after completing the front-view projection, FCNN was performed for vehicle detection. In [205], ROIs were generated based on the DBSCAN algorithm, and ConvNet was trained for verification. Since the characteristics of the point cloud information for variant types of objects are different due to the measurement distance, angle and material of the object, in [206], Lidar echo intensity information was fused on the basis of [205] to firstly generate “sparse reflection map” (SRM), and points were connected into non-coincident triangles to establish “dense reflection map” (DRM), finally “ConvNet” was trained for faster vehicle detection compared with [205].

Some scholars also perform detection by fusing camera data. In [207], a non-gradient optimizer was carried out to fuse camera Lidar data, project Lidar point cloud data into a depth map, and establish Faster R-CNN for target detection, in [208], point clouds were projected into plane images and echo Intensity map, ROIs were generated from the camera image, and then the active learning network is trained for verification.

4.2.3. Bird-Eye Projection

Bird-eye projection refers to projecting the point clouds into the top-view plane that is able to directly provide size and position information of objects to be detected. The bird-eye view can be further divided into three types [211]: height map generated by computing the maximum height of the points in each cell, intensity map generated according to the reflectance value of the point which has the maximum height in each cell, density map generated based on the number of points in each cell.

Deep learning methods are still popular in current research. In [209], multiple height maps, intensity maps and density maps were established, vehicle detection was then implemented based on CNN. Similar approaches included “Birdnet” in [210], “Complex-YOLO” in [211,212].

In [213] a three-channel bird’s eye view was established based on the maximum, median, and minimum height values of all points in the grid, which enable the network used for RGB image detection to be transferred for Lidar detection, and then RPN is used to realize vehicle detection with posture information. In [214], a heightmap and an intensity map were generated only considering the point clouds with maximum height in each grid, and “YOLO-3D” was proposed for vehicle detection. In [215], Lidar bird’s-eye view was fused with camera image, and then CNN with “coefficient non-uniform pooling layer” was put forward for vehicle detection. In [216], a density map was first generated to predefine a calculation area, a “PIXOR” network was then designed based on CNN for vehicle detection. In [217], a series of height maps through slices was generated, and features were extracted through RPN with classifies based on FCNN.

In addition to deep learning methods, in [218] stereo vision and 2D Lidar were integrated for vehicle detection. ROIs were generated based on bird’s-eye view established from 2D Lidar with depth information, then the similarity measurement with loss function evaluation of vehicle template is established for vehicle detection. In [219], point clouds were directly projected to the bird’s-eye view, vehicles were detected based on the edge and contour features, and the detection areas containing vehicles were trimmed according to vehicle size information to achieve optimization.

4.3. Voxel Methods

The voxel method decomposed the environmental space into numerous voxels, and points are allocated to the voxel grid at the corresponding position. In this way, objects to be detected can be represented as 3D voxel grid with their shape and size information.

In [220], point cloud was voxelized and vehicles were detected based on CNN. In [221], a “3D-FCN” was established for vehicle detection. The main idea was to take down sampling of voxel characteristics at 1/8 step length, and then deconvolution with phase synchronization length. In [222], monocular camera and Lidar was combined for vehicle detection. Firstly, the candidate regions were extracted from camera images based on “2D-CNN”, then the voxels in candidate area were matched and scored with the established three vehicle point cloud models (SUV, car and van), which were finally verified by CNN.

A more typical voxel method via neural network was “Voxelnet” designed in [223]. The main idea of this method was the designed “VFE layer” to characterize each Voxel, then objects could be detected through RPN. In [224], the “Second” network was designed based on “Voxelnet” to improve the processing capacity for sparse voxel grid, and a “angle loss regression equation” was designed to improve the detection performance of attitude angle. Subsequent improvements based on “Voxelnet” include “Part-A2” in [225] and “MVX-NET” in [226].

In addition to the deep learning method adopted by most researchers, in [227], a 3D occupancy grid map was generated after voxelization, and vehicles were detected by particle filter algorithm.

4.4. Point-Nets Methods

Compared with the projection method and the voxel method, point–nets method does not need to preprocess the point clouds. It directly regards the raw point clouds data as input to vehicle detection system with fewer points information loss, such approaches usually depend on an end-to-end deep-learning framework to process point clouds data.

The point-nets method was first proposed in [228]. The author designed a “PointNet” neural network to directly detect targets with the original point cloud of Lidar as input. The author then designed “PointNet++” [229] on the basis of “PointNet” to improve its ability of fine-grained identification (object subclass identification) to make it better applied in complex scenarios. It was pointed out in [230] that point clouds from Lidar were irregular and disordered, therefore applying direct convolution processing would cause shape information loss. Thus, an “X transformation” was first conducted for the processing of point clouds, and then “PointCNN” was established for vehicle detection. Other subsequent point-nets methods included “IPOD” in [231], “PointPillars” in [232] and “PointRCNN” in [233].

A fusion method of monocular camera and Lidar with limitation point cloud processing area was proposed in [179]. ROIs were first generated through the “RoarNet-2D” network. Then, the “RoarNet-3D” network was designed to detect vehicles from candidate areas and obtain the final attitude information of vehicles in order to lower the computing cost of point cloud processing.

5. Vehicle Detection: Radar-Based Methods

Radar has a wide range of applications in vehicle detection with higher cost performance, with the development of communication technology, automotive radar applications have played an increasingly critical role in intelligent transport system since it can obtain numerous types of information of the object (e.g., distance, relative speed, phase information), and is not affected by weather conditions. Therefore, co-existence between radars and UGVs has become more and more important. The commonly used radars for UGVs are millimeter-wave radar and ultrasonic radar (sonar). Both have similar working principles, therefore, this article only reviews the vehicle detection methods using millimeter-wave radar (MMW). The radar-based vehicle detection methods mainly include registration methods, learning-based methods, end-to-end methods and advanced radar-based imaging methods. Related works are summarized in Table 13.

Table 13.

Research of vehicle detection with Radar.

5.1. Registration Methods

The essence of the registration method is the sensor fusion vehicle detection framework of MMW and vision sensors to achieve a better balance between detection accuracy and real-time performance. The vehicle position and speed information are firstly derived from MMW to initially generate ROIs, which are then registered by coordinate transformation with images to achieve joint vehicle detection.

In [234], MMW and monocular camera were fused for vehicle detection, MMW data were first transformed to the camera plane to jointly generate ROIs, and then verified based on DPM. In [235], vehicle contour features were used to verify ROIs after registration. In [189], MMW was used to extract feature points of vehicles, they were then transformed to the camera plane to jointly generate ROIs, and finally verified based on YOLOv2. In [236], the algorithm framework was similar to that of [189], however, ROIs were verified by HOG-SVM.

In [237], a stereo camera was equipped to detect side and nearby vehicles, while MMW was used to detect distant and longitudinal vehicles. The vehicle’s attitude and relative speed were estimated by MMW, and feature points were projected to the camera plane to realize jointly multi-directional vehicle detection.

5.2. Learning-Based Methods

Learning-based methods utilized for radar mainly include LSTM and Random Forest, this approach requires the establishment of a training set. Usually, the training data are clustered and calibrated first, then features are extracted and converted into feature vectors to input into the classifier.

In addition to vehicles, this method can also detect other road users such as pedestrians and bicycles. In [238,239], radar data were clustered based on the DBSCAN method, and results of random forest and LSTM in vehicle detection were compared. In [240], ROIs were extracted based on radar echo intensity, and LSTM was used to classify and track targets.

The above works all cluster radar data and convert it into feature vectors, then determines which type of target it belongs to (vehicles, pedestrians, bicycles, etc.), however, a different approach was carried out in [241]. After clustering the data, it directly judged the category based on the characteristics of the clustering points, and then LSTM was utilized to determine the correctness of classification (two-category classification); compared with traditional classification methods, the accuracy of this approach is improved by about 2%.

5.3. End-to-End Methods

The end-to-end methods directly use radar data as input to train a neural network for vehicle detection, whose principle is similar to that of “point-net methods” introduced in the above section. Due to the similarities between radar data and Lidar data, the design of the network often relies on the Lidar end-to-end framework.

In [242], radar data were directly used as input to PointNet++ for vehicle detection, while “PointNets” was used in [243]. In [244], “RTCnet” was established based on CNN with the input of the vehicle distance, azimuth and speed information collected by radar for vehicle detection.

5.4. Advanced Radar-Based Imaging Methods

The aforementioned vehicle detection methods are all based on the principle of radar echo to obtain distance, velocity and other types of information to achieve vehicle detection, however, the detected vehicles cannot be embodied or visualized. If the scene within UGVs’ detection range can be imaged by radar, the accuracy and scalability of the detection algorithm can be improved, and more complete environmental information can be obtained under different weather conditions. Advanced radar-based imaging methods has become a rapidly emerging technique, and it has great potential for improving the stability of UGVs.

In general, advanced radar-based imaging technology is usually applied in the field of aerospace. However, some related research is still carried out among vehicle detection in UGVs, and research about vehicle detection through radar-based imaging technology is summarized below.

High-resolution radar imaging can be achieved through SAR imaging technology. Using a suitable algorithm to generate images from radar data is the basis of applying advanced radar imaging technology to UGVs, algorithms about SAR imaging were reviewed in [245]. However, SAR data are inherently affected by speckle noise, methods related to reducing speckle noise for full polarimetric SAR image were briefly reviewed in [246]. In addition, real-time performance of SAR imaging is also crucial for the efficient operation of UGVs, SAR sparse imaging technologies that will help improve real-time performance were reviewed in [247].

In [248], a squint SAR imaging model was proposed via the backward projection imaging algorithm to perform high-resolution imaging for vehicle detection. In [249], Maximally Stable Extremal Region (MSER) methods were carried out to generate ROIs of vehicles, and a morphological filter was utilized to redefine ROIs; finally, the width-to-height ratio was used for verification to achieve vehicle detection in a parking lot. The same work was also carried out in [250], where the spectral residual was utilized to judge the postures of the vehicles, and vehicle detection was realized by PCA with SVM.

To make a better balance between image resolution and real-time performance, in [251], a hierarchical high-resolution imaging algorithm for FMCW automotive radar via MIMO-SAR imaging technique was designed for improving real-time performance while process imaging resolution, the algorithm was implemented in a UGV for roadside vehicle detection with a run time of 1.17 s/fps.

6. Vehicle Detection: Infrared-Based Methods

Commonly used vision-based vehicle detection algorithms are extremely susceptible to illumination conditions, the efficiency of the algorithm will greatly reduce especially at nighttime or under bad weather conditions. Therefore, the implementation of an infrared camera is crucial to compensate for vehicle detection under poor illumination conditions.

Some researchers used vision-based methods for vehicle detection in infrared images. In [252], edge features of vehicles in infrared images were extracted for vehicle detection. In [253], edge features were also extracted to generate ROIs, then, a vehicle edge template was established to achieve verification, the algorithm was embedded into FPGA and the running time reached 40 ms/fps. In [254], a polar coordinate histogram was established to extract vehicle features based on the polarization characteristics of vehicles in infrared images, with SVM implemented to classification. In [255], HOG was carried out to extract vehicle features, with supervised locality-preserving projections (SLPP) method to reduce dimensionality, and finally, an extreme learning machine was trained for classification.

It should be noted that the resolution of the infrared image is relatively low, consequently, accuracy feature extraction is difficult, some researchers first enhanced the contrast of infrared images in order to achieve better results. In [256], the contrast of infrared images was first enhanced, then ROIs were generated based on image saliency and average gradient method, finally, the confidence level was assessed to verify the ROIs. In [159], the contrast between the vehicle and the background was enhanced through top-hat transformation and bottom hat transformation, then vehicle features are extracted through the Haar method, and ROIs were generated through the improved maximum entropy segmentation algorithm which was finally verified by vehicle prior knowledge (vehicle size and driving position).

Two or more infrared cameras can also be equipped to form an “infrared stereo rig” to obtain depth information. In [51], two infrared cameras were used to form a stereo infrared vision, and a disparity map was generated for vehicle detection under different weather conditions.

With the development of artificial intelligence technology, some researchers have applied the deep learning framework for vehicle detection in infrared images. In [257], improved YOLOv3 was put forward for vehicle detection in infrared images. In [258], SSD was used with the adding of the “incomplete window” module to optimize the structure of datasets to solve the problem of vehicle missed detection.

7. Vehicle Detection: Event-Based Methods

Compared with traditional cameras, millisecond-level time resolution for event cameras makes it have powerful potential for detecting dynamic objects. Due to the low resolution of sensors, event cameras are currently used to detect small-sized targets. For example, in [259], a robot was used as a platform to detect small balls based on clustering and polar coordinate HOG transformation, in [260], a hierarchical model “HOTS” was established to recognize dynamic cards and faces. The application of event cameras for UGVs mainly focuses on SLAM and 3D reconstruction, and the generated 3D maps and models can intuitively represent the vehicles to be detected in the environment, but it is difficult to extract information from the maps or reconstructed models for subsequent planning and decision-making.