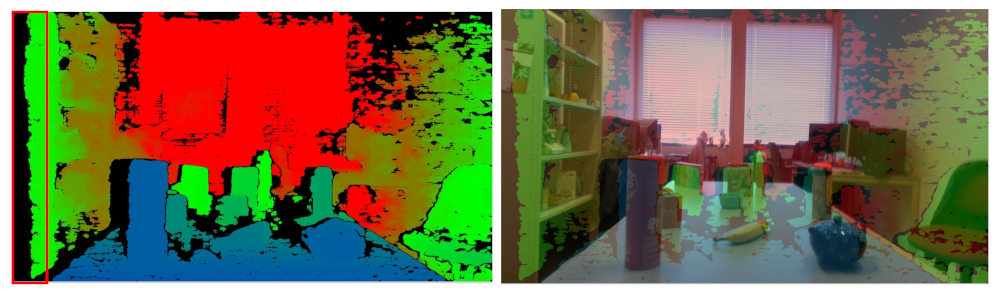

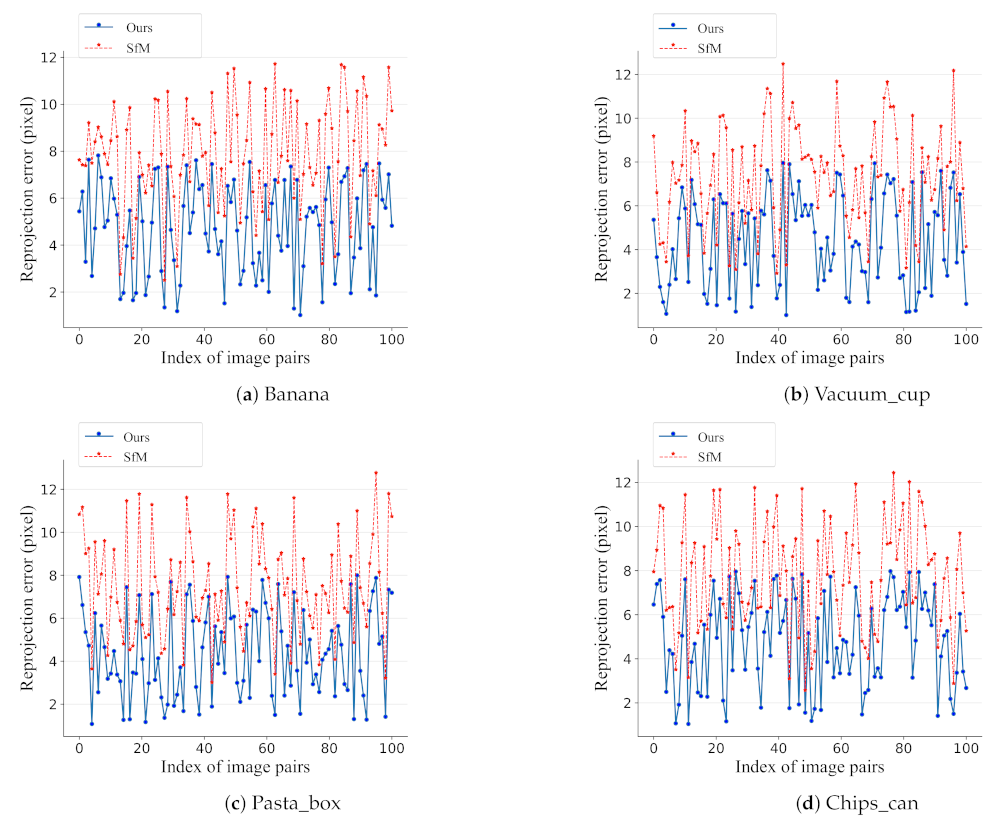

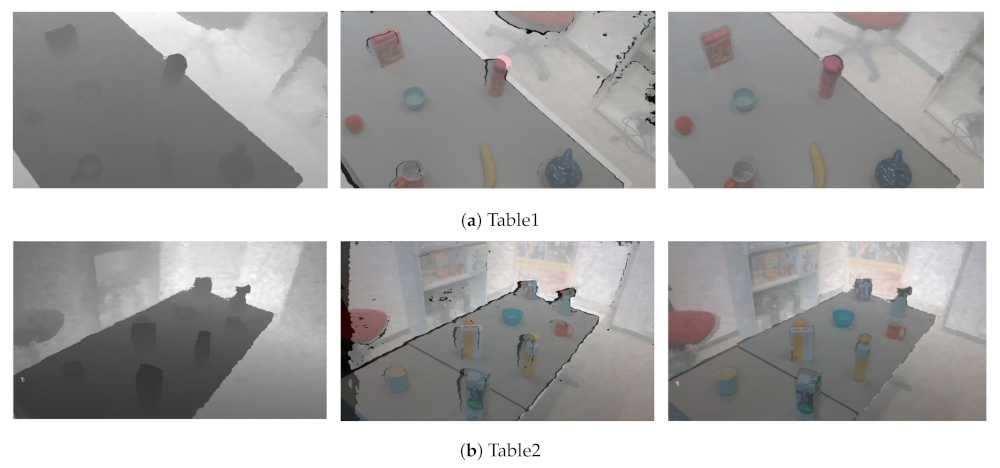

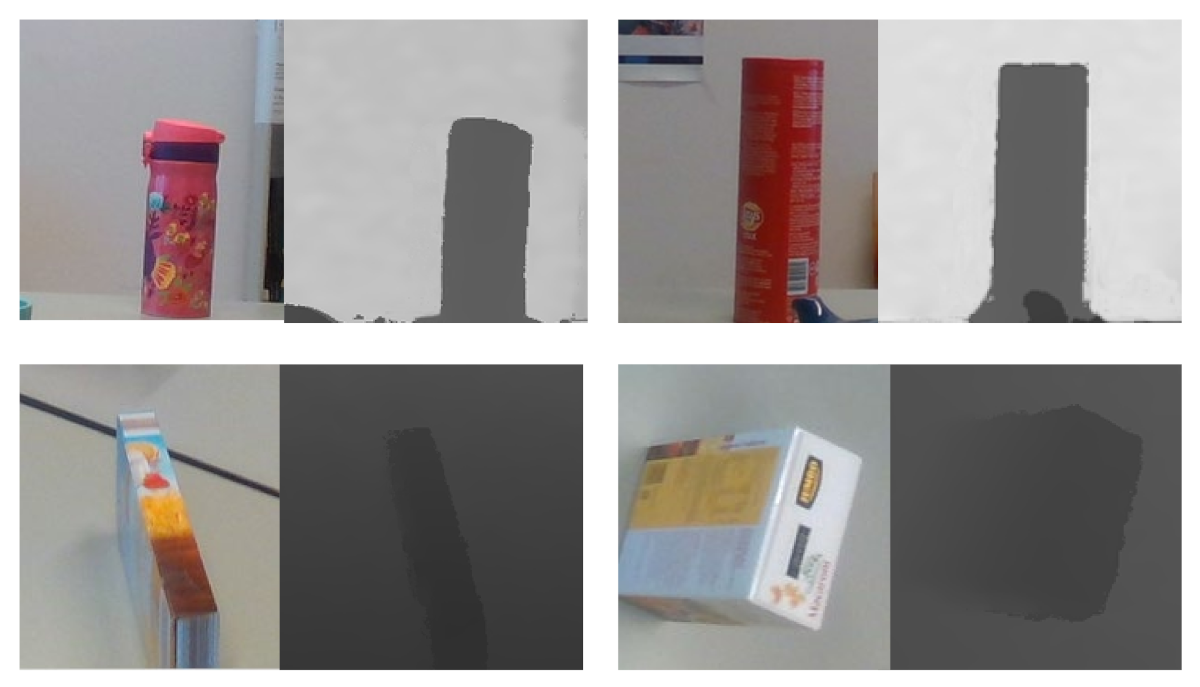

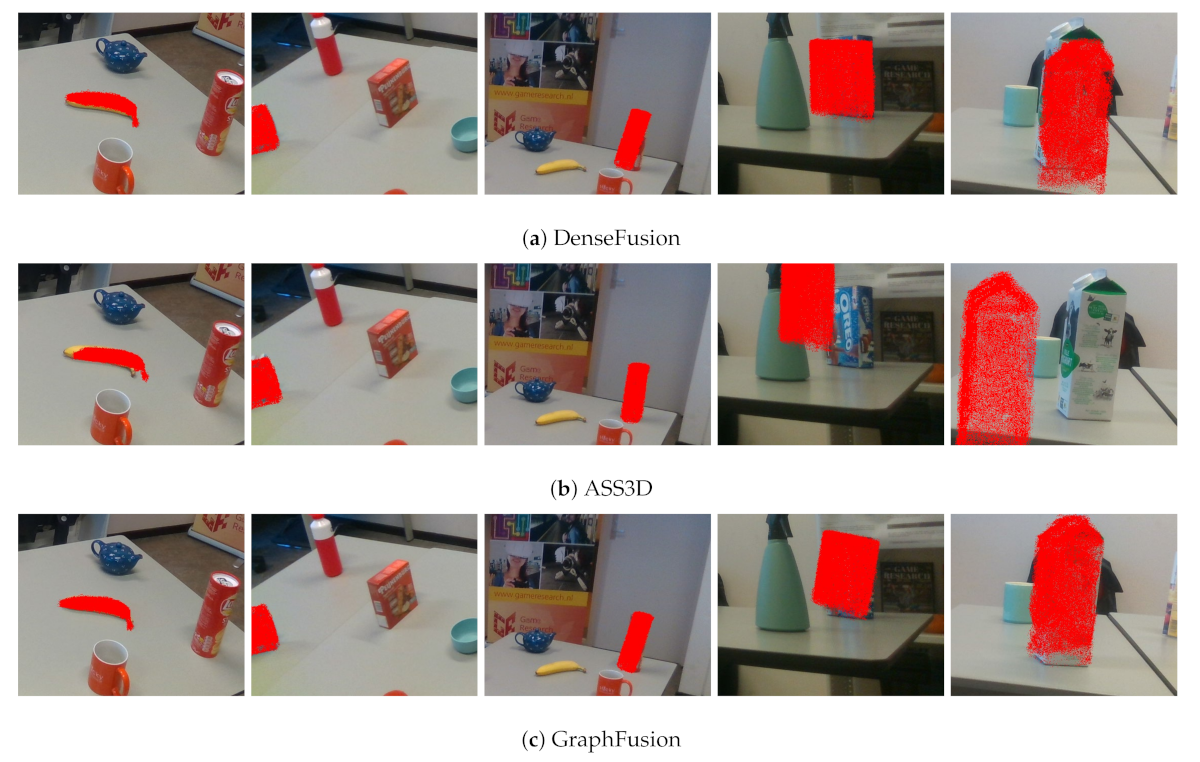

Color images have been successfully used by deep learning for many robotic vision tasks, such as object recognition and scene understanding. However, grasping objects with the exact physical dimensions is a very hard problem that requires not only RGB data, but also extra information. Depth images can provide such a brand-new channel of information and are essential elements in the datasets designed for 6D pose estimation. However, captured depth images often suffer from missing information and misalignment between color-and-depth image pairs due to the inherent limitation of depth cameras. The Intel RealSense D415 camera also has the same limitation. Even though the alignment and hole filling methods from Intel are applied, the quality of the captured depth image is still low, especially when the camera is near the object (see

Figure 6). Therefore, new algorithms are required to improve the quality of captured depth images.

Depth and Color Image Alignment

Because the Intel RealSense D415 camera is based on depth from stereo to calculate depth values, it uses the left sensor as the reference for stereo matching. This leads to a non-overlap region in the field of view of left and right sensors, where no depth information is available at the left edge of the image (see

Figure 6a).

Based on the stereo vision, the depth field of view (DFOV) at any distance (

Z) can be defined by [

40]:

where HFOV is the horizontal field of view of left sensor on the depth module and

B is the baseline between the left and right sensors.

We can see that, when the distance between the scene and the depth sensor decreases, the invalid depth band increases, which results in the increase of the invalid depth in the overall depth image. Besides, if the distance between the object and the depth sensor decreases, the misalignment between the color and depth image also increases, as shown in

Figure 6b.

In previous works, a new depth image is created, which has the same size as the color image but the content being depth data calculated in the color sensor coordinate system, in orde to align the depth image to its corresponding color image. In other words, to create such a depth image, the projected depth data is determined by transforming the original depth data to the color sensor coordinate system based on the transformation matrix between the color and depth sensors. However, it is difficult to obtain the correct transformation matrix, as the depth and color images are defined in different spaces and have different characteristics.

To solve this problem, we first create an estimated depth image for each color image by MVS from COLMAP [

41]. The estimated depth image has better alignment with the color image (see

Figure 7), as it is estimated with the consideration of photometric priors and global geometric consistency. Subsequently, we align the captured depth images to estimated depth images to achieve better alignment between color-and-depth image pairs. For the captured and estimated depth images have the same characteristics, it is easier for us to align the captured depth image to the estimated depth image.

In order to find correspondences between captured and estimated depth images, we compare depth values and normals between them. We should make sure the estimated and captured depth images have the same scene scale in order to compare depth values. However, a fundamental limitation of the estimated depth image is that we do not know the scale of the scene. We use linear regression in a random sample consensus (RANSAC) loop to find the metric scaling factor. After obtaining the scaling factor, we use it to scale the estimated depth image to the captured depth image.

We convert the depth image to a point cloud by camera intrinsic matrix to estimate normals. Subsequently, we compute the surface normal at each point in the point cloud. Determining the normal to a point on the surface can be considered as estimating the normal of a plane tangent to the surface. Thus, this problem becomes a least-square plane fitting estimation problem [

42]. Let

x be a point and

be a normal vector. The plane is represented as

. The distance from a point

in a point set

Q to the plane

is defined by

. Because the values of

x and

fit the least-square sense,

. Subsequently, we define

x as the centroid of

Q:

where

k is the number of points in

Q. Therefore, the solution for estimating the normal

is reduced to analyze the eigenvectors and eigenvalues of a covariance matrix

C created from

Q. More specifically, the covariance matrix

C is expressed as:

where

is a possible weight for point

,

is the

j-th eigenvector of the covariance matrix, and

is the

j-th eigenvalue. The normal

can be computed based on (

4).

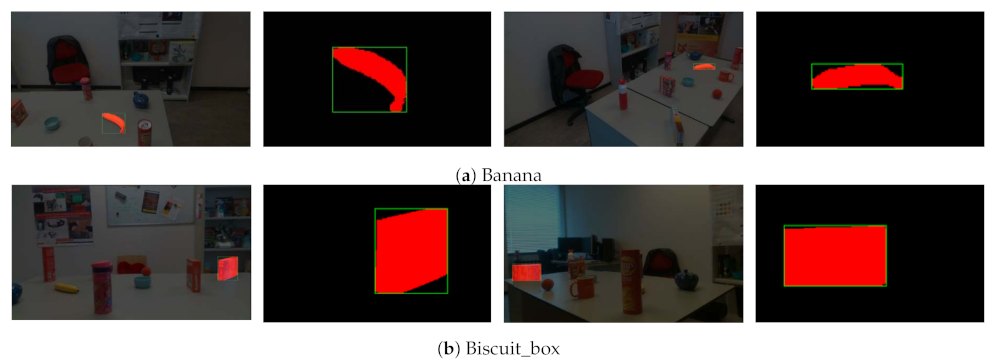

Our aim is to produce better aligned color-and-depth pairs for objects not the overall scene, as the generated dataset is used for object pose estimation. We first extract a patch containing a target object in the captured depth image . Afterwards, we define an offset map whose size is the same as but the content being index differences between and its corresponding patch in the estimated depth image . In ideal conditions, the values in the offset map should be zeros.

The matching process, which is based on PatchMatch [

43], is implemented by first initializing the offset map with random values. Subsequently, we extract a patch

that is based on the offset map as the corresponding patch for

. The pixel

in

is transformed to pixel

in

by:

where

is the index offsets for each pixel in

.

After that, we perform an iterative process which allows good index offsets propagating to its neighbors to update the offset map. The iteration starts with the top left pixel and then an odd iteration starting with the opposite direction. We first calculate the depth differences between pixel and pixel , and the angles between normals and . If the angle is smaller than a predefined threshold, then is saved. Subsequently, if is smaller than its neighbors, we replace the offsets of ’s neighbors with ’s offset. After every iteration, we calculate the sum of . We stop propagation when the change of is negligible. Finally, we map the captured depth image to the estimated depth image based on the offset map. Algorithm 1 summarizes the depth alignment process.

| Algorithm 1 Overview of depth alignment procedure. |

| Input Captured depth image , estimated depth image ; |

| Output: aligned depth map for ; |

| 1: Run RANSAC to find the metric scaling factor. |

| 2: Extract patch in . |

| 3: Calculate scaled depth values and normals of . |

| 4: Initialize offset map O. |

| 5: Find a patch in based on O. |

| 6: for ∈ and ∈ do |

| 7: Calculate depth difference and normal angle between and . |

| 8: Run PatchMatch propagation to update offset map O. |

| 9: Mapping to based on O. |

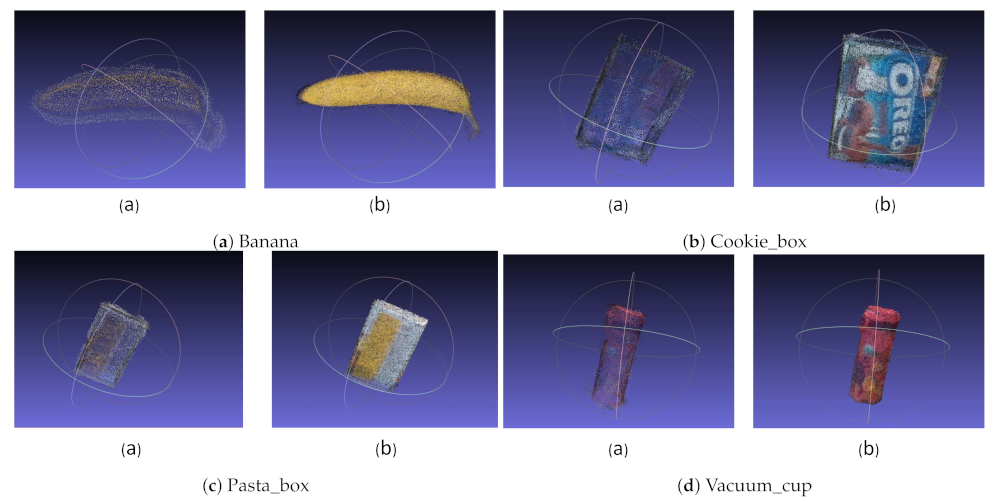

3.4.2. Depth Fusion

Even though the captured depth image is aligned to its corresponding color image, the invalid depth band still exists. Apart from that, it has missing information and noise, especially when reflective or transparent objects are captured. On the other hand, the estimated depth image generated by MVS not only has better alignment with its corresponding color image, but it also provides useful depth information in regions where the depth camera has poor performance. However, the estimated depth image is not able to provide reliable depth information for texture-less or occluded objects, due to the inherent limitations of MVS. Thus, the quality of the estimated depth image is not sufficient for our dataset either.

Because the characteristics of the captured and estimated depth images are complementary, we fuse the captured and estimated depth images together to create a fused depth image. The fused depth image takes advantage of both real captured and estimated depth images, resulting in the improved quality. We generate the fused depth image

by the maximum likelihood estimation:

where

d is the depth value,

is a reliability map and

is a probability map produced from the estimated depth image, and

is a reliability map and

is a probability map that is produced from the captured depth image.

The reliability map

for the captured depth image is computed according to the variation between the depth value and camera’s range. The reliability

of each depth value

d is calculated by

where

and

are the minimum and maximum distances that the depth camera is able to measure. From (

7), we can see that when the distance between the camera and the scene increases, the precision decreases. After calculating the reliability for each pixel, we obtain the reliability map

.

We take the depth image generation into consideration in order to obtain the reliability map

for the estimated depth image. The estimated depth image is generated based on COLMAP, which runs in two stages: photometric and geometric. The photometric stage only optimizes photometric consistency during depth estimation. In the geometric stage, a joint optimization, including geometric and photometric consistency, is performed, which can make sure the estimated depth maps agree with each other in space. We obtain the reliability

of each depth value by comparing the depth values

and

computed from photometric and geometric stages, respectively:

where

is the maximum accepted depth difference that is set to be 50 in our experiments. When the depth value calculated based on geometric consistency has a large difference when compared with the depth value calculated based on photometric consistency, we consider this value is unreliable.

One of the main limitations of the reliability map is that it does not take the idea that spatial neighboring pixels are able to be modeled by similar planes into account. We introduce the probability map in order to solve this problem. To calculate the probability of a depth value in the captured or estimated depth image, we define a (

) support region

S centered at the pixel

i whose depth value is

. For each pixel

, if

j is far from

i, then it is reasonable to associate a low contribution to

j when calculating the probability for

. Following this intuition, the probability

is estimated by

where

is the euclidean distance between

i and

j,

calculated by (

4) accounts for the distance from

j to the plane

, and

and

control the behavior of the distribution. We empirically set

and

to be 4 and 1, respectively. We produce the probability maps

and

after calculating the probability for every depth value in the estimated and captured depth images, respectively.

Finally, with the reliability and probability maps, we generate high-quality fused depth images that are based on (

6).