Abstract

Coded targets are used as control points in various vision measurement tasks such as camera calibration, 3D reconstruction, and pose estimation. By employing coded targets, corresponding image points in multiple images can be matched automatically, which greatly improves the efficiency and accuracy of the measurement. Although coded targets are widely applied, particularly in industrial vision systems, the design of coded targets and their detection algorithms face difficulties, especially under poor illumination and flat viewing angles. This paper presents a novel concentric circular coded target (CCCT) together with its positioning and identification algorithms. The eccentricity error is corrected based on a practical error-compensation model. Adaptive brightness adjustment is employed to address poor illumination, such as overexposure and underexposure. Robust recognition is achieved by perspective correction based on the four vertices of the background area in the CCCT local image. The simulation results indicate that, at a large viewing angle of 70°, the eccentricity errors of the larger and smaller circles are reduced by 95% and 77%, respectively, after correction by the proposed method. The wing deformation experiment demonstrates that, when the wing is loaded with a weight of 6 kg, the error of the vision method based on the corrected center is reduced by up to 18.54% compared with the vision method based only on the ellipse center. The proposed design is highly applicable, and its detection algorithms achieve accurate positioning and robust identification even in challenging environments.

1. Introduction

Recently, vision measurement has become widely employed owing to the rapid advancement of image acquisition devices and computer vision techniques, and to its advantages: simple equipment, ease of use, low cost, strong environmental adaptability, speed, non-contact operation, and high accuracy [1,2,3,4]. Vision measurement yields key information about objects, including their position, shape, attitude, deformation, and dynamics. Consequently, the method has matured and found extensive application in fields such as industry [5,6,7], civil infrastructure [8,9], food [10], medicine and health [11,12], transport [13,14,15], and security surveillance. In particular, vision-based measurement techniques have attracted increasing attention in aerospace research and applications [16,17,18,19].

In vision measurement, extracting image points and matching them [20] across images, whether captured by different sensors or by the same sensor at different times, are key foundational steps. However, this process is extremely time-consuming and prone to incorrect matching of feature points [21]. To address these problems, methods based on attaching artificial coded targets [22] have been developed. Coded targets carry a special “coded” symbol that gives each target a unique identity, so they can be automatically tracked and matched in multi-view or multi-time images, which significantly improves efficiency and accuracy. Therefore, artificial coded targets have been used extensively in vision measurements. Scaioni et al. [23] have used coded targets as ground control points to derive calibration parameters. Liu and Dong et al. [24] have proposed multicamera systems to measure the displacements of large-span truss string structures; in their work, optical coded targets were adhered to the structure to obtain the displacements at key points during a progressive collapse experiment. Huang [25] has performed surface measurements of a mesh antenna using coded targets. Liu et al. [18] have introduced coded targets in photogrammetric systems to track aerospace vehicles successfully during a series of water impact tests and wind tunnel tests. Xiao [26] has used coded targets for vision-based deformation measurement of the steel structure beam of a transmission tower foundation. Furthermore, Xiao et al. [27] have presented an accurate stereo vision system for industrial inspection, in which coded targets are employed to calibrate the vision system conveniently and obtain higher accuracy.

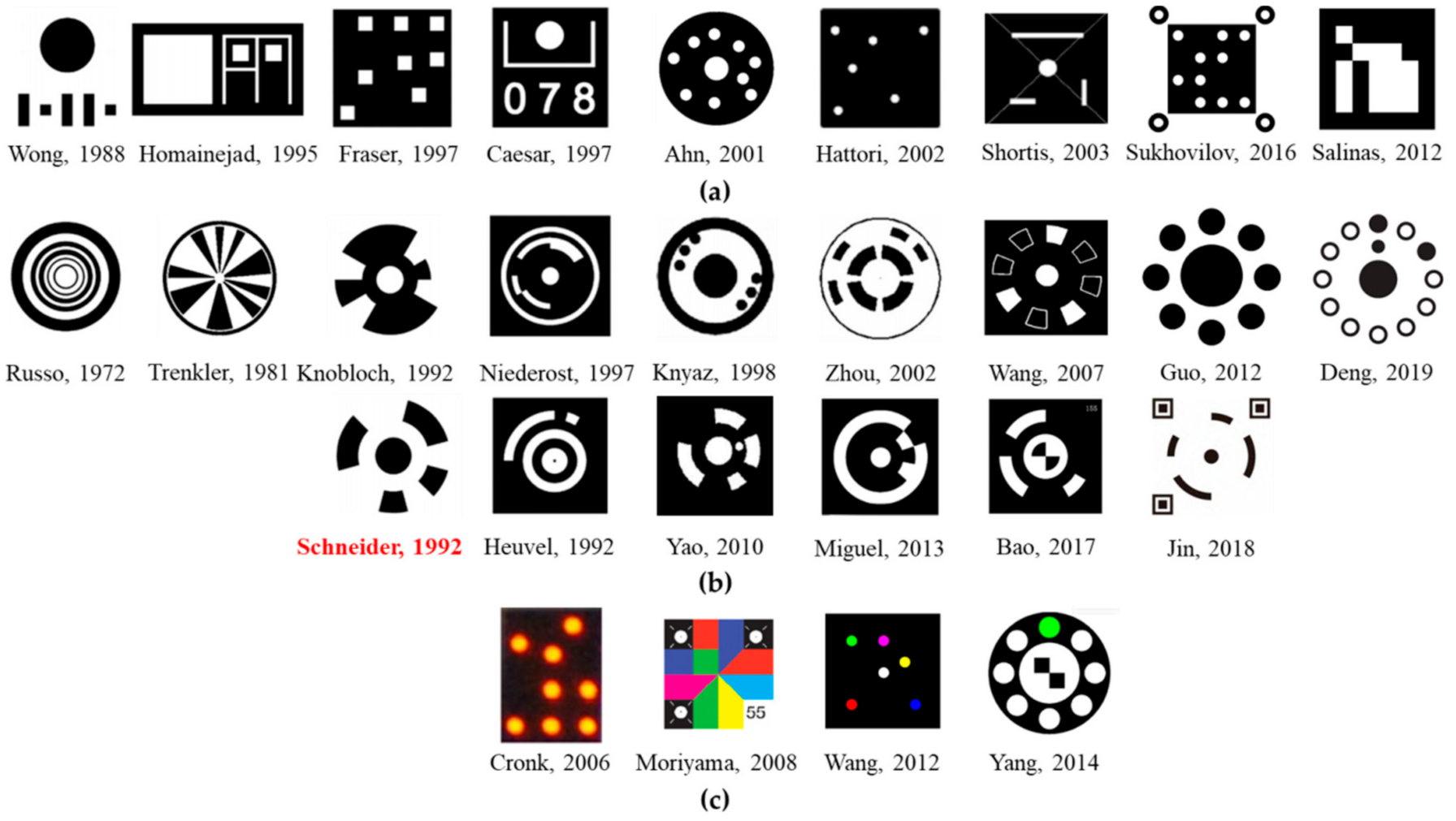

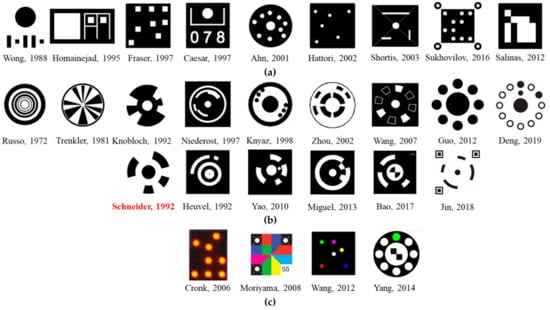

According to previous studies, the use of vision measurements based on coded targets has been steadily increasing. Correspondingly, accuracy requirements have risen, and various coded-target schemes with their positioning and identification algorithms have been proposed to provide automatic detection, identification, and measurement. Figure 1 shows several common coded targets, which can be classified into two categories: dot-dispersing and centripetal. As shown in Figure 1a, a dot-dispersing target is a unique, identifiable pattern consisting of discrete circular or rectangular targets, or numerals [28,29,30,31,32,33,34,35]. Figure 1b shows the centripetal form, which consists of a center circle or diagonal target surrounded by a pattern such as concentric rings with different widths [35], sector blocks with different areas [35], regularly arranged circles [36,37,38,39], or concentric ring segments [40,41,42,43,44,45,46,47,48,49,50]. Compared with the dot-dispersing form, the centripetal form is more versatile and popular. Because a centripetal coded target is located by the centroid of its central positioning circle or diagonal target, it can be detected quickly and precisely through ellipse fitting or least-squares matching. Moreover, the unique ID of each coded target is embedded in the widths of concentric rings, the angles of concentric ring segments, or the arrangement of regularly arranged circles, which facilitates accurate identification. In addition, to exploit the color information provided by color digital cameras, color-coded targets [51,52,53] have been proposed for vision measurements, as shown in Figure 1c. However, usable color information depends on the ambient illumination and on the passively viewed targets. This is often a disadvantage for vision measurement; hence, studies on color-coded targets are scarce.

Figure 1.

Various types of coded targets: (a) dot-dispersing form; (b) centripetal form; (c) color-coded targets.

Amongst the centripetal coded targets in Figure 1b, Schneider’s design is primarily applied in industrial vision measurements owing to its simple structure, sufficient number of coding arrangements, and the small impact of distortion on its recognition. This kind of coded target has remained in wide use since the 1990s, and many scholars have studied how to improve its design, positioning, and decoding. Wang et al. [42] have presented an improved encoding method based on Schneider’s design and a new object recognition algorithm that exploits the geometric features of the new coded targets. Duan [43] has improved the decoding accuracy of coded targets using a self-adaptive binarization method. Liu et al. [44] have proposed an automatic and rapid algorithm for Schneider’s coded targets. Huang [45] has developed a method to accurately detect the center circles of coded targets based on certain criteria. Chen and Zhang [46] have proposed a SIFT-based model for recognizing motion-blurred coded targets on moving objects. Miguel et al. [47,48] have presented a series of new coded targets, whose geometric structures are similar to Schneider’s design, together with their identification and decoding algorithms. Bao et al. [49] have developed a robust recognition and accurate locating method for a circular coded diagonal target, in which a diagonal target is added inside the central circle of Schneider’s design. Jin [50] has added three locators to Schneider’s coded target to achieve precise positioning and to increase the number of targets.

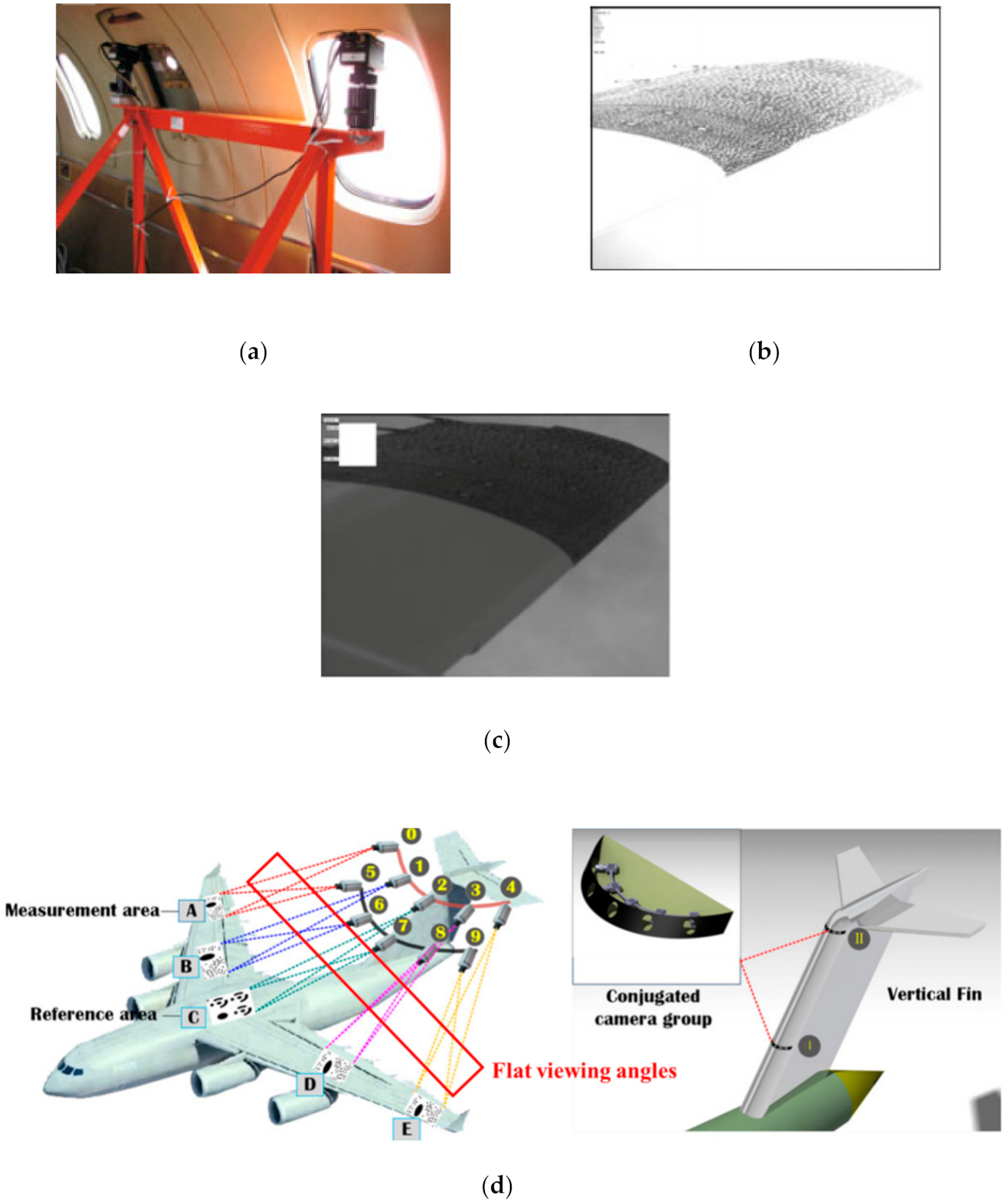

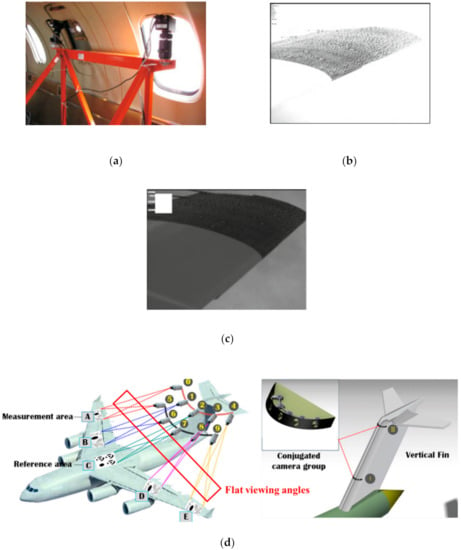

We note that most of the studies above have tested the performance of their coded-target designs and detection algorithms only under laboratory conditions, which provide stable lighting and favorable viewing angles. Challenging conditions that arise when vision methods based on coded targets are applied in practice, such as changing and non-uniform illumination and flat viewing angles, were not considered in these works. The example in Figure 2 was taken from a real flight test for wing deformation measurement, in which significant light changes often produced overexposed (Figure 2b) and underexposed (Figure 2c) images. These situations make it difficult to locate and identify a coded target.

Figure 2.

Vision system for an in-flight wing deformation measurement [54,55]: (a) the camera installed in the passenger compartment; (b) overexposed images caused by the changing illumination during in-flight recording; (c) underexposed images; (d) the camera installed on the vertical fin to observe the wing speckle pattern and coded targets. In both cases, the wing is observed at a flat viewing angle.

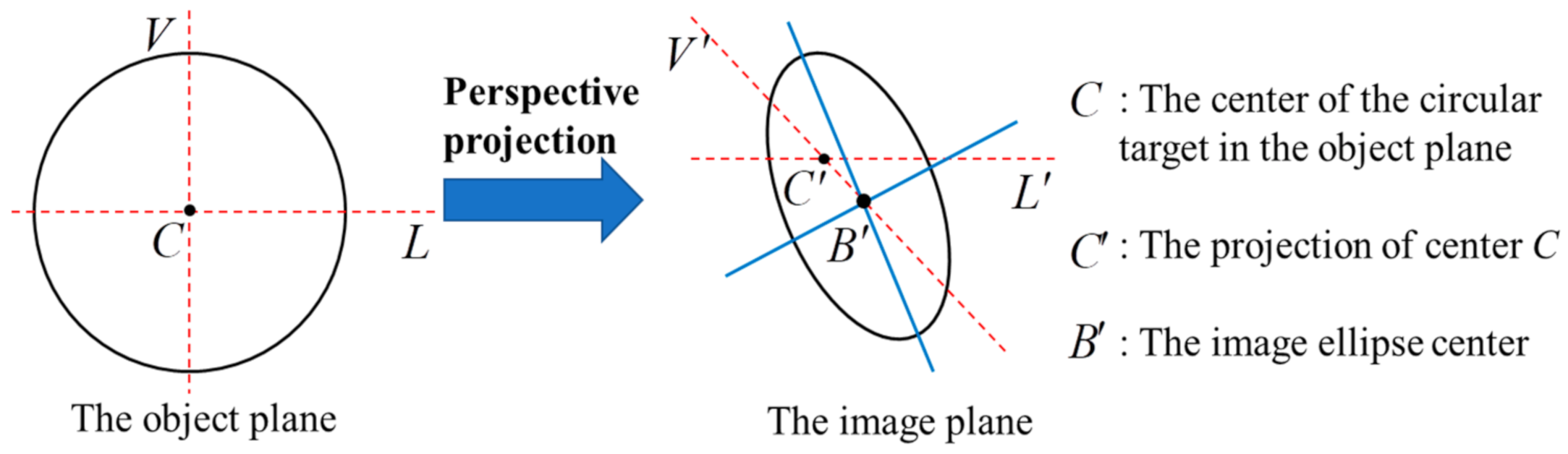

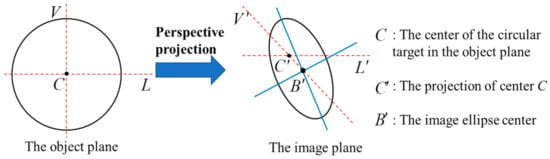

Apart from poor illumination, another critical issue is the flat viewing angle, under which the object observed by the camera appears very flat. When measuring wing deformation, the possible installation locations of the image acquisition equipment, i.e., the camera, are very limited: on top of the fuselage, in the passenger compartment (Figure 2a [54]), or on the vertical fin of the aircraft (Figure 2d [55]). Consequently, the angle between the plane of the coded target attached to the wing and the camera optical axis is tiny, and the wing is observed at a flat angle. A flat viewing angle causes severe distortion in the perspective images of the coded target. Strong perspective distortion may lead to inaccurate positioning and low recognition rates, thereby decreasing the measurement accuracy, or even cause the measurement to fail. Moreover, a flat viewing angle also introduces a large eccentricity error [56,57]; that is, the fitted ellipse center does not coincide with the projected circle center of the coded target, as shown in Figure 3.

Figure 3.

Eccentricity error of a circular target caused by perspective imaging. C is the center of the circular target in the object plane. The true projected center of the circular target and the center of its image ellipse do not coincide; the deviation between these two points is defined as the eccentricity error.

Hence, two major issues, i.e., flat viewing angles and harsh lighting conditions, hamper practical applications of vision measurement based on coded targets; both are addressed in this study.

The main contributions of this study are as follows.

- We have designed a novel concentric circular coded target (CCCT) in which a concentric ring is employed to correct the eccentricity error. This improvement aims to meet the requirements of flat viewing angles when measuring important information (aero-elastic deformation, attitude, position, etc.) of objects such as wind tunnel models, flight vehicles, rotating blades, and other aerospace structures, in both ground and flight testing.

- We have proposed a positioning algorithm to precisely locate the true projected center of the CCCT. In this algorithm, adaptive brightness adjustment is used to address poor illumination, and the eccentricity error caused by the flat viewing angle is corrected based on a practical error-compensation model.

- We have presented an identification approach that makes the use of CCCTs more robust in challenging environments, especially under unfavorable illumination and flat viewing angles.

This paper is organized as follows: Section 2 presents a preliminary test to evaluate the applicability of Schneider’s coded targets under challenging conditions. Section 3 describes the design of the proposed coded target and the methods to locate and identify it. Section 4 presents the simulation and real experiments that verify the design and methods proposed in Section 3. Finally, Section 5 summarizes this study.

2. Preceding Tests for Estimating the Applicability of Schneider’s Coded Targets

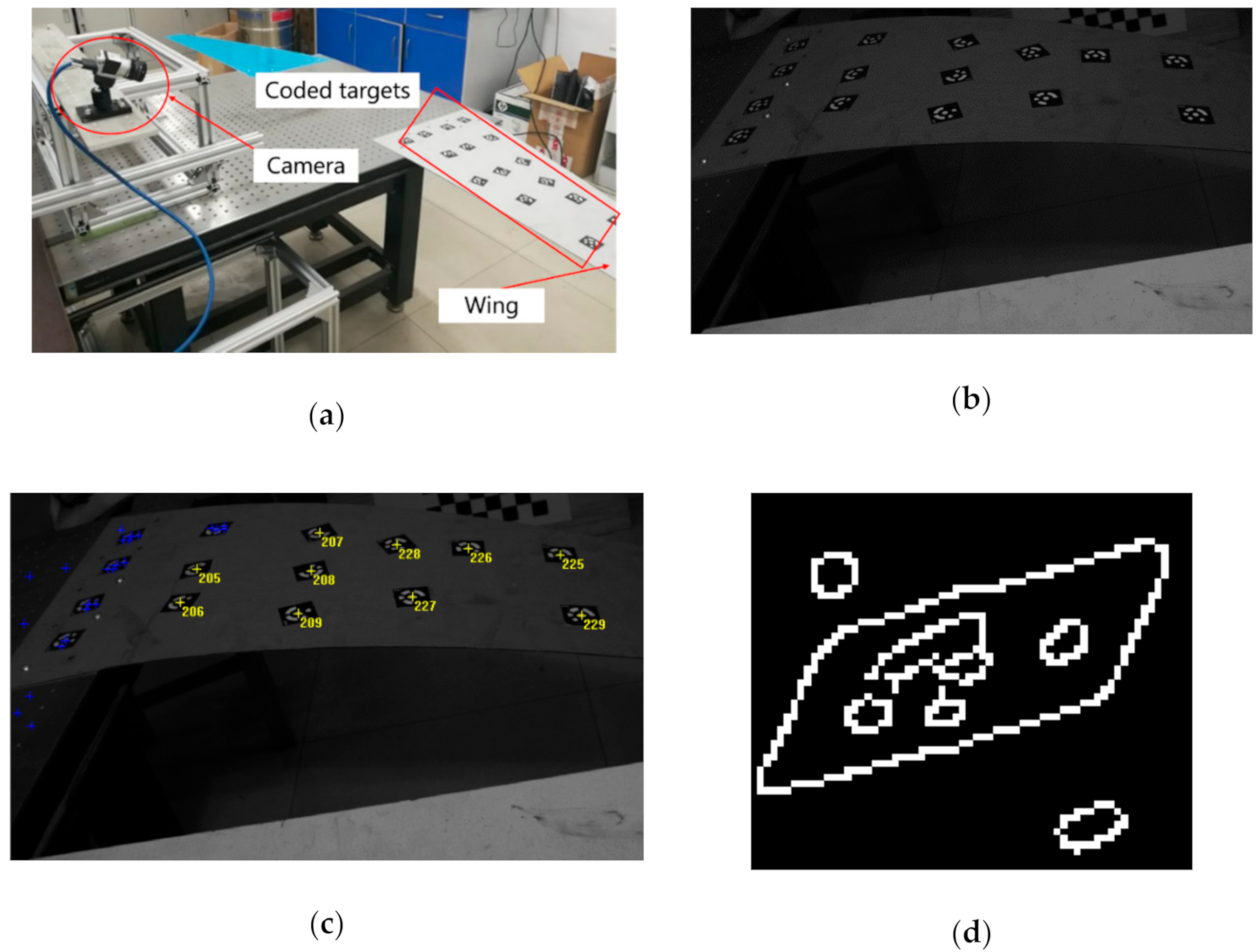

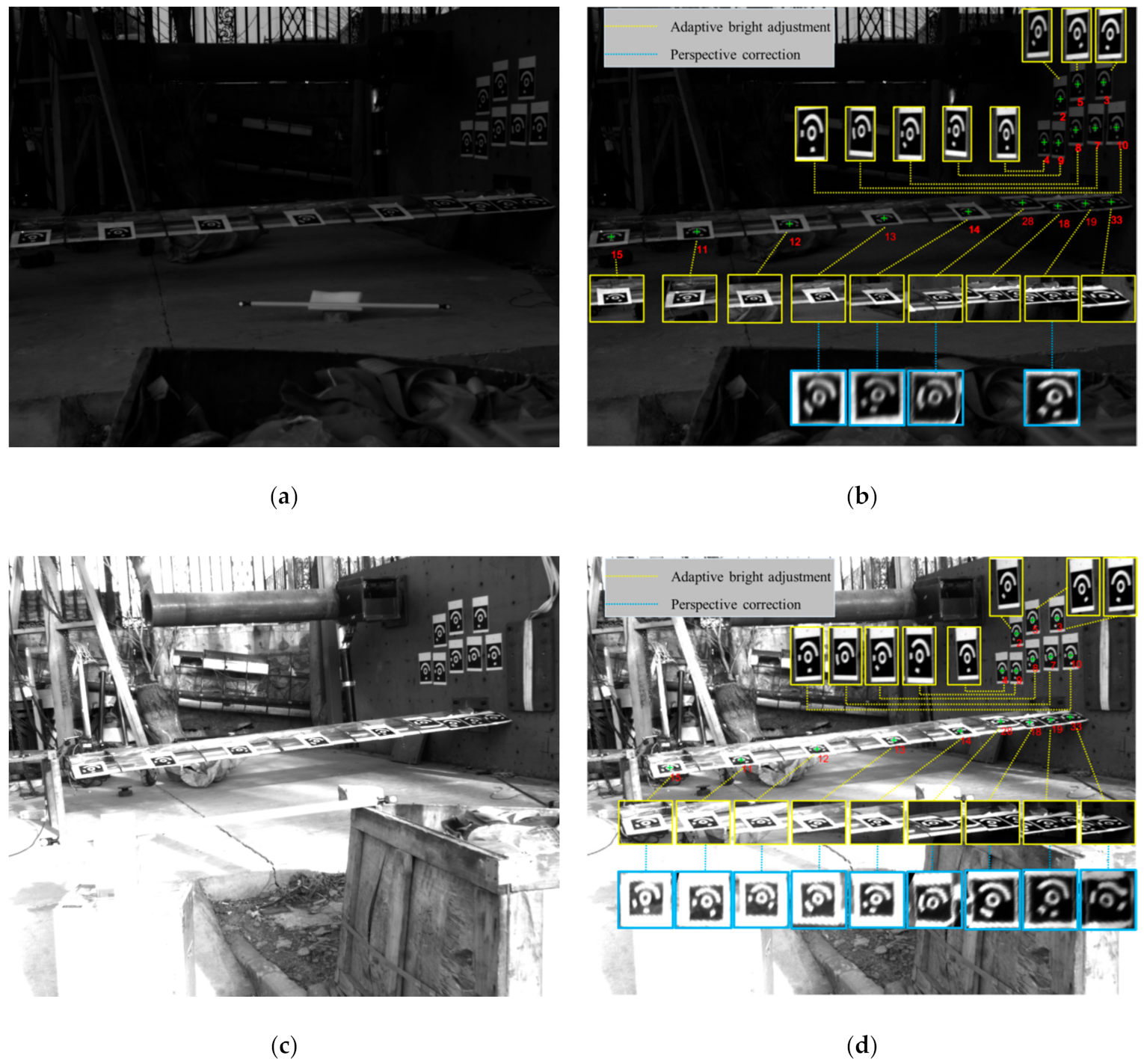

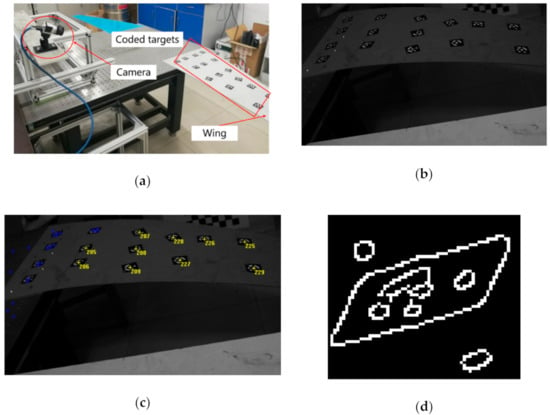

To estimate the performance of Schneider’s design for vision measurement under challenging conditions, the case in which the camera is installed on the vertical tail to measure wing deformation has been simulated. As shown in Figure 4a, a digital camera (Basler acA1300-200um with a global shutter) is mounted on rigid support links to acquire images of an aluminum alloy flat wing. Figure 4b shows an original image (1080 × 1024 resolution) containing 15 coded targets attached to the wing surface, taken with underexposure against a very dark background. The viewing angle becomes flatter toward the top left of the image, where the imaging conditions become increasingly unfavorable.

Figure 4.

The experiment to verify the performance of Schneider’s design: (a) Experimental setup; (b) The real image taken with underexposure and a very dark background; (c) The result of identifying the coded targets using commercial software; (d) The Canny edge detection result for the coded target in the upper left corner.

Figure 4c shows the coded targets identified by commercial software (XJTUDP [13]). Five of the 15 coded targets, those far away from the camera, are not recognized. Figure 4d shows the result of Canny edge detection for the coded target in the upper left corner. It suggests that the identification failure can be directly attributed to adhesion between the central positioning circle and the coded band, which is caused by strong perspective distortion under the flat viewing angle. Poor illumination also poses a major challenge in the locating step.

3. Methods

3.1. Design of the Concentric Circular Coded Target

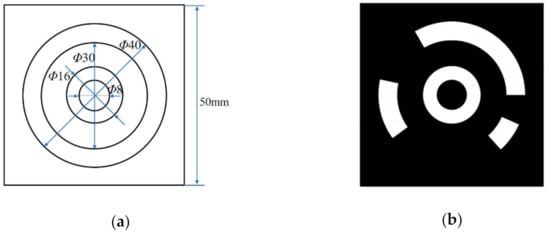

To address decoding failures resulting from poor illumination and flat viewing angles, we propose a 15-bit concentric circular coded target (CCCT) based on Schneider’s design, together with its positioning and recognition methods (see Section 3.2 and Section 3.3).

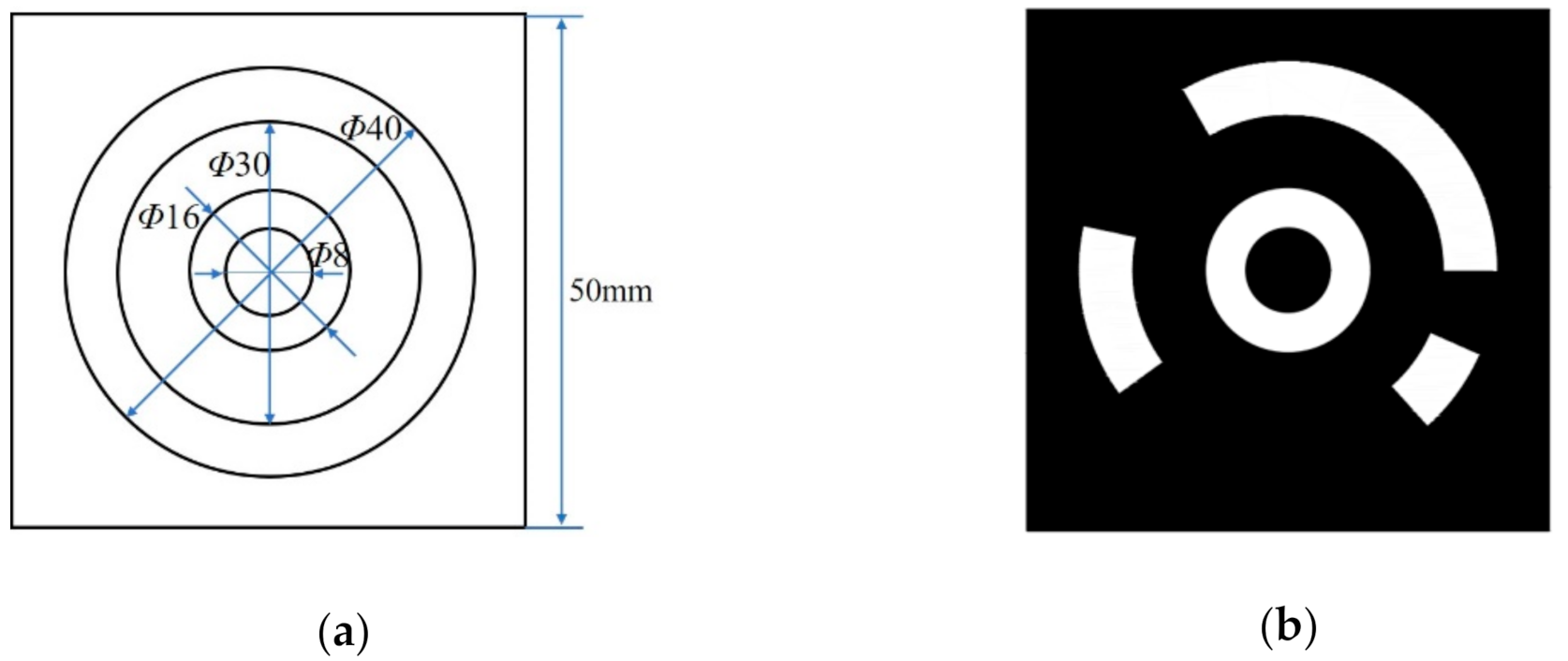

Figure 5 shows the design scheme of the CCCT. Each CCCT consists of two parts: a central positioning ring and a concentric circular coded band. The diameters of the two central concentric circles are 8 and 16 mm, respectively. The inner and outer circular edges of the coded band are 30 and 40 mm in diameter, respectively. The black square containing the CCCT has a side length of 50 mm. These geometric parameters can be regarded as a set of basic proportions; in practice, users can enlarge or reduce them according to the shooting distance. In [57], Liu et al. proposed a practical method for selecting the size of circular coded targets to guarantee high-precision vision measurement.
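Because the dimensions above act as a set of proportions, resizing a CCCT amounts to multiplying every dimension by one factor. The sketch below does that and, assuming an ideal pinhole camera (an assumption for illustration, not the size-selection method of [57]), estimates how many pixels the inner circle of the central ring spans in a front view. All function names, the lens, and the pixel pitch are hypothetical.

```python
# Basic CCCT proportions from Figure 5, in millimetres.
BASE_MM = {
    "ring_inner_diameter": 8.0,
    "ring_outer_diameter": 16.0,
    "band_inner_diameter": 30.0,
    "band_outer_diameter": 40.0,
    "background_side": 50.0,
}


def scale_ccct(scale):
    """Scale every dimension by one factor so that all proportions are preserved."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    return {name: size * scale for name, size in BASE_MM.items()}


def projected_diameter_px(diameter_mm, distance_mm, focal_mm, pixel_mm):
    """Front-view image diameter in pixels under an ideal pinhole camera (assumed model)."""
    return focal_mm * diameter_mm / (distance_mm * pixel_mm)


# Example: a target printed at twice the basic size, seen from 2 m with a
# hypothetical 16 mm lens and 4.8 µm pixels.
dims = scale_ccct(2.0)
inner_px = projected_diameter_px(dims["ring_inner_diameter"], 2000.0, 16.0, 0.0048)
```

Scaling all dimensions together keeps the ring-to-band proportions fixed, which is what allows the detection thresholds to be reasoned about in relative terms.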

Figure 5.

(a) Design scheme of the concentric circular coded target (CCCT); (b) One example of the CCCTs.

The improvement lies mainly in two aspects. First, we increase the ratio of the area between the central positioning circle and the circular coded band in the CCCT, which addresses the adhesion between the central positioning circle and the circular coded band caused by flat viewing angles and poor illumination. Second, we change the central positioning dot to a ring, which not only allows the eccentricity error caused by perspective distortion to be corrected, improving the positioning accuracy, but also enables perspective correction of the CCCT local image, increasing the recognition rate.

3.2. High-Precision Positioning of Concentric Circular Coded Targets

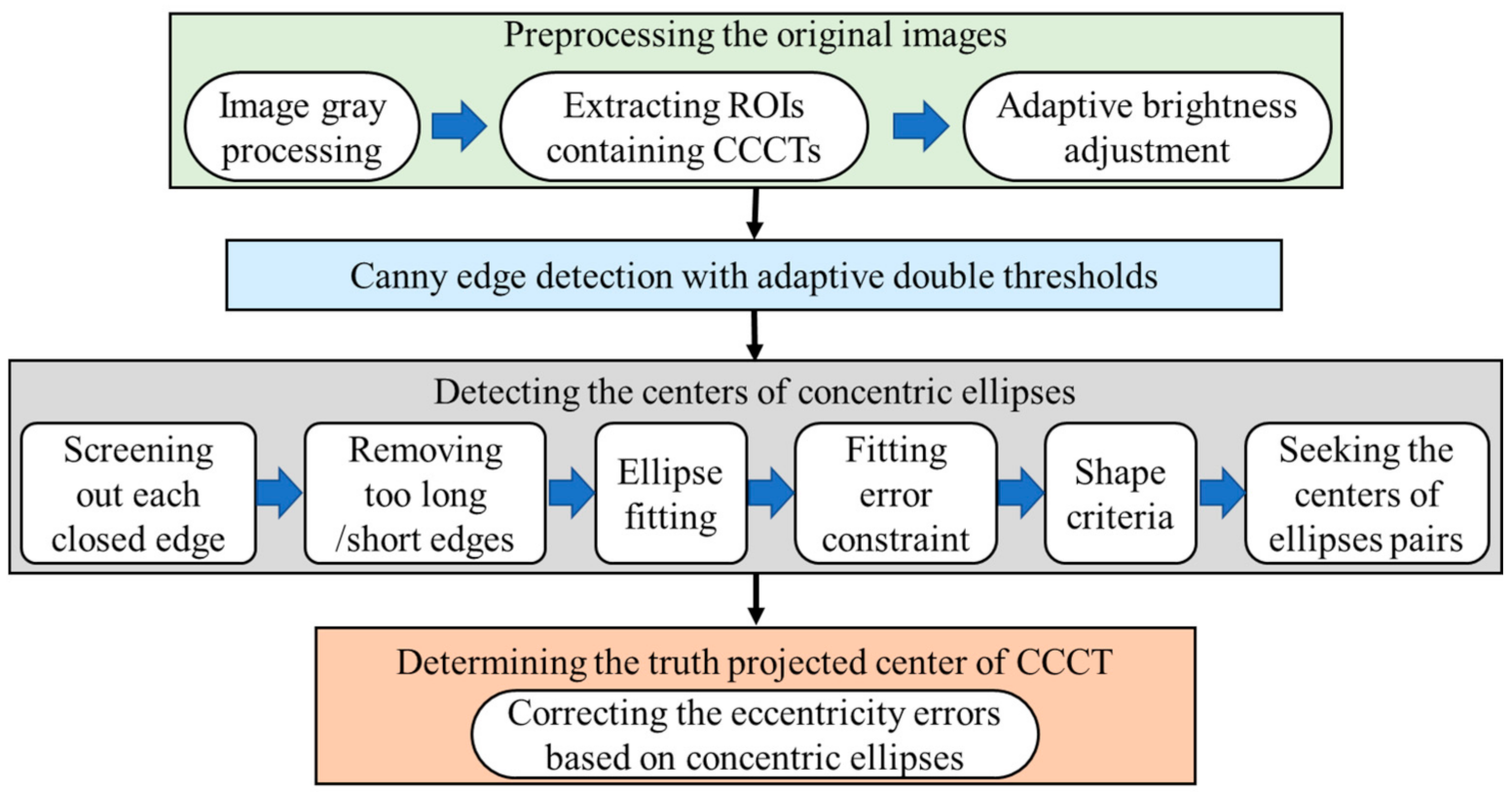

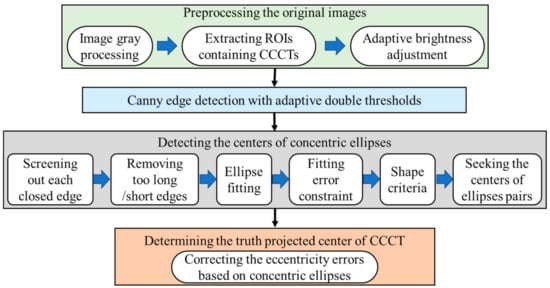

CCCTs must be both accurately located and automatically identified, and a coded target must be located before it can be identified. Figure 6 schematically shows our workflow for locating the true projected center of a CCCT.

Figure 6.

The workflow to locate the CCCTs.

3.2.1. Preprocessing the Original Images

Image preprocessing consists of three parts: grayscale conversion; coarse localization to extract the regions of interest (ROIs), i.e., the local images each containing one CCCT; and adaptive brightness adjustment. First, if the original image is a color image, it is converted into a gray image.

Next, the ROIs containing CCCTs are extracted. We convert the gray image into a binary image, construct a rectangular window of suitable size, and search for areas containing a high-intensity circle or ring. The rectangular window should be large enough to contain any possible CCCT. The search is repeated until all such areas are marked. For continuous image acquisition, the CCCT locations in the previous frame can be used to extract the ROIs of the current frame.
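The coarse ROI search can be sketched as a connected-component pass over the binary image. This minimal version is an assumption, not the authors' implementation: it labels 4-connected bright regions with a flood fill and pads each region's bounding box by a hypothetical `margin` so that the whole CCCT fits. `extract_rois`, `threshold`, `margin`, and `min_pixels` are illustrative names.

```python
from collections import deque


def extract_rois(gray, threshold, margin, min_pixels=1):
    """Return padded, inclusive bounding boxes (x0, y0, x1, y1) of bright 4-connected blobs."""
    h, w = len(gray), len(gray[0])
    binary = [[gray[y][x] > threshold for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    rois = []
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or seen[y][x]:
                continue
            # Flood-fill one bright region and collect its pixel coordinates.
            queue = deque([(x, y)])
            seen[y][x] = True
            xs, ys = [], []
            while queue:
                cx, cy = queue.popleft()
                xs.append(cx)
                ys.append(cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(xs) < min_pixels:
                continue  # too small to be a positioning circle or ring
            # Pad the blob's box (hypothetical margin) and clip it to the image.
            rois.append((max(min(xs) - margin, 0), max(min(ys) - margin, 0),
                         min(max(xs) + margin, w - 1), min(max(ys) + margin, h - 1)))
    return rois
```

In practice the boxes would still need size checks, because segments of the coded band also show up as bright regions; for video, the boxes from the previous frame could simply be reused as the text suggests.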

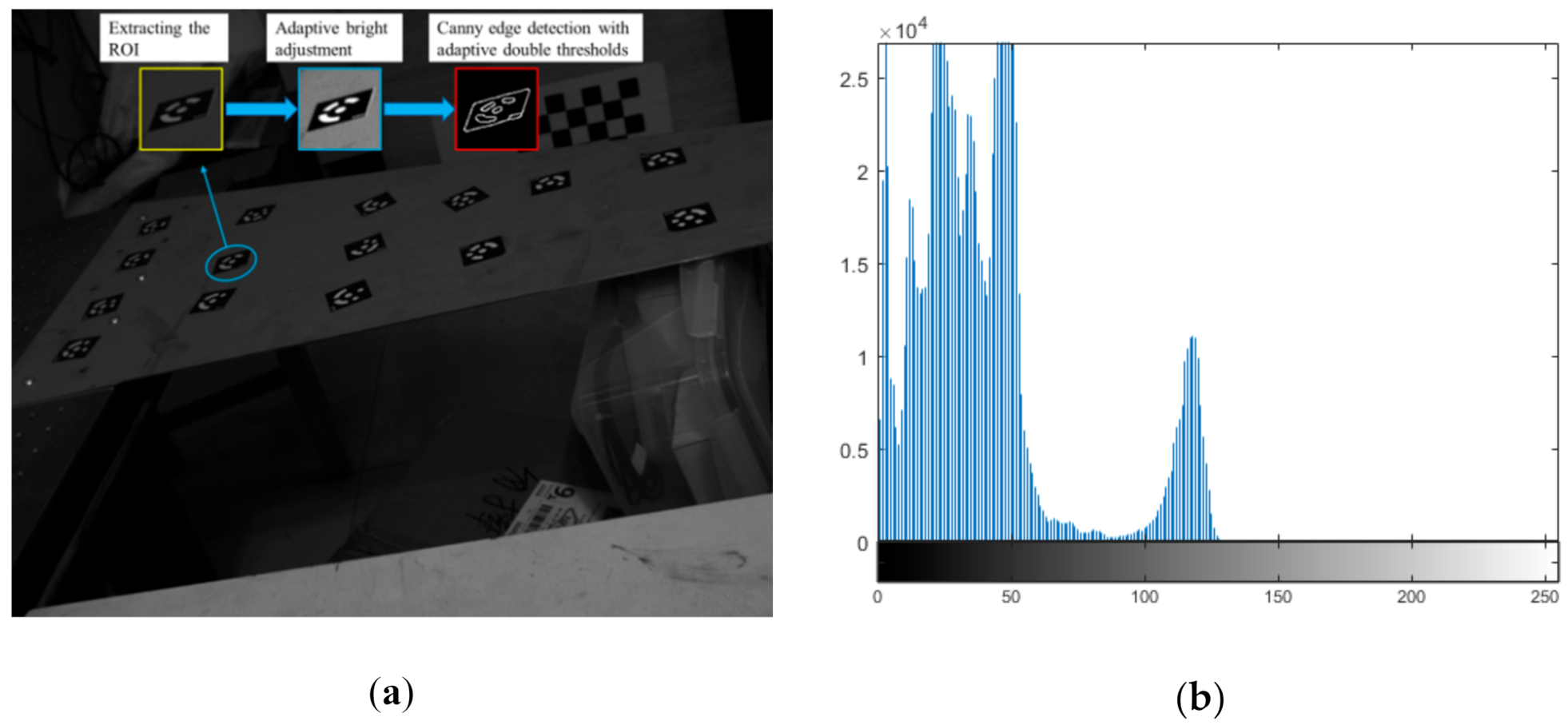

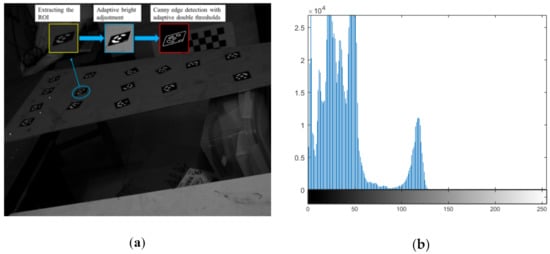

If the gray histogram of an extracted ROI is concentrated in a narrow range, image details are unclear and the contrast is low. For instance, when the main peak of the histogram lies too far to the left or right, the local image is underexposed or overexposed, respectively. In this case, an adaptive Gamma correction algorithm [58] is applied to adjust the brightness and enhance the coded-target information in the image. The stretchlim function of MATLAB is employed to find, adaptively, a segmentation threshold vector for the Gamma correction. One underexposed image from the experiment in Section 2 is taken as an example, as shown in Figure 7a, with its gray histogram plotted in Figure 7b. The histogram is clearly skewed to the left. For the CCCT in the second row and second column of Figure 7a, the inset with the light blue border shows the result of brightness adjustment by adaptive Gamma correction: the brightness and contrast of the local image are enhanced, and the visual quality is improved.
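A rough sketch of the brightness adjustment follows. It takes the gray levels at the 1% and 99% points of the sorted ROI pixels as stretch limits (similar in spirit to stretchlim, but not its exact implementation), then applies a gamma chosen by a hypothetical rule: the gamma that maps the mean normalized gray level to mid-gray. This rule is an assumption for illustration and is not the algorithm of [58].

```python
import math


def stretch_limits(pixels, low_frac=0.01, high_frac=0.99):
    """Gray levels at the low/high cumulative fractions of the sorted pixels."""
    ordered = sorted(pixels)
    n = len(ordered)
    lo = ordered[min(int(low_frac * n), n - 1)]
    hi = ordered[min(int(high_frac * n), n - 1)]
    return lo, hi


def adaptive_gamma(pixels, max_value=255):
    """Stretch between the limits, then apply a gamma that moves the mean toward mid-gray."""
    lo, hi = stretch_limits(pixels)
    if hi <= lo:
        return list(pixels)  # flat patch: nothing to stretch
    # Normalize to [0, 1] between the limits, clipping the outlying tails.
    norm = [min(max((p - lo) / (hi - lo), 0.0), 1.0) for p in pixels]
    mean = sum(norm) / len(norm)
    # Hypothetical rule: choose gamma so that mean ** gamma == 0.5.
    # gamma < 1 brightens an underexposed patch; gamma > 1 darkens an overexposed one.
    gamma = math.log(0.5) / math.log(mean) if 0.0 < mean < 1.0 else 1.0
    return [round(max_value * v ** gamma) for v in norm]
```

The function works on a flattened list of ROI pixels; reshaping back to rows is left out to keep the sketch short.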

Figure 7.

Result of adaptive Gamma correction: (a) Enhanced local image; (b) Histogram of the original image.

3.2.2. Canny Edge Detection with Adaptive Double Thresholds

In previous works, most researchers have detected ellipses using the Canny method [59] with given double thresholds. Manual selection of the thresholds limits the applicability of such methods. To meet the requirements of challenging lighting conditions, Canny edge detection with adaptive double thresholds is employed to extract the contour points in the local gray image. The high and low thresholds can be obtained with the following expressions:

where TH and TL are the high and low thresholds of Canny edge detection, m and n are the width and height of the image, and I(x, y) is the gray value of pixel (x, y). Further, G(x, y) is defined as

where gx(x,y) and gy(x,y) are horizontal and vertical gradients of pixel (x, y), respectively. They can be calculated as

Therefore, the appropriate thresholds for the images in changing environments can be obtained automatically. Using a pair of thresholds TH and TL, the Canny edge detection algorithm has been used to extract strong edge pixels. In Figure 7a, the inset with red border shows the result of Canny edge detection with adaptive double thresholds.
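To illustrate how image-dependent thresholds arise from the gradient field, the sketch below computes gx and gy with Sobel kernels and G = sqrt(gx² + gy²). It then applies a hypothetical rule, TH = k times the mean of G over the m × n pixels and TL = 0.4 TH; this rule and the parameters `k` and `ratio` are assumptions for illustration, not the paper's threshold expressions.

```python
import math


def sobel_magnitude(img):
    """Gradient magnitude G = sqrt(gx^2 + gy^2) on interior pixels (border set to 0)."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel responses.
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            mag[y][x] = math.hypot(gx, gy)
    return mag


def adaptive_thresholds(img, k=1.5, ratio=0.4):
    """Hypothetical rule: TH = k * mean(G) over all m*n pixels, TL = ratio * TH."""
    mag = sobel_magnitude(img)
    m, n = len(img[0]), len(img)
    mean_g = sum(sum(row) for row in mag) / (m * n)
    th = k * mean_g
    return th, ratio * th
```

Because both thresholds scale with the mean gradient, a dim, low-contrast ROI gets proportionally lower thresholds than a bright one, which is the behavior the adaptive scheme is after.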

3.2.3. Detecting the Centers of Concentric Ellipse Pairs

- Screening out each closed edge

The central concentric circles are imaged as closed contours. However, some straight edges also appear in the image after Canny edge detection; these may be generated by noise or by other objects in the background. Therefore, only the edges that meet the following closure criterion are retained as possible edges of the central circles. Suppose that the j’th extracted contour consists of N pixels, indexed i = 1, ..., N. If the end points of the edge satisfy Equation (5), the edge is considered closed.

The subscripts 1 and N denote the start and end points of the j’th contour. A typical value for T1 is 2 or 3 pixels [60].

- Removing excessively long (short) edges

According to our previous study on selecting a suitable size for artificial coded targets [57], the approximate sizes of the imaged ellipses of the central circles can be determined in advance, so their edges can be neither too long nor too short. Taking the number N of pixels on a closed edge as its length, an edge whose length satisfies Equation (6) is regarded as a false edge and removed:

N < T2 or N > T3

The thresholds T2 and T3 represent the minimum and maximum lengths, respectively, which are known in advance from the given imaging conditions.
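The closure and length screens reduce to two simple predicates on an ordered list of contour pixels. In this sketch the closure test is assumed to be the Euclidean distance between the first and last pixels compared with T1; the default values of T2 and T3 are placeholders, since they depend on the imaging conditions. The function names are hypothetical.

```python
import math


def is_closed(contour, t1=2.0):
    """Assumed closure test: first and last pixels of the ordered contour lie within T1."""
    if len(contour) < 3:
        return False
    (x_start, y_start), (x_end, y_end) = contour[0], contour[-1]
    return math.hypot(x_end - x_start, y_end - y_start) <= t1


def screen_contours(contours, t1=2.0, t2=20, t3=2000):
    """Keep closed contours with T2 <= N <= T3 (Equation (6) rejects the others)."""
    return [c for c in contours if is_closed(c, t1) and t2 <= len(c) <= t3]
```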

- Ellipse fitting

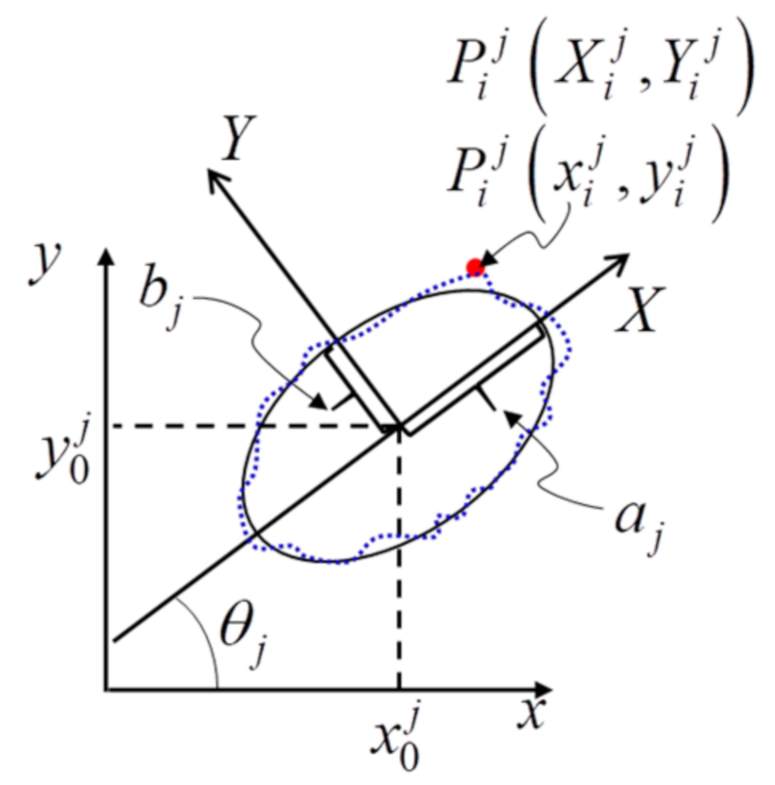

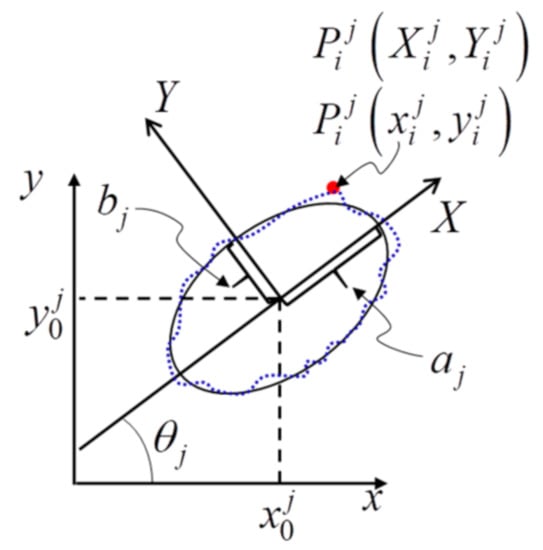

After removing edges whose lengths do not satisfy the requirements, most of the remaining edges are likely to be the imaged ellipses of the central circles. A least-squares ellipse fitting algorithm [45] is adopted to obtain the five ellipse parameters of the j’th contour: the two center coordinates, the semi-major axis, the semi-minor axis, and the orientation angle, as shown in Figure 8.

Figure 8.

A fitted ellipse in the image coordinate system xy. The broken blue edge denotes the j’th closed edge in the image plane; the semi-major axis, semi-minor axis, and orientation angle of the fitted ellipse are marked; a temporary coordinate system XY with its origin at the fitted ellipse center is introduced to determine the ellipse fitting error for the j’th edge.
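For illustration, a plain algebraic least-squares conic fit recovers the five parameters. This is a swapped-in technique, not necessarily the algorithm of [45]: it normalizes the x² coefficient to 1, solves the 5 × 5 normal equations, and converts the conic coefficients into the center, semi-axes, and orientation. Algebraic fits are biased on noisy edges, so treat it as a sketch.

```python
import math


def solve_linear(a, b):
    """Solve the square system a @ u = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):
        u[r] = (m[r][n] - sum(m[r][c] * u[c] for c in range(r + 1, n))) / m[r][r]
    return u


def fit_ellipse(points):
    """Fit x^2 + B*x*y + C*y^2 + D*x + E*y + F = 0; return (x0, y0, a, b, theta)."""
    if len(points) < 5:
        raise ValueError("need at least five edge points")
    ata = [[0.0] * 5 for _ in range(5)]
    atb = [0.0] * 5
    for x, y in points:
        row = (x * y, y * y, x, y, 1.0)
        for i in range(5):
            atb[i] -= row[i] * x * x          # right-hand side is -x^2
            for j in range(5):
                ata[i][j] += row[i] * row[j]
    B, C, D, E, F = solve_linear(ata, atb)
    A = 1.0
    det = 4 * A * C - B * B
    if det <= 0:
        raise ValueError("conic is not an ellipse")
    x0 = (B * E - 2 * C * D) / det            # center: the conic's gradient vanishes
    y0 = (B * D - 2 * A * E) / det
    f0 = F + (D * x0 + E * y0) / 2            # conic value at the center
    if f0 >= 0:
        raise ValueError("degenerate ellipse")
    mean = (A + C) / 2
    spread = math.hypot((A - C) / 2, B / 2)
    a = math.sqrt(-f0 / (mean - spread))      # smaller eigenvalue -> semi-major axis
    b = math.sqrt(-f0 / (mean + spread))      # larger eigenvalue -> semi-minor axis
    theta = 0.5 * math.atan2(-B, C - A)       # direction of the semi-major axis
    return x0, y0, a, b, theta
```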

- Fitting error constraint

Some closed edges that satisfy the length criteria might be contours of background objects, yet they are also fitted with ellipses. Therefore, a fitting error constraint is applied to select only the edges whose shapes are close to ellipses. In Figure 8, the broken blue edge denotes the j’th closed edge in the image plane, and xy is the global image coordinate system. We introduce a temporary coordinate system XY whose origin is the fitted ellipse center, with the X axis along the semi-major axis and the Y axis along the semi-minor axis. For a given pixel on the j’th edge, the corresponding coordinates in the XY coordinate system can be obtained as

Therefore, the ellipse fitting error for the j’th edge is computed as

An edge whose fitting error exceeds T4 is considered an unwanted contour and is deleted. A typical value of T4 is 1 pixel.
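The change into the XY system is a translation to the fitted center followed by a rotation by the orientation angle. The error measure in the sketch below is a hypothetical stand-in for the paper's definition: for each edge pixel it takes the radial gap between the pixel and the fitted ellipse along the same direction, then averages the absolute gaps, so the result is in pixels and can be compared with T4.

```python
import math


def to_ellipse_frame(x, y, x0, y0, theta):
    """Express (x, y) in XY: origin at the fitted center, X along the semi-major axis."""
    dx, dy = x - x0, y - y0
    return (dx * math.cos(theta) + dy * math.sin(theta),
            -dx * math.sin(theta) + dy * math.cos(theta))


def fitting_error(points, x0, y0, a, b, theta):
    """Mean radial gap (pixels) between edge points and the ellipse (hypothetical metric)."""
    total = 0.0
    for x, y in points:
        X, Y = to_ellipse_frame(x, y, x0, y0, theta)
        phi = math.atan2(Y, X)
        # Polar radius of the ellipse X^2/a^2 + Y^2/b^2 = 1 in direction phi.
        r_ellipse = a * b / math.hypot(b * math.cos(phi), a * math.sin(phi))
        total += abs(math.hypot(X, Y) - r_ellipse)
    return total / len(points)


def passes_fit_check(points, params, t4=1.0):
    """params = (x0, y0, a, b, theta); keep the edge only if its error is at most T4."""
    return fitting_error(points, *params) <= t4
```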

- Shape criteria

The ratio of the semi-minor axis to the semi-major axis has a maximum value of 1 for a circular contour and decreases as the viewing direction becomes more oblique. According to Ahn’s study [45], the form factor is defined as

At a viewing angle of 70°, the form factor reaches values of up to 1.5. The perimeter and area of an ellipse can be expressed by

Combining Equation (9) with Equation (10), the ratio of the semi-minor axis to the semi-major axis should satisfy

where T5 is set to 0.41 at the viewing angle of 70°.

- Seeking the centers of ellipse pairs

For the proposed CCCT, a pair of ellipses is the projection of the central concentric circles onto the image plane. Although the two ellipses have separate centers in the image, the two centers lie close together. Based on this characteristic, a threshold T6 is set for the distance between the centers of the inner and outer ellipses. If the centers of an ellipse pair satisfy Equation (12), the pair is considered a candidate produced by a coded target and is retained.

The superscripts in and out denote the inner and outer ellipses, respectively.
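Pairing can be sketched as a greedy nearest-center search. The function below is hypothetical: it sorts the ellipses by semi-major axis, matches each smaller ellipse with the closest larger one whose center lies within T6, and uses each ellipse at most once. The default T6 is a placeholder.

```python
import math


def pair_concentric(ellipses, t6=2.0):
    """Greedily pair each ellipse with the nearest larger ellipse whose center is within T6.

    Ellipses are (x0, y0, a, b, theta) tuples; returns a list of (inner, outer) pairs.
    """
    order = sorted(range(len(ellipses)), key=lambda i: ellipses[i][2])  # by semi-major axis
    used, pairs = set(), []
    for i in order:
        if i in used:
            continue
        best = None
        for j in order:
            # Only a strictly larger, still unused ellipse can be the outer partner.
            if j == i or j in used or ellipses[j][2] <= ellipses[i][2]:
                continue
            dist = math.hypot(ellipses[j][0] - ellipses[i][0], ellipses[j][1] - ellipses[i][1])
            if dist <= t6 and (best is None or dist < best[0]):
                best = (dist, j)
        if best is not None:
            used.update((i, best[1]))
            pairs.append((ellipses[i], ellipses[best[1]]))
    return pairs
```

A stricter variant could also require the ratio of the two semi-major axes to be close to 2, the diameter ratio of the central ring.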

3.2.4. Determining the True Projected Center of CCCT

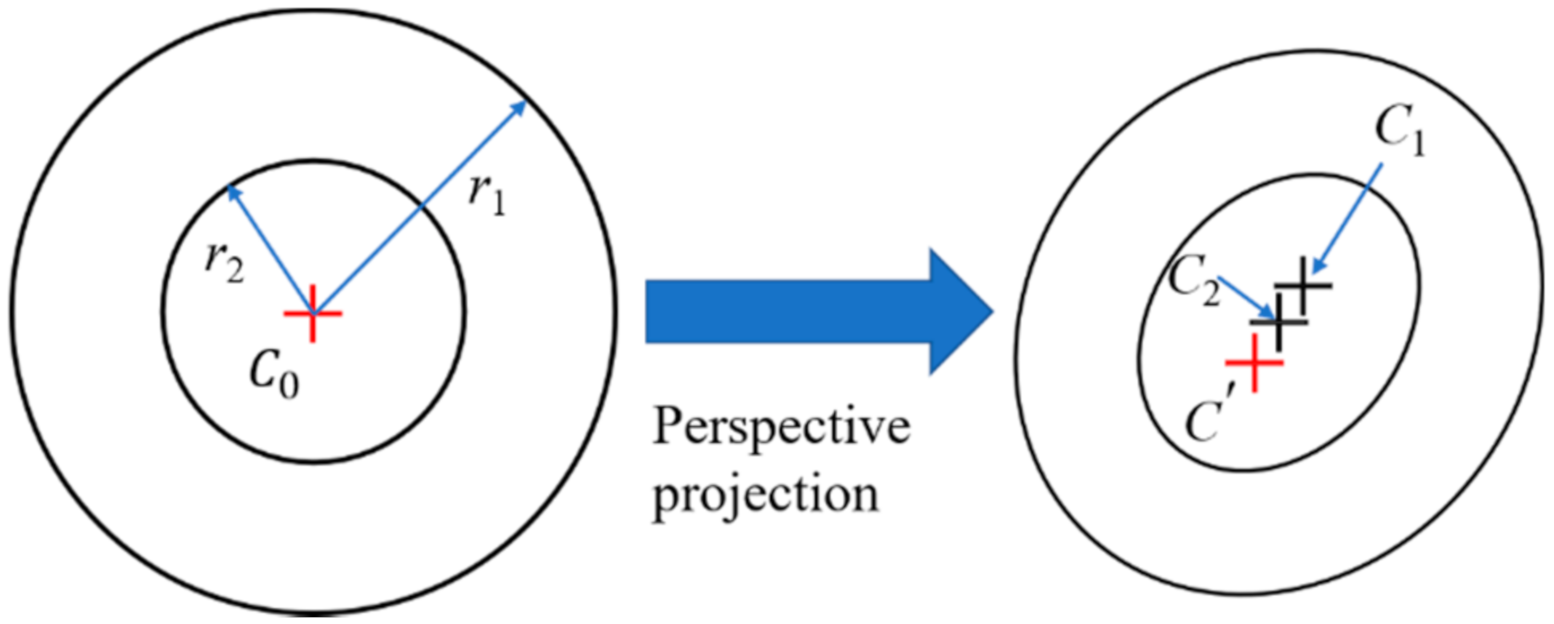

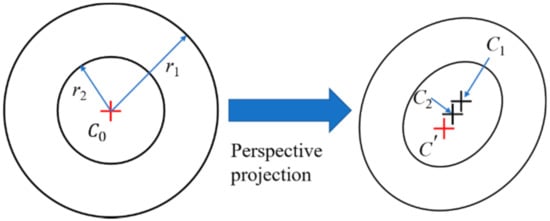

To obtain the true projected center of each CCCT, the two centers of its ellipse pair are used to correct the eccentricity errors. As shown in Figure 9, the central ring of a CCCT appears in the perspective image as two ellipses with distinct centers. Suppose that C0 is the center of the CCCT in object space; r1 and r2 are the radii of the outer and inner circles; C’ is the image point of C0; and C1 and C2 are the centers of the outer and inner ellipses, respectively. Following the works of Ahn [56] and He [61], the eccentricity errors of the outer and inner ellipses can be described as

where Kx and Ky are two coefficients that are independent of the radii r1 and r2 and are therefore equal for the two circles of the central positioning ring of the CCCT.

Figure 9.

Perspective projection of central positioning ring of the coded target.

Combining Equations (13) and (14), the true projected center of the CCCT can be obtained as

where the center coordinates of the outer and inner ellipses (C1 and C2) are those obtained by ellipse fitting.
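The correction can be illustrated under an assumed first-order model in which each eccentricity error is proportional to the squared radius, e = K r², with K = (Kx, Ky) shared by both circles. This is consistent with the coefficients being independent of r1 and r2, but it is an assumption, not a transcription of the equations above. Writing the two fitted centers in terms of C’ and eliminating K gives

$$
C_1 = C' + K r_1^2,\qquad C_2 = C' + K r_2^2
\;\Longrightarrow\;
C' = \frac{r_1^2 C_2 - r_2^2 C_1}{r_1^2 - r_2^2} = \frac{4C_2 - C_1}{3}\quad (r_1 = 8\ \text{mm},\ r_2 = 4\ \text{mm}).
$$

The function name below is hypothetical.

```python
def corrected_center(c_outer, c_inner, r_outer=8.0, r_inner=4.0):
    """Extrapolate the two fitted centers to zero radius under e = K * r**2 (assumed model).

    Only the ratio r_outer / r_inner matters, so millimetres (8 and 4 for the CCCT)
    and pixels give the same result.
    """
    w_out, w_in = r_outer ** 2, r_inner ** 2
    if w_out == w_in:
        raise ValueError("the two radii must differ")
    return tuple((w_out * ci - w_in * co) / (w_out - w_in)
                 for co, ci in zip(c_outer, c_inner))
```

Because the outer ellipse's error is four times the inner one under this model, the corrected point lies on the line through C1 and C2, beyond C2, at one third of the C1 to C2 distance.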

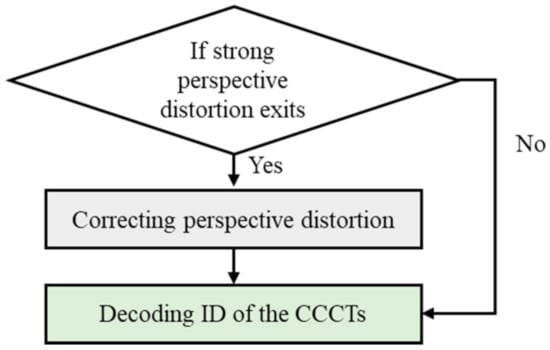

3.3. Identification of Concentric Circular Coded Targets

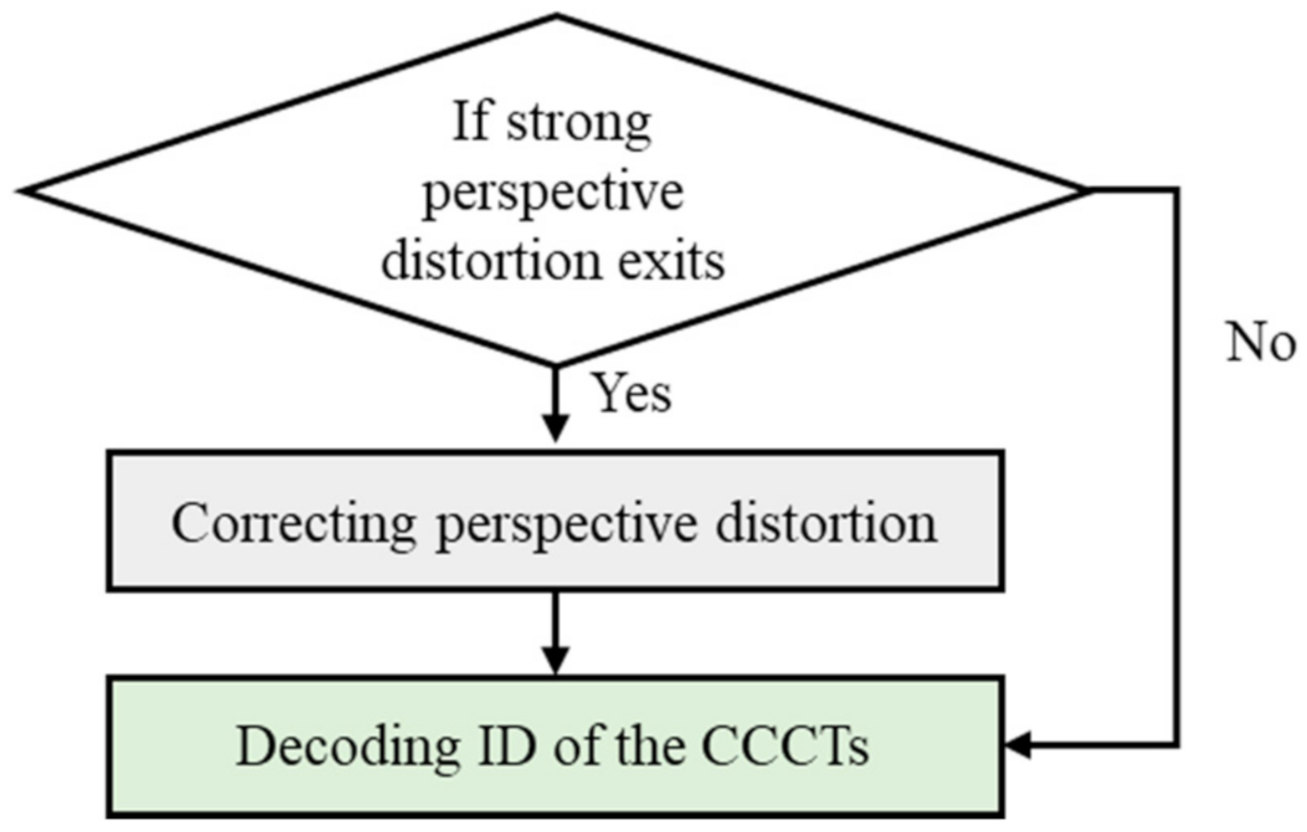

After the centers of the CCCTs are accurately located, this part focuses on identifying the ID number of each CCCT. The identification results in Figure 4 indicate that serious perspective distortion occurs at flat viewing angles and makes the coded targets more difficult to identify. In this case, the perspective distortion should be corrected, as an alternative to identifying the CCCTs directly. The workflow is given in Figure 10.

Figure 10.

The workflow process to identify the number of CCCT.

3.3.1. Correcting Perspective Distortion

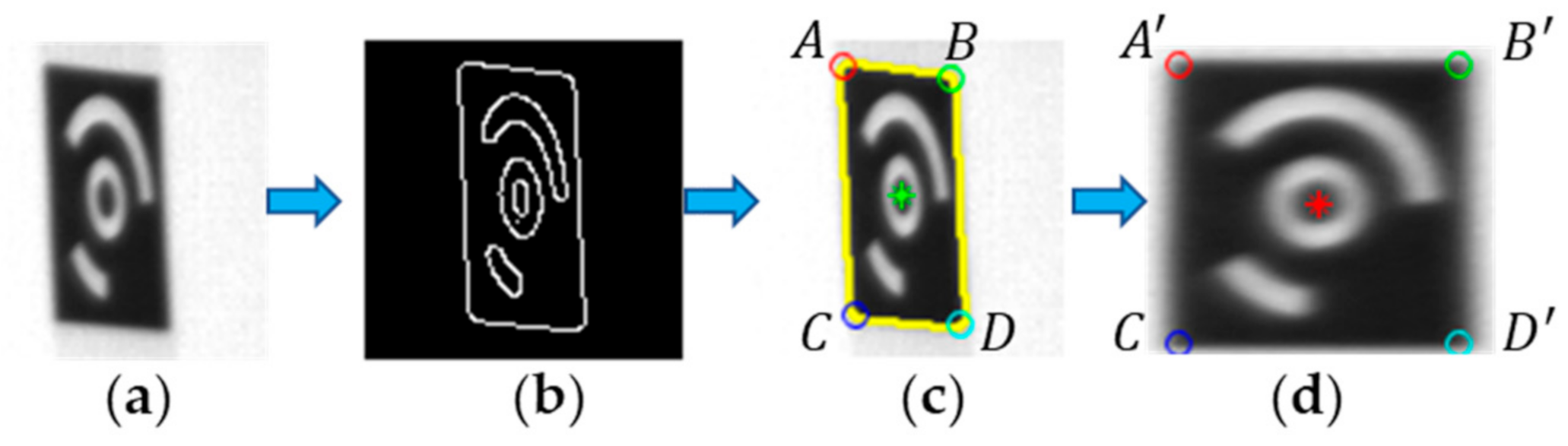

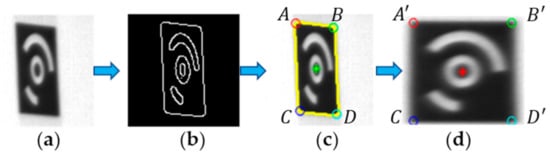

For the previously obtained center coordinates of candidate CCCTs, perspective correction is applied to the ROIs covering them if no CCCT has been identified in the region. First, the four vertices of the background area of the CCCT are determined. In this step, Canny edge detection is performed on the local CCCT image in Figure 11a to obtain all the contours shown in Figure 11b. Thereafter, each detected contour is extracted and checked for closure and compliance with the size constraint. A closed and large contour, such as the yellow edge in Figure 11c, is smoothed by Gaussian convolution. Subsequently, a corner detection method based on global and local curvature properties [62] is employed to obtain the four true vertices (the colored ‘O’ markers).

Figure 11.

(a) The raw local image (ROI); (b) The result of Canny edge detection with adaptive double thresholds; (c) The result of determining the true projected center (the green ‘*’) and the four vertices (the colored ‘O’) of the background area; (d) The result of correcting perspective distortion.

Perspective distortion correction is the inverse of perspective imaging, and its basic step is to obtain the perspective transformation matrix. The perspective transformation can be expressed as

In Equation (16), a point in the raw local image is mapped to the corresponding point in the corrected image, and TP, with elements aij, is the 3 × 3 perspective transformation matrix. If a33 = 1, Equation (16) becomes

In Figure 11c, A, B, C, and D represent the four vertices (top-left, top-right, bottom-left, and bottom-right) of the black background area in the raw local image. After perspective rectification, the background area becomes a square with a chosen side length, and its four corners are the corresponding vertices in the corrected image. Substituting the four pairs of image coordinates into Equation (17), TP can be solved from the following equations:

Based on the perspective transformation matrix TP, each pixel of the raw local image in Figure 11a can be mapped to the corrected image through Equation (16). However, this mapping may be one-to-one or many-to-one, and the mapped coordinates (x, y) may not be integers; hence, bilinear interpolation is employed to calculate the gray value of each pixel in the corrected image. Figure 11d shows the perspective-corrected version of Figure 11a, which verifies the effectiveness of the perspective correction method.
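The rectification step can be sketched as follows: solve the eight unknowns of TP (with a33 = 1) from the four vertex correspondences, then resample with bilinear interpolation. One deliberate deviation from the text: instead of pushing raw pixels forward, this sketch maps each corrected pixel back into the raw image (inverse mapping), a common way to avoid holes in the output. Function names are hypothetical, and the vertex order A, B, C, D (top-left, top-right, bottom-left, bottom-right) follows Figure 11c.

```python
def solve_linear(a, b):
    """Solve the square system a @ u = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):
        u[r] = (m[r][n] - sum(m[r][c] * u[c] for c in range(r + 1, n))) / m[r][r]
    return u


def perspective_matrix(src, dst):
    """3x3 matrix with a33 = 1 mapping four src points onto four dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (a31 x + a32 y + 1) = a11 x + a12 y + a13, and likewise for v.
        rows.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        rhs.append(v)
    a = solve_linear(rows, rhs)
    return [a[0:3], a[3:6], [a[6], a[7], 1.0]]


def apply(tp, x, y):
    """Map one point through the projective transform (divide by the third coordinate)."""
    w = tp[2][0] * x + tp[2][1] * y + tp[2][2]
    return ((tp[0][0] * x + tp[0][1] * y + tp[0][2]) / w,
            (tp[1][0] * x + tp[1][1] * y + tp[1][2]) / w)


def bilinear(img, x, y):
    """Gray value at a non-integer (x, y); 0 outside the image."""
    h, w = len(img), len(img[0])
    if x < 0 or y < 0 or x > w - 1 or y > h - 1:
        return 0.0
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def rectify(img, corners, side):
    """corners = raw-image vertices A, B, C, D; returns a side x side corrected image."""
    square = [(0, 0), (side - 1, 0), (0, side - 1), (side - 1, side - 1)]
    inv = perspective_matrix(square, corners)  # corrected -> raw (inverse mapping)
    return [[bilinear(img, *apply(inv, u, v)) for u in range(side)] for v in range(side)]
```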

3.3.2. Identifying the ID of Each CCCT

The ID of each CCCT is identified from the extracted ROI or, at flat viewing angles, from its perspective-corrected local image. The detailed process is as follows:

- First, scan outward from the center of the CCCT to find the outer and inner boundaries of the circular coded band. The Otsu algorithm is employed to compute a gray threshold from the pixels enclosed by the outer ellipse of the coded band; this threshold distinguishes the black background from the white target.

- Then, for a 15-bit CCCT, rays are cast outward from the center at an angular step of 2.4°. All pixels on each ray that fall between the outer and inner boundaries are sampled. The median gray value of these pixels is compared with the threshold obtained previously; if it is greater, the sample value of the ray is marked as “1”, otherwise as “0”. Scanning one full circle clockwise or anticlockwise yields a 150-bit binary sequence (360°/2.4° = 150).

- The ideal starting point for decoding is a place where the gray level changes sharply, which prevents a short section of the coded band from being misidentified. Therefore, the 150-bit binary sequence is cyclically shifted until its head and tail differ. The reordered sequence is divided into blocks of consecutive “1”s or “0”s. The number of bits in the k’th block is denoted P[k], and the bit value (“1” or “0”) of the block is stored in C[k].

- Since the proposed CCCT adopts a 15-bit binary code, every 10 of the 150 samples represent one code bit, so the number of code bits B[k] contributed by the k’th block is determined as

B[k] = round(P[k] / 10)

The binary bits obtained from all blocks form a 15-bit binary number. For example, the CCCT shown in Figure 11 has 6 blocks, namely P = {60, 30, 10, 9, 10, 31}, C = {1, 0, 1, 0, 1, 0}, and B = {6, 3, 1, 1, 1, 3}; hence, the 15-bit binary number is 111111000101000.

- Finally, all cyclic shifts of the 15-bit binary number are considered, the corresponding decimal numbers are computed, and the minimum value is used as the ID of the CCCT.
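The sampling and decoding steps above can be sketched as follows. Assumptions: the ROI has already been perspective-corrected, so the band boundaries are circles of radius `r_in` and `r_out` around (`cx`, `cy`); rays are sampled at unit radial steps with nearest-pixel lookup; and the final step is read as taking the minimum over all cyclic shifts of the code. Function names are hypothetical.

```python
import math
import statistics


def ray_bits(img, cx, cy, r_in, r_out, threshold, n_rays=150):
    """One bit per ray: median gray between the band boundaries compared with the threshold."""
    bits = []
    for k in range(n_rays):
        ang = 2 * math.pi * k / n_rays  # 2.4 degree step for 150 rays
        vals, r = [], r_in
        while r <= r_out:
            x = int(round(cx + r * math.cos(ang)))
            y = int(round(cy + r * math.sin(ang)))
            if 0 <= y < len(img) and 0 <= x < len(img[0]):
                vals.append(img[y][x])
            r += 1.0
        bits.append(1 if vals and statistics.median(vals) > threshold else 0)
    return bits


def decode_ccct(samples, bits_per_code=15):
    """Return (ID, code string) from one full turn of ray samples."""
    n = len(samples)
    per_bit = n // bits_per_code  # 10 samples per code bit for 150 rays
    # Cyclic shift so that the head and tail of the sequence differ.
    shift = next((s for s in range(n) if samples[s] != samples[s - 1]), None)
    if shift is None:
        raise ValueError("no transition: not a coded band")
    seq = samples[shift:] + samples[:shift]
    P, C = [], []  # block lengths and block bit values
    for bit in seq:
        if C and C[-1] == bit:
            P[-1] += 1
        else:
            P.append(1)
            C.append(bit)
    B = [round(p / per_bit) for p in P]  # note: Python rounds halves to even
    code = "".join(str(c) * b for c, b in zip(C, B))
    if len(code) != bits_per_code:
        raise ValueError("block lengths do not add up to the code length")
    # Rotation-invariant ID: smallest value over all cyclic shifts of the code.
    return min(int(code[i:] + code[:i], 2) for i in range(bits_per_code)), code
```

Feeding the block lengths of the Figure 11 example (60, 30, 10, 9, 10, 31 samples) reproduces the code 111111000101000; under the minimum-rotation reading, the smallest rotation is 000101000111111.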

4. Experiments and Results

To verify the accuracy and effectiveness of the proposed CCCT scheme and its positioning and identification algorithms, both simulations and real experiments have been conducted.

4.1. Positioning Accuracy Verification Experiment

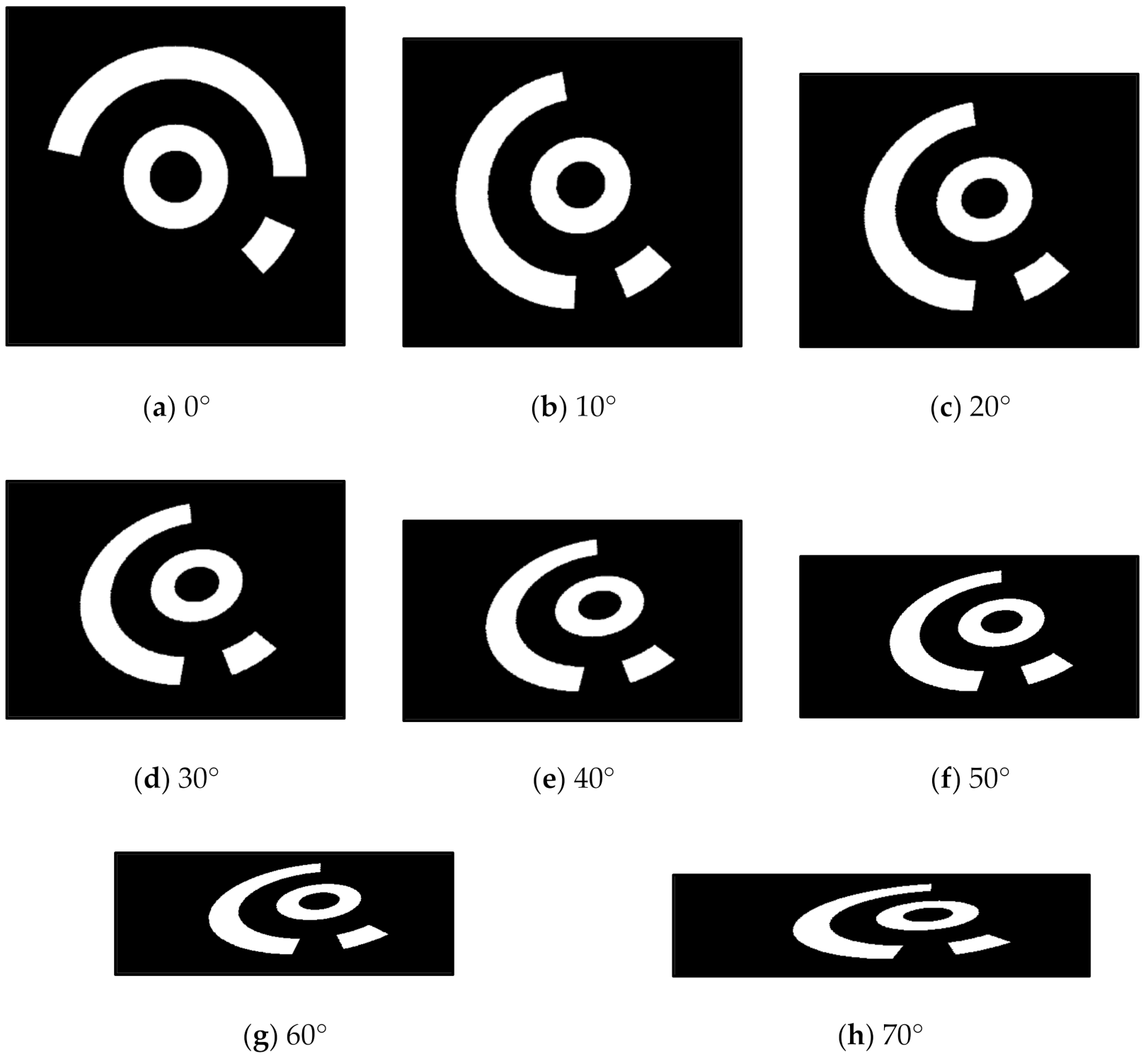

4.1.1. Influence of Different Viewing Angles

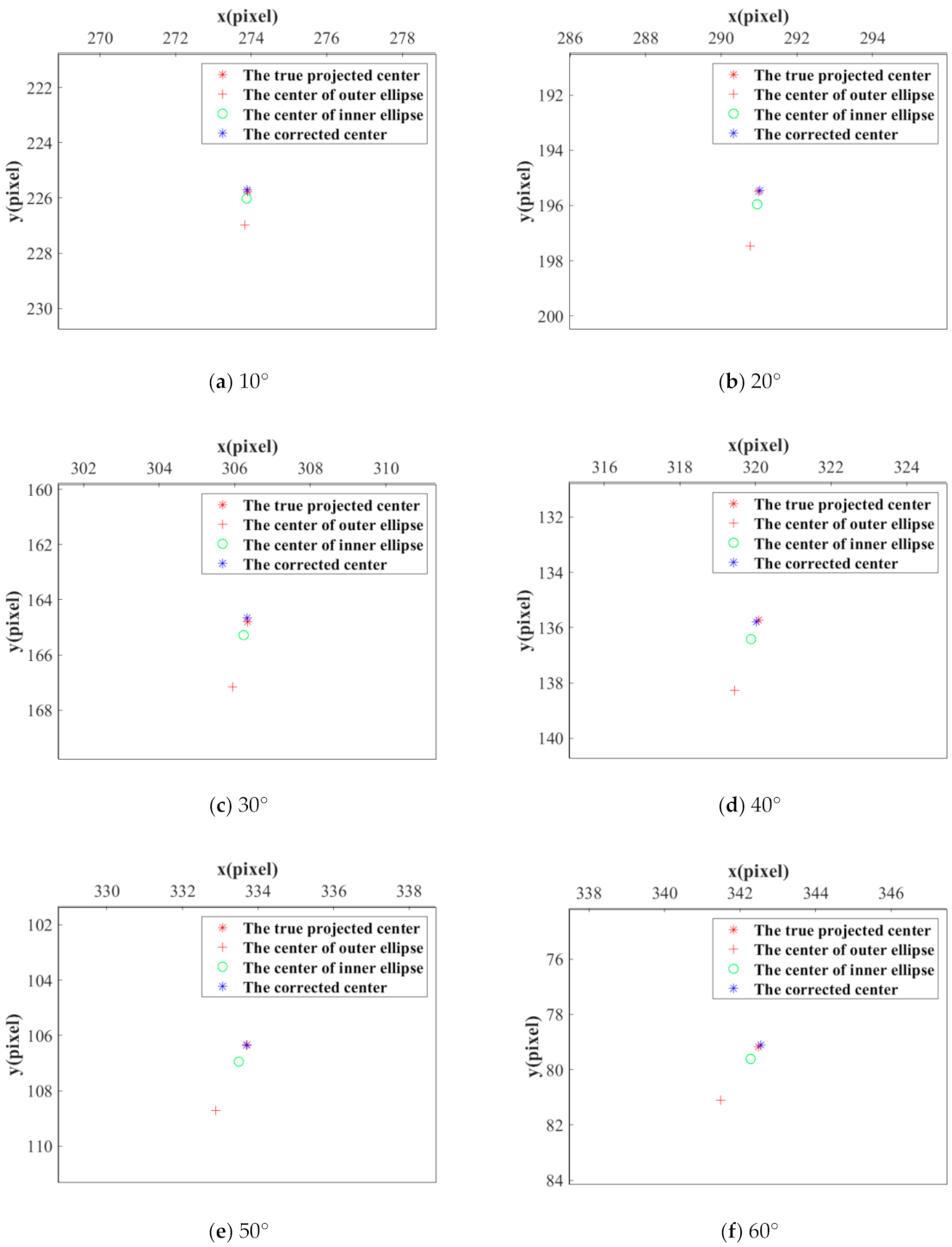

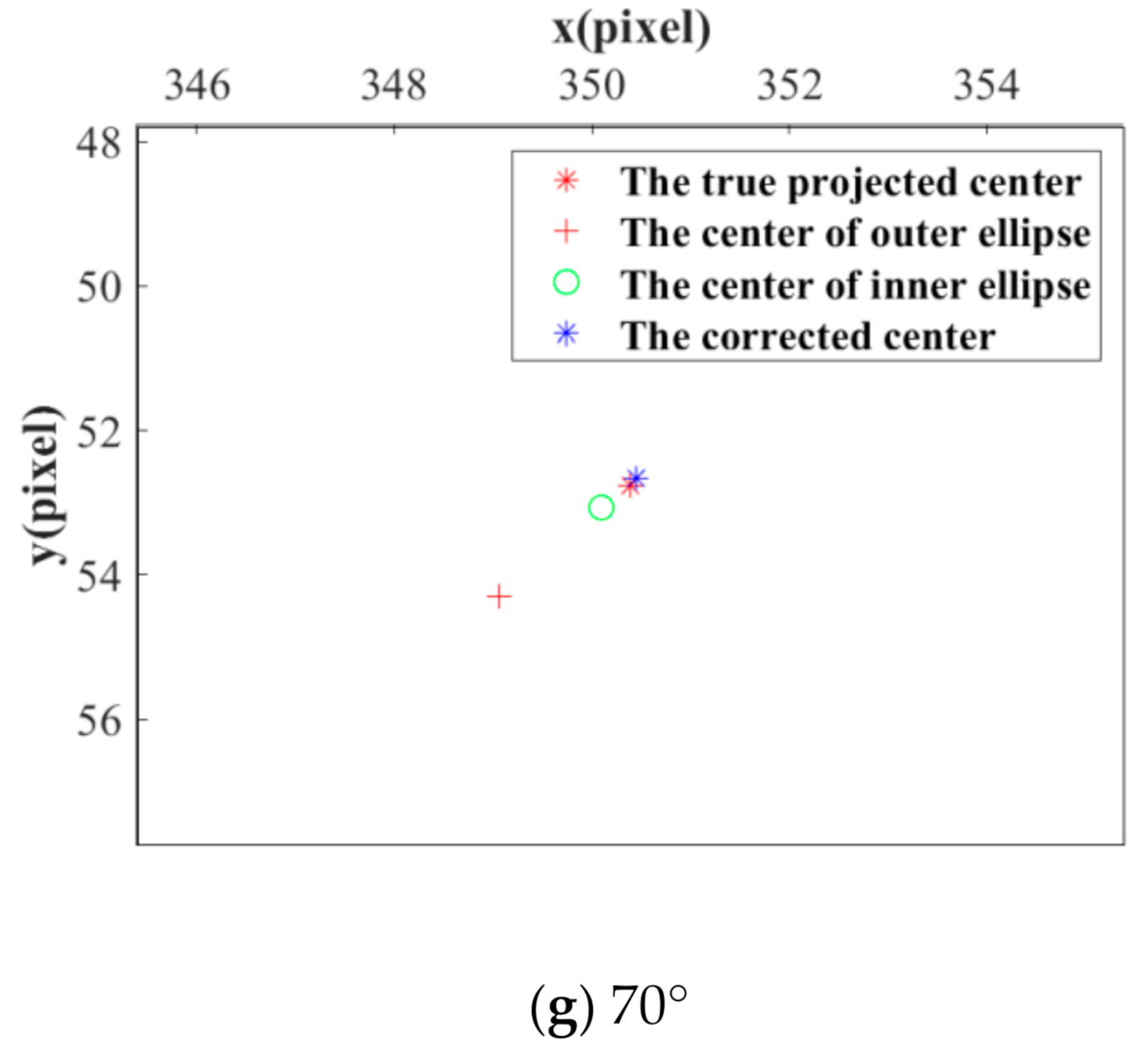

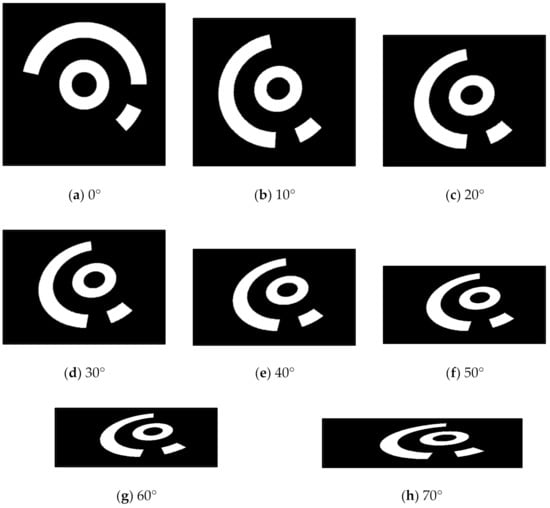

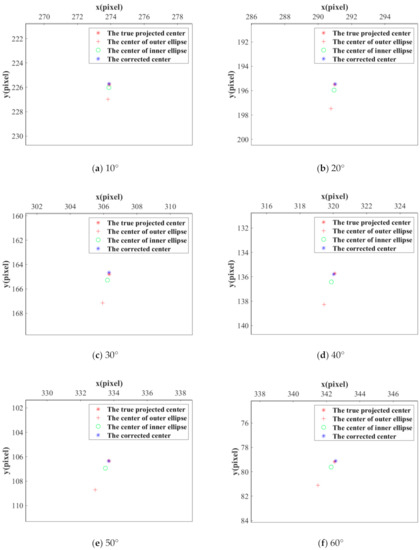

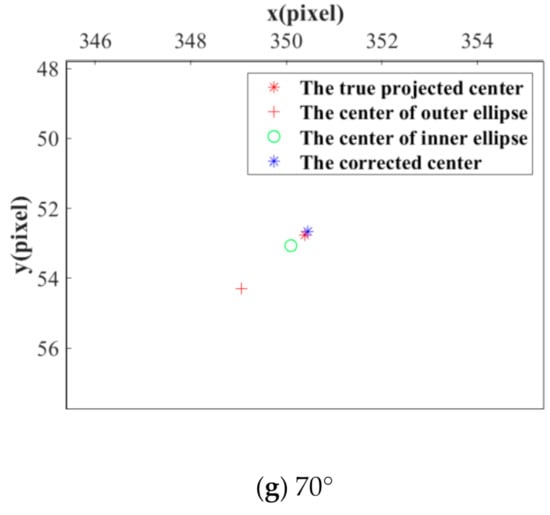

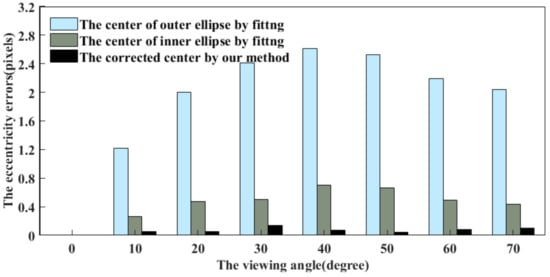

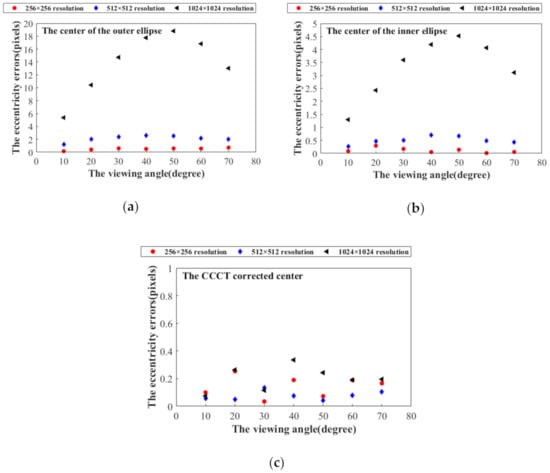

First, a front-view image of CCCT No. 6 has been generated with a simulated camera, as shown in Figure 12a; i.e., the viewing angle between the camera optical axis and the CCCT plane normal is 0°. The resolution is 512 × 512 pixels, and the centers of the two concentric circles are at (257, 257) pixels. In Figure 12b–h, the viewing angle increases from 10° to 70° in steps of 10°. For each image, the conventional ellipse fitting method is employed to obtain the centers of the outer and inner ellipses of the central positioning ring, and the proposed method is used to compensate for the eccentricity error. Figure 13 shows the center positioning results. The red ‘+’ and green ‘o’ represent the centers of the outer and inner ellipses; the blue ‘*’ represents the CCCT center obtained by our method; and the red ‘*’ is the location of the true projected center. The results show that the center corrected by our method is closer to the true projected center of each CCCT. To demonstrate the correction performance more clearly, a statistical analysis of the eccentricity errors is carried out, as shown in Figure 14. The centers of both the outer and inner ellipses obtained by the ellipse fitting method have large eccentricity errors, which increase significantly with the radius of the circle. After correction by our method, the eccentricity errors remain at a very low level. At a large viewing angle of 70°, the eccentricity errors of the larger and smaller ellipses reach 2.04 and 0.44 pixels, respectively, whereas the eccentricity error of our method is 0.10 pixels, i.e., reductions of 95% and 77%. Therefore, positioning the CCCT center with our method greatly decreases the eccentricity error.
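The quoted reductions follow directly from the 70° values:

$$
\frac{2.04 - 0.10}{2.04} \approx 0.951 \approx 95\%,
\qquad
\frac{0.44 - 0.10}{0.44} \approx 0.773 \approx 77\%.
$$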

Figure 12.

Simulation images with different viewing angles: (a) 0°; (b) 10°; (c) 20°; (d) 30°; (e) 40°; (f) 50°; (g) 60°; (h) 70°.

Figure 13.

The center positioning results for different viewing angles: (a) 10°; (b) 20°; (c) 30°; (d) 40°; (e) 50°; (f) 60°; (g) 70°. The red ‘+’ and green ‘o’ represent the centers of the outer and inner ellipses detected by the fitting method; the blue ‘*’ represents the corrected CCCT center obtained by our method; the red ‘*’ is the location of the true projected center.

Figure 14.

Eccentricity errors at different viewing angles: the centers of the outer and inner ellipses obtained by the fitting method compared with the CCCT center corrected by our method.

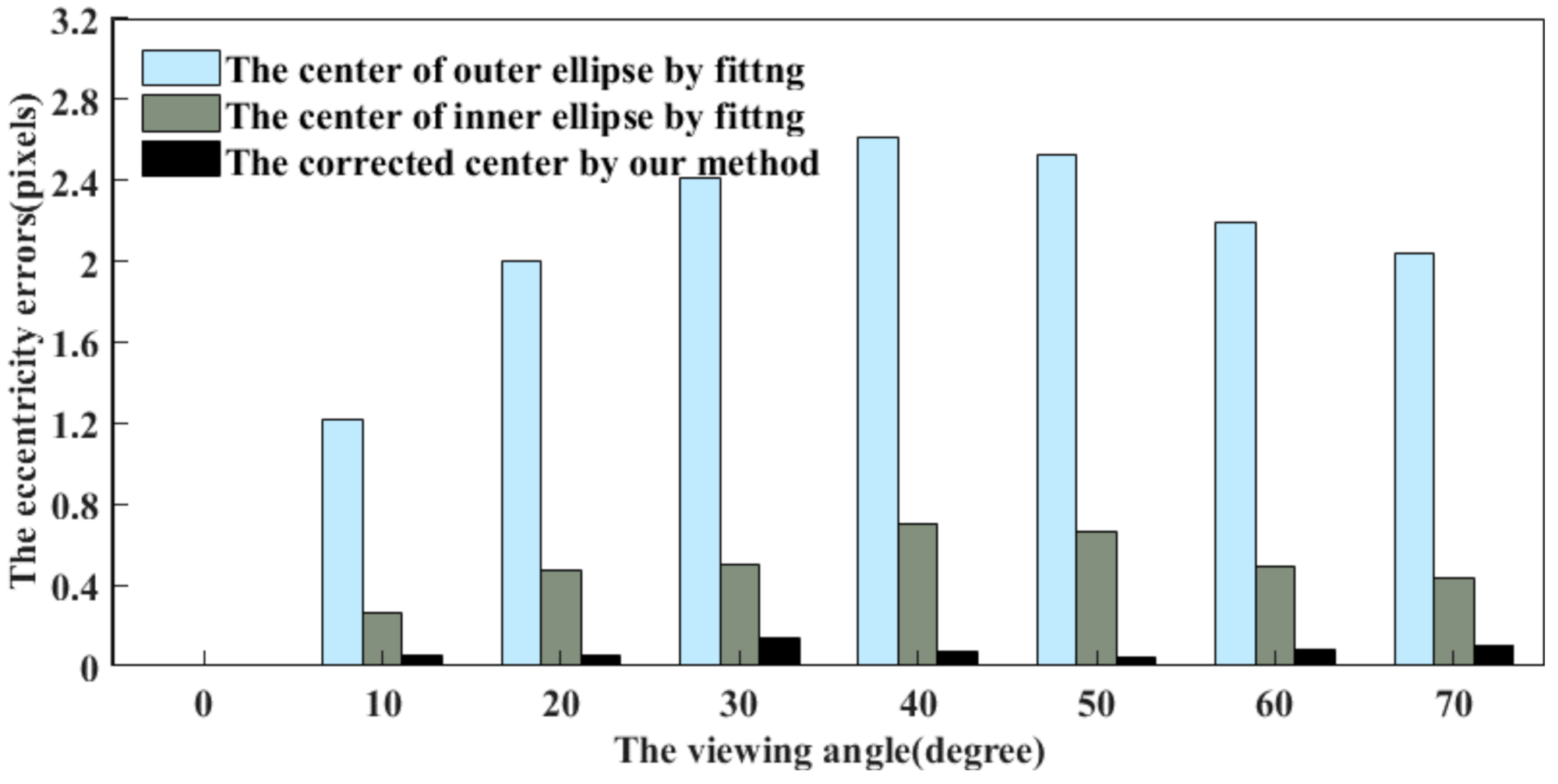

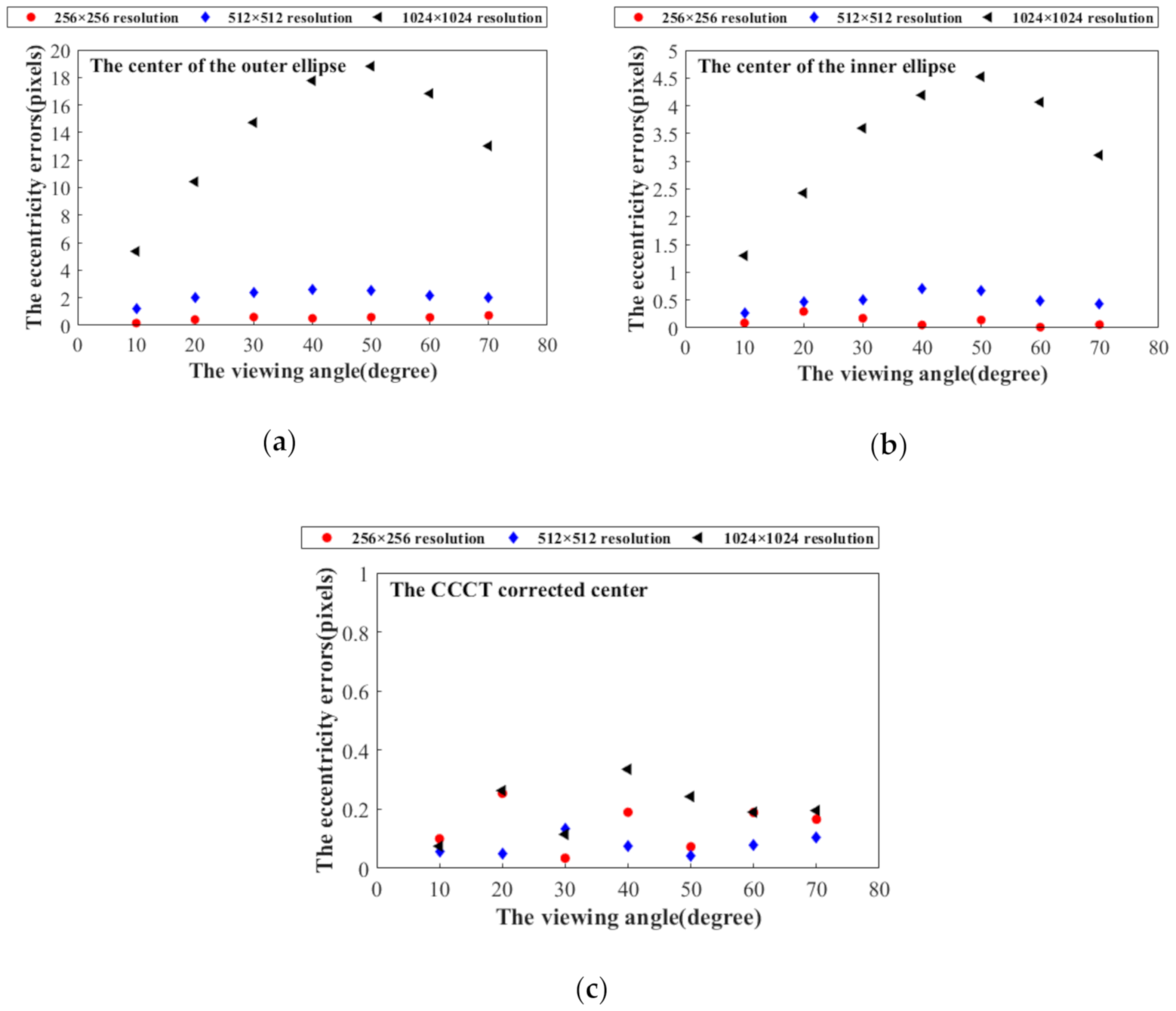

4.1.2. Influence of Front-View Image Resolution

This subsection examines how the CCCT detection algorithm is affected when the front-view image resolution is increased or decreased. In addition to the 512 × 512 image series in Figure 12, the simulated camera is used to generate front-view images at 256 × 256 and 1024 × 1024 resolutions. Figure 15a,b shows the eccentricity errors of the outer and inner ellipse centers obtained by the fitting method, and Figure 15c shows the results after correction by our method. With the ellipse fitting method, the eccentricity errors grow as the image resolution increases. After correction by our method, the eccentricity errors remain below 0.4 pixels at all three resolutions. The front-view image resolution therefore has little or no impact on the CCCT detection algorithm.
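One way to see why the fitted-center errors grow with resolution: if the simulated camera keeps the same field of view for all three series (an assumption; the text does not state how the images were generated), the focal length in pixels scales with the image width, and an eccentricity offset expressed in pixels scales with it. The sketch below uses the idealized pinhole expression for a tilted circle centered on the optical axis, e = f·r²·sinθ·cosθ / (D² − r²·sin²θ); the 40° field of view, distance, radius, and tilt are illustrative values.

```python
import math


def focal_px(width_px, hfov_deg):
    """Focal length in pixels for an image `width_px` wide with horizontal field of view `hfov_deg`."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def center_offset_px(f_px, dist, radius, tilt_deg):
    """Idealized ellipse-center offset in pixels for a tilted circle centered on the optical axis."""
    t = math.radians(tilt_deg)
    s, c = math.sin(t), math.cos(t)
    return f_px * radius**2 * s * c / (dist**2 - radius**2 * s**2)


if __name__ == "__main__":
    hfov = 40.0  # assumed field of view, identical for all three resolutions
    for width in (256, 512, 1024):
        f = focal_px(width, hfov)
        e = center_offset_px(f, dist=1000.0, radius=20.0, tilt_deg=70.0)
        print(f"{width} x {width}: f = {f:.1f} px, offset = {e:.4f} px")
```

Because the offset is proportional to f, doubling the image width doubles the offset in pixels, while the same offset expressed in millimetres on the target is unchanged.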

Figure 15.

(a,b) The eccentricity errors of the outer and inner ellipses’ centers obtained by the fitting method; (c) the results after correction by our method.

4.1.3. Performance against Image Noise

To validate robustness against image noise, Gaussian noise and pepper noise are added to the simulated image with a viewing angle of 30° (Figure 12d). The variance of the Gaussian noise and the density of the pepper noise are each varied from 0.005 to 0.02. Table 1 lists the eccentricity errors (EEs) under the different noise levels. The eccentricity error of the outer ellipse center obtained by fitting is five times that of the inner ellipse center and twenty times that of the corrected CCCT center. The EEs change negligibly as the noise increases. Our positioning method therefore remains more accurate across the tested noise intensities.
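The noise test can be approximated with the sketch below, which operates on a grayscale image scaled to [0, 1]. It assumes zero-mean Gaussian noise with the stated variance (clipped to [0, 1]) and models pepper noise as a random fraction `density` of pixels set to black; the exact noise generator used by the authors (for example, whether salt pixels were also included) is not stated, and the intermediate levels 0.01 and 0.015 are illustrative.

```python
import numpy as np


def add_gaussian_noise(img, var, rng):
    """Add zero-mean Gaussian noise of variance `var` to a [0, 1] image and clip."""
    noisy = img + rng.normal(0.0, np.sqrt(var), img.shape)
    return np.clip(noisy, 0.0, 1.0)


def add_pepper_noise(img, density, rng):
    """Set each pixel to 0 (black) with probability `density`."""
    out = img.copy()
    mask = rng.random(img.shape) < density
    out[mask] = 0.0
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.full((512, 512), 0.5)  # synthetic stand-in for the 30° simulated image
    for level in (0.005, 0.01, 0.015, 0.02):
        g = add_gaussian_noise(clean, level, rng)
        p = add_pepper_noise(clean, level, rng)
        print(level, round(float(g.var()), 4), round(float((p == 0).mean()), 4))
```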

Table 1.

The EEs under different image noises.

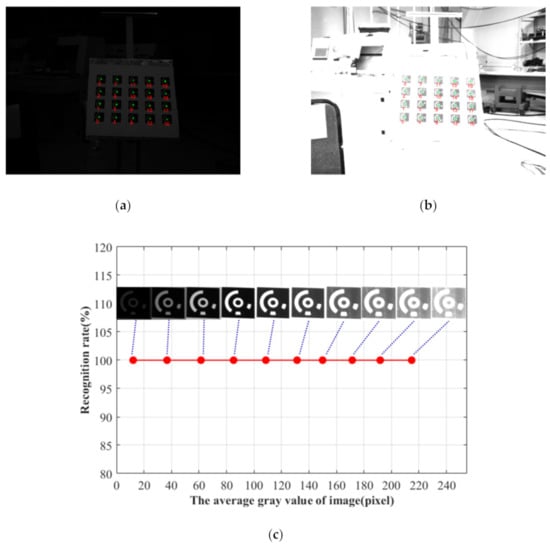

4.2. Identification Performance Verification Experiment

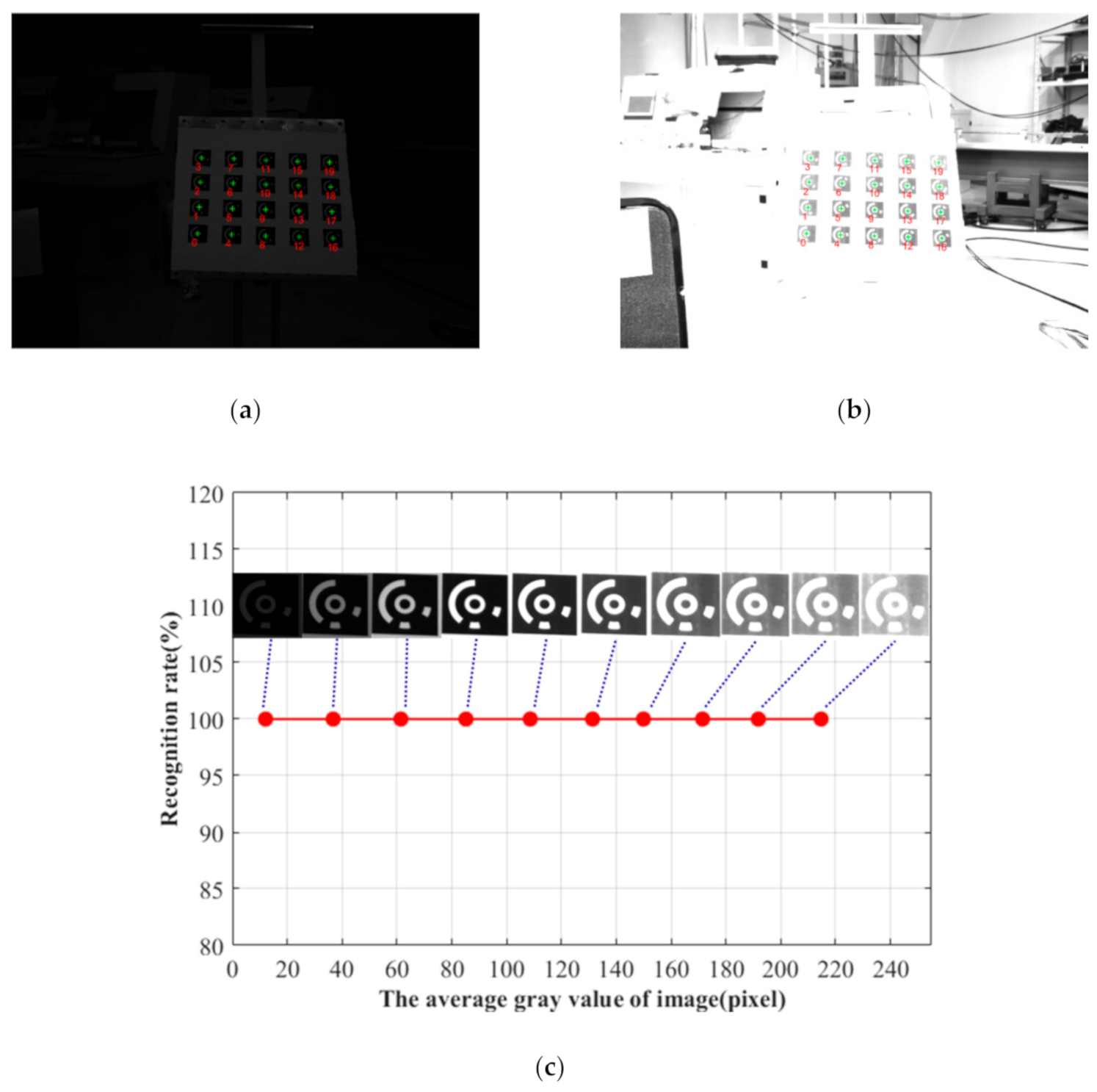

To evaluate the proposed identification method, experiments under different lighting conditions and viewing angles were conducted. The recognition rate over all the CCCTs in the field of view serves as the criterion for recognition performance. A Daheng digital camera (DAHENG_MER-1070-14U3x) with a rolling shutter was used to capture images at a resolution of 3840 × 2748 pixels. All of the following images were taken with both the camera and the imaged object stationary, so the recorded coded targets are not affected by rolling-shutter distortion. Each image contains 20 coded targets. By adjusting the brightness of the LED light source, ten images with different exposure levels were acquired; Figure 16a,b shows an overexposed and an underexposed example, respectively. In Figure 16, the green “+” marks denote the CCCT centers located by the proposed positioning method, and the red numbers are the IDs obtained by our identification method. The average gray value of the ROI of the CCCT with ID 9 is used to estimate the exposure level. As shown in Figure 16c, the recognition rate for all 20 coded targets remains 100% as the average gray value varies from 12 to 215 gray levels. Our method thus identifies all the coded targets even under poor illumination (overexposure or underexposure).
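The exposure estimate used above, the mean gray value of a target's ROI, can also drive a simple brightness correction. The sketch below is a generic gamma-based adjustment chosen purely for illustration; it is not the authors' adaptive brightness adjustment, whose rule is not given in this section. It assumes an 8-bit image and picks the exponent so that an ROI with mean gray value m is mapped to a target mean of 128 (exactly so for a uniform ROI); the helper names are hypothetical.

```python
import math

import numpy as np


def roi_mean_gray(img, x0, y0, x1, y1):
    """Mean gray value of the rectangular ROI img[y0:y1, x0:x1] (exposure estimate)."""
    return float(img[y0:y1, x0:x1].mean())


def gamma_for_target(mean_gray, target=128.0, max_val=255.0):
    """Exponent g such that max_val * (mean_gray / max_val) ** g == target.
    The mean is clamped away from 0 and max_val so the logarithm stays finite and nonzero."""
    m = min(max(mean_gray, 1.0), max_val - 1.0) / max_val
    return math.log(target / max_val) / math.log(m)


def apply_gamma(roi, gamma, max_val=255.0):
    """Apply the power-law mapping to an 8-bit ROI and return uint8."""
    out = max_val * (roi.astype(np.float64) / max_val) ** gamma
    return np.clip(np.rint(out), 0, max_val).astype(np.uint8)


if __name__ == "__main__":
    for level in (12, 215):  # the extreme ROI means reported for Figure 16c
        roi = np.full((64, 64), level, dtype=np.uint8)  # synthetic uniform ROI
        g = gamma_for_target(roi_mean_gray(roi, 0, 0, 64, 64))
        print(level, round(g, 3), apply_gamma(roi, g).mean())
```

An exponent below 1 brightens an underexposed ROI and an exponent above 1 darkens an overexposed one; a real implementation would also need to handle saturated pixels, whose detail cannot be recovered by a point mapping.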

Figure 16.

Experimental results of CCCT recognition under varying exposure levels: (a) Overexposed image; (b) Underexposed image; (c) The recognition rate for different exposure levels.

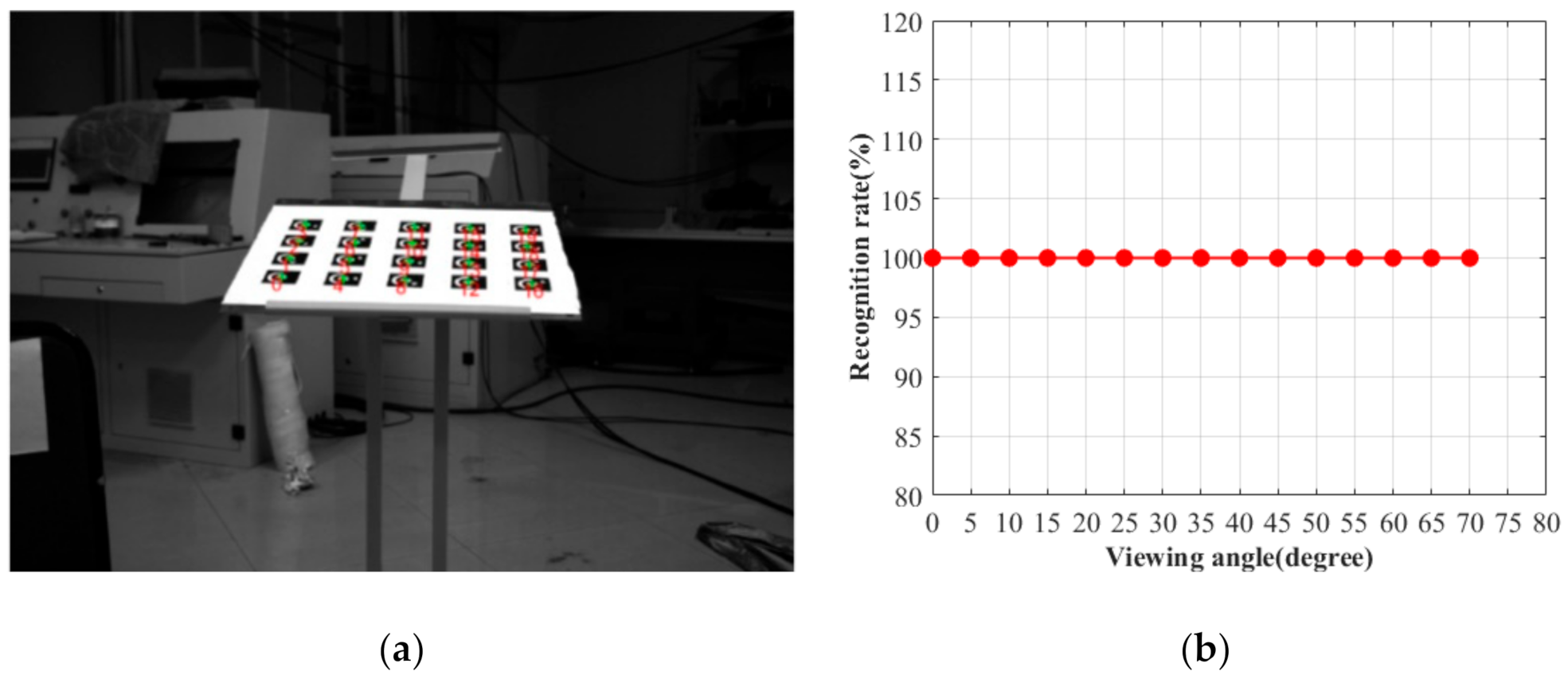

To evaluate recognition performance at flat viewing angles, the camera viewing angle was varied from 0° to 70° using a rotation stage. Figure 17a shows the image at a viewing angle of 70°; the green ‘+’ marks denote the CCCT centers located by the proposed positioning method, and the red numbers are the IDs obtained by our identification method. Figure 17b plots the recognition rate as a function of viewing angle. All the coded targets are correctly identified by our method, even at the flat viewing angle of 70°. The proposed CCCT scheme and its algorithms therefore perform well under these challenging conditions.

Figure 17.

Experimental results of CCCT recognition under different viewing angles. (a) The viewing angle of 70°; (b) The recognition rate for different viewing angles.

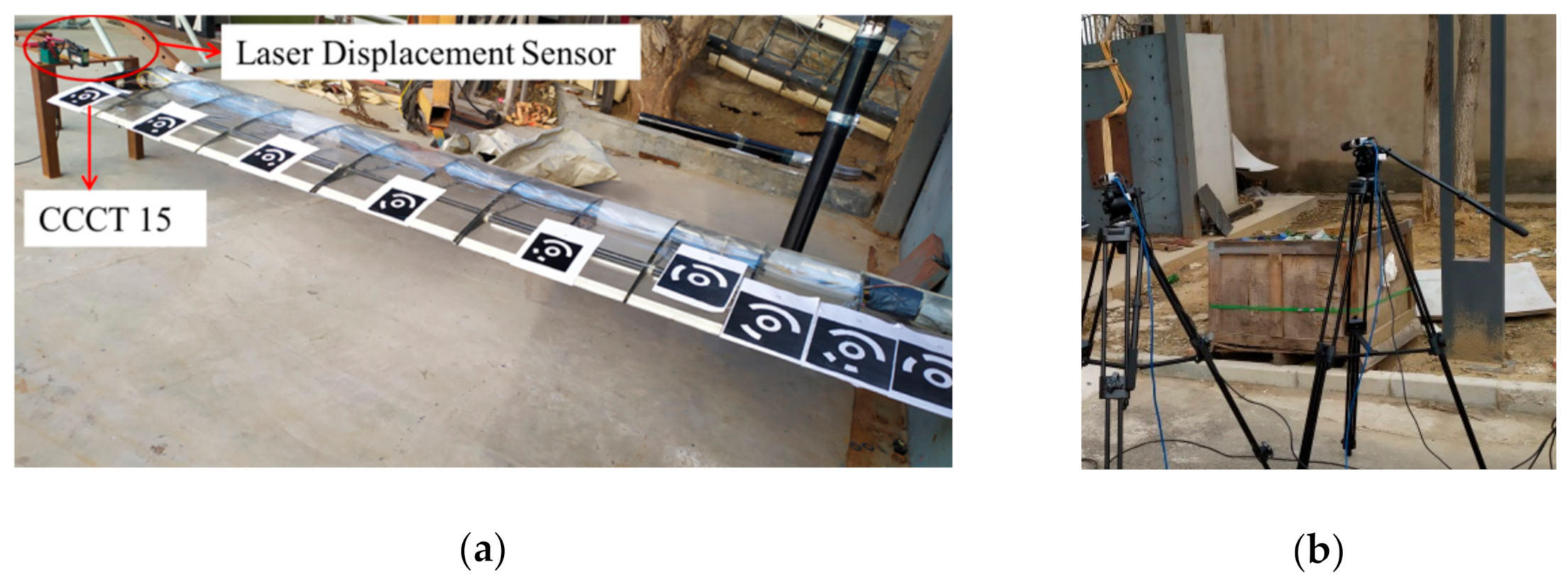

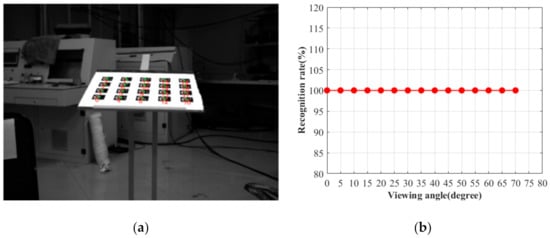

4.3. Wing Deformation Measurement Experiment

As a typical instance of the applications mentioned above, the CCCTs are used to measure the wing deformation of an unmanned aerial vehicle with a wing length of about 3.5 m. Nine CCCTs are attached to the wing surface, as shown in Figure 18a. Two Basler digital cameras (Basler acA1300-200um, global shutter), each with a resolution of 1280 × 1024 pixels, serve as the image acquisition equipment, as shown in Figure 18b. Natural sunlight is used for illumination. Before loading the wing, the camera parameters of the binocular vision system are calibrated with Zhang’s method [63]. A pair of images of the unloaded wing is then acquired. Subsequently, the left and right cameras capture three further image pairs as weights of 2, 4, and 6 kg are applied to the wing tip. Under each load, a KEYENCE G150 laser displacement sensor (LDS) is used to measure the displacement of CCCT 15 at the wing tip.

Figure 18.

Wing deformation experiment using CCCTs in field work: (a) Binocular cameras; (b) laser displacement sensor.

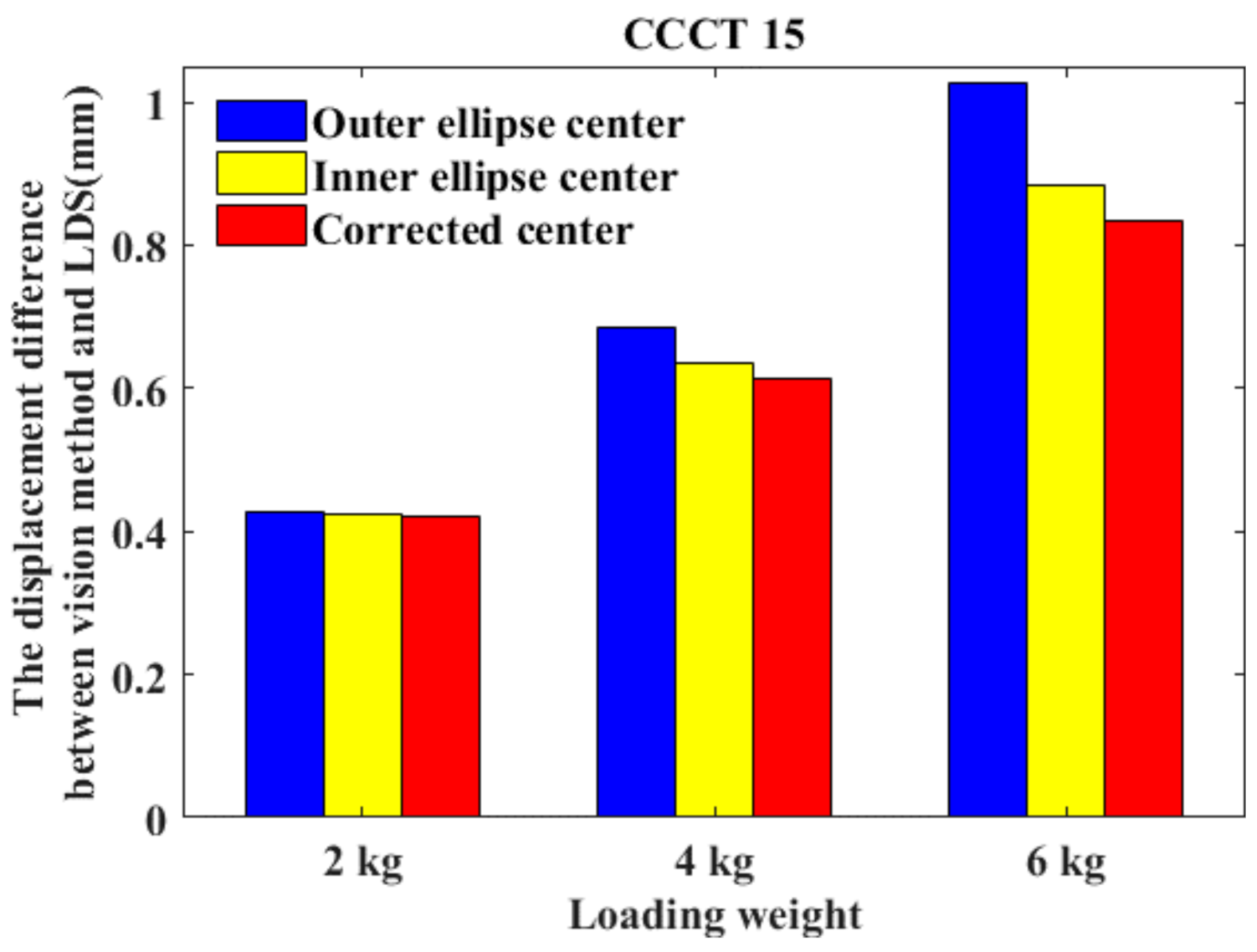

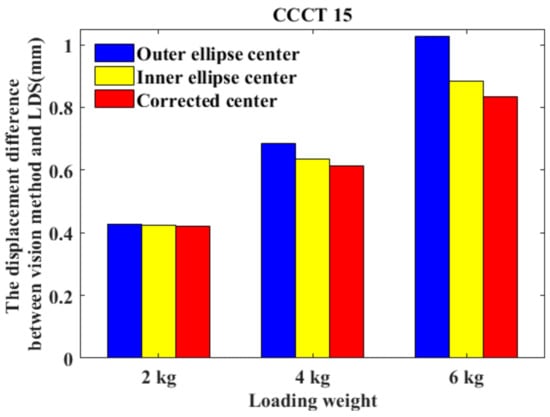

Using the proposed algorithms, the image coordinates of the outer and inner ellipse centers of all the CCCTs in each image pair, as well as the corrected centers, are determined. From the camera calibration parameters and these image coordinates, the 3D coordinates of each CCCT are reconstructed by photogrammetric triangulation. The difference between the 3D coordinates of a CCCT under load and in the unloaded condition gives its displacement. Table 2 lists, for each load, the displacement of CCCT 15 measured by the LDS and by the vision method based on the outer ellipse center, the inner ellipse center, and the corrected center. The displacement obtained from the corrected center is the closest to the LDS result. Figure 19 shows the displacement difference between the vision method and the LDS. Compared with the vision method based on the outer ellipse center, the difference obtained with the corrected center is reduced by 1.59%, 10.59%, and 18.54% for loads of 2 kg, 4 kg, and 6 kg, respectively. The maximum difference is less than 0.85 mm. As the load increases, the accuracy advantage of vision measurement using the proposed eccentricity-error-compensation model over the conventional vision method using only the ellipse center grows.
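The triangulation step can be sketched with the standard linear (DLT) method for two views. The code assumes ideal, distortion-free 3×4 projection matrices P = K[R | t] obtained from calibration and image points that are the (corrected) target centers; the intrinsic values, the 200 mm baseline, the target position, and the 30 mm deflection are invented for illustration. Whether the authors used this linear method or a nonlinear refinement is not stated.

```python
import numpy as np


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point from two 3x4 projection matrices
    and its pixel coordinates (u, v) in the left and right images."""
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]  # right singular vector of the smallest singular value (homogeneous point)
    return X[:3] / X[3]


def project(P, X):
    """Project a 3D point with a 3x4 camera matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Assumed intrinsics and a pure 200 mm horizontal baseline between the two cameras
K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 512.0], [0.0, 0.0, 1.0]])
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-200.0], [0.0], [0.0]])])


def reconstruct(X):
    """Simulate both views of a known point, then triangulate it back."""
    return triangulate_dlt(P_left, P_right, project(P_left, X), project(P_right, X))


X_unloaded = np.array([100.0, 50.0, 2000.0])          # mm, synthetic target position
X_loaded = X_unloaded + np.array([0.0, 30.0, 0.0])    # synthetic 30 mm deflection
displacement = reconstruct(X_loaded) - reconstruct(X_unloaded)
print(np.round(displacement, 6), round(float(np.linalg.norm(displacement)), 6))
```

With real data the image coordinates come from the positioning algorithm rather than from `project`, so any residual eccentricity in the centers propagates directly into the reconstructed displacement.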

Table 2.

The displacement of CCCT 15 on the wing.

Figure 19.

The displacement difference between vision method and laser displacement sensor (LDS) under different loading weights.

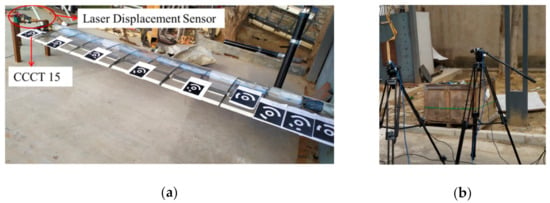

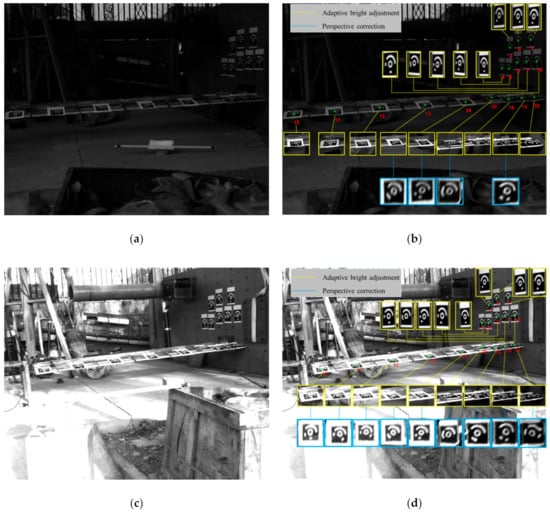

In addition, Figure 20a shows an image that is underexposed because of a significant change in illumination, and Figure 20c shows an image that is overexposed because the sun was on the unfavorable side of the wing. These two images, taken under difficult illumination, are used to demonstrate the proposed algorithms. The nine CCCTs on the wing are imaged at a flat viewing angle, while eight CCCTs attached to the bearing wall, which are imaged under favorable viewing conditions, serve for comparison. The proposed positioning and identification algorithms are applied to both images. In Figure 20b,d, the red numbers are the recognized IDs of the CCCTs, and the green “+” marks are the corrected centers. All the CCCTs, including a “difficult” one, are correctly identified. The local images with yellow borders show the results after adaptive brightness adjustment, and those with cerulean borders show the results after perspective correction; the perspective distortions caused by the flat viewing angle are accurately corrected. In real in-flight applications, such underexposure and overexposure can occur during turns, when flying into clouds, or when the flight direction turns towards the sun, and perspective distortion at “flat” camera positions is unavoidable. The proposed algorithms cope effectively with these challenges.
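Perspective correction from the four vertices of the CCCT background area amounts to estimating a plane-to-plane homography. A minimal sketch, assuming the four vertices have already been detected and ordered consistently, solves the 8×8 linear system for H with its bottom-right entry fixed to 1 and maps image points onto a fronto-parallel square of an assumed 200 × 200 pixels. The vertex coordinates are invented; in practice the whole local image would be resampled through the mapping, which is what OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` provide.

```python
import numpy as np


def homography_from_4(src, dst):
    """Solve the 3x3 homography H (with H[2, 2] = 1) mapping four source points to four
    destination points; no three points may be collinear."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map an (N, 2) array of points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]


# Hypothetical detected vertices of a CCCT background square seen at a flat angle
image_vertices = [(102.0, 80.0), (310.0, 95.0), (290.0, 260.0), (120.0, 230.0)]
# Assumed fronto-parallel frame (pixels) for the rectified local image
square = [(0.0, 0.0), (200.0, 0.0), (200.0, 200.0), (0.0, 200.0)]

H = homography_from_4(image_vertices, square)
print(np.round(apply_homography(H, image_vertices), 6))
```

After rectification the coded band appears approximately as it would in a front view, so decoding can sample it along circles rather than along the skewed ellipses seen at flat viewing angles.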

Figure 20.

Wing deformation experiment based on CCCTs in field work: (a) Underexposure of the image; (b) Identification of CCCTs; (c) Overexposure; (d) Identification of CCCTs.

5. Conclusions

This work proposes a novel concentric circular coded target and its detection algorithms to address the problems faced by coded-target-based vision measurement under the challenging conditions of poor illumination and flat viewing angles. First, a new design is introduced in which the central positioning dot is replaced by a ring. This allows the eccentricity error caused by perspective distortion to be corrected, improving positioning accuracy, and it also prevents adhesion between the central positioning circle and the circular coded band, improving the recognition rate. Next, a positioning algorithm is proposed to precisely locate the true projected center of the CCCT. In this algorithm, adaptive brightness adjustment is employed to address poor illumination, and the eccentricity error caused by flat viewing angles is corrected based on a practical error-compensation model. Finally, an approach is presented to cope with decoding failures at flat viewing angles, making the use of CCCTs more robust in challenging environments.

To verify the accuracy and reliability of the proposed positioning algorithm, simulation experiments were conducted under different viewing angles, image resolutions, and image noise levels. The results indicate that at a large viewing angle of 70°, the eccentricity errors of the larger and smaller circles are reduced by 95% and 77%, respectively, after correction with the proposed method. The front-view image resolution has little or no impact on the positioning algorithm. As the image noise increases, the eccentricity errors of the outer and inner ellipse centers obtained by fitting, as well as that of the corrected CCCT center, change negligibly; however, the eccentricity error of the outer ellipse center is five times that of the inner ellipse center and twenty times that of the corrected center.

To evaluate the proposed identification method, experiments were conducted under different lighting conditions and viewing angles, using the recognition rate of all the CCCTs in the field of view as the criterion. The recognition rate for all 20 coded targets remains 100% as the average gray value varies from 12 (underexposure) to 215 (overexposure) gray levels, and all the coded targets are correctly identified even at the flat viewing angle of 70°.

To further verify the validity of the approach, the proposed CCCTs were used to measure the wing deformation of an unmanned aerial vehicle. With the wing loaded with 6 kg, the error of the vision method based on the corrected center is reduced by up to 18.54% compared with the vision method based only on the ellipse center. An underexposed image and an overexposed image captured under difficult illumination conditions were used to demonstrate the identification performance. The identification results show that the proposed algorithms cope effectively with challenges such as poor illumination and flat viewing angles.

Given their strong positioning and identification performance under poor illumination and flat viewing angles, we expect the proposed CCCT and its detection algorithms to find wide application in vision-based industrial measurement.

Author Contributions

Y.L., X.G. and T.S. designed the experiments; Y.L. and X.S. performed the experiments and analyzed the data; Y.L. wrote the paper; supervision, X.G., T.S. and Q.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China [Nos. 11372256, 11502216, 11527803, 11602201, 11602202 and 12072279] and the Natural Science Basic Research Plan in Shaanxi province of China [No. 2018JQ1060].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, T.; Zhou, Z. An Improved Vision Method for Robust monitoring of Multi-Point Dynamic Displacements with Smartphones in an Interference Environment. Sensors 2020, 20, 5929.

- Spencer, B.F.; Hoskere, V.; Narazaki, Y. Advances in computer vision-based civil infrastructure inspection and monitoring. Engineering 2019, 5, 199–248.

- Liu, J.; Wu, J.; Li, X. Robust and Accurate Hand–Eye Calibration Method Based on Schur Matric Decomposition. Sensors 2019, 19, 4490.

- Feng, D.; Feng, M.Q. Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection—A review. Eng. Struct. 2018, 156, 105–117.

- Yu, C.; Chen, X.; Xi, J. Determination of optimal measurement configurations for self-calibrating a robotic visual inspection system with multiple point constraints. Int. J. Adv. Manuf. Technol. 2018, 96, 3365–3375.

- Jeong, H.; Yu, J.; Lee, D. Calibration of In-Plane Center Alignment Errors in the Installation of a Circular Slide with Machine-Vision Sensor and a Reflective Marker. Sensors 2020, 20, 5916.

- Chen, R.; Li, Z.; Zhong, K.; Liu, X.; Wu, Y.; Wang, C.; Shi, Y. A Stereo-Vision System for Measuring the Ram Speed of Steam Hammers in an Environment with a Large Field of View and Strong Vibrations. Sensors 2019, 19, 996.

- Dworakowski, Z.; Kohut, P.; Gallina, A.; Holak, K.; Uhl, T. Vision-based algorithms for damage detection and localization in structural health monitoring. Struct. Control. Health Monit. 2016, 23, 35–50.

- Luo, L.; Feng, M.Q.; Wu, Z.Y. Robust vision sensor for multi-point displacement monitoring of bridges in the field. Eng. Struct. 2018, 163, 255–266.

- Brosnan, T.; Sun, D.W. Improving quality inspection of food products by computer vision-A review. J. Food Eng. 2004, 61, 3–16.

- Srivastava, B.; Anvikar, A.R.; Ghosh, S.K.; Mishra, N.; Kumar, N.; Houri-Yafin, A.; Pollak, J.J.; Salpeter, S.J.; Valecha, N. Computer-vision-based technology for fast, accurate and cost effective diagnosis of malaria. Malar. J. 2015, 14, 1–6.

- Tateno, S.; Meng, F.; Qian, R.; Hachiya, Y. Privacy-Preserved Fall Detection Method with Three-Dimensional Convolutional Neural Network Using Low-Resolution Infrared Array Sensor. Sensors 2020, 20, 5957.

- Liu, Z.; Liu, W.; Han, Z. A High-Precision Detection Approach for Catenary Geometry Parameters of Electrical Railway. IEEE Trans. Instrum. Meas. 2017, 66, 1798–1808.

- Sivaraman, S.; Trivedi, M.M. Looking at Vehicles on the Road: A Survey of Vision-Based Vehicle Detection, Tracking, and Behavior Analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

- Karwowski, K.; Mizan, M.; Karkosiński, D. Monitoring of current collectors on the railway line. Transport 2016, 33, 177–185.

- Liu, G.; Xu, C.; Zhu, Y.; Zhao, J. Monocular vision-based pose determination in close proximity for low impact docking. Sensors 2019, 19, 3261.

- Chen, L.; Huang, P.; Cai, J.; Meng, Z.; Liu, Z. A non-cooperative target grasping position prediction model for tethered space robot. Aerosp. Sci. Technol. 2016, 58, 571–581.

- Liu, T.; Burner, A.W.; Jones, T.W.; Barrows, D.A. Photogrammetric Techniques for Aerospace Applications. Prog. Aerosp. Sci. 2012, 54, 1–58.

- Zhang, J.; Ren, L.; Deng, H.; Ma, M.; Zhong, X.; Wen, P. Measurement of Unmanned Aerial Vehicle Attitude Angles Based on a Single Captured Image. Sensors 2018, 18, 2655.

- Kim, J.; Jeong, Y.; Lee, H.; Yun, H. Marker-Based Structural Displacement Measurement Models with Camera Movement Error Correction Using Image Matching and Anomaly Detection. Sensors 2020, 20, 5676.

- Berveglieri, A.; Tommaselli, A.M.G. Reconstruction of Cylindrical Surfaces Using Digital Image Correlation. Sensors 2018, 18, 4183.

- Ahn, S.; Rauh, W. Circular Coded Target for Automation of Optical 3D-Measurement and Camera Calibration. Int. J. Pattern Recognit. Artif. Intell. 2001, 15, 905–919.

- Scaioni, M.; Feng, T.; Barazzetti, L.; Previtali, M.; Lu, P.; Qiao, G.; Wu, H.; Chen, W.; Tong, X.; Wang, W.; et al. Some applications of 2-D and 3-D photogrammetry during laboratory experiments for hydrogeological risk assessment. Geomat. Nat. Hazards Risk 2015, 6, 473–496.

- Liu, C.; Dong, S.; Mokhtar, M.; He, X.; Lu, J.; Wu, X. Multicamera system extrinsic stability analysis and large-span truss string structure displacement measurement. Appl. Opt. 2016, 55, 8153–8161.

- Huang, G. Study on the Key Technologies of Digital Close Range Industrial Photogrammetry and Applications. Ph.D. Thesis, Tianjin University, Tianjin, China, May 2005.

- Xiao, Z. Study on the Key Technologies of 3D Shape and Deformation Measurement Based on Industrial Photogrammetry and Computer Vision. Ph.D. Thesis, Xi’an Jiaotong University, Xi’an, China, May 2010.

- Xiao, Z.; Liang, J.; Yu, D.; Tang, Z.; Asundi, A. An accurate stereo vision system using cross-shaped target self-calibration method based on photogrammetry. Opt. Laser Technol. 2010, 48, 1251–1261.

- Fraser, C.S. Innovations in automation for vision metrology systems. Photogramm. Rec. 1997, 15, 901–911.

- Ahn, S.J.; Schultes, M. A new circular coded target for the automation of photogrammetric 3D surface measurements. In Optical 3-D Measurement Techniques IV, Proceedings of the 4th Conference on Optical 3D Measurement Techniques, Zurich, Switzerland, 29 September–2 October 1997; pp. 225–234.

- Hattori, S.; Akimoto, K.; Fraser, C.; Imoto, H. Automated Procedures with Coded Targets in Industrial Vision Metrology. Photogramm. Eng. Remote Sens. 2002, 68, 441–446.

- Shortis, M.R.; Seager, J.W.; Robson, S.; Harvey, E.S. Automatic recognition of coded targets based on a Hough transform and segment matching. In Proceedings of the SPIE 5013, Santa Clara, CA, USA, 20–24 January 2003; pp. 202–208.

- Shortis, M.R.; Seager, J.W. A practical target recognition system for close range photogrammetry. Photogramm. Rec. 2015, 29, 337–355.

- Sukhovilov, B.; Sartasov, E.; Grigorova, E.A. Improving the accuracy of determining the position of the code marks in the problems of constructing three-dimensional models of objects. In Proceedings of the 2nd International Conference on Industrial Engineering, Applications and Manufacturing, Chelyabinsk, Russia, 19–20 May 2016; pp. 1–4.

- Tushev, S.; Sukhovilov, B.; Sartasov, E. Architecture of industrial close-range photogrammetric system with multi-functional coded targets. In Proceedings of the 2nd International Ural Conference on Measurements, Chelyabinsk, Russia, 16–19 October 2017; pp. 435–442.

- Tushev, S.; Sukhovilov, B.; Sartasov, E. Robust coded target recognition in adverse light conditions. In Proceedings of the International Conference on Industrial Engineering, Applications and Manufacturing, Moscow, Russia, 15–18 May 2018; pp. 790–797.

- Knyaz, V.A.; Sibiryakov, A.V. The development of new coded targets for automated point identification and non-contact 3D surface measurements. Int. Arch. Photogramm. Remote Sens. 1998, 32, 80–85.

- Guo, Z.; Liu, X.; Wang, H.; Zheng, Z. An ellipse detection method for 3D head image fusion based on color-coded mark points. Front. Optoelectron. 2012, 5, 395–399.

- Yang, J.; Han, J.D.; Qin, P.L. Correcting error on recognition of coded points for photogrammetry. Opt. Precis. Eng. 2012, 20, 2293–2299.

- Deng, H.; Hu, G.; Zhang, J.; Ma, M.; Zhong, X.; Yang, Z. An Initial Dot Encoding Scheme with Significantly Improved Robustness and Numbers. Appl. Sci. 2019, 9, 4915.

- Schneider, C.T.; Sinnreich, K. Optical 3-D measurement systems for quality control in industry. Int. Arch. Photogramm. Remote Sens. 1992, 29, 56–59.

- Van Den Heuvel, F.; Kroon, R.; Poole, R. Digital close-range photogrammetry using artificial targets. Int. Arch. Photogramm. Remote Sens. 1992, 29, 222–229.

- Wang, C.; Dong, M.; Lv, N.; Zhu, L. New Encode Method of Measurement Targets and Its Recognition Algorithm. Tool Technol. 2007, 17, 26–30.

- Duan, K.; Liu, X. Research on coded reference point detection in photogrammetry. Transducer Microsyst. Technol. 2010, 29, 74–78.

- Liu, J.; Jiang, Z.; Hu, H.; Yin, X. A Rapid and Automatic Feature Extraction Method for Artificial Targets Used in Industrial Photogrammetry Applications. Appl. Mech. Mater. 2012, 170, 2995–2998.

- Huang, X.; Su, X.; Liu, W. Recognition of Center Circles for Encoded Targets in Digital Close-Range Industrial Photogrammetry. J. Robot. Mechatron. 2015, 27, 208–214.

- Chen, M.; Zhang, L. Recognition of Motion Blurred Coded Targets Based on SIFT. Inf. Technol. 2018, 4, 83–85.

- Miguel, F.; Carmen, A.; Bertelsen, A.; Mendikute, A. Industrial Non-Intrusive Coded-Target Identification and Decoding Application. In Proceedings of the 6th Iberian Conference on Pattern Recognition and Image Analysis, Madeira, Portugal, 5–7 June 2013; pp. 790–797.

- Scaioni, M.; Feng, T.; Barazzetti, L.; Previtali, M.; Roncella, R. Image-based Deformation Measurement. Appl. Geomat. 2015, 7, 75–90.

- Bao, Y.; Shang, Y.; Sun, X.; Zhou, J. A Robust Recognition and Accurate Locating Method for Circular Coded Diagonal Target. In Proceedings of the Applied Optics and Photonics China: 3D Measurement Technology for Intelligent Manufacturing, Beijing, China, 24 October 2017; p. 104580Q.

- Jin, T.; Dong, X. Designing and Decoding Algorithm of Circular Coded Target. Appl. Res. Comput. 2019, 36, 263–267.

- Moriyama, T.; Kochi, N.; Yamada, M.; Fukmaya, N. Automatic target-identification with the color-coded-targets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 39–44.

- Wang, D. Study on the Embedded Digital Close-Range Photogrammetry System. Master’s Thesis, PLA Information Engineering University, Zhengzhou, China, April 2012.

- Yang, X.; Fang, S.; Kong, B.; Li, Y. Design of a color coded target for vision measurements. Opt. Int. J. Light Electron Opt. 2014, 125, 3727–3732.

- Boden, F.; Lawson, N.; Jentink, H.W.; Kompenhans, J. Advanced In-Flight Measurement Techniques; Springer: Berlin, Germany, 2013; pp. 19–20.

- Li, L.; Liang, J.; Guo, X.; Guo, C.; Hu, H.; Tang, Z. Full-Field Wing Deformation Measurement Scheme for In-Flight Cantilever Monoplane Based on 3D Digital Image Correlation. Meas. Sci. Technol. 2014, 25, 11260–11276.

- Ahn, S.; Warnecke, H. Systematic Geometric Image Measurement Errors of Circular Object Targets: Mathematical Formulation and Correction. Photogramm. Rec. 1999, 16, 485–502.

- Liu, Y.; Su, X.; Guo, X.; Suo, T.; Li, Y.; Yu, Q. A novel method on suitable size selection of artificial circular targets in optical non-contact measurement. In Proceedings of the 8th Applied Optics and Photonics China, Beijing, China, 7–9 July 2019; p. 11338.

- Chen, R. Adaptive Nighttime Image Enhancement Algorithm Based on FPGA. Master’s Thesis, Guangdong University of Technology, Guangdong, China, May 2015.

- Tang, J.; Zhu, W.; Bi, Y. A Computer Vision-Based Navigation and Localization Method for Station-Moving Aircraft Transport Platform with Dual Cameras. Sensors 2020, 20, 279.

- He, X.C.; Yung, N.H.C. Corner detector based on global and local curvature properties. Opt. Eng. 2008, 47, 057008.

- He, D.; Liu, X.; Peng, X.; Ding, Y.; Gao, B. Eccentricity Error Identification and Compensation for High-Accuracy 3D Optical Measurement. Meas. Sci. Technol. 2013, 24, 075402.

- He, X.C.; Yung, N.H.C. Curvature Scale Space Corner Detector with Adaptive Threshold and Dynamic Region of Support. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 26−26 August 2004; pp. 23–26.

- Zhang, Z.Y. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).