Real-Time Multiobject Tracking Based on Multiway Concurrency

Abstract

1. Introduction

2. Related Works

3. Methodology

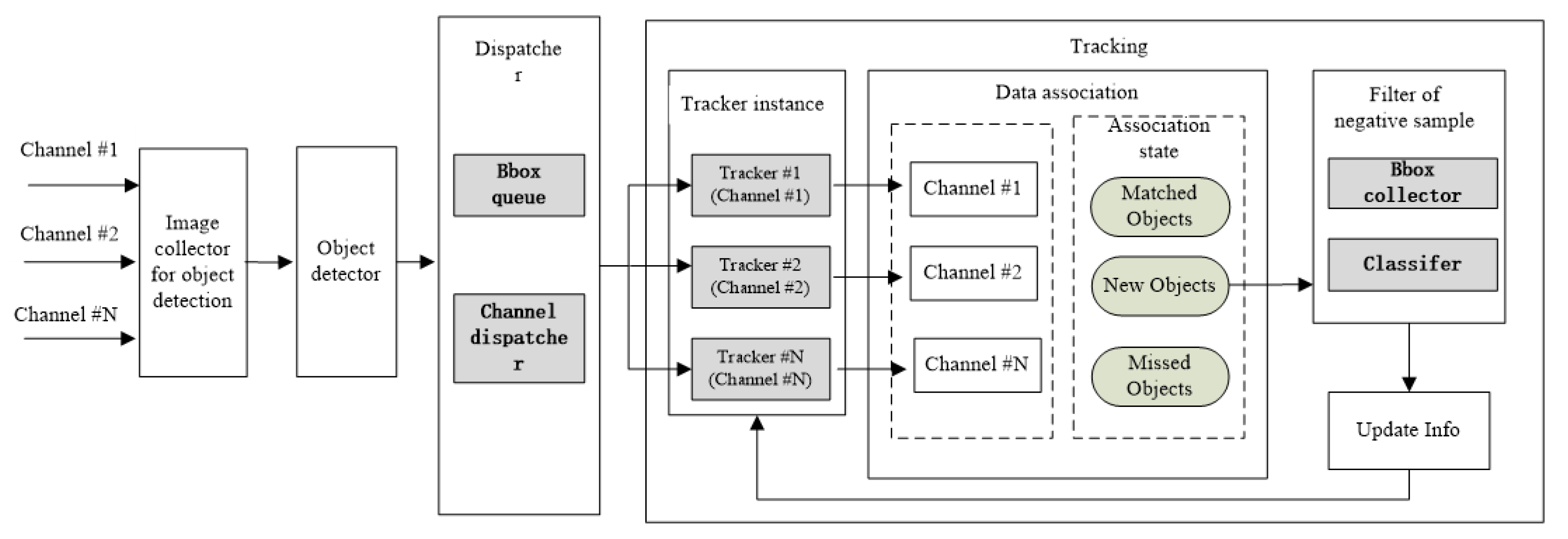

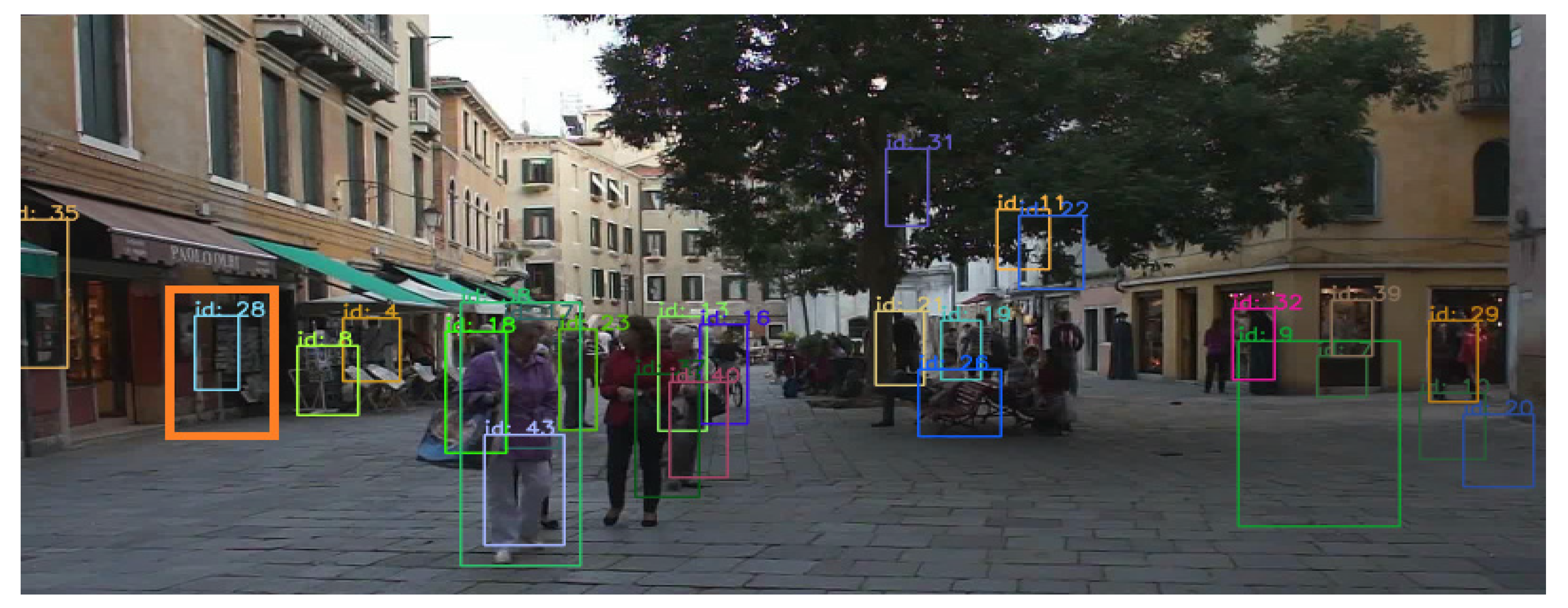

3.1. MOT Framework of Multiway Concurrency

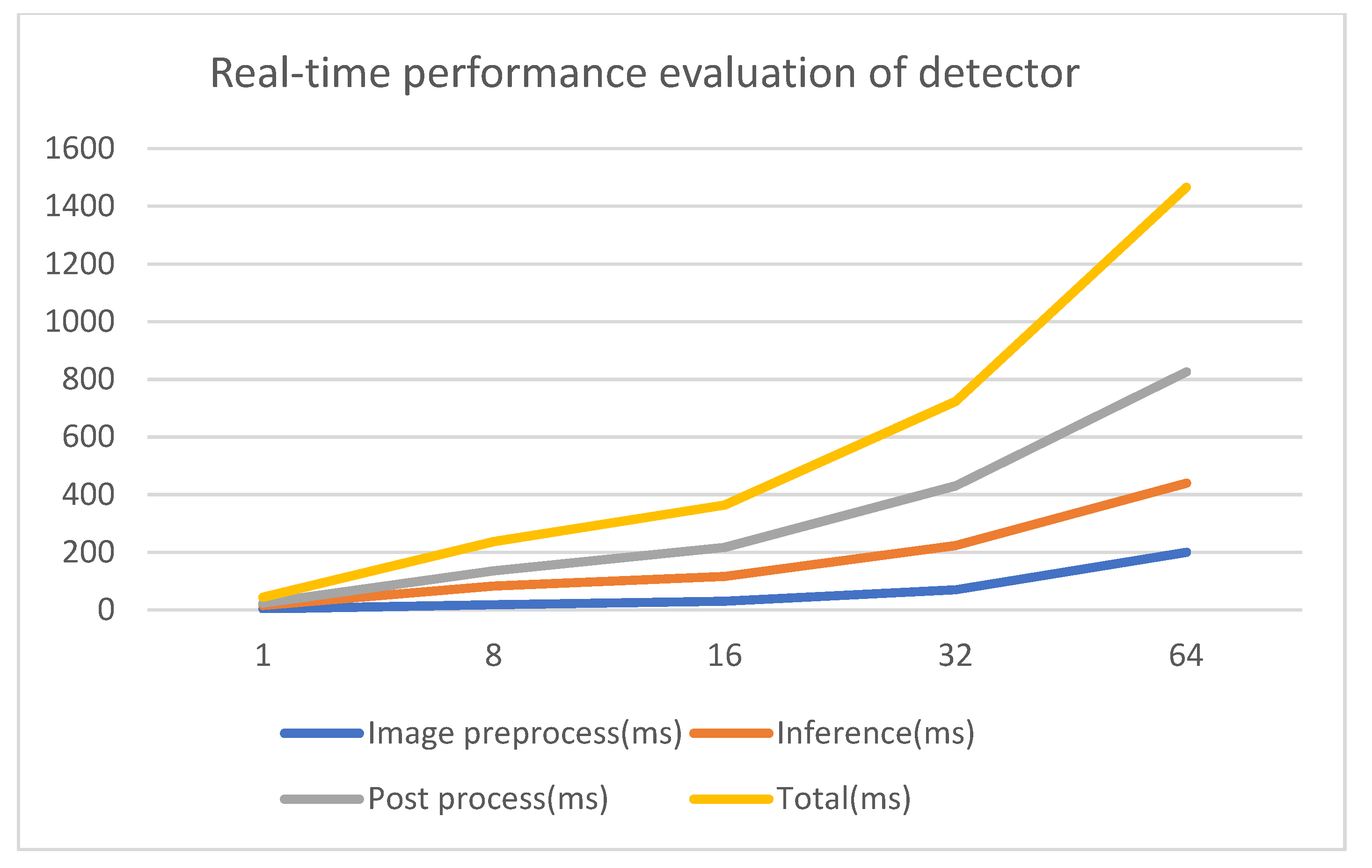

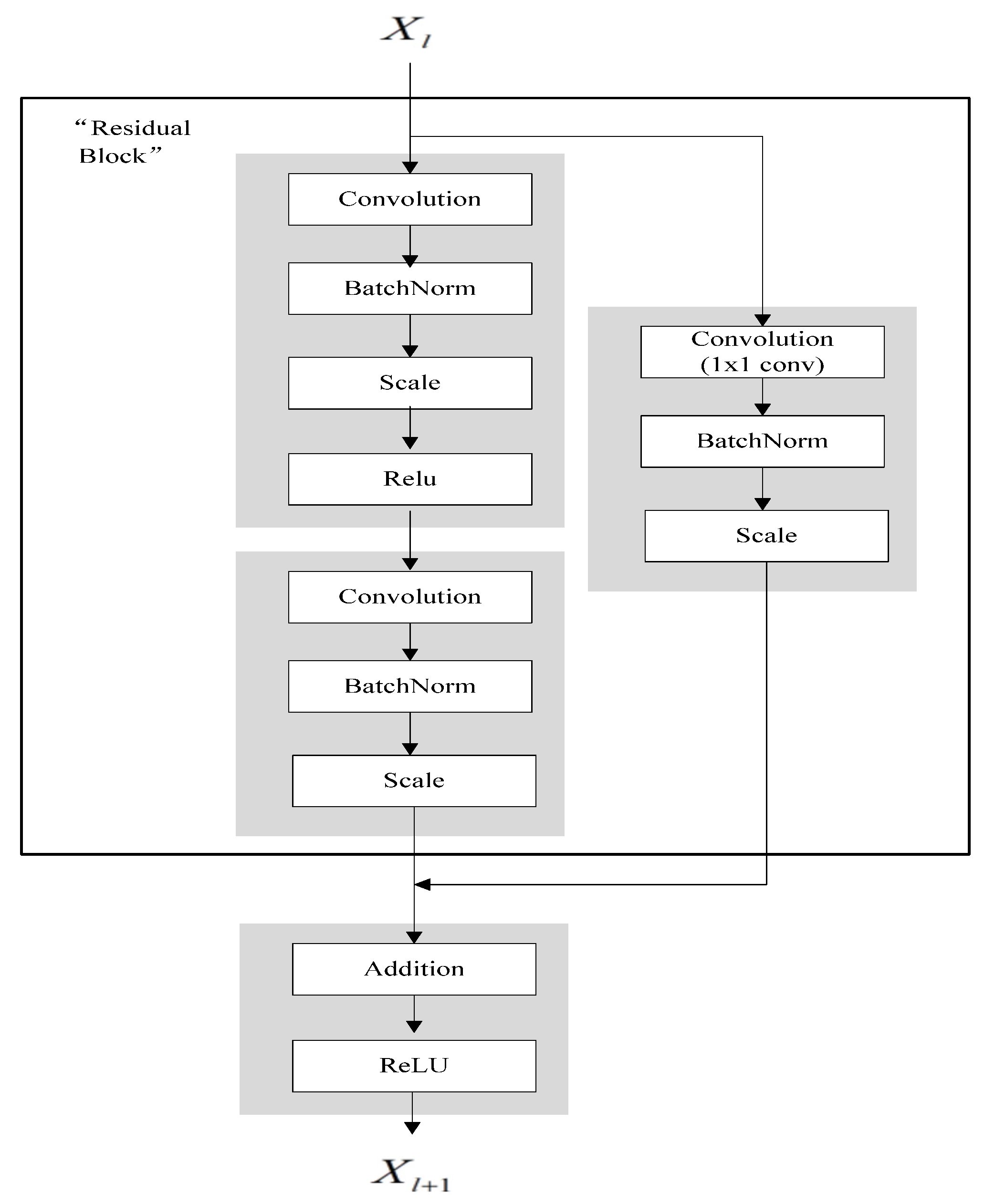

3.2. Multitarget Detection

3.3. MOT Based on Detection Driven

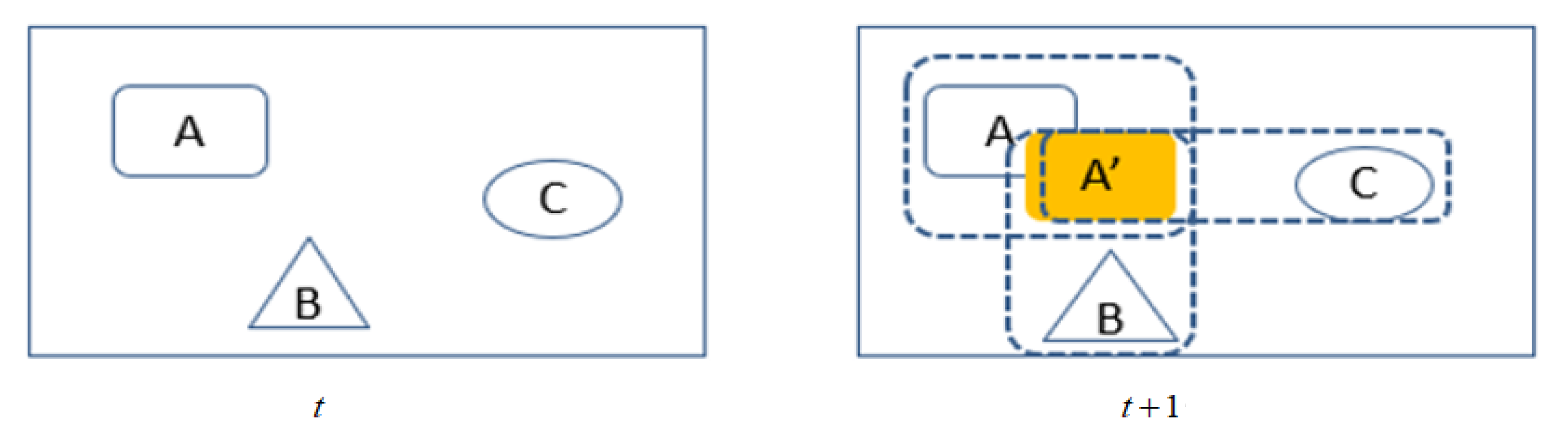

3.3.1. Data Association

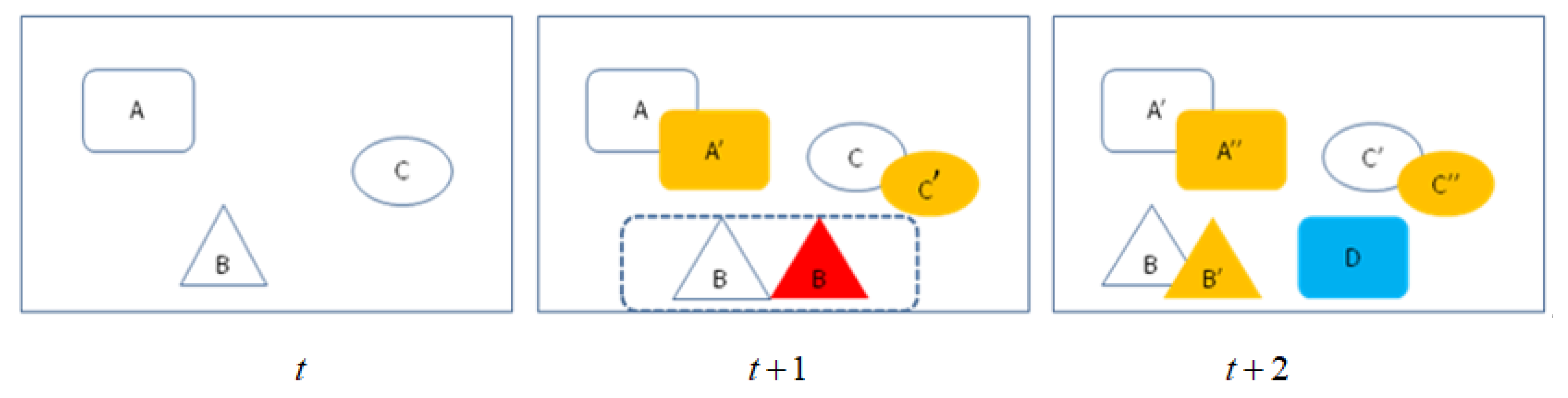

3.3.2. Object Creation and Disappearance

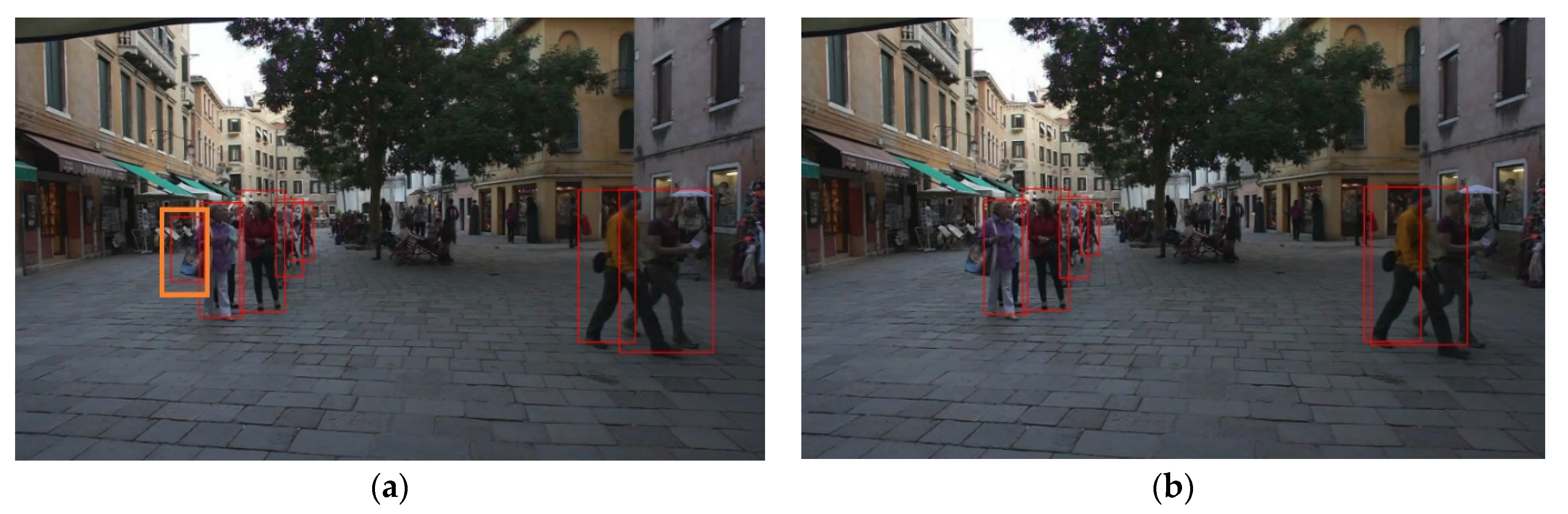

3.3.3. Filter of Negative Sample

4. Results

4.1. Experiment Environment

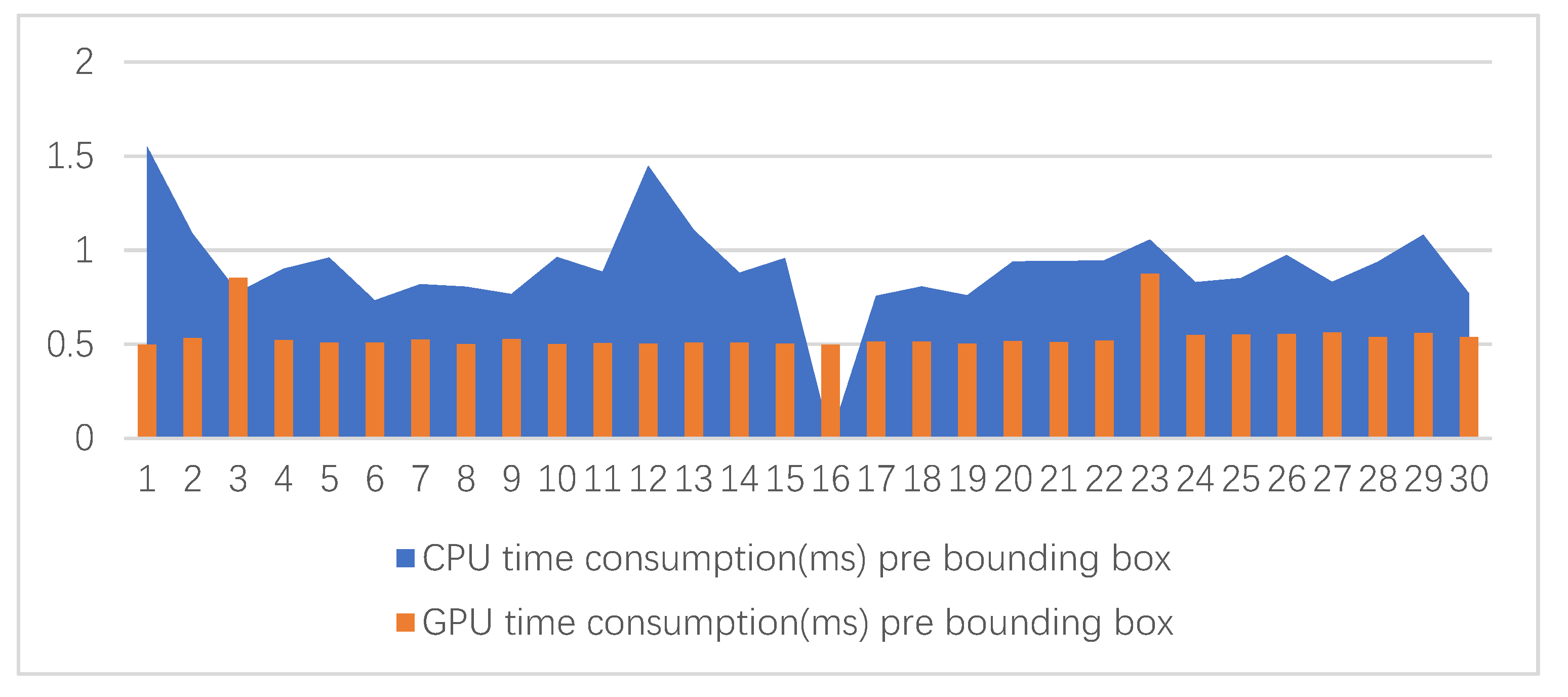

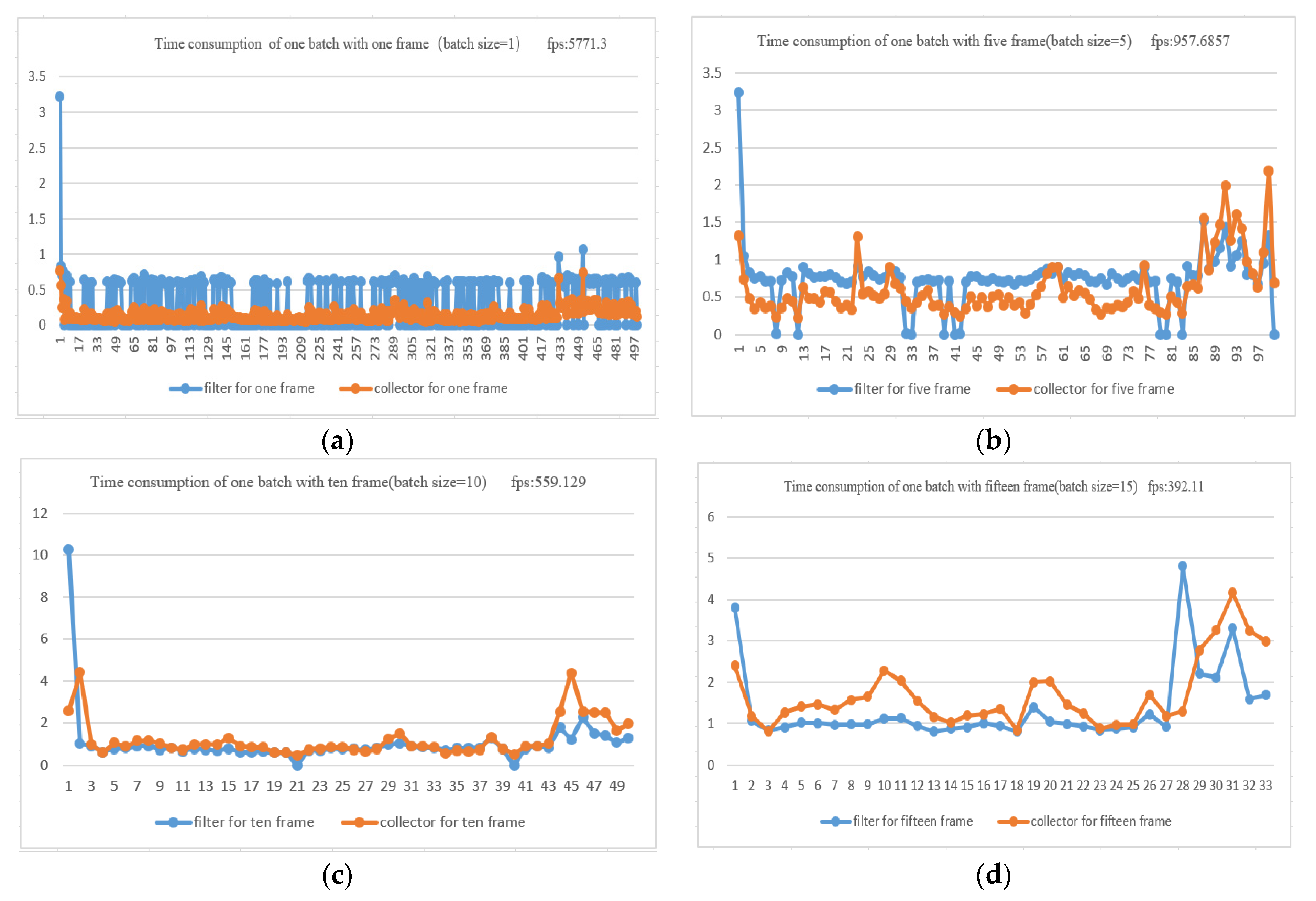

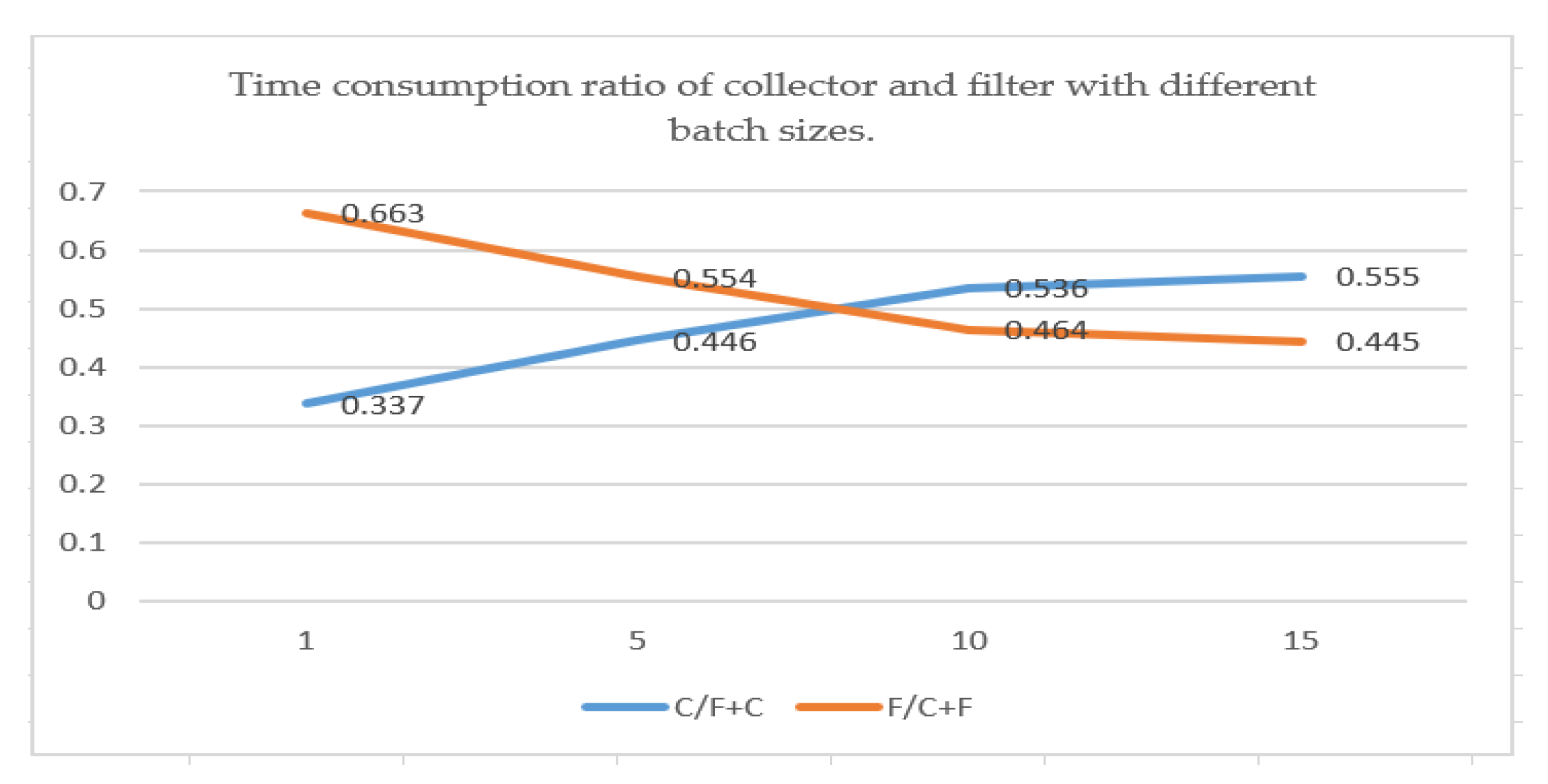

4.2. Real-Time Evaluation

4.3. Performance Evaluation

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, X. Intelligent multi-camera video surveillance: A review. Pattern Recognit. Lett. 2013, 34, 3–19.
2. Pfister, T.; Charles, J.; Zisserman, A. Flowing convnets for human pose estimation in videos. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1913–1921.
3. Choi, W.; Savarese, S. A unified framework for multi-target tracking and collective activity recognition. In Proceedings of the 12th European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 215–230.
4. Hu, W.; Tan, T.; Wang, L.; Maybank, S. A survey on visual surveillance of object motion and behaviors. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2004, 34, 334–352.
5. Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Zhao, X.; Kim, T.K. Multiple object tracking: A literature review. arXiv 2014, arXiv:1409.7618.
6. Manzo, M.; Pellino, S. FastGCN + ARSRGemb: A novel framework for object recognition. arXiv 2020, arXiv:2002.08629.
7. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.
8. Kalman, R. A New Approach to Linear Filtering and Prediction Problems. ASME J. Basic Eng. 1960, 82, 35–45.
9. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.
10. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.
11. Zheng, Z.; Yang, X.; Yu, Z.; Zheng, L.; Yang, Y.; Kautz, J. Joint Discriminative and Generative Learning for Person Re-Identification. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2133–2142.
12. Kim, C.; Li, F.; Ciptadi, A.; Rehg, J.M. Multiple Hypothesis Tracking Revisited. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4696–4704.
13. Chu, Q.; Ouyang, W.; Li, H.; Wang, X.; Liu, B.; Yu, N. Online multi-object tracking using CNN-based single object tracker with spatial-temporal attention mechanism. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4846–4855.
14. Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. arXiv 2019, arXiv:1909.12605.
15. Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A benchmark for multi-object tracking. arXiv 2016, arXiv:1603.00831.
16. Fang, K.; Xiang, Y.; Li, X.; Savarese, S. Recurrent Autoregressive Networks for Online Multi-Object Tracking. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1–10.
17. Leal-Taixé, L.; Milan, A.; Reid, I.; Roth, S.; Schindler, K. MOTChallenge 2015: Towards a Benchmark for Multi-Target Tracking. arXiv 2015, arXiv:1504.01942.
18. Tian, W.; Lauer, M.; Chen, L. Online Multi-Object Tracking Using Joint Domain Information in Traffic Scenarios. IEEE Trans. Intell. Transp. Syst. 2019, 21, 374–384.
19. Lee, J.; Kim, S.; Ko, B.C. Online Multiple Object Tracking Using Rule Distillated Siamese Random Forest. IEEE Access 2020, 8, 182828–182841.
20. Pang, B.; Li, Y.; Zhang, Y.; Li, M.; Lu, C. TubeTK: Adopting Tubes to Track Multi-Object in a One-Step Training Model. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6307–6317.
21. Zhang, Y.; Sheng, H.; Wu, Y.; Wan, S.; Lyu, W.; Ke, W.; Xiong, Z. Long-term tracking with deep tracklet association. IEEE Trans. Image Process. 2020, 29, 6694–6706.
22. Milioto, A.; Stachniss, C. Bonnet: An open-source training and deployment framework for semantic segmentation in robotics using CNNs. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7094–7100.
23. Jain, P.; Mo, X.; Jain, A.; Subbaraj, H.; Durrani, R.S.; Tumanov, A.; Stoica, I. Dynamic space-time scheduling for GPU inference. arXiv 2018, arXiv:1901.00041.
24. Alyamkin, S.; Ardi, M.; Brighton, A.; Berg, A.C.; Chen, Y.; Cheng, H.P.; Gauen, K. 2018 Low-Power Image Recognition Challenge. arXiv 2018, arXiv:1810.01732.
25. Singhani, A. Real-time freespace segmentation on autonomous robots for detection of obstacles and drop-offs. arXiv 2019, arXiv:1902.00842.
26. Wong, A.; Shafiee, M.J.; Li, F.; Chwyl, B. Tiny SSD: A tiny single-shot detection deep convolutional neural network for real-time embedded object detection. In Proceedings of the 2018 15th Conference on Computer and Robot Vision (CRV), Toronto, ON, Canada, 8–10 May 2018; pp. 95–101.
27. Lan, W.; Dang, J.; Wang, Y.; Wang, S. Pedestrian detection based on YOLO network model. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 1547–1551.
28. Mane, S.; Mangale, S. Moving object detection and tracking using convolutional neural networks. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 1809–1813.
29. Zhang, Y.; Huang, Y.; Wang, L. What makes for good multiple object trackers? In Proceedings of the 2016 IEEE International Conference on Digital Signal Processing (DSP), Beijing, China, 16–18 October 2016; pp. 467–471.
30. Abbas, S.M.; Singh, S.N. Region-based object detection and classification using faster R-CNN. In Proceedings of the 2018 4th International Conference on Computational Intelligence & Communication Technology (CICT), Ghaziabad, India, 9–10 February 2018; pp. 1–6.
31. Özer, C.; Gürkan, F.; Günsel, B. Object tracking by deep object detectors and particle filtering. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference (SIU), Izmir, Turkey, 2–5 May 2018; pp. 1–4.
32. Liu, Y.; Wang, P.; Wang, H. Target tracking algorithm based on deep learning and multi-video monitoring. In Proceedings of the 2018 5th International Conference on Systems and Informatics (ICSAI), Nanjing, China, 10–12 November 2018; pp. 440–444.
33. Zhao, X.; Li, W.; Zhang, Y.; Gulliver, T.A.; Chang, S.; Feng, Z. A faster RCNN-based pedestrian detection system. In Proceedings of the 2016 IEEE 84th Vehicular Technology Conference (VTC-Fall), Montreal, QC, Canada, 18–21 September 2016; pp. 1–5.
34. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
35. Arulampalam, S.M.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188.
36. Li, Y.F.; Zhou, S.R. Survey of online multi-object video tracking algorithms. Comput. Technol. Autom. 2018, 37, 73–82.
37. Yang, F.D. Summary of data association methods in multi-target tracking. Sci. Technol. Vis. 2016, 6, 164–194.
38. Ciaparrone, G.; Luque Sánchez, F.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep learning in video multi-object tracking: A survey. Neurocomputing 2020, 381, 61–88.
39. Ran, N.; Kong, L.; Wang, Y.; Liu, Q. A robust multi-athlete tracking algorithm by exploiting discriminant features and long-term dependencies. In Proceedings of the 25th International Conference on MultiMedia Modeling (MMM 2019), Thessaloniki, Greece, 8–11 January 2019; Springer: Cham, Switzerland, 2019; pp. 411–423.
40. Chen, L.T.; Peng, X.J.; Ren, M.W. Recurrent metric networks and batch multiple hypothesis for multi-object tracking. IEEE Access 2019, 7, 3093–3105.
41. Xiang, J.; Zhang, G.S.; Hou, J.H. Online multi-object tracking based on feature representation and Bayesian filtering within a deep learning architecture. IEEE Access 2019, 7, 27923–27935.
42. Yoon, K.; Kim, D.Y.; Yoon, Y.-C.; Jeon, M. Data association for multi-object tracking via deep neural networks. Sensors 2019, 19, 559.
43. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.
44. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Proceedings of the 2012 European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.
45. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.
46. Danelljan, M.; Häger, G.; Khan, F.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the 2014 British Machine Vision Conference (BMVC), Nottingham, UK, 1–5 September 2014.
47. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Discriminative Scale Space Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1561–1575.
48. Bernardin, K.; Stiefelhagen, R. Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics. EURASIP J. Image Video Process. 2008, 1–10.
49. Yu, F.; Li, W.; Li, Q.; Liu, Y.; Shi, X.; Yan, J. POI: Multiple Object Tracking with High Performance Detection and Appearance Feature. In Proceedings of the 2016 European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 36–42.
50. Bergmann, P.; Meinhardt, T.; Leal-Taixé, L. Tracking without bells and whistles. In Proceedings of the 2019 IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 941–951.
51. Peng, J.; Wang, T.; Lin, W.; Wang, J.; See, J.; Wen, S.; Ding, E. TPM: Multiple Object Tracking with Tracklet-Plane Matching. Pattern Recognit. 2020, 107480.

| Layer | Kernel Size/Stride | Number of Outputs |
|---|---|---|
| Conv1 | 7 × 7/2 | 32 |
| Pool2 | 3 × 3/2 | 32 |
| Residual 3 | 3 × 3/1 | 32 |
| Residual 4 | 3 × 3/1, 1 × 1/2 | 128 |
| Residual 5 | 3 × 3/1, 1 × 1/2 | 256 |
| Residual 6 | 3 × 3/2, 1 × 1/2 | 128 |
| FC7 | | 2 |
| SoftMax | | 2 |
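The last two rows describe a two-way classifier: FC7 produces two scores and SoftMax turns them into class probabilities. The sketch below shows how such an output could be used to discard negative samples, in the spirit of Section 3.3.3. The class ordering (index 1 = object), the 0.5 threshold, and the function names are hypothetical rather than taken from the paper, and the CNN itself is replaced by precomputed FC7 logits.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def filter_negative_samples(
    detections: Sequence[str],
    fc7_logits: Sequence[Tuple[float, float]],
    threshold: float = 0.5,  # hypothetical decision threshold
) -> List[str]:
    """Keep detections whose 'object' probability reaches the threshold.

    Assumption: index 0 = negative (background) class, index 1 = object class.
    """
    kept = []
    for det, logits in zip(detections, fc7_logits):
        p_object = softmax(logits)[1]
        if p_object >= threshold:
            kept.append(det)
    return kept


if __name__ == "__main__":
    dets = ["box_a", "box_b", "box_c"]
    logits = [(0.2, 2.0), (3.0, -1.0), (0.0, 0.0)]
    print(filter_negative_samples(dets, logits))  # ['box_a', 'box_c']
```

Raising the threshold would make such a filter stricter, trading fewer false positives for more missed objects.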

| Batch Size (Concurrency) | fps |
|---|---|
| 1 | 5771.3 |
| 5 | 957.6857 |
| 10 | 559.129 |
| 15 | 392.11 |
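The table does not state whether fps counts batched calls or individual samples. Under the assumption that one "frame" is one batched call processing `batch` samples, the figures can be converted into per-call latency and aggregate samples per second, which makes the effect of concurrency easier to compare. The helper names are hypothetical.

```python
# (batch size, fps) pairs copied from the table above
MEASUREMENTS = [(1, 5771.3), (5, 957.6857), (10, 559.129), (15, 392.11)]


def latency_ms(fps: float) -> float:
    """Time per batched call, in milliseconds."""
    return 1000.0 / fps


def samples_per_second(batch: int, fps: float) -> float:
    """Aggregate throughput, assuming each call processes `batch` samples."""
    return batch * fps


if __name__ == "__main__":
    for batch, fps in MEASUREMENTS:
        print(f"batch={batch:2d}  latency={latency_ms(fps):.3f} ms  "
              f"throughput={samples_per_second(batch, fps):.1f} samples/s")
```

Read this way, aggregate throughput stays between roughly 4,800 and 5,900 samples per second for all four batch sizes, while the latency of each call grows with the batch size.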

| Evaluation Indicator | Definition |
|---|---|
| MOTA | Multiple object tracking accuracy [48] |
| MOTP | Multiple object tracking precision [48] |
| MT | Ratio of successfully tracked target trajectories to ground-truth trajectories |
| ML | Ratio of lost target trajectories to ground-truth trajectories |
| IDs | Total number of target identity switches during the whole tracking process |
| FP | Total number of false positives |
| FN | Total number of false negatives |
| Frag | Number of times a ground-truth trajectory is interrupted |
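In the CLEAR MOT metrics [48], MOTA combines three of the error counts above: MOTA = 1 - (FN + FP + IDs) / GT, where GT is the total number of ground-truth boxes. The sketch below implements that formula together with a simplified per-trajectory count of identity switches (the matched tracker ID changes between matches) and fragmentations (tracking resumes after one or more missed frames). The ground-truth total of 182,326 boxes used in the example is an assumption (the commonly cited size of the MOT16 test set); with it, the formula reproduces the MOTA reported for the SORT and DeepSort rows of the results table. The function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """CLEAR MOT accuracy: 1 - (FN + FP + IDs) / number of ground-truth boxes."""
    return 1.0 - (fn + fp + idsw) / num_gt


def idsw_and_frag(matched_ids: Sequence[Optional[int]]) -> Tuple[int, int]:
    """Count identity switches and fragmentations for ONE ground-truth trajectory.

    matched_ids[t] is the tracker ID matched to the object in frame t,
    or None when the object is missed in that frame (simplified definition).
    """
    idsw = frag = 0
    last_id: Optional[int] = None  # tracker ID of the most recent matched frame
    prev_matched = False           # whether the previous frame was matched
    for tid in matched_ids:
        if tid is not None:
            if last_id is not None and tid != last_id:
                idsw += 1          # identity changed since the last match
            if last_id is not None and not prev_matched:
                frag += 1          # tracking resumed after an interruption
            last_id = tid
        prev_matched = tid is not None
    return idsw, frag


if __name__ == "__main__":
    NUM_GT = 182_326  # assumption: ground-truth boxes in the MOT16 test set
    print(f"SORT MOTA = {100 * mota(63_245, 8_698, 1_423, NUM_GT):.1f}%")  # 59.8%
    print(idsw_and_frag([1, 1, None, 1, 2, 2, None, None, 2]))  # (1, 2)
```

In the second example the object is matched to tracker 1, lost for one frame, picked up again by tracker 1, handed over to tracker 2 (one switch), and lost for two frames before tracker 2 resumes (two interruptions in total).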

| Methods | MOTA | MOTP | MT | ML | IDs | FP | FN | Frag |
|---|---|---|---|---|---|---|---|---|
| SORT [7] | 59.8% | 79.6% | 25.4% | 22.7% | 1423 | 8698 | 63,245 | 1835 |
| DeepSort [10] | 61.4% | 79.1% | 32.8% | 18.2% | 781 | 12,852 | 56,668 | 2008 |
| MHT_DAM [12] | 45.8% | 76.3% | 16.2% | 43.2% | 590 | 6412 | 91,758 | 781 |
| POI [49] | 66.1% | 79.5% | 34.0% | 20.8% | 805 | 5061 | 55,914 | 3093 |
| RAN [16] | 63% | 78.8% | 39.9% | 22.1% | 482 | 13,663 | 53,248 | 1251 |
| SiameseRF+rule distillation [19] | 57.2% | 79.4% | 28.2% | 23.5% | 2000 | 7265 | 68,860 | 2520 |
| Tracktor++ [50] | 54.4% | 78.2% | 19% | 36.9% | 682 | 3280 | 79,149 | 1480 |
| TPM [51] | 51.3% | 75.2% | 18.7% | 40.8% | 569 | 2701 | 85,504 | 707 |
| Ours | 52.2% | 77.6% | 21.3% | 31% | 1209 | 8214 | 41,514 | 1311 |

| Module | Batch Size = 1 | Batch Size = 5 | Batch Size = 10 | Batch Size = 15 |
|---|---|---|---|---|
| Collector (C) | 0.147 ms | 0.599 ms | 1.211 ms | 1.694 ms |
| Filter (F) | 0.289 ms | 0.744 ms | 1.047 ms | 1.361 ms |
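The table lists the time taken by the Collector (C) and the Filter (F) at each batch size. Assuming these are per-batch times and that the two stages run one after the other for each batch, the cost per batched item can be estimated as (C + F) / batch size, which shows how batching amortizes the fixed overhead of each call. The sequential-stage assumption and the helper name are hypothetical.

```python
# Per-batch times in milliseconds, copied from the table above
COLLECTOR_MS = {1: 0.147, 5: 0.599, 10: 1.211, 15: 1.694}
FILTER_MS = {1: 0.289, 5: 0.744, 10: 1.047, 15: 1.361}


def ms_per_item(batch: int) -> float:
    """Collector + Filter time divided by the number of items in the batch.

    Assumes the two stages run sequentially and the times are per batch.
    """
    return (COLLECTOR_MS[batch] + FILTER_MS[batch]) / batch


if __name__ == "__main__":
    for batch in sorted(COLLECTOR_MS):
        print(f"batch={batch:2d}  {ms_per_item(batch):.3f} ms per item")
```

Under these assumptions, the per-item cost drops from about 0.44 ms at batch size 1 to about 0.20 ms at batch size 15.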

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gong, X.; Le, Z.; Wu, Y.; Wang, H. Real-Time Multiobject Tracking Based on Multiway Concurrency. Sensors 2021, 21, 685. https://doi.org/10.3390/s21030685

Gong X, Le Z, Wu Y, Wang H. Real-Time Multiobject Tracking Based on Multiway Concurrency. Sensors. 2021; 21(3):685. https://doi.org/10.3390/s21030685

Chicago/Turabian Style: Gong, Xuan, Zichun Le, Yukun Wu, and Hui Wang. 2021. "Real-Time Multiobject Tracking Based on Multiway Concurrency" Sensors 21, no. 3: 685. https://doi.org/10.3390/s21030685

APA Style: Gong, X., Le, Z., Wu, Y., & Wang, H. (2021). Real-Time Multiobject Tracking Based on Multiway Concurrency. Sensors, 21(3), 685. https://doi.org/10.3390/s21030685