Value Iteration Networks with Double Estimator for Planetary Rover Path Planning

Abstract

1. Introduction

- By introducing a double estimator approach, we propose the double value iteration network (dVIN), a variant of the value iteration network (VIN) that can effectively learn to plan from natural images.

- We design a two-stage training strategy, based on the characteristics of VI-based models, that helps them achieve better performance in large-size environments at a lower computational cost.

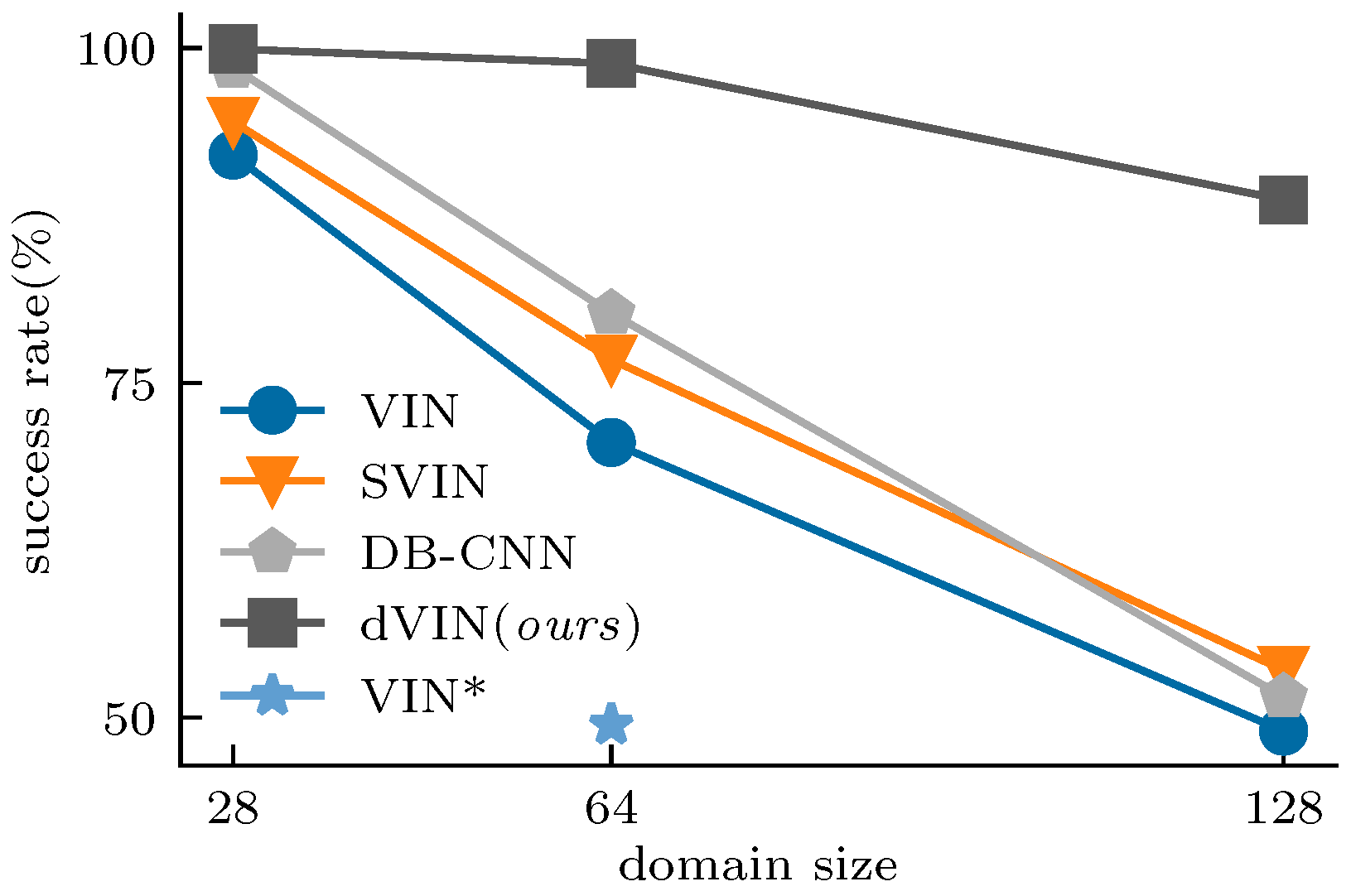

- Experimental results on grid-world maps and terrain images show that the dVIN significantly outperforms not only the previous VI-based models but also a CNN-based model.
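The double estimator named in the first contribution comes from Double Q-learning (van Hasselt, listed in the references). The generic idea can be sketched in a few lines: a single estimator takes the maximum of sample means, so it selects and evaluates an action on the same noisy data, whereas a double estimator selects the action on one sample set and evaluates it on an independent one. The sketch below is an illustration under stated assumptions, not the dVIN itself: the function names, the Gaussian noise model, and the setting in which every action has a true mean of zero are hypothetical choices made for the demonstration.

```python
import random


def single_estimate(samples_per_action):
    """Max of sample means: the same data selects and evaluates the action."""
    return max(sum(s) / len(s) for s in samples_per_action)


def double_estimate(samples_a, samples_b):
    """Select the best action on set A, then evaluate that action on set B."""
    means_a = [sum(s) / len(s) for s in samples_a]
    best = max(range(len(means_a)), key=means_a.__getitem__)
    return sum(samples_b[best]) / len(samples_b[best])


def average_bias(num_actions=10, samples=10, trials=2000, seed=0):
    """Average both estimators over many trials.

    Assumption for the demo: every action has true mean 0, so the true
    maximum expected value is 0 and any nonzero average is bias.
    """
    rng = random.Random(seed)

    def draw_set():
        # One independent set of unit-variance Gaussian samples per action.
        return [[rng.gauss(0.0, 1.0) for _ in range(samples)]
                for _ in range(num_actions)]

    single_total = 0.0
    double_total = 0.0
    for _ in range(trials):
        set_a, set_b = draw_set(), draw_set()
        single_total += single_estimate(set_a)
        double_total += double_estimate(set_a, set_b)
    return single_total / trials, double_total / trials
```

Calling `average_bias()` returns the pair (single, double). Under these assumptions the single estimate is clearly positive although the true maximum is zero, while the double estimate stays close to zero. The sketch does not show how the dVIN builds this idea into its VI module.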

2. Preliminaries

2.1. Markov Decision Processes

2.2. Double Q-Learning

2.3. Value Iteration Network

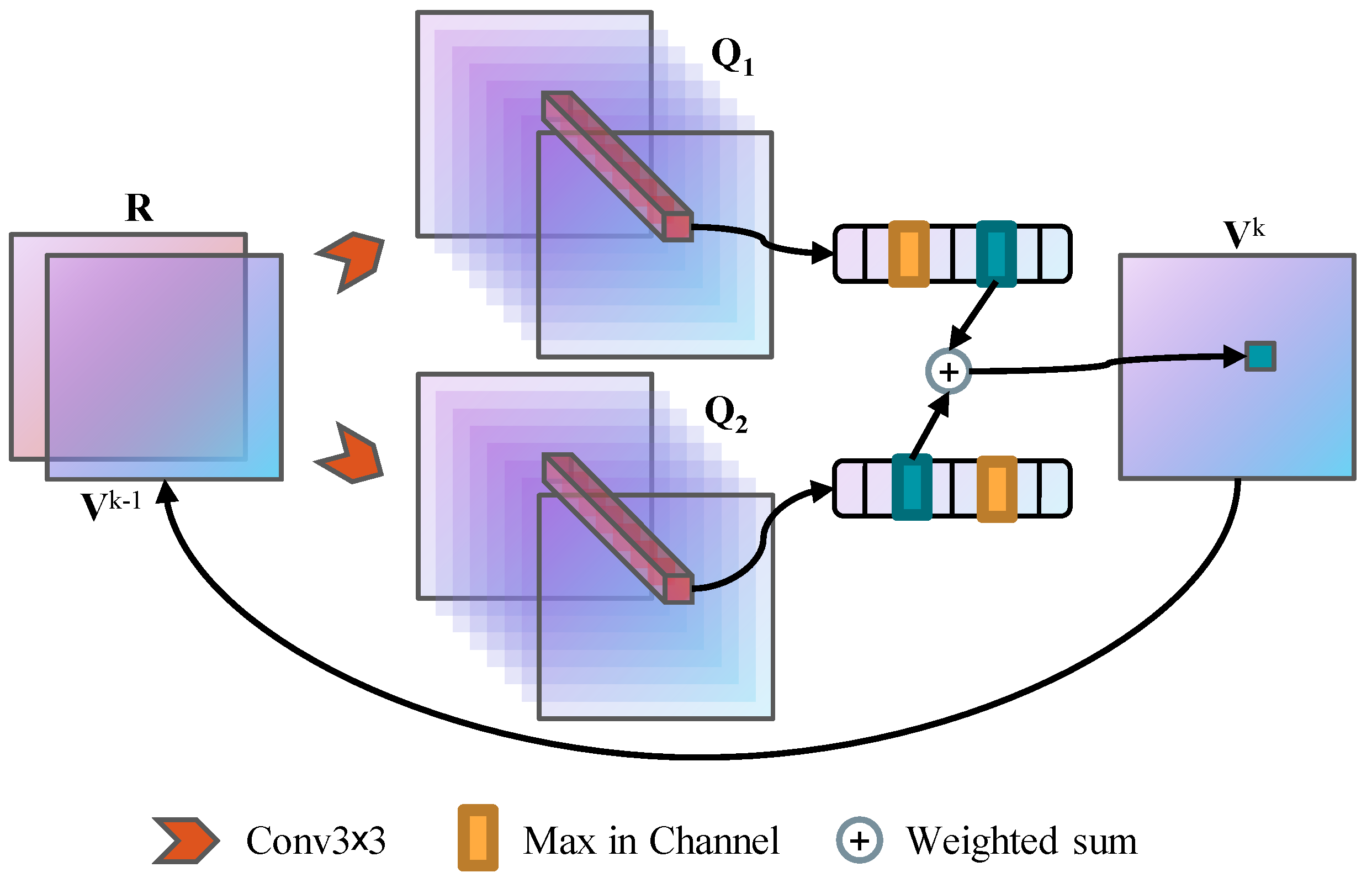

3. Methods

4. Experiments and Discussion

- VIN: The first NN architecture to embed explicit planning computation and be trained end to end. Our implementation follows most of the original settings in [30]: a convolutional layer implements the reward function, a second convolutional layer approximates the state-transition function, and a maximization over the action channel yields the state values.

- SVIN: Compared with the VIN, it introduces two optimizations to ease network training and improve performance. The first is a multi-layer convolutional network that produces a better reward map, as in [30]. The second replaces the max-pooling operation with a softmax of the Q-function over actions, under the assumption of a probabilistic action policy, with the aim of more efficient gradient propagation. Because this work focuses on the performance of the VI module, our implementation omits the multi-layer reward network.

- DB-CNN: Path planning resembles semantic segmentation more than image classification: it assigns a label to each pixel, which in our case is the optimal action. Ref. [22] designed a two-branch convolutional network. Branch 1 resembles conventional classification CNNs, extracting global features through a series of stacked convolutional layers and down-sampling operations, while branch 2 keeps the feature-map resolution equal to that of the input for better local feature representation.
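The difference between the VIN and SVIN value backups described above can be illustrated with a small tabular sketch. It is not the learned network: the convolutional reward and transition layers are replaced by hand-coded assumptions (a step reward of -1, deterministic four-neighbor moves, a character grid with `#` for obstacles and `G` for the goal), and all function names are hypothetical. The softmax backup shown here, a softmax-weighted average of the Q-values, is one common reading of "softmax of the Q-function over actions" and may differ in detail from the SVIN implementation.

```python
import math

MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def backup_max(q):
    """VIN-style backup: state value is the maximum over the action channel."""
    return max(q)


def backup_softmax(q):
    """SVIN-style backup (assumed form): softmax-weighted average of Q-values."""
    m = max(q)
    weights = [math.exp(x - m) for x in q]  # subtract max for numerical stability
    total = sum(weights)
    return sum(w / total * x for w, x in zip(weights, q))


def plan(grid, backup, iterations, gamma=1.0):
    """Run a fixed number of synchronous value-iteration sweeps on a grid.

    Assumptions: every move costs -1, a move into an obstacle or off the
    map leaves the agent in place, and the goal is absorbing with value 0.
    Returns the value map and the greedy action index for each free cell.
    """
    rows, cols = len(grid), len(grid[0])
    value = [[0.0] * cols for _ in range(rows)]
    policy = [[None] * cols for _ in range(rows)]
    for _ in range(iterations):
        new_value = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                if grid[r][c] in "G#":
                    continue  # goal and obstacle cells keep value 0
                q = []
                for dr, dc in MOVES:
                    nr, nc = r + dr, c + dc
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if not inside or grid[nr][nc] == "#":
                        nr, nc = r, c  # blocked move: stay in place
                    q.append(-1.0 + gamma * value[nr][nc])
                new_value[r][c] = backup(q)
                policy[r][c] = max(range(len(MOVES)), key=q.__getitem__)
        value = new_value
    return value, policy
```

For example, `plan(["...G", ".#..", "...."], backup_max, iterations=10)` runs the max backup on a 3×4 map. With the max backup and `gamma=1.0`, the value of a free cell settles at minus its shortest-path length to the goal once the number of iterations reaches that length. The softmax backup never exceeds the max backup in this setup, because a weighted average of Q-values cannot be larger than their maximum.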

4.1. Grid-World Domain

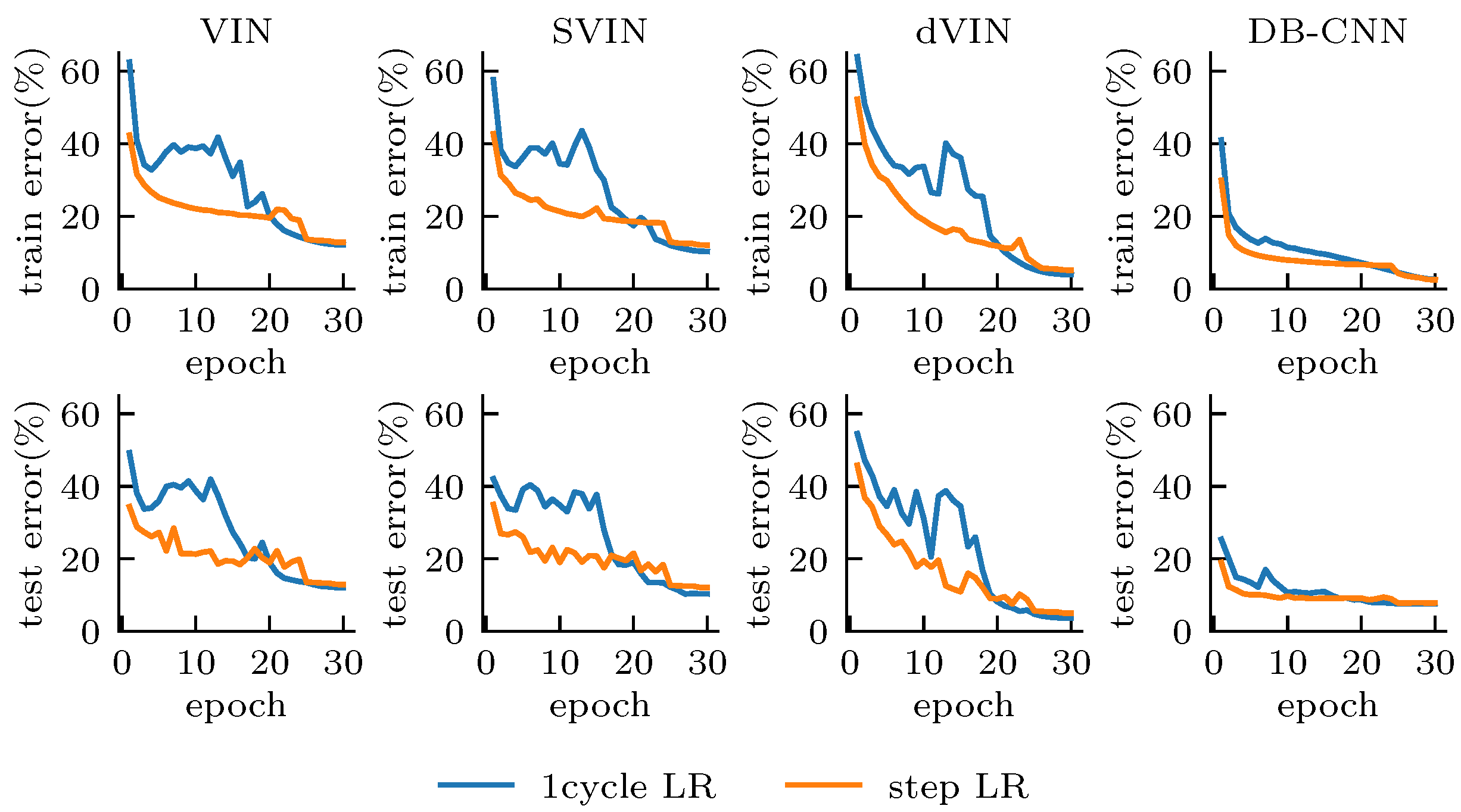

4.1.1. Performance in Small-Size Domains

4.1.2. Generalization in Large-Size Domains

4.1.3. Fine-Tuning in Large-Size Domains

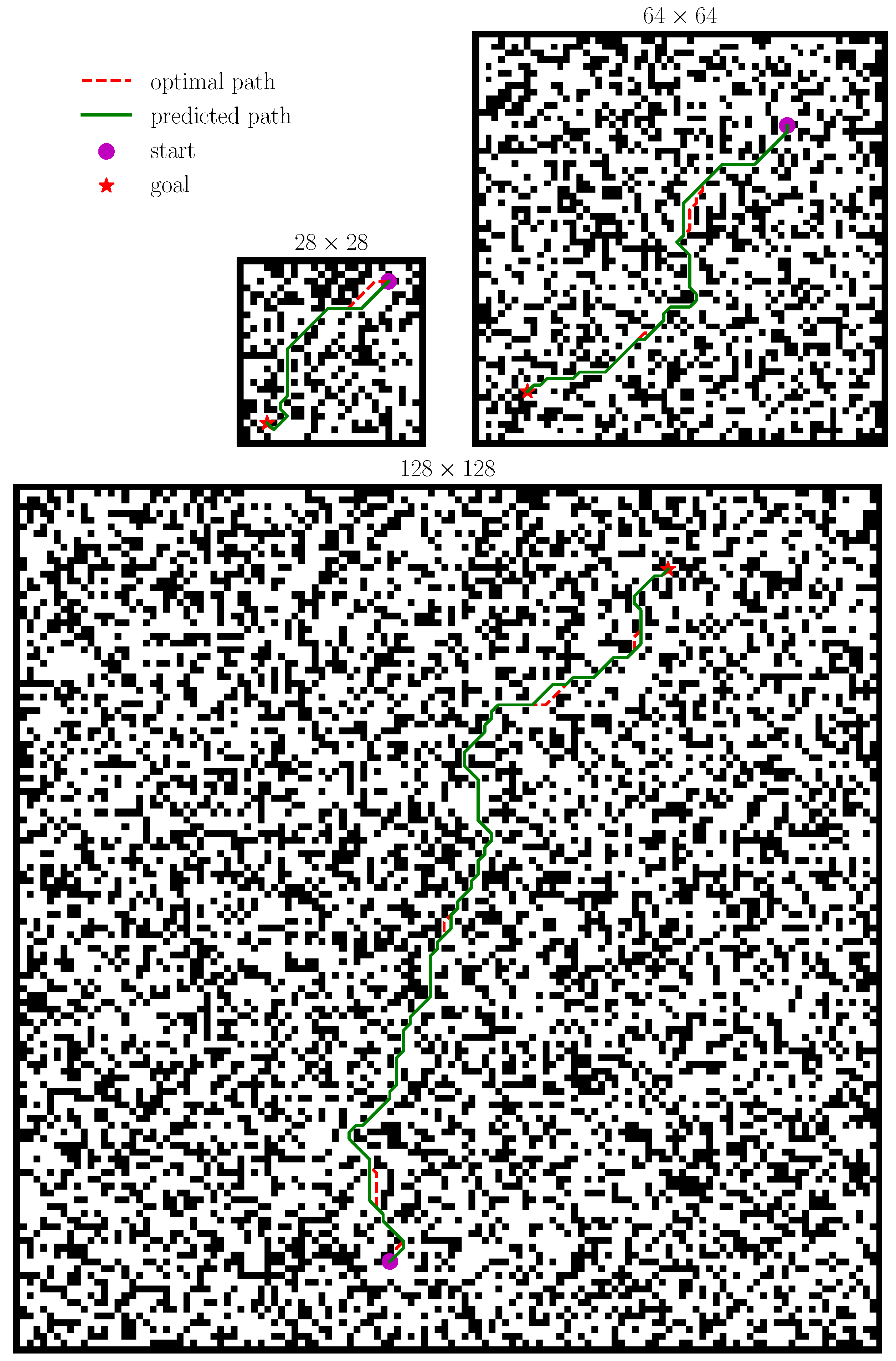

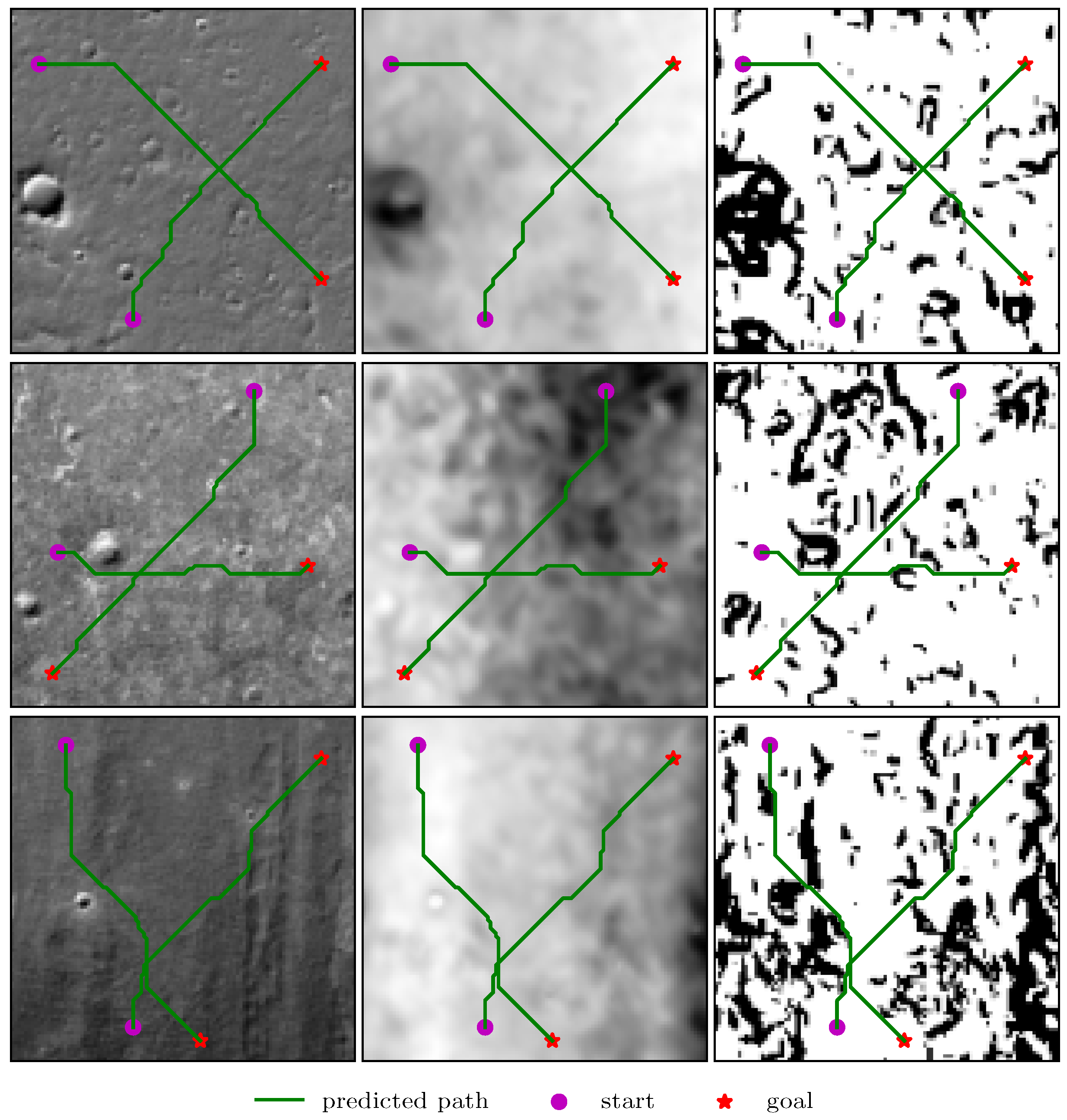

4.2. Rovers Navigation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sutoh, M.; Otsuki, M.; Wakabayashi, S.; Hoshino, T.; Hashimoto, T. The Right Path: Comprehensive path planning for lunar exploration rovers. IEEE Robot. Autom. Mag. 2015, 22, 22–33.

- Yonetani, R.; Taniai, T.; Barekatain, M.; Nishimura, M.; Kanezaki, A. Path Planning using Neural A* Search. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 12029–12039.

- Toma, A.I.; Hsueh, H.Y.; Jaafar, H.A.; Murai, R.; Kelly, P.H.; Saeedi, S. PathBench: A Benchmarking Platform for Classical and Learned Path Planning Algorithms. In Proceedings of the 18th Conference on Robots and Vision, Burnaby, BC, Canada, 26–28 May 2021; pp. 79–86.

- Wahab, M.N.A.; Nefti-Meziani, S.; Atyabi, A. A comparative review on mobile robot path planning: Classical or meta-heuristic methods? Annu. Rev. Control 2020, 50, 233–252.

- Raja, P.; Pugazhenthi, S. Optimal path planning of mobile robots: A review. Int. J. Phys. Sci. 2012, 7, 1314–1320.

- Levine, S.; Finn, C.; Darrell, T.; Abbeel, P. End-to-end training of deep visuomotor policies. J. Mach. Learn. Res. 2016, 17, 1334–1373.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Schrittwieser, J.; Antonoglou, I.; Hubert, T.; Simonyan, K.; Sifre, L.; Schmitt, S.; Guez, A.; Lockhart, E.; Hassabis, D.; Graepel, T.; et al. Mastering Atari, Go, chess and shogi by planning with a learned model. Nature 2020, 588, 604–609.

- Hafner, D.; Lillicrap, T.P.; Norouzi, M.; Ba, J. Mastering Atari with discrete world models. In Proceedings of the 9th International Conference on Learning Representations, Virtual, 3–7 May 2021; pp. 1–15.

- Tamar, A.; Wu, Y.; Thomas, G.; Levine, S.; Abbeel, P. Value iteration networks. In Advances in Neural Information Processing Systems; Curran Associates: New York, NY, USA, 2016; Volume 29, pp. 2154–2162.

- Niu, S.; Chen, S.; Guo, H.; Targonski, C.; Smith, M.C.; Kovačević, J. Generalized value iteration networks: Life beyond lattices. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 6246–6253.

- Deac, A.; Veličković, P.; Milinkovic, O.; Bacon, P.L.; Tang, J.; Nikolic, M. XLVIN: EXecuted Latent Value Iteration Nets. arXiv 2020, arXiv:2010.13146.

- Nardelli, N.; Kohli, P.; Synnaeve, G.; Torr, P.; Lin, Z.; Usunier, N. Value propagation networks. In Proceedings of the 7th International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019; pp. 1–13.

- Zhang, L.; Li, X.; Chen, S.; Zang, H.; Huang, J.; Wang, M. Universal value iteration networks: When spatially-invariant is not universal. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 6778–6785.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 29th IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Hasselt, H. Double Q-learning. In Advances in Neural Information Processing Systems; Curran Associates: New York, NY, USA, 2010; Volume 23, pp. 2613–2621.

- Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with Double Q-Learning. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2094–2100.

- Zhang, J.; Xia, Y.; Shen, G. A novel learning-based global path planning algorithm for planetary rovers. Neurocomputing 2019, 361, 69–76.

- Bachlechner, T.; Majumder, B.P.; Mao, H.H.H.; Cottrell, G.; McAuley, J. ReZero is all you need: Fast convergence at large depth. In Proceedings of the 37th Conference on Uncertainty in Artificial Intelligence, Online, 27–30 July 2021; pp. 1–10.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450.

- Pflueger, M.; Agha, A.; Sukhatme, G. Rover-IRL: Inverse reinforcement learning with soft value iteration networks for planetary rover path planning. IEEE Robot. Autom. Lett. 2019, 4, 1387–1394.

- Lee, L.; Parisotto, E.; Chaplot, D.; Xing, E.; Salakhutdinov, R. Gated path planning networks. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4597–4608.

- Agarwal, R.; Schuurmans, D.; Norouzi, M. An optimistic perspective on offline deep reinforcement learning. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Volume 1, pp. 104–114.

- Peer, O.; Tessler, C.; Merlis, N.; Meir, R. Ensemble bootstrapping for Q-Learning. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8454–8463.

- Allen-Zhu, Z.; Li, Y. Towards understanding ensemble, knowledge distillation and self-distillation in deep learning. arXiv 2020, arXiv:2012.09816.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems; Curran Associates: New York, NY, USA, 2019; Volume 32, pp. 8026–8037.

- He, K.; Girshick, R.; Dollar, P. Rethinking ImageNet pre-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 4918–4927.

- Smith, L.N. A disciplined approach to neural network hyper-parameters: Part 1—Learning rate, batch size, momentum, and weight decay. arXiv 2018, arXiv:1803.09820.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

| Training Strategies | Methods | Pred. Loss | Succ. Rate | Traj. Diff. |
|---|---|---|---|---|
| step LR | VIN | 0.129 | 89.31% | 0.451 |
| step LR | SVIN | 0.121 | 89.72% | 0.405 |
| step LR | DB-CNN | 0.079 | 97.78% | 0.402 |
| step LR | dVIN (ours) | 0.050 | 99.45% | 0.120 |
| 1cycle LR | VIN | 0.121 | 92.01% | 0.454 |
| 1cycle LR | SVIN | 0.103 | 94.50% | 0.330 |
| 1cycle LR | DB-CNN | 0.076 | 98.57% | 0.402 |
| 1cycle LR | dVIN (ours) | 0.033 | 99.93% | 0.032 |

| Methods | Succ. Rate | Traj. Diff. | Succ. Rate | Traj. Diff. |
|---|---|---|---|---|
| VIN | 69.96% | 1.623 | 35.10% | 2.502 |
| SVIN | 74.53% | 1.289 | 37.04% | 2.025 |
| DB-CNN* | 37.02% | 2.654 | 6.68% | 1.361 |
| dVIN (ours) | 87.65% | 0.169 | 60.90% | 0.183 |

| Methods | Pred. Loss | Succ. Rate | Traj. Diff. | Pred. Loss | Succ. Rate | Traj. Diff. |
|---|---|---|---|---|---|---|
| VIN | 0.178 | 70.56% | 1.450 | 0.203 | 49.01% | 2.996 |
| SVIN | 0.170 | 76.75% | 1.336 | 0.184 | 53.56% | 2.207 |
| DB-CNN* | 0.178 | 55.70% | 3.374 | 0.348 | 16.74% | 6.203 |
| dVIN (ours) | 0.037 | 98.85% | 0.103 | 0.062 | 88.66% | 0.822 |

| Methods | Succ. Rate | Traj. Diff. | Succ. Rate | Traj. Diff. | Succ. Rate | Traj. Diff. |
|---|---|---|---|---|---|---|
| VIN | 95.17% | 0.236 | 78.57% | 1.255 | 59.79% | 2.234 |
| SVIN | 96.43% | 0.193 | 80.65% | 1.131 | 63.23% | 2.173 |
| dVIN (ours) | 99.85% | 0.076 | 98.19% | 0.138 | 89.82% | 0.478 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, X.; Lan, W.; Wang, T.; Yu, P. Value Iteration Networks with Double Estimator for Planetary Rover Path Planning. Sensors 2021, 21, 8418. https://doi.org/10.3390/s21248418
