Digital Twin-Driven Human Robot Collaboration Using a Digital Human

Abstract

1. Introduction

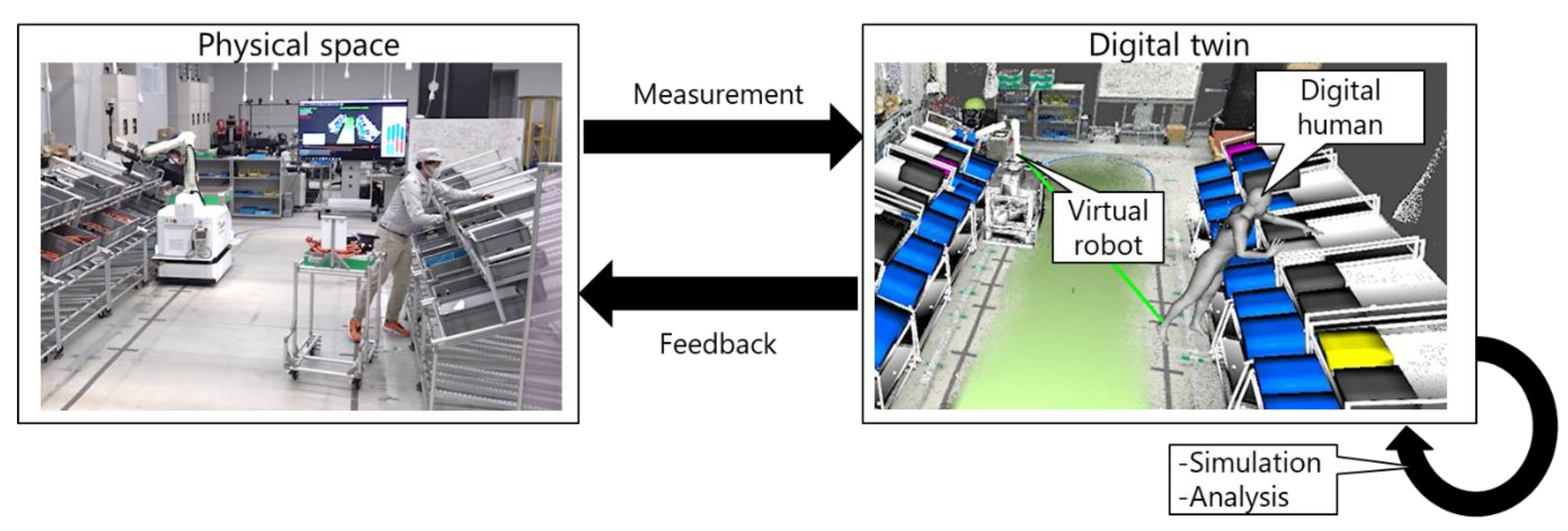

- The working capability and actual movements of the robot and the worker in the real space are captured by measuring their individual behaviors.

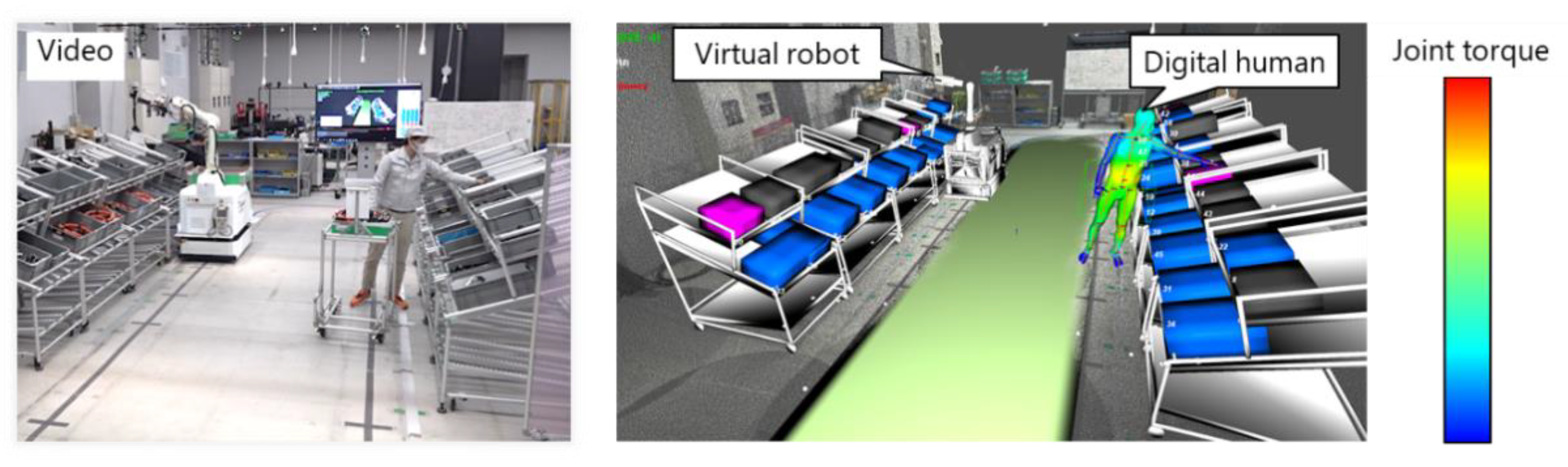

- The robot's behavior and the worker's full-body posture are reflected in the virtual space. The digital human (DH) module enables further analyses of the worker, such as picking detection and ergonomic evaluation.

- The virtual and real spaces are connected via real-time measurements, wireless communication, and feedback systems.

- Changes in the robot and the worker in the real space are immediately reflected in the virtual twin. The dynamic scheduling results are immediately presented to the robot and the worker to improve production efficiency and reduce the worker's physical load.

- DH integration: A DH is applied to digital twin (DT)-driven human–robot collaboration (HRC) to enable real-time monitoring, prediction, and ergonomic assessment based on the full-body dynamics of workers.

- Demonstration in a picking scenario: The proposed system was applied to a picking scenario to demonstrate the advantages of DT-driven HRC. The worker and the robot picked parts as instructed by the production management module while moving over a wide area.

2. Related Works

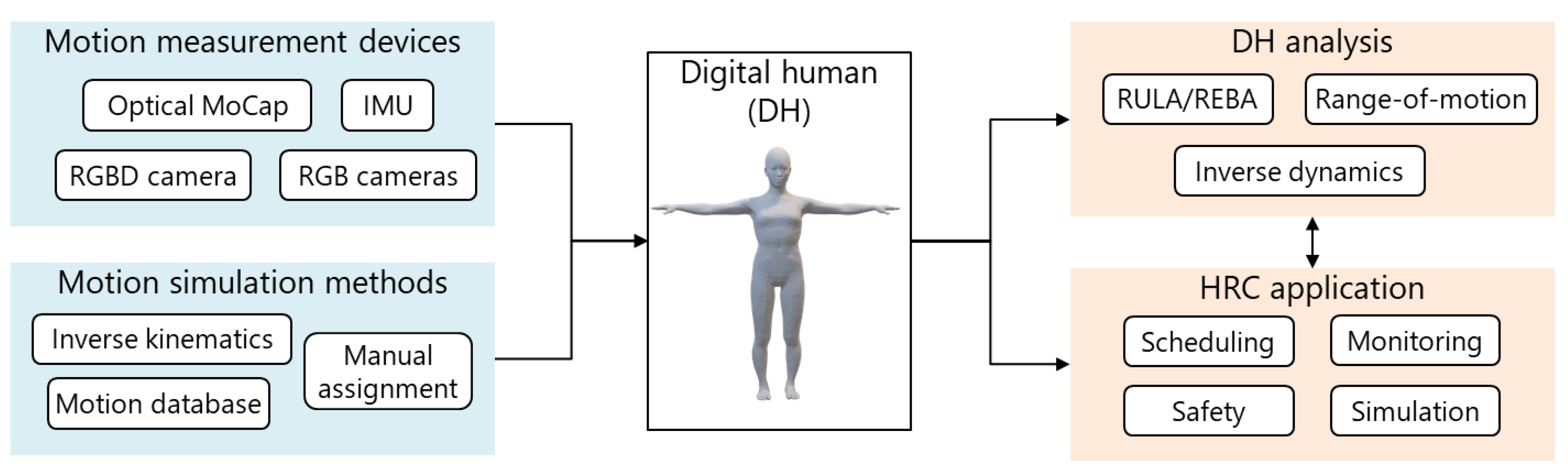

2.1. Human Representation in Research on Human–Robot Collaboration

2.2. Improvement in Production Efficiency by Human–Robot Collaboration

2.3. Ergonomic Assessment in Industry Fields

3. Method

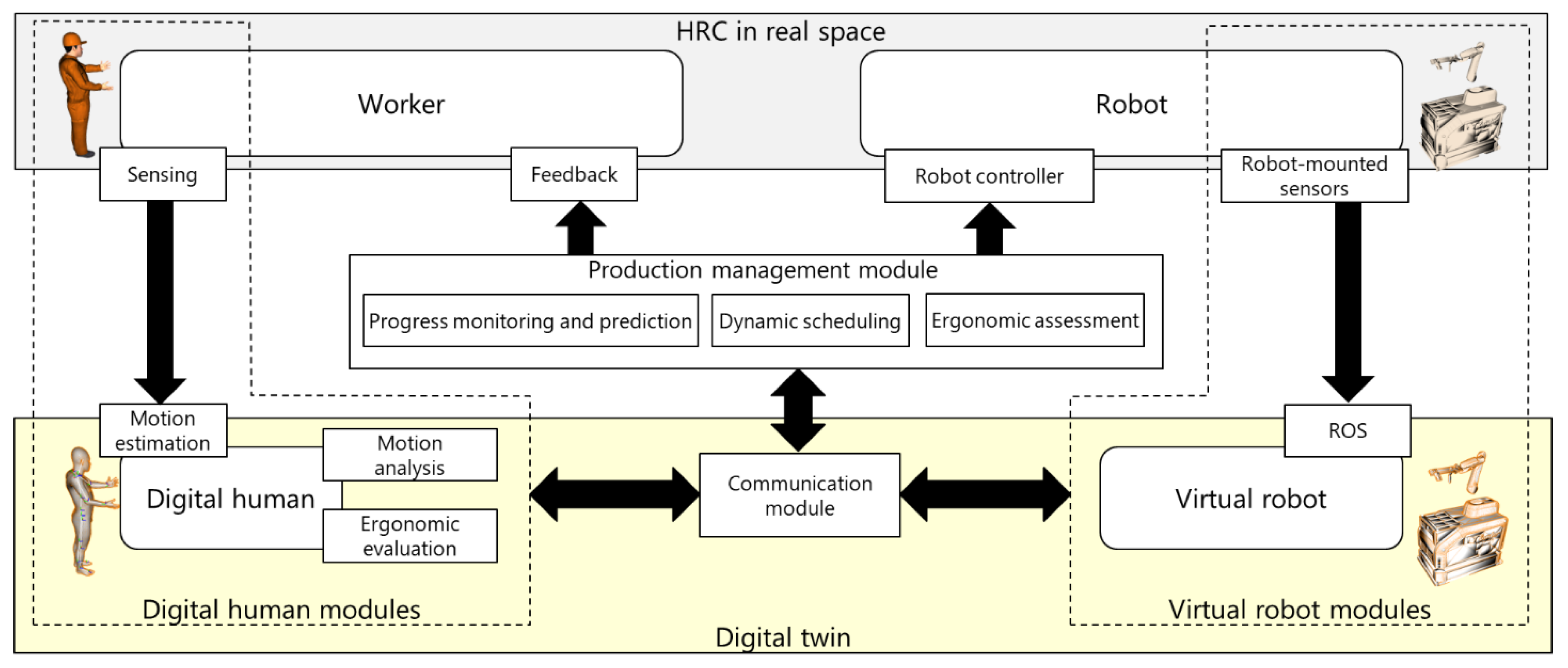

3.1. Overview

3.2. Digital Twin of the Worker

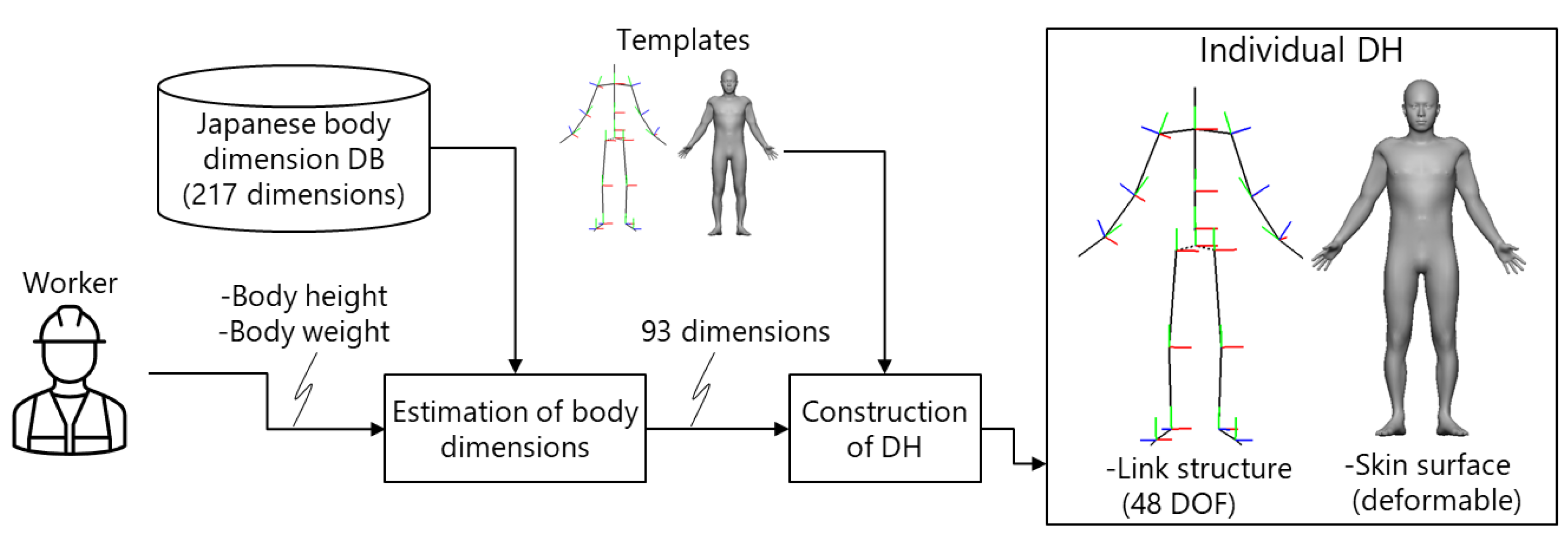

3.2.1. Digital Human Model

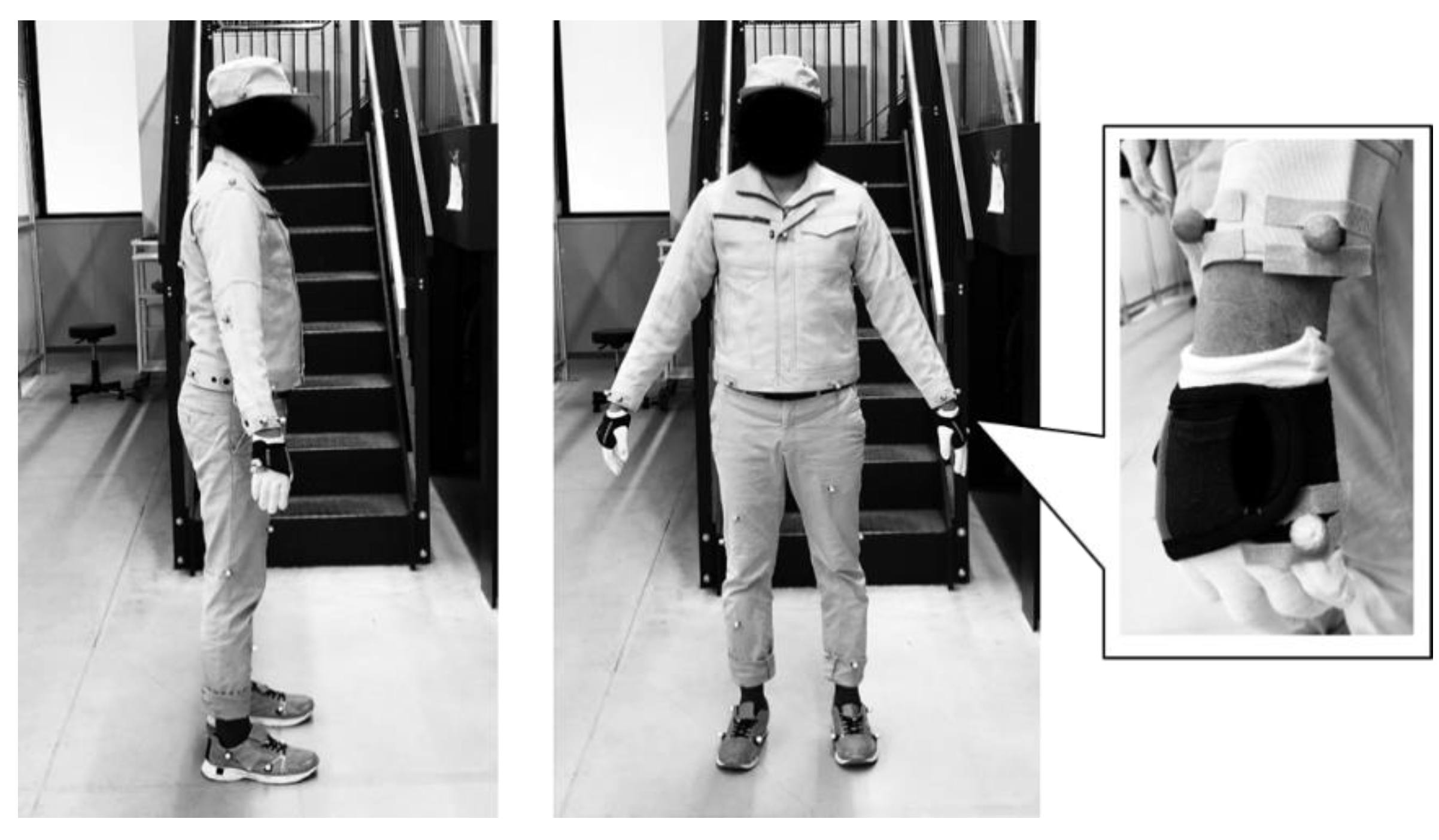

3.2.2. Sensing Submodule

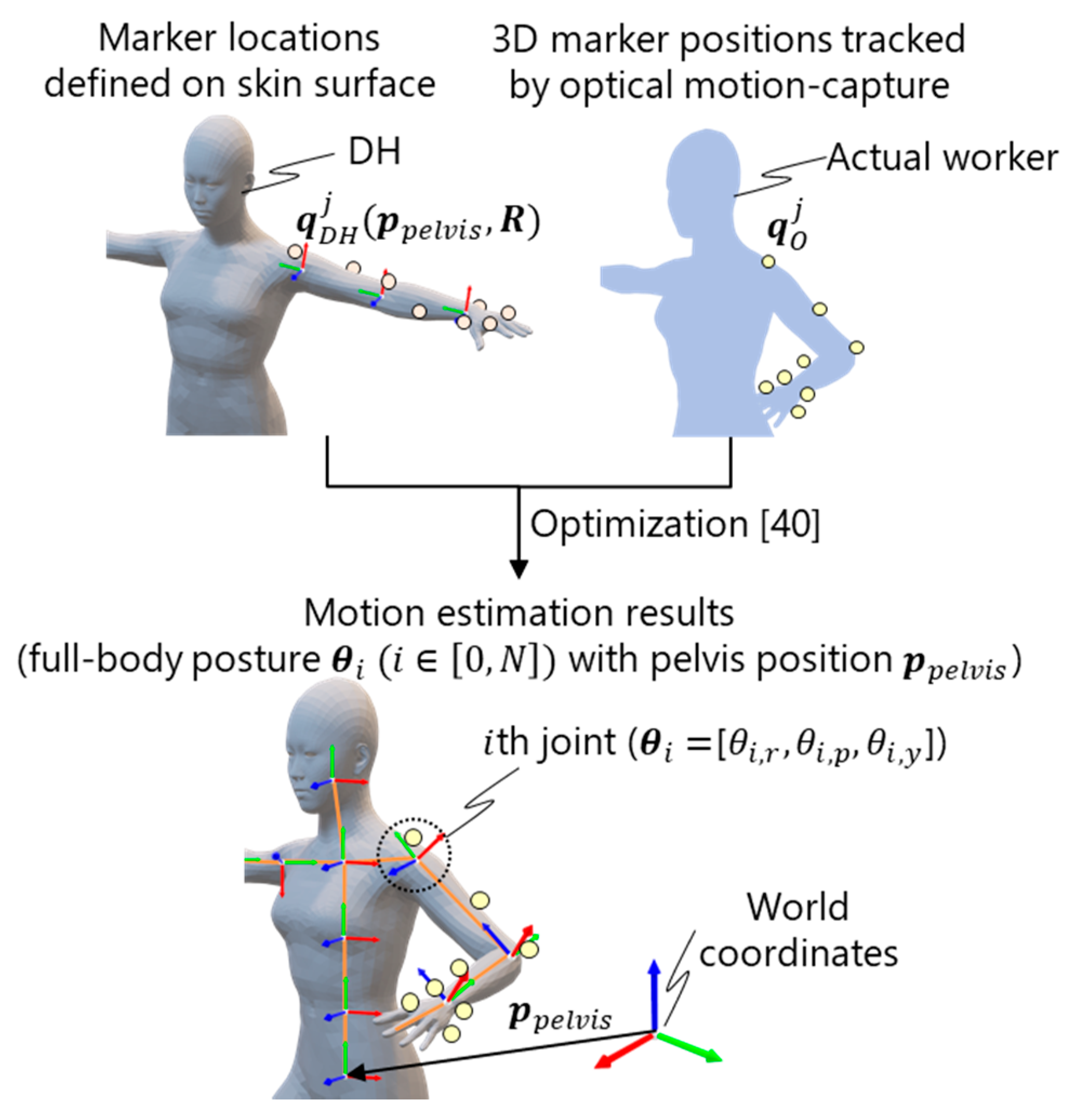

3.2.3. Motion Estimation

3.2.4. Motion Analysis
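The introduction lists picking detection among the analyses the DH module enables. As a hypothetical illustration, not the method described in the paper, a pick can be detected with a dwell test: report a pick when the tracked hand position stays within a fixed radius of a part bin for a minimum number of consecutive frames. The `Bin` type, the 0.10 m radius, and the three-frame dwell are assumptions of this sketch.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Bin:
    """Hypothetical part bin: a name and a position [m] in workspace coordinates."""
    name: str
    x: float
    y: float
    z: float


def detect_picks(hand_track, bins, radius=0.10, min_frames=3):
    """Return (frame index, bin name) for each detected pick.

    A pick is reported once, at the frame where the hand has stayed within
    `radius` of the same bin for `min_frames` consecutive frames.
    """
    events = []
    current, count = None, 0
    for frame, hand in enumerate(hand_track):
        # Nearest bin within the radius, or None if the hand is near no bin.
        near, best = None, radius
        for b in bins:
            d = math.dist(hand, (b.x, b.y, b.z))
            if d <= best:
                near, best = b.name, d
        if near is not None and near == current:
            count += 1
        else:
            current, count = near, (0 if near is None else 1)
        if current is not None and count == min_frames:
            events.append((frame, current))
    return events


bins = [Bin("A", 0.0, 0.0, 0.0), Bin("B", 1.0, 0.0, 0.0)]
track = ([(0.5, 0.0, 0.0)] * 2 + [(0.02, 0.0, 0.0)] * 4
         + [(0.5, 0.0, 0.0)] + [(1.0, 0.05, 0.0)] * 3)
print(detect_picks(track, bins))  # [(4, 'A'), (9, 'B')]
```

The dwell threshold trades latency for robustness: requiring more frames suppresses spurious detections when the hand merely passes a bin, at the cost of reporting each pick slightly later.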

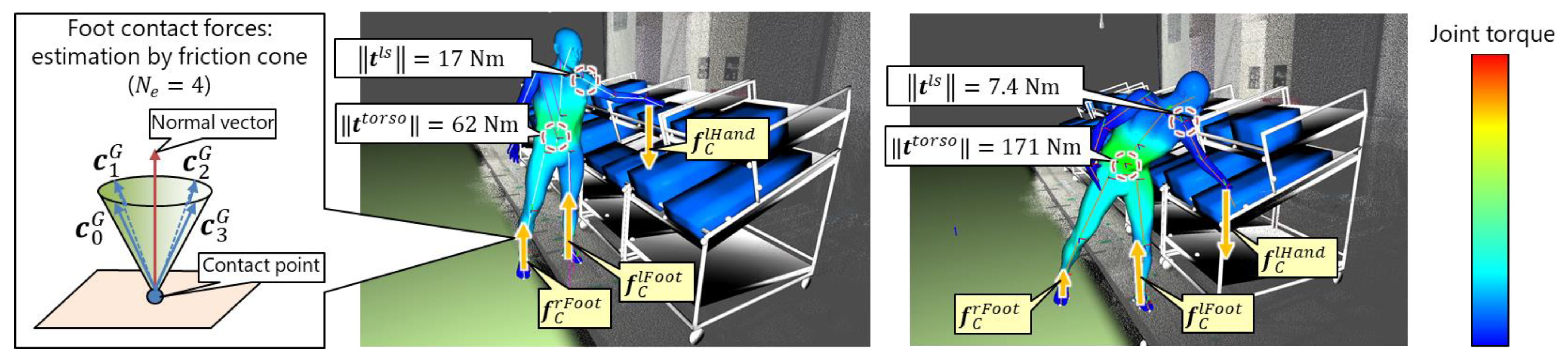

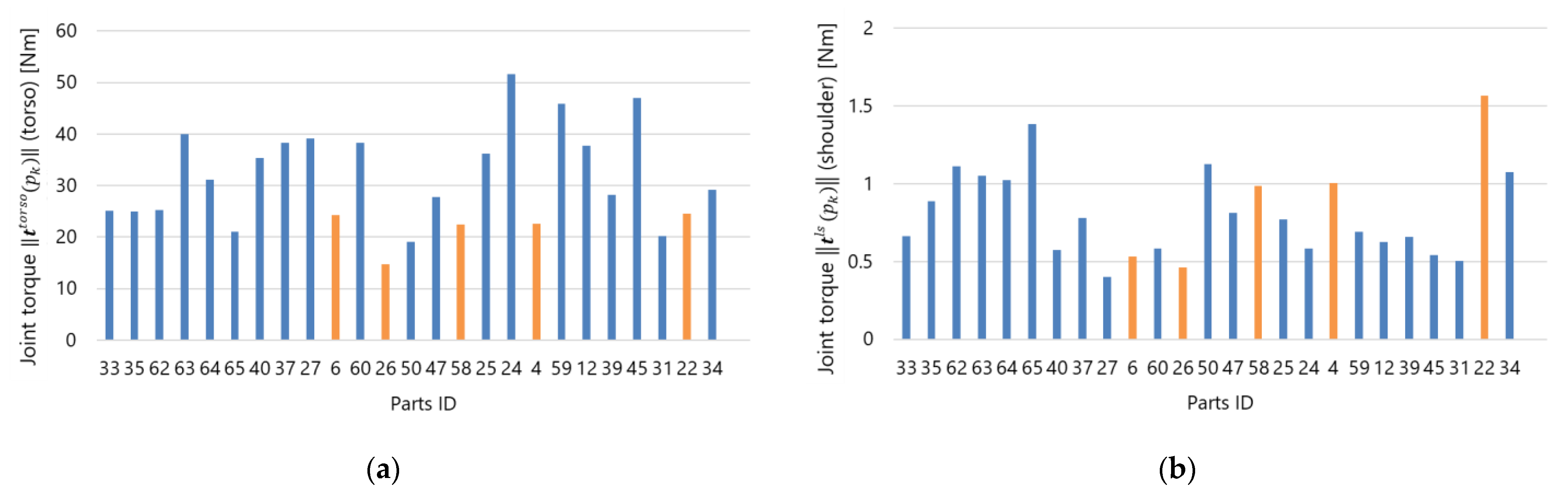

3.2.5. Ergonomic Assessment
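Posture-based ergonomic evaluation can be illustrated with an OWAS-style back classification (1 straight, 2 bent, 3 twisted, 4 bent and twisted) computed from trunk angles that a full-body DH could supply, summarized as the share of frames spent in each code. The choice of OWAS, the angle inputs, and the 20° limits are assumptions of this sketch, not details taken from the paper; lateral bending is ignored for brevity.

```python
from collections import Counter


def owas_back_code(flexion_deg, twist_deg, bend_limit=20.0, twist_limit=20.0):
    """OWAS-style back code: 1 straight, 2 bent, 3 twisted, 4 bent and twisted.

    flexion_deg: forward (+) / backward (-) trunk bending [deg].
    twist_deg: axial trunk rotation [deg].
    The 20-degree limits are assumptions; lateral bending is ignored.
    """
    bent = abs(flexion_deg) >= bend_limit
    twisted = abs(twist_deg) >= twist_limit
    if bent and twisted:
        return 4
    if twisted:
        return 3
    if bent:
        return 2
    return 1


def back_code_shares(samples):
    """Fraction of frames in each back code, from (flexion, twist) pairs."""
    if not samples:
        return {code: 0.0 for code in (1, 2, 3, 4)}
    counts = Counter(owas_back_code(f, t) for f, t in samples)
    return {code: counts[code] / len(samples) for code in (1, 2, 3, 4)}


# Four frames: upright, bent twice, twisted once.
print(back_code_shares([(5, 5), (30, 5), (30, 5), (5, 30)]))
# {1: 0.25, 2: 0.5, 3: 0.25, 4: 0.0}
```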

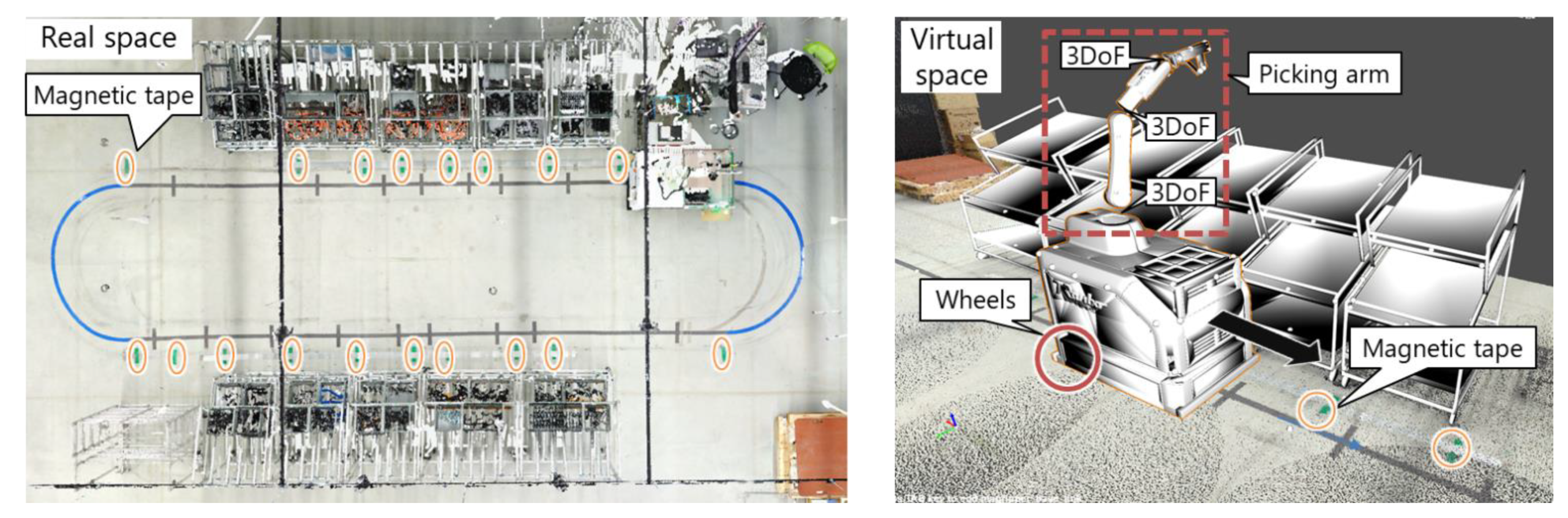

3.3. Digital Twin of the Robot

3.4. Communication Module
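The introduction describes the virtual and real spaces as connected through real-time measurements, wireless communication, and feedback. One common way to decouple such modules is topic-based publish-subscribe; the in-process sketch below, assumed here purely for illustration, lets a module publish timestamped samples on named topics that subscribers receive through callbacks. `Bus`, `Sample`, and the topic names are hypothetical, and the sketch omits everything a real network middleware handles (transport, discovery, quality of service).

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Sample:
    """One published message; `stamp` is the publish time (monotonic clock, s)."""
    topic: str
    data: Any
    stamp: float = field(default_factory=time.monotonic)


class Bus:
    """Minimal synchronous, in-process, topic-based publish-subscribe bus."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic: str, callback: Callable[[Sample], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, data: Any) -> int:
        """Deliver a new sample to every subscriber of `topic`; return how many."""
        sample = Sample(topic, data)
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(sample)
        return len(callbacks)


bus = Bus()
received = []
bus.subscribe("worker/pose", received.append)
bus.publish("worker/pose", {"frame": 1})   # delivered to one subscriber
bus.publish("robot/state", {"frame": 1})   # no subscriber: delivered to none
print(len(received), received[0].data)     # 1 {'frame': 1}
```

Publishers never reference their consumers directly, so a module such as ergonomic evaluation could be added or removed by subscribing or unsubscribing without touching the sensing side.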

3.5. Production Management Module

3.5.1. Cycle and Parts Definition

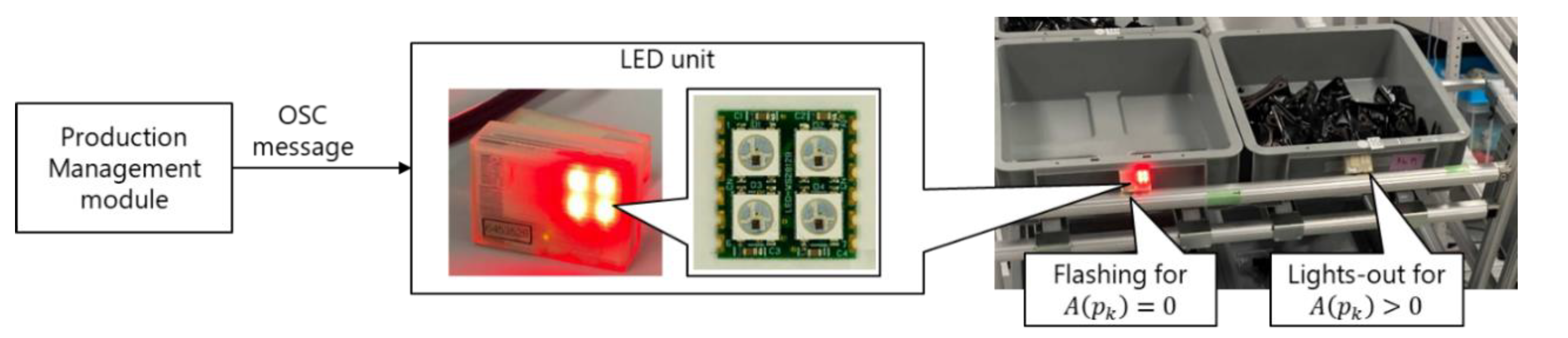

3.5.2. Progress Monitoring and Prediction
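In the picking scenario, the worker and the robot pick parts as instructed by the production management module. A minimal sketch of how observed progress could be turned into a cycle-time prediction follows, assuming the cycle time is extrapolated linearly from the mean picking time observed so far and falls back to the initial plan's 4.4 s per part (from the experimental-conditions table) before the first pick. The function name and its inputs are hypothetical.

```python
def predict_cycle_time(pick_times, total_parts, planned_s_per_part=4.4):
    """Predict the total cycle time [s] from the picks observed so far.

    pick_times: seconds since cycle start at which each part was picked,
        in ascending order (hypothetical input format).
    total_parts: number of parts to pick in the cycle.
    planned_s_per_part: assumed planned picking time, used before any pick.
    """
    picked = len(pick_times)
    if picked == 0:
        # No observations yet: fall back to the plan.
        return total_parts * planned_s_per_part
    elapsed = pick_times[-1]
    observed_s_per_part = elapsed / picked
    remaining = total_parts - picked
    # Extrapolate the observed mean picking time to the remaining parts.
    return elapsed + remaining * observed_s_per_part


# Two of ten parts picked after 5 s and 10 s: 5 s/part observed.
print(predict_cycle_time([5.0, 10.0], total_parts=10))  # 50.0
```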

3.5.3. Dynamic Scheduling
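The introduction states that dynamic scheduling results are presented to the robot and the worker immediately. For illustration, the sketch below uses a swapped-in technique, greedy list scheduling: each remaining part goes to whichever agent would finish it earliest, given that agent's estimated time per part and the time at which it becomes free. The function name, agent labels, and timings are hypothetical.

```python
def schedule(parts, s_per_part, ready_at):
    """Greedy list scheduling of remaining parts over agents.

    parts: IDs of parts still to be picked, in pick order.
    s_per_part: agent -> estimated seconds per part.
    ready_at: agent -> time [s] at which the agent becomes free
        (must have the same keys as s_per_part).
    Returns (agent -> assigned parts, predicted finish time of the last agent).
    """
    finish = dict(ready_at)
    plan = {agent: [] for agent in s_per_part}
    for part in parts:
        # Give the part to the agent that would complete it earliest;
        # ties go to the agent listed first in ready_at.
        agent = min(finish, key=lambda a: finish[a] + s_per_part[a])
        finish[agent] += s_per_part[agent]
        plan[agent].append(part)
    return plan, max(finish.values())


plan, makespan = schedule(list("ABCDEF"),
                          s_per_part={"worker": 4.0, "robot": 8.0},
                          ready_at={"worker": 0.0, "robot": 0.0})
print(plan, makespan)
# {'worker': ['A', 'B', 'D', 'E'], 'robot': ['C', 'F']} 16.0
```

If the worker's estimated time per part rises, for example to the slower picking speed of Condition 1 (4.4 + 1.0 s/part), rerunning the function tends to shift parts toward the robot; this is a property of the greedy rule, not necessarily of the paper's scheduler.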

3.5.4. Feedback Submodule

4. Results

4.1. Experimental Settings

4.2. Construction of the Digital Twin

4.3. Online Demonstration with an Operator

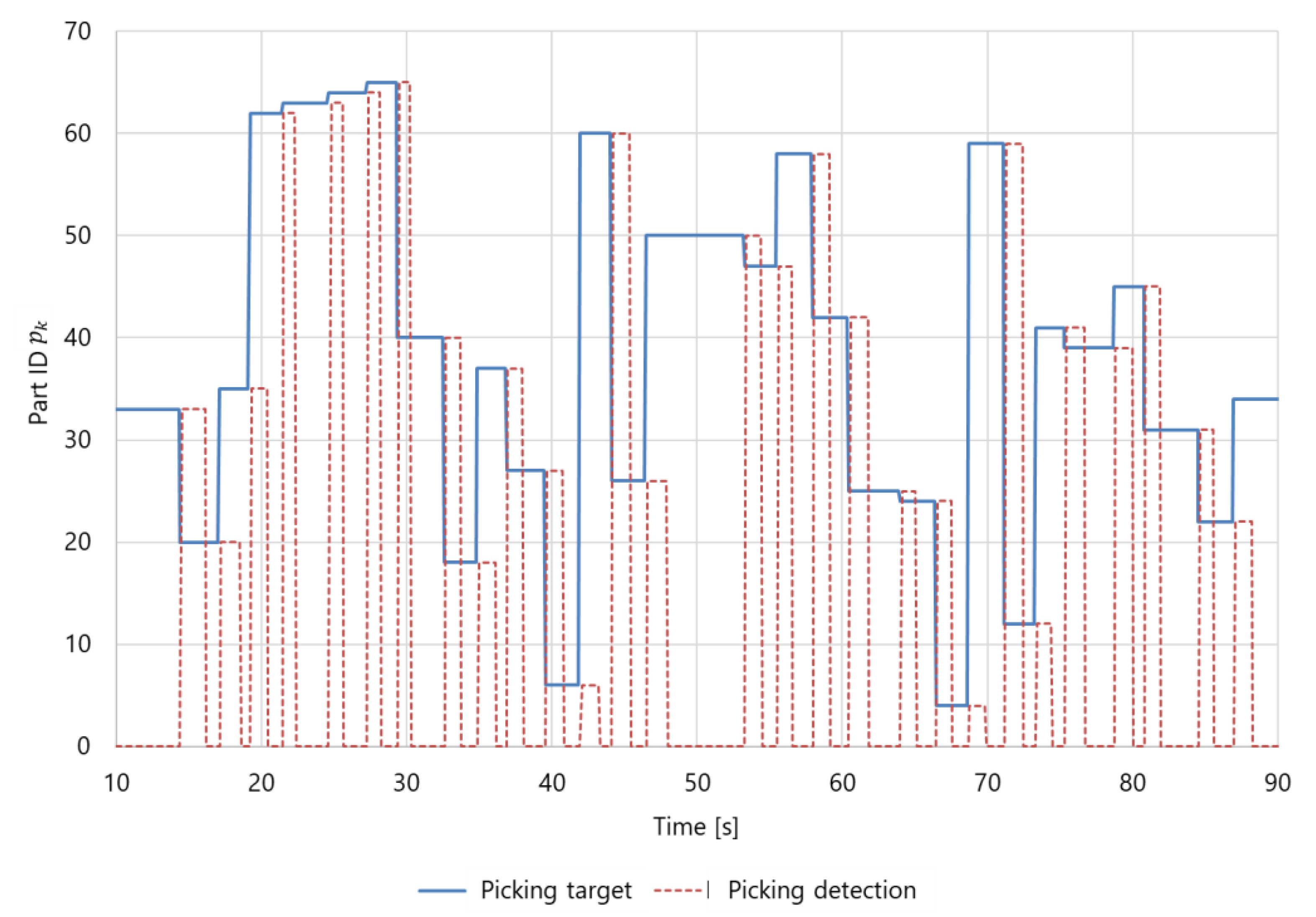

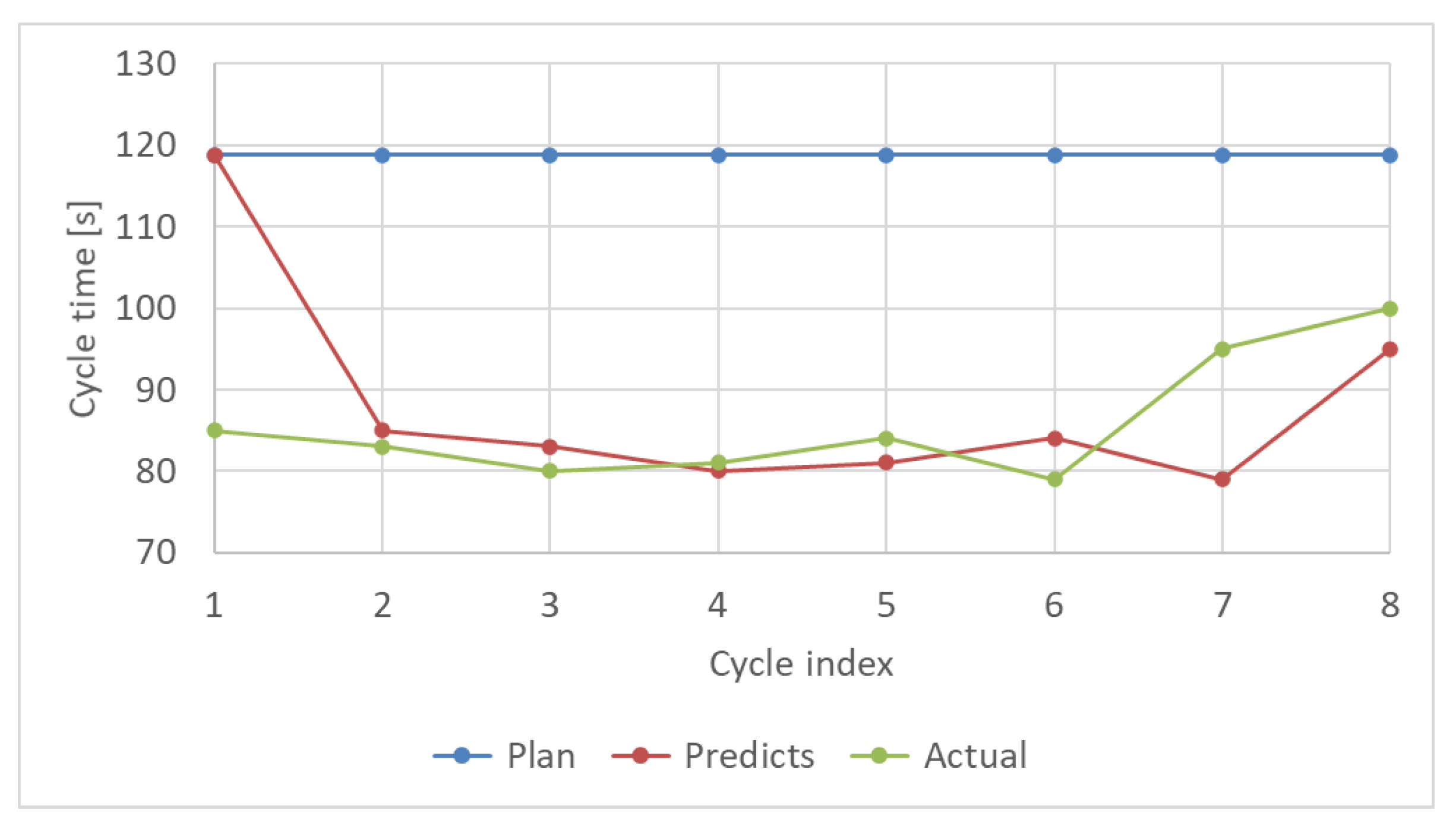

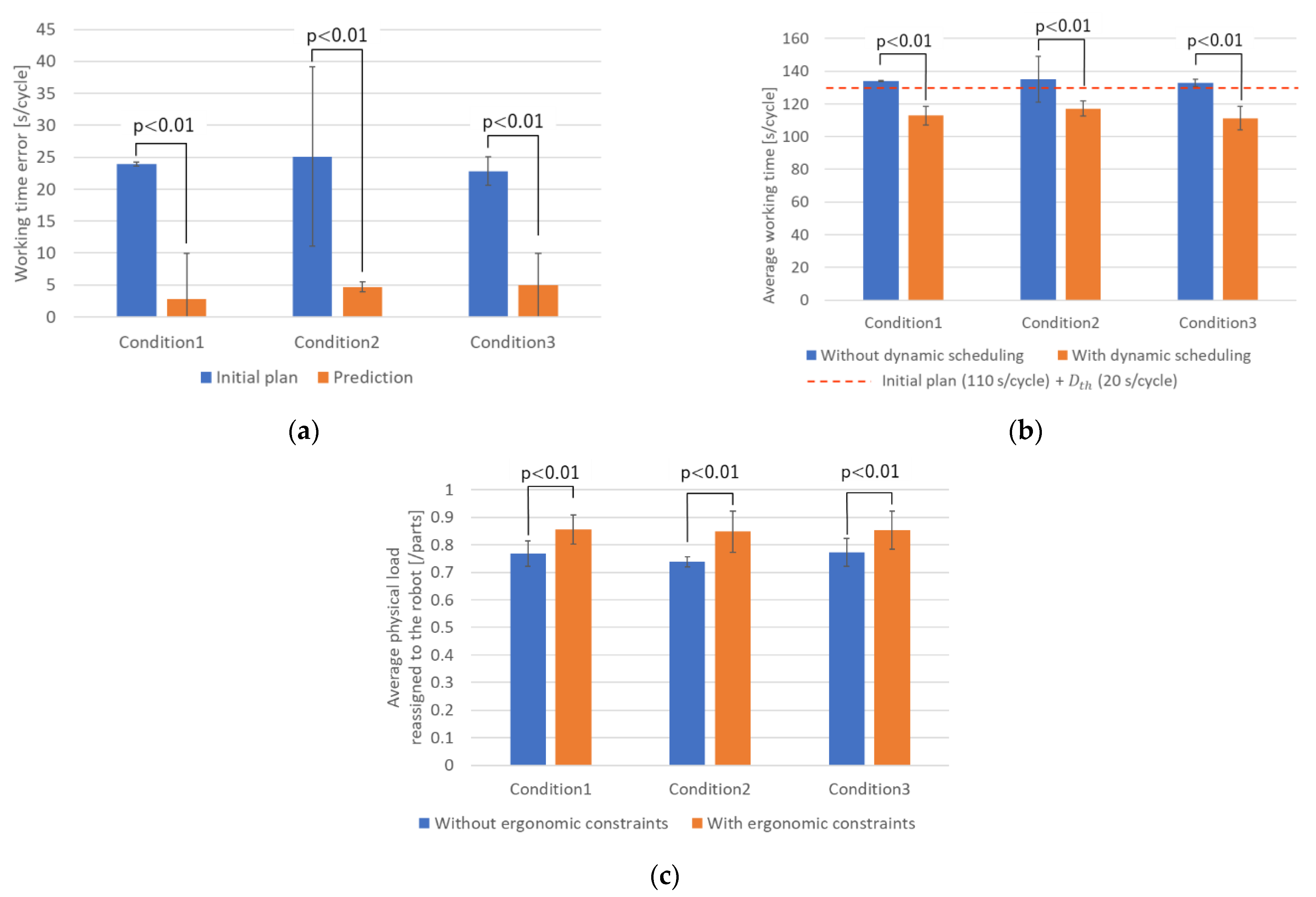

4.3.1. Results of Progress Prediction

4.3.2. Results for the Dynamic Scheduling

4.4. Offline Validation with Test Data

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Condition | Picking Speed [s/part] | Cycles |
|---|---|---|
| Initial plan ¹ | 4.4 | 10 |
| Condition 1 | 4.4 + 1.0 | 10 |
| Condition 2 | 4.4 + 0.2 | 10 |
| Condition 3 | 4.4 + | 10 |

| Process | Elapsed Time [ms] ¹ |
|---|---|
| Motion estimation | 15 |
| Visualizing virtual robot | 1 |
| Motion analysis | 3 |
| Ergonomic evaluation ² | 138 |
| Progress monitoring and prediction | 1 |
| Dynamic scheduling ² | 1 |
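The processing-time table can be read as a per-update budget. If all six processes ran one after another in a single update (an assumption that the footnotes on the ergonomic evaluation and dynamic scheduling rows may qualify), the cost per update would be their sum; the short sketch below computes it along with the slowest step.

```python
# Per-process elapsed times [ms], copied from the processing-time table.
ELAPSED_MS = {
    "Motion estimation": 15,
    "Visualizing virtual robot": 1,
    "Motion analysis": 3,
    "Ergonomic evaluation": 138,
    "Progress monitoring and prediction": 1,
    "Dynamic scheduling": 1,
}


def update_budget(elapsed_ms):
    """Return (total ms, slowest process, its share of the total).

    Assumes every process runs sequentially once per update.
    """
    total = sum(elapsed_ms.values())
    slowest = max(elapsed_ms, key=elapsed_ms.get)
    return total, slowest, elapsed_ms[slowest] / total


total, slowest, share = update_budget(ELAPSED_MS)
print(f"total {total} ms, slowest: {slowest} ({share:.0%})")
# total 159 ms, slowest: Ergonomic evaluation (87%)
```

Under that assumption the total is 159 ms, of which ergonomic evaluation accounts for 138 ms (about 87%); the other five processes together take 21 ms.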

| Cycle | Actual Cycle Time [s] | Difference between Actual and Initial Plan [s] | Difference between Actual and Prediction [s] |
|---|---|---|---|
| Cycle 1 | 85 | 34 | 34 |
| Cycle 2 | 83 | 36 | 2 |
| Cycle 3 | 80 | 39 | 3 |
| Cycle 4 | 81 | 38 | 1 |
| Cycle 5 | 84 | 35 | 3 |
| Cycle 6 | 79 | 40 | 5 |
| Cycle 7 | 95 | 24 | 16 |
| Cycle 8 | 100 | 19 | 5 |
| Mean ± SD | | 33 ± 7 | 9 ± 11 |
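The summary row of the cycle-time table (33 ± 7 s and 9 ± 11 s) can be checked against the eight per-cycle differences. The sketch below assumes the row reports mean ± standard deviation; the population standard deviation reproduces both figures, whereas the sample standard deviation would give about 7.6 s for the first column.

```python
from statistics import mean, pstdev, stdev

# Per-cycle differences [s], copied from the cycle-time table (cycles 1-8).
diff_vs_plan = [34, 36, 39, 38, 35, 40, 24, 19]
diff_vs_prediction = [34, 2, 3, 1, 3, 5, 16, 5]

for name, values in (("initial plan", diff_vs_plan),
                     ("prediction", diff_vs_prediction)):
    print(f"vs {name}: {mean(values):.0f} ± {pstdev(values):.0f} s "
          f"(sample SD {stdev(values):.1f} s)")
# vs initial plan: 33 ± 7 s (sample SD 7.6 s)
# vs prediction: 9 ± 11 s (sample SD 11.3 s)
```

The per-cycle values also show that, from cycle 2 onward, the prediction was within 5 s of the actual cycle time except in cycle 7 (16 s).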

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maruyama, T.; Ueshiba, T.; Tada, M.; Toda, H.; Endo, Y.; Domae, Y.; Nakabo, Y.; Mori, T.; Suita, K. Digital Twin-Driven Human Robot Collaboration Using a Digital Human. Sensors 2021, 21, 8266. https://doi.org/10.3390/s21248266