DB-YOLO: A Duplicate Bilateral YOLO Network for Multi-Scale Ship Detection in SAR Images

Abstract

1. Introduction

- (1) A CNN-based single-stage ship detector is realized that reaches a high standard in both accuracy and speed.

- (2) The feature extraction network (FEN) is designed with a residual structure to reduce network complexity. Because ships in SAR images are mainly small and medium-sized, the distribution of parameters and computation across the C3 to C5 layers is also optimized.

- (3) The duplicate bilateral feature pyramid network (DB-FPN) is designed to improve multi-scale ship detection. It strengthens the fusion of spatial and semantic information and, through feature multiplexing, makes full use of feature maps at different locations.

- (4)
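
The fusion of spatial and semantic information named in contribution (3) can be illustrated with a toy sketch. The code below is not the authors' DB-FPN. It is a generic top-down plus bottom-up pyramid pass, with 1-D lists standing in for the C3 to C5 feature maps. The function names (`upsample2x`, `downsample2x`, `fuse_bidirectional`) are hypothetical, and element-wise addition is an assumed fusion operator.

```python
from typing import List, Tuple

Feature = List[float]  # 1-D stand-in for a 2-D feature map


def upsample2x(x: Feature) -> Feature:
    """Nearest-neighbour upsampling: repeat every element twice."""
    return [v for v in x for _ in range(2)]


def downsample2x(x: Feature) -> Feature:
    """Stride-2 average pooling over non-overlapping pairs."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def add(a: Feature, b: Feature) -> Feature:
    """Element-wise sum of two equally sized features."""
    assert len(a) == len(b), "features must have the same resolution"
    return [p + q for p, q in zip(a, b)]


def fuse_bidirectional(c3: Feature, c4: Feature, c5: Feature) -> Tuple[Feature, Feature, Feature]:
    # Top-down pass: coarse (semantic) features flow into finer levels.
    p5 = c5
    p4 = add(c4, upsample2x(p5))
    p3 = add(c3, upsample2x(p4))
    # Bottom-up pass: fine (spatial) features flow back into coarser levels.
    n3 = p3
    n4 = add(p4, downsample2x(n3))
    n5 = add(p5, downsample2x(n4))
    return n3, n4, n5


# Constant toy inputs; each level halves the resolution of the previous one.
c3, c4, c5 = [1.0] * 8, [2.0] * 4, [4.0] * 2
n3, n4, n5 = fuse_bidirectional(c3, c4, c5)
print(n3[0], n4[0], n5[0])
```

With these inputs every output level contains contributions from all three input levels, which is the property a bidirectional pyramid is meant to provide.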

2. Proposed Method

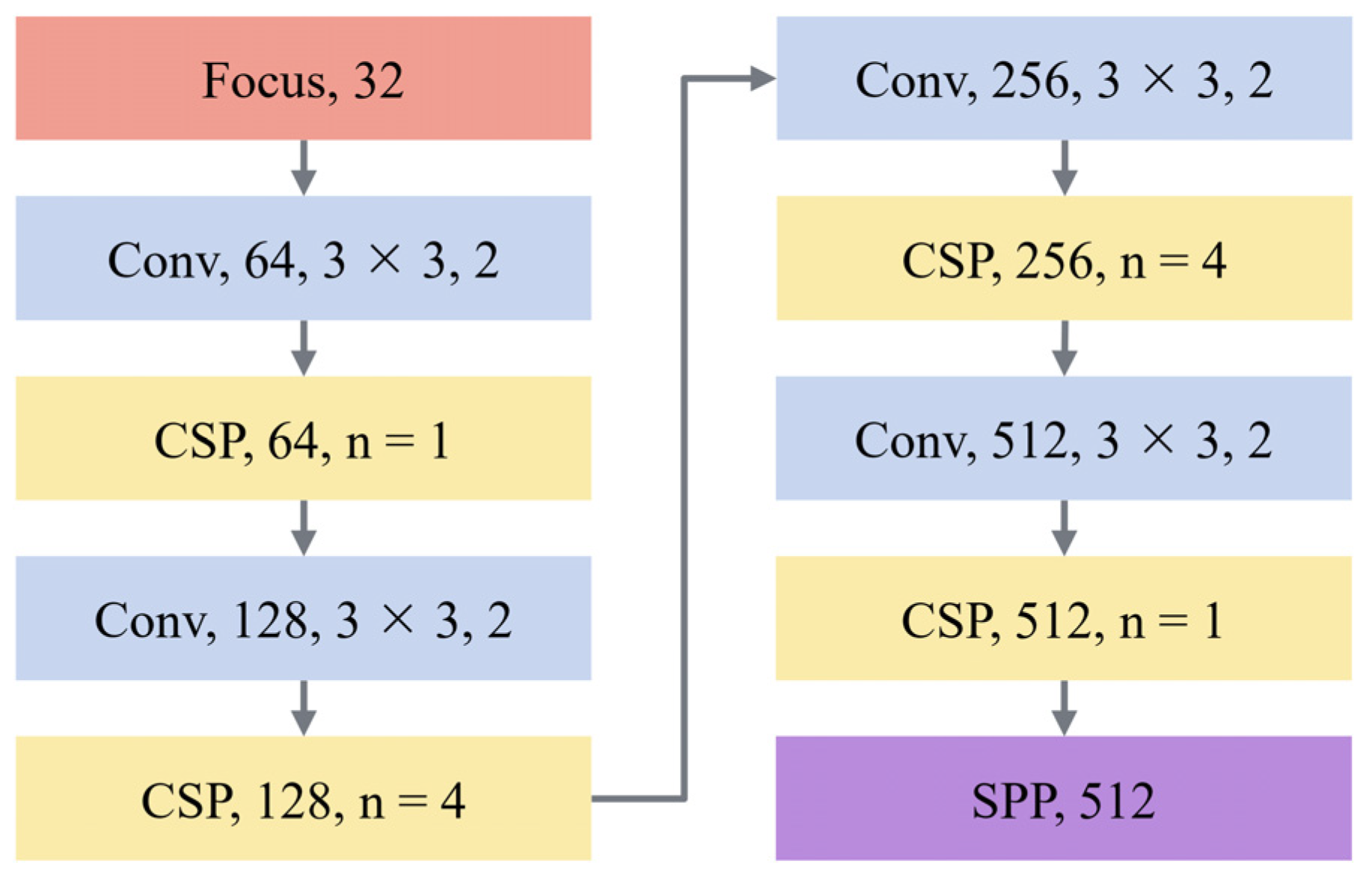

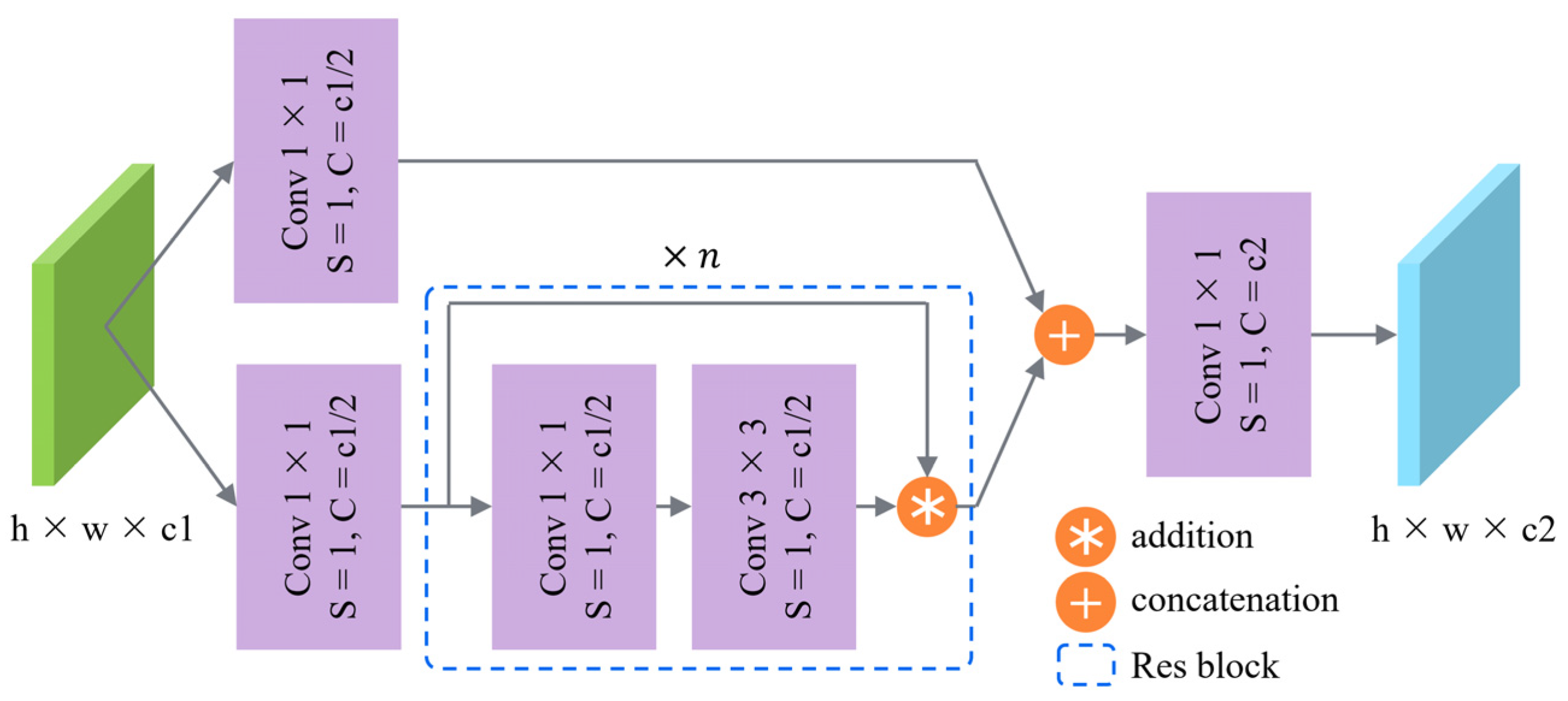

2.1. Feature Extraction Network

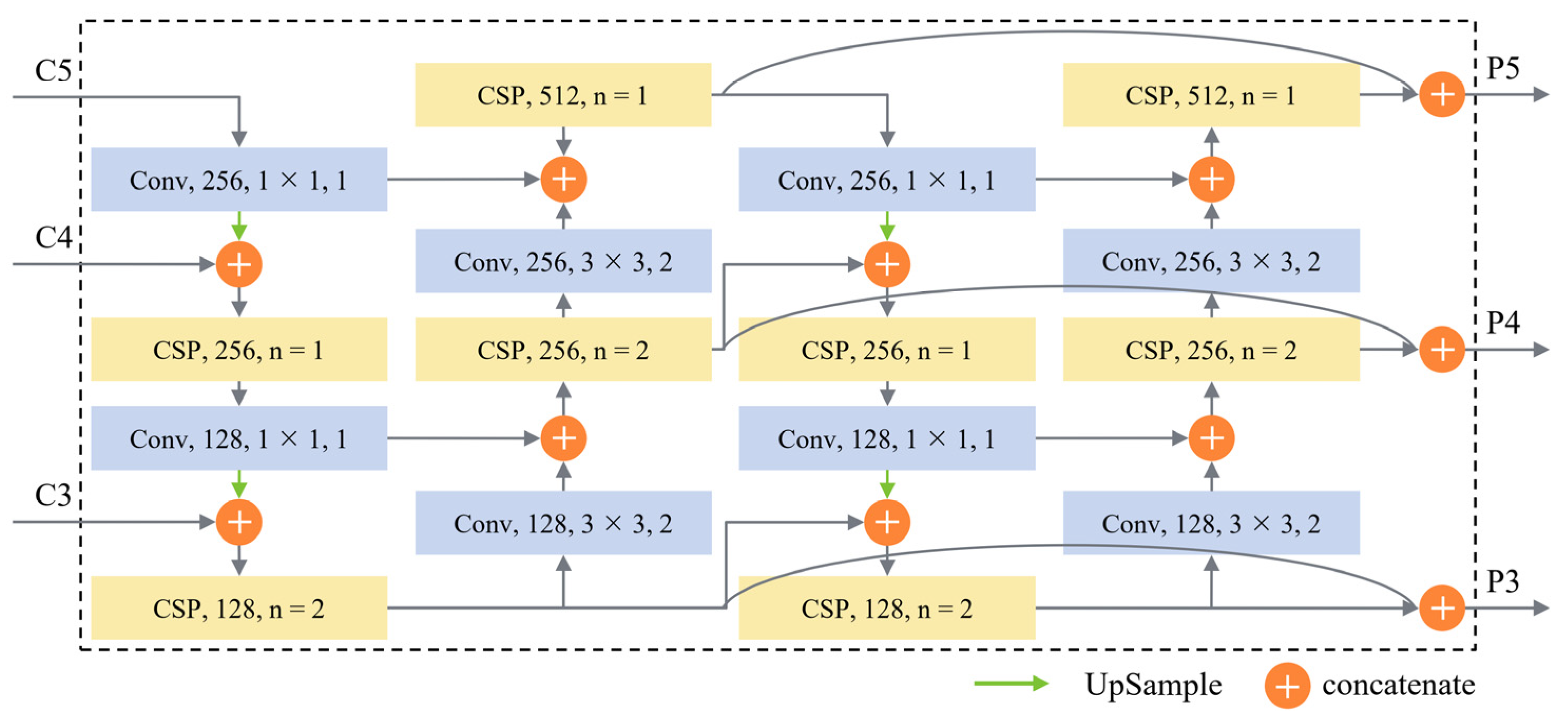

2.2. Duplicate Bilateral Feature Pyramid Network

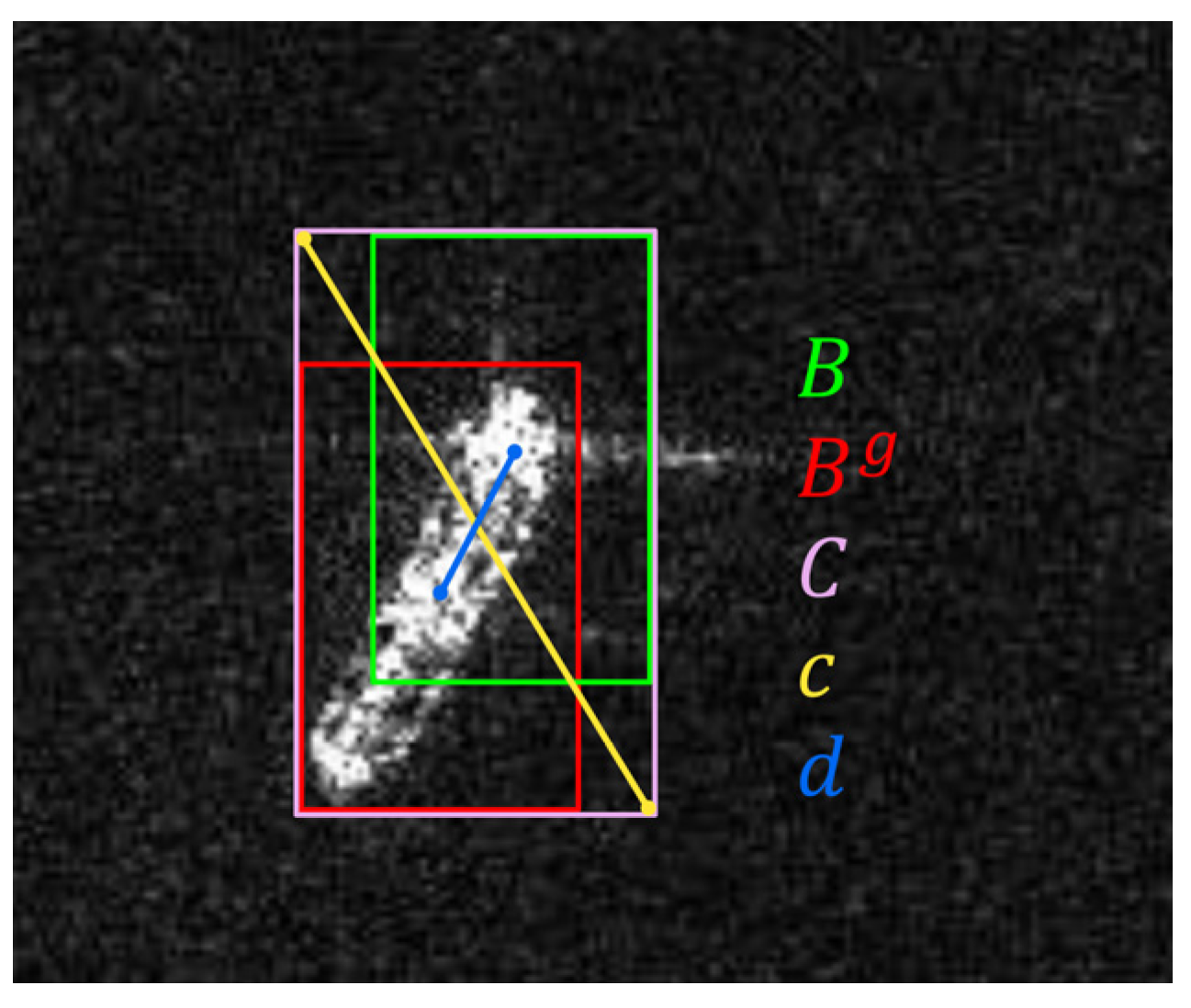

2.3. Detection Network

2.4. Loss Function

3. Experiments and Results

3.1. Experiment Settings

3.2. Data Sets

3.3. Evaluation Indexes

3.4. Results and Discussion

3.4.1. Effect of FEN

3.4.2. Effect of DB-FPN

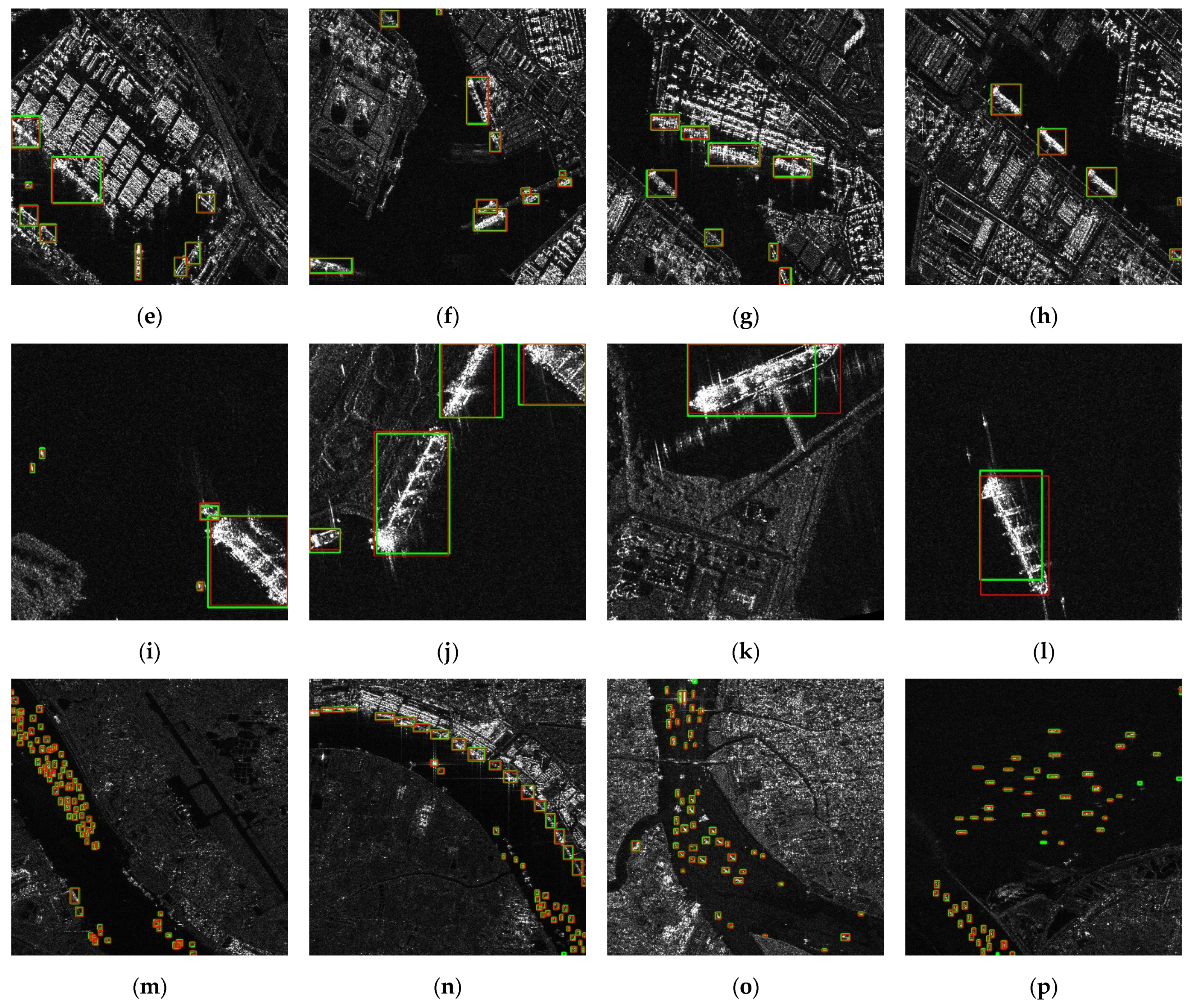

3.4.3. Comparison with the State-of-the-Art Methods

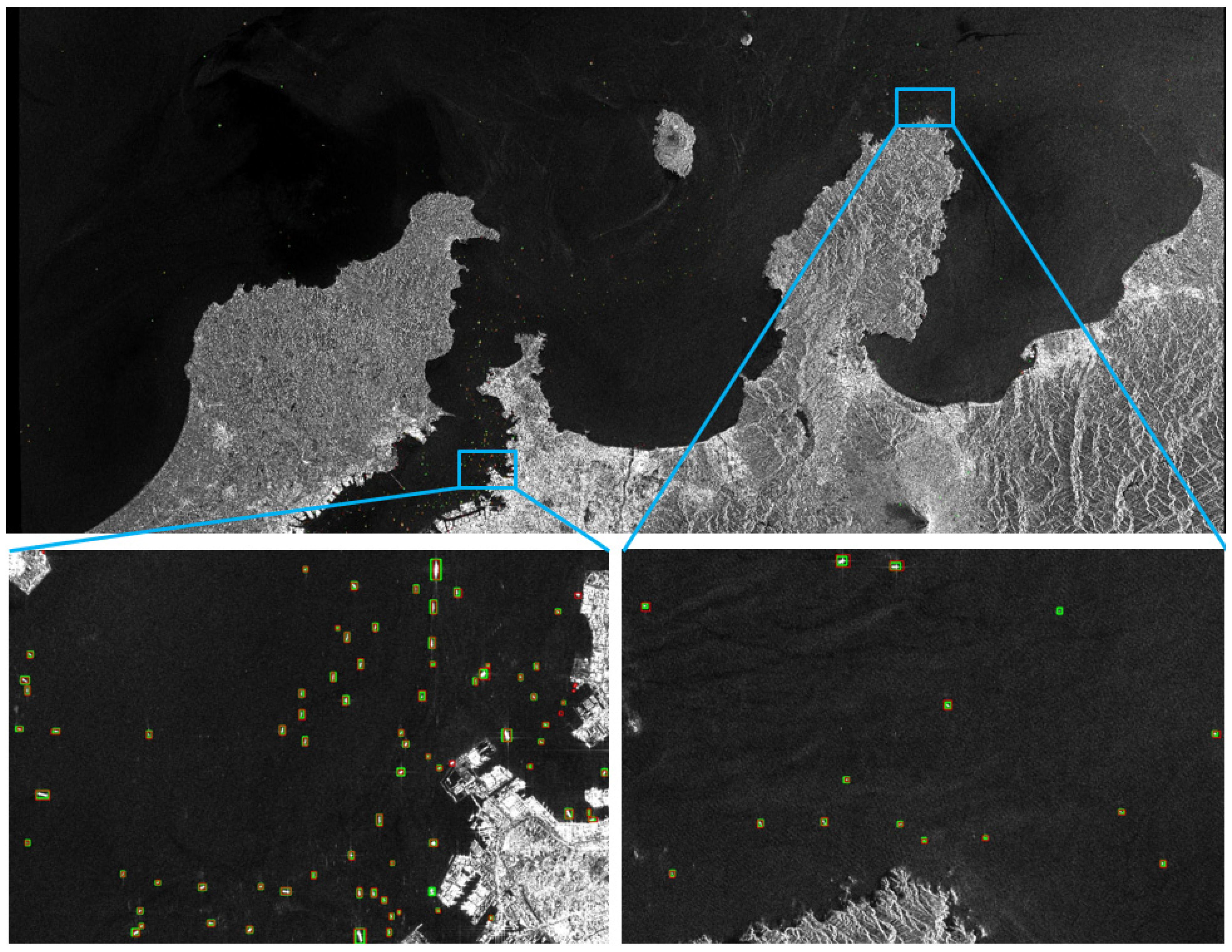

3.4.4. Result of DB-YOLO on Large-Scale SAR Images

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Data Sets | SSDD | HRSID |
|---|---|---|
| Polarization | HH, HV, VV, VH | HH, HV, VV |
| Image number | 1160 | 5604 |
| Ship number | 2551 | 16,965 |
| Image size (pixel) | 500 × 500, etc. | 800 × 800 |
| Resolution (m) | 1–15 | 0.5, 1, 3 |
| Number of small ships | 1529 | 9242 |
| Number of medium ships | 935 | 7388 |
| Number of large ships | 76 | 321 |
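
The introduction states that ships in SAR images are mainly small and medium-sized. As a quick check, the sketch below computes that share from the size counts in the data set table. The helper name `small_medium_share` is hypothetical, and the share is taken relative to the sum of the three size classes, which differs slightly from the separately listed ship numbers.

```python
# Ship counts per size class, copied from the data set table.
size_counts = {
    "SSDD": {"small": 1529, "medium": 935, "large": 76},
    "HRSID": {"small": 9242, "medium": 7388, "large": 321},
}


def small_medium_share(counts: dict) -> float:
    """Percentage of ships that are small or medium, relative to the
    sum of the three size classes (not the reported ship number)."""
    total = sum(counts.values())
    return 100.0 * (counts["small"] + counts["medium"]) / total


shares = {name: round(small_medium_share(c), 1) for name, c in size_counts.items()}
for name, share in shares.items():
    print(f"{name}: {share}% of ships are small or medium")
```

On both data sets the share is about 97 to 98 percent.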

| {C3, C4, C5} | Params (M) | FLOPs (G) | P | R | F1 | AP50 | AP |
|---|---|---|---|---|---|---|---|
| {3, 3, 1} | 22.8 | 52.9 | 63.0 | 91.5 | 74.6 | 91.1 | 63.1 |
| {3, 3, 3} | 28.0 | 57.1 | 63.0 | 91.7 | 74.7 | 91.4 | 63.1 |
| {4, 4, 1} | 23.6 | 57.1 | 66.5 | 91.8 | 77.1 | 91.7 | 63.7 |
| {5, 5, 1} | 24.4 | 61.3 | 66.8 | 92.0 | 77.5 | 91.8 | 63.8 |
| {6, 6, 1} | 25.2 | 65.5 | 67.0 | 92.1 | 77.6 | 91.8 | 63.8 |
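
To make the trade-off in the FEN table concrete, the sketch below compares two ways of deepening the {3, 3, 1} baseline. It assumes that each entry of {C3, C4, C5} is the number of stacked blocks in that stage, which the surviving text does not state. The helper `delta` is hypothetical, and all numbers are copied from the table.

```python
# {C3, C4, C5} setting -> (Params in M, FLOPs in G, AP), copied from the FEN table.
fen_rows = {
    (3, 3, 1): (22.8, 52.9, 63.1),
    (3, 3, 3): (28.0, 57.1, 63.1),
    (4, 4, 1): (23.6, 57.1, 63.7),
    (5, 5, 1): (24.4, 61.3, 63.8),
    (6, 6, 1): (25.2, 65.5, 63.8),
}


def delta(base: tuple, other: tuple) -> tuple:
    """Change in (Params, FLOPs, AP) when moving from `base` to `other`."""
    return tuple(round(o - b, 1) for b, o in zip(fen_rows[base], fen_rows[other]))


deeper_c5 = delta((3, 3, 1), (3, 3, 3))     # C5 goes from 1 to 3
deeper_c3_c4 = delta((3, 3, 1), (4, 4, 1))  # C3 and C4 go from 3 to 4

print("deeper C5:      dParams=%.1f M, dFLOPs=%.1f G, dAP=%.1f" % deeper_c5)
print("deeper C3, C4:  dParams=%.1f M, dFLOPs=%.1f G, dAP=%.1f" % deeper_c3_c4)
```

Both changes cost the same 4.2 GFLOPs. Deepening C5 adds 5.2 M parameters with no change in AP, while deepening C3 and C4 adds only 0.8 M parameters and raises AP by 0.6. This matches the stated aim of shifting capacity toward the layers that serve small and medium ships.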

| Method | Params (M) | FLOPs (G) | P | R | F1 | AP50 | AP |
|---|---|---|---|---|---|---|---|
| FPN | 7.2 | 20.8 | 67.8 | 91.1 | 77.8 | 91.6 | 63.2 |
| PANet | 8.3 | 22.1 | 68.4 | 91.3 | 78.2 | 91.9 | 63.6 |
| BiFPN | 8.1 | 21.4 | 68.1 | 91.4 | 78.0 | 91.8 | 63.9 |
| DB-FPN | 11.6 | 29.8 | 68.6 | 91.8 | 78.5 | 92.2 | 64.3 |
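
The P, R and F1 columns can be cross-checked against each other. The sketch below assumes F1 is the usual harmonic mean of precision and recall, since the definition from the evaluation section is not reproduced here. The function name `f1_score` is illustrative, and the values are copied from the feature pyramid comparison table.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Method -> (P, R, reported F1), copied from the feature pyramid comparison table.
neck_rows = {
    "FPN": (67.8, 91.1, 77.8),
    "PANet": (68.4, 91.3, 78.2),
    "BiFPN": (68.1, 91.4, 78.0),
    "DB-FPN": (68.6, 91.8, 78.5),
}

max_gap = 0.0
for method, (p, r, reported) in neck_rows.items():
    computed = f1_score(p, r)
    max_gap = max(max_gap, abs(computed - reported))
    print(f"{method}: computed F1 = {computed:.2f}, reported F1 = {reported}")

print(f"largest gap between computed and reported F1: {max_gap:.2f}")
```

The recomputed values agree with the reported ones to within the rounding of P and R, so the table is consistent with the assumed definition.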

| Method | Params (M) | FLOPs (G) | FPS | SSDD P | SSDD R | SSDD F1 | SSDD AP50 | SSDD AP | HRSID P | HRSID R | HRSID F1 | HRSID AP50 | HRSID AP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | 41.4 | 134.4 | 6.9 | 82.4 | 94.5 | 88.0 | 94.3 | 59.3 | 67.2 | 90.5 | 77.1 | 89.5 | 67.6 |
| Cascade R-CNN | 67.2 | 153.2 | 6.0 | 84.2 | 95.6 | 89.5 | 96.3 | 61.8 | 68.7 | 91.3 | 78.4 | 90.7 | 69.5 |
| Libra R-CNN | 42.8 | 141.3 | 6.3 | 83.6 | 94.7 | 88.8 | 94.8 | 59.8 | 66.8 | 89.7 | 76.6 | 88.9 | 67.2 |
| FCOS | 32.1 | 126.0 | 7.8 | 84.7 | 95.8 | 89.9 | 95.8 | 59.6 | 62.9 | 86.1 | 72.7 | 84.5 | 63.4 |
| CenterNet | 16.5 | 72.5 | 13.9 | 83.2 | 96.1 | 89.1 | 95.3 | 60.7 | 65.3 | 92.1 | 78.3 | 91.3 | 68.6 |
| YOLOv5s | 7.1 | 16.4 | 63.3 | 83.2 | 97.1 | 89.6 | 97.5 | 63.9 | 66.9 | 94.2 | 78.2 | 93.8 | 69.8 |
| DB-YOLO | 10.8 | 25.6 | 48.1 | 87.8 | 97.5 | 92.4 | 97.8 | 64.9 | 72.4 | 94.9 | 82.1 | 94.4 | 72.0 |
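
The speed and accuracy trade-off between DB-YOLO and its closest competitor in the comparison table, YOLOv5s, can be expressed as per-image latency and AP gain. The sketch below assumes FPS was measured one image at a time, so that latency is simply 1000 / FPS in milliseconds. That measurement protocol and the helper name `latency_ms` are assumptions, not statements from the paper.

```python
# Method -> (Params in M, FLOPs in G, FPS, AP on SSDD, AP on HRSID),
# copied from the state-of-the-art comparison table.
detectors = {
    "YOLOv5s": (7.1, 16.4, 63.3, 63.9, 69.8),
    "DB-YOLO": (10.8, 25.6, 48.1, 64.9, 72.0),
}


def latency_ms(fps: float) -> float:
    """Per-image latency in milliseconds, assuming one image per forward pass."""
    return 1000.0 / fps


base = detectors["YOLOv5s"]
ours = detectors["DB-YOLO"]

extra_latency = round(latency_ms(ours[2]) - latency_ms(base[2]), 1)
ap_gain_ssdd = round(ours[3] - base[3], 1)
ap_gain_hrsid = round(ours[4] - base[4], 1)

print(f"extra latency per image: {extra_latency} ms")
print(f"AP gain: {ap_gain_ssdd} on SSDD, {ap_gain_hrsid} on HRSID")
```

Under that assumption, DB-YOLO spends about 5 ms more per image than YOLOv5s and gains 1.0 AP on SSDD and 2.2 AP on HRSID. Absolute FPS figures depend on the hardware used in the experiments.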

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, H.; Xie, Y.; Huang, H.; Jing, C.; Rong, Y.; Wang, C. DB-YOLO: A Duplicate Bilateral YOLO Network for Multi-Scale Ship Detection in SAR Images. Sensors 2021, 21, 8146. https://doi.org/10.3390/s21238146
