Early Detection and Classification of Tomato Leaf Disease Using High-Performance Deep Neural Network

Abstract

1. Introduction

- Color segmentation fails when the lighting conditions differ from those of the reference photographs.

- Region-based segmentation depends on the initial seed selection.

- Texture-based approaches take too long to process.
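The first limitation can be illustrated with a toy example. This is only a sketch under stated assumptions, not the segmentation used in the paper or in any cited work: the rule `is_leaf_pixel`, its threshold of 100, and the pixel values are all hypothetical.

```python
def is_leaf_pixel(rgb, min_green=100):
    """Hypothetical fixed-threshold rule: green dominates and is bright enough."""
    r, g, b = rgb
    return g > r and g > b and g >= min_green


def scale_brightness(rgb, factor):
    """Simulate a change in illumination by scaling every channel."""
    return tuple(min(255, int(c * factor)) for c in rgb)


leaf_pixel = (60, 160, 50)                      # a leaf pixel in good light (made-up value)
dim_pixel = scale_brightness(leaf_pixel, 0.5)   # the same pixel in shade

print(is_leaf_pixel(leaf_pixel))  # accepted as leaf
print(is_leaf_pixel(dim_pixel))   # rejected: the green channel drops below the threshold
```

The pixel is still green-dominant after dimming, but a threshold tuned on well-lit photographs no longer accepts it.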

2. Related Work

- The dataset for tomato leaves is built using data augmentation and image annotation tools. It consists of laboratory photos and detailed images captured in actual field situations.

- A Deep Convolutional Neural Network (DCNN) is proposed for the recognition of tomato leaves. It incorporates Rainbow concatenation and the GoogLeNet Inception V3 structure.

- In the proposed INAR-SSD model, the Inception V3 module and Rainbow concatenation are used to detect five common tomato leaf diseases.

3. Materials and Methods

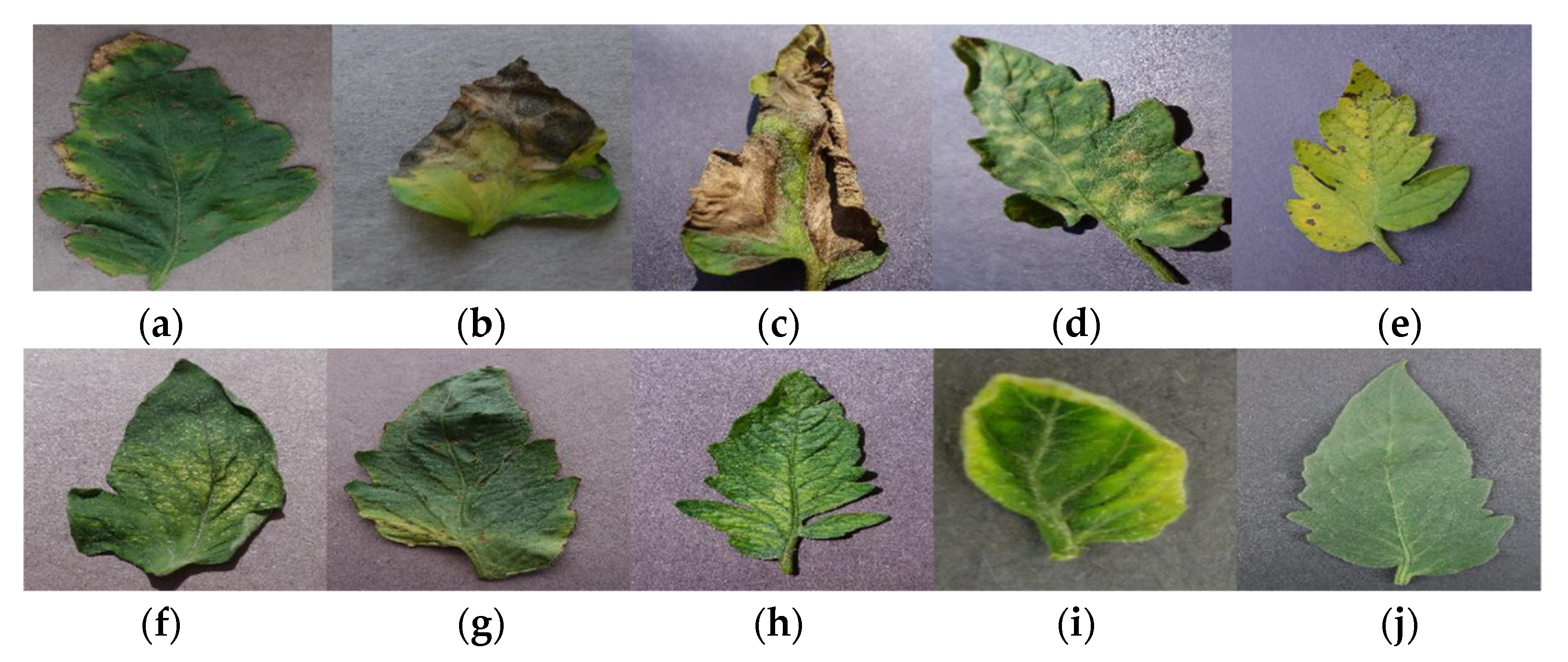

3.1. Dataset

3.2. Image Pre-Processing and Labelling

3.3. Training Dataset

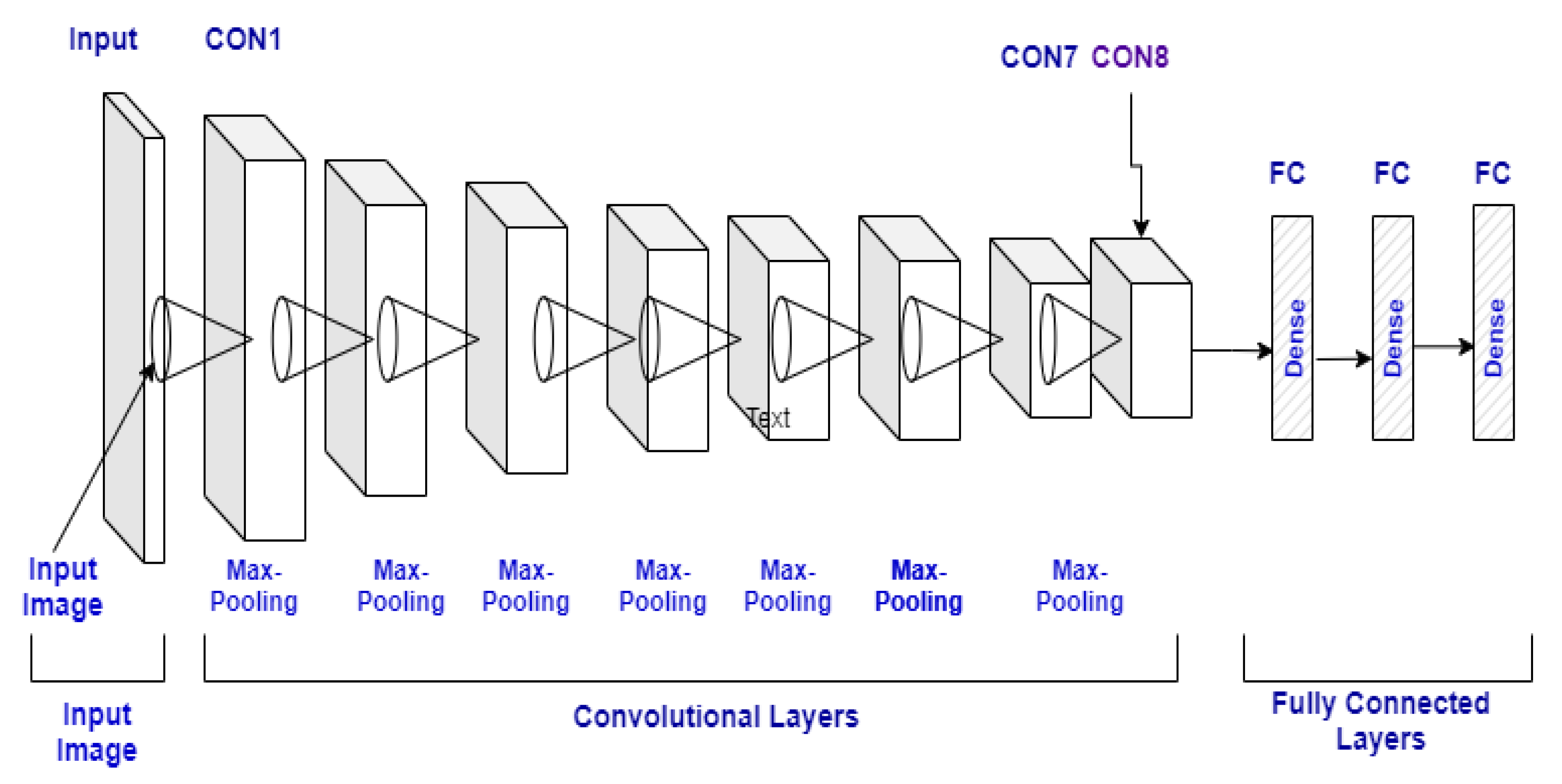

3.4. Convolutional Neural Network

3.4.1. Convolutional Layer
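As a rough illustration of what a convolutional layer computes, the following pure-Python sketch slides a small kernel over a single-channel image and applies ReLU (the activation listed in the paper's parameter table). The function names, the 4 × 4 image, and the 2 × 2 kernel are assumptions made for this example and are not taken from the paper's model.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding.

    As in most CNN libraries, the kernel is not flipped (cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1] for _ in range(4)]
# Hypothetical kernel that responds to a dark-to-bright horizontal transition.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # 3 x 3 map; only the middle column responds to the edge
```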

3.4.2. Pooling Layer
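A minimal sketch of max pooling is given below. It assumes a 2 × 2 window with stride 2, which is a common default but is not stated in the text; `max_pool2d` and the sample feature map are hypothetical.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 1, 1],
]

pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 5], [3, 7]]
```

Each output value summarizes one window, so the spatial size is halved in both dimensions while the strongest responses are kept.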

3.4.3. Fully Connected Layer

3.4.4. Dropout
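The sketch below shows one common formulation, inverted dropout, using the rate of 0.5 listed in the paper's parameter table. Whether the authors' framework uses exactly this formulation is an assumption; the `dropout` function and its arguments are hypothetical names chosen for the example.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability `rate` during training
    and rescale the survivors so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return list(activations)      # inference: pass values through unchanged
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


units = [1.0] * 1000
dropped = dropout(units, rate=0.5, rng=random.Random(0))

kept = sum(1 for v in dropped if v != 0.0)
print(kept, "of", len(units), "units kept")   # roughly half
print(dropout([1.0, 2.0], training=False))    # unchanged at inference time
```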

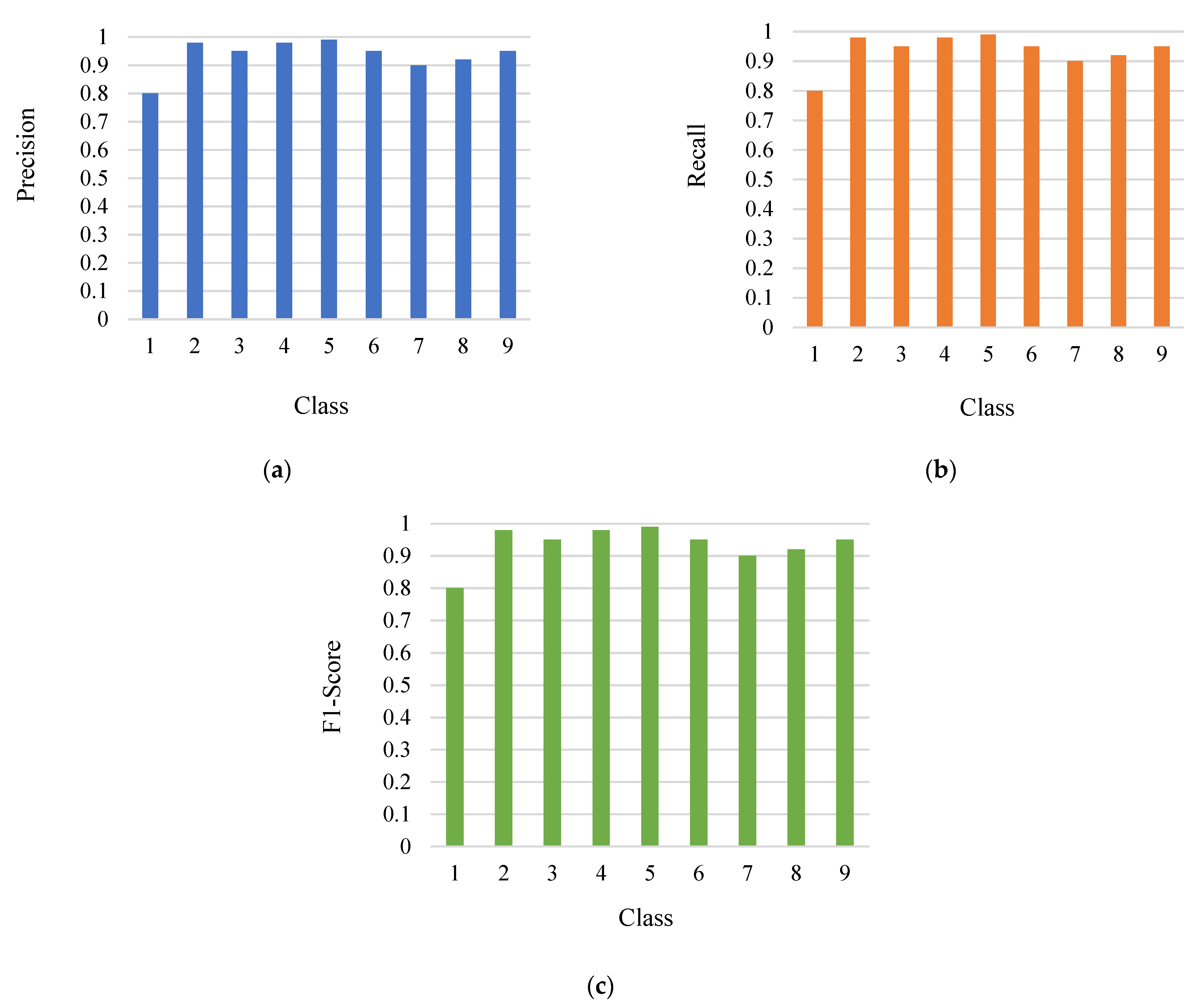

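- Accuracy. Accuracy (Acc) is the proportion of all predictions that are classified correctly. With $TP$, $TN$, $FP$, and $FN$ denoting the numbers of true positives, true negatives, false positives, and false negatives, it is calculated as follows:

  $$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision. Precision (Pre) is the proportion of positive predictions that are true positives. It is calculated as follows:

  $$\mathrm{Pre} = \frac{TP}{TP + FP}$$

- Recall. Recall (Re) is the proportion of actual positives that were successfully detected. It is calculated as follows:

  $$\mathrm{Re} = \frac{TP}{TP + FN}$$

- F1-Score. The F1-Score is the harmonic mean of precision and recall and is defined as follows:

  $$F_1 = \frac{2 \cdot \mathrm{Pre} \cdot \mathrm{Re}}{\mathrm{Pre} + \mathrm{Re}}$$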
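The four metrics above can be computed from the confusion-matrix counts, where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives. The sketch below uses the standard definitions; the function name and the example counts are hypothetical and are not results from the paper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up counts for a single disease class, for illustration only.
metrics = classification_metrics(tp=90, tn=85, fp=10, fn=15)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The guards against zero denominators return 0.0 by convention; other conventions (for example, raising an error) are equally defensible.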

- Step 1: Input the color image $I_{RGB}$ of the leaf, procured from the PlantVillage dataset.

- Step 2: Given $I_{RGB}$, generate the mask $M_{veq}$ using CNN-based segmentation.

- Step 3: Overlay $I_{RGB}$ with $M_{veq}$ to get $M_{mask}$.

- Step 4: Divide the image $M_{mask}$ into smaller regions $K_{tiles}$ (square tiles).

- Step 5: Classify the $K_{tiles}$ from $M_{mask}$ as tomato.

- Step 6: Finally, the $K_{tiles}$ form the leaf region in which disease is detected.

- Step 7: Stop.
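Steps 3 to 6 above can be sketched in a few lines of pure Python. This is an illustration under stated assumptions rather than the authors' implementation: the mask is supplied by hand instead of by the CNN-based segmentation of Step 2, and `leaf_tiles` is a hypothetical stand-in for the tile classifier of Step 5 that simply keeps tiles containing at least one unmasked pixel.

```python
BLACK = (0, 0, 0)


def apply_mask(image, mask):
    """Step 3: keep pixels where the mask is 1 and blank out the rest."""
    return [
        [px if m else BLACK for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def split_into_tiles(image, tile):
    """Step 4: cut the image into non-overlapping tile x tile squares.

    Rows and columns that do not fill a whole tile are dropped.
    """
    height, width = len(image), len(image[0])
    tiles = []
    for top in range(0, height - tile + 1, tile):
        for left in range(0, width - tile + 1, tile):
            tiles.append([row[left:left + tile] for row in image[top:top + tile]])
    return tiles


def leaf_tiles(tiles):
    """Steps 5-6 stand-in (hypothetical): keep tiles with any unmasked pixel."""
    return [t for t in tiles if any(px != BLACK for row in t for px in row)]


# Toy 4 x 4 image of uniform green pixels; the mask marks the left half as leaf.
image = [[(10, 200, 10)] * 4 for _ in range(4)]
mask = [[1, 1, 0, 0] for _ in range(4)]

masked = apply_mask(image, mask)
tiles = split_into_tiles(masked, tile=2)
kept = leaf_tiles(tiles)
print(len(tiles), "tiles,", len(kept), "kept for disease detection")
```

In a real pipeline the kept tiles would be passed to the disease classifier; a genuinely black leaf pixel would be indistinguishable from the mask background in this simplified version.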

| Algorithm: Disease Detection |
|---|
| Input: acquired from a dataset |
| Output: Disease detection; |
| tomato diseases; |

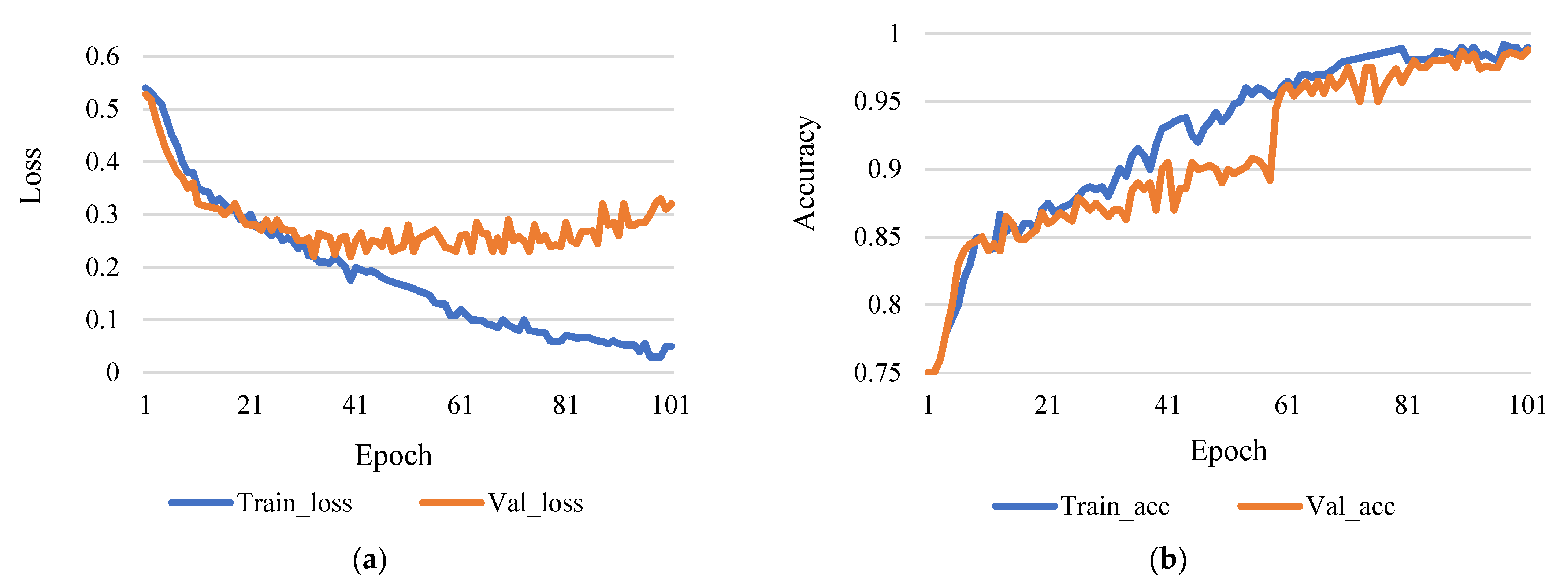

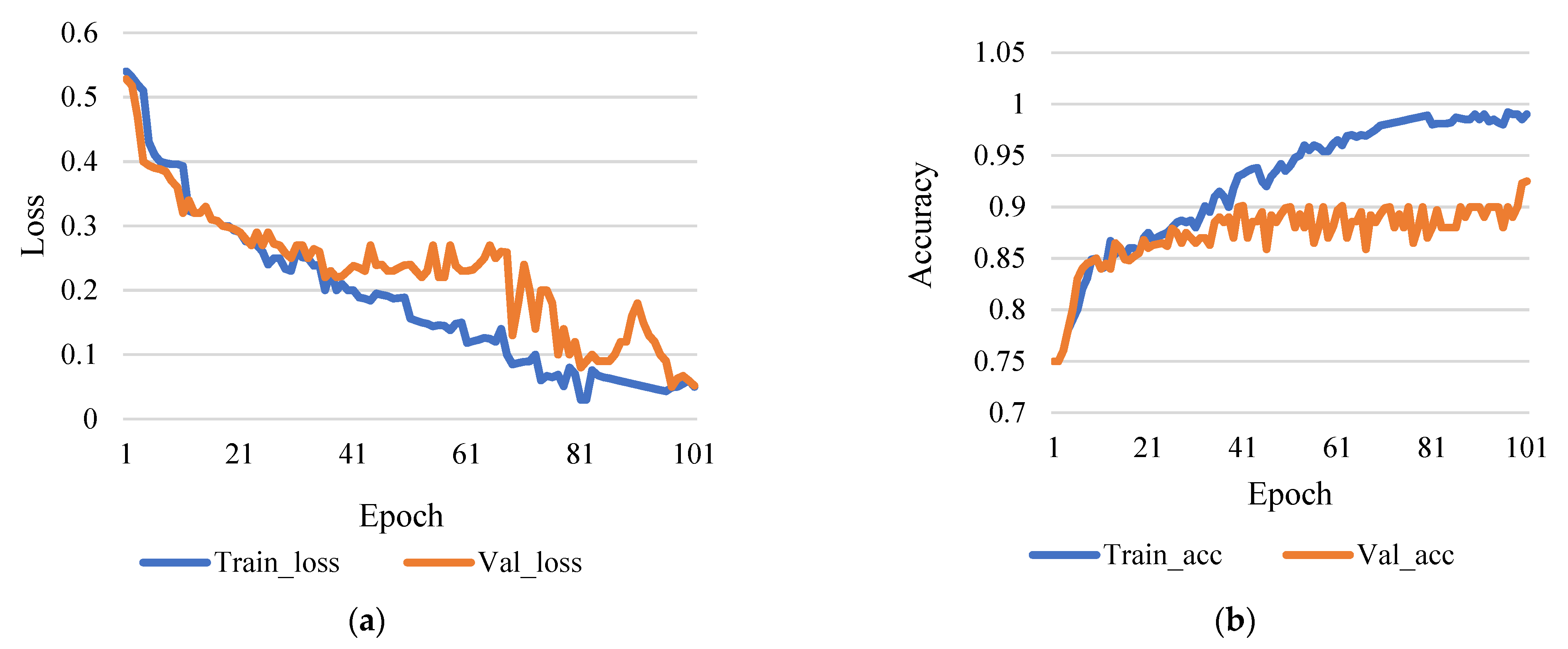

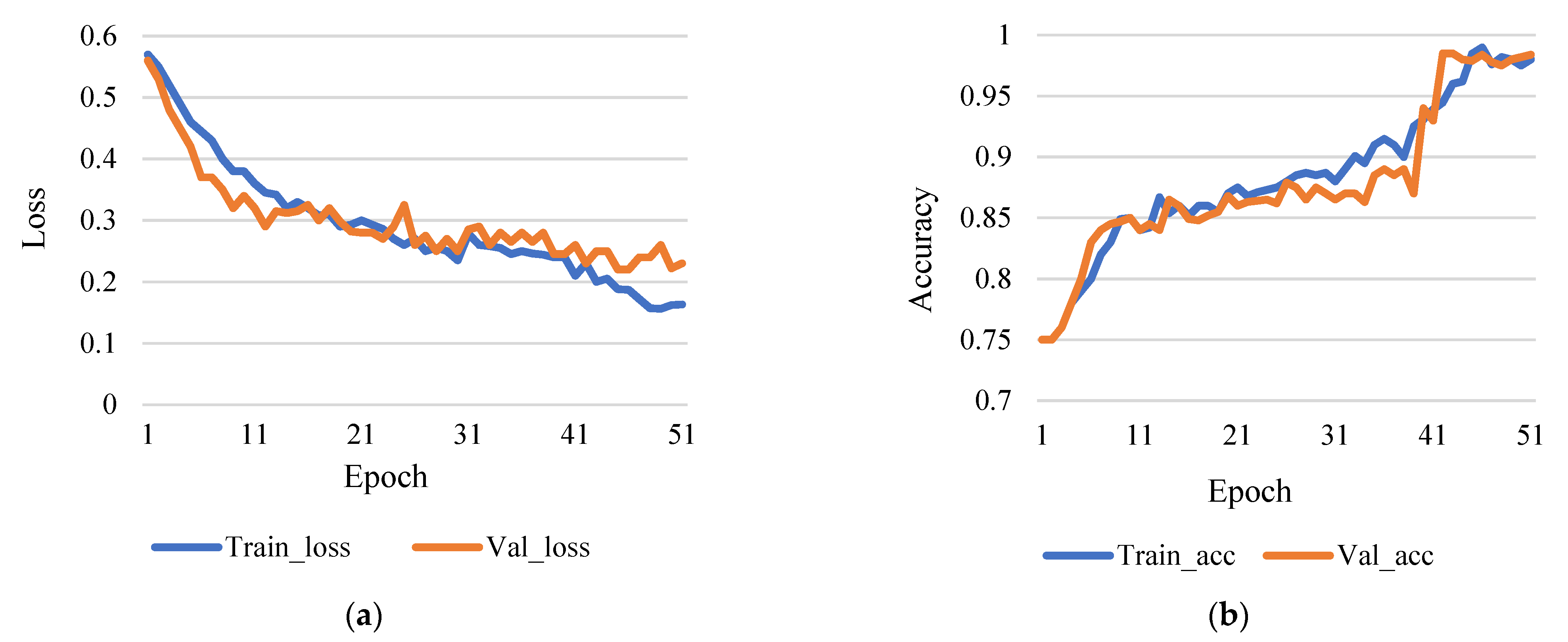

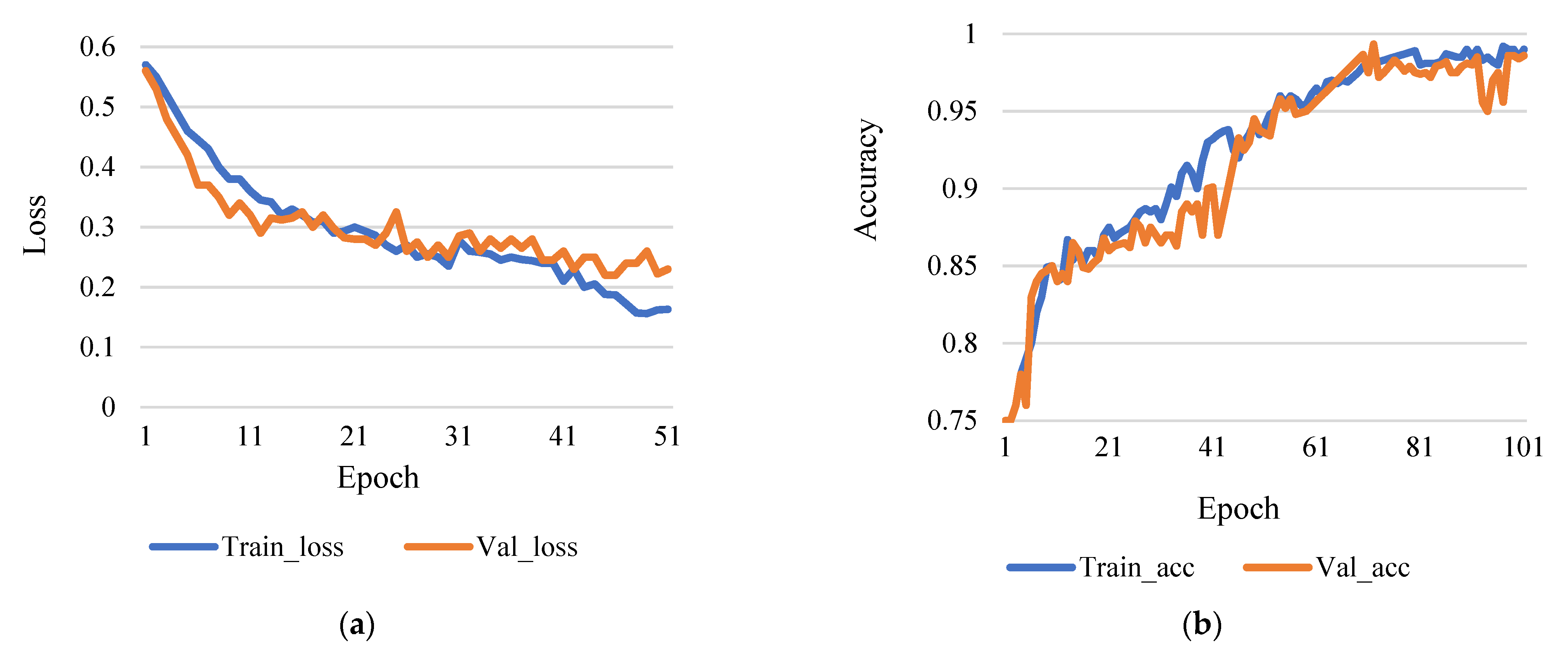

4. Results Analysis and Discussion

5. Conclusions and Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| No. of Convolution Layers | 8 |
| No. of Max Pooling Layers | 8 |
| Dropout Rate | 0.5 |
| Network Weight Assigned | Uniform |
| Activation Function | ReLU |
| Learning Rates | 0.01, 0.01, 0.1 |
| Epochs | 50, 100, 150 |
| Batch Size | 36, 64, 110 |

| Dataset Amount | Image Size | Epoch | Learning Rate | Accuracy (%) |
|---|---|---|---|---|
| 3000 | 256 × 256 px | 50 | 0.0001 | 98.47 |
| 3000 | 256 × 256 px | 50 | 0.001 | 98.42 |
| 3000 | 256 × 256 px | 50 | 0.01 | 98.52 |
| 3000 | 256 × 256 px | 100 | 0.0001 | 98.43 |
| 3000 | 256 × 256 px | 100 | 0.001 | 98.58 |
| 3000 | 256 × 256 px | 100 | 0.01 | 98.5 |

| S.No. | Model | Accuracy (%) | Space | Trainable Parameters | Non-Trainable Parameters |
|---|---|---|---|---|---|
| 1 | MobileNet | 66.75 | 82,566 | 18,020,552 | 455,262 |
| 2 | VGG16 | 79.52 | 85,245 | 21,000,254 | 532,654 |
| 3 | InceptionV3 | 64.25 | 90,255 | 22,546,862 | 658,644 |
| 4 | Proposed | 98.49 | 22,565 | 1,422,542 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Trivedi, N.K.; Gautam, V.; Anand, A.; Aljahdali, H.M.; Villar, S.G.; Anand, D.; Goyal, N.; Kadry, S. Early Detection and Classification of Tomato Leaf Disease Using High-Performance Deep Neural Network. Sensors 2021, 21, 7987. https://doi.org/10.3390/s21237987
