Dynamic Object Tracking on Autonomous UAV System for Surveillance Applications

Abstract

1. Introduction

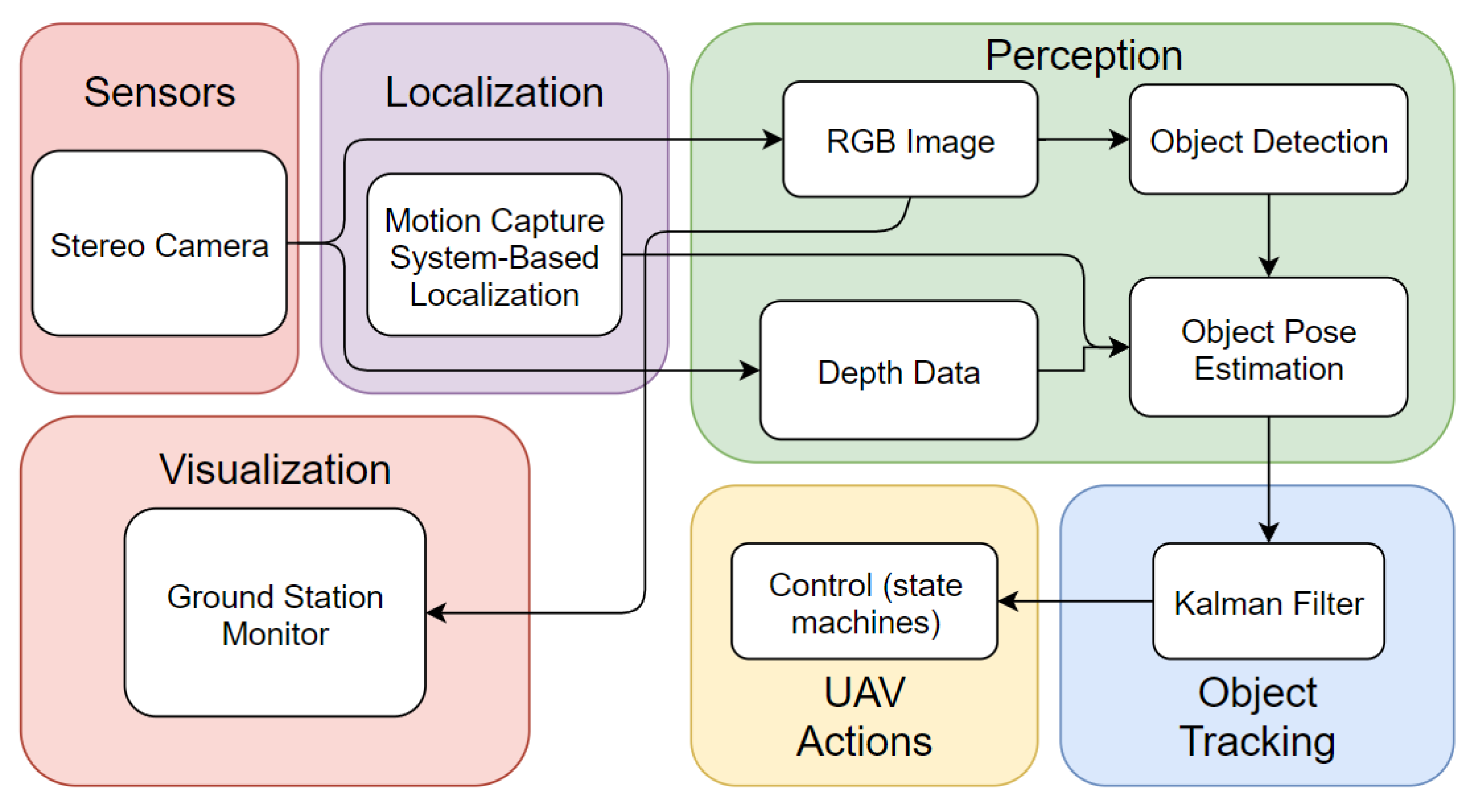

- a real-time, learning-based object detection algorithm is integrated into the UAV embedded system to autonomously locate the desired object without human intervention;

- a 3D pose tracking algorithm combining object detection, stereo reconstruction, and a Kalman filter is implemented on a low-cost UAV system to recognize, locate, and track the target object autonomously, while a UAV path planner for surveillance missions respects the UAV's dynamic constraints as it tracks and follows the target's movement;

- system experiments cover both a dynamic object and a dynamic sensor, and the results validate the performance of the proposed system.

2. Related Work

2.1. Object Detection

2.2. Object Tracking

2.3. Unmanned Aerial Vehicle (UAV) Applications with Target Monitoring

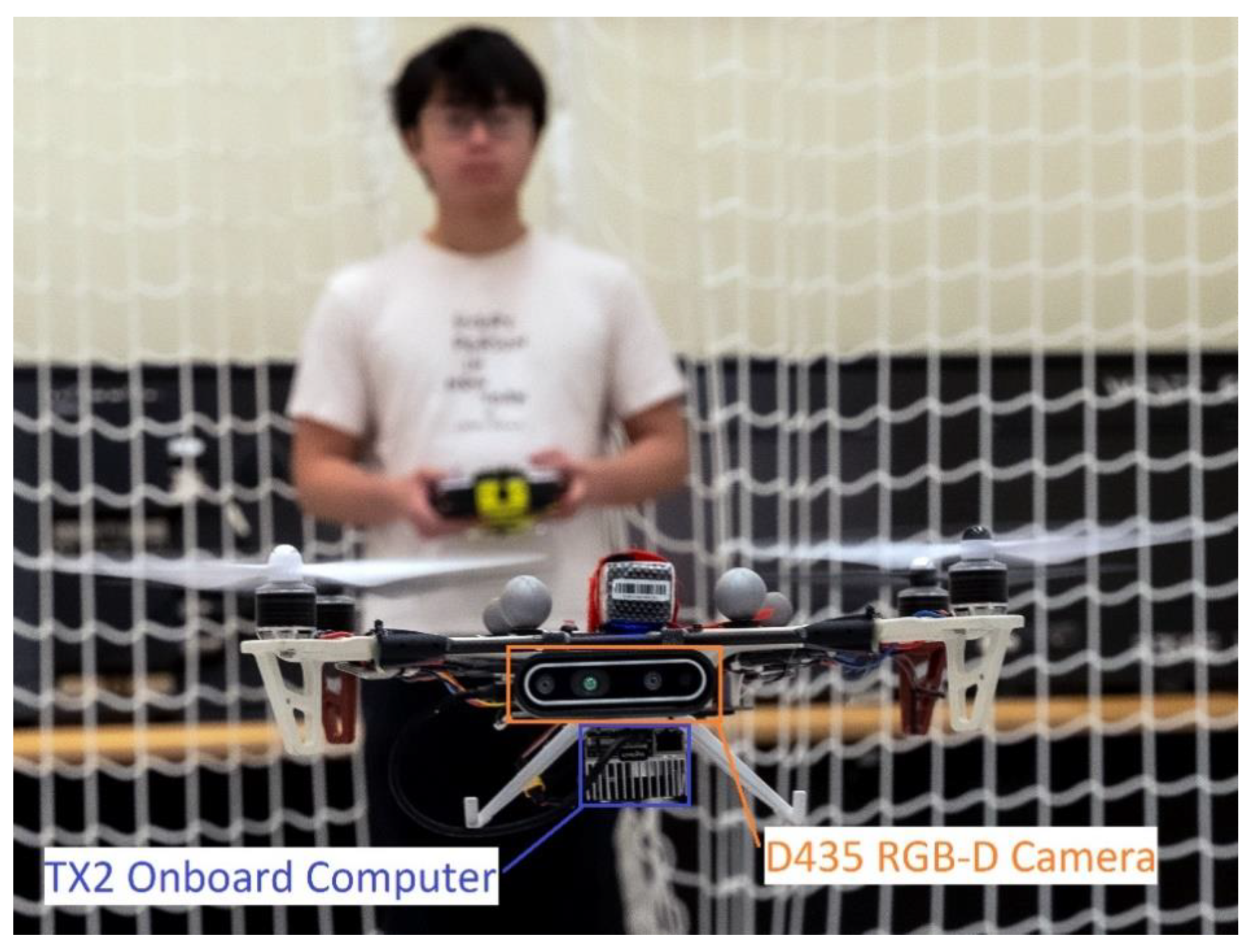

3. System Architecture

4. Object 3D State Estimation

4.1. Object Detection

4.1.1. Dataset Establishment and Training

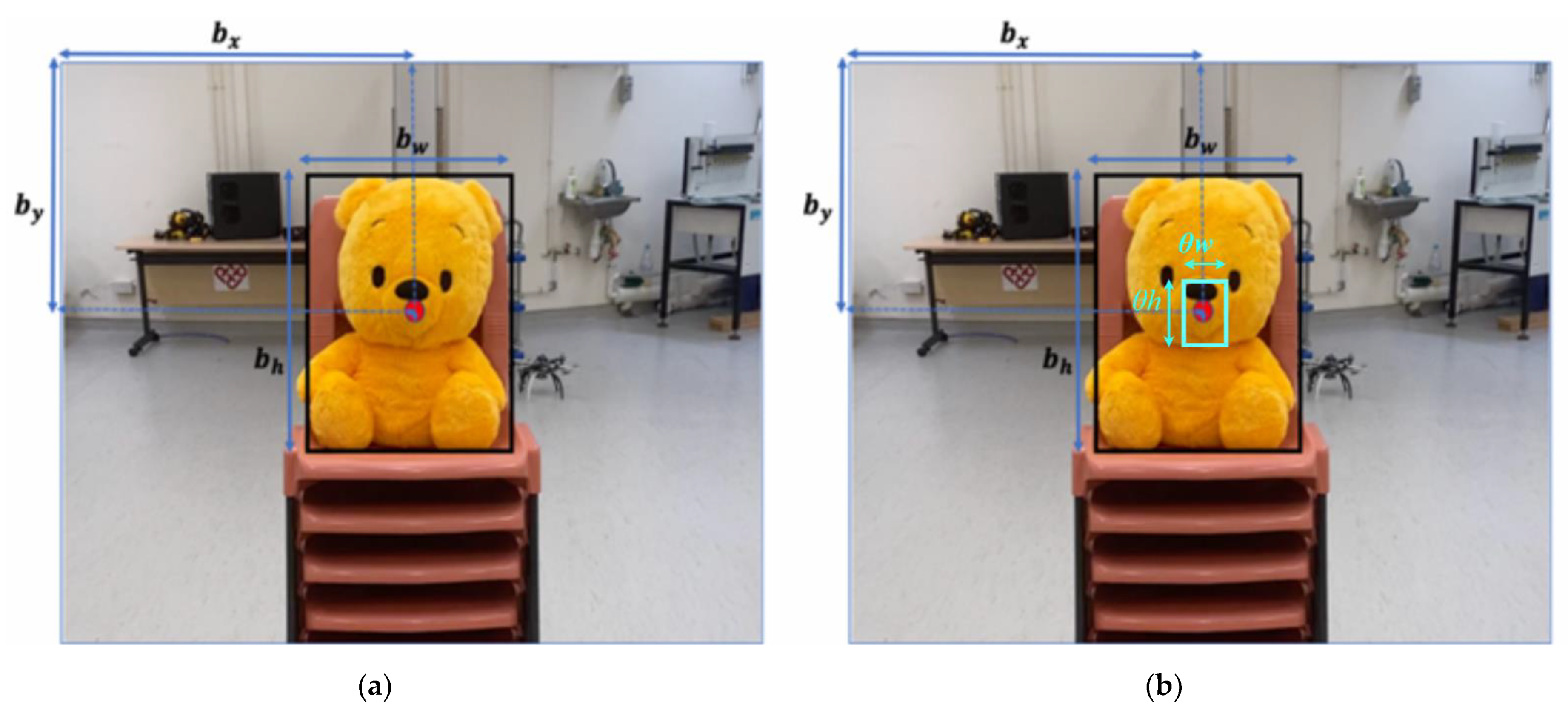

4.1.2. Two-Dimensional (2D) Bounding Box Prediction

- $t_x, t_y, t_w, t_h$ are the raw predicted bounding box coordinates (x position, y position, width, and height); they are not the finalized bounding box coordinates.

- $c_x, c_y$ are the offsets of the grid cell from the top-left corner of the image.

- $p_w$ is the width and $p_h$ is the height of the prior anchor box.

- σ is the sigmoid function, applied to constrain the offset range between 0 and 1.

- $b_x, b_y, b_w, b_h$ are the finalized bounding box parameters, where $b_x$ and $b_y$ are the center coordinates, and $b_w$ and $b_h$ are the width and height, respectively.
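The quantities described above (raw network outputs, grid-cell offsets, anchor-box priors, and the sigmoid) match the standard YOLOv2/YOLOv3 box parameterization. The sketch below decodes one box under that assumption, using $b_x = \sigma(t_x) + c_x$, $b_y = \sigma(t_y) + c_y$, $b_w = p_w e^{t_w}$, and $b_h = p_h e^{t_h}$. The equations themselves are not reproduced in this section, and the function name `decode_box` is hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function; maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell, prior):
    """Decode one raw prediction into a finalized box (assumed YOLOv3-style).

    t     -- raw outputs (t_x, t_y, t_w, t_h)
    cell  -- grid-cell offset (c_x, c_y) from the top-left corner, in cell units
    prior -- anchor-box prior (p_w, p_h)
    """
    t_x, t_y, t_w, t_h = t
    c_x, c_y = cell
    p_w, p_h = prior
    b_x = sigmoid(t_x) + c_x   # center stays inside the responsible cell
    b_y = sigmoid(t_y) + c_y
    b_w = p_w * math.exp(t_w)  # width and height rescale the anchor prior
    b_h = p_h * math.exp(t_h)
    return b_x, b_y, b_w, b_h


# All-zero raw outputs place the center in the middle of cell (3, 4)
# and leave the anchor size unchanged.
print(decode_box((0.0, 0.0, 0.0, 0.0), (3, 4), (2.0, 1.5)))
```

Because the sigmoid bounds the center offset to the interval (0, 1), the predicted center cannot leave the cell that is responsible for it.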

4.2. Three-Dimensional (3D) Pose Estimation

5. Filter Based Tracking and UAV Maneuvers

5.1. Relative Pose Estimation

5.1.1. Kalman Filter

5.1.2. Overall 3D Tracking Algorithm

| Algorithm 1: 3D Yolo-KF-Tracking |
|---|
| Notation: object states , measurement , Kalman filter KF, image set |
| Input: image |
| while true do |
| Object-Detection (F) |
| if object detected then |
| trigger and initiate KF |
| break |
| else |
| continue |
| end if |
| end while |
| while true do |
| KF.predict() |
| Object-Detection (F) |
| if object detected then |
| if confidence score > 0.75 then |
| = KF.update () |
| else |
| KF.update () |
| = KF.update () |
| end if |
| else |
| = KF.update (KF.predict()) |
| end if |
| Output: (posteriori estimate) |
| continue |
| end while |
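The control flow of Algorithm 1 can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' implementation. It assumes a constant-velocity motion model applied independently to each axis and illustrative noise values `q` and `r`. It handles a detection at or below the 0.75 confidence threshold by inflating the measurement noise (`low_conf_r_scale`), because that branch cannot be recovered from the extracted algorithm. The names `AxisKF` and `track` are hypothetical. When no object is detected, the prediction is fed back as the measurement, mirroring `KF.update(KF.predict())`.

```python
from dataclasses import dataclass


@dataclass
class AxisKF:
    """Constant-velocity Kalman filter for one axis; state is (pos, vel)."""
    pos: float
    vel: float = 0.0
    p00: float = 1.0  # covariance entries, row-major 2x2
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 1.0
    q: float = 1e-3   # process noise added to each diagonal term (assumed)
    r: float = 1e-2   # measurement noise variance (assumed)

    def predict(self, dt: float) -> float:
        """Propagate state and covariance with F = [[1, dt], [0, 1]]."""
        self.pos += self.vel * dt
        p00 = self.p00 + dt * (self.p01 + self.p10) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos

    def update(self, z: float, r_scale: float = 1.0) -> float:
        """Correct with a position measurement z (H = [1, 0])."""
        s = self.p00 + self.r * r_scale          # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s      # Kalman gain
        y = z - self.pos                         # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        p00 = (1.0 - k0) * self.p00              # P = (I - K H) P
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos


def track(frames, dt=1.0, conf_threshold=0.75, low_conf_r_scale=10.0):
    """frames: iterable of None or ((x, y, z), confidence).

    Returns one posterior position estimate per frame, starting at the
    first frame with a detection (which initializes the filters).
    """
    filters = None
    estimates = []
    for detection in frames:
        if filters is None:
            # First loop of Algorithm 1: wait for a detection, then start KF.
            if detection is not None:
                filters = [AxisKF(pos=c) for c in detection[0]]
                estimates.append(tuple(detection[0]))
            continue
        predicted = [kf.predict(dt) for kf in filters]
        if detection is None:
            # No detection: use the prediction itself as the measurement.
            est = [kf.update(p) for kf, p in zip(filters, predicted)]
        else:
            position, confidence = detection
            # Assumption: trust low-confidence detections less.
            scale = 1.0 if confidence > conf_threshold else low_conf_r_scale
            est = [kf.update(z, scale) for kf, z in zip(filters, position)]
        estimates.append(tuple(est))
    return estimates


# Target moving 0.1 m per frame along x, with two missed detections
# and one low-confidence detection.
frames = [((0.1 * k, 0.0, 1.0), 0.9) for k in range(30)]
frames[10] = None
frames[11] = None
frames[20] = ((2.0, 0.0, 1.0), 0.5)
print(track(frames)[-1])
```

Running one scalar filter per axis keeps the sketch free of matrix libraries. A full implementation would more likely use a single filter over the joint 3D state so that cross-axis correlations can be modeled.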

5.2. Finite State Machine Definition

6. Experiment Results and Discussions

6.1. Training Result of YOLOv4-Tiny

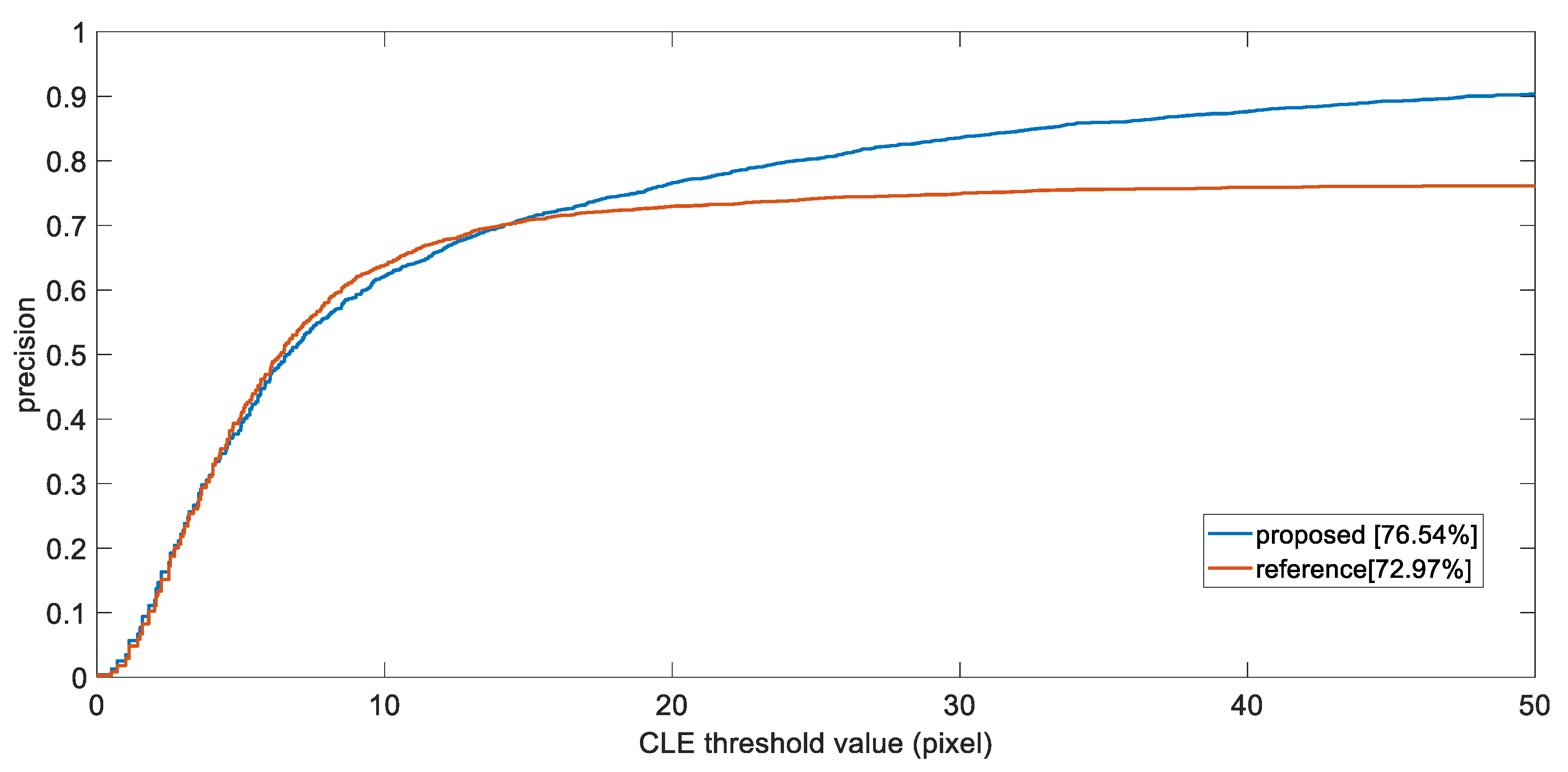

6.2. Tracking Performance on Target Object

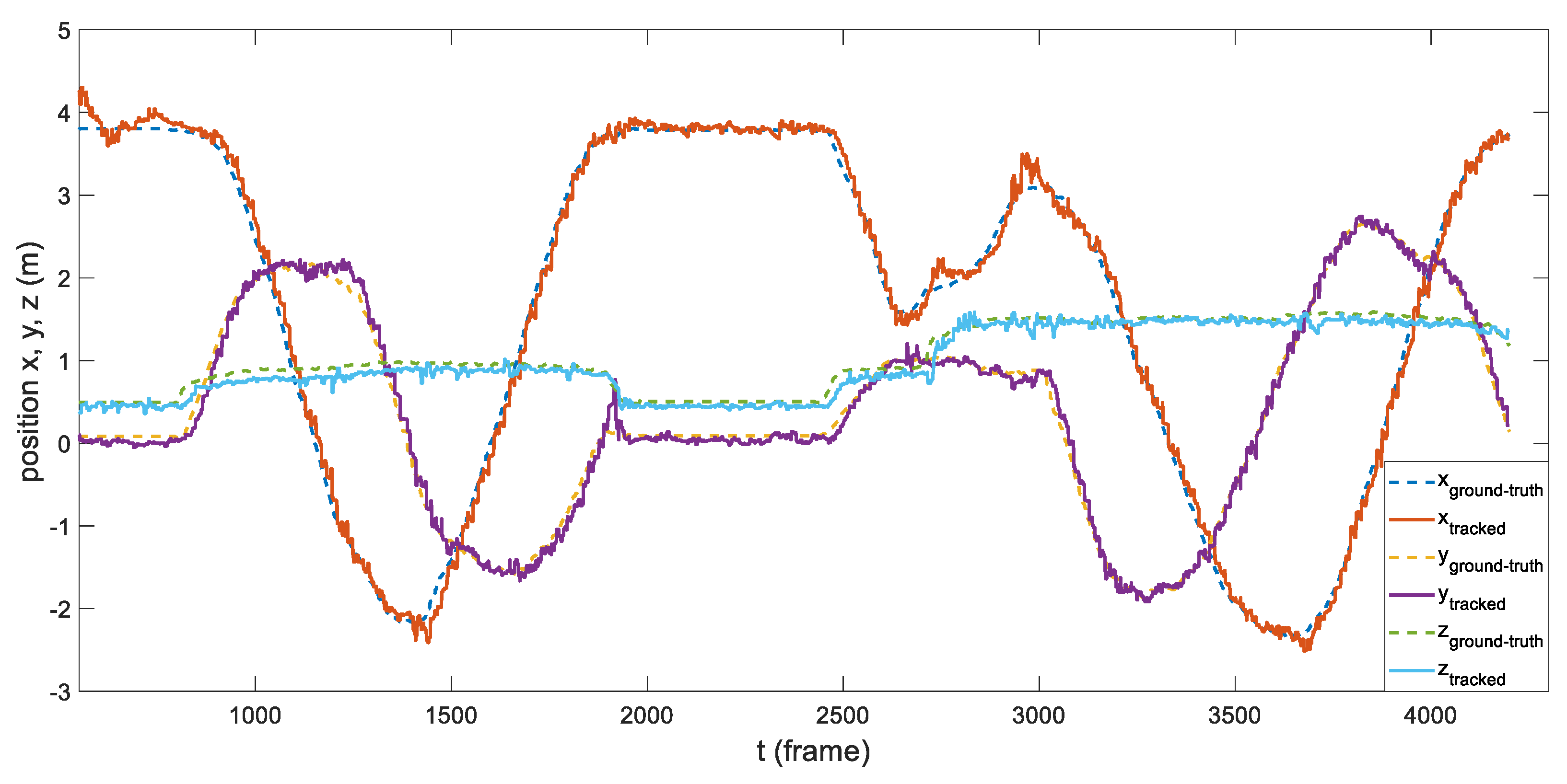

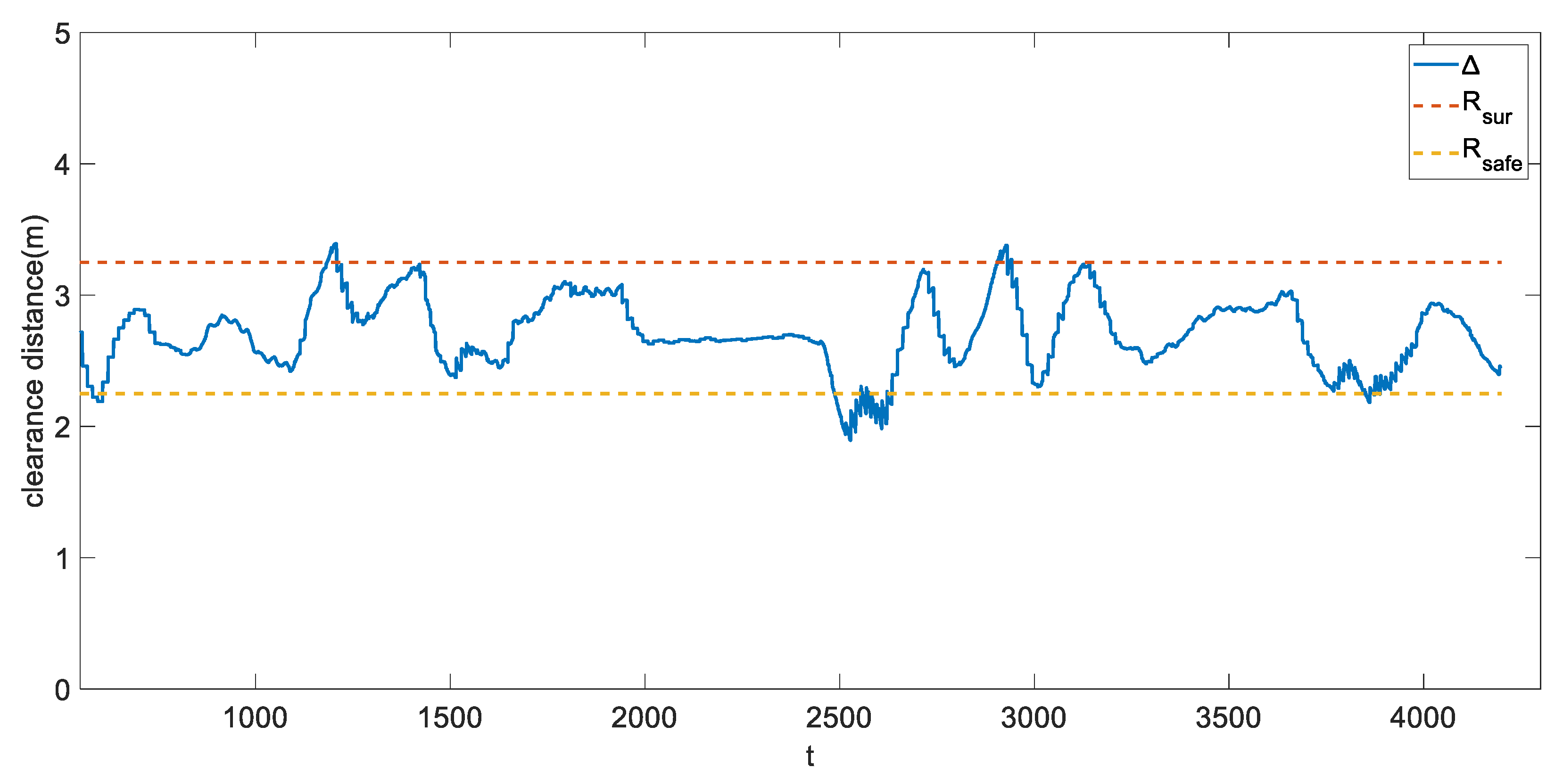

6.3. Flight Experiment in Indoor Environment Aided with External Localization

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | Backbone | Size | mAP@0.50 (AP50) | FPS |
|---|---|---|---|---|
| YOLOv4-Tiny | CSPDarknet-53-tiny | 320 × 320 | 74.85% | 16.63 |
| YOLOv4-Tiny | CSPDarknet-53-tiny | 416 × 416 | 77.21% | 16.19 |
| YOLOv4-Tiny | CSPDarknet-53-tiny | 512 × 512 | 79.36% | 16.31 |
| YOLOv4-Tiny | CSPDarknet-53-tiny | 608 × 608 | 80.20% | 14.34 |
| YOLOv4 | CSPDarknet-53 | 416 × 416 | 97.09% | 3.16 |
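The mAP@0.50 column counts a detection as correct only when its intersection over union (IoU) with a ground-truth box reaches 0.50. The sketch below shows that matching criterion. It assumes boxes given as `(x_min, y_min, x_max, y_max)` in pixels, and the function names are hypothetical. It does not reproduce the full average-precision computation.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap extent along each axis, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(predicted, ground_truth, threshold=0.5):
    """A prediction counts toward AP50 when IoU >= 0.5."""
    return iou(predicted, ground_truth) >= threshold


# A box shifted by half its width overlaps only one third of the union.
print(is_true_positive((0, 0, 10, 10), (5, 0, 15, 10)))
```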

| Backbone | Size | mAP@0.50 (AP50) with Negative Images | mAP@0.50 (AP50) without Negative Images |
|---|---|---|---|
| CSPDarknet-53-tiny | 320 × 320 | 74.85% | 59.97% |
| CSPDarknet-53-tiny | 416 × 416 | 77.21% | 62.87% |
| CSPDarknet-53-tiny | 512 × 512 | 79.36% | 66.08% |
| CSPDarknet-53-tiny | 608 × 608 | 80.20% | 66.40% |

| Experiments | No. of Consecutive Frames | Camera Ego-Motion | RMSE (Pixels) |
|---|---|---|---|
| Trial 1 | 487 | Static | 14.30 |
| Trial 2 | 356 | Static | 8.09 |
| Trial 3 | 220 | Static | 9.37 |
| Trial 4 | 705 | Dynamic | 17.88 |
| Trial 5 | 466 | Dynamic | 16.21 |
| Trial 6 | 533 | Dynamic | 18.47 |
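The RMSE values above are reported in pixels over consecutive frames. The sketch below shows one way such a figure could be computed, assuming the error is the Euclidean distance between the tracked and ground-truth box centers in each frame. This section does not state the exact error definition, and the function name `center_rmse` is hypothetical.

```python
import math


def center_rmse(predicted, ground_truth):
    """Root-mean-square center error in pixels over a frame sequence.

    predicted, ground_truth -- equal-length sequences of (x, y) centers.
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("sequences must be non-empty and of equal length")
    squared_errors = [
        (px - gx) ** 2 + (py - gy) ** 2
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Two frames: one with a 5-pixel center error, one with no error.
print(center_rmse([(3.0, 4.0), (0.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)]))
```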

| Parameters | Value |
|---|---|
| | 0.15 |
| | 0.2 rad/s |
| | 0.4 m/s |
| | 0.4 m/s |
| | 2.25 m |
| | 3.25 m |

| Error Evaluation | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| | 0.1322 | 0.1072 | 0.0896 |
| | 0.1033 | 0.0812 | 0.0728 |

| Error Evaluation | X (m) at 1–3 m | Y (m) at 1–3 m | Z (m) at 1–3 m | X (m) at 8–10 m | Y (m) at 8–10 m | Z (m) at 8–10 m |
|---|---|---|---|---|---|---|
| | 0.1322 | 0.1072 | 0.0896 | 0.1850 | 0.1286 | 0.1116 |
| | 0.1033 | 0.0812 | 0.0728 | 0.1172 | 0.1019 | 0.1002 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lo, L.-Y.; Yiu, C.H.; Tang, Y.; Yang, A.-S.; Li, B.; Wen, C.-Y. Dynamic Object Tracking on Autonomous UAV System for Surveillance Applications. Sensors 2021, 21, 7888. https://doi.org/10.3390/s21237888