Abstract

To obtain more salient target information and richer texture features, a new fusion method for infrared (IR) and visible (VIS) images that combines regional energy (RE) and intuitionistic fuzzy sets (IFS) is proposed. The method proceeds in the following steps. First, the IR and VIS images are decomposed into low- and high-frequency sub-bands by the non-subsampled shearlet transform (NSST). Second, an RE-based fusion rule is used to obtain a low-frequency pre-fusion image, which preserves the important target information in the resulting image. Based on the pre-fusion image, an IFS-based fusion rule is then introduced to obtain the final low-frequency image, which transfers more of the important texture information to the resulting image. Third, the ‘max-absolute’ fusion rule is adopted to fuse the high-frequency sub-bands. Finally, the fused image is reconstructed by the inverse NSST. The TNO and RoadScene datasets are used to evaluate the proposed method. The simulation results demonstrate that the fused images of the proposed method have more salient targets, higher contrast, and richer detail and local features. Qualitative and quantitative analyses show that the presented method outperforms nine other state-of-the-art fusion methods.

1. Introduction

Infrared (IR) and visible (VIS) image fusion synthesizes multiple images into one comprehensive image. It can be applied in face recognition [1], target detection [2], image enhancement [3], medicine [4], remote sensing [5], and other fields. The source images used in image fusion come from different sensors. The IR sensor captures the thermal radiation emitted by objects. IR images have low spatial resolution, little background information, and poor imaging quality, but they have high-contrast pixel intensities. In contrast, VIS images provide abundant background, rich texture detail, and high spatial resolution. Hence, effectively fusing the two types of images provides more useful information and better visual effects, which benefits subsequent processing [6,7].

The choice of fusion rule is crucial because it determines the fusion quality. The essence of image fusion is to select the valuable pixels of multiple source images and integrate them into one image. Image fusion can therefore be considered a transfer of image information. This process is a many-to-one mapping that involves strong uncertainty. To address this problem, energy-based fusion strategies are often used to enhance image quality and reduce uncertainty. Zhang [8] presented an RE-based fusion rule for IR and VIS image fusion that preserves more prominent infrared target information. Srivastava [9] proposed a local energy-based method for fusing multi-modal medical images that achieves better fusion performance. Liu [10] presented an average-RE fusion rule for fusing multi-focus and medical images, and the fused images contain more information and edge details. Energy-based fusion strategies take the correlation between neighboring pixels into account. As a result, they can overcome, to some extent, the uncertainty caused by improper pixel selection and improve the quality of the fused image.

Uncertainty and ambiguity are very likely to occur in image fusion, owing to sampling techniques, noise, blurred edges, and so on. Adaptive mechanisms for handling data uncertainty are therefore essential. Fuzzy logic and related techniques have produced many good results in this respect. For example, Versaci et al. proposed a fuzzy geometrical approach to control image uncertainty [11]. Intuitionistic fuzzy sets (IFS) extend fuzzy set (FS) theory. They are described by membership, non-membership, and hesitation degrees, which makes them more flexible and practical than FS for dealing with fuzziness and uncertainty [12]. In recent years, many IFS-related methods have been developed in the field of image fusion. T. Tirupal [13] presented a Sugeno's intuitionistic fuzzy set (SIFS)-based method to fuse multi-modal medical images. The fused images clearly distinguish the edges of soft tissue and blood vessels, which aids clinical diagnosis. C. H. Seng [14] proposed a method based on probabilistic fuzzy logic to fuse through-the-wall radar images. The fused image has higher contrast, which helps improve the target detection rate. Zhang [12] designed a method based on the fractional-order derivative and IFS for multi-focus image fusion, and it avoids artifacts while preserving detailed information. These studies indicate that IFS can address the uncertainty problems in the image fusion process and are therefore well suited to image fusion.

In this paper, we combine RE and IFS to design a new image fusion strategy that improves fused image quality. To better extract detailed features, we use the non-subsampled shearlet transform (NSST) to decompose the IR and VIS images into low- and high-frequency sub-bands. For the high-frequency sub-bands, the ‘max-absolute’ rule is adopted to obtain the fused detail information. For the low-frequency sub-bands, the new fusion strategy is implemented in two steps. First, the RE-based fusion rule is applied to the low-frequency layers to obtain a pre-fusion image, which preserves more target information in the resulting image. Then, IFS is introduced to obtain the final fused low-frequency image, which transfers more texture information to the resulting image. Finally, the inverse NSST reconstructs the fused result. Simulation experiments on public datasets demonstrate that this method outperforms other state-of-the-art fusion methods. The fused images have more stable quality, more salient targets, higher contrast, and richer detail and local features.

2. Related Works

2.1. Basic Principle of NSST

Fusion methods based on multi-scale geometric analysis (MGA) are widely used in IR and VIS image fusion [15,16,17,18,19,20,21,22,23]. MGA tools represent images at different scales and in different directions, which helps extract more detailed information. Among these tools, NSST is regarded as the most popular [24]. Many studies have shown that the fused images of NSST-based methods are better suited to the human visual system. NSST was proposed by K. Guo, G. Easley, et al. [25,26], and its model can be described as follows.

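Assume n = 2. The affine systems with composite dilations are collections of the form [25]:

$$\mathcal{A}_{AB}(\psi) = \left\{ \psi_{j,l,k}(x) = \lvert \det A \rvert^{j/2}\, \psi\left(B^{l} A^{j} x - k\right) : j, l \in \mathbb{Z},\ k \in \mathbb{Z}^{2} \right\} \tag{1}$$

where $\psi \in L^{2}(\mathbb{R}^{2})$, $A$ is a 2 × 2 invertible matrix, and so is $B$, with $\lvert \det B \rvert = 1$. By choosing $\psi$, $A$, and $B$ appropriately, $\mathcal{A}_{AB}(\psi)$ can be made an orthonormal basis or, more generally, a Parseval frame (PF) for $L^{2}(\mathbb{R}^{2})$. Typically, the matrices $B^{l}$ are shear matrices (all eigenvalues equal one), while the matrices $A^{j}$ expand or contract on a proper subspace of $\mathbb{R}^{2}$. These wavelets are of interest in applications because they tend to produce “long, narrow” window functions that are well suited to edge detection.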

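Assume $A = A_{0} = \begin{pmatrix} 4 & 0 \\ 0 & 2 \end{pmatrix}$ and $B = B_{0} = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}$. The shearlet system is then given by Equation (2), where $\psi$ is called a shearlet [26]:

$$\psi_{j,l,k}(x) = 2^{3j/2}\, \psi\left(B_{0}^{l} A_{0}^{j} x - k\right), \quad j, l \in \mathbb{Z},\ k \in \mathbb{Z}^{2} \tag{2}$$

Shearlets can be regarded as a special case of composite wavelets in $L^{2}(\mathbb{R}^{2})$. Their elements range not only over various scales and locations, like wavelets, but also over various orientations [27].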

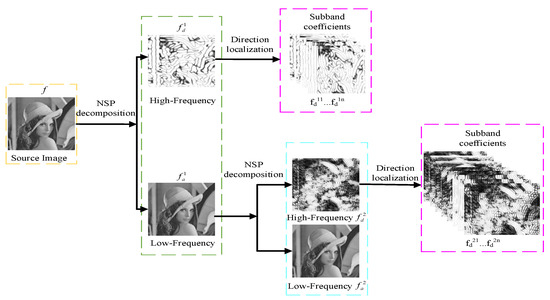

Figure 1 shows a two-level NSST decomposition. A non-subsampled pyramid (NSP) decomposes the source image f into a low-pass image and a band-pass image. The NSP decomposition is then iterated on the low-frequency component obtained at the previous level. Shearlet filter banks are applied to the band-pass images to obtain the directional high-frequency sub-band coefficients.

Figure 1.

NSST decomposition structure with two levels.
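The following is a minimal pure-Python sketch of a two-level undecimated pyramid in the spirit of the NSP stage. It makes the "non-subsampled" idea concrete. It is a simplified stand-in, not the NSST used in this paper:

- It assumes a 3 × 3 box low-pass filter whose taps are spread apart (à trous style) at the second level, instead of the ‘maxflat’ filter.
- It omits the shearlet directional filtering.
- The helper names (`lowpass`, `nsp_decompose`, `nsp_reconstruct`) are hypothetical.

The sketch illustrates two properties: every sub-band keeps the full image size, and summing the final low-pass image and all band-pass images recovers the source image.

```python
from typing import List, Tuple

Image = List[List[float]]

def lowpass(img: Image, dilation: int) -> Image:
    """3x3 box filter whose taps are `dilation` pixels apart (a trous style).
    Borders use replicate padding (coordinates are clamped)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-dilation, 0, dilation):
                for dx in (-dilation, 0, dilation):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += img[yy][xx]
            out[y][x] = acc / 9.0
    return out

def subtract(a: Image, b: Image) -> Image:
    return [[p - q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]

def nsp_decompose(img: Image, levels: int = 2) -> Tuple[Image, List[Image]]:
    """Undecimated pyramid: returns (final low-pass image, band-pass images)."""
    bands: List[Image] = []
    current = img
    for level in range(levels):
        low = lowpass(current, dilation=2 ** level)  # no downsampling
        bands.append(subtract(current, low))         # band-pass detail
        current = low                                # iterate on the low-pass part
    return current, bands

def nsp_reconstruct(low: Image, bands: List[Image]) -> Image:
    """Exact inverse: add all band-pass images back onto the low-pass image."""
    out = [row[:] for row in low]
    for band in bands:
        out = [[p + q for p, q in zip(ro, rb)] for ro, rb in zip(out, band)]
    return out
```

Nothing is downsampled, so the band-pass images line up pixel for pixel with the source. This alignment is what allows pixel-wise fusion rules to be applied directly to the sub-bands.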

2.2. Fuzzy Set Theory

Zadeh proposed fuzzy set (FS) theory in 1965 [27]. In FS theory, uncertain information is quantified by a membership degree in the interval [0,1]. A value of 0 means non-membership, a value of 1 means full membership, and values between 0 and 1 represent partial membership. The membership and non-membership degrees of an element sum to 1.

FS theory is good at representing qualitative knowledge with unclear boundaries, and it plays a vital role in eliminating the vagueness that exists in images [28]. Many studies show that image fusion methods based on FS theory are superior to conventional models. Composite methods that combine FS theory with other representation methods can strictly select reliable pixel information from the source images [29].

In classical set theory, an element either belongs or does not belong to a set. Let $X$ denote the universe, $A \subseteq X$, and $x \in X$. The characteristic function of $A$ is defined as follows [30]:

$$\chi_{A}(x) = \begin{cases} 1, & x \in A \\ 0, & x \notin A \end{cases}$$

In FS theory, the membership function generalizes the characteristic function of classical set theory. Let $A$ denote a fuzzy subset of the universe $X$. Its membership function is defined as follows [30]:

$$\mu_{A}: X \to [0,1], \quad x \mapsto \mu_{A}(x) \tag{5}$$

As Equation (5) shows, FS theory is built on the membership function. The membership function is therefore central to fuzzy mathematics.

An image $I$ of size $M \times N$ can be regarded as a fuzzy pixel set, as follows [30]:

$$I = \bigcup_{i=1}^{M} \bigcup_{j=1}^{N} \frac{\mu_{ij}}{x_{ij}} \tag{6}$$

where $x_{ij}$ is the gray value of pixel $(i,j)$ and $\mu_{ij} \in [0,1]$ is its membership degree. The fuzzy characteristic plane $\mu = \{\mu_{ij}\}$ consists of all $\mu_{ij}$, which are computed by a membership degree function. Different membership functions yield different planes $\mu$, so adjusting $\mu$ is a convenient way to obtain different enhancement effects.
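As a small illustration of the fuzzy pixel set, the sketch below maps each gray value to a membership degree, producing a fuzzy characteristic plane. Both membership functions are hypothetical examples chosen for clarity: a linear stretch and a Gaussian bump around the image mean. Neither is the function used later in this paper. Swapping one for the other changes the plane, which is the adjustability described above.

```python
import math
from typing import Callable, List

Image = List[List[float]]

def membership_plane(img: Image, mu: Callable[[float], float]) -> Image:
    """Map every gray value x_ij to its membership degree mu_ij in [0, 1]."""
    return [[mu(x) for x in row] for row in img]

def linear_mu(lo: float, hi: float) -> Callable[[float], float]:
    """Hypothetical membership: linear stretch of [lo, hi] onto [0, 1]."""
    span = (hi - lo) or 1.0
    return lambda x: min(max((x - lo) / span, 0.0), 1.0)

def gaussian_mu(mean: float, sigma: float) -> Callable[[float], float]:
    """Hypothetical membership: Gaussian bump centered on the image mean."""
    return lambda x: math.exp(-((x - mean) ** 2) / (2.0 * sigma ** 2))

# Usage: two different membership planes for the same 2x2 image.
img = [[0, 64], [128, 255]]
pixels = [v for row in img for v in row]
plane_a = membership_plane(img, linear_mu(min(pixels), max(pixels)))
plane_b = membership_plane(img, gaussian_mu(sum(pixels) / len(pixels), 64.0))
```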

3. Proposed Method

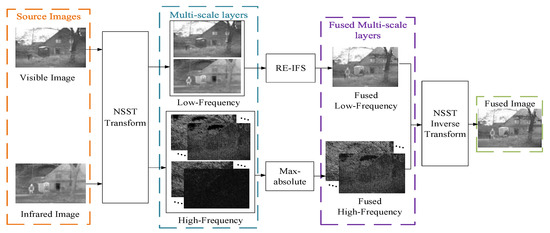

In this section, we propose a new fusion strategy for IR and VIS images: a method combining RE and IFS in the NSST domain (RE-IFS-NSST). Figure 2 illustrates the overall framework of the algorithm. The fusion process consists of four main parts: NSST decomposition, low-frequency sub-band fusion, high-frequency sub-band fusion, and NSST reconstruction.

Figure 2.

The overall framework of the proposed algorithm.

3.1. NSST Decomposition

In this paper, NSST is used to decompose the source images. The IR and VIS images are decomposed by NSST into low- and high-frequency sub-bands according to Equations (7) and (8):

$$\left\{ L_{IR},\ H_{IR}^{j,k} \right\} = \mathrm{NSST}(IR) \tag{7}$$

$$\left\{ L_{VIS},\ H_{VIS}^{j,k} \right\} = \mathrm{NSST}(VIS) \tag{8}$$

where $L_{IR}$ and $L_{VIS}$ are the low-frequency sub-bands of the IR and VIS images, respectively; $H_{IR}^{j,k}$ and $H_{VIS}^{j,k}$ are the high-frequency sub-bands of the IR and VIS images at level $j$ and direction $k$, respectively; and $\mathrm{NSST}(\cdot)$ denotes the NSST decomposition of the input image.

3.2. The Rule for Low-Frequency Components

The low-frequency components contain most of the image energy, such as contour and background information [31]. In this paper, RE and IFS are used to construct the fusion rule for the low-frequency components. The method consists of two steps: (1) pre-fusion based on RE; (2) final fusion based on IFS.

In the low-frequency sub-bands of IR images, salient targets are usually located in regions with large energy. The RE-based fusion rule transfers the energy information of the IR image to the fused image and therefore extracts target information well. We therefore first adopt the RE-based fusion rule to obtain the pre-fusion image. Then, based on the pre-fusion image, we adopt the IFS-based method to obtain the final result. IFS describes membership, non-membership, and hesitation degrees simultaneously. According to the membership degree, the pixels of the source images can be classified simply and effectively into target and background information. The IFS-based method thus transfers the texture information of the VIS images to the resulting image.

- (1)

- The pre-fusion based on RE

$L_{IR}$ and $L_{VIS}$ denote the low-frequency components of the IR and VIS images, respectively. They are first fused with the RE-based fusion rule to obtain the pre-fused low-frequency image. The RE is calculated as follows [32]:

$$E_{s}(x,y) = \sum_{(m,n) \in \Omega} W(m,n) \left[ L_{s}(x+m,\, y+n) \right]^{2} \tag{9}$$

where $E_{s}(x,y)$ is the energy of the region centered on point $(x,y)$ and $s \in \{IR, VIS\}$; $\Omega$ is the neighborhood window centered on $(x,y)$; $L_{s}(x+m, y+n)$ is the low-frequency coefficient at point $(x+m, y+n)$; and $W(m,n)$ is the value of the mask window at $(m,n)$. The window function $W$ is given by Equation (10) [32]:

Based on RE, the low-frequency images of IR and VIS are fused by the weighted-average RE rule. The weights and the pre-fusion image are given in Equations (11)–(13).

where $\omega_{IR}$ and $\omega_{VIS}$ are the fusion weights, and $f$ is the pre-fusion image.
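The RE-based pre-fusion can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code:

- The text does not state the window size, so the sketch assumes a common 3 × 3 weighting window W = (1/16)[1 2 1; 2 4 2; 1 2 1].
- It uses replicate padding at the borders.
- It takes per-pixel weights ω_IR = E_IR / (E_IR + E_VIS) and ω_VIS = 1 − ω_IR as one plausible reading of the weighted-average RE rule.

```python
from typing import List

Image = List[List[float]]

# Hypothetical 3x3 weighting window (sums to 1); the paper's Equation (10) may differ.
W = [[1 / 16, 2 / 16, 1 / 16],
     [2 / 16, 4 / 16, 2 / 16],
     [1 / 16, 2 / 16, 1 / 16]]

def regional_energy(low: Image) -> Image:
    """E(y, x) = sum over the 3x3 neighbourhood of W(m, n) * L(y+m, x+n)^2,
    using replicate padding at the borders."""
    h, w = len(low), len(low[0])
    energy = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for m in (-1, 0, 1):
                for n in (-1, 0, 1):
                    yy = min(max(y + m, 0), h - 1)
                    xx = min(max(x + n, 0), w - 1)
                    acc += W[m + 1][n + 1] * low[yy][xx] ** 2
            energy[y][x] = acc
    return energy

def re_prefusion(low_ir: Image, low_vis: Image, eps: float = 1e-12) -> Image:
    """Per-pixel weighted average with weights proportional to regional energy.
    `eps` only matters when both energies are zero (then the VIS value is kept)."""
    e_ir, e_vis = regional_energy(low_ir), regional_energy(low_vis)
    fused = []
    for r_ir, r_vis, re_ir, re_vis in zip(low_ir, low_vis, e_ir, e_vis):
        row = []
        for a, b, ea, eb in zip(r_ir, r_vis, re_ir, re_vis):
            w_ir = ea / (ea + eb + eps)              # omega_IR
            row.append(w_ir * a + (1.0 - w_ir) * b)  # omega_VIS = 1 - omega_IR
        fused.append(row)
    return fused
```

With uniform inputs of 2.0 (IR) and 1.0 (VIS), the energies are 4 and 1. The IR weight is then 0.8, and the pre-fused value is 1.8, closer to the higher-energy source.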

- (2)

- The final fusion based on IFS

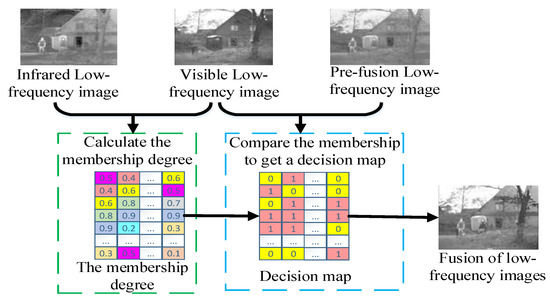

The IFS is introduced to calculate the membership degree of the IR and VIS low-frequency images, and the pre-fusion image is used as the reference to assist the final low-frequency image fusion. Figure 3 shows the low-frequency sub-bands fusion framework.

Figure 3.

The fusion framework of the low-frequency sub-bands.

A Gaussian membership function represents the membership degree of the coefficients. The final low-frequency image is fused according to the membership degrees after defuzzification. The membership and non-membership degrees of $L_{IR}$ are given, respectively, as follows [33]:

where $\bar{L}_{IR}$ is the mean of $L_{IR}$, $\sigma_{IR}$ is its standard deviation, and $k_{1}$ and $k_{2}$ are Gaussian adjustment parameters. The hesitation degree $\pi_{IR}$ is obtained from $\mu_{IR}$ and $\nu_{IR}$ as follows:

$$\pi_{IR}(x,y) = 1 - \mu_{IR}(x,y) - \nu_{IR}(x,y) \tag{16}$$

The difference correction method converts the IFS into an FS. The FS membership degree $\mu'_{IR}$ is calculated as follows [34]:

Similarly, the membership, non-membership, and hesitation degrees of the VIS low-frequency image, as well as its FS membership degree, are calculated according to Equations (14)–(17). The Gaussian adjustment parameters $k_{1}$ and $k_{2}$ are set empirically to 0.8 and 1.2, respectively.
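The relationship between the three IFS degrees can be illustrated with a short sketch. It deliberately swaps in a different, well-known construction for the non-membership: the Sugeno-type generator ν = (1 − μ)/(1 + λμ), the kind used by SIFS-based methods such as [13], with a hypothetical λ = 2. The hesitation degree then follows the standard IFS identity π = 1 − μ − ν. This is not the Gaussian construction of Equations (14)–(17).

```python
from typing import List, Tuple

def sugeno_non_membership(mu: float, lam: float) -> float:
    """Sugeno-type IFS generator nu = (1 - mu) / (1 + lam * mu).
    For lam >= 0 the denominator is >= 1, so mu + nu <= 1 (the IFS condition)."""
    return (1.0 - mu) / (1.0 + lam * mu)

def ifs_triples(memberships: List[float], lam: float = 2.0) -> List[Tuple[float, float, float]]:
    """Return (membership, non-membership, hesitation) for each membership value."""
    out = []
    for mu in memberships:
        nu = sugeno_non_membership(mu, lam)
        pi = 1.0 - mu - nu  # hesitation degree
        out.append((mu, nu, pi))
    return out
```

With λ = 0 the generator reduces to ν = 1 − μ, so π = 0 and the IFS collapses to an ordinary FS. Larger λ leaves more hesitation for intermediate memberships.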

Equations (14)–(17) show that a large gray value corresponds to a small membership value. Therefore, targets have smaller membership values in IR images, whereas background and texture features have smaller membership values in VIS images. The membership degree can thus be used to determine which valuable pixels are integrated into the final image. The pre-fusion image f serves as the reference image. The membership degrees of the IR and VIS low-frequency images are compared to obtain a decision map, from which the final fused low-frequency image $L_{F}$ is generated. The specific fusion rule is defined as below:

3.3. The Rule for High-Frequency Components

Unlike the low-frequency sub-bands, the high-frequency sub-bands mainly reflect the texture and contour information of the source images. Edges and contours carry important information about the visual structure of an image. They usually correspond to pixels where the brightness changes sharply. Therefore, we adopt the max-absolute fusion rule to fuse the high-frequency sub-bands and retain rich texture information. The fusion rule can be described as follows:

$$H_{F}^{j,k}(x,y) = \begin{cases} H_{IR}^{j,k}(x,y), & \left| H_{IR}^{j,k}(x,y) \right| \ge \left| H_{VIS}^{j,k}(x,y) \right| \\ H_{VIS}^{j,k}(x,y), & \text{otherwise} \end{cases}$$

where $H_{F}^{j,k}$ is the fused high-frequency sub-band at level $j$ and direction $k$.
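The max-absolute rule reduces to a per-coefficient comparison. The sketch below applies it to one pair of sub-bands stored as nested lists. In the full method it would be applied to every level j and direction k. Ties are resolved in favor of the IR coefficient, an arbitrary choice in this sketch.

```python
from typing import List

Band = List[List[float]]

def max_abs_fuse(h_ir: Band, h_vis: Band) -> Band:
    """Keep, at each position, the coefficient with the larger magnitude (ties -> IR)."""
    return [[a if abs(a) >= abs(b) else b for a, b in zip(ra, rb)]
            for ra, rb in zip(h_ir, h_vis)]

fused = max_abs_fuse([[1.0, -5.0], [0.2, 3.0]], [[-2.0, 4.0], [0.1, -3.0]])
```

The sign of the selected coefficient is preserved. This matters because edges are represented by signed detail coefficients.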

3.4. NSST Reconstruction

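The fused image is reconstructed by the inverse NSST according to Equation (20):

$$F = \mathrm{NSST}^{-1}\left( L_{F},\ H_{F}^{j,k} \right) \tag{20}$$

where $\mathrm{NSST}^{-1}(\cdot)$ denotes the inverse NSST, $L_{F}$ and $H_{F}^{j,k}$ are the fused low- and high-frequency sub-bands, and $F$ is the output image.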

The proposed RE-IFS-NSST method is summarized in Algorithm 1.

| Algorithm 1. The proposed RE-IFS-NSST fusion algorithm. |
|---|
| Input: Infrared image (IR), visible image (VIS) |
| Output: Fused image (F) |

4. Experimental Results

4.1. Datasets

To test the effectiveness of the proposed method, experiments are conducted on two public datasets widely used in IR and VIS image fusion: the TNO Image Fusion Dataset and the RoadScene Dataset. We chose 6 pairs of IR and VIS images from the TNO dataset and 5 pairs from the RoadScene dataset. All image pairs can be downloaded from: https://github.com (accessed on 15 July 2021).

4.2. Experimental Setting

To test the practicability and effectiveness of the proposed method, we set up two groups of experiments. The first group compares the proposed method with the RE-NSST and IFS-NSST methods. The second group compares it with nine other state-of-the-art fusion methods: FPDE [35] (fourth-order partial differential equations), VSM [36] (visual saliency map), Bala [37] (Bala fuzzy sets), Gauss [34] (Gauss fuzzy sets), DRTV [38] (different resolutions via total variation model), LATLRR [39] (latent low-rank representation), SR [40] (sparse regularization), MDLatLRR [41] (decomposition method based on latent low-rank representation), and RFN-Nest [42] (residual fusion network).

The experimental parameters are set as follows:

- (1)

- The computer is equipped with a 2.6 GHz Intel Core CPU and 4 GB of memory, and all experiments are run on the MATLAB 2017 platform.

- (2)

- In the proposed method, ‘maxflat’ is chosen as the pyramid filter. The number of decomposition levels is 3, and the numbers of directions at the three levels are {16, 16, 16}.

- (3)

- In the RE-NSST and IFS-NSST methods, the NSST parameters are the same as in the proposed method, and RE and IFS are calculated in the same way as in the proposed method.

- (4)

- In the Bala and Gauss methods, ‘9-7’ and ‘pkva’ are chosen as the pyramid filter and the directional filter, respectively, and the decomposition scale is 3.

- (5)

- In the MDLatLRR method, the decomposition level is set to 2.

- (6)

- All other parameters of the nine compared methods follow the best settings reported in the corresponding papers.

4.3. Quantitative Evaluation

In this section, six quantitative evaluation indexes are introduced to evaluate the performance of each algorithm objectively: E, AG, MI, CE, SPD, and PSNR. E and AG are no-reference indexes, whereas MI, CE, SPD, and PSNR are reference-based indexes. MI and CE use the IR and VIS images as references to calculate the similarity and difference between the source images and the fused image. SPD and PSNR use the VIS image as the reference to measure how far the fused image deviates from it. To evaluate fusion performance comprehensively from different aspects, we employ both reference-based and no-reference indexes in this study.

- (1)

- Entropy (E) [43]

E describes the average amount of information contained in an image (here, the fused image) and is calculated using Equation (21):

$$E = -\sum_{i=0}^{L-1} p_{i} \log_{2} p_{i} \tag{21}$$

where $L$ is the total number of gray levels and $p_{i}$ is the probability of gray value $i$.
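A minimal sketch of Equation (21) follows. It assumes an integer-valued grayscale image stored as nested lists, a representation chosen here for illustration. Gray levels that never occur are skipped, following the usual convention 0 · log 0 = 0.

```python
import math
from collections import Counter
from typing import List

def entropy(img: List[List[int]]) -> float:
    """Shannon entropy (bits) of the gray-level histogram: -sum p_i log2 p_i."""
    counts = Counter(v for row in img for v in row)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

A constant image has zero entropy. An image whose four pixels take four distinct gray levels has 2 bits.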

- (2)

- Average Gradient (AG) [43]

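AG reflects the contrast of micro-details and the variation of texture features in the fused image. It can be expressed as:

$$AG = \frac{1}{(M-1)(N-1)} \sum_{i=1}^{M-1} \sum_{j=1}^{N-1} \sqrt{\frac{\Delta F_{x}^{2}(i,j) + \Delta F_{y}^{2}(i,j)}{2}}$$

where $M \times N$ is the size of the fused image $F$, and $\Delta F_{x}$ and $\Delta F_{y}$ are the differences of $F$ in the horizontal and vertical directions, respectively.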
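The sketch below computes the average gradient with forward differences over the (M − 1) × (N − 1) interior grid, which is one common form of the metric. The exact difference scheme used in the experiments is an assumption here.

```python
import math
from typing import List

def average_gradient(img: List[List[float]]) -> float:
    """Mean of sqrt((dFx^2 + dFy^2) / 2) over the (M-1) x (N-1) grid,
    using forward differences in the horizontal (dx) and vertical (dy) directions."""
    m, n = len(img), len(img[0])
    total = 0.0
    for i in range(m - 1):
        for j in range(n - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((m - 1) * (n - 1))
```

A horizontal ramp with unit steps gives sqrt(0.5) ≈ 0.707, and a constant image gives 0.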

- (3)

- Mutual Information (MI) [44]

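MI reflects the amount of information transferred from the source images to the fused image and is calculated as follows:

$$MI_{X,F} = \sum_{x} \sum_{f} p_{X,F}(x,f) \log_{2} \frac{p_{X,F}(x,f)}{p_{X}(x)\, p_{F}(f)}, \quad X \in \{IR, VIS\}$$

$$MI = MI_{IR,F} + MI_{VIS,F}$$

where $p_{X}$ and $p_{F}$ represent the gray-level distributions of the source image $X$ and the fused image $F$, respectively, and $p_{X,F}$ is their joint probability distribution. The sum of $MI_{IR,F}$ and $MI_{VIS,F}$ gives the mutual information value.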
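The sketch below estimates MI from the joint and marginal gray-level histograms of integer images and sums the IR and VIS terms, as described above. Using one histogram bin per gray level is an assumption of this sketch.

```python
import math
from collections import Counter
from typing import List

Image = List[List[int]]

def mutual_information(src: Image, fused: Image) -> float:
    """MI(X, F) = sum p(x,f) log2( p(x,f) / (p(x) p(f)) ), from gray-level histograms."""
    pairs = Counter((a, b) for ra, rb in zip(src, fused) for a, b in zip(ra, rb))
    n = sum(pairs.values())
    px: Counter = Counter()
    pf: Counter = Counter()
    for (a, b), c in pairs.items():  # marginals from the joint histogram
        px[a] += c
        pf[b] += c
    return sum((c / n) * math.log2((c / n) / ((px[a] / n) * (pf[b] / n)))
               for (a, b), c in pairs.items())

def fusion_mi(ir: Image, vis: Image, fused: Image) -> float:
    """Total MI as the sum of MI(IR, F) and MI(VIS, F)."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)
```

An image shares all of its information with itself, so MI(X, X) equals its entropy. Statistically independent images give 0.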

- (4)

- Cross Entropy (CE) [44]

CE reflects the difference between the gray-level distributions of the fused image and the source images and is defined as below:

where $L$ is the number of gray levels, and $p_{IR}(i)$, $p_{VIS}(i)$, and $p_{F}(i)$ are the probabilities of a pixel with gray value $i$ appearing in the IR, VIS, and fused images, respectively.
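The sketch below assumes the common per-source form CE(X, F) = Σ p_X(i) log2(p_X(i) / p_F(i)). It combines the IR and VIS terms by averaging; some papers use a root-mean-square combination instead. The small `eps` guarding empty histogram bins of the fused image is also an assumption.

```python
import math
from collections import Counter
from typing import List

Image = List[List[int]]

def histogram(img: Image, levels: int = 256) -> List[float]:
    """Normalized gray-level histogram p(i), i = 0 .. levels-1."""
    counts = Counter(v for row in img for v in row)
    total = sum(counts.values())
    return [counts.get(i, 0) / total for i in range(levels)]

def cross_entropy(p_src: List[float], p_fused: List[float], eps: float = 1e-12) -> float:
    """CE(X, F) = sum_i p_X(i) log2(p_X(i) / p_F(i)); bins with p_X(i) = 0 contribute 0."""
    return sum(p * math.log2(p / (q + eps)) for p, q in zip(p_src, p_fused) if p > 0)

def fusion_ce(ir: Image, vis: Image, fused: Image, levels: int = 256) -> float:
    """Assumed combination: the mean of CE(IR, F) and CE(VIS, F)."""
    p_f = histogram(fused, levels)
    return 0.5 * (cross_entropy(histogram(ir, levels), p_f)
                  + cross_entropy(histogram(vis, levels), p_f))
```

Identical distributions give CE ≈ 0, and larger values indicate a greater mismatch, consistent with lower CE being better.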

- (5)

- Spectral Distortion (SPD) [45]

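SPD reflects the degree of spectral distortion between the fused image and the VIS image; its expression is shown in Equation (28):

$$SPD = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left| F(i,j) - V(i,j) \right| \tag{28}$$

where $F(i,j)$ and $V(i,j)$ represent the gray values of the fused image and the VIS image at $(i,j)$, respectively, and $M \times N$ is the image size.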
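The following sketch assumes SPD is the mean absolute gray-level difference between the fused and VIS images, a common definition for grayscale images.

```python
from typing import List

def spectral_distortion(fused: List[List[float]], vis: List[List[float]]) -> float:
    """Mean absolute gray-level difference between the fused and VIS images."""
    m, n = len(fused), len(fused[0])
    return sum(abs(f - v) for rf, rv in zip(fused, vis) for f, v in zip(rf, rv)) / (m * n)
```

Lower values mean the fused image stays closer to the VIS image.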

- (6)

- Peak signal to noise ratio (PSNR) [44]

PSNR measures the ratio between the effective information and the noise of an image and indicates whether the image is distorted. It is given as below:

$$PSNR = 10 \log_{10} \frac{r^{2}}{MSE}, \qquad MSE = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( F(i,j) - R(i,j) \right)^{2}$$

where $F(i,j)$ and $R(i,j)$ represent the gray values of the fused image and the source image at $(i,j)$, respectively; MSE is the mean square error, which reflects the degree of difference between the two images; and $r$ is the difference between the maximum and minimum gray values of the source image.
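A minimal sketch of PSNR as described above follows. It takes r as the gray-level range (maximum minus minimum) of the reference source image. Returning infinity when the two images are identical is a convention assumed here.

```python
import math
from typing import List

def psnr(fused: List[List[float]], ref: List[List[float]]) -> float:
    """PSNR = 10 log10(r^2 / MSE), with r the gray-level range of the reference image."""
    m, n = len(ref), len(ref[0])
    mse = sum((f - r) ** 2 for rf, rr in zip(fused, ref) for f, r in zip(rf, rr)) / (m * n)
    if mse == 0:
        return math.inf  # identical images: no noise
    values = [v for row in ref for v in row]
    rng = max(values) - min(values)
    return 10.0 * math.log10(rng * rng / mse)
```

For example, one pixel off by 10 gray levels in a 2 × 2 image with range 255 gives MSE = 25 and PSNR ≈ 34.15 dB.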

4.4. Fusion Results on the TNO Dataset

4.4.1. Comparison with RE-NSST and IFS-NSST Methods

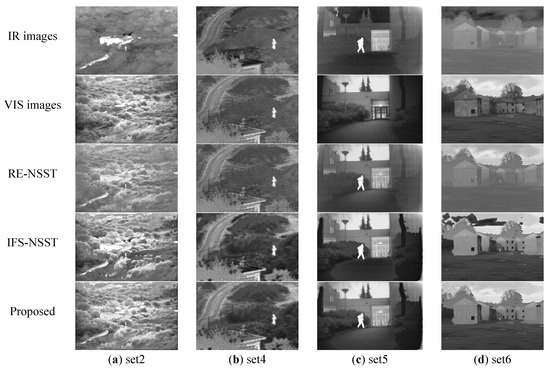

In the first group of simulation experiments, we compare three methods: RE-NSST, IFS-NSST, and the proposed method. The qualitative comparison results are shown in Figure 4.

Figure 4.

Fusion results on the TNO dataset. The first and second rows are the IR and VIS images. The third to fifth rows are the fusion results of the RE-NSST, IFS-NSST, and proposed methods on four sets of source images.

As shown in Figure 4, all three methods can fuse the source images, but the results differ. The RE-NSST method achieves relatively salient targets, but its fused images suffer from low contrast and blurring. The fused images of the IFS-NSST method have higher contrast and more detailed information, but they show false contours and block effects that degrade the visual quality. Compared with the RE-NSST and IFS-NSST methods, the proposed method extracts complete infrared targets and continuous, clear edge details. Its fused images are therefore better suited to human observation.

The quantitative comparison results are shown in Table 1, where red and blue mark the best and second-best results, respectively. For E, AG, MI, and PSNR, larger values indicate better performance; for CE and SPD, smaller values indicate better performance. The proposed method outperforms RE-NSST and IFS-NSST on every metric except AG, which indicates that it has the best overall performance.

Table 1.

Quantitative comparison results of RE-NSST, IFS-NSST, and proposed methods.

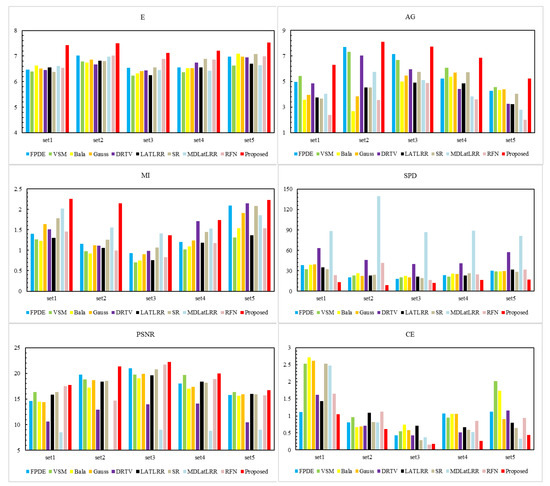

4.4.2. Comparison with the State-of-the-Art Methods

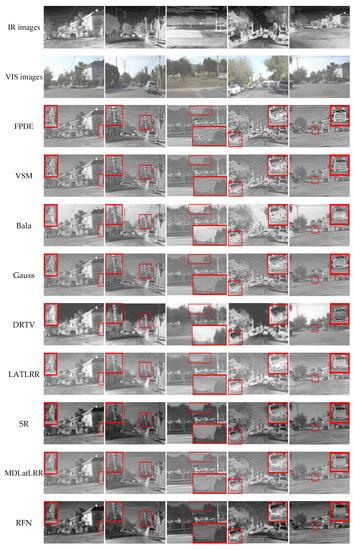

In the second group of experiments, we compare the proposed method with the other state-of-the-art methods. Figure 5 shows the qualitative fusion results on the TNO dataset. The Bala method achieves complete infrared targets, but the background is blurred. The Gauss method preserves more texture features (such as the edges of windows and roads), but its fused images show obvious block effects. FPDE loses detailed edge information (e.g., roads, trees, shrubs, windows, or street lights), which makes scene recognition difficult. DRTV highlights the infrared target information, but the background is blurred and much of the edge information is lost. VSM, LATLRR, MDLatLRR, SR, and RFN achieve relatively rich details, but the brightness of the infrared targets is low. The proposed method performs better than all nine methods. Its fused images have more salient infrared targets and abundant background and detail information, which makes them better suited to the human visual system.

Figure 5.

Results on the TNO dataset. From top to bottom: IR images, VIS images, and the fusion results of FPDE, VSM, Bala, Gauss, DRTV, LATLRR, SR, MDLatLRR, RFN, and the proposed method.

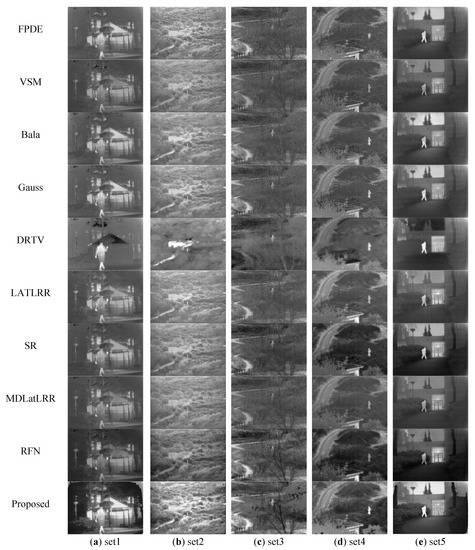

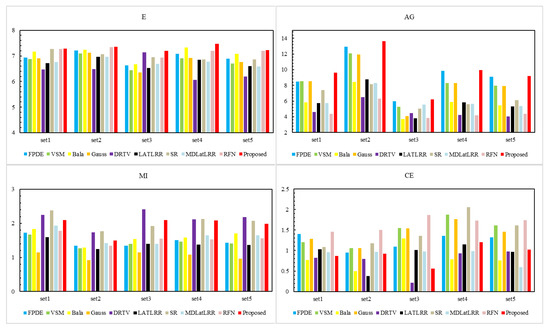

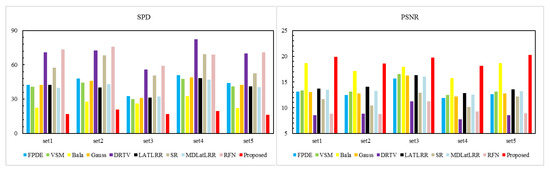

Figure 6 displays the quantitative fusion results of the ten methods. The proposed method achieves the best performance on four objective evaluation metrics (E, AG, SPD, and PSNR) and the second-best performance on the other two (MI and CE). For MI and CE, its gap to the best method is small, and it outperforms all the remaining compared methods.

Figure 6.

Comparison results of six evaluation parameters. The nine methods, i.e., FPDE, VSM, Bala, Gauss, DRTV, LATLRR, SR, MDLatLRR, RFN are compared with the proposed method.

4.4.3. Analysis

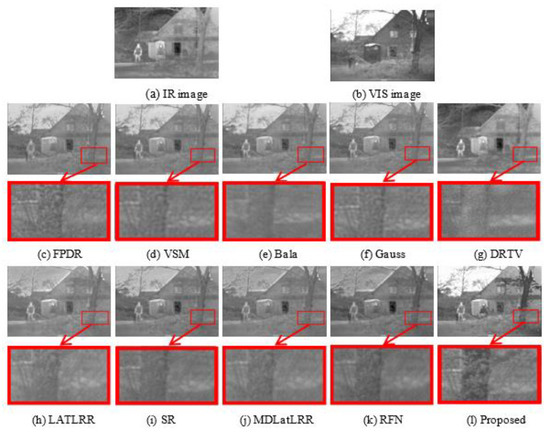

We use the ‘2_Men in front of house’ image pair as an example to further illustrate the superiority of the proposed algorithm. The region inside the red rectangle of each fused image is enlarged by the same magnification factor. The qualitative comparison results of all ten methods are shown in Figure 7.

Figure 7.

Fusion results on the ‘2_Men in front of house’ image. Panels (c–l) are the fusion results of the ten methods.

The magnified details illustrate that the proposed method has the following advantages:

- (1)

- The proposed method transfers more detailed texture features of shrubs and trees to the resulting image.

- (2)

- The proposed method preserves salient infrared target information in the resulting image.

- (3)

- The proposed method can improve the image contrast and brightness.

Table 2 lists the objective evaluation results of the ten methods on the ‘2_Men in front of house’ image. The proposed method obtains the best results on all six metrics. Overall, it performs better than the other compared methods.

Table 2.

Quantitative comparison results of the ten methods on ‘2_Men in front of house’ image.

4.5. Fusion Results on the RoadScene Dataset

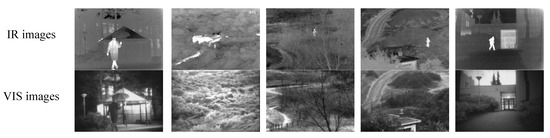

To further evaluate the applicability of the proposed method, we conduct experiments on the RoadScene dataset, whose images contain rich road traffic scenes such as vehicles, pedestrians, and roads. The VIS images in the RoadScene dataset are color images, so they are first converted to grayscale before fusion. We choose five pairs of typical images for the comparison experiments and enlarge the details in the red rectangles of the fused images. The comparison results are shown in Figure 8.

Figure 8.

Results on the RoadScene dataset. From top to bottom: IR images, VIS images, and the fusion results of FPDE, VSM, Bala, Gauss, DRTV, LATLRR, SR, MDLatLRR, RFN, and the proposed method.
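The text does not state which color-to-grayscale conversion was used for the RoadScene VIS images. As an assumption, the sketch below uses the luminance weights documented for MATLAB's `rgb2gray` (0.2989 R + 0.5870 G + 0.1140 B). These weights are a natural choice given the MATLAB platform mentioned in Section 4.2.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]

def rgb_to_gray(img: List[List[RGB]]) -> List[List[float]]:
    """Weighted sum 0.2989 R + 0.5870 G + 0.1140 B for every pixel."""
    return [[0.2989 * r + 0.5870 * g + 0.1140 * b for (r, g, b) in row] for row in img]

gray = rgb_to_gray([[(255, 255, 255), (0, 0, 0)]])
```

The green channel dominates because it contributes most to perceived brightness.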

As Figure 8 shows, the fused images obtained by SR and RFN are darker than the others. The infrared targets of FPDE and VSM are not prominent. DRTV produces salient targets but fails to preserve background texture features. The Bala method loses image detail information. The fused images of LATLRR and MDLatLRR have lower contrast. In contrast, the fused images of the proposed method have abundant detailed texture features and salient infrared targets. The qualitative results show that the proposed method also achieves better fusion performance on the RoadScene dataset.

Figure 9 shows the quantitative results of all fusion methods on the RoadScene dataset. The proposed method performs best on four objective evaluation metrics (E, AG, SPD, and PSNR) and second-best on the other two (MI and CE). Its lower MI and higher CE may occur because it discards some interfering information, such as noise introduced when the RoadScene images were acquired. The similarity between the fused image and the source images is therefore relatively small, resulting in lower MI and higher CE values.

Figure 9.

Quantitative results of six evaluation parameters. The nine methods, i.e., FPDE, VSM, Bala, Gauss, DRTV, LATLRR, SR, MDLatLRR, RFN are compared with the proposed method.

The results on the RoadScene dataset are broadly consistent with those on the TNO dataset. Qualitative and quantitative experimental results show that the proposed method generates fused images with salient targets and abundant details, which are better suited to the human visual system.

4.6. The Computational Complexity Analysis

To analyze computational complexity, we measure the running time of each method when fusing the above image pairs from the TNO and RoadScene datasets. All experiments were performed under the same conditions. The running times are shown in Table 3 and Table 4. The best and second-best running times are displayed in red and blue, respectively, and the results of the proposed method are shown in bold.

Table 3.

Running time T (s) of the ten methods on the TNO dataset.

Table 4.

Running time T (s) of the ten methods on the RoadScene dataset.

According to Table 3 and Table 4, DRTV is the most computationally efficient of all the fusion methods, but its fusion performance is not the most satisfactory. VSM has a low running time on the TNO dataset but not on the RoadScene dataset. LATLRR and MDLatLRR have relatively long running times. The running time of MDLatLRR depends closely on the decomposition level and grows as the number of levels increases. Although the proposed method does not achieve the lowest running time, its fusion quality is superior to that of the other methods. It is also more stable across different datasets.

5. Conclusions

In this paper, we present a new fusion method for IR and VIS images that employs RE and IFS in the NSST domain. With the RE-IFS-NSST fusion strategy, the fused image contains both salient infrared target information and richer texture features. Experiments were conducted on two public datasets, and six evaluation indexes were used to assess the performance of the presented method. Quantitative results show that the proposed method is superior to nine other methods. Compared with the best results of those nine methods, the E, AG, PSNR, and SPD of the proposed method on the TNO dataset are improved by 7.2%, 12.9%, 5.5%, and 92.3%, respectively. On the RoadScene dataset, the same four metrics are improved by 7.4%, 1.5%, 13.5%, and 25.7%. The qualitative results demonstrate that the fused images have better fusion quality and are more consistent with the human visual system. The proposed method has good application prospects in target detection-related fields, such as military applications, medical diagnosis, and target tracking.

Author Contributions

Conceptualization, T.X. and X.X.; methodology, X.X. and C.L. (Cong Luo); software, J.Z.; validation, C.L. (Cheng Liu) and M.Y.; investigation, C.L. (Cheng Liu); writing—original draft preparation, C.L. (Cong Luo); writing—review and editing, C.L. (Cong Luo), J.Z. and X.X.; supervision, T.X.; project administration, X.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Natural Science Foundation of China under [NO: 61805021], and in part by the funds of the Science Technology Department of Jilin Province [NO: 20200401146GX].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hu, W.; Hu, H. Discriminant Deep Feature Learning based on joint supervision Loss and Multi-layer Feature Fusion for heterogeneous face recognition. Comput. Vis. Image Underst. 2019, 184, 9–21.

- Sun, H.; Liu, Q.; Wang, J.; Ren, J.; Wu, Y.; Zhao, H.; Li, H. Fusion of Infrared and Visible Images for Remote Detection of Low-Altitude Slow-Speed Small Targets. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2971–2983.

- Huang, H.; Dong, L.; Xue, Z.; Liu, X.; Hua, C. Fusion algorithm of visible and infrared image based on anisotropic diffusion and image enhancement. PLoS ONE 2021, 16, e0245563.

- Jose, J.; Gautam, N.; Tiwari, M.; Tiwari, T.; Suresh, A.; Sundararaj, V.; Mr, R. An image quality enhancement scheme employing adolescent identity search algorithm in the NSST domain for multimodal medical image fusion. Biomed. Signal Process. Control 2021, 66, 102480.

- Jin, X.; Huang, S.; Jiang, Q.; Lee, S.J.; Wu, L.; Yao, S. Semisupervised Remote Sensing Image Fusion Using Multiscale Conditional Generative Adversarial Network with Siamese Structure. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7066–7084.

- Qi, G.; Chang, L.; Luo, Y.; Chen, Y.; Zhu, Z.; Wang, S. A Precise Multi-Exposure Image Fusion Method Based on Low-level Features. Sensors 2020, 20, 1597.

- Liu, Y.; Dong, L.; Ji, Y.; Xu, W. Infrared and Visible Image Fusion through Details Preservation. Sensors 2019, 19, 4556.

- Zhang, S.; Liu, F. Infrared and visible image fusion based on non-subsampled shearlet transform, regional energy, and co-occurrence filtering. Electron. Lett. 2020, 56, 761–764.

- Srivastava, R.; Prakash, O.; Khare, A. Local energy-based multimodal medical image fusion in curvelet domain. IET Comput. Vis. 2016, 10, 513–527.

- Liu, X.; Zhou, Y.; Wang, J. Image fusion based on shearlet transform and regional features. AEU-Int. J. Electron. Commun. 2014, 68, 471–477.

- Versaci, M.; Calcagno, S.; Morabito, F.C. Fuzzy Geometrical Approach Based on Unit Hyper-Cubes for Image Contrast Enhancement. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 19–21 October 2015; pp. 488–493.

- Zhang, X.F.; Yan, H.; He, H. Multi-focus image fusion based on fractional-order derivative and intuitionistic fuzzy sets. Front. Inf. Technol. Electron. Eng. 2020, 21, 834–843.

- Tirupal, T.; Mohan, B.C.; Kumar, S.S. Multimodal medical image fusion based on Sugeno’s intuitionistic fuzzy sets. ETRI J. 2017, 39, 173–180.

- Seng, C.H.; Bouzerdoum, A.; Amin, M.G.; Phung, S.L. Probabilistic Fuzzy Image Fusion Approach for Radar Through Wall Sensing. IEEE Trans. Image Process. 2013, 22, 4938–4951.

- Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

- Zhou, Z.H.; Tan, M. Infrared Image and Visible Image Fusion Based on Wavelet Transform. Adv. Mater. Res. 2013, 756–759, 2850–2856.

- Kou, L.; Zhang, L.; Zhang, K.; Sun, J.; Han, Q.; Jin, Z. A multi-focus image fusion method via region mosaicking on Laplacian pyramids. PLoS ONE 2018, 13, e0191085.

- Guo, L.; Dai, M.; Zhu, M. Multifocus color image fusion based on quaternion curvelet transform. Opt. Express 2012, 20, 18846–18860.

- Mosavi, M.R.; Bisjerdi, M.H.; Rezai-Rad, G. Optimal Target-Oriented Fusion of Passive Millimeter Wave Images with Visible Images Based on Contourlet Transform. Wirel. Pers. Commun. 2017, 95, 4643–4666.

- Adu, J.; Gan, J.; Wang, Y.; Huang, J. Image fusion based on nonsubsampled contourlet transform for infrared and visible light image. Infrared Phys. Technol. 2013, 61, 94–100.

- Fan, Z.; Bi, D.; Gao, S.; He, L.; Ding, W. Adaptive enhancement for infrared image using shearlet frame. J. Opt. 2016, 18, 085706.

- Guorong, G.; Luping, X.; Dongzhu, F. Multi-focus image fusion based on non-subsampled shearlet transform. IET Image Process. 2013, 7, 633–639.

- El-Hoseny, H.M.; El-Rahman, W.A.; El-Shafai, W.; El-Banby, G.M.; El-Rabaie, E.S.M.; Abd El-Samie, F.E.; Faragallah, O.S.; Mahmoud, K.R. Efficient multi-scale non-sub-sampled shearlet fusion system based on modified central force optimization and contrast enhancement. Infrared Phys. Technol. 2019, 102, 102975.

- Kong, W.; Wang, B.; Lei, Y. Technique for infrared and visible image fusion based on non-subsampled shearlet transform and spiking cortical model. Infrared Phys. Technol. 2015, 71, 87–98.

- Guo, K.; Labate, D. Optimally Sparse Multidimensional Representation Using Shearlets. SIAM J. Math. Anal. 2007, 39, 298–318.

- Easley, G.; Labate, D.; Lim, W.Q. Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmon. Anal. 2008, 25, 25–46.

- Deschrijver, G.; Kerre, E.E. On the relationship between some extensions of fuzzy set theory. Fuzzy Sets Syst. 2003, 133, 227–235.

- Bai, X. Infrared and Visual Image Fusion through Fuzzy Measure and Alternating Operators. Sensors 2015, 15, 17149–17167.

- Saeedi, J.; Faez, K. Infrared and visible image fusion using fuzzy logic and population-based optimization. Appl. Soft Comput. 2012, 12, 1041–1054.

- Pal, S.K.; King, R. Image enhancement using smoothing with fuzzy sets. IEEE Trans. Syst. Man Cybern. 1981, 11, 494–500.

- Selvaraj, A.; Ganesan, P. Infrared and visible image fusion using multi-scale NSCT and rolling-guidance filter. IET Image Process. 2020, 14, 4210–4219.

- Yu, S.; Chen, X. Infrared and Visible Image Fusion Based on a Latent Low-Rank Representation Nested with Multiscale Geometric Transform. IEEE Access 2020, 8, 110214–110226.

- Cai, H.; Zhuo, L.; Zhu, P.; Huang, Z.; Wu, X. Fusion of infrared and visible images based on non-subsampled contourlet transform and intuitionistic fuzzy set. Acta Photon. Sin. 2018, 47, 610002.

- Dai, Z.; Wang, Q. Research on fusion method of visible and infrared image based on PCNN and IFS. J. Optoelectron. Laser 2020, 31, 738–744.

- Bavirisetti, D.P.; Xiao, G.; Liu, G. Multi-Sensor Image Fusion Based on Fourth Order Partial Differential Equations. In Proceedings of the 2017 20th International Conference on Information Fusion (Fusion), Xi’an, China, 10–13 July 2017; pp. 1–9.

- Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys. Technol. 2017, 82, 8–17.

- Jingchao, Z.; Suzhen, L.; Dawei, L.; Lifang, W.; Xiaoli, Y. Comparative Study of Intuitionistic Fuzzy Sets in Multi-band Image Fusion. Infrared Technol. 2018, 40, 881.

- Du, Q.; Xu, H.; Ma, Y.; Huang, J.; Fan, F. Fusing infrared and visible images of different resolutions via total variation model. Sensors 2018, 18, 3827.

- Li, H.; Wu, X.J. Infrared and visible image fusion using latent low-rank representation. arXiv 2018, arXiv:1804.08992.

- Anantrasirichai, N.; Zheng, R.; Selesnick, I.; Achim, A. Image fusion via sparse regularization with non-convex penalties. Pattern Recognit. Lett. 2020, 131, 355–360.

- Li, H.; Wu, X.J.; Kittler, J. MDLatLRR: A novel decomposition method for infrared and visible image fusion. IEEE Trans. Image Process. 2020, 29, 4733–4746.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Xing, X.X.; Liu, C.; Luo, C.; Xu, T. Infrared and visible image fusion based on nonlinear enhancement and NSST decomposition. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 162.

- Yang, Y.C.; Li, J.; Wang, Y.P. Review of image fusion quality evaluation methods. J. Front. Comput. Sci. Technol. 2018, 12, 1021–1035.

- Guo, Q.; Liu, S. Performance analysis of multi-spectral and panchromatic image fusion techniques based on two wavelet discrete approaches. Optik 2011, 122, 811–819.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).