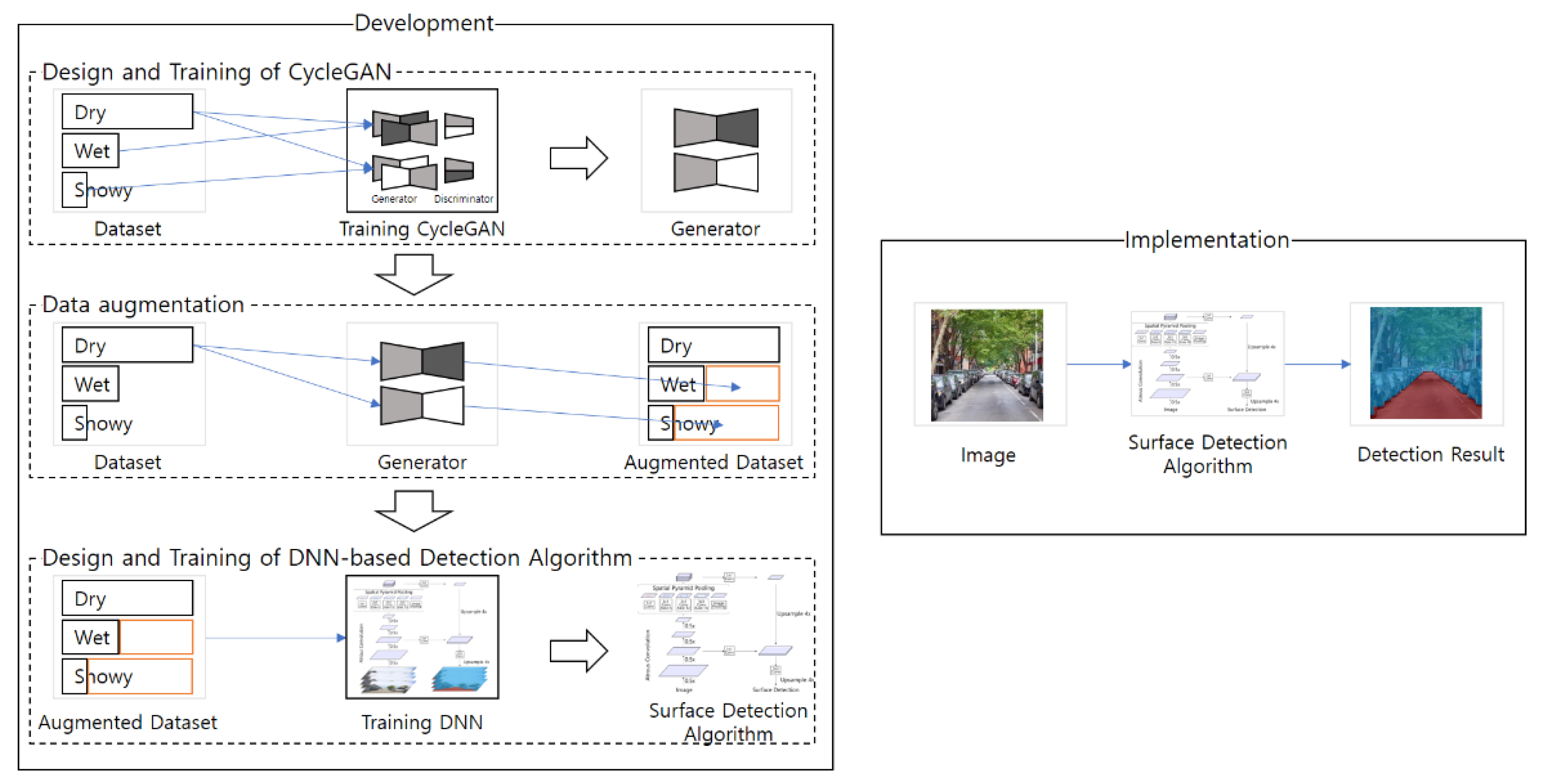

Development of Road Surface Detection Algorithm Using CycleGAN-Augmented Dataset

Abstract

1. Introduction

2. Dataset and Methods

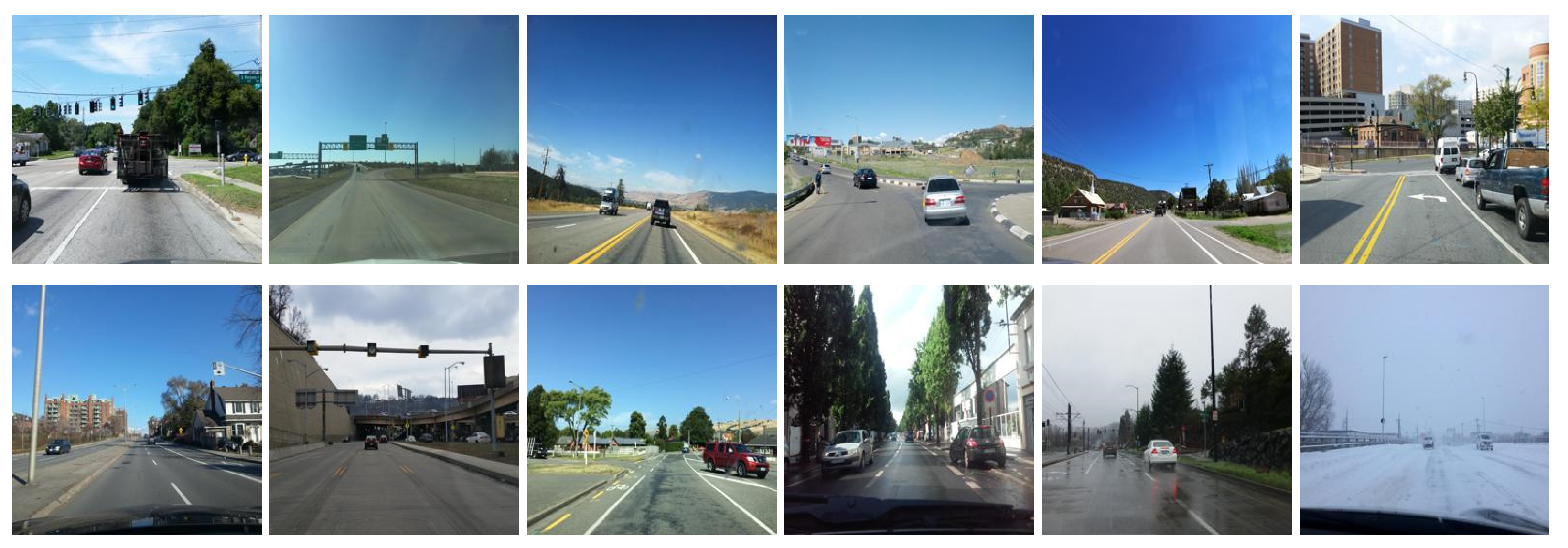

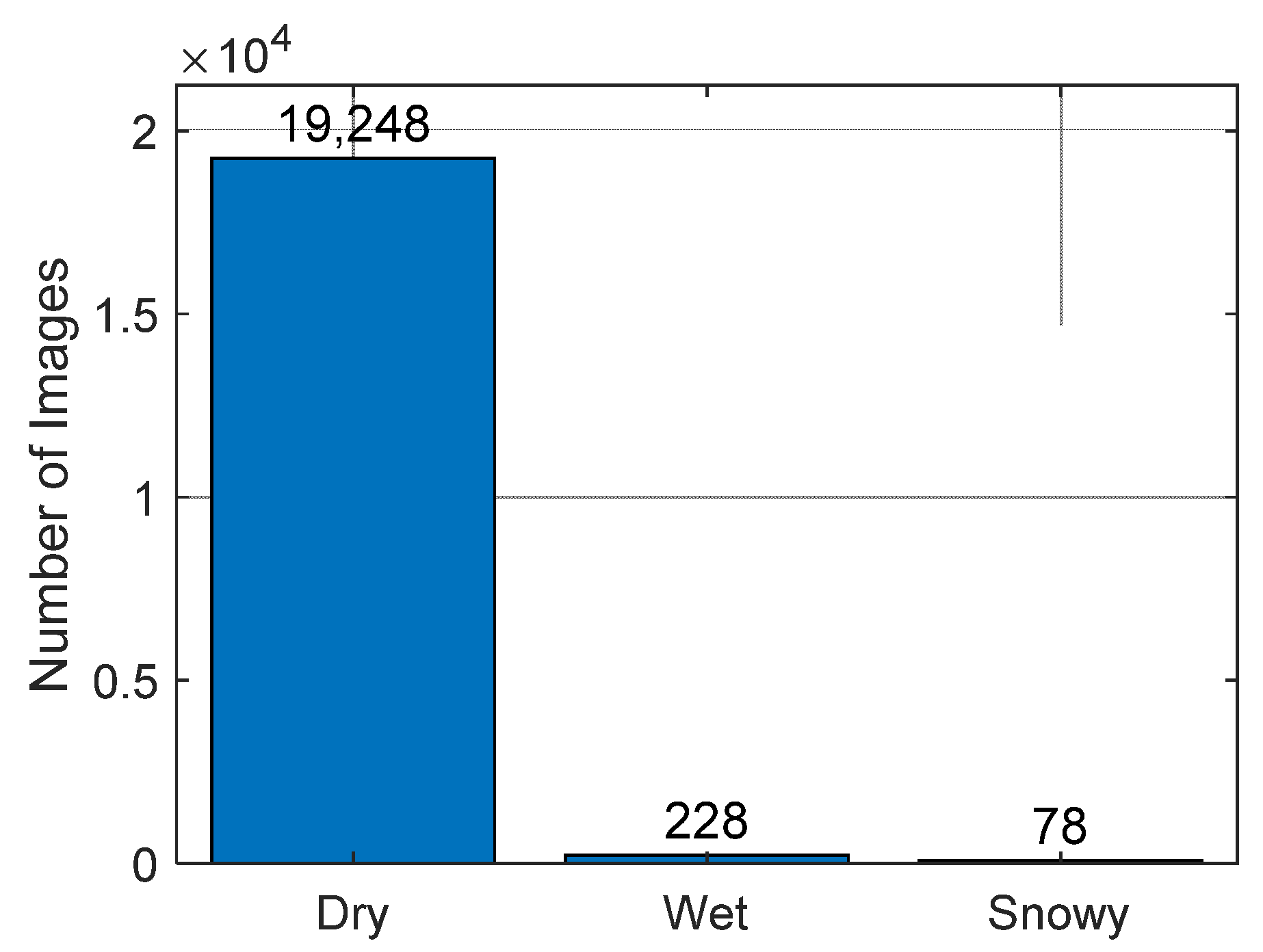

2.1. Base Dataset of Road Images

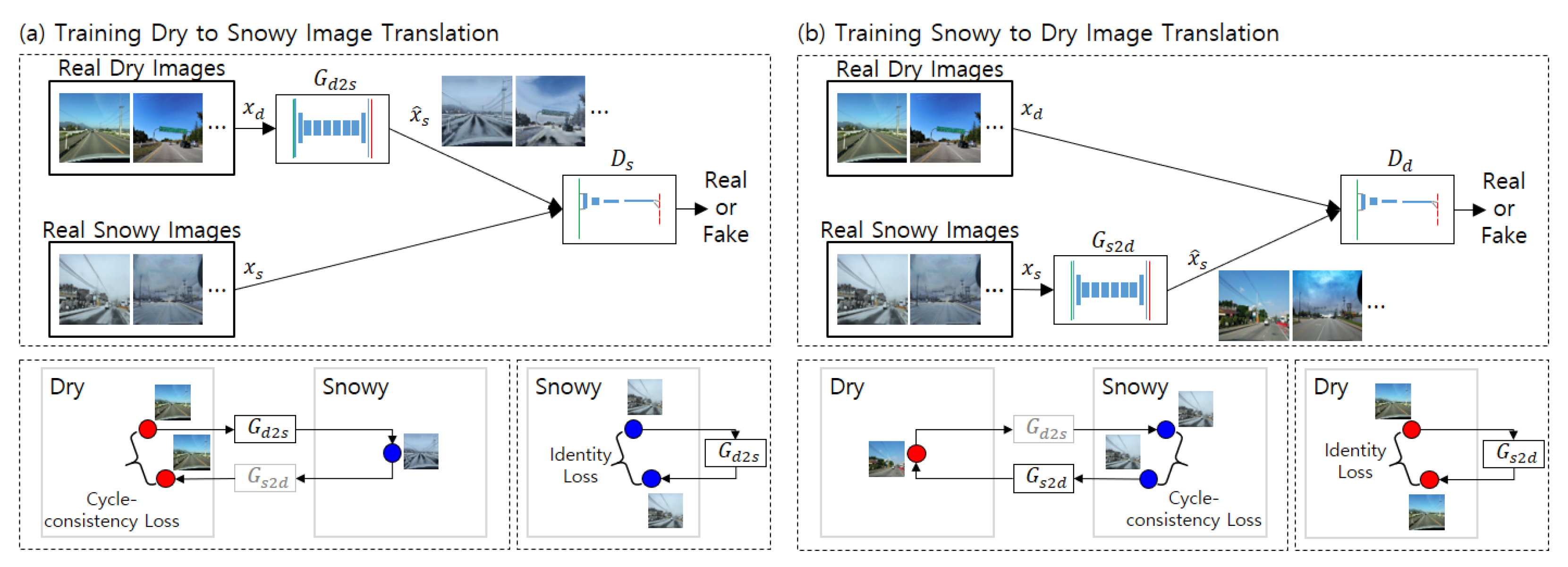

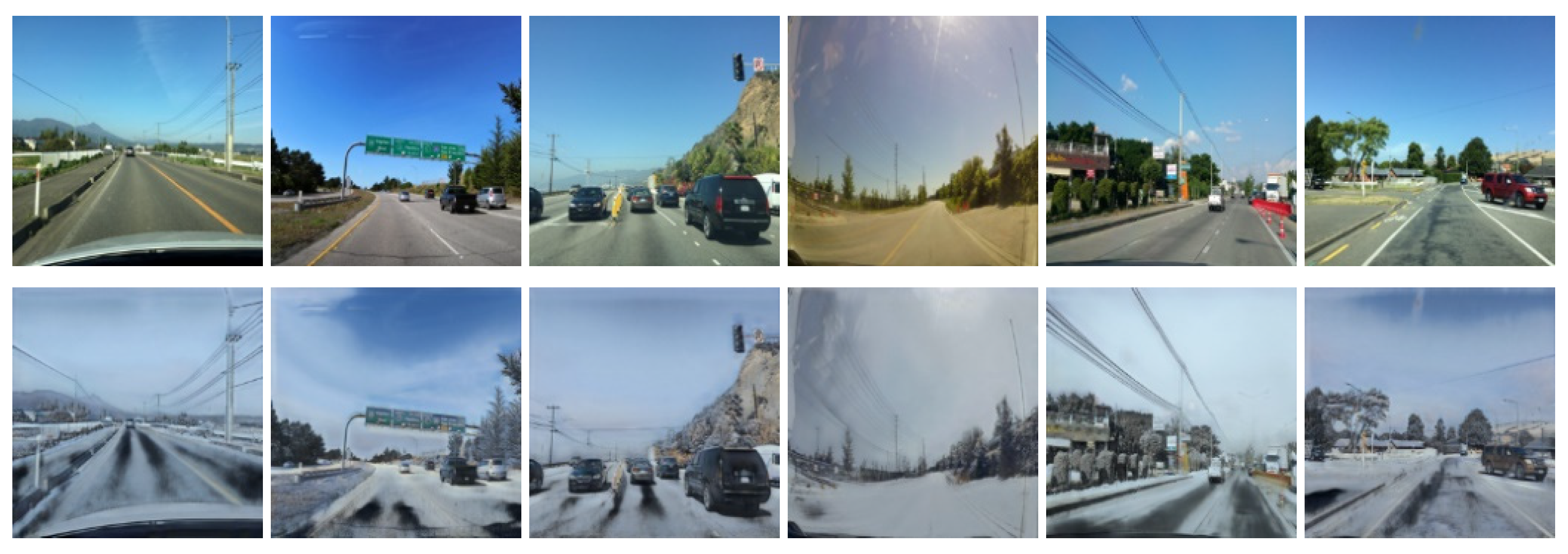

2.2. Data Augmentation by CycleGAN

2.3. Training Datasets for Road Surface Detection

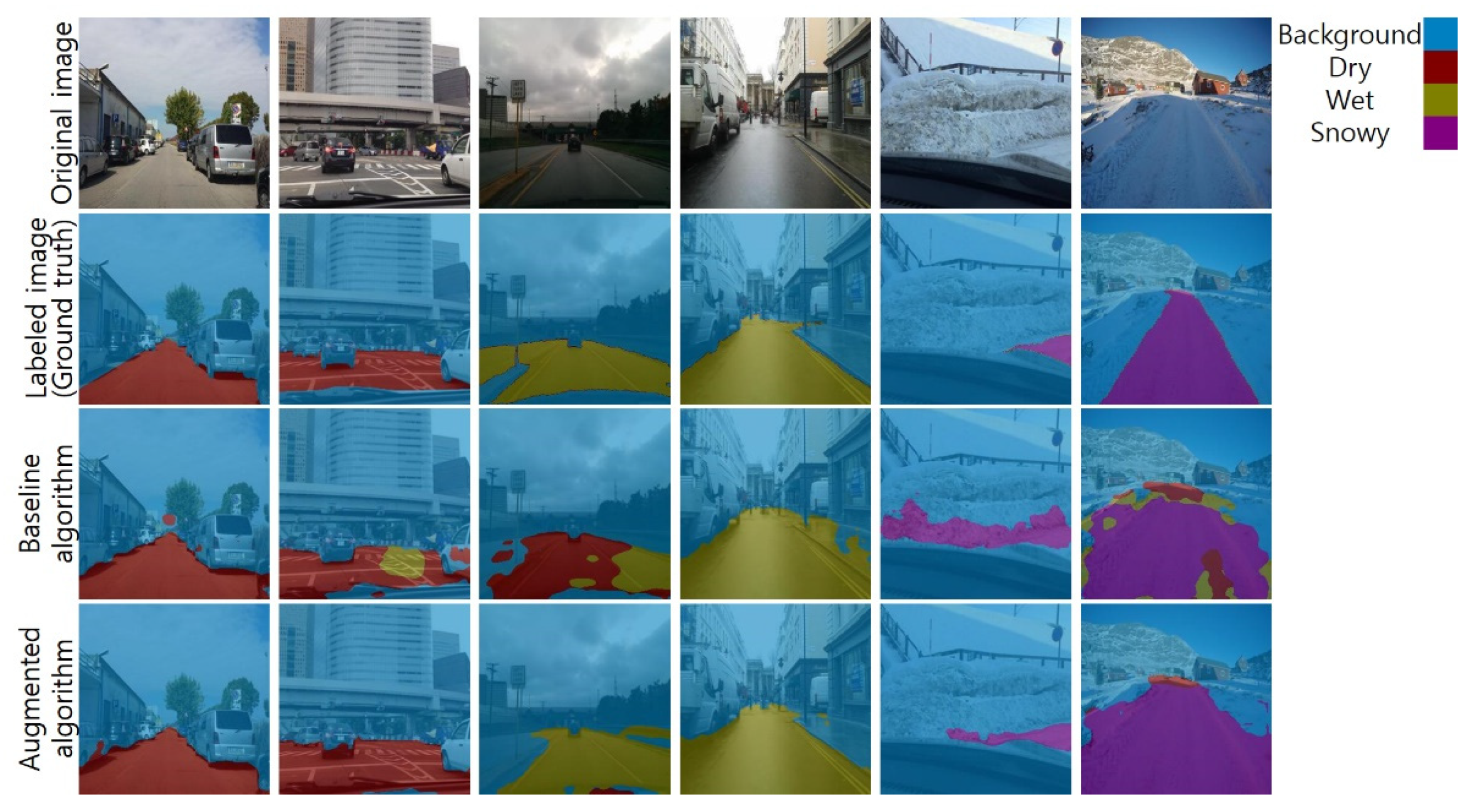

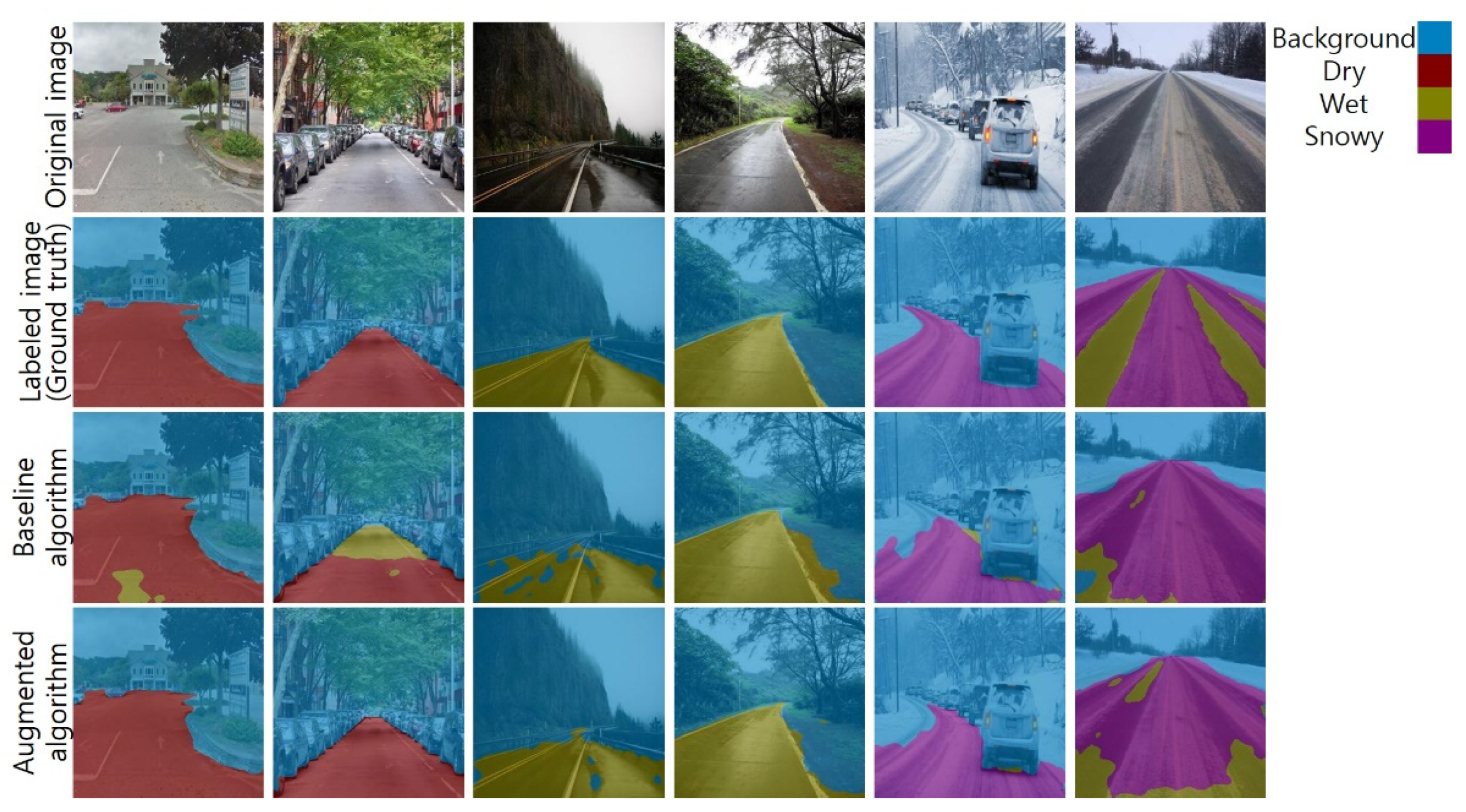

2.4. Detection Algorithm

3. Validation and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Split | Dry | Wet | Snowy | Total |
|---|---|---|---|---|
| Training | 300 | 137 | 47 | 484 |
| Validation | 100 | 46 | 16 | 162 |
| Test | 100 | 45 | 15 | 160 |
| Total | 500 | 228 | 78 | 806 |

| Split | Dry | Wet | Snowy | Total |
|---|---|---|---|---|
| Training | 600 | 737 | 647 | 1984 |
| Validation | 200 | 246 | 216 | 662 |
| Test | 200 | 245 | 215 | 660 |
| Total | 1000 | 1228 | 1078 | 3306 |
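The two count tables above can be cross-checked with a few lines of arithmetic. The tables carry no captions here, so this sketch assumes that the first one describes the base dataset and the second one the CycleGAN-augmented dataset. The names `base`, `augmented`, and the helper functions are illustrative only.

```python
# Image counts per split and class, copied from the two tables above.
# Assumption: the first table is the base dataset, the second the augmented one.
CLASSES = ("dry", "wet", "snowy")

base = {
    "training":   {"dry": 300, "wet": 137, "snowy": 47},
    "validation": {"dry": 100, "wet": 46,  "snowy": 16},
    "test":       {"dry": 100, "wet": 45,  "snowy": 15},
}
augmented = {
    "training":   {"dry": 600, "wet": 737, "snowy": 647},
    "validation": {"dry": 200, "wet": 246, "snowy": 216},
    "test":       {"dry": 200, "wet": 245, "snowy": 215},
}


def class_totals(table):
    """Sum the image counts of each class over all splits."""
    return {c: sum(split[c] for split in table.values()) for c in CLASSES}


def class_shares(table):
    """Fraction of all images that belongs to each class."""
    totals = class_totals(table)
    n_images = sum(totals.values())
    return {c: totals[c] / n_images for c in CLASSES}


def added_images(before, after):
    """Per-class difference between two count tables."""
    totals_before = class_totals(before)
    totals_after = class_totals(after)
    return {c: totals_after[c] - totals_before[c] for c in CLASSES}


if __name__ == "__main__":
    print("base totals:     ", class_totals(base))
    print("augmented totals:", class_totals(augmented))
    print("added per class: ", added_images(base, augmented))
    print("base shares:     ", class_shares(base))
    print("augmented shares:", class_shares(augmented))
```

Under that assumption, the snowy class grows from roughly 10% of all images (78 of 806) to roughly 33% (1078 of 3306), which is the class-balance effect that the second table makes visible.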

| Class | Baseline | Augmented |
|---|---|---|
| Background | 0.2358 | 0.1785 |
| Dry | 0.9805 | 1.5894 |
| Wet | 1.0203 | 1.1374 |
| Snowy | 1.0818 | 0.8922 |

| Class | Baseline Precision | Baseline Recall | Baseline Accuracy | Baseline F1 | Baseline IoU | Augmented Precision | Augmented Recall | Augmented Accuracy | Augmented F1 | Augmented IoU |
|---|---|---|---|---|---|---|---|---|---|---|
| Background | 0.84 | 0.95 | 0.77 | 0.89 | 0.93 | 0.95 | 0.94 | 0.89 | 0.94 | 0.93 |
| Dry | 0.93 | 0.91 | 0.84 | 0.92 | 0.79 | 0.91 | 0.96 | 0.87 | 0.94 | 0.80 |
| Wet | 0.89 | 0.87 | 0.76 | 0.88 | 0.66 | 0.90 | 0.91 | 0.81 | 0.90 | 0.73 |
| Snowy | 0.94 | 0.86 | 0.82 | 0.90 | 0.58 | 0.96 | 0.91 | 0.88 | 0.94 | 0.62 |
| Total | 0.90 | 0.90 | 0.80 | 0.90 | 0.74 | 0.93 | 0.93 | 0.86 | 0.93 | 0.77 |

| Class | Baseline Precision | Baseline Recall | Baseline Accuracy | Baseline F1 | Baseline IoU | Augmented Precision | Augmented Recall | Augmented Accuracy | Augmented F1 | Augmented IoU |
|---|---|---|---|---|---|---|---|---|---|---|
| Background | 0.81 | 0.94 | 0.72 | 0.87 | 0.90 | 0.89 | 0.92 | 0.81 | 0.90 | 0.90 |
| Dry | 0.97 | 0.91 | 0.89 | 0.94 | 0.81 | 0.96 | 0.99 | 0.96 | 0.98 | 0.84 |
| Wet | 0.88 | 0.86 | 0.75 | 0.87 | 0.71 | 0.97 | 0.85 | 0.82 | 0.91 | 0.77 |
| Snowy | 0.90 | 0.82 | 0.74 | 0.86 | 0.70 | 0.87 | 0.92 | 0.79 | 0.90 | 0.72 |
| Total | 0.89 | 0.89 | 0.77 | 0.89 | 0.78 | 0.92 | 0.92 | 0.84 | 0.92 | 0.81 |
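The text here does not state how the metric columns in the two results tables were computed. As a minimal sketch, the functions below use the usual count-based definitions of precision, recall, F1, and IoU for a single class. The function names and the example counts are hypothetical, and the "Accuracy" column is left out because its definition is not given. One relation can be checked against the tables directly: the tabulated F1 values agree, to within rounding, with the harmonic mean of the tabulated precision and recall.

```python
def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Share of actual positives that are found."""
    return tp / (tp + fn)


def f1_score(p, r):
    """Harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r)


def iou(tp, fp, fn):
    """Intersection over union for one class, from its confusion counts."""
    return tp / (tp + fp + fn)


if __name__ == "__main__":
    # Hypothetical counts for one class, not taken from the source.
    tp, fp, fn = 90, 10, 20
    p, r = precision(tp, fp), recall(tp, fn)
    print(f"precision={p:.3f} recall={r:.3f} "
          f"f1={f1_score(p, r):.3f} iou={iou(tp, fp, fn):.3f}")

    # Consistency check against tabulated values (Background, baseline,
    # first results table): precision 0.84 and recall 0.95 give F1 near 0.89.
    print(f"f1 from table row: {f1_score(0.84, 0.95):.3f}")
```

The functions assume non-zero denominators; a class that never appears in either the prediction or the ground truth would need a separate guard.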

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, W.; Heo, J.; Ahn, C. Development of Road Surface Detection Algorithm Using CycleGAN-Augmented Dataset. Sensors 2021, 21, 7769. https://doi.org/10.3390/s21227769
