Distributed Two-Dimensional MUSIC for Joint Range and Angle Estimation with Distributed FMCW MIMO Radars

Abstract

1. Introduction

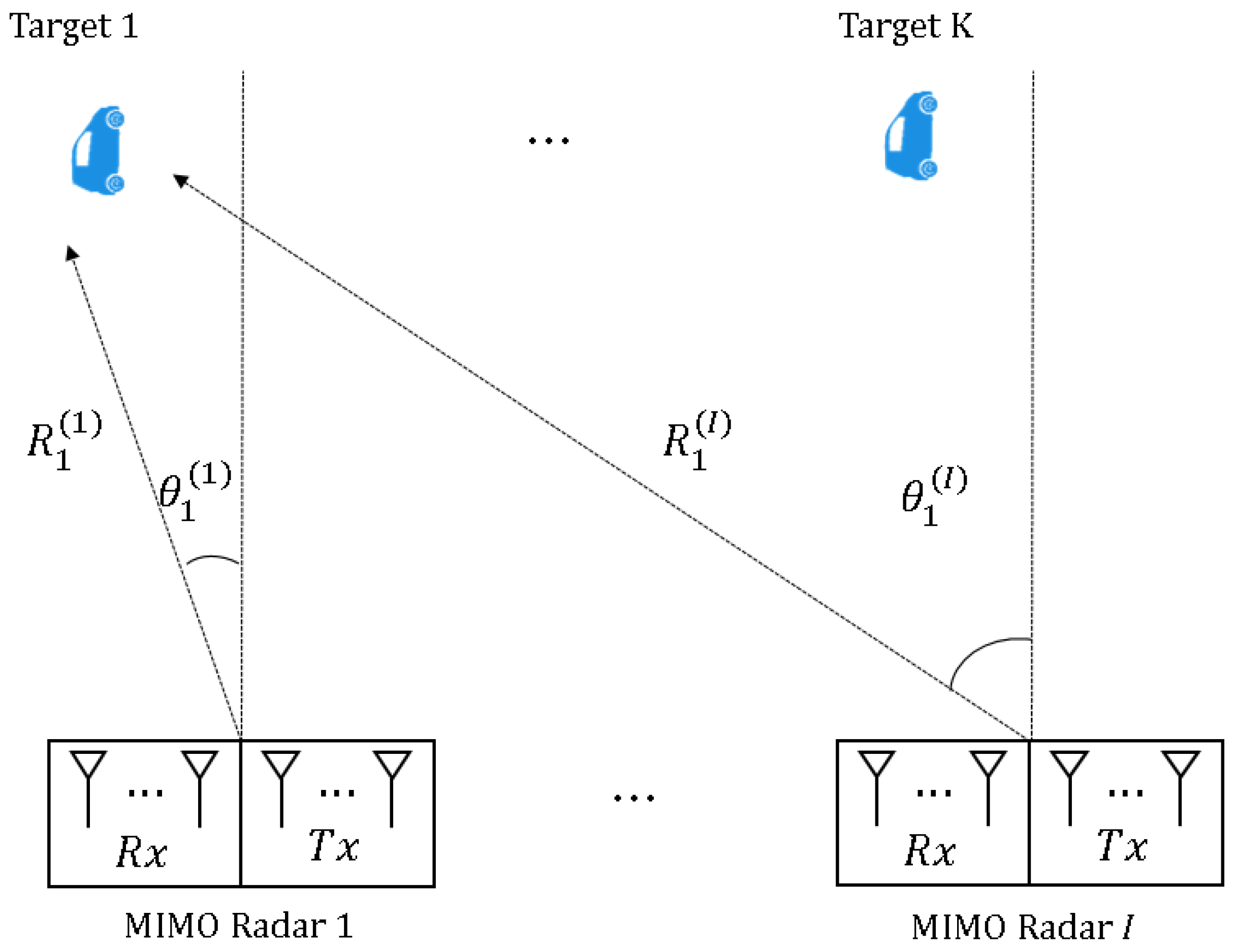

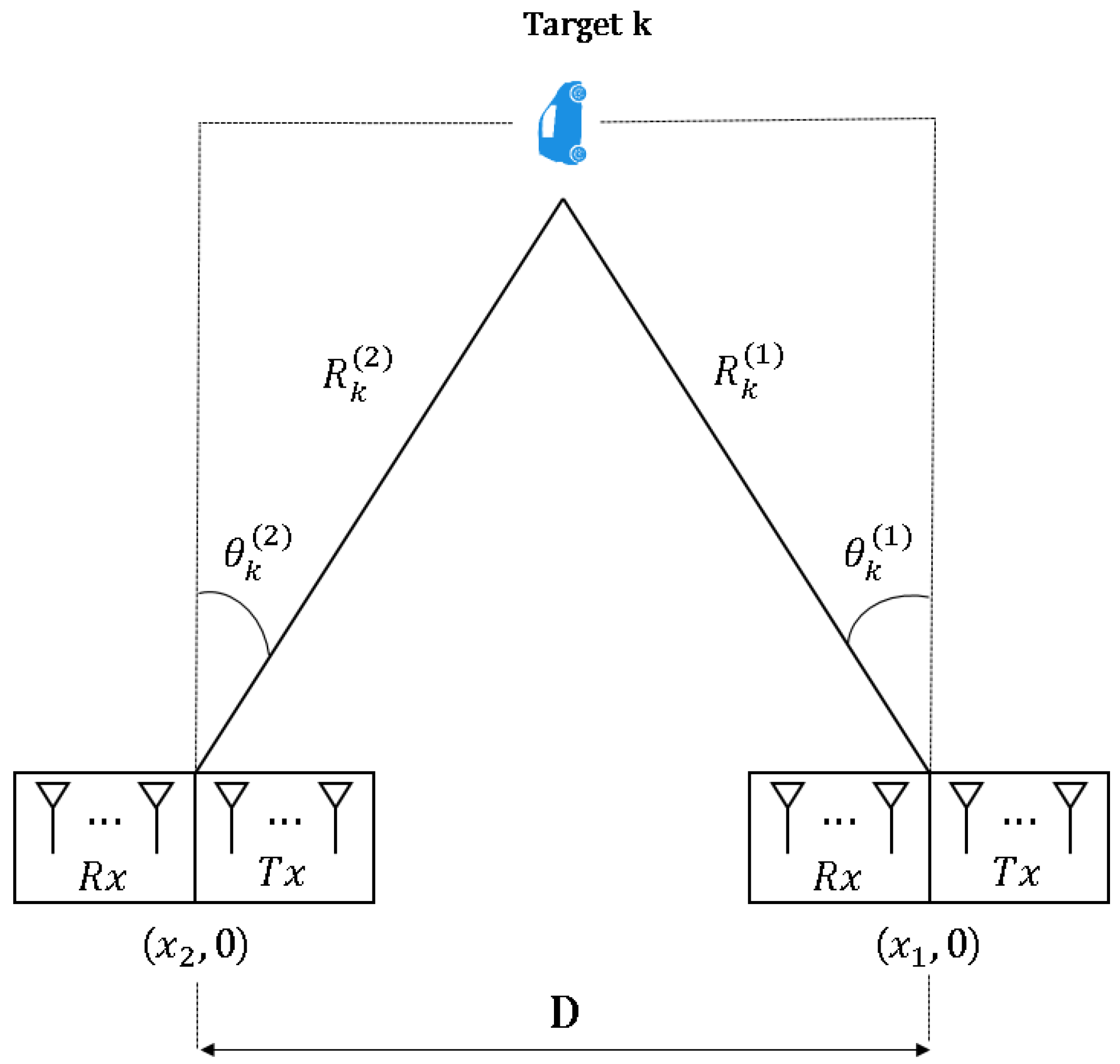

2. System Model for Distributed FMCW MIMO Radar System

2.1. Transmitted/Received Signal Model at Distributed FMCW MIMO Radar

2.2. Reconfiguration to Received Signals into Discrete-Time Signal Matrix

3. Distributed 2D MUSIC Algorithm for Joint Range and Angle Estimation

3.1. 2D MUSIC Algorithm with a Single FMCW MIMO Radar

3.2. Distributed 2D MUSIC Algorithm with Coordinate Transformation

Algorithm 1. Distributed 2D MUSIC algorithm with coordinate transformation

3.3. Discussion

4. Simulation Results

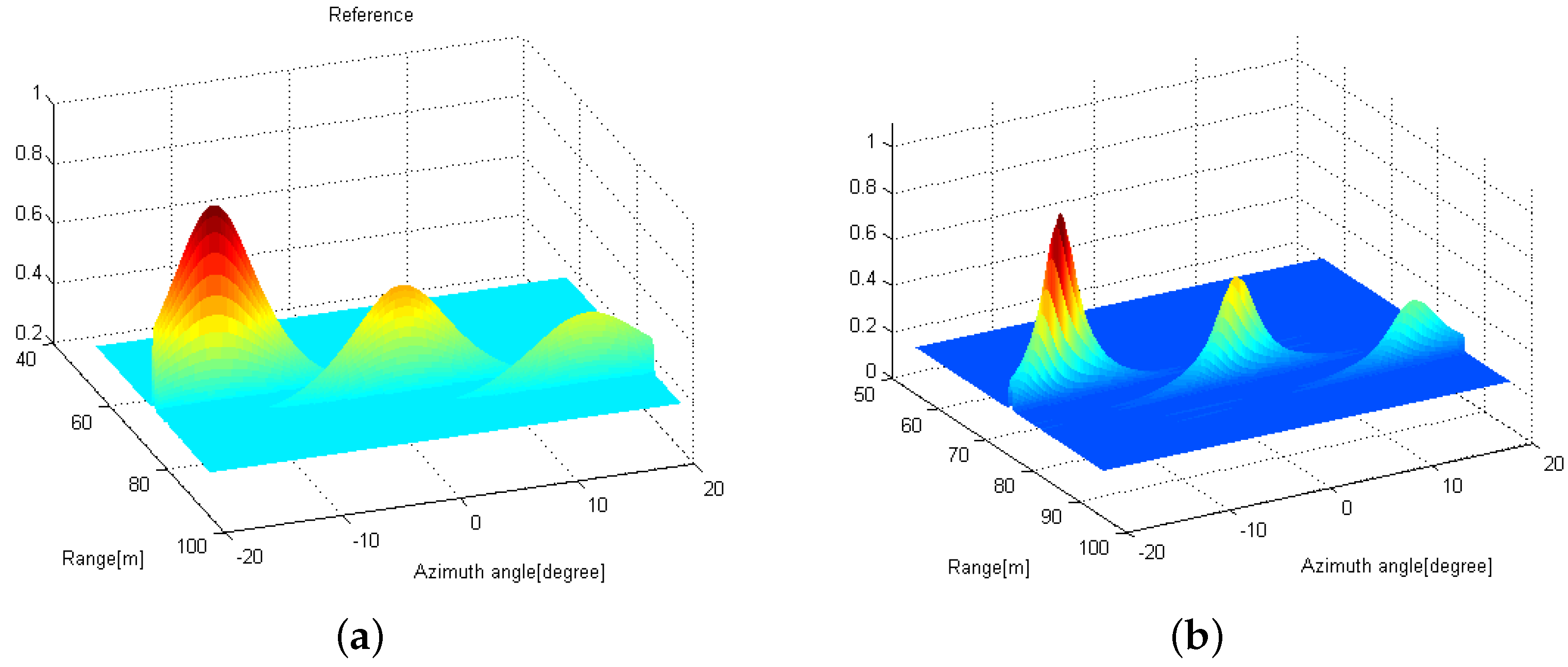

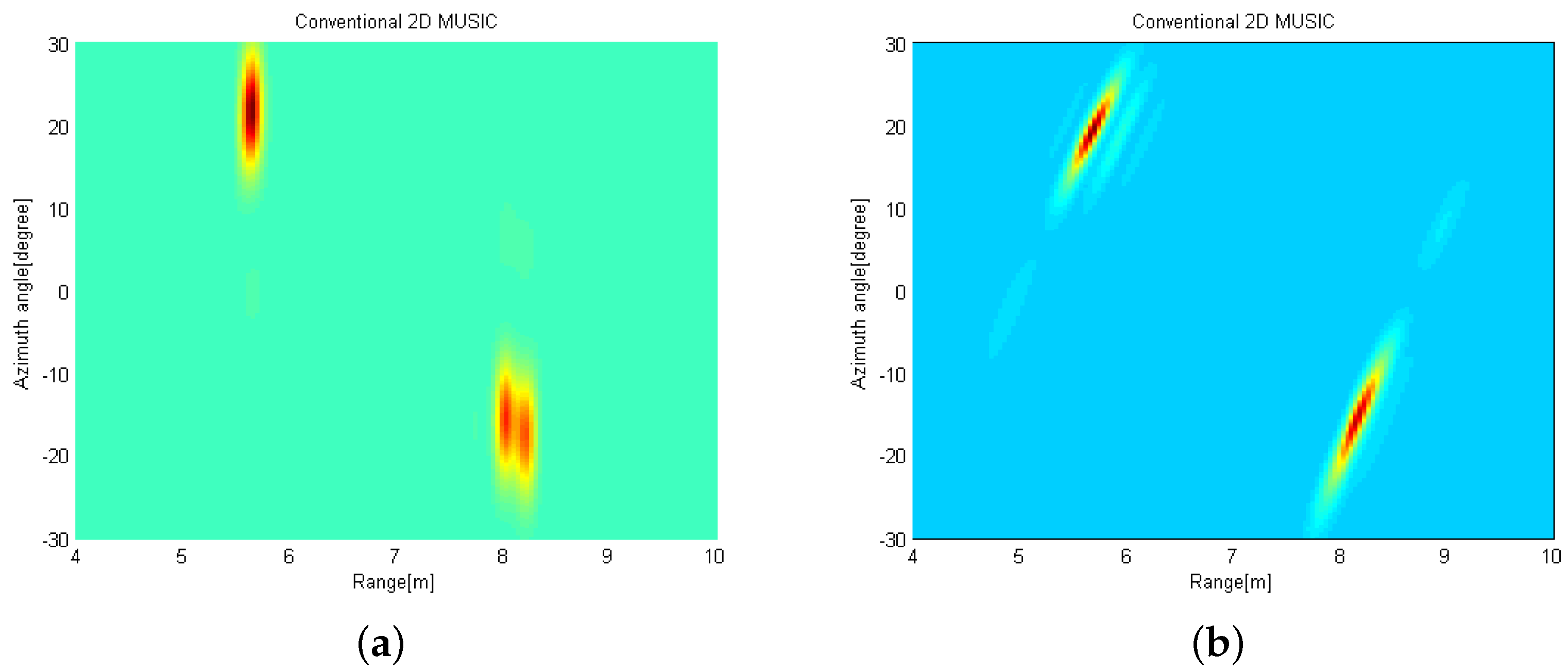

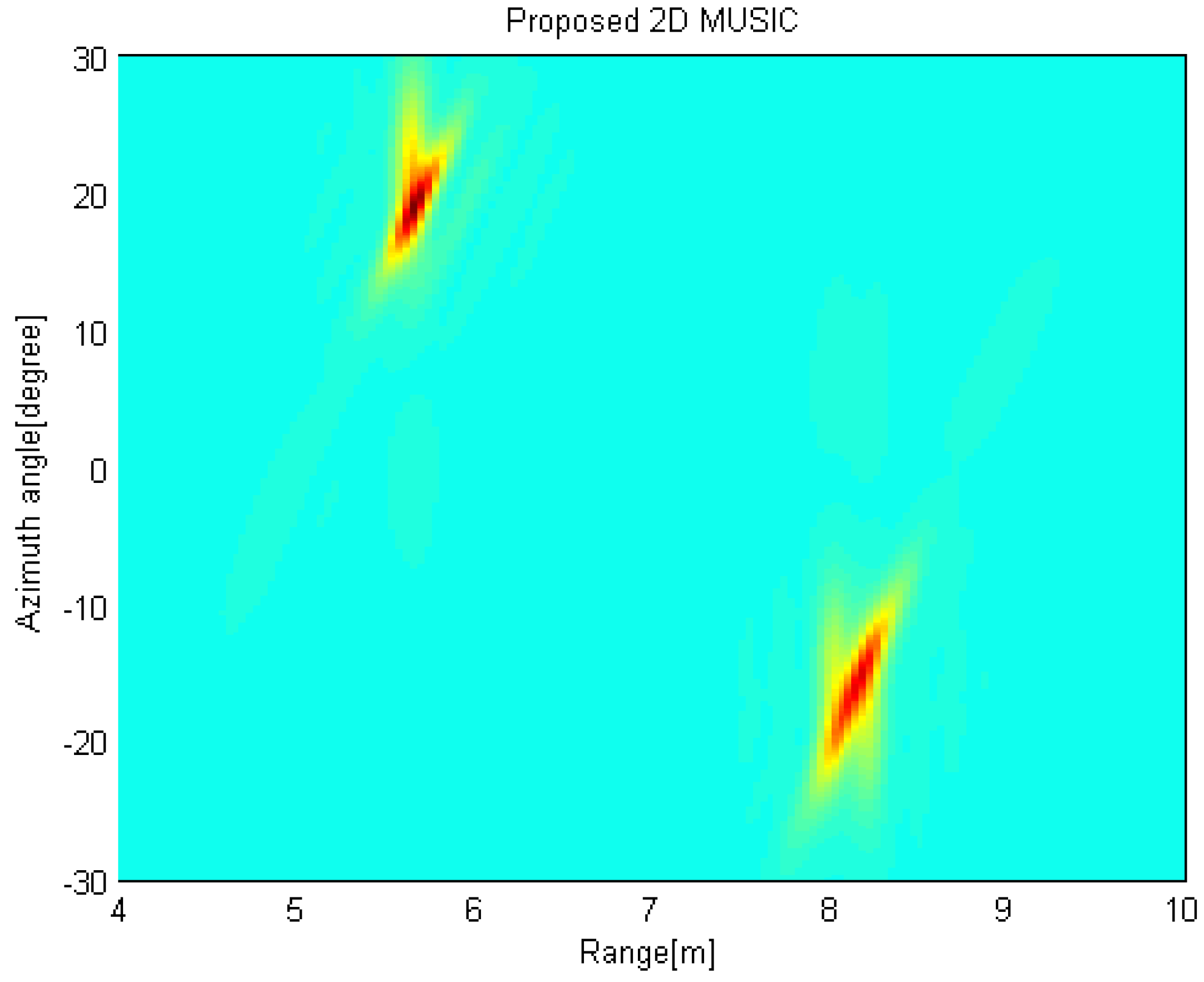

4.1. The 2D MUSIC-Based Radar Image Comparison

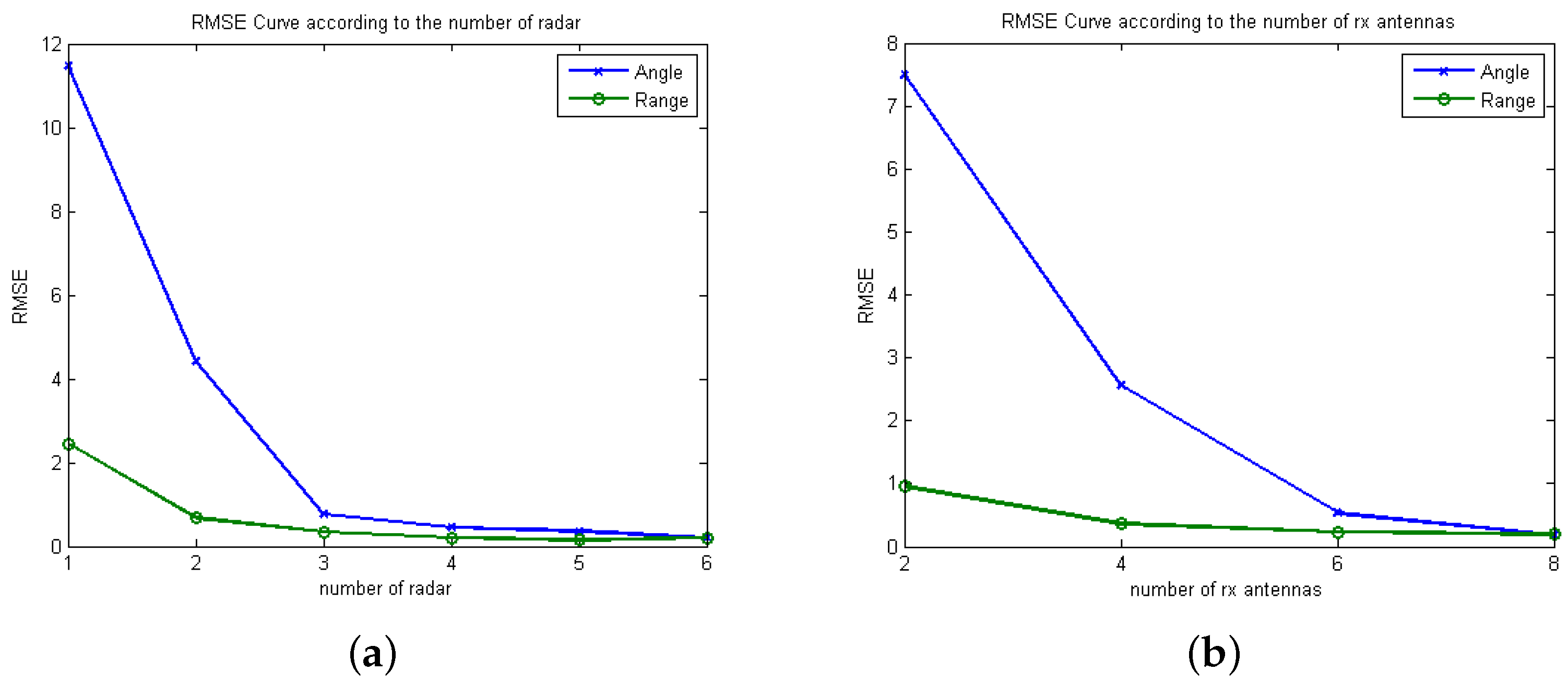

4.2. Mean Square Error Comparison

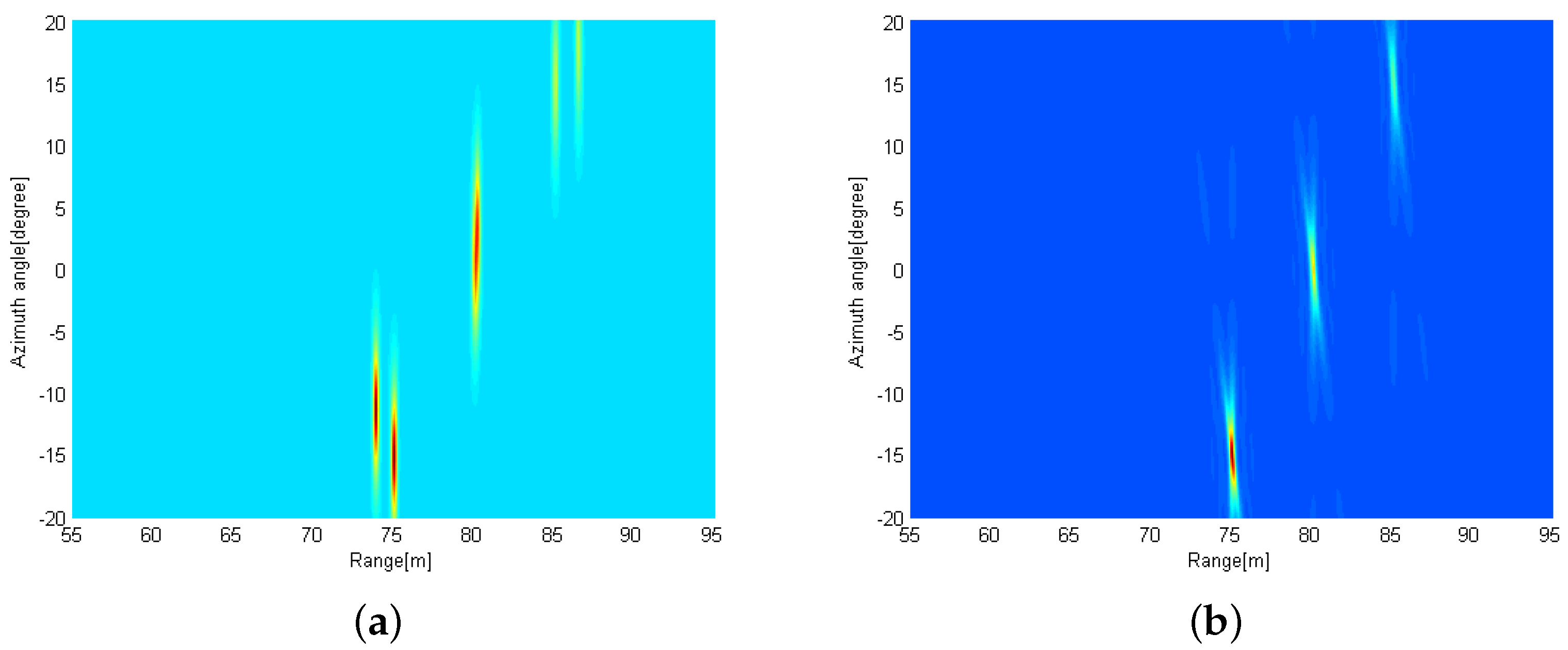

5. Experiment Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Signal waveform type | Linear chirped waveform |
| Chirp bandwidth | 1798.82 MHz |
| Number of chirps per frame | 256 |
| Number of chirp loops | 120 |
| Range resolution | 0.1953 m |
| Velocity resolution | 0.0472 m/s |
| Tx power | 12 dBm |
| Sampling rate | 10,000 ksps |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seo, J.; Lee, J.; Park, J.; Kim, H.; You, S. Distributed Two-Dimensional MUSIC for Joint Range and Angle Estimation with Distributed FMCW MIMO Radars. Sensors 2021, 21, 7618. https://doi.org/10.3390/s21227618