1. Introduction

Pollution represents probably the most important topic in the last decade, an issue that is constantly discussed in the media, in government meetings and environment activities. For the last decades, air pollution has seemed to be impossible to manage and control, but this can be combated with the help from advancements in technology. By making use of the Internet of Things and machine learning (ML) technology, the pollution could be contained and controlled, as well as predict future rises in air contamination in urban areas [

1].

Monitoring the air pollution levels implies the existence of a scale of the air quality, which can be measured with the help of sensor technology. The Air Quality Index (AQI) is based on the measuring of the liquid droplets and solid particles found in the air, which consists mostly of nitrogen dioxide (NO

2), ozone (O3), sulphur dioxide (SO

2) and carbon monoxide (CO). The key pollutants to calculate AQI are particulate matter (PM2.5 and PM10), which determines the class of air quality level (goof, satisfactory, moderately, poor, very poor and severe), with a range from 0 to 500; each category of AQI has an impact on human health and the environment. Each air pollutant has its own source and effects; thus, managing to get an image of the air pollution sources in an area based on the highest-level polluting particle, for example, a high level of nitrogen dioxide, tells us that in that area fossil fuel burning occurs, possible due to heavy traffic in the area, etc. [

2].

AQI, also referred to as the Air Pollution Index (API) or Pollutant Standard Index (PSI), creates an image of the air quality in a specific range for every air pollutant. For example, PM2.5 is determined by fitting the arithmetic average of hourly values recorded in the last 24 h. The specific index is classified from 1 to 6, each index having a PM2.5 concentration measured in µg/m

3. More details about the concentration of this particle are showed in

Table 1.

Each index is represented by a colour and indicates a level of health concern, as well as the measures needed to be applied regarding the level of air contamination. The example from the table is the standard for the National Air Quality Monitoring Network of Romania [

3].

In order to determine the exact value of AQI and to detect which air pollutants are responsible for this disaster, various sensors from several categories currently available could be used; for example, electrochemical sensors that are based on a chemical reaction between gases in the air and the electrode in a liquid inside a sensor, or photo ionization detectors, optical particle counters or even optical sensors [

4].

By placing such sensors across urban areas, in combination with weather detection sensors and creating a connection algorithm between them, one can create live reports of AQI and determine potentially dangerous zones. With the help of ML, one also can create forecasts regarding air pollution and prevent further increase in the Air Quality Index.

The paper is organized as follows: In Chapter 2, a literature review is presented. Chapter 3 includes details about the physical system. Chapter 4 presents details regarding the machine learning algorithm for combating pollution and the data collected and used in the case study. In Chapter 5 the results are analysed, while Chapter 6 discusses the conclusion of the research and future work.

2. Related Work

Awan et al. [

5] used a long short-term memory recurrent neural network (LSTM RNN) to perform traffic flow forecasting, with time-series traffic flow, air pollution and atmospheric data collected from the open datasets. The goal was to find solutions to obtain an accurate prediction for road traffic forecasting. In their paper, Zhu et al. [

6] proposed refined models to predict the hourly air pollution concentration on the basis of meteorological data of previous days by formulating the prediction over 24 h as a multi-task learning (MTL) problem. Kalajdjieski et al. [

7] evaluated four different architectures that utilize camera images to estimate the air pollution in big cities. Accurate air pollution prediction could be obtained by combining sensor data with camera images. Castelli et al. [

8] demonstrated that SVR (Support Vector Regression) with an RBF (radial basis function) kernel could assure accurate predictions of hourly pollutant concentrations, such as PM2.5, NO

2, SO

2 and carbon monoxide. Delavar et al. [

9] presented a comparative study of NARX (Nonlinear Autoregressive Exogenous Model), ANN (Artificial Neural Networks), GWR (Geographically Weighted Regression) and SVR machine learning methods in order to predict air pollution. The results revealed that the NARX method was the optimum one for their case study.

Wang et al. [

10] investigated the boundaries of Land-Use Regression (LUR) approaches and the potential of two different machine learning models: Artificial Neural Networks and Gradient Boost. They conclude that for the same pollutants, machine learning exhibited superior performance over LUR, demonstrating that LUR performance could benefit from understanding how the explanatory variables were expressed in the machine learning models. Guan and Sinnott [

11] used ANN models and LSTMs to predict high PM2.5. Their results show that accurate prediction was obtained with LSTM.

Adityia et al. [

12] used logistic regression to detect whether a data sample is either polluted or not polluted. The authors considered to predict future values of PM2.5 based on the previous PM2.5 readings. In [

13], several machine learning methods were analysed to predict the ozone level (O

3) in the Region of Murcia, Spain. The authors excluded from their study SO

2, NO

x(Nitrogen Oxides), NH

3 (Ammonia) and CO.

In [

14], a machine learning model that combines sparse fixed station data with dense mobile sensor data was used to estimate the air pollution in Sydney. Shaban et al. [

15] presented three machine learning algorithms to build accurate forecasting models for one-step and multi-step ahead of concentrations of ground-level ozone, nitrogen dioxide and sulphur dioxide.

Zang and Woo [

16] show that the hybrid distributed, fixed IoT sensor system is effective in predicting air quality. In [

17], the authors are presenting a review on studies related to air pollution prediction using machine learning algorithms based on sensor data in the context of smart cities.

Lim et al. [

18] show that data collected from mobile sampling with multiple low-cost sensors could be used to model and map street-level air pollution levels in urban locations. Kang et al. [

19] reviewed the published research results relating to air quality evaluation using methods of artificial intelligence, decision trees and deep learning.

In [

20] is proposed a methodology to evaluate and compare deep learning models for multivariate time series forecasting, which includes lagged transformations, hyper-parameter tuning, statistical tests and multi-criteria decision making. In [

21] are presented applications of deep learning (DL) techniques to predict air pollution time series. In their case study, 8 h-averaged surface ozone concentrations were predicted using deep learning consisting of a recurrent neural network (RNN) with long short-term memory

Song et al. [

22] proposed a machine learning framework (Deep-MAPS) to for fine-granular PM2.5 inference based on fixed and mobile air quality sensing data. Ameer et al. [

23] performed pollution prediction using four advanced regression techniques and present a comparative study to determine the best model for accurately predicting air quality with reference to data size and processing time.

In [

24] is presented a comparative study of various statistical and deep learning methods to forecast long-term pollution trends for PM2.5 and PM10. The case study is based on data from sensors available in a big city from India. In their paper, Chen et al. [

25] compared several Aerosol Optical Depth-PM2.5 models, including Extra Trees (ET), Random Forest (RF), Deep Neural Network (DNN), and Gradient Boosting Regression Tree (GBRT). Their results indicate that the ET model performs best in terms of the model effectiveness and feature interpretation on the training dataset. Lana et al. [

26] presented a methodology based on the construction of regression models to predict levels of different pollutants (CO, NO, NO

2, O

3 and PM10) based on traffic data and meteorological conditions, from which an estimation of the predictive relevance (importance) of each utilized feature can be estimated by virtue of their particular training procedure. The study was done considering historic traffic and pollution data of the city of Madrid, Spain.

Liang et al. [

27] conducted a study using zero-inflated negative binomial models to estimate the association between long-term county-level exposures to NO

2, PM2.5 and O

3 and county-level COVID-19 case-fatality and mortality rates in the United States. In [

28], the authors calculated the wildfire-smoke-related health burden and costs in Australia for the most recent 20 fire seasons. Their results show that the 2019–2020 season was a major anomaly in the recent record, with many smoke-related premature deaths in addition to a large number of hospital admissions for cardiovascular and respiratory disorders.

4. Machine Learning in Combating Pollution

In this paper, machine learning is used to predict behaviours regarding the air quality index near the six air quality sensing units installed in Bucharest by the Romanian National Environmental Protection Agency. The predicted behaviours should be used as an incentive to act, make the required changes in cleaning the air and recommending the population to avoid the area.

4.1. Data Collection and Their Use in Different Machine Learning Algorithms

The first step in using the ML algorithms is preparing the data; for this purpose, the data were split into 70% training data and 30% test data. Our data consist of 8700 entries from March 2019 to February 2020, during the height of the COVID-19 pandemic, when open circulation was not permitted, and another 8700 entries from March 2020 to February 2021, a period in which, depending on the country, restrictions were lifted. The data were separated into five columns: Temperature in Celsius, CO quantity, NO

2 quantity, SO

2 quantity and PM2.5 quantity. Afterwards, a suitable algorithm needs to be selected; some have tried using artificial neural networks [

1,

29,

33,

34], but others have used machine learning algorithms [

1,

13,

29].

The most common machine learning algorithm used in other papers is Random Forests; this algorithm works by generating multiple decision trees while it is being trained. A decision tree splits data into separate categories finishing only when there are no more distinctive elements that can be further classified. Each decision tree becomes a class on its own and each tree should be uncorrelated to another [

35].

By being a core component of the Random Forests approach, decision-tree learning is natural to be used on its own. This approach uses a singular decision tree to map the data, make observations and predict outcomes.

Another used algorithm is Support Vector Machines, which can do both classification and regression tasks. SVMs split the data along a hyperplane, a boundary that helps to classify data. The support vectors are the nearest points of each class, equidistant from the hyperplane. Any modification to these support vectors changes the classification [

36].

One of the aims of smart cities is to act based on data obtained through sensors. However, it must be noted that the sensors may cause failures and errors when obtaining data; hence, it is necessary to develop a model to predict the values of interest in order to control air quality [

13].

The data used for the case study were obtained from one of the six atmospheric stations in Bucharest. Each station is placed in key points of the city, where the number of people commuting and living in the area is high. The data were collected every hour, covering the climatic parameters and chemical elements present in the air. The stations can be different regarding the equipment, but they offer valuable data.

4.2. Examples of Using the Results Obtained after Processing with Machine Learning

The data collected and processed via the algorithms presented earlier can be used to create a forecast of the weather and the AQI. Since we live in the era of technology and every device is connected to the internet, no matter its dimensions or purpose, IoT technology became the main picture in the eyes of developers, companies and scientists. By connecting the system presented in this paper to the cameras inspecting the traffic and the big sources of air pollution, such as factories and landfills, one can create a complex system that can warn against and combat air pollution.

For example, if near an atmospheric station is recorded an increase in the NO

2 particles, one can conclude that these particles are originated from the burning of fossil fuels, whose main source is from the use of automobiles. From these data one can suppose that a traffic jam takes place near the station. The system can further check via the traffic cameras that this can really be the source of the air pollutant. If yes, the system can start searching for a way of manipulating the traffic lights so that the circulation can be improved, eventually warning other traffic participants to take other routes. The principle diagram of such a system is presented in

Figure 2.

Another example would be when high levels of CO2 are detected. This can further indicate a potential fire somewhere near the station. The source of the particles could be everywhere in a fairly wide radius. To limit the space, wind sensors can help find the source. By tracking the wind speed and direction, the system can create a map of possible sources for the pollutant. It can then search for the source visually with the help of the cameras, eventually warning the authorities of a potentially detected fire.

4.3. Using Machine Learning for Data Collected from an Atmospheric Station in Bucharest, Romania

As further presented in this paper, data were collected from one of the six atmospheric stations in Bucharest set in relevant areas of Bucharest. These are marked as B-x, where x is the number of the station from 1 to 6. Their emplacement is shown in

Figure 3. These stations are equipped with a multitude of sensors—gas, optical and meteorological ones—and transmit the information collected to the database, creating reports for every air pollutant. Such reports are showed in

Figure 4 and

Figure 5, where visible are the graphics and values collected by the B-1 station, placed in the vicinity of Lake Morii, the station from which the data used in this article were collected as input data for processing with specific machine learning algorithms.

Figure 3 shows the map with the positioning of the six atmospheric stations in Bucharest, from which data were taken about the level of pollution in that area, as we mentioned that these stations are positioned in crowded areas of Bucharest. In this figure, too, at the bottom of it, one can notice the pollution levels highlighted by distinct colours, presented in

Table 1, for all cases that may exist.

The acquired data on the pollution level are diverse (

Figure 2) and can reflect in time the evolution of the concentration of pollutants existing in the monitored area, thus providing access, through the pollution monitoring platform of Bucharest, to these data in real time but also to the archive of data stored over the past years. A concrete example in this sense is presented in

Figure 5, where the evolution of PM2.5 in a certain time interval is highlighted.

In this paper, through machine learning techniques, we highlighted the RMSE (Root Mean Square Error) value of the different models that predict the evolution of temperature. RMSE is the standard deviation of residues (prediction errors).

Therefore, we analysed based on several algorithms the evolution of temperature depending on the level of pollution, referring to several pollution factors.

These tests were initially performed for the past years, specifically to be able to validate the model of the proposed algorithm to be implemented. In this paper are presented the conclusive results from the proposed algorithms in relation to the types of pollutants, for two distinct time periods, with the aspects clarified in the next chapter.

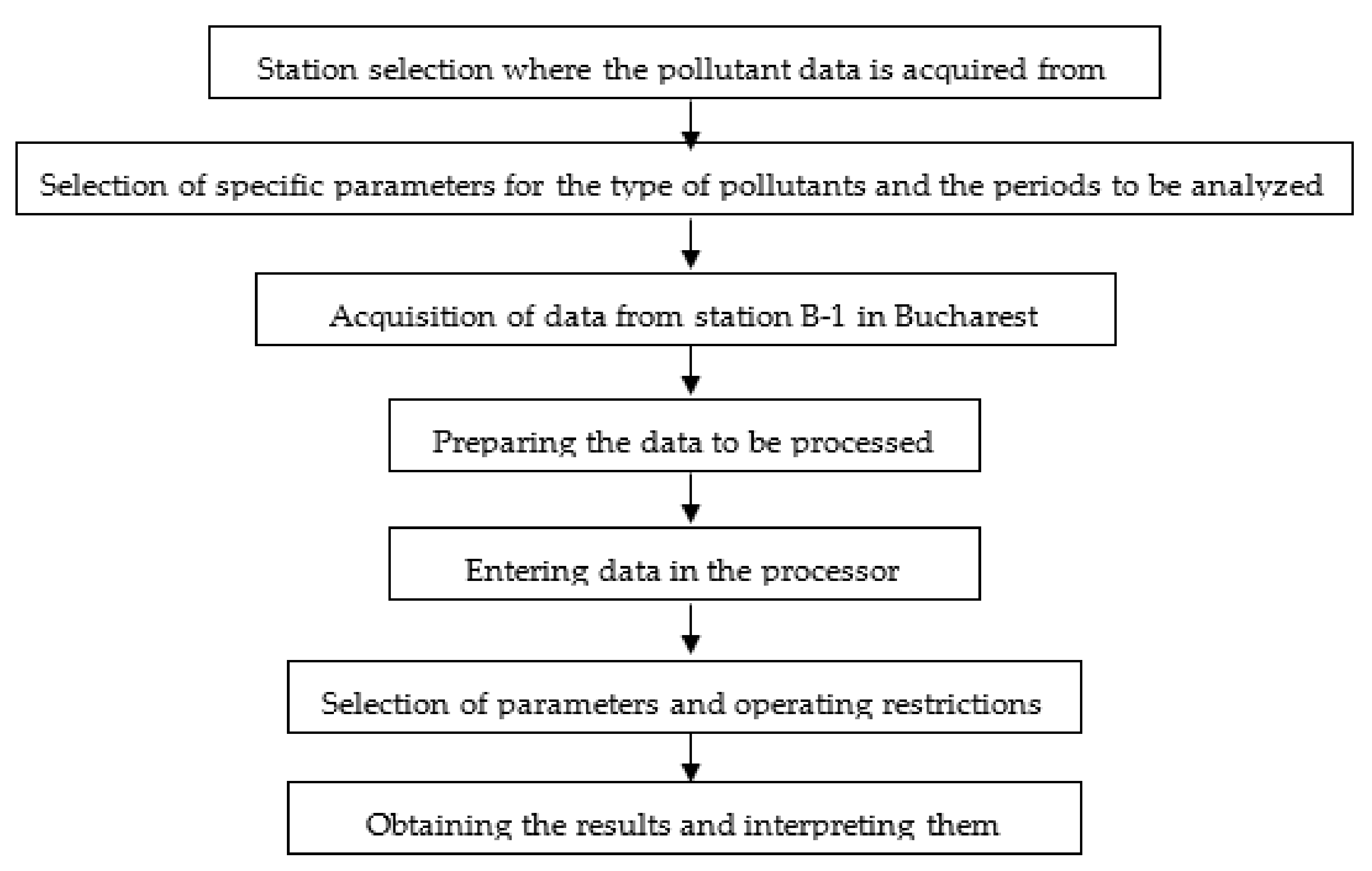

In order to eloquently synthesize the work stages, but also the specific implications necessary to be realized for carrying out this research, we present a descriptive diagram with all the steps taken to carry out this research.

Figure 6 shows the basic information flow that led to the results:

5. Results and Discussion

The study was conducted for the period March 2019–February 2021. For the analysis, three algorithms were used, and we wanted to highlight the evolution of temperature according to the different pollutants (nitrogen dioxide, sulphur dioxide, carbon monoxide and powder in suspension).

The algorithms used for the analysis are the linear regression algorithm, support vector machines with Gaussian kernel and Gaussian process regression (GPR) using an exponential kernel.

Gaussian process regression models are nonparametric kernel-based probabilistic models.

Consider the training set {(x

i,y

i); i = 1,2,...,n}, where x

i∈ℝd and y

i∈ℝ, drawn from an unknown distribution. A GPR model addresses the question of predicting the value of a response variable ynew, given the new input vector xnew, and the training data. A linear regression model is of the form

where ε∼N(0, σ

2).

The error variance σ2 and the coefficients β are estimated from the data. A GPR model explains the response by introducing latent variables, f(xi), i = 1, 2, ..., n, from a Gaussian process (GP), and explicit basis functions, h. The covariance function of the latent variables captures the smoothness of the response and basic functions project the inputs x into a p-dimensional feature space.

A GP is a set of random variables, such that any finite number of them have a joint Gaussian distribution. If {f(x), x ∈ ℝ

d} is a GP, then given n observations x

1, x

2, ..., x

n, the joint distribution of the random variables f(x

1), f(x

2), ..., f(x

n) is Gaussian. A GP is defined by its mean function m(x) and covariance function, k(x, x′); that is, if {f(x), x ∈ ℝ

d} is a Gaussian process, then E(f(x)) = m(x) and Cov[f(x), f(x′)] = E[{f(x) − m(x)} {f(x′) − m(x′)}] = k(x, x′)” [

37].

For the period March 2019–February 2020, the pollution sensors from the station for which the case study was performed did not provide enough data and thus could not perform a complete analysis, but the data were processed, and the graphs obtained presented in comparison with charts for the period March 2020–February 2021.

To highlight the difference between the two periods, namely, March 2019–February 2020 and March 2020–February 2021, the graphs presented in

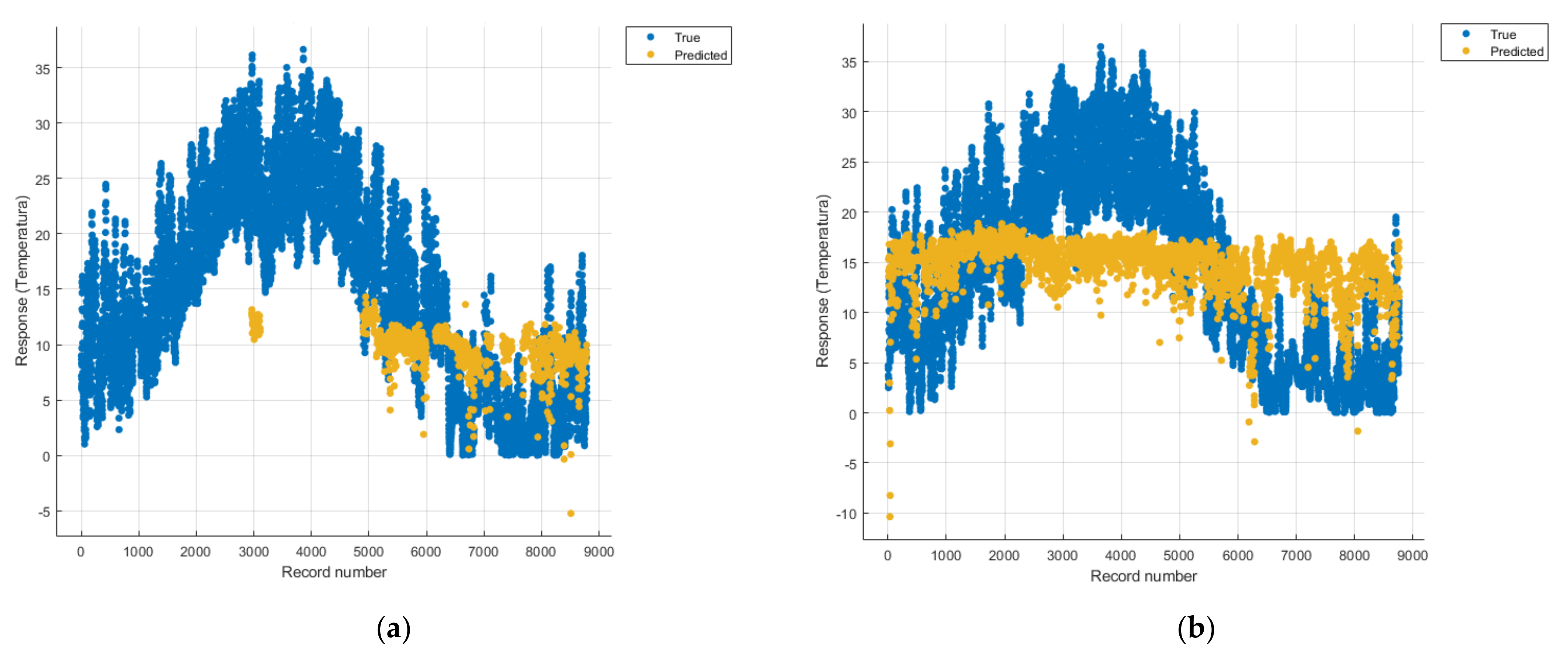

Figure 7 were drawn.

Both models are of the GPR type with an exponential kernel and were optimized. The introduction of the model without optimization was considered redundant since its performance was lower than the optimized one. On the red chart (March 2020–February 2021), around the records 2200–4400 are the summer months in which there is a natural increase in temperature, but in the rest of the chart one can see a tendency to increase temperatures over time.

The linear regression models were trained in 7 s while using a linear method. The SVM models were trained using a fine Gaussian function with the kernel scale of 0.5 and was trained in 11 s. The GPR models were optimised using a Bayesian optimization method with the acquisition function: expected improvement over an hour training time, the best kernel function found was a non-isotropic exponential.

Having a very large volume of input data and considering that it is not relevant to be presented in full in the article, we have highlighted in the

Table 2, some such data in order to exemplify the type and form of this input data used within machine learning algorithms.

The key inputs were: Temperature (in Celsius), NO2 concentration, SO2 concentration, CO concentration and PM2.5 concentration. All records in the dataset period were considered while training the models, and no filters were considered to be needed. Rows where one or more entries were missing have been used in each individual pollutant model and were shown but not considered in the model regarding all pollutants.

The output was a model that is used to predict how the temperature will fluctuate during the year.

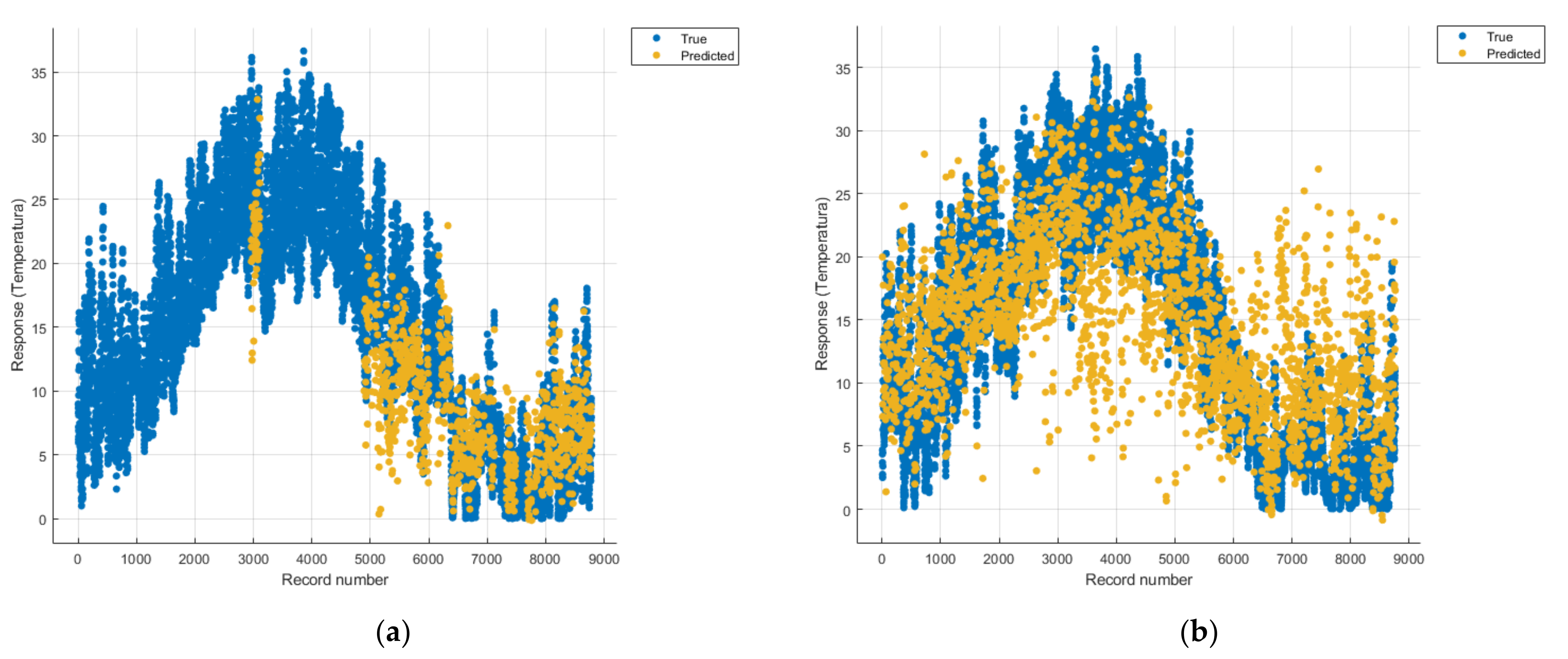

Figure 8,

Figure 9,

Figure 10,

Figure 11,

Figure 12,

Figure 13,

Figure 14,

Figure 15,

Figure 16,

Figure 17,

Figure 18,

Figure 19,

Figure 20,

Figure 21 and

Figure 22 show the graphs obtained based on the three algorithms: linear regression algorithm, support vector machines with the Gaussian kernel and Gaussian process regression, compared for the periods March 2019–February 2020 and March 2020–February 2021, with specifying that the data for the period March 2019–February 2020 were not complete.

Each model has a figure considering all the pollutants together as well as a figure of each one separately (only SO2, only NO2, only CO and only PM2.5), given that all the pollutants together give us a different model overall than when seeing the influence of only one pollutant.

In each analysis, the model and all the data were presented without the separation of the test and training data sets.

Each model has a figure considering all pollutants, only SO2, only NO2, only CO and only PM2.5; this considering all pollutants gives us a different model overall, compared to seeing the influence of only one pollutant.

GPR models were optimized in MATLAB using the Bayesian Optimization Algorithm using functions such as expected improvement. The Bayesian optimization algorithm tries to minimize the function of the model in a limited field, and the family of improvement functions evaluates the values that bring an improvement within the model and ignores those that do not minimize the model. The expected improvement functions use the following relation [

38]:

where x

best as the location of the lowest posterior mean.

In

Figure 18a one can see (in yellow) the identified model that makes a prediction of the temperature rise considering all types of pollutants, but due to a lack of data, cannot identify a correct prediction.

The model in

Figure 13a is unoptimized, and due to the Gaussian kernel, it looks like the model in

Figure 18a (discussed above), the lack of data not being able to lead to a correct prediction.

The linear regression model in

Figure 8a is unsuitable for analysis because no linear function can be identified to model the data correctly.

For the period March 2020–February 2021, the graphs were drawn based on complete data sets, the predictions obtained being much more suggestive.

In the graphs in

Figure 9b,

Figure 14b and

Figure 19b, where the influence of the amount of nitrogen dioxide on the temperature was considered, a tendency to increase the temperature at higher NO

2 concentrations can be observed. In contrast, in the graphs in

Figure 10b,

Figure 15b and

Figure 20b, where the influence of the amount of sulphur dioxide on the temperature was considered, a tendency to decrease the temperature at higher SO

2 concentrations can be observed.

Similarly, in the graphs in

Figure 11b,

Figure 16b and

Figure 21b, where the influence of monoxide amounts on temperature was influenced, a tendency to decrease the temperature at higher CO concentrations can be observed.

In the case of the graphs in

Figure 12b,

Figure 17b and

Figure 22b, where the influence of the amount of PM2.5 particles on temperature was considered, no significant increase in temperature was observed.

To help decide the performance of the models, we used the Root Mean Square Error (RMSE) value (

Table 3). RMSE is the standard deviation of the residuals (prediction errors). Residuals are a measure of how far from the regression line data points are. The lower the RMSE value the better the performance.

It was noted that the worst algorithm for the used datasets was the Linear Regression, and the Gaussian kernel SVM was the second worst, fitting the data a bit better; however, the best result was obtained by using an optimized GPR algorithm, with which much smaller error is obtained compared to the other methods.