Abstract

Fractional vegetation cover is a key indicator of rangeland health. However, survey techniques such as line-point intercept transects, pin frame quadrats, and visual cover estimates can be time-consuming and are prone to subjective variation. For this reason, most studies focus only on overall vegetation cover, ignoring variation in live and dead fractions. In the arid regions of the Canadian prairies, grass cover is typically a mixture of green and senescent plant material, and it is essential to monitor the fractional cover of both. In this study, we designed and built a camera stand to acquire close-range photographs of rangeland vegetation cover. Photographs were processed by four approaches (SamplePoint software, object-based image analysis (OBIA), unsupervised classification, and supervised classification) to estimate the fractional cover of green vegetation, senescent vegetation, and background substrate. These estimates were compared to in situ surveys. Our results showed that the SamplePoint software is an effective alternative to field measurements, while the unsupervised classification lacked accuracy and consistency. OBIA performed better than the other image classification methods. Overall, SamplePoint and OBIA produced mean values equivalent to those produced by in situ assessment. These findings suggest that close-range photography offers an unbiased, consistent, and expedient alternative to in situ estimation of grassland fractional vegetation cover, and one that provides a permanent image record.

1. Introduction

Fractional vegetation cover (FVC) is defined as the percentage of the ground surface covered by vegetation elements when viewed from overhead [1]. This metric describes vegetation quality and composition, which contribute to ecosystem change and control transpiration and photosynthesis, among other terrestrial processes [2]. For this reason, analyses of fractional vegetation cover are widely used in land surface modelling [3], ecosystem monitoring [4], and natural resource management [5]. The ability to conduct systematic, accurate, and repeatable estimation of vegetation fractional cover is a fundamental part of ecosystem biodiversity and function studies.

In arid and semiarid rangelands, live and senescent vegetation materials are often intermixed and difficult to discriminate [6]. Both materials play vital, but different, roles in ecological cycling and natural grassland conservation. Senescent vegetation consists largely of prostrate litter and standing dead grass but, like live vegetation, it provides forage for grazers [7], alters the microclimate at a local scale [8], provides habitat for many species at risk [9], and is crucial in grassland fire dynamics [10,11]. Hence, the simultaneous estimation of both green and senescent vegetation cover is important for understanding, managing, and conserving arid and semiarid rangelands.

Many efforts have been made to measure vegetation fractional cover in the field. Conventional methods of ground FVC estimation include the line-point intercept transect (LPIT) [12] and the Daubenmire cover class methods [13]. Both are labor-intensive and prone to overestimating standing vegetation cover due to field survey design [14].

At large spatial scales, satellite-based remote sensing can be effective in estimating FVC [15,16]. Various vegetation indices have been developed to estimate FVC in arid and semiarid grasslands [2,15], and spectral mixing analysis (SMA) has been applied to FVC modeling [17]. However, satellite remote sensing approaches need to be calibrated with in situ data to maintain accuracy and account for spatial–temporal heterogeneity [18,19]. FVC field measurement remains critical to provide a baseline for improving inversion algorithms and validating remote sensing products [20].

Close-range photography has become a popular method to estimate FVC in the field. It offers a nondestructive approach that has the potential to match or exceed in situ techniques in accuracy while being faster and less biased [21,22,23,24]. Digital images of small plots processed using supervised [25] or unsupervised [26] classification, object-based image analysis (OBIA) [27], and rule-based decision or machine learning [28] have been successful in objectively quantifying the percent of vegetation cover in a range of environments. In concert, many tools have been developed to process close-range digital images, including SamplePoint [29], VegMeasure [30], and Canopeo [23]. However, most research has focused on estimating the live or green vegetation fractional cover, ignoring senescent vegetation, which is a significant component of arid and semiarid prairie grasslands.

In arid and semiarid regions, senescent plant materials include standing dead biomass, dormant grass, or litter from nondecomposed biomass. These are often intermixed with the green vegetation making it challenging to differentiate the senescent and green vegetation fractional cover from the background [6]. While a few studies have compared the results of different image-processing methods to estimate green, nongreen and senescent vegetation [6,31], the accuracy of different approaches involving close-range imagery has yet to be thoroughly evaluated. This is particularly the case under conditions where senescent and green vegetation are mixed in native grasslands.

The main objective of this research is to evaluate the accuracy and consistency of vegetation fractional cover extracted using different methods, including in situ assessment and image analysis. To fulfill this objective, we used a camera stand to acquire close-range digital photographs in Grasslands National Park (GNP), Saskatchewan, a typical Northern Mixed Grassland region. The digital images were then analyzed with SamplePoint software for visual interpretation and with Environment for Visualizing Images (ENVI) software [32] for pixel-based classification and object-based image analysis (OBIA) to obtain the fractional cover of senescent and green vegetation. We used in situ assessment of the fractional vegetation cover (FVC) as a reference. FVC estimates from image processing were compared to the field measurements to assess their agreement and consistency.

2. Materials and Methods

2.1. Study Area

Fieldwork was conducted in the west block of Grasslands National Park (GNP; 49° N, 107° W), located in the semiarid, mixed grassland ecoregion of southern Saskatchewan, Canada [33], from 28 June to 3 July 2018. GNP lies in a region with a mean annual temperature of 4.1 °C and total annual precipitation of 352.5 mm [34]. Almost half of the annual precipitation falls as rain during the spring growing season, followed by a long, dry summer [35], during which annual plants die and perennial herbaceous plants wither above ground while their below-ground parts persist [36]. Therefore, there is a high proportion of brown and grey senescent vegetation (nonphotosynthetic vegetation, NPV) above ground in addition to green, photosynthetic vegetation (PV).

2.2. Field Experiment Design

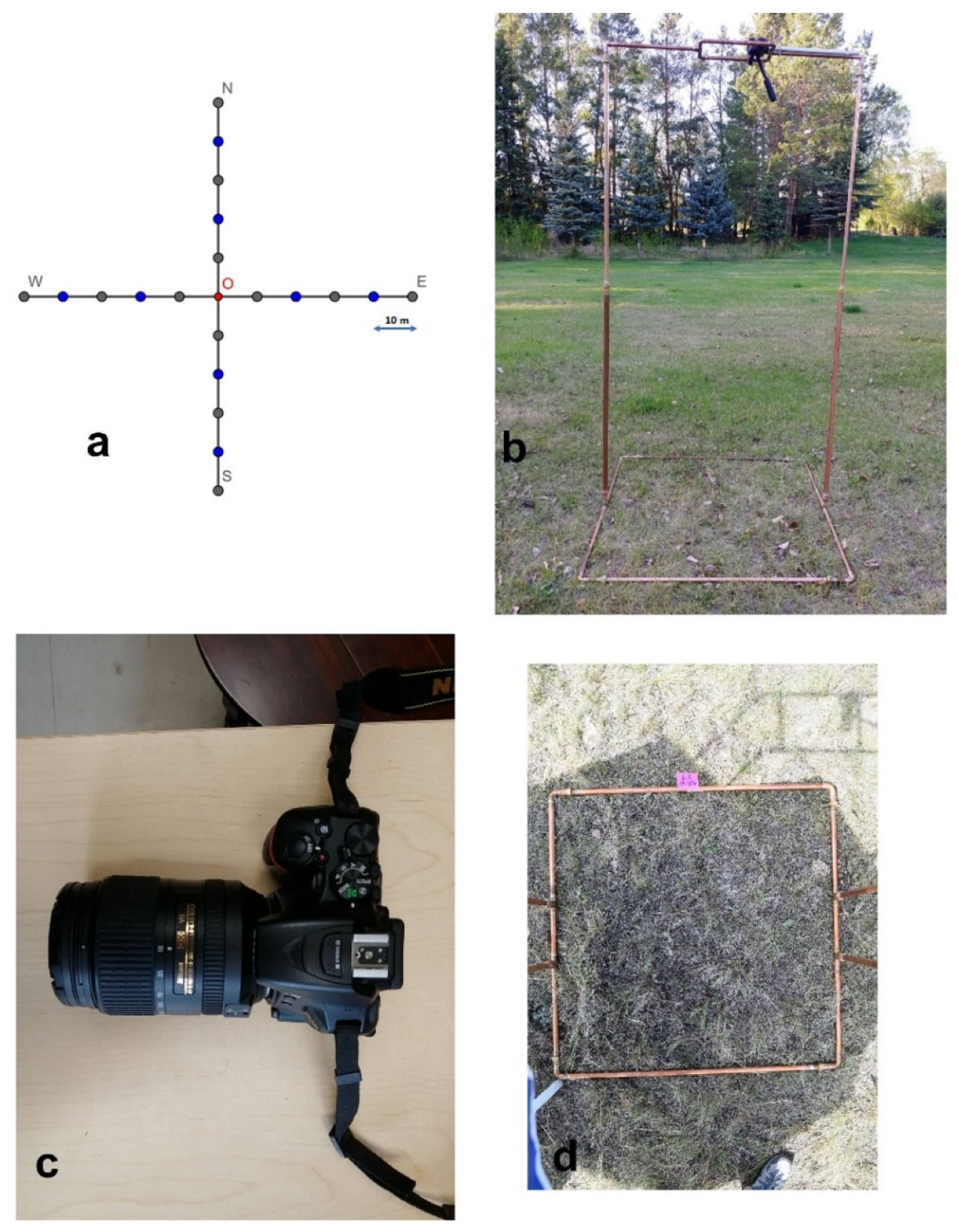

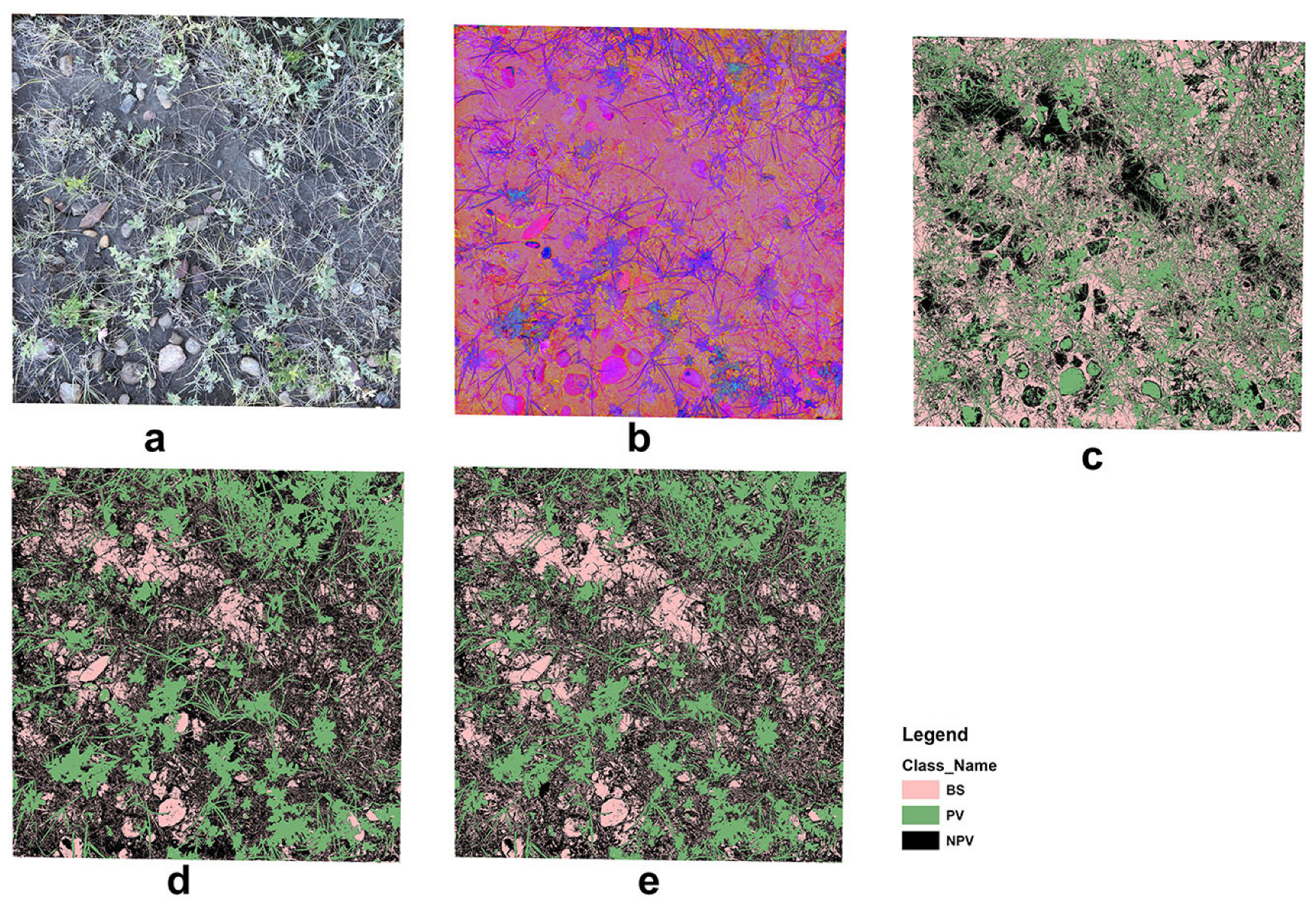

We built a collapsible camera stand, two meters high with a one square meter (1 m × 1 m) base frame; each edge of the frame was marked with decile ticks to facilitate in situ measurement (Figure 1). A tripod with a pan-tilt head and independent axes and controls was attached to the top of the camera stand (Figure 1b). A NIKON D5500 camera with an AF-S DX 18–55 mm f/3.5–5.6 G lens was attached to the head (Figure 1c). The camera was held at a nadir position relative to the base frame by adjusting the pan-tilt head. An umbrella was held above the frame to shade the base. We used a remote release to control the shutter and a mobile phone connected to the camera by Bluetooth to check photos. The camera was preset to shutter-priority mode with an exposure time no longer than 1/200 s to avoid wind effects on the grass canopy imagery [37].

Figure 1.

Illustration of field setup: (a) study-site sampling design, (b) camera stand, (c) NIKON D5500 camera, and (d) overhead photo of stand base frame.

We surveyed nine sites across the study area’s dominant topographical features: valley, sloped, and upland grasslands. The distance between sites was at least 1.5 km to limit spatial autocorrelation [38]. At each site, two perpendicular transects, 100 m long and intersecting at their centers, were surveyed, oriented in the cardinal directions (Figure 1a). Five images were taken along each arm at 10 m intervals, excluding the center; thus, 20 images were recorded per site (Figure 1d). At each plot, after photographing the base frame, we recorded the percentage cover of grass, forb, shrub, standing dead material, litter, lichen, moss, bare soil, and rock by visual assessment within the frame. Percent cover was estimated to the nearest 5% for cover values ranging from 10 to 90% and to the nearest 1% for values less than 10% and greater than 90% [39,40]. To limit subjective bias, two people independently assessed the in situ cover and their estimates were averaged. We then aggregated the recorded covers for each plot into PV (green grass, forb, shrub, green moss), NPV (standing dead material, litter), and BS (bare soil, rock, lichen).
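The observer averaging and the grouping into PV, NPV, and BS described above can be illustrated with a minimal sketch. The dictionary keys, function names, and cover values below are hypothetical and chosen only for illustration; they are not taken from the study's data sheets.

```python
# Grouping of the nine field categories into the three fractions,
# following the assignment stated in the text. Key names are hypothetical.
GROUPS = {
    "PV": ("grass", "forb", "shrub", "moss"),
    "NPV": ("standing_dead", "litter"),
    "BS": ("bare_soil", "rock", "lichen"),
}


def average_observers(obs_a, obs_b):
    """Average two observers' percent-cover records category by category."""
    return {cat: (obs_a[cat] + obs_b[cat]) / 2 for cat in obs_a}


def group_cover(record):
    """Sum the nine category covers into PV, NPV, and BS."""
    return {name: sum(record[c] for c in cats) for name, cats in GROUPS.items()}


# Made-up percent covers for one plot (not data from the study).
observer_1 = {"grass": 35, "forb": 5, "shrub": 0, "moss": 2,
              "standing_dead": 30, "litter": 15,
              "bare_soil": 10, "rock": 1, "lichen": 2}
observer_2 = {"grass": 40, "forb": 5, "shrub": 0, "moss": 1,
              "standing_dead": 25, "litter": 15,
              "bare_soil": 10, "rock": 2, "lichen": 2}

fractions = group_cover(average_observers(observer_1, observer_2))
print(fractions)
```

Averaging before grouping and grouping before averaging give the same result here, because both steps are linear.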

2.3. Image Analysis

We estimated the fractional cover of PV, NPV, and BS from the digital images using four methods: (1) visual classification using SamplePoint software, (2) unsupervised image classification, (3) supervised image classification, and (4) object-based image analysis (OBIA). The unsupervised and supervised classifications as well as the OBIA were conducted using ENVI 5.5 (Harris Geospatial Inc., Broomfield, CO, USA) and ArcMap 10.6 (Esri Inc., Redlands, CA, USA).

SamplePoint is a popular software package for visual inspection of ground cover in grassland and pasture research and management [29]. It loads images listed by the user in an Excel spreadsheet, and systematically or randomly locates a user-defined number of sample points in each image. It then moves from one point to the next so that the user can classify each point visually [22]. We placed a 10 × 10 grid of points (100 in total) systematically across each image for visual classification, using the same nine categories as in the field assessment, and grouped the results into PV, NPV, and BS for further analysis. Two independent assessments were performed on the 180 images using the SamplePoint software, and the results from the two assessments were averaged.
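The point-sampling idea can be sketched as follows. This is only an illustration of systematic point sampling, not SamplePoint's own code: placing points at the centres of equal-sized cells is an assumption, as are the image size, the function names, and the made-up labels.

```python
from collections import Counter


def systematic_grid(width, height, n_cols=10, n_rows=10):
    """Return (x, y) pixel coordinates of an evenly spaced grid of points.

    Points are placed at the centres of equal-sized cells; this placement
    rule is an assumption and may differ from SamplePoint's.
    """
    xs = [int((i + 0.5) * width / n_cols) for i in range(n_cols)]
    ys = [int((j + 0.5) * height / n_rows) for j in range(n_rows)]
    return [(x, y) for y in ys for x in xs]


def cover_from_points(labels):
    """Percent cover per class from a list of per-point class labels."""
    counts = Counter(labels)
    return {cls: 100.0 * n / len(labels) for cls, n in counts.items()}


# Hypothetical 6000 x 4000 pixel image and made-up visual labels.
points = systematic_grid(6000, 4000)
labels = ["PV"] * 42 + ["NPV"] * 45 + ["BS"] * 13
cover = cover_from_points(labels)
print(len(points), points[0], cover)
```

With 100 points, each classified point contributes exactly one percentage point to its class, so the resolution of a single assessment is 1%.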

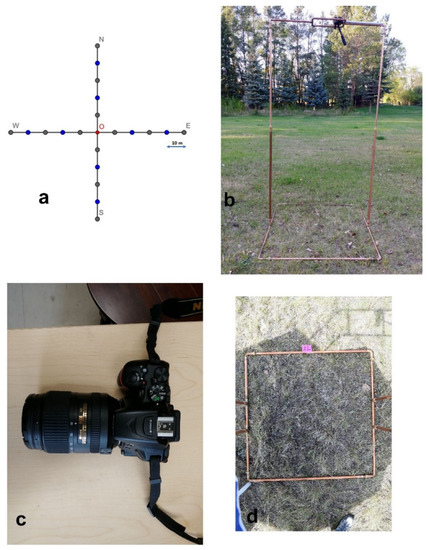

For the unsupervised classification, we followed methods described by Smith, Hill and Zhang [26,41]. Images were transformed from the original RGB (red, green, blue) color space into HIS (hue, intensity, saturation) space. Images were divided into 14 classes using the ISODATA algorithm in ENVI 5.5 image analysis software. The 14 classes were visually examined with reference to the original photos and were merged to derive PV, NPV, and BS. For the supervised classification, we used the maximum likelihood classification algorithm after predefining regions of interest (ROIs) for PV, NPV, and BS. For each class, at least 50 ROIs were selected for training.
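For readers unfamiliar with the color transform, the sketch below converts a single RGB pixel to hue, intensity, and saturation using a common textbook formulation. It is an assumption that this matches the transform applied in ENVI, which may differ in scaling or definition; the function name is hypothetical.

```python
import math


def rgb_to_his(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, intensity, saturation).

    Textbook HSI formulation; not necessarily the transform used by ENVI.
    """
    r, g, b = r / 255.0, g / 255.0, b / 255.0
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        return 0.0, 0.0, 0.0  # black pixel: hue and saturation undefined
    saturation = 1.0 - min(r, g, b) / intensity
    num = 0.5 * ((r - g) + (r - b))
    # Clamp at zero to guard against tiny negative rounding errors.
    den = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if den == 0:
        hue = 0.0  # grey pixel: hue undefined, set to 0 by convention
    else:
        theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
        hue = theta if b <= g else 360.0 - theta
    return hue, intensity, saturation


print(rgb_to_his(0, 255, 0))      # pure green
print(rgb_to_his(128, 128, 128))  # mid grey
```

Separating hue from intensity is what makes green and senescent material easier to tell apart under uneven illumination, since brightness differences are largely confined to the intensity channel.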

For OBIA, we used the feature extraction module in ENVI 5.5. In this method, an image is segmented into homogeneous areas based on two parameters: scale and merging level (spectral information). The scale parameter is unitless and controls the relative size of image objects (polygons or segments), with a smaller scale parameter resulting in more image objects. Merging combines adjacent segments with similar spectral attributes; a larger merging parameter causes more adjacent segments with similar colors and border sizes to be combined. Images were segmented at a 40–70 scale level and a 10–30 merging level; choosing a high scale level results in fewer defined segments, while choosing a high merging level causes more small segments to be aggregated within larger, textured areas. Specific parameter settings were adjusted interactively using the preview window in the feature extraction module because the texture and color features of individual images depended on the site-specific plant composition and background [6,26]. This also allowed us to predefine the training data for PV, NPV, and BS. The image was classified using the support vector machine (SVM) algorithm with all available attributes (spatial, spectral, and textural).

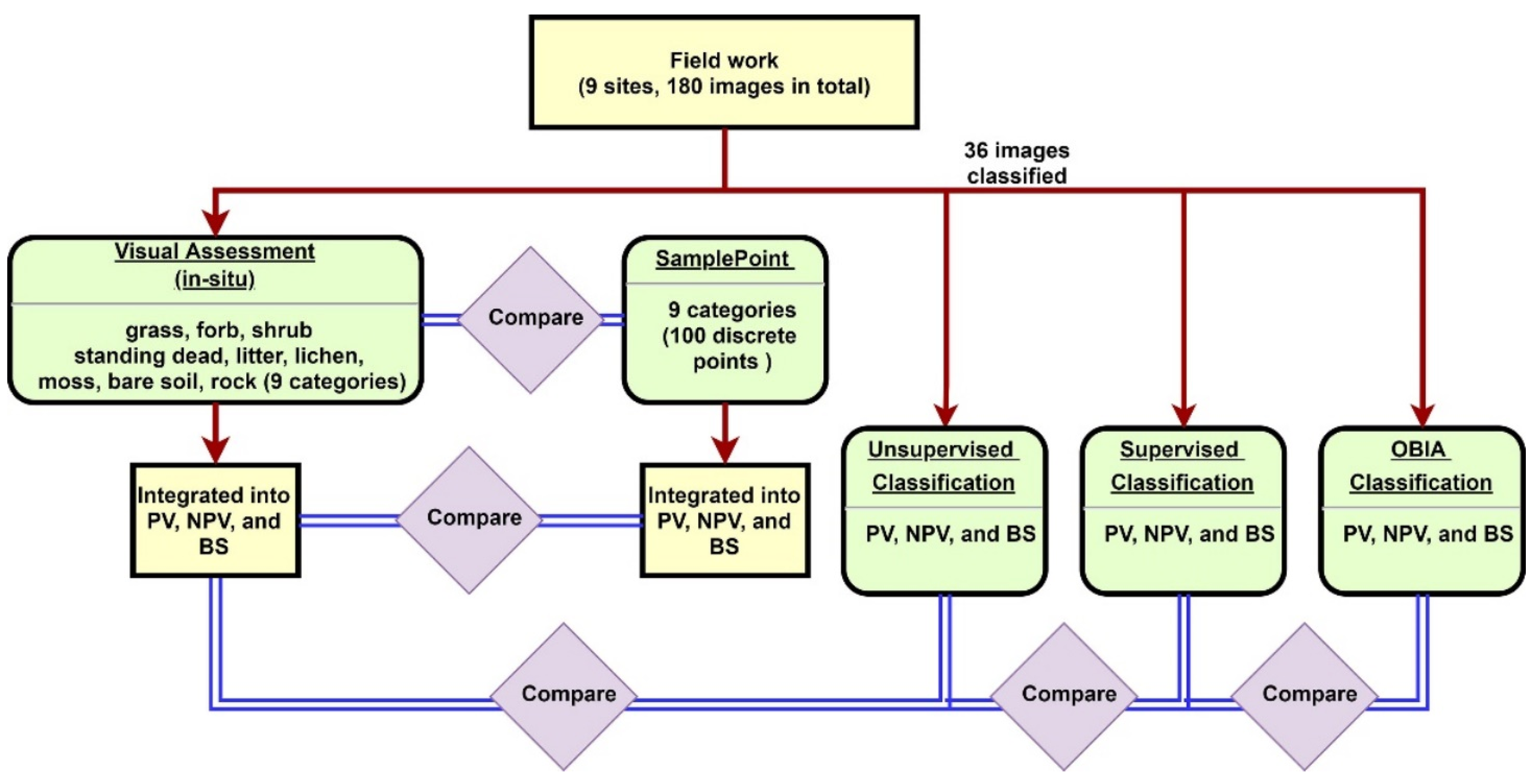

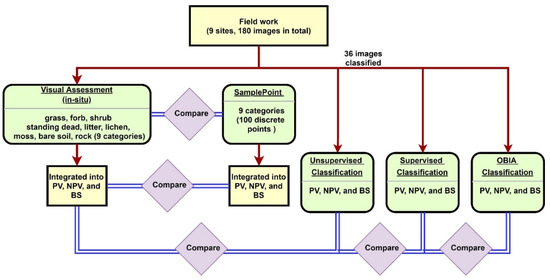

A total of 180 images (20 images per site for 9 sites) were used to compare the nine categories inventoried in both the field assessment and the SamplePoint classification (Figure 2). We binned the nine categories into PV, NPV, and BS and compared the field and SamplePoint results based on these bins. The unsupervised, supervised, and OBIA classification methods were applied to 36 selected images (4 randomly selected images per site) using the PV, NPV, and BS categories. Results were compared to the field assessment.

Figure 2.

Methodology flowchart.

The coefficient of determination (R2), root-mean-square error (RMSE) [41,42,43], and the Bland-Altman plot (Tukey mean difference plot) [44] were used to evaluate the comparison. The Bland-Altman plot allows the identification of any systematic differences between two measurements, or of possible outliers, by plotting the differences between the two methods against their averages. The Cartesian coordinates of a given sample S with values S1 and S2 are:

$$S(x, y) = \left(\frac{S_1 + S_2}{2},\; S_1 - S_2\right)$$

The mean of the n paired differences (S1 − S2) is the estimated bias, and the standard deviation (SD, σ) of the differences indicates the random fluctuation around this mean.

In the Bland-Altman plot, horizontal lines are drawn at the mean difference and at the limits of agreement. These are defined as the mean difference plus and minus 1.96 times the SD of the differences. The lines at the mean difference plus and minus 3.0 times the SD are defined as the extreme limits of agreement. A paired-samples Wilcoxon test [45] was performed to compare the in situ assessments with each of the four other methods (SamplePoint estimation, unsupervised classification, supervised classification, and OBIA). The Wilcoxon test was chosen to assess whether the population mean ranks differed between two FVC estimation methods. The paired Student’s t-test was not used because the data violate the normality assumption [46]. In the paired-samples Wilcoxon test, a p-value of 0.05 was selected as the threshold for significance.
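The Bland-Altman quantities described above (plot coordinates, bias, SD, and both sets of limits) can be sketched as follows. The function name and the example values are hypothetical, and the use of the sample SD (n − 1 denominator) is an assumption, since the text does not state which form was used.

```python
import statistics


def bland_altman(s1, s2):
    """Compute Bland-Altman plot coordinates and agreement limits.

    s1 and s2 are paired estimates from two methods (e.g., percent cover).
    """
    means = [(a + b) / 2 for a, b in zip(s1, s2)]  # x coordinates
    diffs = [a - b for a, b in zip(s1, s2)]        # y coordinates
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD (n - 1); an assumption
    return {
        "points": list(zip(means, diffs)),
        "bias": bias,
        "sd": sd,
        "limits_of_agreement": (bias - 1.96 * sd, bias + 1.96 * sd),
        "extreme_limits": (bias - 3.0 * sd, bias + 3.0 * sd),
    }


# Made-up paired percent-cover estimates (not data from the study).
image_based = [10, 20, 30, 40]
in_situ = [12, 18, 33, 37]
result = bland_altman(image_based, in_situ)
print(result["bias"], round(result["sd"], 3), result["limits_of_agreement"])
```

A bias near zero with narrow limits indicates that the two methods can be used interchangeably; points outside the extreme limits are candidates for outliers.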

3. Results and Discussion

3.1. Comparison of SamplePoint Estimation and In Situ Assessment

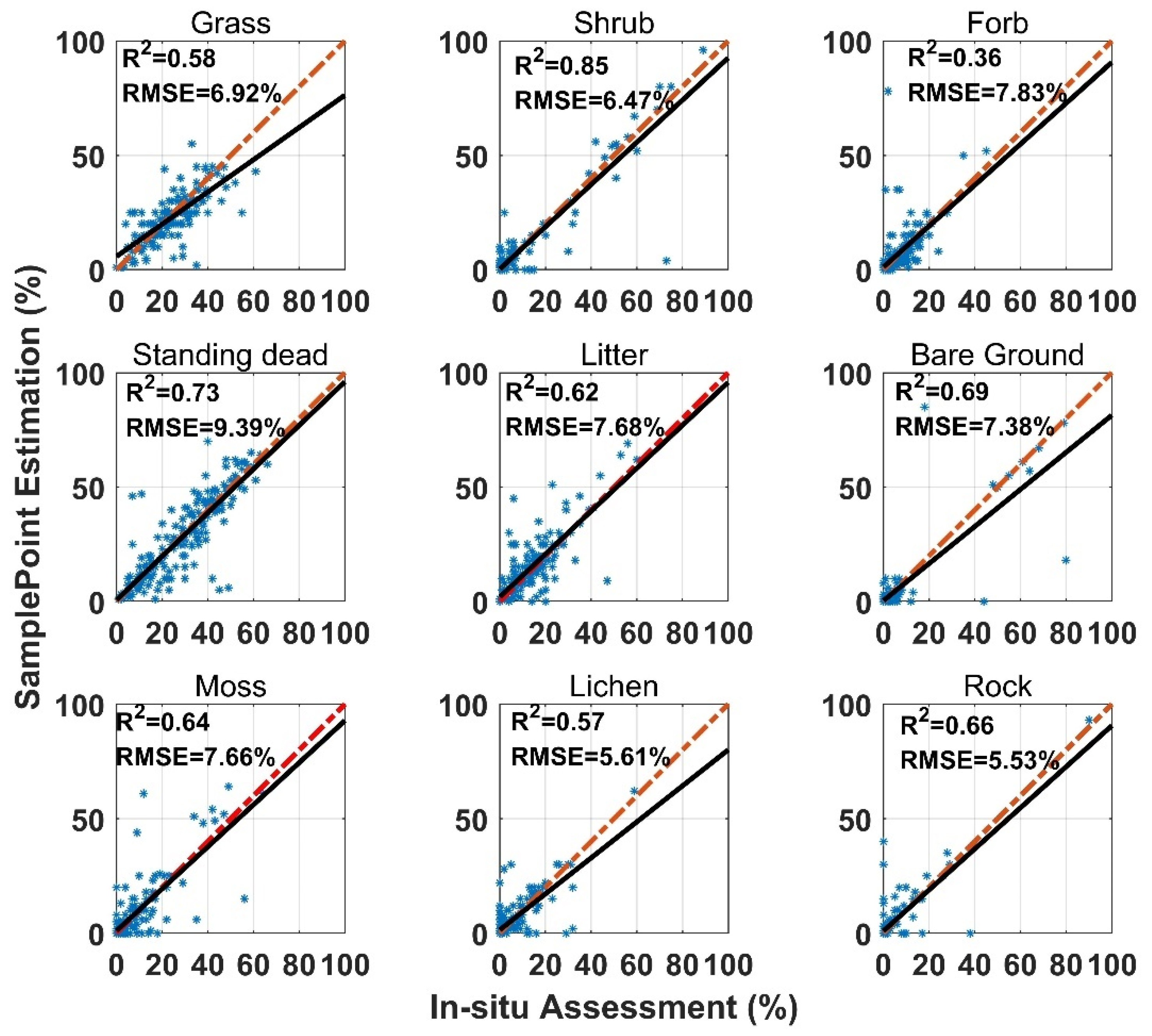

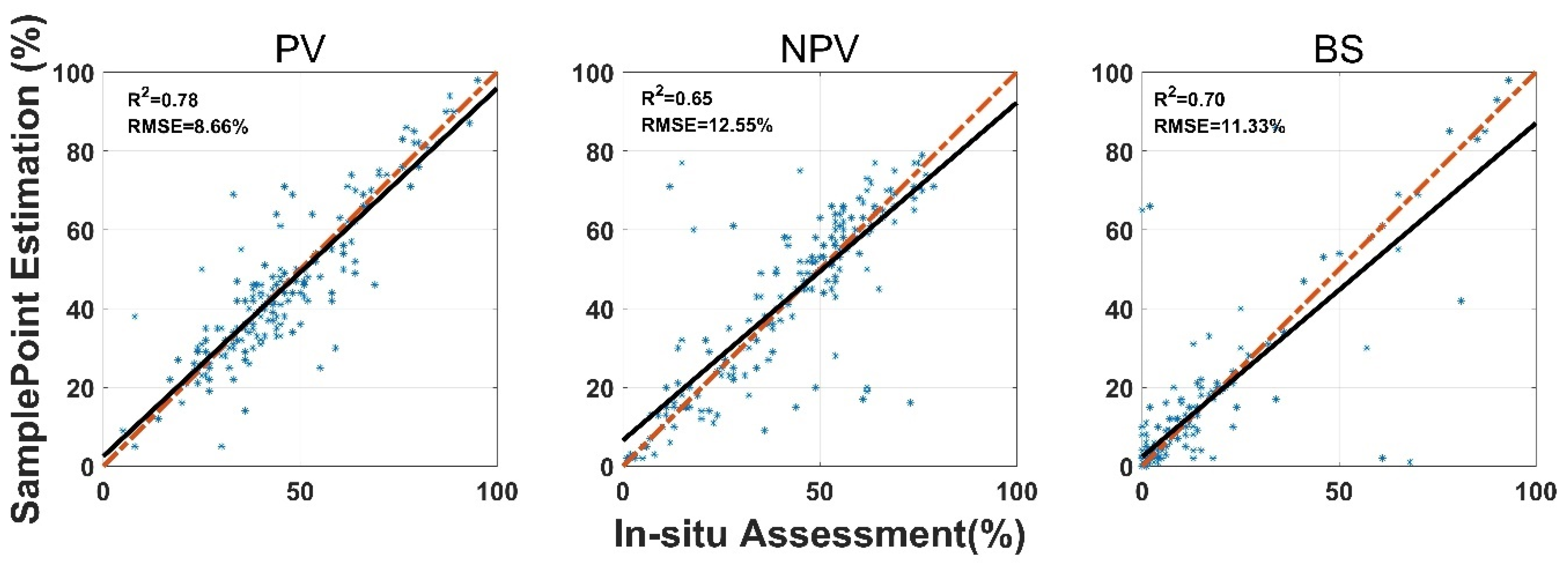

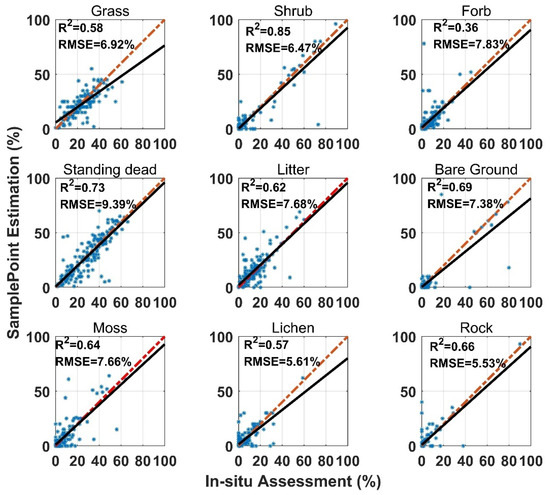

SamplePoint FVC estimation was consistent with the in situ assessment; however, the coefficient of determination (R2) varied among land surface-cover subcategories (Figure 3). Shrub and standing dead material had the highest R2 values (0.85 and 0.73), while forb and lichen had the lowest R2 values (0.36 and 0.57). Standing dead material had the highest RMSE (9.93%) (Figure 3). Shrub, standing dead material, and litter estimates from SamplePoint and in situ assessments were similar, as indicated by the closeness of their regression and identity lines (Figure 3). Grass, bare ground, and lichen tended to be overestimated by SamplePoint when the fractional cover was larger than 20% (Figure 3). For the aggregated categories, PV had the highest R2 (0.78), while the R2 values for NPV and BS were 0.65 and 0.70, respectively (Figure 4).
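As a reminder of how the two agreement statistics reported here behave, the sketch below computes R2 as the squared Pearson correlation (which equals the R2 of a simple linear regression) and RMSE directly between the paired estimates. Whether the study computed RMSE between the paired estimates or from regression residuals is not stated, so that choice is an assumption, as are the function names and example values.

```python
import math


def r_squared(x, y):
    """Squared Pearson correlation, equal to R2 of a simple linear fit."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy ** 2 / (sxx * syy)


def rmse(x, y):
    """Root-mean-square error between paired estimates."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


# Made-up values: the second method reads a constant 2% higher.
in_situ = [10, 20, 30, 40]
estimate = [12, 22, 32, 42]
print(r_squared(in_situ, estimate), rmse(in_situ, estimate))
```

The example shows why both statistics are reported together: a constant offset leaves R2 at 1.0 while the RMSE is 2.0, so a high R2 alone does not rule out systematic over- or underestimation.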

Figure 3.

Comparison of in situ assessment and SamplePoint estimation for nine FVC categories (red dashed line is the 1:1 relationship; dark solid line is the linear regression). The regression significance level (p-value) is 0.01.

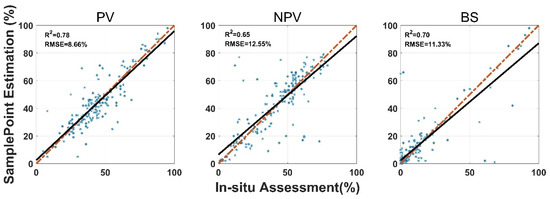

Figure 4.

Comparison of in situ assessment and SamplePoint estimation for PV, NPV, and BS fraction cover (red dashed line is the 1:1 relationship; dark solid line is the linear regression). The regression significance level (p-value) is 0.01.

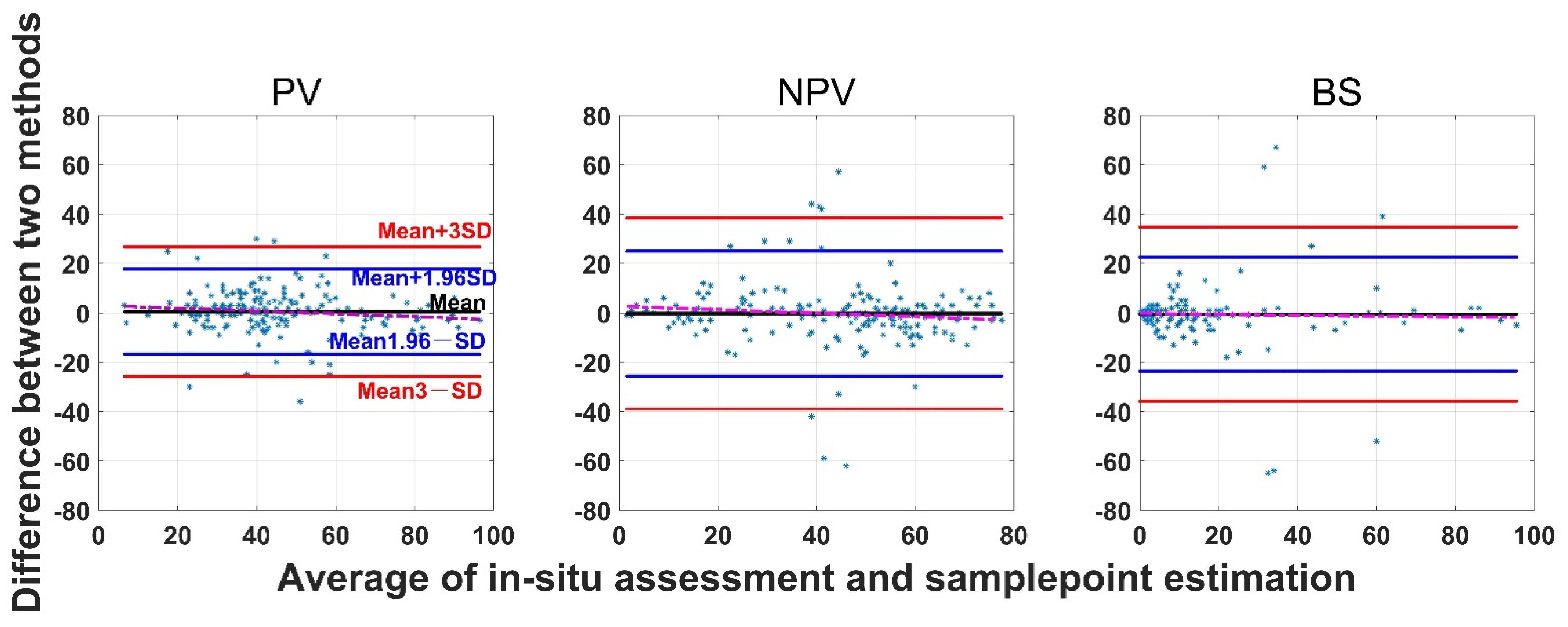

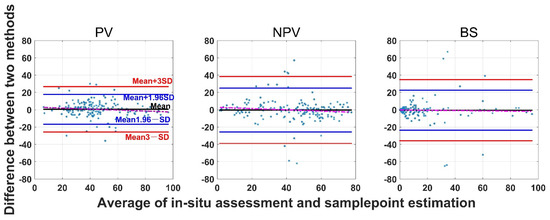

The SamplePoint and in situ estimates of PV are close (Figure 4). Compared with the in situ assessment, BS from SamplePoint tends to be overestimated above 25% fractional cover (Figure 4). Similarly, NPV from SamplePoint is overestimated above 55% fractional cover and underestimated below it (Figure 4). Most of the differences between the SamplePoint assessment and the in situ estimation of PV are within the ±3σ range, with several exceptions very close to the ±3σ threshold (Figure 5). This indicates that the SamplePoint and in situ methods are very similar. For NPV and BS, almost all the differences are within the ±3σ range, with only a few outliers falling outside it (Figure 5 and Table 1). These results are comparable to those reported in Booth et al. [47]. Since SamplePoint relies on a discrete classification of selected points (a 10 × 10 grid in this study) rather than on classification of the whole image, there may be considerable bias for images of complex scenes. Like in situ assessment, which can be subjective, visual interpretation using SamplePoint software depends on the investigator's experience.

Figure 5.

Bland-Altman plot of the difference between SamplePoint estimation and in situ assessment for PV, NPV, and BS fraction covers. Refer to the left panel for threshold values. The red dotted line is the regression between the two-method mean and the two-method difference.

Table 1.

Mean, standard deviation (SD), and mean ± 1.96 (or 3) SD of the differences between in situ assessment and four imagery methods (SamplePoint estimation, unsupervised classification, supervised classification, and OBIA).

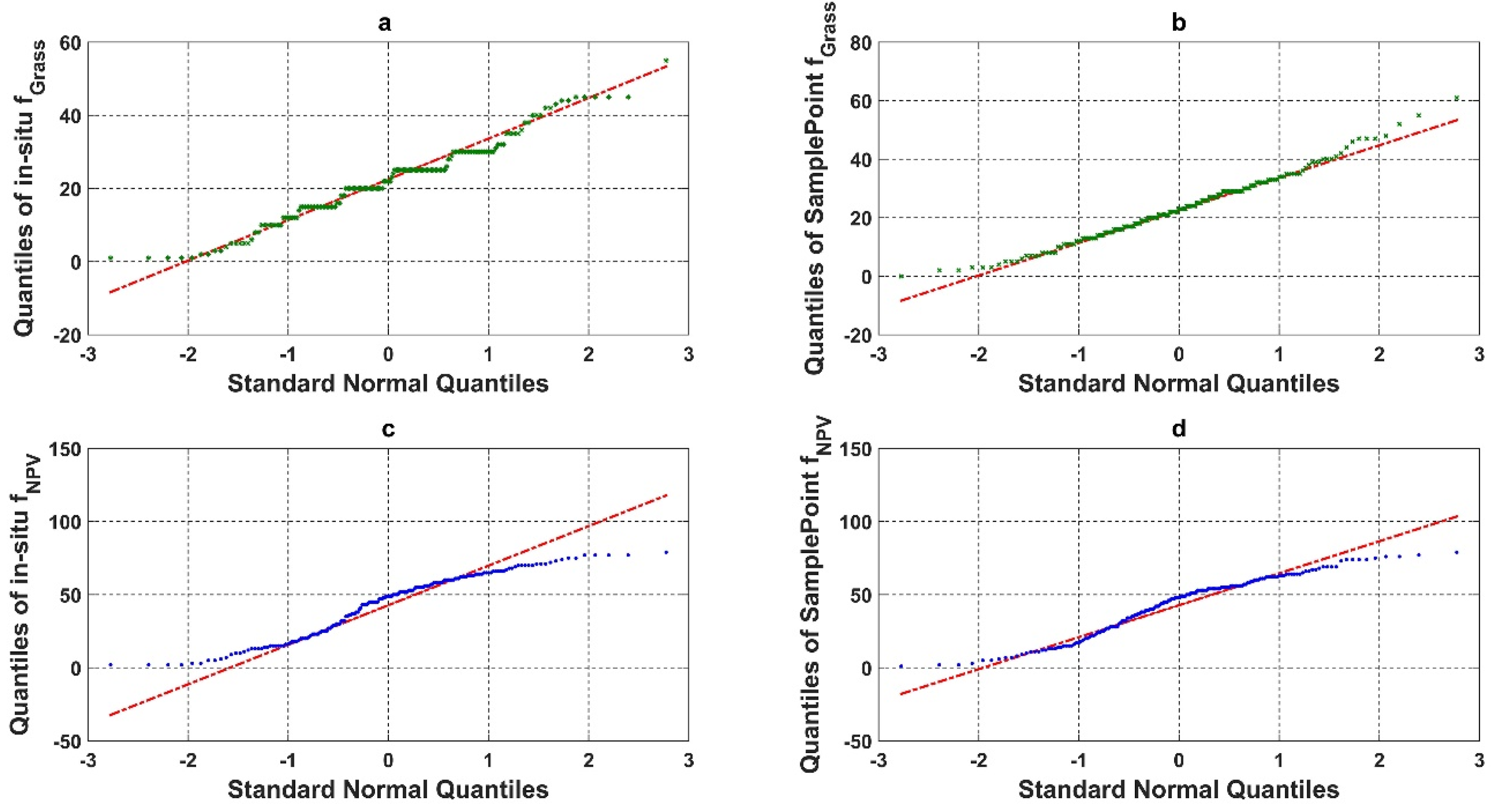

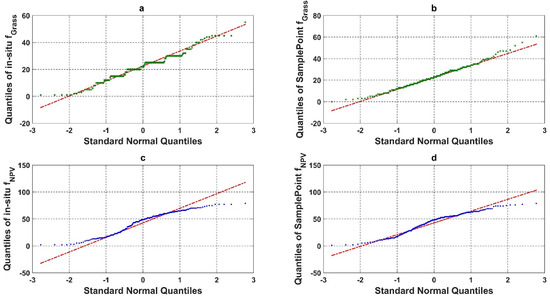

We compared the quantile–quantile plots for the in situ assessment and SamplePoint classification of grass and NPV fractional cover (Figure 6). The in situ assessment of green grass cover based on the nine FVC categories had a clear clustering pattern (step curves) (Figure 6a). The piecewise polyline indicated that the in situ estimates of fractional cover fell into discrete categories. This was caused by the in situ protocol (described in Section 2.2), in which fractional cover was estimated to the nearest 5% for values ranging from 10 to 90% and to the nearest 1% for values less than 10% and greater than 90% [39,40]. SamplePoint results resembled a normal distribution with a slight right skew (Figure 6b). This indicated that SamplePoint can achieve a near-continuous estimate of detailed ground fractional cover, even when the inputs are discrete points (a 10 × 10 grid in this study).
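The step pattern follows directly from the recording rule. A minimal sketch, assuming half values round up (the text does not say how ties were handled) and using a hypothetical function name and made-up cover values:

```python
import math


def round_field_cover(cover):
    """Round a percent cover the way the field protocol records it.

    Nearest 5% between 10 and 90%, nearest 1% otherwise.
    Half values round up, which is an assumption.
    """
    if 10 <= cover <= 90:
        return 5 * math.floor(cover / 5 + 0.5)
    return math.floor(cover + 0.5)


# Made-up "true" covers and the values that would be recorded.
true_covers = [3.2, 11.0, 13.0, 47.4, 88.0, 93.6]
recorded = [round_field_cover(c) for c in true_covers]
print(recorded)

# Every cover between 10 and 90% collapses onto one of 17 levels.
levels = {round_field_cover(v / 10) for v in range(100, 901)}
print(len(levels))
```

Because all covers between 10 and 90% map onto only 17 recorded values, the sample quantiles form plateaus, which appear as steps in the quantile-quantile plot.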

Figure 6.

Quantile-quantile plot (qqplot) of (a) in situ assessment of Grass fractional cover (%), (b) SamplePoint classification of Grass fractional cover (%), (c) in situ assessment of NPV fractional cover (%), and (d) SamplePoint classification of NPV fractional cover (%).

The in situ assessment of NPV cover based on the three-category schema (PV, NPV, and BS) showed no categorical trend but was underdispersed, with negative excess kurtosis (Figure 6c). In comparison, the qqplot from SamplePoint showed a slightly S-shaped curve, suggesting that it approaches a normal distribution (Figure 6d).

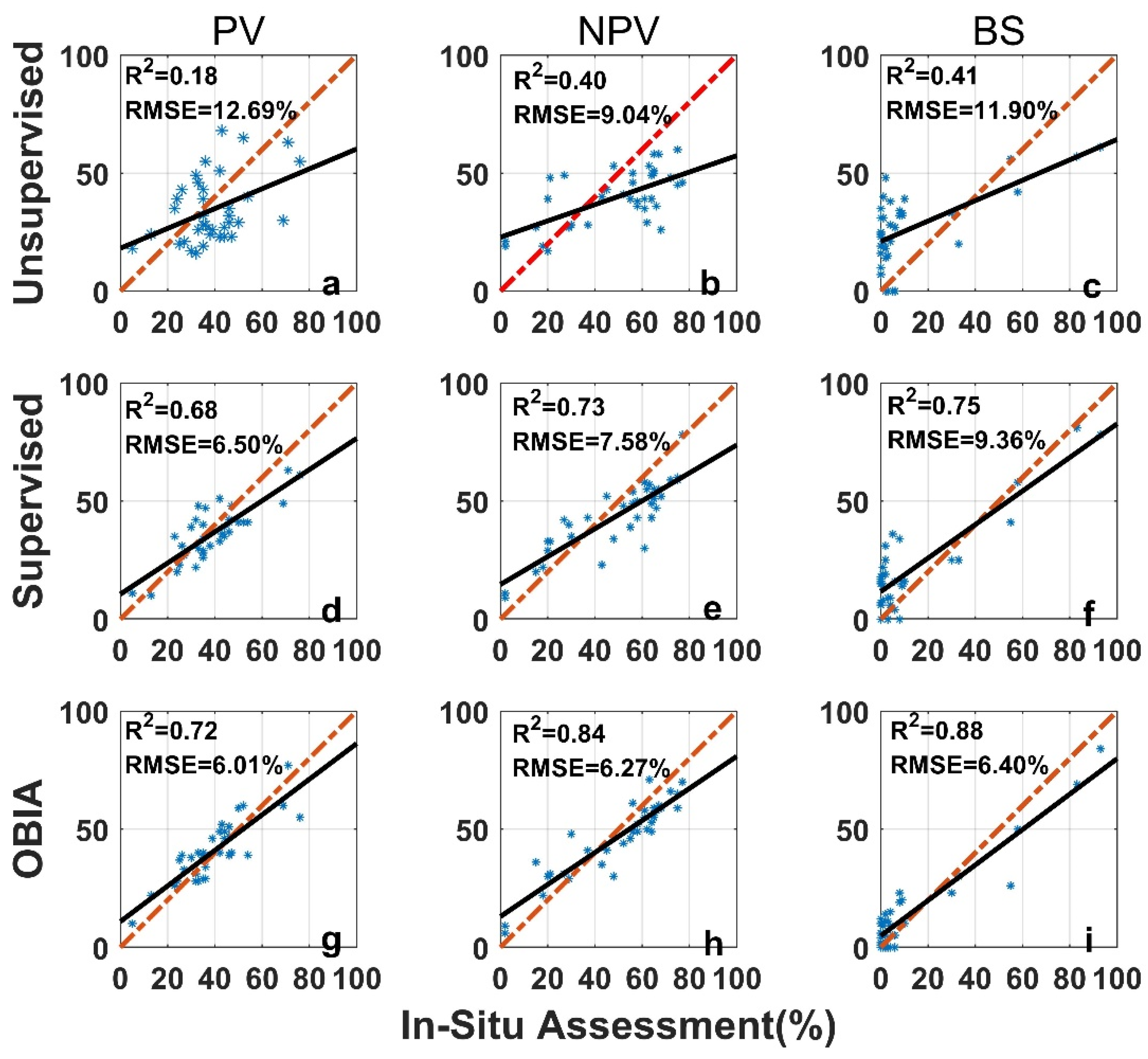

3.2. Comparison of Image Analysis Methods and In Situ Assessments

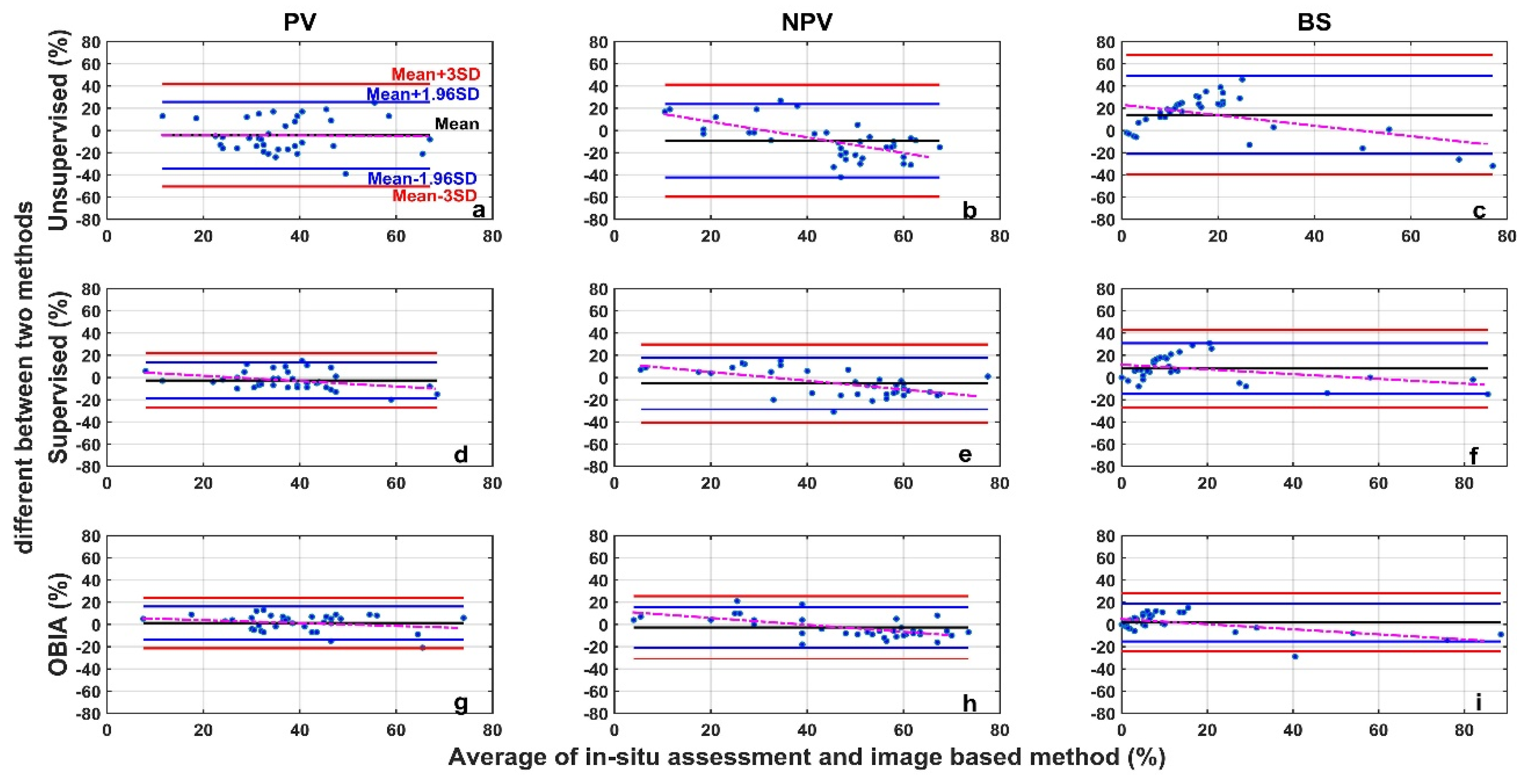

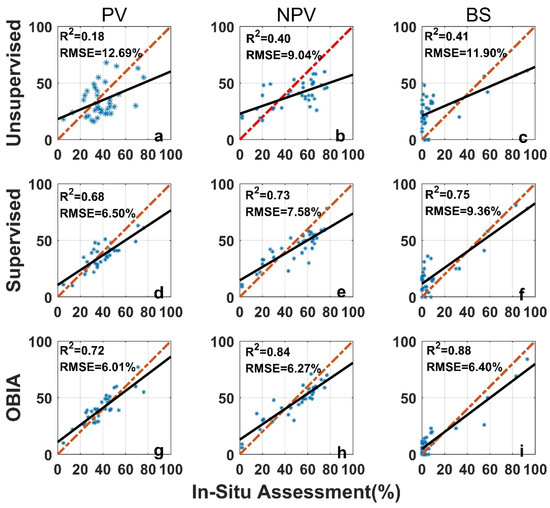

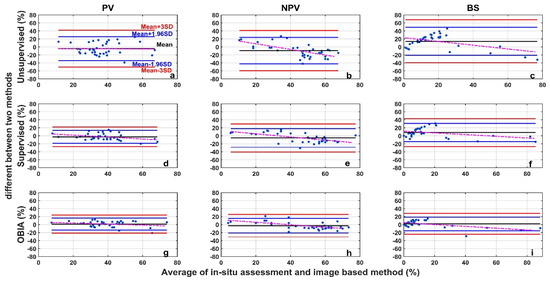

The image analysis methods used in this study (unsupervised classification, supervised classification, and OBIA) differ in how closely they agree with the in situ assessment (Figure 7). For all three categories (PV, NPV, and BS), the unsupervised image classification has the lowest R2 (all below 0.5) and the largest RMSE (Figure 7). The OBIA has the highest R2 (all above 0.7) and a relatively small RMSE (Figure 7). The performance of the supervised image classification is intermediate. For the 36 images tested, the differences between image analysis methods and in situ estimates are within the ±3σ range (Figure 8); however, the width of this range varies among methods (Table 1 and Figure 8). We found larger SDs (>15%) for the unsupervised classification (Table 1). Hence, the ranges of ±1.96σ and ±3σ are smallest for the OBIA, moderate for the supervised method, and largest for the unsupervised method (Figure 8).

Figure 7.

Comparison of in situ assessment and three image analysis methods (unsupervised (a–c), supervised (d–f), and OBIA (g–i)) for PV (a,d,g), NPV (b,e,h), and BS (c,f,i) fraction covers (the red dashed line is the 1:1 relationship and the dark solid line is the linear regression). The regression significance level (p-value) is 0.01.

Figure 8.

Bland-Altman plot of the difference between image analysis methods (unsupervised (a–c), supervised (d–f), and OBIA (g–i)) and in situ assessment for PV (a,d,g), NPV (b,e,h), and BS (c,f,i) fraction covers. Refer to the top-left panel for threshold values. The red dotted line is the regression between the two-method mean and the two-method difference.

Mean differences for both PV and NPV were negative (Table 1) when we performed supervised and unsupervised classification analyses, indicating that these two methods underestimate PV and NPV, compared with the in situ assessment. In the OBIA, PV and BS are overestimated (mean differences > 0) whereas NPV is underestimated (Table 1).

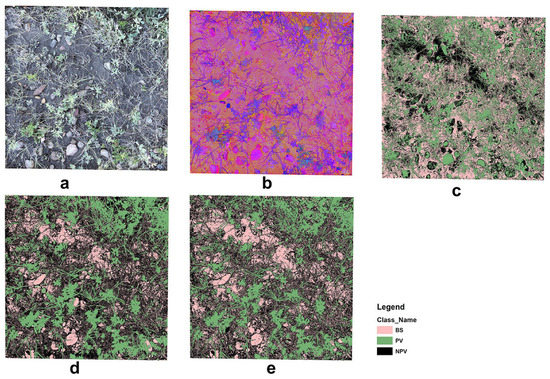

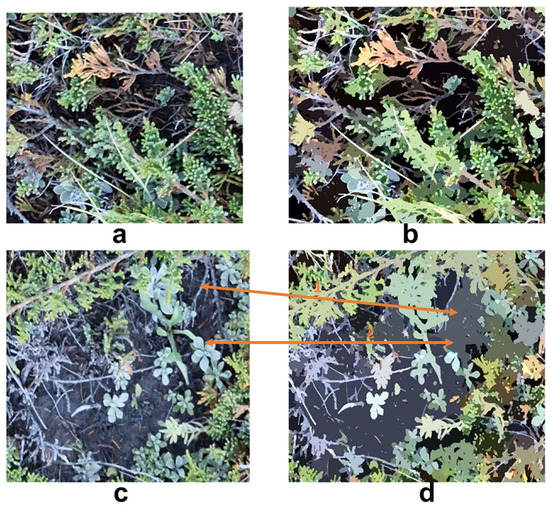

The unsupervised classification misclassified rock, moss/lichen, and high reflectance regions in the background leading to biased estimations of PV, NPV, and BS fractional cover (Figure 9). The supervised classification was an improvement on the unsupervised method; however, the OBIA identified greater detail in the three fractional covers. Our findings are similar to those reported by Laliberte, Rango, Herrick, Fredrickson and Burkett [27], in which OBIA was also used to investigate the fractional cover of North American grassland. They suggested that shadow is the greatest problem in scene decomposition when applying OBIA to high-resolution, close-range digital photographs. This concern was also raised in Song, Mu, Yan and Huang [20], and was partially resolved by using a shadow-resistant algorithm. We did not include a shadow-resistant method to estimate the fractional cover in our study. However, we acknowledge that it is a problem for close-range photo processing, especially in heterogeneous grasslands with complex vertical structures and high biomass volumes. Shadow not only affects fractional cover estimation but also affects visual interpretation. This partially explains the outliers in our SamplePoint estimation (Figure 5), as our original photos, despite being umbrella-shaded, still had shadow effects.

Figure 9.

Example of image analyses: (a) original RGB image, (b) HIS image, (c) unsupervised image classification, (d) supervised image classification, and (e) OBIA.

3.3. Differences between In Situ Assessment, Visual Classification with SamplePoint Software, and Image Classification Methods

We performed a paired-samples Wilcoxon test between the in situ assessment and each of the four image-based methods for PV, NPV, and BS fractional covers. Estimates from SamplePoint and from in situ sampling were not significantly different (threshold p = 0.05) for any of the three fractional covers, although the BS result was only marginally non-significant (Table 2). NPV and BS estimated from the unsupervised classification, and BS estimated from the supervised classification, were significantly different from the in situ assessment (Table 2). The OBIA assessment was not significantly different from the in situ assessment; however, the p-values for NPV and BS were close to the threshold (Table 2). Thus, SamplePoint assessment was the most consistent with in situ assessment, in contrast to unsupervised classification. Although the reliability of the OBIA would make it a suitable alternative to in situ methods, the OBIA requires sophisticated image processing and operator training before it can be effective [19,27].
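To make the test concrete, the sketch below implements a paired Wilcoxon signed-rank test with a two-sided p-value from the normal approximation. It is an illustrative implementation only: it drops zero differences, uses average ranks for ties, and applies neither a tie correction nor a continuity correction, so its p-values can differ from those of a statistics package, especially for small samples. The software used in the study is not stated, and the function name and example values are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Return (W, two-sided p) for paired samples x and y.

    Normal approximation without tie or continuity correction.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    # Rank absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return w, p


# Made-up paired covers in which one method always reads higher.
method_a = [12, 25, 33, 47, 55, 68, 79, 90]
method_b = [10, 20, 27, 40, 47, 59, 69, 79]
w, p = wilcoxon_signed_rank(method_a, method_b)
print(w, round(p, 4), p < 0.05)
```

A p-value below the 0.05 threshold, as in this made-up example, would indicate that the two methods differ systematically; a value above it means no significant difference was detected, not that the methods are proven equivalent.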

Table 2.

p-value of the paired-samples Wilcoxon test between in situ assessment and four other approaches (SamplePoint estimation, unsupervised classification, supervised classification, and object-based image analysis (OBIA)).

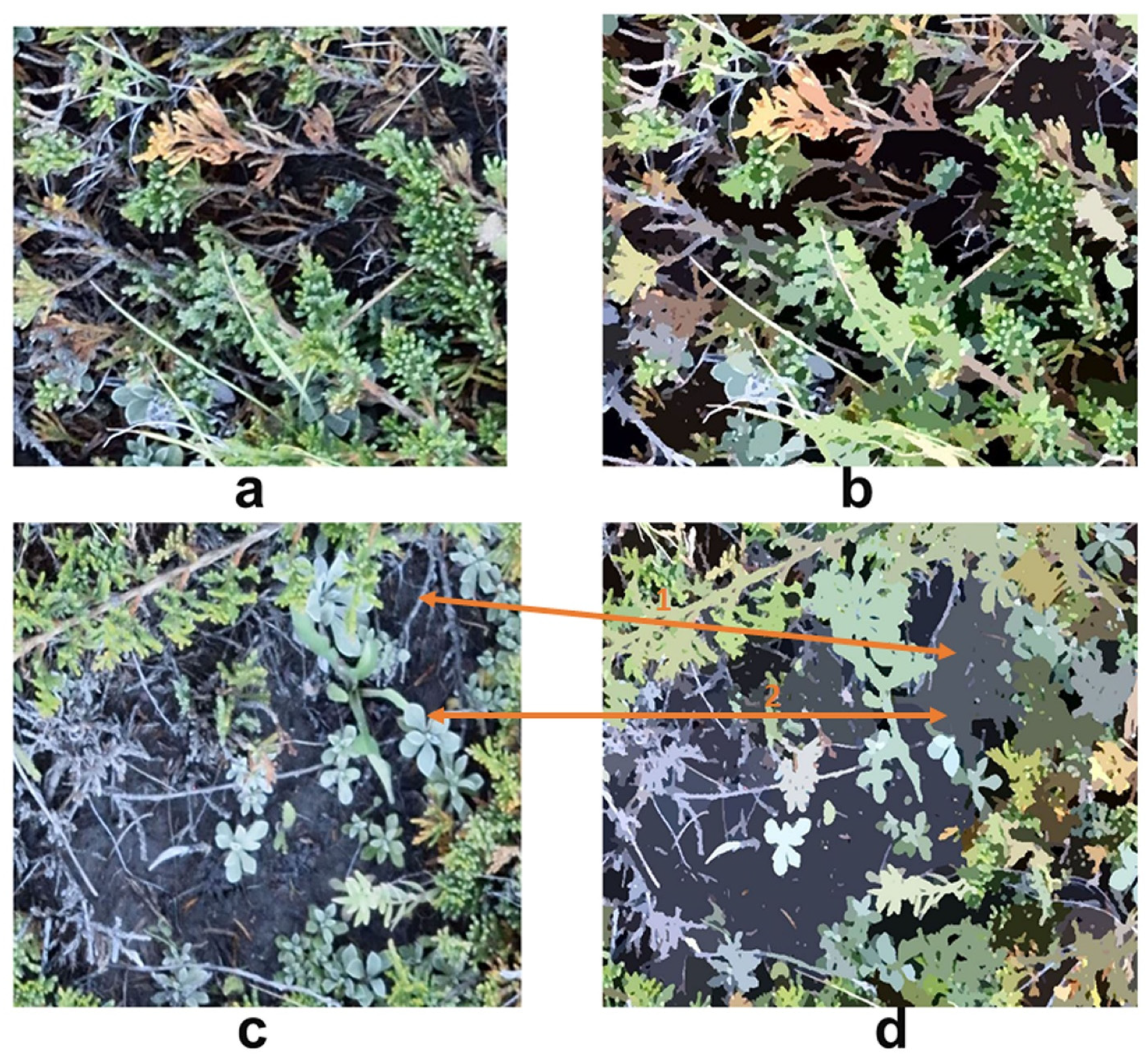

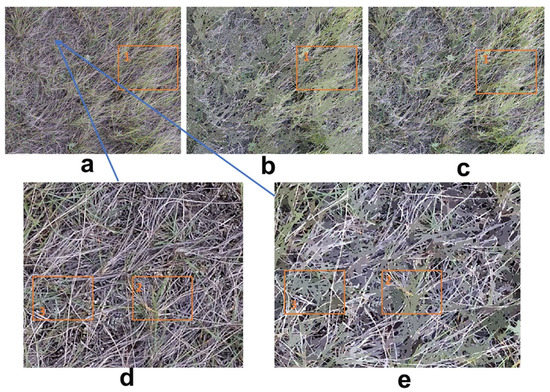

We found that spatial scale and merging level had varying effects on OBIA processing within a single image. The vegetation-dominated part of the image was accurately assessed for green vegetation, dead and senescent materials, and background (Figure 10a,b). This helps explain why the OBIA had greater accuracy than the supervised and unsupervised classifications: it is based on relatively homogeneous segmented objects rather than pixels. In contrast, training samples selected in the supervised classification were largely based on polygons containing numerous mixed pixels [48]. However, in bare-soil dominated images, shrubs were incorrectly classified as background (Figure 10c,d, double arrow 1), as were portions of green leaf (Figure 10c,d, double arrow 2). Because shrub branches, green leaf, dead materials, and bare soil (as well as moss, lichen, and rocks) all had different morphologies, a single global setting of scale and merging level was unable to segment a heterogeneous scene [49,50].

Figure 10.

Comparison between the original image and OBIA region means: (a) original image (vegetation (grass and shrub) dominated), (b) OBIA region mean of (a), (c) original image (bare-soil dominated), and (d) OBIA region mean of (c). (a,b) are subsets of site EC2, plot E3 (shrub (Juniperus) dominated). The scale level was 50 and the merging level was 10.

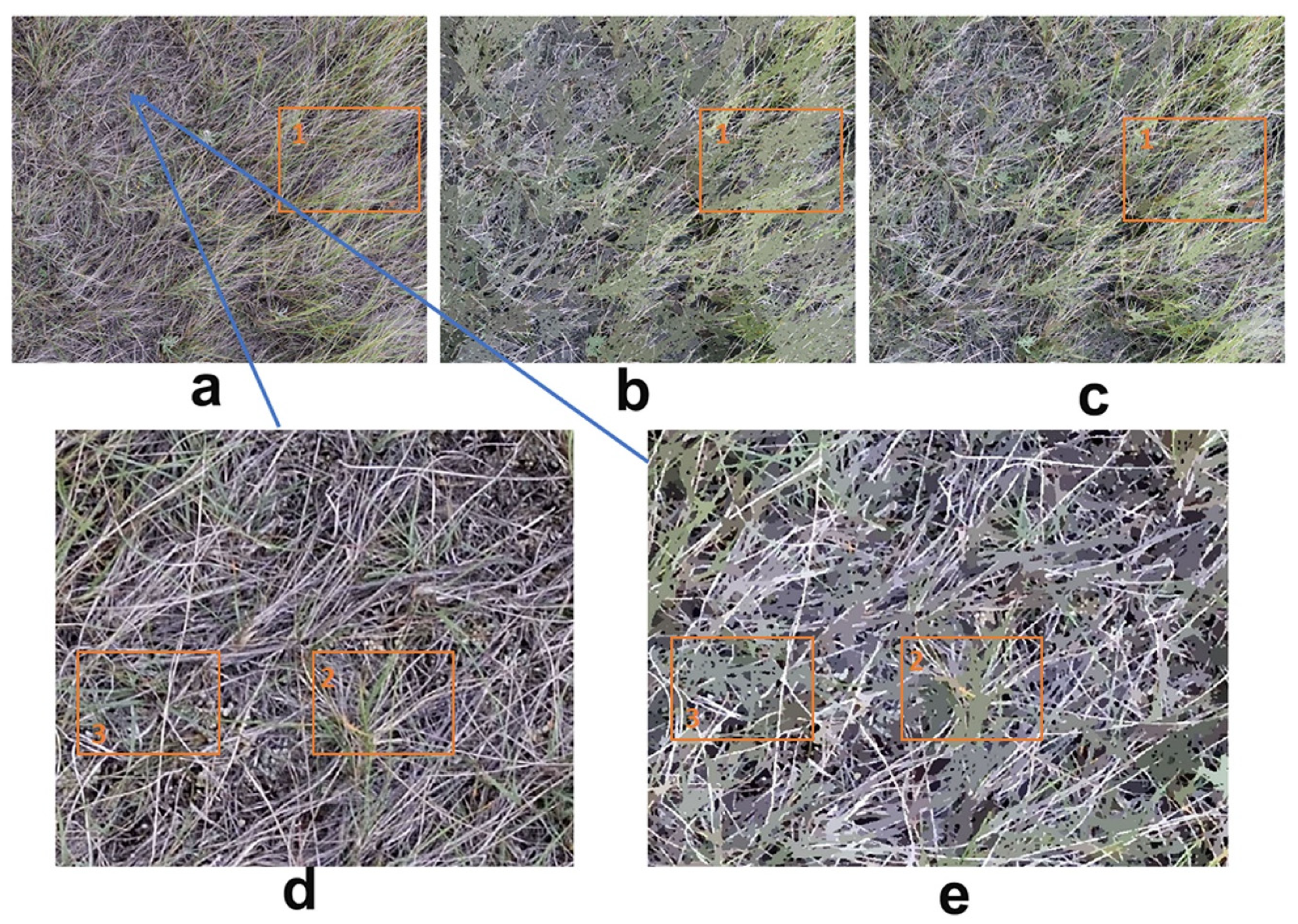

Different images with diverse species compositions required distinct scale and merging level settings when using OBIA (Figure 10 and Figure 11). Since juniper and needle-and-thread grass have different morphologies and community structure, the scale and merging level were 50 and 10 for the site EC2, plot E3, and 40 and 5 for the site UG2, plot S5. The latter image had greater fragmentation with layers of green grass (top), senescent grass (middle), and dead material (bottom). However, the scene was relatively simple in the former image except for the misclassification of shrub branches.

Figure 11.

Illustration of spatial scale and merging-level effects on OBIA classification: (a) original image, (b) OBIA region mean (scale 60, merging level 10), (c) OBIA region mean (scale 40, merging level 5), (d) subset of (a), and (e) subset of (b). (a) was from site UG2, plot S5 (needle-and-thread-grass (Hesperostipa comata) dominated).

We tested the effect of spatial scale and merging level on OBIA classification (Figure 11). Larger scale and merging levels (60 and 10) caused misclassification of green grass (Figure 11b), while smaller scale and merging levels (40 and 5) gave better results (orange rectangle 1 in Figure 11a–c). A zoomed-in view (Figure 11d,e, orange rectangles 2 and 3) showed the pseudo-enlargement of green grass objects. This indicated that selecting segmentation and merging parameters appropriate to the scene composition was critical for accurate assessment using OBIA. As mentioned above, we used the preview window in the Feature Extraction Module of ENVI 5.5 for the interactive adjustment of these parameters. This is time-consuming and requires knowledge of OBIA.

As is apparent in these comparisons, the SamplePoint-processed results were highly related to in situ estimation, even with nine different categories (Figure 3). The unsupervised image classification method was unable to discriminate PV, NPV, and BS with the desired accuracy, while supervised image classification outperformed the unsupervised method. OBIA had the highest accuracy among the three image classification methods compared with the in situ estimation.

4. Conclusions

In this study, a mobile camera stand equipped with a NIKON D5500 camera was used to photograph vegetation plots in a typical northern mixed grassland, Grasslands National Park, Canada. This grassland type has a large amount of dead and senescent vegetation material. The imagery was processed by SamplePoint, unsupervised, supervised, and object-based image classification approaches to derive the vegetation fractional covers, which were compared with in situ visual assessment.

Our results demonstrated that image processing methods for mixed grassland vegetation communities can accurately determine the fractional vegetation cover in sample plots, with results comparable to in situ measurement. We found that SamplePoint software estimates corresponded closely to in situ assessments, accurately distinguishing and quantifying PV, NPV, and BS fractional covers as well as the detailed vegetation community categories. The object-based image analysis method performed better than the unsupervised and supervised classification methods and produced reasonable coefficients of determination (>0.7) for PV, NPV, and BS relative to in situ assessment. The OBIA method nevertheless required sophisticated processing knowledge. Meanwhile, the unsupervised classification method lacked accuracy in the discrimination of fractional cover in mixed grassland plots. These results suggest that a SamplePoint approach based purely on imagery is comparable with in situ estimation. Further research into image-based estimation approaches could resolve ongoing issues with shadow and varying image-scene compositions.

Author Contributions

Study conceptualization, manuscript review and editing, X.G.; established the methods, conducted fieldwork, prepared manuscript drafts and revisions, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Canadian Natural Sciences and Engineering Research Council [No. RGPIN-2016-03960] (X.G.) and the China Scholarship Council scholarship (X.Y.).

Data Availability Statement

Data available on request due to restrictions.

Acknowledgments

The authors thank Parks Canada for assisting us with the fieldwork. We also thank Tengfei Cui and Thuy Chu from the University of Saskatchewan, and Yunpei Lu, for their contributions to the field data collection. We are grateful to D. Terrance Booth from the USDA for valuable suggestions regarding data analysis and the design of the camera frame.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Purevdorj, T.S.; Tateishi, R.; Ishiyama, T.; Honda, Y. Relationships between percent vegetation cover and vegetation indices. Int. J. Remote Sens. 1998, 19, 3519–3535.

- Jiapaer, G.; Chen, X.; Bao, A. A comparison of methods for estimating fractional vegetation cover in arid regions. Agric. For. Meteorol. 2011, 151, 1698–1710.

- Zeng, X.; Dickinson, R.E.; Walker, A.; Shaikh, M.; DeFries, R.S.; Qi, J. Derivation and evaluation of global 1-km fractional vegetation cover data for land modeling. J. Appl. Meteorol. 2000, 39, 826–839.

- Yu, X.; Guo, X.; Wu, Z. Land Surface Temperature retrieval from Landsat 8 TIRS—Comparison between radiative transfer equation-based method, split window algorithm and single channel method. Remote Sens. 2014, 6, 9829–9852.

- Asner, G.P.; Heidebrecht, K.B. Spectral unmixing of vegetation, soil and dry carbon cover in arid regions: Comparing multispectral and hyperspectral observations. Int. J. Remote Sens. 2002, 23, 3939–3958.

- Yu, X.; Guo, Q.; Chen, Q.; Guo, X. Discrimination of senescent vegetation cover from landsat-8 OLI imagery by spectral unmixing in the northern mixed grasslands. Can. J. Remote Sens. 2019, 45, 1–17.

- Skidmore, A.K.; Ferwerda, J.G.; Mutanga, O.; Van Wieren, S.E.; Peel, M.; Grant, R.C.; Prins, H.H.; Balcik, F.B.; Venus, V. Forage quality of savannas—Simultaneously mapping foliar protein and polyphenols for trees and grass using hyperspectral imagery. Remote Sens. Environ. 2010, 114, 64–72.

- Asner, G.P.; Heidebrecht, K.B. Desertification alters regional ecosystem–climate interactions. Glob. Chang. Biol. 2005, 11, 182–194.

- Lucas, R.; Blonda, P.; Bunting, P.; Jones, G.; Inglada, J.; Arias, M.; Kosmidou, V.; Petrou, Z.I.; Manakos, I.; Adamo, M. The earth observation data for habitat monitoring (EODHaM) system. Int. J. Appl. Earth Obs. Geoinform. 2015, 37, 17–28.

- Hill, M.J. Vegetation index suites as indicators of vegetation state in grassland and savanna: An analysis with simulated SENTINEL 2 data for a North American transect. Remote Sens. Environ. 2013, 137, 94–111.

- Daubenmire, R. Ecology of fire in grasslands. In Advances in Ecological Research; Elsevier: Amsterdam, The Netherlands, 1968; Volume 5, pp. 209–266.

- Floyd, D.A.; Anderson, J.E. A comparison of three methods for estimating plant cover. J. Ecol. 1987, 75, 221–228.

- Hanley, T.A. A comparison of the line-interception and quadrat estimation methods of determining shrub canopy coverage. J. Range Manag. 1978, 60–62.

- Jonasson, S. Evaluation of the point intercept method for the estimation of plant biomass. Oikos 1988, 52, 101–106.

- Jia, K.; Liang, S.; Gu, X.; Baret, F.; Wei, X.; Wang, X.; Yao, Y.; Yang, L.; Li, Y. Fractional vegetation cover estimation algorithm for Chinese GF-1 wide field view data. Remote Sens. Environ. 2016, 177, 184–191.

- Hill, M.J.; Zhou, Q.; Sun, Q.; Schaaf, C.B.; Palace, M. Relationships between vegetation indices, fractional cover retrievals and the structure and composition of Brazilian Cerrado natural vegetation. Int. J. Remote Sens. 2017, 38, 874–905.

- Guerschman, J.P.; Hill, M.J.; Renzullo, L.J.; Barrett, D.J.; Marks, A.S.; Botha, E.J. Estimating fractional cover of photosynthetic vegetation, non-photosynthetic vegetation and bare soil in the Australian tropical savanna region upscaling the EO-1 Hyperion and MODIS sensors. Remote Sens. Environ. 2009, 113, 928–945.

- Karl, J.W.; McCord, S.E.; Hadley, B.C. A comparison of cover calculation techniques for relating point-intercept vegetation sampling to remote sensing imagery. Ecol. Indic. 2017, 73, 156–165.

- Liu, N.; Treitz, P. Modelling high arctic percent vegetation cover using field digital images and high resolution satellite data. Int. J. Appl. Earth Obs. Geoinform. 2016, 52, 445–456.

- Song, W.; Mu, X.; Yan, G.; Huang, S. Extracting the green fractional vegetation cover from digital images using a shadow-resistant algorithm (SHAR-LABFVC). Remote Sens. 2015, 7, 10425.

- Mu, X.; Hu, R.; Zeng, Y.; McVicar, T.R.; Ren, H.; Song, W.; Wang, Y.; Casa, R.; Qi, J.; Xie, D.; et al. Estimating structural parameters of agricultural crops from ground-based multi-angular digital images with a fractional model of sun and shade components. Agric. For. Meteorol. 2017, 246, 162–177.

- Booth, D.T.; Cox, S.E.; Berryman, R.D. Point sampling digital imagery with ‘SamplePoint’. Environ. Monit. Assess. 2006, 123, 97–108.

- Patrignani, A.; Ochsner, T.E. Canopeo: A Powerful new tool for measuring fractional green canopy cover. Agron. J. 2015, 107, 2312–2320.

- Louhaichi, M.; Johnson, M.D.; Woerz, A.L.; Jasra, A.W.; Johnson, D.E. Digital charting technique for monitoring rangeland vegetation cover at local scale. Int. J. Agric. Biol. 2010, 12, 406–410.

- Liu, Y.; Mu, X.; Wang, H.; Yan, G. A novel method for extracting green fractional vegetation cover from digital images. J. Veg. Sci. 2012, 23, 406–418.

- Smith, A.M.; Hill, M.J.; Zhang, Y. Estimating ground cover in the mixed prairie grassland of Southern Alberta Using vegetation indices related to physiological function. Can. J. Remote Sens. 2015, 41, 51–66.

- Laliberte, A.S.; Rango, A.; Herrick, J.E.; Fredrickson, E.L.; Burkett, L. An object-based image analysis approach for determining fractional cover of senescent and green vegetation with digital plot photography. J. Arid Environ. 2007, 69, 1–14.

- Malenovský, Z.; Lucieer, A.; King, D.H.; Turnbull, J.D.; Robinson, S.A. Unmanned aircraft system advances health mapping of fragile polar vegetation. Methods Ecol. Evolut. 2017, 8, 1842–1857.

- Booth, D.T.; Cox, S.E. Image-based monitoring to measure ecological change in rangeland. Front. Ecol. Environ. 2008, 6, 185–190.

- Louhaichi, M.; Hassan, S.; Johnson, D.E. VegMeasure: Image processing software for grassland vegetation monitoring. In Advances in Remote Sensing and Geo Informatics Applications; Springer: Berlin/Heidelberg, Germany, 2019; pp. 229–230.

- Wang, B.; Jia, K.; Liang, S.; Xie, X.; Wei, X.; Zhao, X.; Yao, Y.; Zhang, X. Assessment of Sentinel-2 MSI spectral band reflectances for estimating fractional vegetation cover. Remote Sens. 2018, 10, 1927.

- Canty, M.J. Image Analysis, Classification and Change Detection in Remote Sensing: With Algorithms for ENVI/IDL and Python; CRC Press: Boca Raton, FL, USA, 2014.

- Shorthouse, J.D. Ecoregions of Canada’s prairie grasslands. Arthropods Can. Grassl. 2010, 1, 53–81.

- Environment-Canada. 1981–2010 Climate normals and averages. Can. Clim. Norm. 2015. Available online: https://climate.weather.gc.ca/climate_normals/index_e.html (accessed on 26 October 2021).

- Huang, J.; Ji, M.; Xie, Y.; Wang, S.; He, Y.; Ran, J. Global semi-arid climate change over last 60 years. Clim. Dyn. 2016, 46, 1131–1150.

- Fischer, R.; Turner, N.C. Plant productivity in the arid and semiarid zones. Ann. Rev. Plant Physiol. 1978, 29, 277–317.

- Booth, D.T.; Samuel, E.C.; Mounier, L.; Douglas, E.J. Technical note: Lightweight camera stand for close-to-earth remote sensing. J. Range Manag. 2004, 57, 675–678.

- He, Y.; Guo, X.; Wilmshurst, J.; Si, B.C. Studying mixed grassland ecosystems II: Optimum pixel size. Can. J. Remote Sens. 2006, 32, 108–115.

- Davidson, A.; Csillag, F. The influence of vegetation index and spatial resolution on a two-date remote sensing-derived relation to C4 species coverage. Remote Sens. Environ. 2001, 75, 138–151.

- Zhang, C.; Guo, X. Measuring biological heterogeneity in the northern mixed prairie: A remote sensing approach. Can. Geogr. 2007, 51, 462–474.

- Booth, D.T.; Cox, S.E.; Meikle, T.W.; Fitzgerald, C. The accuracy of ground-cover measurements. Rangel. Ecol. Manag. 2006, 59, 179–188.

- Yu, X.; Wu, Z.; Jiang, W.; Guo, X. Predicting daily photosynthetically active radiation from global solar radiation in the Contiguous United States. Energy Convers. Manag. 2015, 89, 71–82.

- Yu, X.; Guo, X. Hourly photosynthetically active radiation estimation in Midwestern United States from artificial neural networks and conventional regressions models. Int. J. Biometeorol. 2016, 60, 1247–1259.

- Kozak, M.; Wnuk, A. Including the Tukey mean-difference (Bland–Altman) plot in a statistics course. Teach. Stat. 2014, 36, 83–87.

- Wilcoxon, F. Individual comparisons by ranking methods. Biometr. Bull. 1945, 1, 80–83.

- Pratt, J.W. Remarks on zeros and ties in the wilcoxon signed rank procedures. J. Am. Stat. Assoc. 1959, 54, 655–667.

- Booth, D.T.; Cox, S.E.; Fifield, C.; Phillips, M.; Williamson, N. Image analysis compared with other methods for measuring ground cover. Arid Land Res. Manag. 2005, 19, 91–100.

- Feizizadeh, B.; Blaschke, T.; Tiede, D.; Moghaddam, M.H.R. Evaluating fuzzy operators of an object-based image analysis for detecting landslides and their changes. Geomorphology 2017, 293, 240–254.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Hay, G.; Castilla, G. Object-based image analysis: Strengths, weaknesses, opportunities and threats (SWOT). In Proceedings of the 1st International Conference OBIA, Salzburg University, Salzburg, Austria, 4–5 July 2006; pp. 4–5.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).