Use of Force Feedback Device in a Hybrid Brain-Computer Interface Based on SSVEP, EOG and Eye Tracking for Sorting Items

Abstract

1. Introduction

2. Materials and Methods

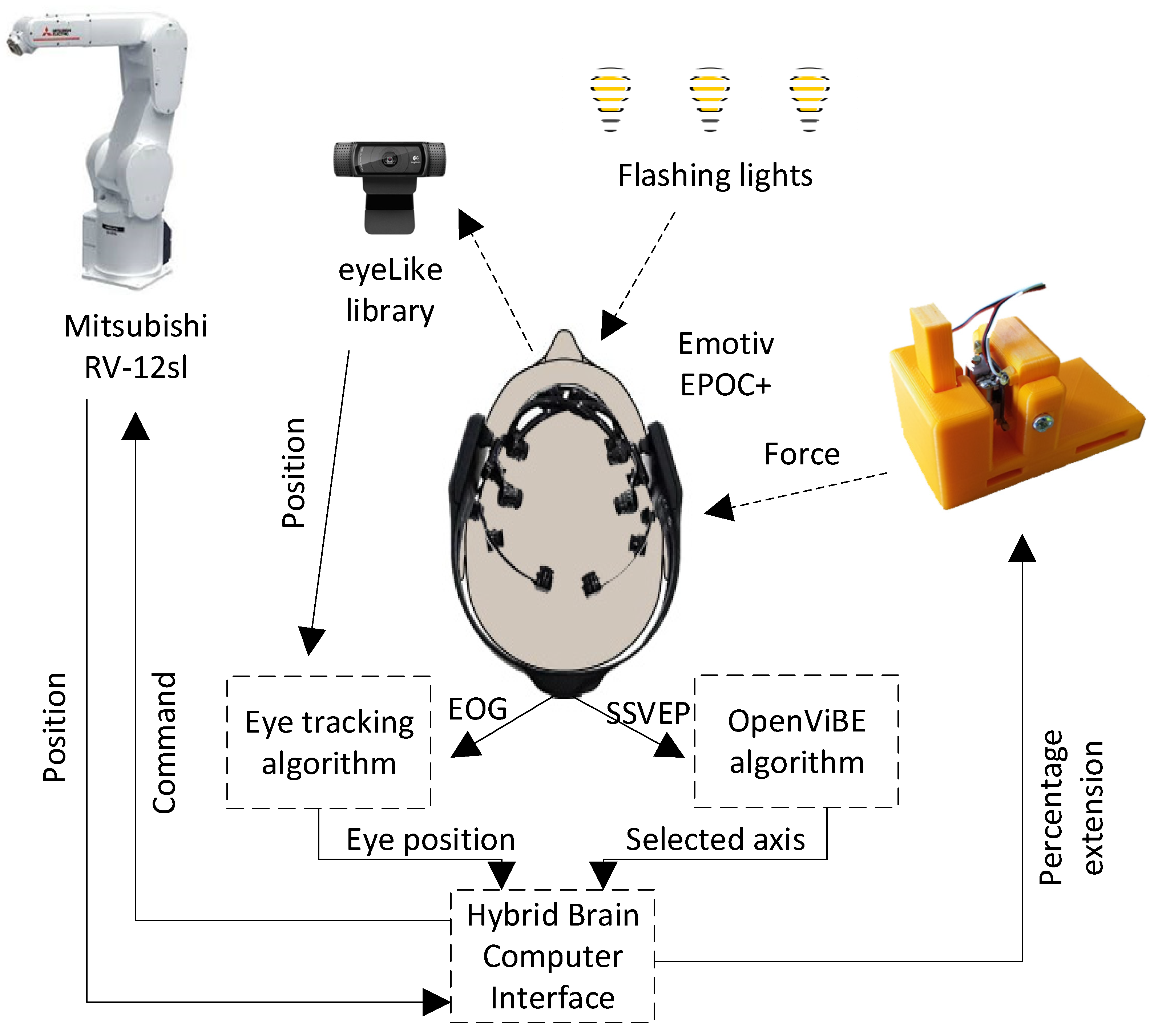

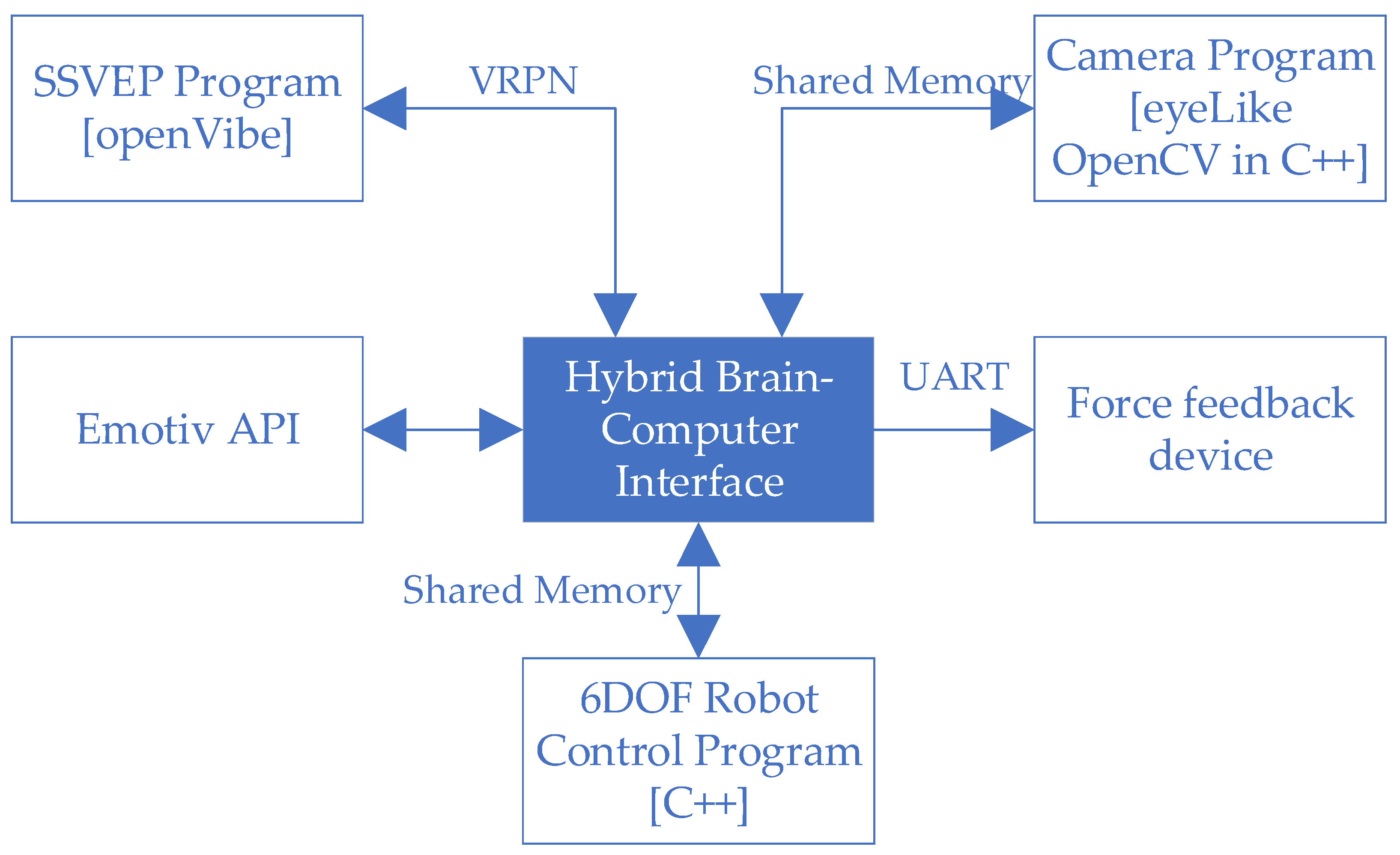

2.1. System Overview

2.2. EEG System

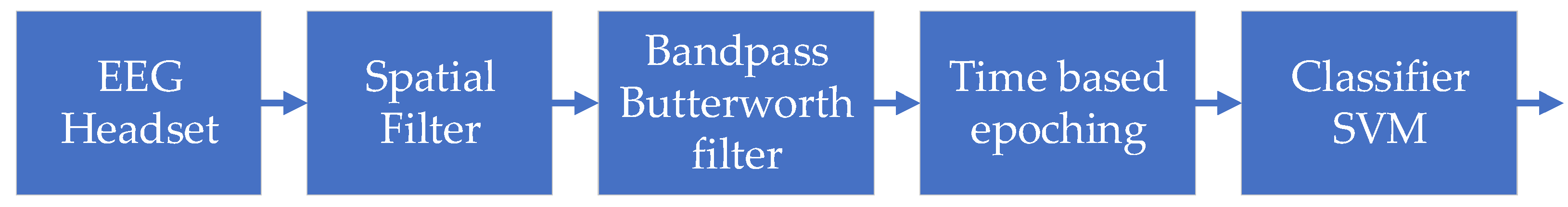

2.3. Steady-State Visual Evoked Potential (SSVEP)

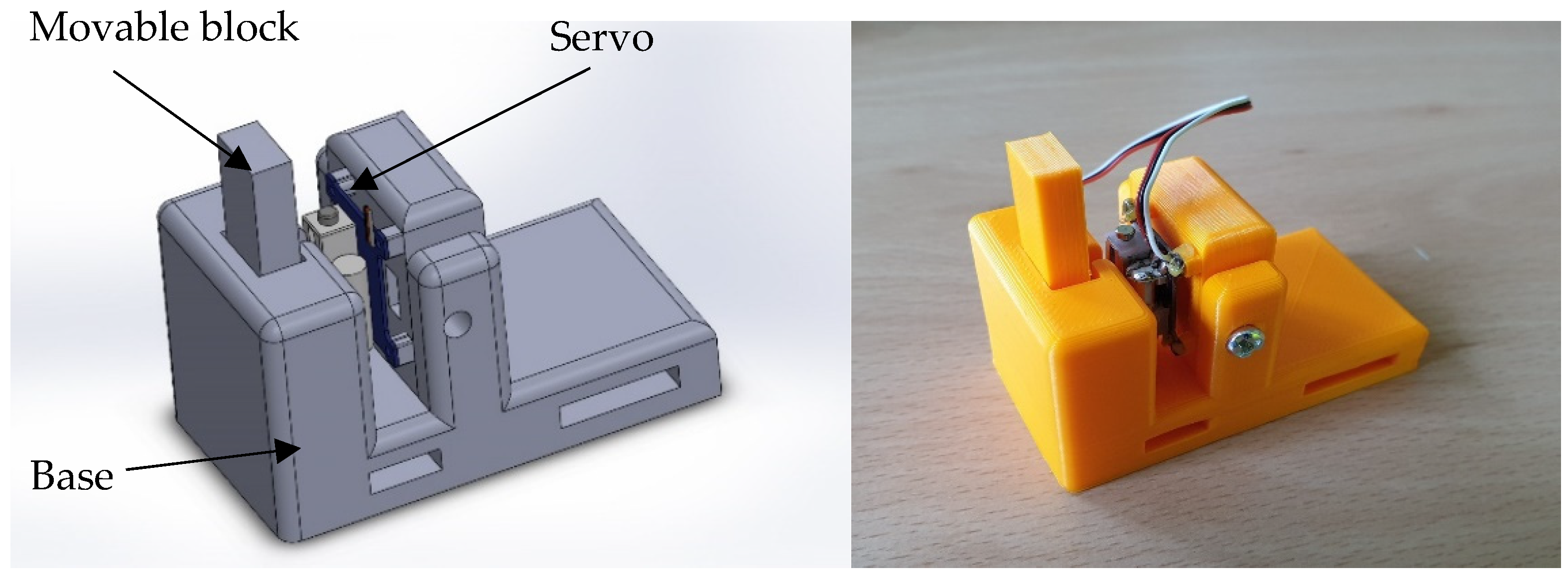

2.4. Force Feedback Device

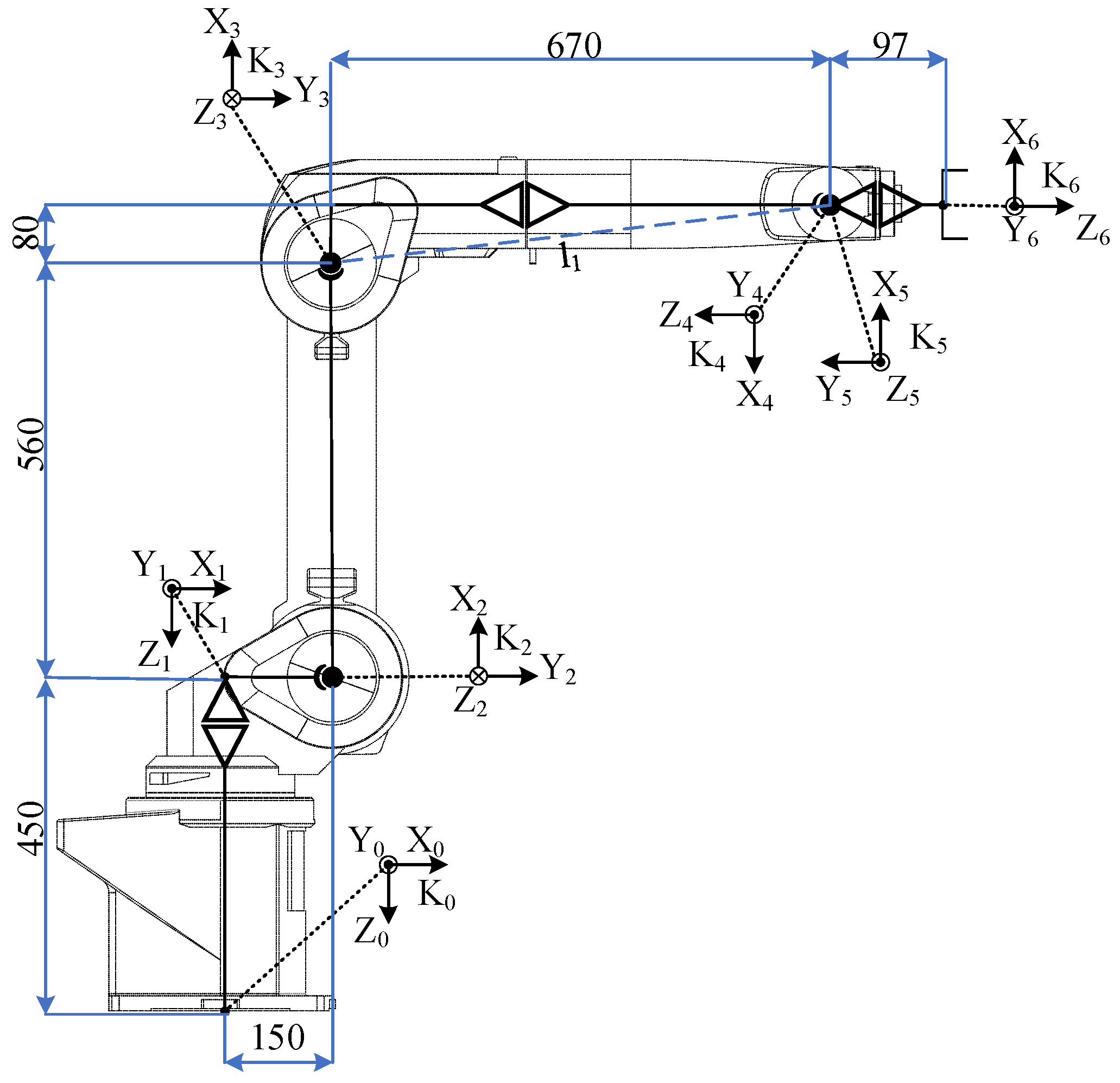

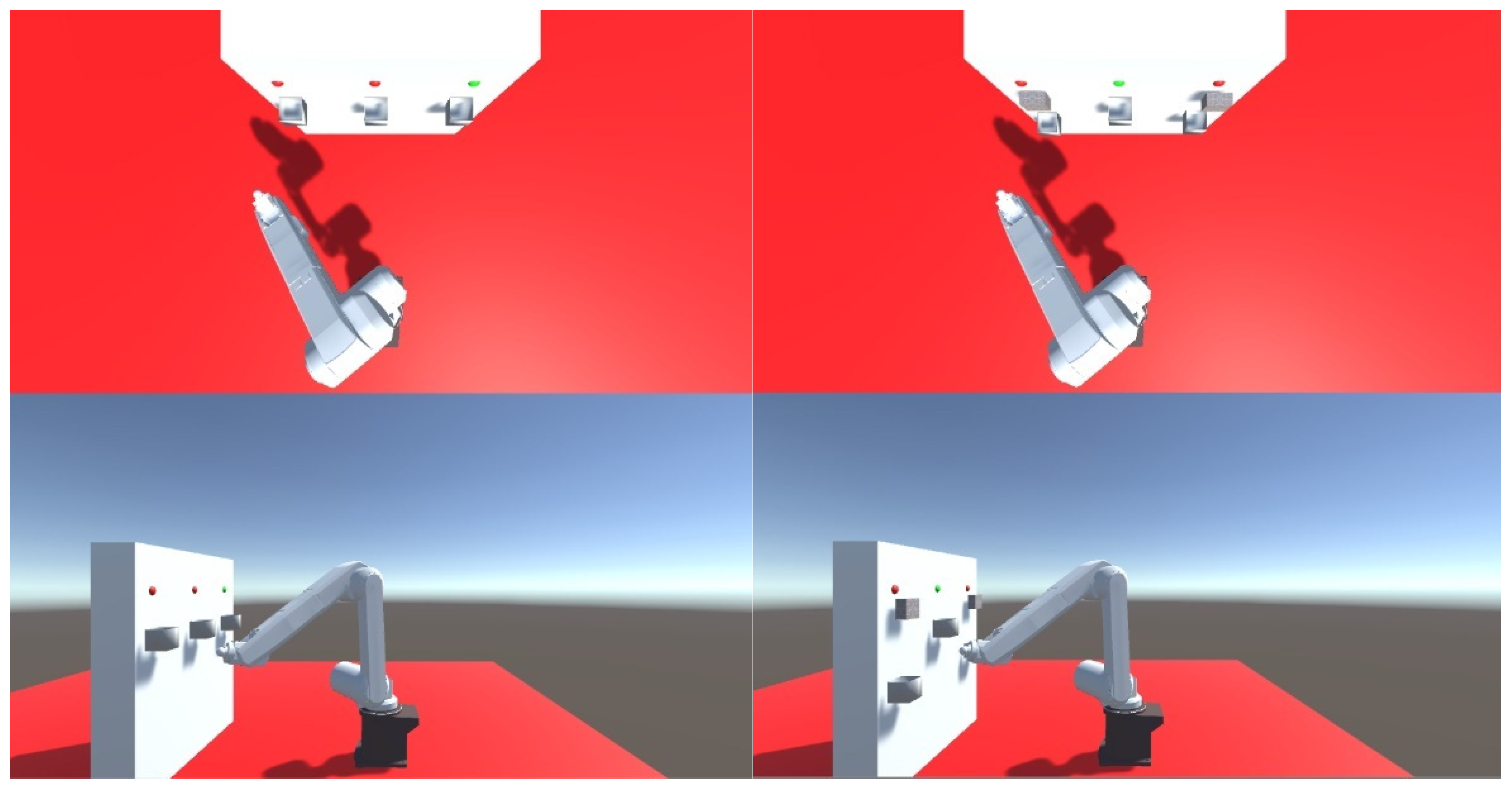

2.5. Mitsubishi RV-12SL Industrial Robot in the Simulation Environment

2.6. Changing the Robot’s Active Axis with Methods Utilising EEG and SSVEP Artefacts

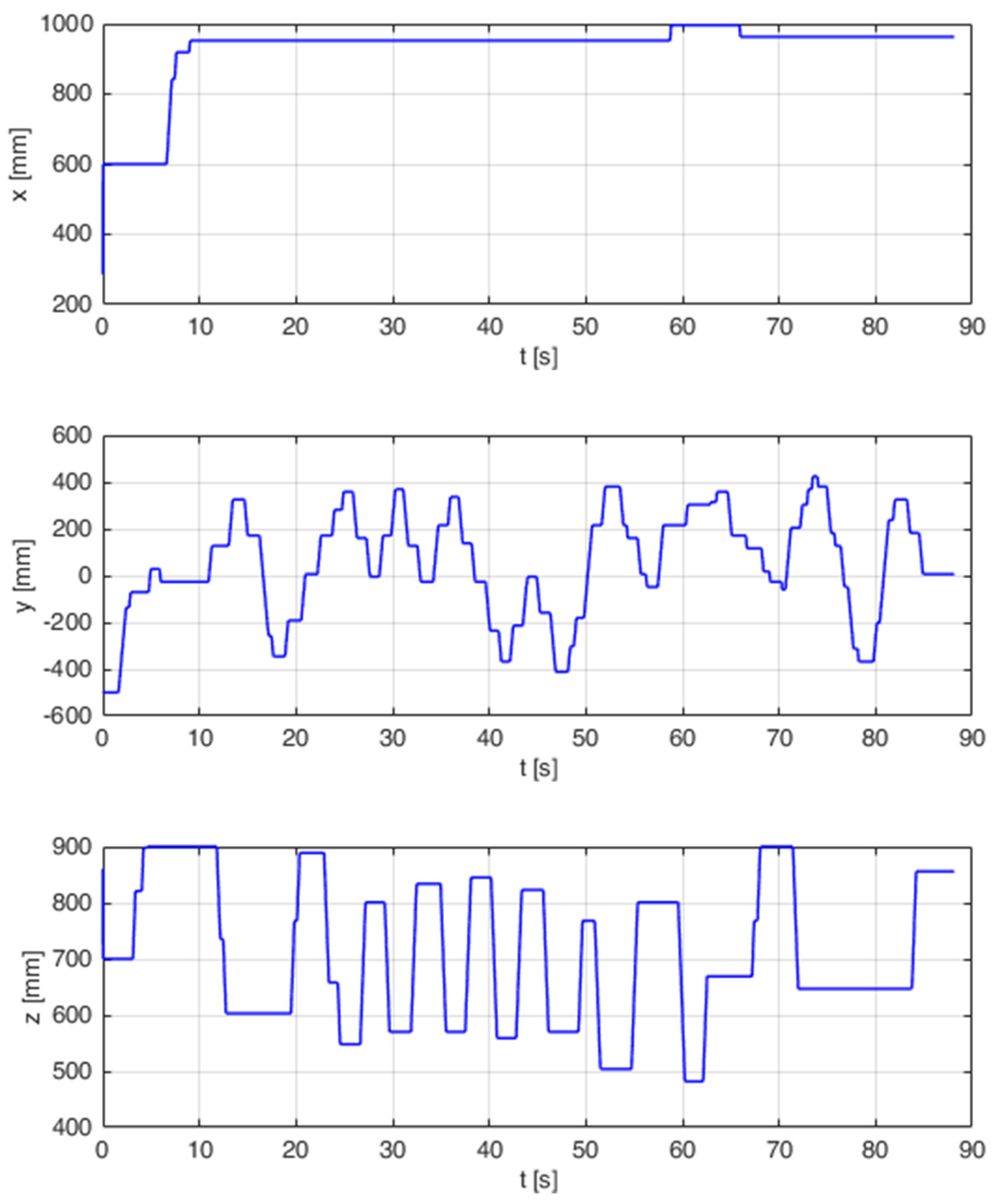

2.7. Comparison of the Accuracy of Robot Model Tip Positioning with and without Feedback

2.8. Sorting Items Using a Hybrid Brain-Computer Interface with Force Feedback Enabled

3. Results

3.1. Results of Tests Concerning the Accuracy of the Robot Model Tip Positioning with and without Feedback

3.2. Results of Sorting Items Using a Hybrid Brain-Computer Interface with Force Feedback Enabled

4. Discussion

4.1. Discussion on the Results of Tests on the Accuracy of the Robot Model Tip Positioning with and without Feedback

4.2. Discussion on the Results of Sorting Items Using a Hybrid Brain-Computer Interface with Force Feedback Enabled

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| i | a [mm] | α [rad] | d [mm] | θ [rad] |
|---|---|---|---|---|
| 0 | 150 | 0 | - | - |
| 1 | 0 | | 450 | |
| 2 | 560 | 0 | 0 | |
| 3 | 80 | | 0 | |
| 4 | 0 | | 670 | |
| 5 | 0 | | 0 | |
| 6 | - | - | 97 | |
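The columns of the table above (a, α, d, θ) are the usual Denavit-Hartenberg link parameters. As a minimal sketch of how one row of such a table turns into a homogeneous transform, the snippet below assumes the modified (Craig) convention; the source does not state which convention was used, so this choice, the function name `modified_dh_transform`, and the joint angle of π/2 are illustrative assumptions only. The example uses the one row whose a, α and d values are all present in the table (a = 560 mm, α = 0, d = 0).

```python
import math


def modified_dh_transform(a, alpha, d, theta):
    """Return the 4x4 homogeneous transform for one parameter row.

    Assumes the modified (Craig) Denavit-Hartenberg convention, which is
    an assumption and not confirmed by the source.
    a, d are in millimetres; alpha, theta are in radians.
    """
    ca, sa = math.cos(alpha), math.sin(alpha)
    ct, st = math.cos(theta), math.sin(theta)
    return [
        [ct, -st, 0.0, a],
        [st * ca, ct * ca, -sa, -sa * d],
        [st * sa, ct * sa, ca, ca * d],
        [0.0, 0.0, 0.0, 1.0],
    ]


# Row with a = 560 mm, alpha = 0, d = 0 taken from the table.
# theta is a joint variable; pi/2 is a hypothetical value for illustration.
T = modified_dh_transform(560.0, 0.0, 0.0, math.pi / 2)

for row in T:
    print([round(v, 3) for v in row])
```

With α = 0 and d = 0 the transform reduces to a 560 mm shift along the x axis combined with a rotation by θ about the z axis. Chaining the transforms of all rows would give the tip pose, but that requires the α and θ entries that are missing from the table.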

| | No Feedback | Feedback |
|---|---|---|
| Average time | 48.26 s | 39.63 s |
| Standard deviation of time | 10.12 s | 9.83 s |
| Minimum time | 30.60 s | 20.40 s |
| Maximum time | 64.40 s | 54.30 s |
| Average distance | 41.29 mm | 9.00 mm |
| Standard deviation of distance | 13.12 mm | 2.31 mm |
| Minimum distance | 10.40 mm | 4.48 mm |
| Maximum distance | 65.58 mm | 12.98 mm |
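To put the averages in the table above on a common scale, the relative change between the two conditions can be computed directly. The short sketch below uses only the average time and average distance from that table; the helper name `relative_reduction` is introduced here for illustration.

```python
def relative_reduction(baseline, value):
    """Fractional reduction of `value` relative to `baseline`."""
    return (baseline - value) / baseline


# Averages copied from the table: no feedback vs. feedback.
time_cut = relative_reduction(48.26, 39.63)  # average time in seconds
dist_cut = relative_reduction(41.29, 9.00)   # average distance in millimetres

print(f"time: {time_cut:.1%}, distance: {dist_cut:.1%}")
# prints "time: 17.9%, distance: 78.2%"
```

On these figures the average positioning time with feedback is roughly 18% shorter and the average distance roughly 78% smaller than without feedback.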

| | No Feedback | Feedback |
|---|---|---|
| Average time | 49.35 s | 38.36 s |
| Standard deviation of time | 14.03 s | 16.18 s |
| Minimum time | 25.26 s | 15.00 s |
| Maximum time | 74.72 s | 63.57 s |
| Average distance | 39.71 mm | 10.11 mm |
| Standard deviation of distance | 12.81 mm | 3.2 mm |
| Minimum distance | 9.99 mm | 3.01 mm |
| Maximum distance | 64.82 mm | 15.31 mm |

| | Model | Real Robot |
|---|---|---|
| Average time | 58.18 s | 56.87 s |
| Standard deviation of time | 8.75 s | 8.50 s |
| Minimum time | 40.40 s | 38.10 s |
| Maximum time | 75.40 s | 69.90 s |
| Incorrectly sorted | 25 (2.1%) | 31 (2.6%) |
| Missing boxes | 56 (4.7%) | 60 (5%) |
| Total wrong | 81 | 91 |
| Correctly sorted | 1119 (93.2%) | 1109 (92.4%) |
| Flawless trials | 16 (26.7%) | 13 (21.7%) |
| Attempts with a maximum of 1 failure | 33 (55%) | 30 (50%) |

| | Model | Real Robot |
|---|---|---|
| Average time | 99.76 s | 101.33 s |
| Standard deviation of time | 11.51 s | 11.27 s |
| Minimum time | 80.70 s | 70.90 s |
| Maximum time | 118.80 s | 129.20 s |
| Incorrectly sorted | 38 (3.2%) | 42 (3.5%) |
| Missing boxes | 99 (8.25%) | 102 (8.5%) |
| Total wrong | 137 | 144 |
| Correctly sorted | 1063 (88.6%) | 1056 (88%) |
| Flawless trials | 12 (20%) | 11 (18.3%) |
| Attempts with a maximum of 1 failure | 25 (41.7%) | 22 (36.7%) |
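The percentages in the table above can be reproduced from the raw counts. The sketch below assumes that every box falls into exactly one of the three outcome rows (incorrectly sorted, missing, correctly sorted), so that their sum is the total number of boxes handled; that total is inferred from the counts and is not stated in the table itself. The helper name `outcome_shares` is hypothetical.

```python
def outcome_shares(counts):
    """Return the total count and each outcome's share in percent."""
    total = sum(counts.values())
    return total, {name: 100.0 * n / total for name, n in counts.items()}


# Counts copied from the table above.
model = {"incorrect": 38, "missing": 99, "correct": 1063}
real_robot = {"incorrect": 42, "missing": 102, "correct": 1056}

total_model, shares_model = outcome_shares(model)
total_real, shares_real = outcome_shares(real_robot)

for label, total, shares in (
    ("Model", total_model, shares_model),
    ("Real robot", total_real, shares_real),
):
    line = ", ".join(f"{name} {pct:.2f}%" for name, pct in shares.items())
    print(f"{label}: {total} boxes, {line}")
```

Under this assumption both columns add up to 1200 boxes, and the computed shares agree with the tabulated percentages to within rounding.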

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kubacki, A. Use of Force Feedback Device in a Hybrid Brain-Computer Interface Based on SSVEP, EOG and Eye Tracking for Sorting Items. Sensors 2021, 21, 7244. https://doi.org/10.3390/s21217244