Abstract

Ischemic stroke is one of the leading causes of death among the aged population in the world. Experimental stroke models with rodents play a fundamental role in the investigation of the mechanism and impairment of cerebral ischemia. Owing to its speed and reliability, 2,3,5-triphenyltetrazolium chloride (TTC) staining of rat brains has been extensively adopted to visualize the infarction, which is subsequently photographed for further processing. Two important tasks are to segment the brain regions and to compute the midline that separates the brain into hemispheres. This paper investigates automatic brain extraction and hemisphere segmentation algorithms in camera-based TTC-stained rat images. For rat brain extraction, a saliency region detection scheme on a superpixel image is exploited to extract the brain regions from the raw complicated image. Subsequently, the initial brain slices are refined using a parametric deformable model associated with color image transformation. For rat hemisphere segmentation, open curve evolution guided by the gradient vector flow in a medial subimage is developed to compute the midline. A wide variety of TTC-stained rat brain images captured by a smartphone were produced and utilized to evaluate the proposed segmentation frameworks. Experimental results on the segmentation of rat brains and cerebral hemispheres indicated that the developed schemes achieved high accuracy with average Dice scores of 92.33% and 97.15%, respectively. The established segmentation algorithms are expected to facilitate experimental stroke studies with TTC-stained rat brain images.

1. Introduction

A stroke is a medical condition in which the blood supply to part of the brain is interrupted or diminished and this prevents brain tissue from receiving oxygen and nutrients. Particularly, ischemic stroke, which accounts for the majority of strokes, is one of the leading causes of death among the aged population worldwide. Cerebral ischemia can induce many injuries including energy failure, intracellular calcium overload, and cell death, which eventually result in the loss of neurological functions and permanent disabilities [1]. To understand the mechanism of cerebral ischemia, evaluate the effect of therapeutic interventions, and study the scope of behavioral manifestations, experimental ischemic stroke models play an essential role. Among the various models, the middle cerebral artery occlusion (MCAO) model in rodents has been widely employed. In this model, the middle cerebral artery is occluded to cause brain tissue ischemia, which results in a massive amount of cell deaths, called the infarct.

In addition to magnetic resonance imaging (MRI) [2,3], 2,3,5-triphenyltetrazolium chloride (TTC) staining has been extensively employed to visualize the infarction for its celerity, veracity and cost effectiveness [4]. The colorless TTC reacts with the living cells, which results in the red compound, so that the red region essentially unveils the healthy/normal tissue. In contrast, the whiteness in the ischemic tissue reflects the absence of living cells, which generally indicates the infarct region. Consequently, TTC staining is powerful for the macroscopic differentiation between ischemic and non-ischemic tissue [5]. Requiring manual intervention, the image acquisition of TTC staining can be classified into two categories: scanner-based and camera-based. Figure 1 illustrates some typical camera-based TTC-stained rat brain images, which are captured using a modern smartphone. To process the TTC-stained rat brain images associated with the MCAO model, the first step is to extract the rat brain slices from the originally captured image, which usually contains a scale and a label. To further analyze the extracted brain slice, it is required to find the midline that separates the rat brain into two hemispheres.

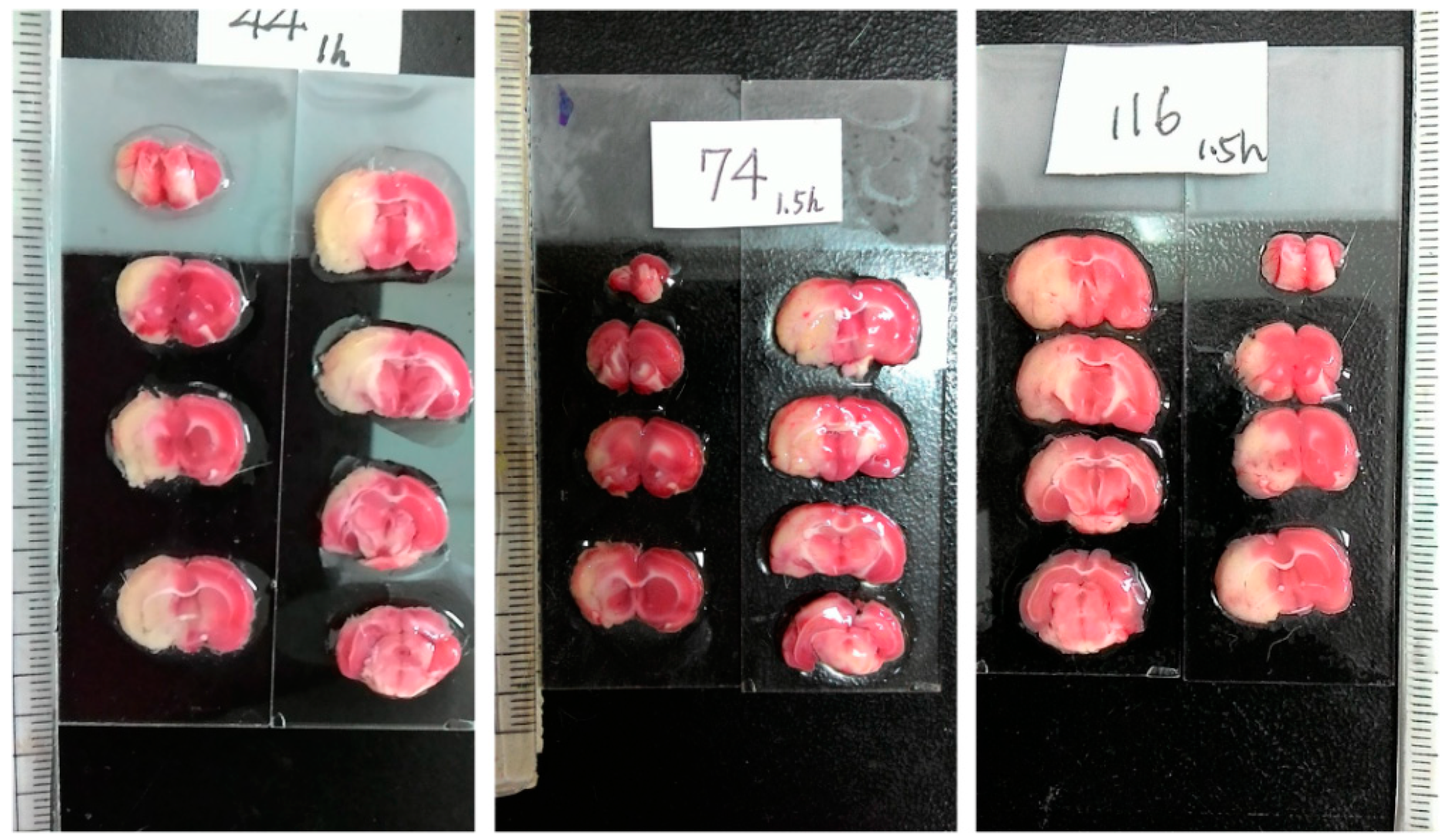

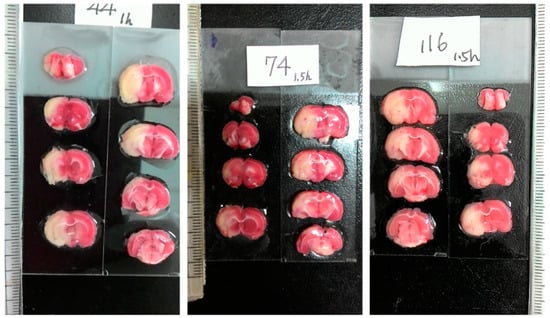

Figure 1.

Representative examples of three camera-based TTC-stained rat brain images (900 × 1600) after ischemic stroke captured by a smartphone. Each image represents an individual rat subject with the MCAO model. Each image includes eight different rat brain slices, where the massive white regions indicate infarction.

Although there are abundant techniques in the literature for brain segmentation in medical images, the majority are dedicated to human brain investigation in MRI. Existing human brain segmentation methods with MR images can be broadly classified into six categories: mathematical morphology-based [6], image intensity-based [7], deformable model-based [8], anatomy atlas-based [9], deep learning-based [10] and hybrid methods [11]. Alternatively, some approaches are devoted to rodent brain extraction in MRI. For example, an automatic method based on the pulse coupled neural network was proposed to crop MR images of rat brain volumes [12]. An automatic brain extraction scheme that requires a brain template mask was introduced to extract the brain tissue in rat MR images [13]. Unfortunately, a specific computer-aided algorithm for TTC-stained rat brain image processing has been lacking. Manual delineation [4,14] and simple thresholding [15] of the rat brain and hemisphere regions on numerous images have been traditionally utilized. For example, Goldlust et al. [16] described a pipeline using thresholding and edge detection to segment the rat brain whereas a commercial image processing program (PhotoFinish, ZSoft Software) was adopted to manually draw the midline to bisect the brain. The Environment for Visualizing Images (ENVI 4.5) software associated with an image analysis tool (ImageJ, https://imagej.nih.gov/ij/) (accessed on 19 October 2021) were employed in [17] to process rat brain images. A semi-automated analysis program was proposed to segment rodent brain sections with TTC staining [18]. While manual delineation is time consuming, the outcome by thresholding approaches is inaccurate and case-dependent, which requires heavier human intervention to complete the segmentation task. 
To lessen the laborious burden and obtain accurate brain segmentation, an automatic and reliable method for brain extraction and hemisphere segmentation in TTC-stained rat brain images is fundamental.

We have conducted a preliminary study of brain extraction and hemisphere segmentation in TTC-stained rat brain images based on simple saliency detection associated with edge detection and morphological operations [19]. From a different perspective, this paper attempts to develop fully automatic algorithms to address the problems of brain extraction and hemisphere segmentation starting from initially captured TTC-stained rat brain images as shown in Figure 1. The proposed framework consists of two consecutive stages that correspond to the two different tasks. In the first stage of brain extraction, a superpixel oversegmentation associated with salient region detection algorithm is proposed to efficiently segment the brain slices, followed by a parametric deformable model for accuracy improvement. In the second stage of hemisphere segmentation, the midline of the brain is obtained through brain edge detection and initial midline estimation followed by an advanced deformable model for midline refinement. In summary, there are five and three phases for the brain extraction and hemisphere segmentation tasks, respectively. In contrast to our prior investigation [19], considerably distinct approaches are developed except for the first phase of oversegmentation in the brain extraction mission. The major contributions of the current work are summarized as follows:

- Challenges to brain extraction and hemisphere segmentation in TTC-stained rat images captured by a smartphone are discussed.

- An automatic rat brain extraction algorithm in light of saliency region detection and active contour rectification is investigated.

- An automatic rat hemisphere segmentation scheme based on initial midline estimation refined by the gradient vector flow is introduced.

- Influences of light reflection and brain distortion on the segmentation accuracy are reduced due to the proposed frameworks.

- Extensive experiments with fair comparisons against competing methods are conducted to evaluate segmentation performance.

- A computer-aided tool is provided for closer monitoring of the rat brain region.

- Overall rat brain processing time is reduced in contrast to manual delineation.

2. Brain Extraction

2.1. Challenges

As illustrated in Figure 1, the extraction of the individual rat brain slices from the originally captured TTC-stained image by a smartphone is challenging according to the following observations:

- In addition to the stained rat brain slices, there are a scale and a label indicating the status of the subject being experimented, both of which need to be eliminated.

- The shape of the brains is irregular with broken and ambiguous boundaries.

- The colors of the brain slices range from white, pink, to cardinal with a nonuniform distribution.

- There are some bright stains on the brain regions due to the reflection of the moisture in the organ.

- The background is not clean and simple with a varying intensity distribution and a complicated pattern of light reflection.

To address these challenges, an efficient rat brain extraction algorithm in TTC-stained images is proposed, which consists of superpixel oversegmentation, salient feature computation, saliency trimap construction, salient region extraction, and final brain segmentation, as described in the following.

2.2. Superpixel Oversegmentation

A superpixel is a perceptually meaningful region that comprises several pixels with prescribed conditions, which can be employed to replace the strict structure of the pixel grid. Features acquired from superpixels have been shown to be effective and efficient for salient object detection [20,21]. Salient region detection has been successfully applied to many image-processing applications including segmentation [22]. As such, our approach first segments the TTC-stained rat brain image so that the segmented elements exhibit similar color characteristics with comparable dimensions and salient boundaries. To achieve this, we perform oversegmentation on the TTC-stained image to establish the set of superpixels $\{R_i\}_{i=1}^{N}$, where $R_i$ represents the $i$th superpixel and $N$ is the total number of superpixels being constructed. Considering its high efficiency and low complexity, we utilize the simple linear iterative clustering (SLIC) method [23], which employs a k-means clustering technique to produce superpixels. The number of superpixels $N$ is set empirically to facilitate the subsequent process.

2.3. Salient Feature Computation

To retrieve each individual brain slice from the initial oversegmentation map, we compute a series of salient features for each superpixel $R_i$. Since the human visual system is highly sensitive to color contrast, a histogram-based contrast scheme is introduced to define saliency values based on color statistics. Let $c_i$ denote the color of the $i$th superpixel $R_i$; the global color saliency is defined in light of its color contrast to all other superpixels as

$$S_g(R_i) = \sum_{j \neq i} D(c_i, c_j) \tag{1}$$

where $S_g(R_i)$ represents the global color saliency of $R_i$ and $D(c_i, c_j)$ indicates the color distance metric between $c_i$ and $c_j$, which correspond to superpixels $R_i$ and $R_j$, respectively. The metric is computed according to the Euclidean distance between the $i$th and the $j$th superpixels in both the RGB and CIELab color spaces. Specifically, there are six dimensions in the color distance computation to accommodate sharp color variation in the TTC-stained brain image.
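The global color saliency described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each superpixel is summarized by one six-dimensional mean color vector (R, G, B, L, a, b) computed beforehand, no area weighting is applied, and the function names `color_distance` and `global_color_saliency` are hypothetical.

```python
import math


def color_distance(c_i, c_j):
    """Euclidean distance between two 6-D color vectors (R, G, B, L, a, b)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c_i, c_j)))


def global_color_saliency(colors):
    """Sum of color distances from each superpixel to all other superpixels."""
    n = len(colors)
    return [
        sum(color_distance(colors[i], colors[j]) for j in range(n) if j != i)
        for i in range(n)
    ]
```

A superpixel whose color differs from most others, such as a stained brain region on a pale background, receives a larger value than superpixels that share a common color.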

In addition to the global color saliency, a local color saliency feature is defined as

$$S_l(R_i) = \sum_{j \neq i} w_{ij}\, D(c_i, c_j) \tag{2}$$

where $S_l(R_i)$ represents the local color saliency of superpixel $R_i$, $D(c_i, c_j)$ is the color distance between superpixels $R_i$ and $R_j$, and $w_{ij}$ denotes the local proximity weight with

$$w_{ij} = \frac{1}{Z_i} \exp\left(-\frac{\|p_i - p_j\|^2}{2\sigma_p^2}\right) \tag{3}$$

where $Z_i$ is a normalization term, $\sigma_p$ is the standard deviation, and $p_i$ and $p_j$ are the coordinates of superpixels $R_i$ and $R_j$, respectively. For long-distance pairs, the value of $w_{ij}$ is small, so the local color saliency feature behaves similarly to a center-surround contrast. If $w_{ij}$ is constant, $S_l$ is analogous to the local contrast in [24]. In contrast to the global color saliency, the local color saliency introduces the proximity weight to reinforce the relevance of neighboring superpixels. This helps aggregate adjacent superpixels into a large component in the brain region while reducing the light reflection effect.
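A minimal sketch of the proximity-weighted local saliency follows. It assumes a Gaussian weight on the distance between superpixel centroids, with the normalization term chosen so that the weights of each superpixel sum to one; the names `proximity_weights` and `local_color_saliency` and the value of `sigma` are illustrative, not taken from the paper.

```python
import math


def proximity_weights(positions, i, sigma):
    """Gaussian weights from superpixel i to all others, normalized to sum to 1."""
    raw = [
        0.0 if j == i
        else math.exp(-math.dist(positions[i], p) ** 2 / (2.0 * sigma ** 2))
        for j, p in enumerate(positions)
    ]
    z = sum(raw)  # assumed normalization term
    return [r / z for r in raw] if z > 0 else raw


def local_color_saliency(colors, positions, sigma):
    """Color contrast of each superpixel, weighted toward its spatial neighbors."""
    n = len(colors)
    saliency = []
    for i in range(n):
        w = proximity_weights(positions, i, sigma)
        saliency.append(
            sum(w[j] * math.dist(colors[i], colors[j]) for j in range(n))
        )
    return saliency
```

With a small `sigma`, distant superpixels contribute almost nothing, so an isolated reflection far from the brain has little influence on the saliency of brain superpixels.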

As the histogram is one of the most powerful saliency measures, we integrate color histogram characteristics into a histogram-based contrast feature. This color histogram feature is defined using the chi-square distance between different histogram pairs using quantized colors, i.e.,

$$S_h(R_i) = \sum_{j \neq i} \sum_{k=1}^{B} \frac{(h_{ik} - h_{jk})^2}{h_{ik} + h_{jk}} \tag{4}$$

where $S_h(R_i)$ represents the color histogram contrast of superpixel $R_i$, $B$ is the total number of histogram bins, and $h_{ik}$ and $h_{jk}$ indicate the $k$th histogram bins of superpixels $R_i$ and $R_j$, respectively.
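The chi-square histogram contrast can be illustrated as below. The sketch assumes each superpixel already has a quantized color histogram of equal length, skips bins that are empty in both histograms to avoid division by zero, and uses no scaling factor; `chi_square_distance` and `histogram_contrast` are hypothetical names.

```python
def chi_square_distance(h_i, h_j):
    """Chi-square distance between two histograms with the same number of bins."""
    total = 0.0
    for a, b in zip(h_i, h_j):
        if a + b > 0:  # bins empty in both histograms contribute nothing
            total += (a - b) ** 2 / (a + b)
    return total


def histogram_contrast(histograms):
    """Sum of chi-square distances from each superpixel to all other superpixels."""
    n = len(histograms)
    return [
        sum(chi_square_distance(histograms[i], histograms[j])
            for j in range(n) if j != i)
        for i in range(n)
    ]
```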

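Based on the observation of the TTC-stained rat brain images, the color of the background is generally more diversified whereas the foreground color is more concentrated, which implies saliency. To capture how widely the color of each superpixel is spread across the image, a feature pertinent to the spatial coordinate distribution of superpixels is defined as

$$S_d(R_i) = \sum_{j} a_{ij}\, \|p_j - \mu_i\|^2 \tag{5}$$

where $S_d(R_i)$ represents the spatial coordinate distribution of superpixel $R_i$, $p_j$ is the coordinate of superpixel $R_j$, and $\mu_i$ symbolizes the weighted mean position of color $c_i$, which is computed using

$$\mu_i = \sum_{j} a_{ij}\, p_j \tag{6}$$

and $a_{ij}$ indicates the color affinity weight between the colors of superpixels $R_i$ and $R_j$, which is similar to the form of the local proximity weight with

$$a_{ij} = \frac{1}{Z_i'} \exp\left(-\frac{\|c_i - c_j\|^2}{2\sigma_c^2}\right) \tag{7}$$

where $Z_i'$ is a normalization term and $\sigma_c$ is the standard deviation for controlling the shape of the function.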
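The spatial distribution feature can be sketched as a color-weighted spatial variance. The code below assumes Gaussian color affinity weights normalized to sum to one for each superpixel and two-dimensional centroid coordinates; the function names and `sigma_c` are illustrative assumptions.

```python
import math


def color_affinity(colors, i, sigma_c):
    """Gaussian color affinity of superpixel i to every superpixel, summing to 1."""
    raw = [
        math.exp(-math.dist(colors[i], c) ** 2 / (2.0 * sigma_c ** 2))
        for c in colors
    ]
    z = sum(raw)  # at least 1 because the j == i term equals 1
    return [r / z for r in raw]


def spatial_distribution(colors, positions, sigma_c):
    """Spatial variance of each superpixel's color across the image."""
    n = len(colors)
    result = []
    for i in range(n):
        a = color_affinity(colors, i, sigma_c)
        # Weighted mean position of the color of superpixel i.
        mu = tuple(sum(a[j] * positions[j][d] for j in range(n)) for d in range(2))
        result.append(sum(a[j] * math.dist(positions[j], mu) ** 2 for j in range(n)))
    return result
```

A color that appears in one compact place yields a small value, while a color scattered over the whole image yields a large one.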

2.4. Saliency Trimap Construction

Once the saliency features for all superpixels are computed, a classification algorithm is employed to inspect whether each region is salient or not. With respect to effectiveness and generalization, the random forest classifier [25] is exploited. A random forest or random decision forest is an ensemble learning scheme mainly for classification and regression. By constructing multiple decision trees at training time, it operates in such a way that each individual tree generates a class indicator and the class with the most votes becomes the model’s prediction. The fundamental concept behind the random forest is to integrate the bootstrap aggregating idea with random feature selection to maximize the prediction accuracy. Specifically, the code provided in [26] is adopted for our random forest classification implementation, which is trained using the image dataset with labeled images as described in [27]. A three-class classification design is utilized because the trimap strategy has been commonly used in matting [28] and saliency [29] methods. In our approach, a superpixel is judged by two different thresholds to generate the trimap. If the prediction value of a superpixel is larger than $T_f$, where $T_f$ denotes the threshold for the foreground, it is assigned to the foreground class. Alternatively, if the prediction outcome is smaller than $T_b$, where $T_b$ signifies the threshold for the background, the superpixel belongs to the background class. However, if the prediction score lies between $T_b$ and $T_f$, it is regarded as an unidentified candidate. Compared with a strict binary map, the trimap with an additional ambiguous class provides more reliable and flexible segmentation of salient regions in the TTC-stained rat brain images.
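The two-threshold rule that turns classifier scores into a trimap can be sketched as follows. The label constants, the function name `build_trimap`, and the threshold values used in the usage note are illustrative assumptions; the paper's actual thresholds are not reproduced here.

```python
FOREGROUND, UNKNOWN, BACKGROUND = 2, 1, 0


def build_trimap(scores, t_fg, t_bg):
    """Label each superpixel score as foreground, background, or unknown."""
    if t_bg > t_fg:
        raise ValueError("background threshold must not exceed foreground threshold")
    labels = []
    for s in scores:
        if s > t_fg:
            labels.append(FOREGROUND)
        elif s < t_bg:
            labels.append(BACKGROUND)
        else:
            labels.append(UNKNOWN)  # left for the later refinement stage
    return labels
```

For example, with hypothetical thresholds of 0.7 and 0.3, `build_trimap([0.9, 0.5, 0.1], 0.7, 0.3)` labels the three superpixels as foreground, unknown, and background.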

2.5. Salient Region Extraction

The construction of the trimap is for initial saliency estimation, which requires further processing to refine the three-class classification into rigid foreground and background segmentation. This is achieved by the use of the SaliencyCut algorithm [30], which takes the computed saliency trimap to facilitate automatic salient region segmentation. Founded on the GrabCut [31], the SaliencyCut accomplishes automation by two enhancements: iterative refining and adaptive fitting. Particularly, the SaliencyCut algorithm iteratively refines the initial salient regions to handle noisy initialization given a high recall of potential foreground regions and enables the iterative optimization process to boost the precision. Based on the observation that regions closer to an initial salient object are presumably part of that object, the SaliencyCut adaptively adjusts the initial condition to fit with newly segmented salient regions. As such, the new initialization scheme in the SaliencyCut allows the GrabCut to include adjacent salient superpixels and exclude non-salient superpixels in the trimap of the TTC-stained rat brain image. After each GrabCut iteration, the algorithm incorporates the restraints provided by the updated trimap into consideration to output the final segmentation.

2.6. Final Brain Segmentation

After obtaining the TTC-stained image segmentation outcome, the rat brain regions indicating saliency often have ragged boundaries. This is primarily due to inevitable bright stains inside the brain regions and around the brain surfaces, which are caused by light reflection. These bright stains exhibit quite a different color tone from the brain so that they are excluded from the salient regions, i.e., the segmented brain slices. In consequence, the interior bright stains result in holes in the brain regions, which are resolved with a morphological hole-filling process

$$X_k = (X_{k-1} \oplus B) \cap M^c, \quad k = 1, 2, 3, \ldots \tag{8}$$

where $\oplus$ symbolizes the dilation operator, $B$ is a symmetric cross-shaped structuring element, and $M^c$ is the complement of $M$, which is the binary mask produced from the salient region extraction process. This procedure terminates at iteration step $k$ when $X_k = X_{k-1}$, where $X_k$ contains all the filled holes in $M$. The final segmentation mask without holes is attained by superimposing $X_k$ on $M$:

$$M_f = X_k \cup M \tag{9}$$

where $\cup$ denotes the union operator and $M_f$ is the improved segmentation mask without interior holes.
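The iterative conditional dilation behind morphological hole filling can be sketched in plain Python. Note that this is a border-seeded variant, chosen here so that no seed point inside each hole is required: the background connected to the image border is grown by repeated dilation with a cross-shaped element restricted to the complement of the mask, and whatever part of the complement is never reached is treated as a hole. The function names are hypothetical.

```python
def dilate_cross(pixels, height, width):
    """Dilate a set of (row, col) pixels with a 3x3 cross structuring element."""
    out = set(pixels)
    for r, c in pixels:
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < height and 0 <= cc < width:
                out.add((rr, cc))
    return out


def fill_holes(mask):
    """Return a copy of a binary mask (list of rows) with interior holes filled."""
    height, width = len(mask), len(mask[0])
    complement = {
        (r, c) for r in range(height) for c in range(width) if not mask[r][c]
    }
    # Seed with background pixels that touch the image border.
    x = {
        (r, c) for (r, c) in complement
        if r in (0, height - 1) or c in (0, width - 1)
    }
    while True:
        x_next = dilate_cross(x, height, width) & complement
        if x_next == x:  # no growth: the outer background is fully labeled
            break
        x = x_next
    holes = complement - x
    return [
        [1 if mask[r][c] or (r, c) in holes else 0 for c in range(width)]
        for r in range(height)
    ]
```

Because the cross element only connects 4-neighbors, a hole that touches the background diagonally is still treated as enclosed.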

To tackle the ragged boundary problem, a parametric deformable model is suggested. Thanks to its simplicity and popularity, the parametric active contour (also known as snakes) [32], which has been broadly applied in image segmentation and object tracking, is exploited. A snake is defined as a set of ordered points or snaxels $\mathbf{v}(s) = (x(s), y(s))$, $s \in [0, 1]$, which are usually generated counter-clockwise. A parametric active contour advances through the spatial domain of an image to minimize the energy functional

$$E = \int_0^1 \left[ E_{int}(\mathbf{v}(s)) + E_{ext}(\mathbf{v}(s)) \right] ds \tag{10}$$

where $E_{int}$ and $E_{ext}$ denote the internal energy and external energy, respectively. The internal energy in Equation (10) is defined as

$$E_{int}(\mathbf{v}(s)) = \frac{1}{2} \left( \alpha(s)\, |\mathbf{v}'(s)|^2 + \beta(s)\, |\mathbf{v}''(s)|^2 \right) \tag{11}$$

where $\alpha(s)$ and $\beta(s)$ are weighting functions that control the tension and rigidity of the contour, respectively. The first-order derivative with respect to $s$ adjusts the distance between adjacent snaxels. During energy minimization, the second-order term enables the contour to resist bending.

Alternatively, the external energy in Equation (10) is designed to let the contour interact with the image. To handle the blurred boundaries of the rat brain in the TTC-stained image, the segmented color image after the hole filling process is transformed using

where is the transformed gray scale image; is a threshold; and , and are the red, green and blue channels of respectively. Since the rat brain is primarily red, for the blurred brain boundaries the absolute difference between the red and blue channels should be relatively small. As such, the intensity in the brain regions is boosted whereas the intensity in the blurred boundaries is diminished. Subsequently, the external energy is derived from using

$$E_{ext}(\mathbf{v}(s)) = -\gamma \left| \nabla \left[ G_\sigma(x, y) * I_T(x, y) \right] \right|^2 \tag{13}$$

where $I_T$ is the transformed gray scale image, $\gamma$ is the weight, $\nabla$ symbolizes the gradient operator, $*$ denotes the convolution operation and $G_\sigma$ represents a 2-D Gaussian function with a standard deviation $\sigma$. The boundary of the segmented brain mask after hole filling is utilized as the initial contour of the proposed deformable model. The contour evolves towards the brain surface based on Equation (10) until convergence is achieved, at which point the entire rat brain extraction procedure is completed. Each extracted brain slice is saved as an individual image with a fixed dimension to facilitate subsequent processing.

3. Hemisphere Segmentation

3.1. Challenges

After extracting the rat brain slices, the next stage is performing hemisphere segmentation, which is also challenging as detailed in the following:

- Due to the manual placement of the rat brain slices, the midline is arbitrarily oriented rather than vertical.

- The rat brain can be seriously distorted due to the infarction of the induced stroke so that the midline is convoluted.

- The midline exhibits a similar color tone to its surrounding tissues and is visible only in short segments to the naked eye.

- There are only a few anatomically salient structures around the midline that can provide meaningful information for its identification.

We tackle the rat hemisphere segmentation problems with the described difficulties using a series of image processing steps containing medial subimage extraction, initial midline detection, and final midline estimation.

3.2. Medial Subimage Extraction

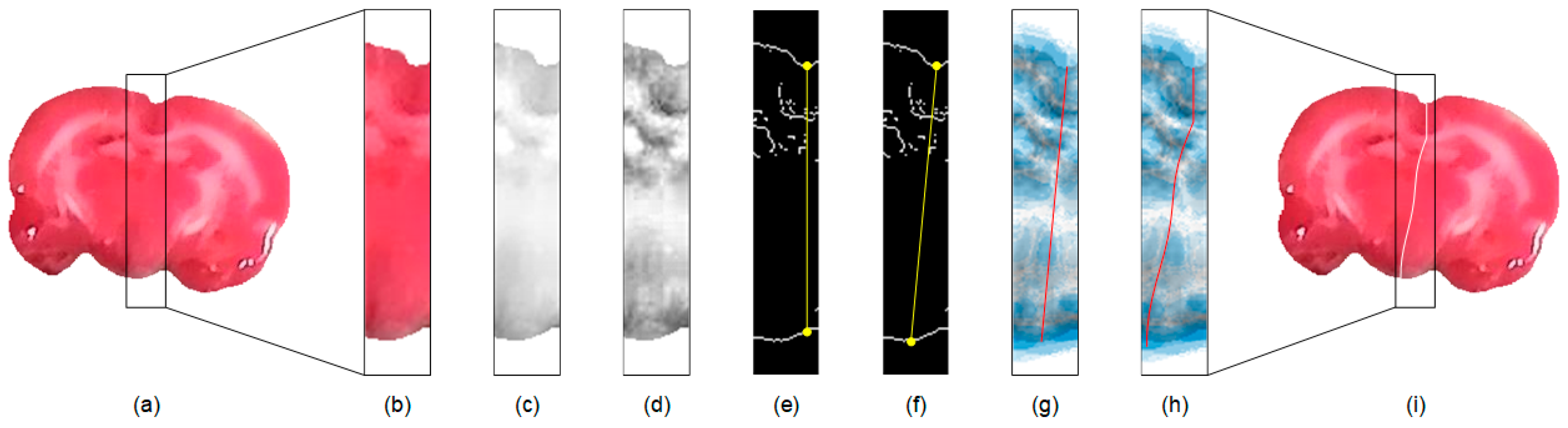

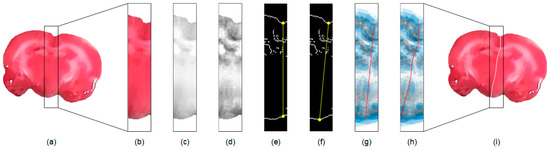

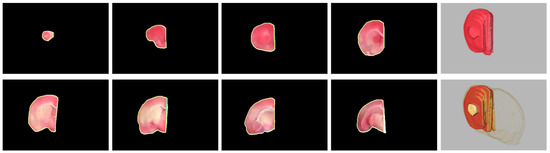

Since the midline runs across the medial part of the brain, an efficient approach for hemisphere segmentation is to restrict the processing region to the proximity of the midline. To achieve this, in each extracted rat brain image, e.g., Figure 2a, we first compute the center of mass using the corresponding binary mask. A rectangular subimage of width $d$ that contains the medial brain is then computed based on the coordinates of the center of mass, as illustrated in Figure 2b. Herein, an appropriate width $d$ is determined empirically. In our experience, this setting suffices for the inclusion of most midlines in the extracted subimage. If necessary, the value of $d$ can be increased to include greatly convoluted midlines. To facilitate the subsequent processing, only the red channel of the TTC-stained subimage is utilized, as shown in Figure 2c, which is denoted as $I_r$. This gray scale image is further enhanced using the adaptive histogram equalization method [33] to increase the contrast as shown in Figure 2d and denoted as $I_e$.
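The medial subimage extraction can be sketched as a center-of-mass computation followed by a column crop. The sketch assumes the subimage spans the full image height and is centered horizontally on the center of mass; the function names and the `width` argument are hypothetical, and the paper's empirical width is not reproduced.

```python
import math


def center_of_mass(mask):
    """Return the (row, col) centroid of the nonzero pixels of a binary mask."""
    count = row_sum = col_sum = 0
    for r, row in enumerate(mask):
        for c, value in enumerate(row):
            if value:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        raise ValueError("mask is empty")
    return row_sum / count, col_sum / count


def medial_column_range(mask, width):
    """Column interval [left, right) of a strip centered on the center of mass."""
    _, center_col = center_of_mass(mask)
    n_cols = len(mask[0])
    left = max(0, math.floor(center_col - width / 2 + 0.5))
    right = min(n_cols, left + width)
    return left, right


def crop_columns(image, left, right):
    """Keep only columns left..right-1 of an image stored as a list of rows."""
    return [row[left:right] for row in image]
```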

Figure 2.

Illustration of the proposed rat hemisphere segmentation algorithm. (a) Extracted rat brain slice. (b) Segmented medial subimage from (a). (c) Red channel image of (b). (d) Enhanced image of (c). (e) Edge map of (d). (f) Initial straight midline connecting the brain boundaries. (g) Initial midline superimposed on the GVF field. (h) Estimated midline after curve evolution. (i) Final midline superimposed on the input image for separation.

3.3. Initial Midline Detection

Subsequently, the edge map of the enhanced subimage $I_e$ is computed using the Sobel operator and denoted as $I_s$. The edge map presents apparent brain borders, which are used for the initial midline estimation. The computation starts by searching for the superior groove and inferior concave points in the edge map of the subimage as illustrated in Figure 2e. In our approach, the superior groove point is detected by searching for the lowest position from the highest location along the superior brain boundary towards the medial axis. A vertical line is drawn from the identified groove point to intersect the inferior brain boundary. The inferior concave point is then detected by exploring the deepest position with the longest length around the intersection to accommodate distorted brain shapes. As depicted in Figure 2f, an initial straight midline is generated from the superior groove point to the inferior concave point for further evolution.

3.4. Final Midline Estimation

Because the cerebral hemispheres are rarely symmetric, especially in ischemic rat brains, the preceding straight midline usually does not provide an accurate estimate of the midsurface that separates the rat brain. A parametric deformable model derived from snakes [32], called the gradient vector flow (GVF) [34], is exploited to address this issue. The GVF improves the traditional snake model by introducing the GVF field, which is a vector field $\mathbf{V}(x, y) = [u(x, y), v(x, y)]$ that minimizes the energy functional

$$\varepsilon = \iint \mu \left( u_x^2 + u_y^2 + v_x^2 + v_y^2 \right) + |\nabla f|^2\, |\mathbf{V} - \nabla f|^2 \, dx\, dy \tag{14}$$

where $\mu$ is a regularization parameter controlling the weight between the first term and the second term in the integrand; $f(x, y)$ is an edge map produced from the input subimage; and $u$ and $v$ are the vector field components along the $x$- and $y$-axes, respectively. An open GVF contour is initialized using the straight midline as delineated in Figure 2g. To guide the contour towards the rat brain midsurface, the GVF field is computed by solving the following Euler equations

$$\mu \nabla^2 u - (u - f_x)(f_x^2 + f_y^2) = 0, \qquad \mu \nabla^2 v - (v - f_y)(f_x^2 + f_y^2) = 0 \tag{15}$$

where $\nabla^2$ indicates the Laplacian operator and $f_x$ and $f_y$ are the partial derivatives of $f$. The GVF field is interpolated from the image edge map and reflects a kind of competition among the boundary vectors, which helps restrict the curve evolution inside the subimage. When the stopping criterion is reached, the contour lies in the proximity of the midsurface as shown in Figure 2h. A morphological thinning operation is applied to the GVF curve to produce a one-pixel-wide contour to reinforce segmentation accuracy, which is superimposed on the input image to separate the rat brain as illustrated in Figure 2i. The abovementioned procedures are independently applied to each extracted rat brain TTC-stained image.
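A GVF field is commonly obtained by treating the Euler equations as the steady state of a diffusion process and iterating an explicit update. The sketch below follows that standard scheme in plain Python. The edge map is assumed to be normalized to [0, 1], and the regularization value, the iteration count, the replicated-border handling, and all function names are assumptions made for illustration rather than the settings used in the paper.

```python
def gradient(f):
    """Central-difference gradient (fx, fy) with replicated borders."""
    h, w = len(f), len(f[0])
    fx = [[(f[r][min(c + 1, w - 1)] - f[r][max(c - 1, 0)]) / 2.0
           for c in range(w)] for r in range(h)]
    fy = [[(f[min(r + 1, h - 1)][c] - f[max(r - 1, 0)][c]) / 2.0
           for c in range(w)] for r in range(h)]
    return fx, fy


def laplacian(a):
    """Five-point Laplacian with replicated borders."""
    h, w = len(a), len(a[0])
    return [[a[max(r - 1, 0)][c] + a[min(r + 1, h - 1)][c]
             + a[r][max(c - 1, 0)] + a[r][min(c + 1, w - 1)] - 4.0 * a[r][c]
             for c in range(w)] for r in range(h)]


def gvf(edge_map, mu=0.15, iterations=50):
    """Iteratively diffuse the edge-map gradient into a GVF field (u, v)."""
    fx, fy = gradient(edge_map)
    h, w = len(edge_map), len(edge_map[0])
    mag2 = [[fx[r][c] ** 2 + fy[r][c] ** 2 for c in range(w)] for r in range(h)]
    u = [row[:] for row in fx]
    v = [row[:] for row in fy]
    for _ in range(iterations):
        lap_u, lap_v = laplacian(u), laplacian(v)
        # Unit time step: smooth the field, but stay close to the gradient
        # wherever the gradient magnitude is large.
        u = [[u[r][c] + mu * lap_u[r][c] - (u[r][c] - fx[r][c]) * mag2[r][c]
              for c in range(w)] for r in range(h)]
        v = [[v[r][c] + mu * lap_v[r][c] - (v[r][c] - fy[r][c]) * mag2[r][c]
              for c in range(w)] for r in range(h)]
    return u, v
```

The explicit update is only stable for small `mu` with a unit time step, which is why a conservative value is used here. Away from edges the field is dominated by diffusion, so vectors on either side of an edge point toward it, which is what pulls an initial straight contour toward a curved boundary.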

4. Results and Discussion

4.1. Implementation and Image Acquisition

The proposed brain extraction and hemisphere segmentation algorithms in rat TTC-stained images were implemented and programmed in MATLAB R2019a (The MathWorks Inc. Natick, MA, USA). All experiments were executed on an Intel® Core (TM) i5-3210M CPU @ 2.50GHz with 8 GB RAM running 64-bit Windows 10. The deformable model parameters were set as follows: , , and . This study employed an ischemia-reperfusion model based on MCAO on the right side with a silicon-coated nylon filament. Different ischemic durations were designed to develop a wide range of infarcts. Male Sprague-Dawley rats with ages of 7–9 weeks old and body weights of 180–340 g were adopted as experimental specimens. There were 40 rats sacrificed for in vitro TTC staining to evaluate the proposed brain extraction and hemisphere segmentation algorithms. Each stained rat brain was cut into eight slices along the coronal direction with each slice 2 mm in thickness. The experiments were carried out in accordance with the principles of the Basel Declaration. All eight stained slices were coded and photographed together using a modern smartphone (Zenfone Z00D, ASUSTeK Computer Inc., Taipei, Taiwan) located at a fixed position for the experiments as illustrated in Figure 1.

4.2. Evaluation Metrics

We computed different similarity metrics between the segmentation and gold standard masks to evaluate the performance of the proposed brain extraction and hemisphere segmentation frameworks. The gold standard outlines of the brains and hemispheres were delineated by an experienced neurologist in our team while referring to the rat brain atlas. Particularly, the conformity metric was adopted to evaluate the overall accuracy by measuring unity minus the ratio of the number of mis-segmented pixels to the number of correctly segmented pixels using [35]

$$K_{cfm} = \left( 1 - \frac{FP + FN}{TP} \right) \times 100\% \tag{16}$$

where $FP$ indicates false positives, $FN$ indicates false negatives, and $TP$ indicates true positives. Two other global similarity metrics, Jaccard [36] and Dice [37], were also utilized to understand the segmentation performance with

$$K_{jac} = \frac{TP}{TP + FP + FN} \times 100\% \tag{17}$$

and

$$K_{dce} = \frac{2\,TP}{2\,TP + FP + FN} \times 100\% \tag{18}$$

Additionally, two local similarity metrics of sensitivity and sensibility [35] were employed to evaluate the degree of under-segmentation and over-segmentation using

$$K_{sst} = \frac{TP}{TP + FN} \times 100\% \tag{19}$$

and

$$K_{sbl} = \left( 1 - \frac{FP}{TP + FN} \right) \times 100\% \tag{20}$$

Finally, a pixel number difference measure was included to compute the absolute difference between the pixel number of the segmentation result, $TP + FP$, and the pixel number of the gold standard, $TP + FN$, with

$$N_{dif} = \left| (TP + FP) - (TP + FN) \right| = \left| FP - FN \right| \tag{21}$$

Another pixel number distinction metric was adopted to count the wrongly labeled pixels while taking their spatial correspondence into account:

$$N_{dst} = FP + FN \tag{22}$$

A pixel number error ratio metric indicating the percentage of the pixel number distinction to the ground truth pixel number was exploited to understand the overall segmentation error with

$$R_{err} = \frac{FP + FN}{TP + FN} \times 100\% \tag{23}$$
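The five similarity metrics can be computed directly from the true positive, false positive, and false negative counts of a pair of binary masks. The sketch below returns fractions rather than percentages, uses the sensibility form 1 - FP / (TP + FN) as commonly attributed to [35], and relies on hypothetical function names; it assumes both masks have the same size.

```python
def confusion_counts(seg, gold):
    """Count true positives, false positives, and false negatives pixel by pixel."""
    tp = fp = fn = 0
    for seg_row, gold_row in zip(seg, gold):
        for s, g in zip(seg_row, gold_row):
            if s and g:
                tp += 1
            elif s:
                fp += 1
            elif g:
                fn += 1
    return tp, fp, fn


def similarity_metrics(seg, gold):
    """Conformity, Jaccard, Dice, sensitivity, and sensibility as fractions."""
    tp, fp, fn = confusion_counts(seg, gold)
    if tp == 0:
        raise ValueError("no overlap: the ratios are undefined")
    return {
        "conformity": 1.0 - (fp + fn) / tp,
        "jaccard": tp / (tp + fp + fn),
        "dice": 2.0 * tp / (2.0 * tp + fp + fn),
        "sensitivity": tp / (tp + fn),
        "sensibility": 1.0 - fp / (tp + fn),
    }
```

Note that conformity becomes negative once the mis-segmented pixels outnumber the correctly segmented ones, which makes it a stricter measure than Jaccard or Dice for poor segmentations.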

4.3. Evaluation of Rat Brain Extraction

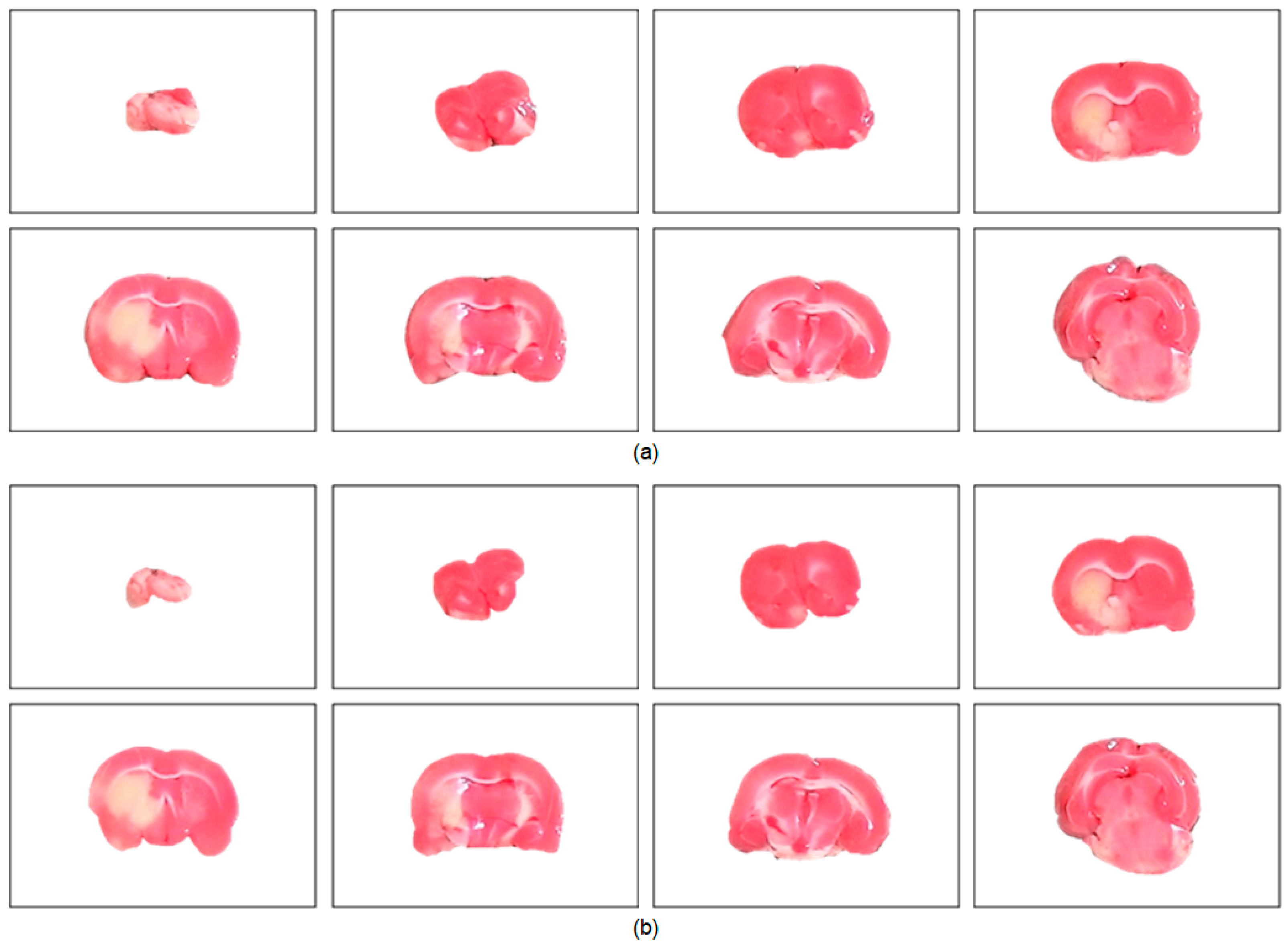

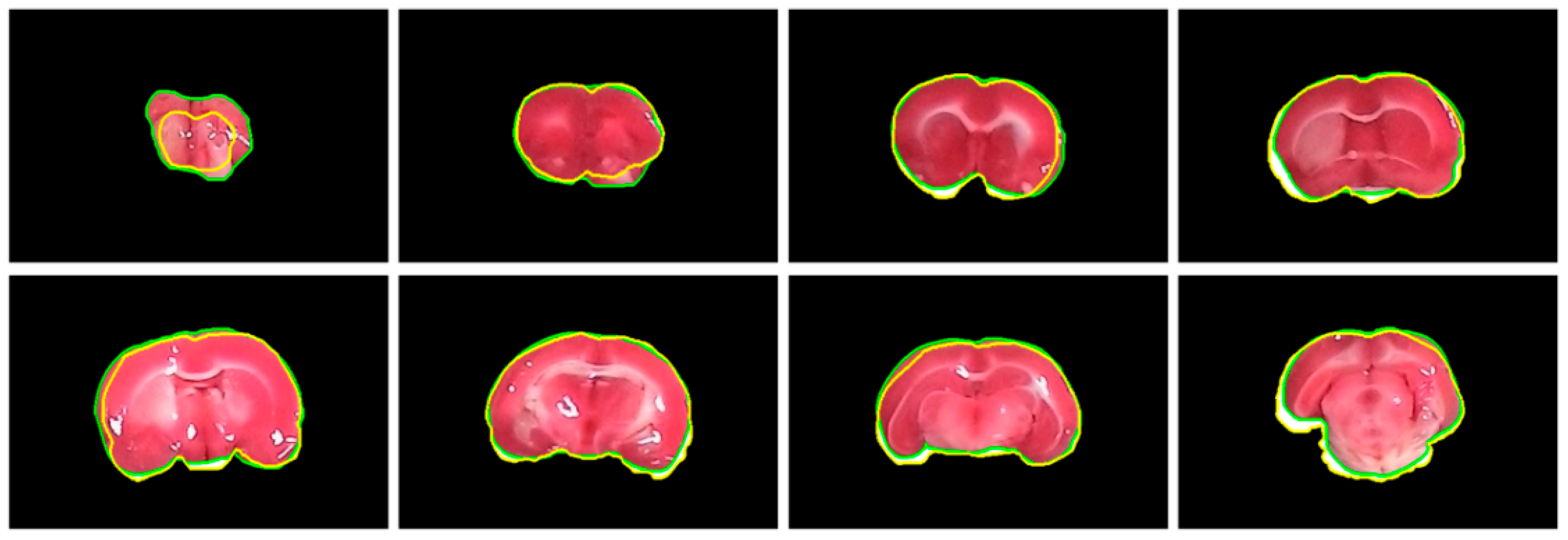

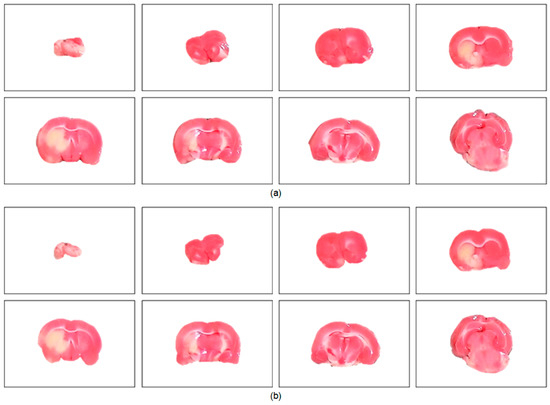

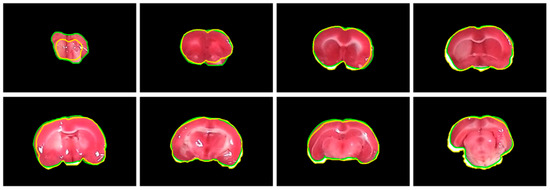

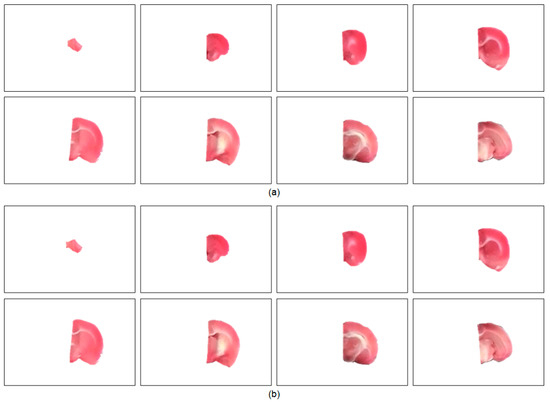

The performance of the proposed brain extraction algorithm in the experimental image data was first evaluated. The input images were the original camera-based TTC-stained rat brain slices as illustrated in Figure 1. The segmentation goal is to generate eight individual brain slices per specimen from the big photographic images with complicated scenes. Figure 3 visually demonstrates the rat brain extraction results of a representative example (Subject 37) in comparison with the gold standard slices. To facilitate subsequent processing, each brain slice was stored in a 480 × 320 image. It was noted that the extracted brain slices in Figure 3a overcame the influences of light reflection and exhibited quite clean boundaries and complete structures. Another brain extraction instance (Subject 20) is depicted in Figure 4, where the green contours represent the segmented brain boundaries and the yellow contours indicate the gold standard outlines. The two different contours mostly overlapped each other but with apparent distinction in some segments. The separation distances between our automatic extraction curves and the gold standard outlines were relatively larger as the brain regions were getting smaller, especially for the first two slices. This is mainly because the rat brain is roughly an ellipsoid with varied cross sections. The inevitable thickness inherited in each cut brain slice results in two different brain sections being captured, where the top brain region is the desired target not the bottom brain section. Particularly for the first slice, which belongs to the olfactory bulb, the upper brain section is considerably smaller than the bottom brain section due to the rapidly changed geometry. Since the distinction between the bottom brain section and the background is stronger than the discrimination between the top and bottom brain sections, the proposed rat brain extraction algorithm mistakenly selected the bottom brain boundary as the segmentation output.

Figure 3.

Visual evaluation of the rat brain extraction results of Subject 37. There are eight brain slices extracted from a large compound image (Figure 1) and stored in the same 480 × 320 image. (a) The proposed algorithm. (b) The gold standard.

Figure 4.

Visual comparison for Subject 20 between our rat brain extraction contours (green) and the gold standard outlines (yellow). The brain extraction results were superimposed on the gold standard images with a dimension of 480 × 320 for all slices.

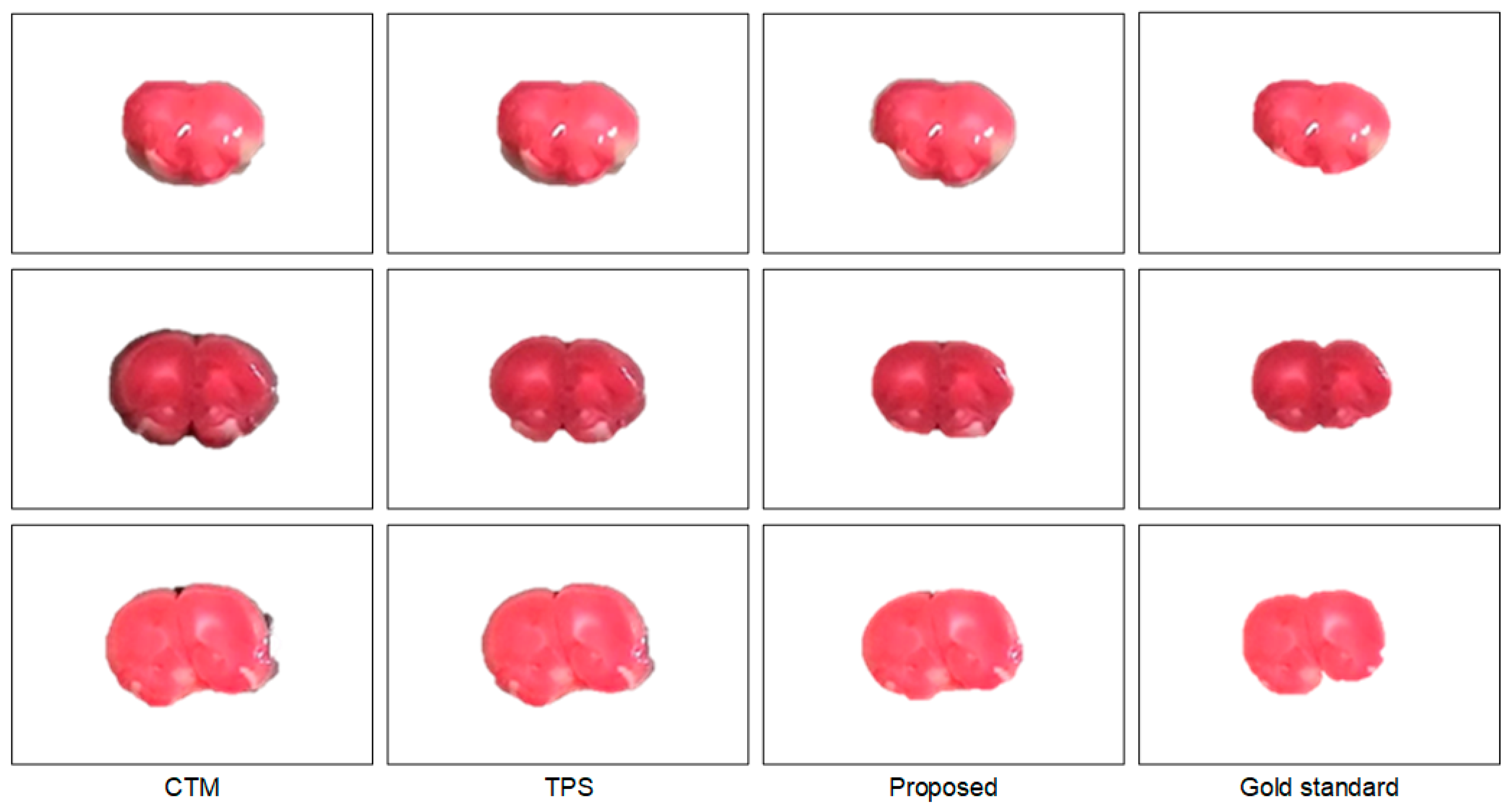

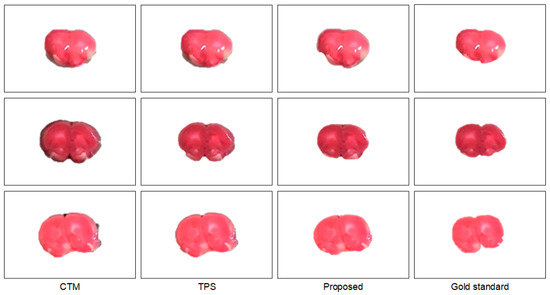

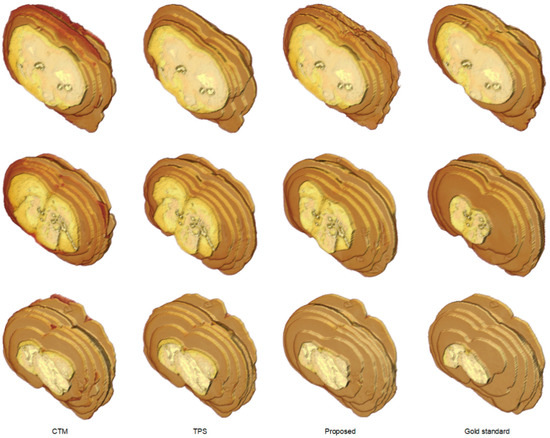

To further understand the characteristics of the proposed algorithm and demonstrate the difficulty of rat brain extraction in TTC-stained images, we compared our segmentation results with two different approaches: the color thresholding method (CTM) [38] and the two phase segmentation (TPS) using active contours [39]. Because no code for dedicated rat brain extraction methods was available for comparison, these two methods were chosen: the simple CTM provides a baseline, whereas the popular TPS shows the improvement attainable with a more advanced scheme. Figure 5 illustrates the extracted brain slices of three different subjects using the CTM, TPS and proposed methods along with the gold standard. The segmentation results produced by the CTM included visible background pixels around the brain surfaces, which led to relatively larger brain areas. Fewer background pixels were observed in the TPS brain slices compared with the CTM results. Our rat brain extraction algorithm outperformed the competing methods, generating the brain slices that best resembled the gold standard in all scenarios.

Figure 5.

Visual comparison of brain extraction results in rat TTC-stained images using different methods. Top row: slice 1 of Subject 2. Middle row: slice 2 of Subject 20. Bottom row: slice 3 of Subject 37.

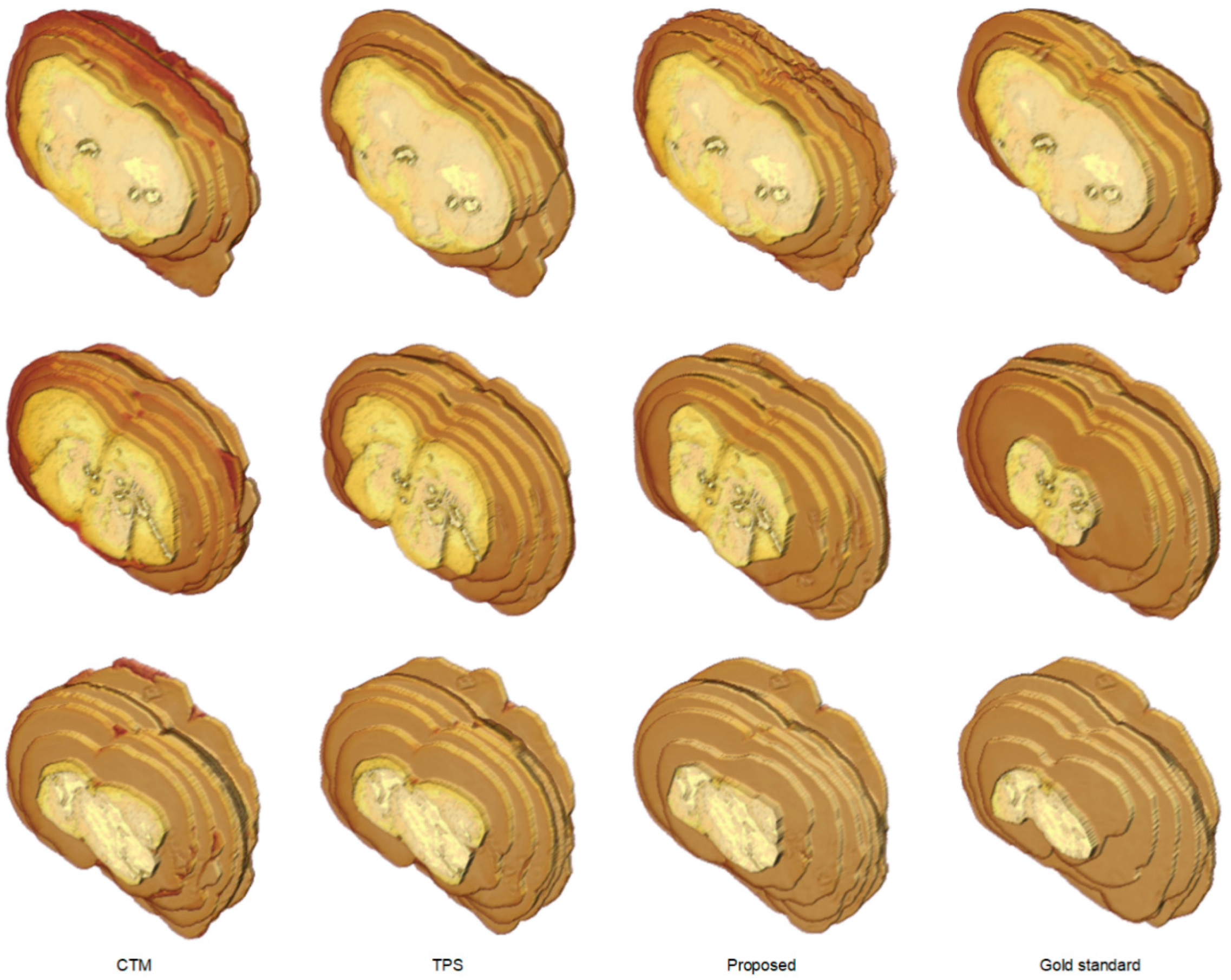

The segmentation outcomes of all brain slices of the representative examples in Figure 5 are shown in Figure 6 in 3-D view. While the CTM results exhibited larger geometric differences from the gold standard, the difference was diminished by the TPS method and further reduced by the proposed framework. Table 1 presents the segmentation accuracy in terms of for the brain extraction outcomes in Figure 5 and Figure 6, where our scheme obtained the highest scores in all instances. The quantitative performance assessment of all tested methods on the 40 rat brain subjects in the acquired dataset, using five different evaluation metrics, is summarized in Table 2. Both CTM and TPS methods produced high average scores but low average values, which corresponded to the observation in Figure 5 and Figure 6 that excessive background regions were included in the segmentation results. The proposed rat brain extraction algorithm, in contrast, provided a much higher average score than the CTM and TPS methods, which resulted in the greatest average value. Indeed, our segmentation scheme yielded the best average scores in all performance evaluation metrics. Nevertheless, the efficacy of the proposed method can deteriorate if the light reflection region is tightly connected to the brain boundaries or if the light reflection region inside the brain produces a deep cavity. The computation times were approximately 0.53 s, 1.67 s and 1.52 s for the CTM, TPS and proposed methods, respectively.

Figure 6.

Visual comparison of rat brain extraction results in TTC-stained images using different methods in 3-D view. Top row: Subject 2. Middle row: Subject 20. Bottom row: Subject 37.

Table 2.

Quantitative performance analyses of the rat brain extraction methods applied to the TTC-stained image dataset.

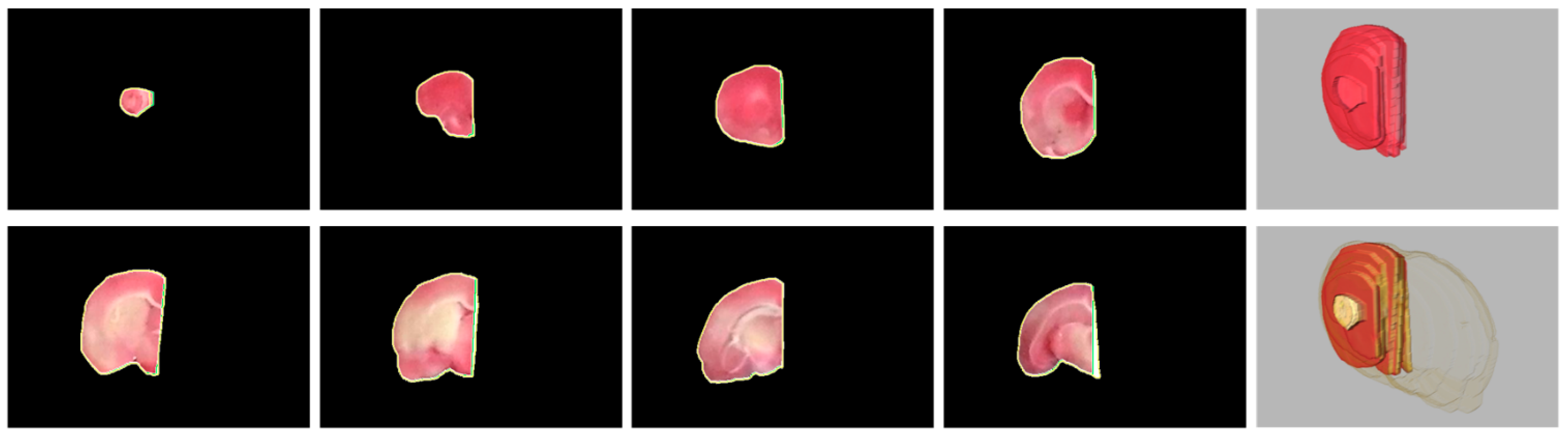
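The link between over-segmentation and overlap scores discussed above can be illustrated with a minimal sketch. It assumes binary masks stored as nested lists and uses Dice, Jaccard and sensitivity as illustrative measures; these function names and the toy masks are hypothetical, and the five metrics reported in Table 2 may be defined differently. A mask that swallows extra background keeps a perfect sensitivity but loses Dice and Jaccard, whereas a tight mask with one missing pixel scores higher on both overlap measures.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = brain pixel, 0 = background (toy representation)


def confusion_counts(pred: Mask, gold: Mask) -> Tuple[int, int, int]:
    """Count true positives, false positives and false negatives pixel by pixel."""
    tp = fp = fn = 0
    for pred_row, gold_row in zip(pred, gold):
        for p, g in zip(pred_row, gold_row):
            if p and g:
                tp += 1
            elif p and not g:
                fp += 1
            elif g and not p:
                fn += 1
    return tp, fp, fn


def dice(pred: Mask, gold: Mask) -> float:
    """Dice coefficient: 2|A∩B| / (|A| + |B|)."""
    tp, fp, fn = confusion_counts(pred, gold)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def jaccard(pred: Mask, gold: Mask) -> float:
    """Jaccard index: |A∩B| / |A∪B|."""
    tp, fp, fn = confusion_counts(pred, gold)
    denom = tp + fp + fn
    return tp / denom if denom else 1.0


def sensitivity(pred: Mask, gold: Mask) -> float:
    """Fraction of gold standard pixels recovered by the prediction."""
    tp, _, fn = confusion_counts(pred, gold)
    return tp / (tp + fn) if (tp + fn) else 1.0


# Toy gold standard: an 8-pixel "brain" inside a 4 x 6 image.
gold = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
# Over-segmented result: the brain plus 8 background pixels.
over = [
    [0, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 0],
]
# Tight result: the brain with a single pixel missing.
tight = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

for name, mask in (("over", over), ("tight", tight)):
    print(name, round(sensitivity(mask, gold), 3),
          round(dice(mask, gold), 3), round(jaccard(mask, gold), 3))
```

In this toy case the over-segmented mask recovers every gold standard pixel yet only reaches a Dice of 2/3, which mirrors the pattern of background inclusion described for the CTM and TPS results.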

4.4. Evaluation of Rat Hemisphere Segmentation

After extracting the individual rat brain slices, an important task is to separate the brain into two hemispheres for further processing and analysis. Figure 7 illustrates the split left brain regions of a representative specimen using the proposed hemisphere segmentation algorithm along with the gold standard hemisphere. The segmented left brain slices approximately conformed to the gold standard brain surfaces with a volumetric . Figure 8 shows the eight separated right brain regions and their 3-D view of the same subject in Figure 7, where the segmentation contours overlapped the gold standard contours. The midlines estimated by our framework were quite close to the midlines delineated by the expert in most brain slices, leading to high evaluation scores of , and . Some midlines were convoluted because the brain was distorted by the infarction, which increased the difficulty of accurate segmentation. Since rat hemisphere segmentation in TTC-stained images is a specialized task and, to the best of our knowledge, no other code has been publicly released for comparison, the scheme developed for our dataset was evaluated by computing the abovementioned performance metrics against the gold standard. Table 3 presents the quantitative similarity evaluation scores of our rat hemisphere segmentation results in the TTC-stained image dataset. The segmentation accuracy of the left and right hemispheres was comparable in all performance metrics, which exhibited high overall average values of , and . Finally, Table 4 summarizes our hemisphere segmentation error analyses, where the left hemisphere outcome indicated a lower average score whereas the right hemisphere outcome revealed a smaller average score. The overall volumetric segmentation error attained a low average .

Figure 7.

Visual evaluation of the left hemisphere segmentation results of Subject 16. (a) The proposed algorithm. (b) The gold standard. The values were 93.06%, 98.29%, 97.92%, 98.22%, 98.51%, 97.53%, 99.02% and 96.52% from top left to bottom right, respectively.

Figure 8.

Visual comparison between the rat hemisphere segmentation contours (green) and the gold standard outlines (yellow). From top left, the values were 88.58%, 97.58%, 97.01%, 97.24%, 98.03%, 96.26%, 98.78% and 92.79%, respectively. Top right: 3-D view of the segmented right hemisphere, . Bottom right: 3-D view of the segmented hemisphere superimposed with the gold standard, .

Table 3.

Quantitative performance analyses of the rat hemisphere segmentation results in the TTC-stained image dataset based on similarity measure metrics.

Table 4.

Quantitative performance evaluation of the rat hemisphere segmentation results in the TTC-stained image dataset based on error analysis metrics.
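To make the per-slice and volumetric evaluation of the hemispheres more concrete, the following minimal sketch splits a binary brain mask along a midline and scores each hemisphere with the Dice coefficient. It assumes, purely for illustration, that the midline is given as one column index per image row and that the volumetric score pools pixel counts over all slices of a subject; the function names, the midline representation and the toy data are hypothetical and are not taken from the authors' implementation.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]  # 1 = brain pixel, 0 = background (toy representation)


def split_by_midline(mask: Mask, midline: Sequence[int]) -> Tuple[Mask, Mask]:
    """Split a brain mask into left/right parts.

    midline[r] is the column of the first right-hemisphere pixel in row r.
    """
    left = [[v if c < m else 0 for c, v in enumerate(row)]
            for row, m in zip(mask, midline)]
    right = [[v if c >= m else 0 for c, v in enumerate(row)]
             for row, m in zip(mask, midline)]
    return left, right


def overlap_counts(pred: Mask, gold: Mask) -> Tuple[int, int, int]:
    """Return (intersection size, predicted size, gold standard size)."""
    inter = sum(1 for pr, gr in zip(pred, gold) for p, g in zip(pr, gr) if p and g)
    n_pred = sum(1 for row in pred for p in row if p)
    n_gold = sum(1 for row in gold for g in row if g)
    return inter, n_pred, n_gold


def dice_from_counts(inter: int, n_pred: int, n_gold: int) -> float:
    total = n_pred + n_gold
    return 2 * inter / total if total else 1.0


def slice_dice(pred: Mask, gold: Mask) -> float:
    """Dice coefficient of a single slice."""
    return dice_from_counts(*overlap_counts(pred, gold))


def volumetric_dice(pred_slices: Sequence[Mask], gold_slices: Sequence[Mask]) -> float:
    """Dice coefficient with pixel counts pooled over all slices."""
    inter = n_pred = n_gold = 0
    for pred, gold in zip(pred_slices, gold_slices):
        i, a, b = overlap_counts(pred, gold)
        inter, n_pred, n_gold = inter + i, n_pred + a, n_gold + b
    return dice_from_counts(inter, n_pred, n_gold)


# Two toy 3 x 6 slices that are entirely brain tissue.
brain = [[1] * 6 for _ in range(3)]
gold_midlines = [[3, 3, 3], [3, 3, 3]]
pred_midlines = [[3, 4, 3], [3, 3, 3]]  # first slice deviates by one pixel

gold_left = [split_by_midline(brain, m)[0] for m in gold_midlines]
pred_left = [split_by_midline(brain, m)[0] for m in pred_midlines]

per_slice = [slice_dice(p, g) for p, g in zip(pred_left, gold_left)]
print([round(d, 4) for d in per_slice], round(volumetric_dice(pred_left, gold_left), 4))
```

Under these assumptions the pooled score differs slightly from the mean of the per-slice scores, because larger slices contribute more pixels to the volumetric count.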

Motivated by the need to assist experimental stroke investigation with TTC-stained rat images, we developed dedicated brain extraction and hemisphere segmentation algorithms in this work. To manage the raw TTC-stained image data captured by a smartphone and to handle the light reflection and background noise issues caused by the camera sensor, the brain extraction framework consisted of five different processing phases. After similar pixels were aggregated into superpixels, the salient region extraction scheme efficiently separated the brain foreground from the complicated background while reducing the influence of the sensor-related issues. To eliminate the thickness regions contained in the initial brain extraction results, the parametric deformable model associated with the color image transformation scheme refined the rat brain boundaries as demonstrated in Figure 3 and Figure 4. Qualitative and quantitative comparisons between the proposed approach and other competing methods further validated the effectiveness of our approach, as presented in Figure 5 and Figure 6 as well as Table 1 and Table 2, respectively. For the hemisphere segmentation framework, three different phases were designed to deal with the difficulty of the midline computation. By restricting the estimation to the medial subimage and then applying the GVF model, more accurate hemisphere segmentation results were acquired as shown in Figure 7 and Figure 8, and evaluated in Table 3 and Table 4. Lastly, the average hemisphere segmentation processing time was 0.85 s.

5. Conclusions

This paper introduced strategies for two separate tasks in TTC-stained rat images: brain extraction and hemisphere segmentation. To diminish the influence of optical and sensor-related issues, the proposed rat brain extraction algorithm was founded on the integration of superpixel saliency detection and parametric contour segmentation. For rat hemisphere segmentation, open curve evolution guided by the gradient vector flow in a restricted subimage was exploited. A wide variety of TTC-stained rat brain images captured by a smartphone were generated and employed to evaluate our established frameworks. Experimental results on the segmentation of rat brains and cerebral hemispheres suggested high segmentation accuracy. The developed segmentation algorithms are promising and beneficial in facilitating experimental stroke studies with TTC-stained rat brain images. Future work should investigate how to discriminate the desired brain surfaces from the boundaries favored by color tone-oriented segmentation, in order to narrow the gap between human interpretation and machine recognition.

Author Contributions

Conceptualization, H.-H.C. and S.-T.H.; methodology, H.-H.C. and S.-J.Y.; software, H.-H.C.; validation, H.-H.C. and S.-J.Y.; formal analysis, S.-J.Y. and M.-C.C.; investigation, M.-C.C.; resources, S.-T.H.; data curation, S.-J.Y.; writing—original draft preparation, H.-H.C.; writing—review and editing, S.-J.Y., M.-C.C. and S.-T.H.; visualization, H.-H.C.; supervision, S.-T.H.; project administration, H.-H.C. and S.-T.H.; funding acquisition, H.-H.C. and S.-T.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Ministry of Science and Technology of Taiwan under Grant MOST 107-2320-B-002-043-MY3 and MOST 108-2221-E-002-080-MY3.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Basel Declaration, and approved by the Institutional Animal Care and Use Committee (IACUC) of National Taiwan University College of Medicine (protocol code: 20180432 and date of approval: 14 January 2019).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available for sharing from the corresponding authors upon reasonable request.

Acknowledgments

The authors would like to thank Min-Yi Chen for running the experiments and preparing the data.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhu, L.-H.; Zhang, Z.-P.; Wang, F.-N.; Cheng, Q.-H.; Guo, G. Diffusion kurtosis imaging of microstructural changes in brain tissue affected by acute ischemic stroke in different locations. Neural Regen. Res. 2019, 14, 272–279.

2. Tsai, Y.-H.; Yang, J.-L.; Lee, I.-N.; Yang, J.-T.; Lin, L.-C.; Huang, Y.-C.; Yeh, M.-Y.; Weng, H.-H.; Su, C.-H. Effects of Dehydration on Brain Perfusion and Infarct Core After Acute Middle Cerebral Artery Occlusion in Rats: Evidence From High-Field Magnetic Resonance Imaging. Front. Neurol. 2018, 9, 786.

3. Majumdar, A.; Ward, R. Rank Awareness in Group-Sparse Recovery of Multi-Echo MR Images. Sensors 2013, 13, 3902–3921.

4. Li, L.; Yu, Q.; Liang, W. Use of 2,3,5-triphenyltetrazolium chloride-stained brain tissues for immunofluorescence analyses after focal cerebral ischemia in rats. Pathol.–Res. Pract. 2018, 214, 174–179.

5. Benedek, A.; Móricz, K.; Jurányi, Z.; Gigler, G.; Lévay, G.; Hársing, L.G.; Mátyus, P.; Szénási, G.; Albert, M. Use of TTC staining for the evaluation of tissue injury in the early phases of reperfusion after focal cerebral ischemia in rats. Brain Res. 2006, 1116, 159–165.

6. Shattuck, D.W.; Sandor-Leahy, S.R.; Schaper, K.A.; Rottenberg, D.A.; Leahy, R.M. Magnetic Resonance Image Tissue Classification Using a Partial Volume Model. NeuroImage 2001, 13, 856–876.

7. Chen, H.; Xie, Z.; Huang, Y.; Gai, D. Intuitionistic Fuzzy C-Means Algorithm Based on Membership Information Transfer-Ring and Similarity Measurement. Sensors 2021, 21, 696.

8. Liu, H.-T.; Sheu, T.H.; Chang, H.-H. Automatic segmentation of brain MR images using an adaptive balloon snake model with fuzzy classification. Med. Biol. Eng. Comput. 2013, 51, 1091–1104.

9. Eskildsen, S.F.; Coupé, P.; Fonov, V.; Manjón, J.V.; Leung, K.K.; Guizard, N.; Wassef, S.N.; Østergaard, L.R.; Collins, D.L. BEaST: Brain extraction based on nonlocal segmentation technique. NeuroImage 2012, 59, 2362–2373.

10. Dayananda, C.; Choi, J.-Y.; Lee, B. Multi-Scale Squeeze U-SegNet with Multi Global Attention for Brain MRI Segmentation. Sensors 2021, 21, 3363.

11. Kalavathi, P.; Prasath, V.B.S. Methods on Skull Stripping of MRI Head Scan Images—A Review. J. Digit. Imaging 2016, 29, 365–379.

12. Murugavel, M.; Sullivan, J.M. Automatic cropping of MRI rat brain volumes using pulse coupled neural networks. NeuroImage 2009, 45, 845–854.

13. Liu, Y.; Unsal, H.S.; Tao, Y.; Zhang, N. Automatic Brain Extraction for Rodent MRI Images. Neuroinformatics 2020, 18, 395–406.

14. He, Q.; Li, S.; Li, L.; Hu, F.; Weng, N.; Fan, X.; Kuang, S. Total Flavonoids in Caragana (TFC) Promotes Angiogenesis and Enhances Cerebral Perfusion in a Rat Model of Ischemic Stroke. Front. Neurosci. 2018, 12, 635.

15. Wexler, E.J.; Peters, E.E.; Gonzales, A.; Gonzales, M.L.; Slee, A.M.; Kerr, J.S. An objective procedure for ischemic area evaluation of the stroke intraluminal thread model in the mouse and rat. J. Neurosci. Methods 2002, 113, 51–58.

16. Goldlust, E.J.; Paczynski, R.P.; He, Y.Y.; Hsu, C.Y.; Goldberg, M.P. Automated Measurement of Infarct Size With Scanned Images of Triphenyltetrazolium Chloride–Stained Rat Brains. Stroke 1996, 27, 1657–1662.

17. Fu, C.; Ma, K.; Li, Z.; Wang, H.; Chen, T.; Zhang, D.; Wang, S.; Mu, N.; Yang, C.; Zhao, L.; et al. Rapid, label-free detection of cerebral ischemia in rats using hyperspectral imaging. J. Neurosci. Methods 2020, 329, 108466.

18. Shi, X.-F.; Ai, H.; Lu, W.; Cai, F. SAT: Free Software for the Semi-Automated Analysis of Rodent Brain Sections With 2,3,5-Triphenyltetrazolium Chloride Staining. Front. Neurosci. 2019, 13, 102.

19. Chang, H.H.; Yeh, S.J.; Chiang, M.C.; Hsieh, S.T. Automated Brain Extraction and Separation in Triphenyltetrazolium Chloride-Stained Rat Images. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021; pp. 1362–1366.

20. Perazzi, F.; Krähenbühl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 733–740.

21. Yang, C.; Zhang, L.; Lu, H.; Ruan, X.; Yang, M. Saliency Detection via Graph-Based Manifold Ranking. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3166–3173.

22. Liu, Z.; Shi, R.; Shen, L.; Xue, Y.; Ngan, K.N.; Zhang, Z. Unsupervised Salient Object Segmentation Based on Kernel Density Estimation and Two-Phase Graph Cut. IEEE Trans. Multimed. 2012, 14, 1275–1289.

23. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

24. Cheng, M.; Zhang, G.; Mitra, N.J.; Huang, X.; Hu, S. Global contrast based salient region detection. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 409–416.

25. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

26. Becker, C.; Rigamonti, R.; Lepetit, V.; Fua, P. Supervised Feature Learning for Curvilinear Structure Segmentation; Springer: Berlin/Heidelberg, Germany, 2013; pp. 526–533.

27. Liu, T.; Sun, J.; Zheng, N.; Tang, X.; Shum, H. Learning to Detect A Salient Object. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

28. Wang, J.; Cohen, M.F. Optimized Color Sampling for Robust Matting. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

29. Kim, J.; Han, D.; Tai, Y.; Kim, J. Salient Region Detection via High-Dimensional Color Transform and Local Spatial Support. IEEE Trans. Image Process. 2016, 25, 9–23.

30. Cheng, M.; Mitra, N.J.; Huang, X.; Torr, P.H.S.; Hu, S. Global Contrast Based Salient Region Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

31. Rother, C.; Kolmogorov, V.; Blake, A. “GrabCut”: Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 2004, 23, 309–314.

32. Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

33. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

34. Xu, C.; Prince, J.L. Snakes, Shapes, and Gradient Vector Flow. IEEE Trans. Image Process. 1998, 7, 359–369.

35. Chang, H.-H.; Zhuang, A.H.; Valentino, D.J.; Chu, W.-C. Performance measure characterization for evaluating neuroimage segmentation algorithms. NeuroImage 2009, 47, 122–135.

36. Jaccard, P. The distribution of flora in the alpine zone. New Phytol. 1912, 11, 37–50.

37. Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

38. Kulkarni, N. Color Thresholding Method for Image Segmentation of Natural Images. Int. J. Image Graph. Signal Process. 2012, 4, 28–34.

39. Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).