Support Vector Machine Classifiers Show High Generalizability in Automatic Fall Detection in Older Adults

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

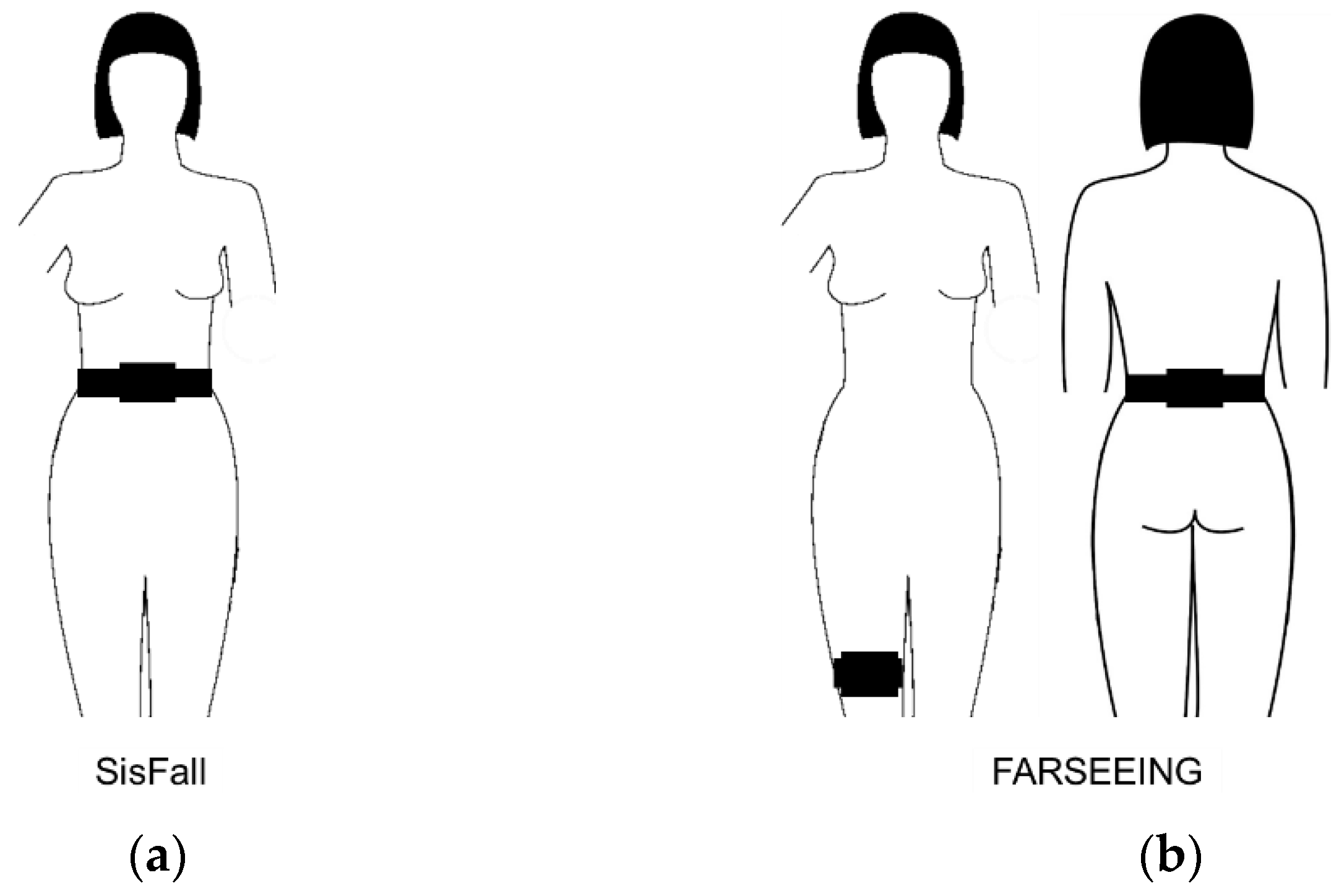

3.1. Dataset

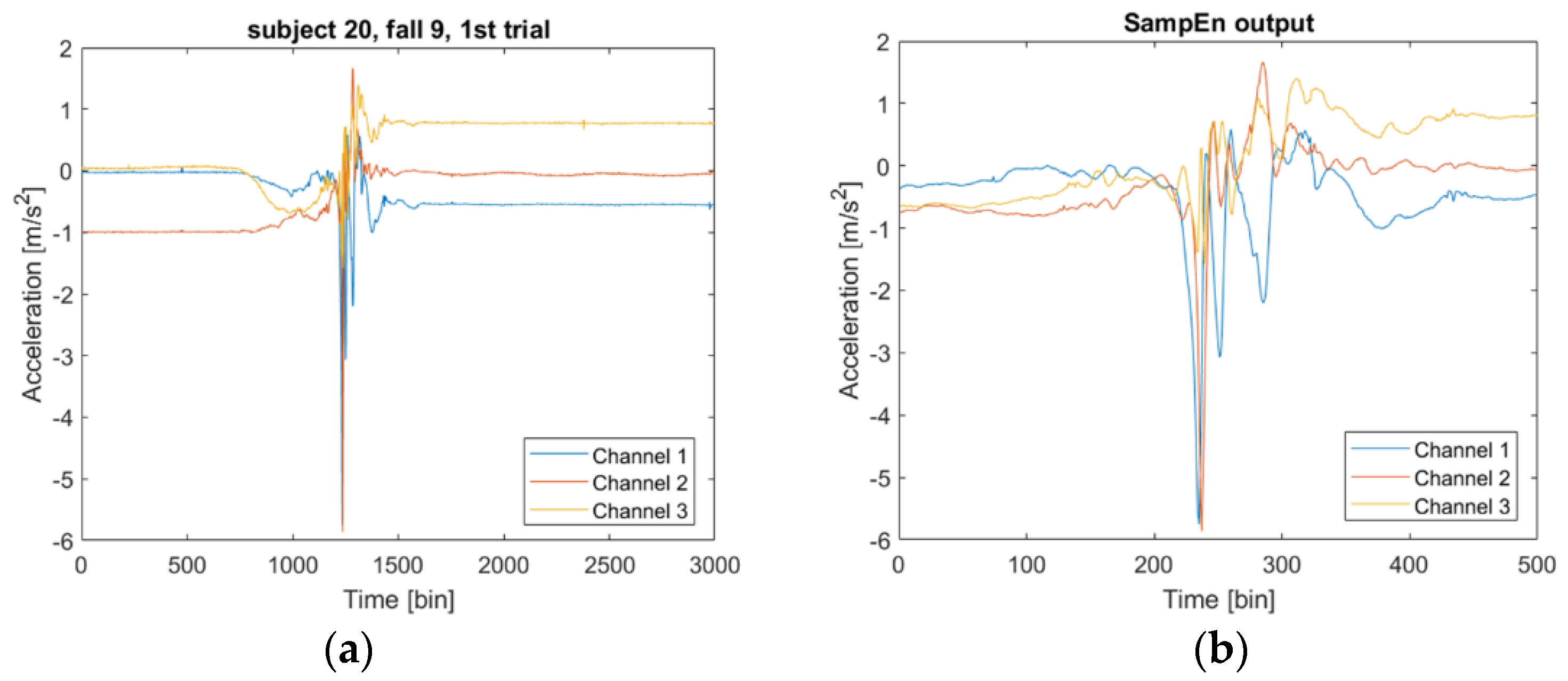

3.2. Data Preprocessing

3.3. Classification Algorithms

3.4. Feature Extraction

3.5. Training of the Classifiers, Classifier Performance, and Statistical Procedures

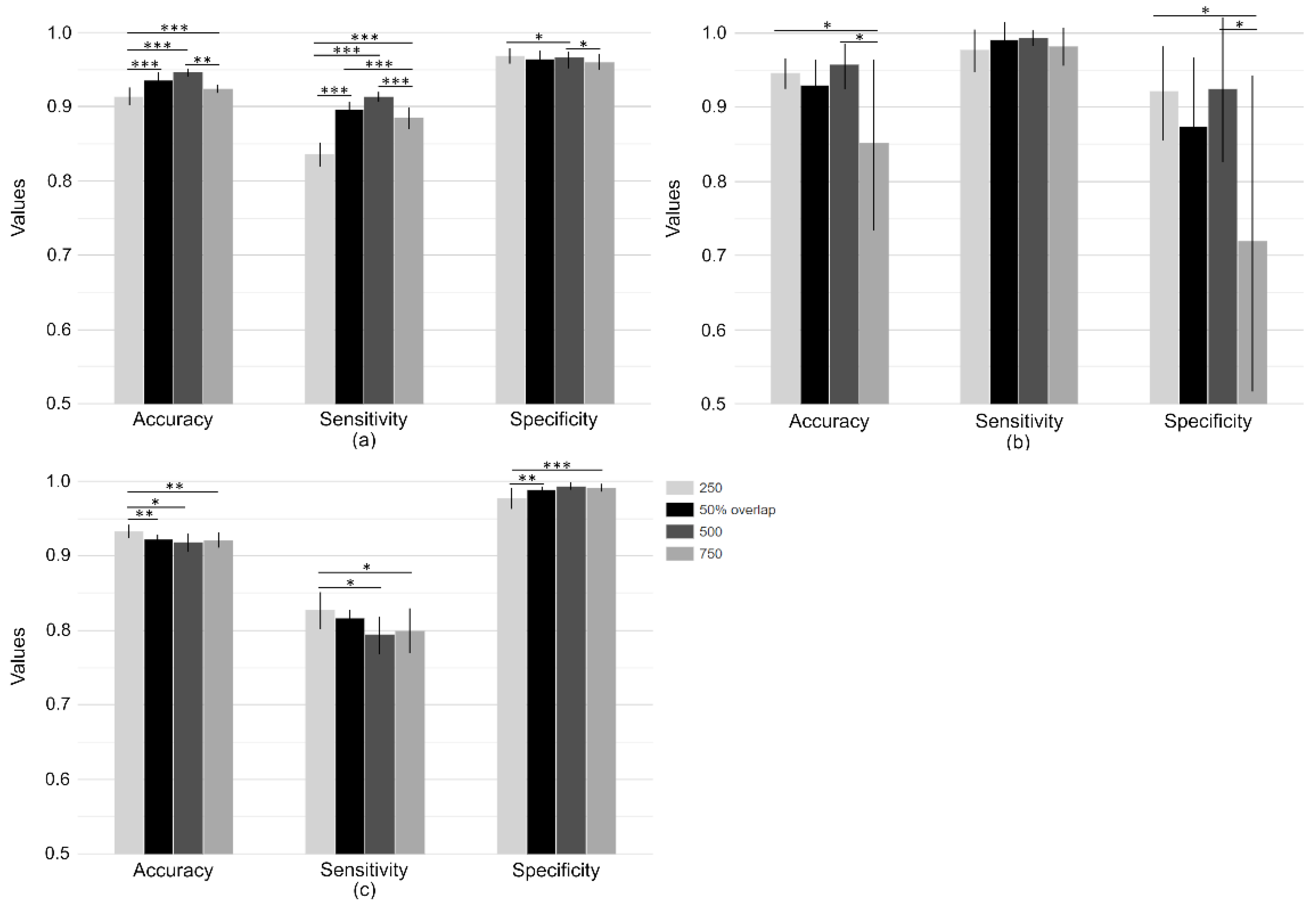

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Activities | Activities |
|---|---|
| Fall forward while walking caused by a slip | Fall backward while walking caused by a slip |
| Lateral fall while walking caused by a slip | Fall forward while walking caused by a trip |
| Fall forward while jogging caused by a trip | Vertical fall while walking caused by fainting |
| Fall backward while sitting, caused by fainting or falling asleep | Fall forward when trying to get up |
| Lateral fall when trying to get up | Fall forward when trying to sit down |
| Fall backward when trying to sit down | Lateral fall when trying to sit down |
| Fall forward while sitting, caused by fainting or falling asleep | Lateral fall while sitting, caused by fainting or falling asleep |
| Fall while walking, with use of hands on a table to dampen fall, caused by fainting | |

| Variable | SVM | kNN | RF |
|---|---|---|---|
| Accuracy | 0.93 ± 0.01 | 0.92 ± 0.01 | 0.94 ± 0.01 |
| Sensitivity | 0.89 ± 0.02 | 0.87 ± 0.02 | 0.91 ± 0.01 |
| Specificity | 0.96 ± 0.01 | 0.97 ± 0.01 | 0.97 ± 0.01 |

| Variable | SVM | kNN | RF |
|---|---|---|---|
| Accuracy | 0.93 ± 0.01 | 0.94 ± 0.01 | 0.94 ± 0.01 |
| Sensitivity | 0.90 ± 0.01 | 0.91 ± 0.01 | 0.90 ± 0.01 |
| Specificity | 0.97 ± 0.01 | 0.96 ± 0.01 | 0.97 ± 0.01 |

| Variable | SVM | kNN | RF |
|---|---|---|---|
| Accuracy | 0.96 ± 0.02 | 0.75 ± 0.10 | 0.60 ± 0.03 |
| Sensitivity | 0.98 ± 0.04 | 0.76 ± 0.11 | 0.92 ± 0.07 |
| Specificity | 0.94 ± 0.04 | 0.74 ± 0.20 | 0.27 ± 0.04 |

| Variable | SVM | kNN | RF |
|---|---|---|---|
| Accuracy | 0.93 ± 0.05 | 0.75 ± 0.07 | 0.58 ± 0.02 |
| Sensitivity | 0.99 ± 0.03 | 0.78 ± 0.12 | 0.95 ± 0.07 |
| Specificity | 0.87 ± 0.10 | 0.73 ± 0.10 | 0.22 ± 0.06 |
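
The tables above report accuracy, sensitivity, and specificity for each classifier. As a reading aid, the following minimal sketch shows the standard confusion-matrix definitions of these three metrics. It assumes that falls are the positive class; the class `ConfusionCounts`, the function names, and the example counts are hypothetical and are not taken from the article or its data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts for a binary fall / non-fall classifier.

    Assumption: a fall is the positive class.
    """
    tp: int  # falls correctly detected
    fn: int  # falls missed
    tn: int  # non-fall activities correctly rejected
    fp: int  # non-fall activities wrongly flagged as falls


def accuracy(c: ConfusionCounts) -> float:
    """Fraction of all trials that were classified correctly."""
    return (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn)


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of actual falls that were detected (true positive rate)."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of non-fall activities that were correctly rejected (true negative rate)."""
    return c.tn / (c.tn + c.fp)


if __name__ == "__main__":
    # Hypothetical counts, chosen only to illustrate the arithmetic.
    counts = ConfusionCounts(tp=90, fn=10, tn=95, fp=5)
    print(f"accuracy    = {accuracy(counts):.3f}")
    print(f"sensitivity = {sensitivity(counts):.3f}")
    print(f"specificity = {specificity(counts):.3f}")
```

Reading the three values together matters: a classifier can combine high sensitivity with low specificity, in which case accuracy alone would hide a large number of false alarms.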

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alizadeh, J.; Bogdan, M.; Classen, J.; Fricke, C. Support Vector Machine Classifiers Show High Generalizability in Automatic Fall Detection in Older Adults. Sensors 2021, 21, 7166. https://doi.org/10.3390/s21217166
