1. Introduction

Research in autonomous driving has aroused increasingly more attention of late [

1,

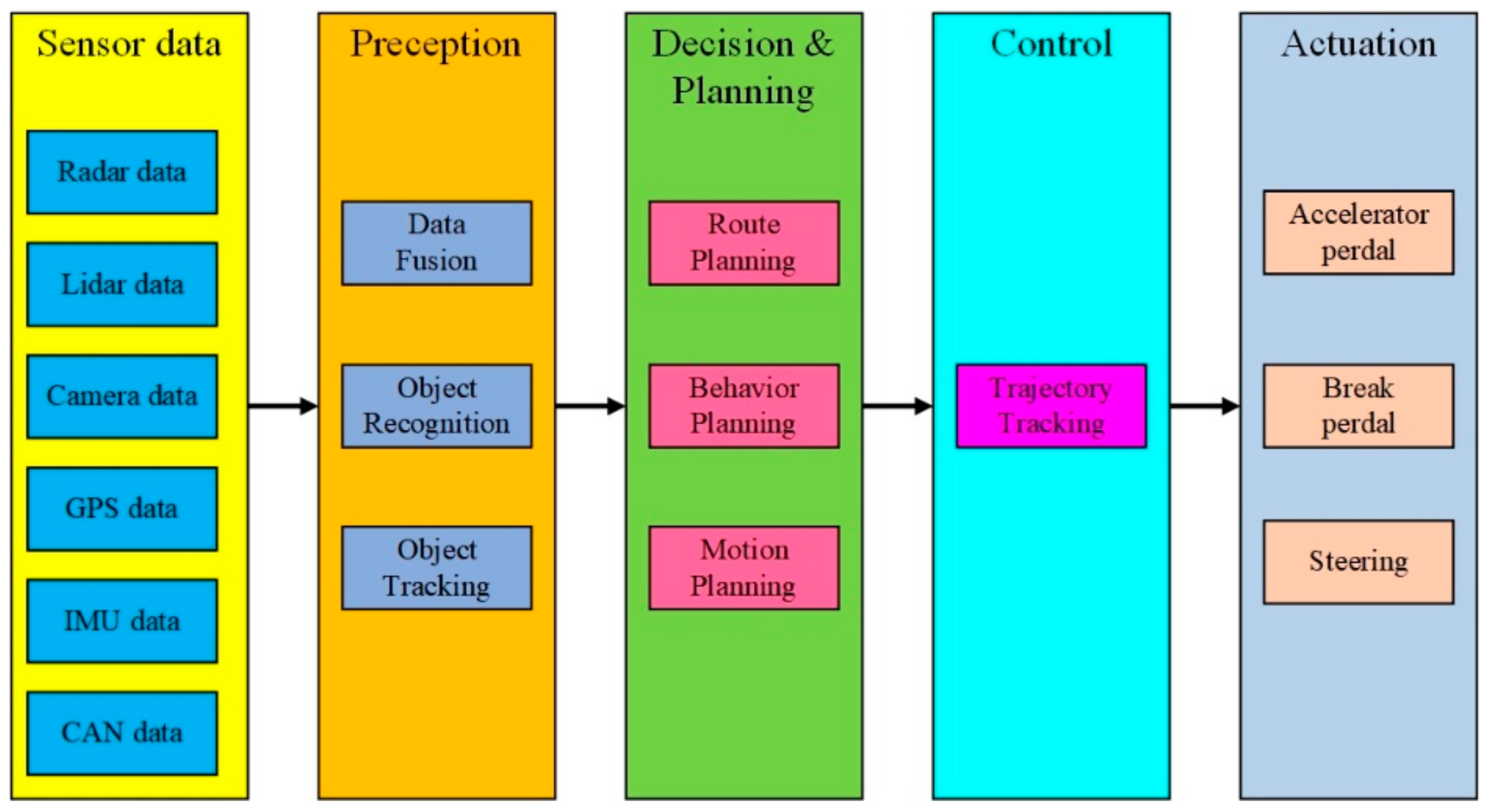

2]. The most basic and important goal of an autonomous passenger vehicle is to free people from driving and safely take passengers from an initial state to a final state in a desired interval of time. The architecture of contemporary autonomous driving systems is typically organized into the perception system and the decision-making system [

3]. The perception system takes charge of estimating the vehicle states and representing the surrounding environment using data from sensors, including Light Detection and Ranging (LIDAR), Radio Detection and Ranging (RADAR), cameras, a Global Positioning System (GPS), and an Inertial Measurement Unit (IMU). In particular, camera data is of vital importance. Tesla released its fully self-driving version 9 Beta software on 10 July 2021, which relies on camera vision and neural net processing to deliver autopilot. The Lane Support System (LSS) uses cameras to identify the road lines and alert drivers to potential hazards. However, there is still much uncertainty regarding the vision needs of LSS and the results of the experimental tests for LSS are quite limited [

4,

5]. Cafiso and Pappalardo [

4] developed logit models to investigate road characteristics and conditions that affects LSS performance and employed the Firth’s penalized maximum-likelihood method to estimate the logistic regression coefficients and standard errors to describe the rareness of the events. They gave threshold values for the luminance coefficient in diffuse lighting conditions and horizontal curvature radius, and presented remarks on road maintenance and design standards. Pappalardo et al. [

5] experimentally tested LSS performance in two lane rural roads with distinct geometric alignments and road marking conditions. They proposed a decision tree method to analyze the cause of the LSS faults and the effects of the variables involved. On the other hand, the decision-making system takes charge of navigating a car from the current position to a goal position safely, feasibly, timely, and comfortably [

6]. The decision-making system can be further divided into three subsystems: a decision and planning system, a control system, and an actuation system. Of them, motion planning is a key autonomous driving technique. Li and Shao [

7] proposed a motion planner for autonomous parking, and the time-optimal dynamic optimization problem with vehicle kinematics, collision-avoidance conditions and mechanical constraints was solved using a simultaneous approach using the interior-point method. Zhang [

8] proposed a hierarchical three-layer trajectory planning framework to realize real-time collision avoidance on highways under complex driving conditions. Therefore, a general framework of an autonomous driving system is shown in

Figure 1. Besides a perception and decision-making system, advanced X-by-wire chassis, including drive-by-wire, steer-by-wire, brake-by-wire and active/semi-active suspension subsystems are of vital importance to improving the performance and safety of connected and autonomous vehicles. Zhang et al. [

9] proposed a fault-tolerant control method for steer-by-wire systems to mitigate the undesirable influence of front wheel steering angle sensor faults via the use of the Kalman filtering technique. A complete and systematic survey on chassis coordinated control methods for full X-by-wire vehicles can be found in [

10]. Here we focus on trajectory tracking, which is a key technology for precisely controlling autonomous vehicles.

The trajectory tracking algorithms are designed to ensure that a vehicle follows a predetermined trajectory generated either offline using navigation systems or online using the motion planning module. The performance of trajectory tracking directly determines the performance of autonomous vehicles, which involves driving safety, passenger comfort, travel efficiency, and energy consumption [

11]. The trajectory tracking control of autonomous vehicles is a challenging research area because these systems typically are nonlinear systems with non-holonomic constraints.

Pure-pursuit [

12] and the Stanley method [

13] are two prevalent geometric controllers. The main advantage of these methods is that they use simple geometric models with few parameters, and therefore can give timely feedback on the current state and constraints to meet the real-time requirement of an autonomous vehicle. The pure-pursuit method and its variants are one of the most commonly used methods to solve the path-tracking problem for mobile robots [

14]. The Stanley method is the path-tracking approach used by Stanley, Stanford University’s autonomous car; Stanley won the DARPA Grand Challenge in 2005 [

13]. However, these methods have their limitations. Pure-pursuit control works as a proportional controller of the steering angle operating on the cross-track error by calculating the curvature from the current position to some goal position. When the look-ahead distance is too large, its performance is poor, and the vehicle may cut corners when changing direction or making a U-turn. The Stanley method considers both the heading and cross-track errors and therefore it is more effective and steady than the pure-pursuit method. But it does not perform well on discontinuous paths. To sum up, geometric-based tracking controllers (pure pursuit, Stanley, etc.) have a simple structure and are easy to implement. However, they are not suitable for applications that need to consider vehicle dynamics (e.g., high-speed trajectory tracking, extreme path curvature, etc.). It is also difficult to achieve a trade-off between stability and tracking performance [

15].

Proportional-Integral-Derivative controllers (PID) [

16] and sliding model controllers (SMC) [

17] are two prevalent classical control algorithms. Although PID controllers have good tracking performance, there is a major challenge in the tuning of the parameters because of the vehicle and tire nonlinearities. SMC is a well-developed nonlinear state-feedback controller and has been used to design vehicle trajectory tracking controllers. Because of the nonlinear control law, SMC shows good tracking accuracy. However, there are several drawbacks: first, its performance is sensitive to the sampling rate of the controller; second, chattering problems exist under certain conditions [

18]; third, robustness is only guaranteed on the sliding surface; and lastly, it needs prior knowledge [

19]. To sum up, compared with geometric-based tracking controllers, model-based tracking methods are more feasible and reliable in real driving scenarios at the cost of the increase in computational burden and complexity.

Reinforcement learning (RL) has shown an ability to achieve super-human results at turn-based games like Go [

20] and chess [

21]. Deep RL has been applied to the decision-making system of autonomous driving in several simulated environments [

22]. Mohammadi et al. [

23] proposed an optimal tracking controller for nonlinear continuous-time systems with time-delay, mismatched external disturbances, and input constraints, using the technique of integral reinforcement learning and a Hamilton-Jacobi-Bellman equation. However, there are two main limitations for RL-based methods. First, they require large amounts of data to build up a feasible model; specifically, data is sometimes expensive and hard to obtain. Second, they require a sufficiently long time to train the model to complete the specific tasks due to significant data manipulation. The performance of the controllers using machine-learning methods relies on the learning capability of the model and the quality of the data.

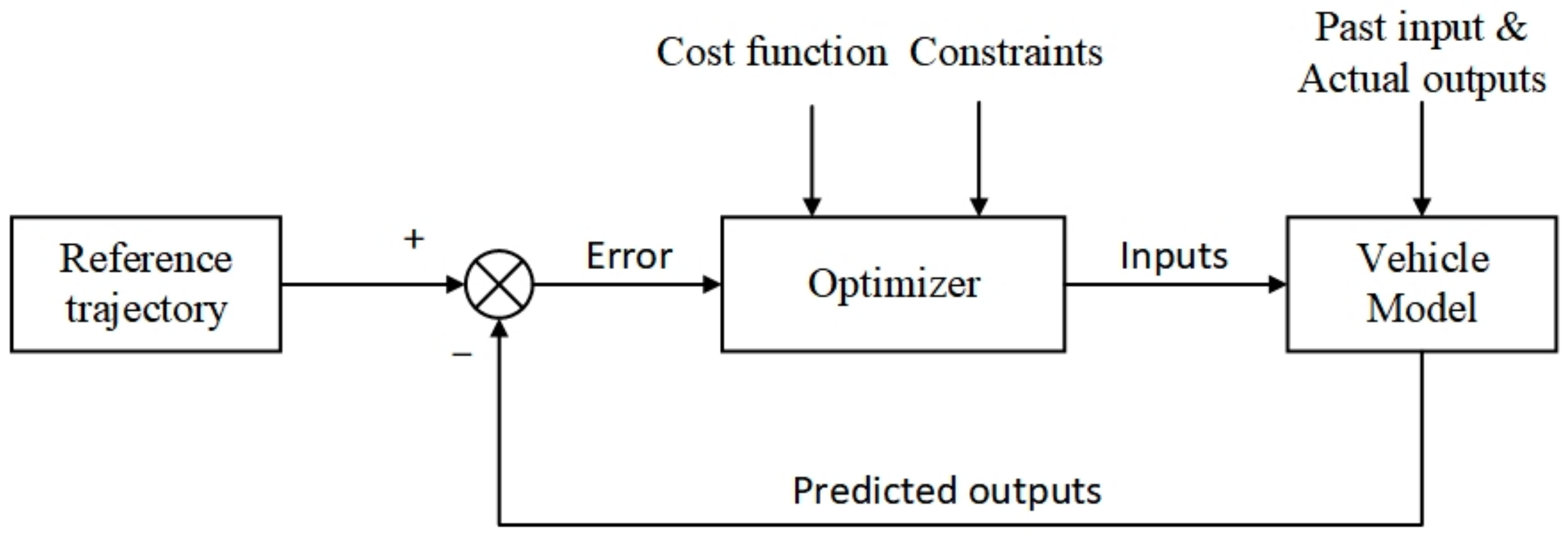

Model Predictive Control (MPC) has been applied to trajectory planning and tracking of an autonomous vehicle due to its flexibility and ability to compute optimal solutions with hard and soft constraints [

24,

25]. Shen et al. [

24] proposed a unified receding-horizon optimization scheme for the integrated path-planning and tracking control of an autonomous underwater vehicle using nonlinear MPC techniques. Borrelli et al. [

25] proposed a novel approach to autonomous steering systems based on an MPC scheme. The general framework of an MPC structure is shown in

Figure 2. However, these MPC-based tracking controllers are feasible only in low-speed scenarios.

The accuracy of the trajectory tracking control can be greatly improved by improving the accuracy of the predictive model. Most researchers have attempted to improve the accuracy of the kinematic or dynamic model to improve the accuracy of the controller. Few people paid attention to the computation errors during the integration process. With the accumulation of the computation errors, the controller could lose its stability or an accident might even result.

In this paper, we propose a new trajectory-tracking algorithm based on MPC. Instead of using the forward Euler integration method, the backward Euler integration method is used to establish the predictive model.

The contributions here can be summarized as follows:

A trajectory tracking method is proposed based on MPC. Instead of using the forward Euler method, the backward Euler method is used to establish the predictive model. The proposed method is designed to meet the real-time requirement of autonomous vehicles by structuralizing the control law and employing the warm-start strategy.

Unlike conventional MPC-based controllers, both the acceleration and steer angle are control inputs. The proposed MPC-based controller can automatically adjust the velocity according to the information of the reference trajectory.

The dynamic regret of the proposed controller is tightly bounded, and the closed-loop controller is proved to be stable.

The MPC controller using the backward Euler method has a better tracking accuracy in the lateral error, and it is more robust.

This paper is organized as follows. The MPC-based controller of autonomous vehicles is described in

Section 2. After that, the stabilizability of the controller is discussed in

Section 3. Simulation results are shown in

Section 4.

Section 5 concludes this paper by summarizing all of the main results.

2. Control Design

Establishing a prediction model and designing a rolling optimization function are the kernels of designing a path tracking controller. Due to the strongly nonlinearity of vehicle dynamics, it is very hard to establish a model to describe the actual vehicle dynamics. Researchers generally use Ackermann steering geometry and its simplified bicycle models to describe the vehicle kinematics and dynamics. MPC schemes using dynamic vehicle models and various tire models are generally computationally expensive, and tire models may become singular at low speeds [

26]. Kong et al. [

26] compared a kinematic and a dynamic bicycle model, and showed that both models could correctly predict a vehicle’s future states, and combining MPC schemes with a simple kinematic bicycle model is less computationally expensive. Polack et al. [

27] compared a 3-DOF kinematic bicycle model with a 9-DOF model, and showed that the 3-DOF model could capture enough of the non-holonomic constraints of the actual vehicle dynamics. When the maximum-allowed lateral acceleration of a vehicle was no greater than 0.5

g m/s

2, where

g is the acceleration due to gravity, using a 3-DOF kinematic bicycle model produces acceptable results and could generate a feasible track. Chen et al. [

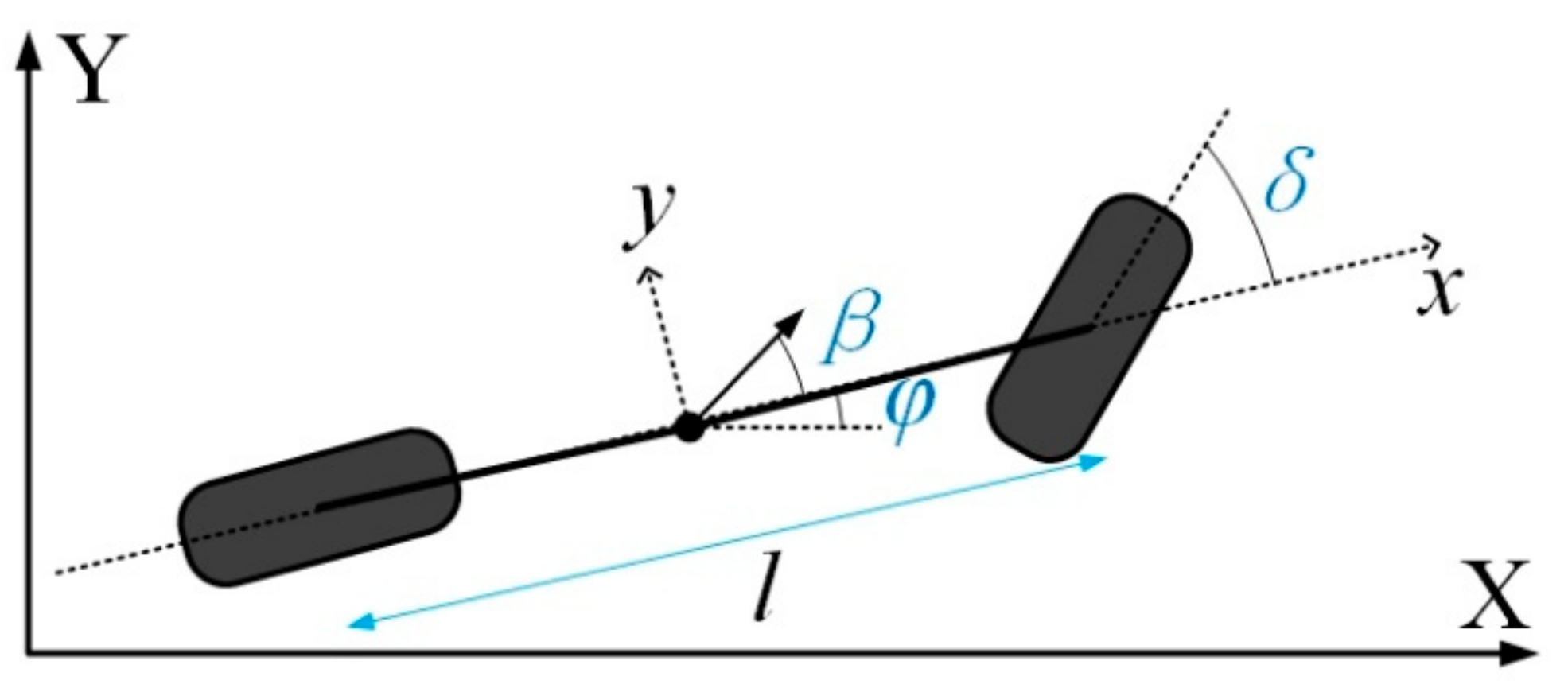

28] implemented an MPC-based controller for path-tracking using three vehicle dynamics models: a bicycle model, an 8-DOF model and a 14-DOF model. They showed that the bicycle controller could successfully navigate a vehicle along the given path and calculate the optimal steering sequences faster than the controllers with the 8-DOF and 14-DOF vehicle. They concluded that the bicycle controller is suitable for a possible physical implementation with real-time requirements. Therefore, in this paper, the kinematic model of autonomous vehicles is used [

26,

29]

where

x and

y are the coordinates of the center of mass in an inertial frame (

X,

Y).

φ is the inertial heading, and

v is the longitudinal speed of the vehicle. The parameters

lf and

lr are the distance from the center to the front and rear axles, and

δ is the front steering angle. Two front and two rear wheels of the vehicle are combined into single wheels located at the center of the front and rear axle, respectively, as illustrated in

Figure 3.

β is the slip angle at the center of gravity.

In our problem, X = [x, y, φ, v] is the vehicle state, U = [a, δ] is the control state. The model is established based on the following assumptions.

The vehicle is traveling on a flat surface, with the vehicle’s movement perpendicular to the road surface ignored.

Only the front wheel can be steered.

The wind resistance and ground-side friction that the wheels are subjected to while driving are ignored.

The wheels always maintain good rolling contact with the ground.

The impact of the vehicle suspension is not taken into account.

Load transfer is not considered.

The state-space equations of the vehicle system (1) are continuous in time and cannot be used for the design of the MPC algorithm directly. Therefore, the model of the system was converted to discrete state-space equations by discretizing the state-space equations. We assume that the model can be rewritten as

Generally, the state at

k + 1 instant at time

t is computed using the forward Euler integration method

where

Ts is the sampling time.

In this paper, instead of the forward Euler method, the backward Euler method is used to establish the predictive model. Although it requires an extra computation at each iteration, the backward Euler method has great stability properties and its local truncation error is of order , which is much smaller than using the forward Euler method. Hence, the backward Euler method’s error generally decreases faster as

The state at

k + 1 instant at time

t is computed using the backward Euler method

Equation system (8) can be rewritten as

Therefore, the state information of vehicles in the prediction horizon

NP can be obtained

where

Nc is the control horizon and 1 ≤

Nc ≤

NP, which denotes component-wise inequality.

The differences between the predictive states and the reference trajectory X

ref are defined as follows

To ensure the passenger comfort and feasibility of the vehicle, the output control should be varied as smoothly as possible. Therefore, the optimization objective function is defined as

where

Q and

R are the weight matrices for the vehicle states and control states, respectively. Consequently, the rolling optimization can be obtained by solving the constrained optimization problem in every sampling period

where (

amin,

amax) and (

δmin,

δmax) are the hard constraints of the vehicle. The last constraints are added to ensure safety driving.

The control inputs are obtained by solving the optimization problem (9). The first element in the control inputs is taken as the optimal control at the current time. After the prediction and control of the current time step are completed, the states are updated with the actual ones, which are then used as the initial states for the optimization problem in the next predictive horizon. The process is repeated until the vehicle reaches the final state.

The problem (9) is a quadratic programming (QP) one which is a traditional optimization problem for trajectory tracking. The first term in the cost function requires that the actual trajectory be as close as possible to the reference trajectory to ensure the safety and feasibility of the trajectory. The second term requires that the control input be varied smoothly to ensure the feasibility of the vehicle and the comfort of passengers. The difference between the reference and the actual trajectory must be sufficiently small. Otherwise, it may lead to a crash, and the trajectory is no longer feasible.

To meet the real-time requirement, instead of directly calculating a control sequence by solving (9), we solve an approximate optimization problem by imposing the constraints

uk+1 =

uk+2 =

… =

uk+Nc on the control law. Therefore, we only need to calculate a ‘mediocre’ control to follow the given trajectory. This significantly reduces the complexity of the primal problem as it dramatically reduces the number of the variables. It is worth noting that imposing the constraint conditions

uk+1 = … = uk+Nc on (9) is equivalent to setting

Nc to 1. Besides greatly reducing the computational burden, one of the most telling advantages of structuralizing the control is to produce an improvement in the robustness and in the general behavior of the system, because allowing the free evolution of the manipulated variables could lead to undesirable high-frequency control signals and even to instability as noted in [

30]. We note that, if the coefficient matrices

Q and

R are positive semi-definite, the primal problem is tightly bounded and the approximate problem is also tightly bounded. Since

Q and

R are positive semi-definite,

x1TQx1 ≥ 0,

x2TRx2 ≥ 0. Hence, the cost function in (9) is convex. The feasible region subjected to the constraint conditions (linear equations and inequalities) in (9) is also convex. Thus, the optimal solution of (9) is located in either the interior or the boundary of the feasible region. Therefore, the value of the cost function does not go to infinity, and the primal problem is tightly bounded. When imposing the constraint condition

uk+1 = … = uk+Nc, the corresponding feasible region is still convex since the intersection of convex sets is still a convex set. Similarly, the approximate problem is tightly bounded. In the next section, we prove that the proposed close-loop MPC controller is stable if

Nc = N1 = 1,

λ = 0 and

NP is large.

3. Stabilizability of Controller

Combining (1) and (5) leads to

Equation system (10) can be rewritten as

For the sake of convenience, we omit the subscript k in the remainder of this paper.

Theorem 1. System (11) is controllable.

Proof of Theorem 1. First, we seek the eigenvalues λ of A. By solving the characteristic polynomial det(λI-A) = 0, we have

According to the definition of controllability proposed by Hautus [

31], system (4) is controllable if and only if, for all

λi,

i = 1, 2, 3, 4 Rank([

λiI-A,

B]) = 4. Here we only need to consider Rank([

λ1I-

A,

B]) due to (12)

Hence we have Rank(Ω) = 4 and 4 ≥ Rank([λ1I-A,B]) ≥ Rank(Ω) = 4. □

Theorem 2. System (11) is observable.

Proof of Theorem 2. According to the definition of observability proposed by Hautus [

31], system (4) is observable if and only if, for all

λi,

i = 1, 2, 3, 4 Rank([

λiI-

A;

C]) = 4.

In our problem, the output function is Y = X = CX, and thus C = I4×4 and Rank(C) = 4. Therefore, 4 ≥ Rank([λ1I-A;C]) ≥ Rank(C) = 4. □

Theorem 3. System (11) is stabilizable.

Proof of Theorem 3. According to the definition of stabilizability proposed by Hautus [

31], system (11) is stabilizable if and only if

λi ≥ 0,

i = 1, 2, 3, 4, and the system is controllable. Combining Theorem 1 and (12) proves that Theorem 3 holds. □

Theorem 4. The closed-loop MPC controller is stable for Nc = 1, λ = 0 and large NP.

Proof of Theorem 4. This proof is similar as that for Theorem 4 in [

32] for generalized predictive control. When

NP is sufficiently large, we have

where

Therefore,

GTG is a positive scalar, which is always invertible. Therefore, the matrix

GTG + λI is invertible, and a feasible control can be obtained using the expression in [

32]

Since our optimization problem is convex, there is only one optimal solution and thus our controller will asymptotically converge to (17). □

5. Conclusions

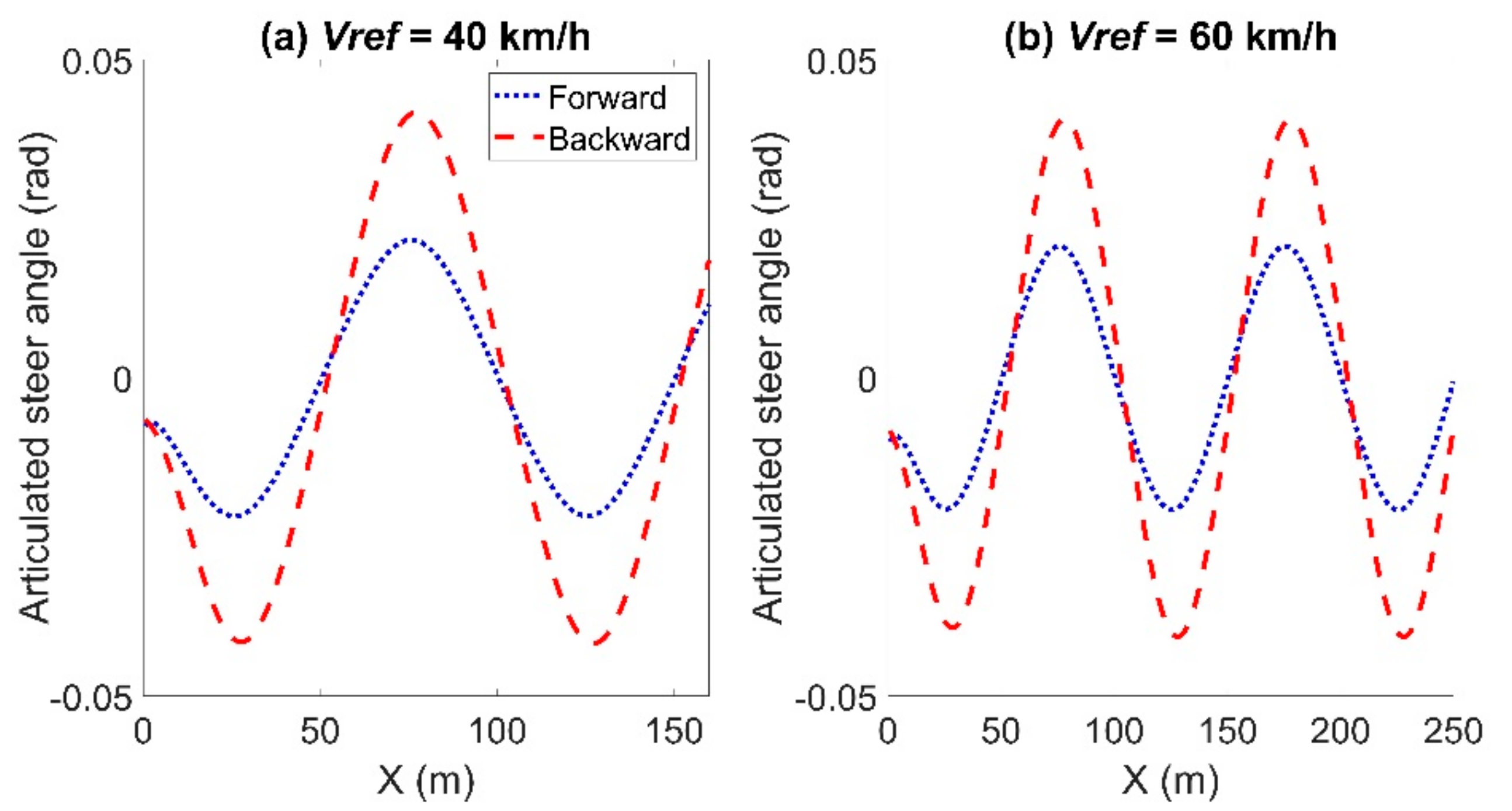

An effective and efficient method for generating a feasible trajectory is of vital importance to meet the requirement of instantaneous control for autonomous driving. In this paper, we have proposed a trajectory tracking controller based on MPC. Most MPC-based and other methods either set the velocity to a constant or cannot actively adjust the longitudinal velocity according to the information of the reference trajectory. To solve this problem, both the acceleration and steer angle are set to control inputs. Hence, the proposed controller can automatically adjust the velocity according to the information of the reference trajectory. Moreover, instead of the forward Euler integration method, the backward Euler integration method is used to establish the predictive model. To meet the real-time requirement, we impose the constraints uk+1 = … = uk+Nc on the control law. This significantly reduced the problem complexity. The warm-start technique was used to further accelerate the convergence of the optimization solver of the controller by using the previous results as a guess for the current optimization problem.

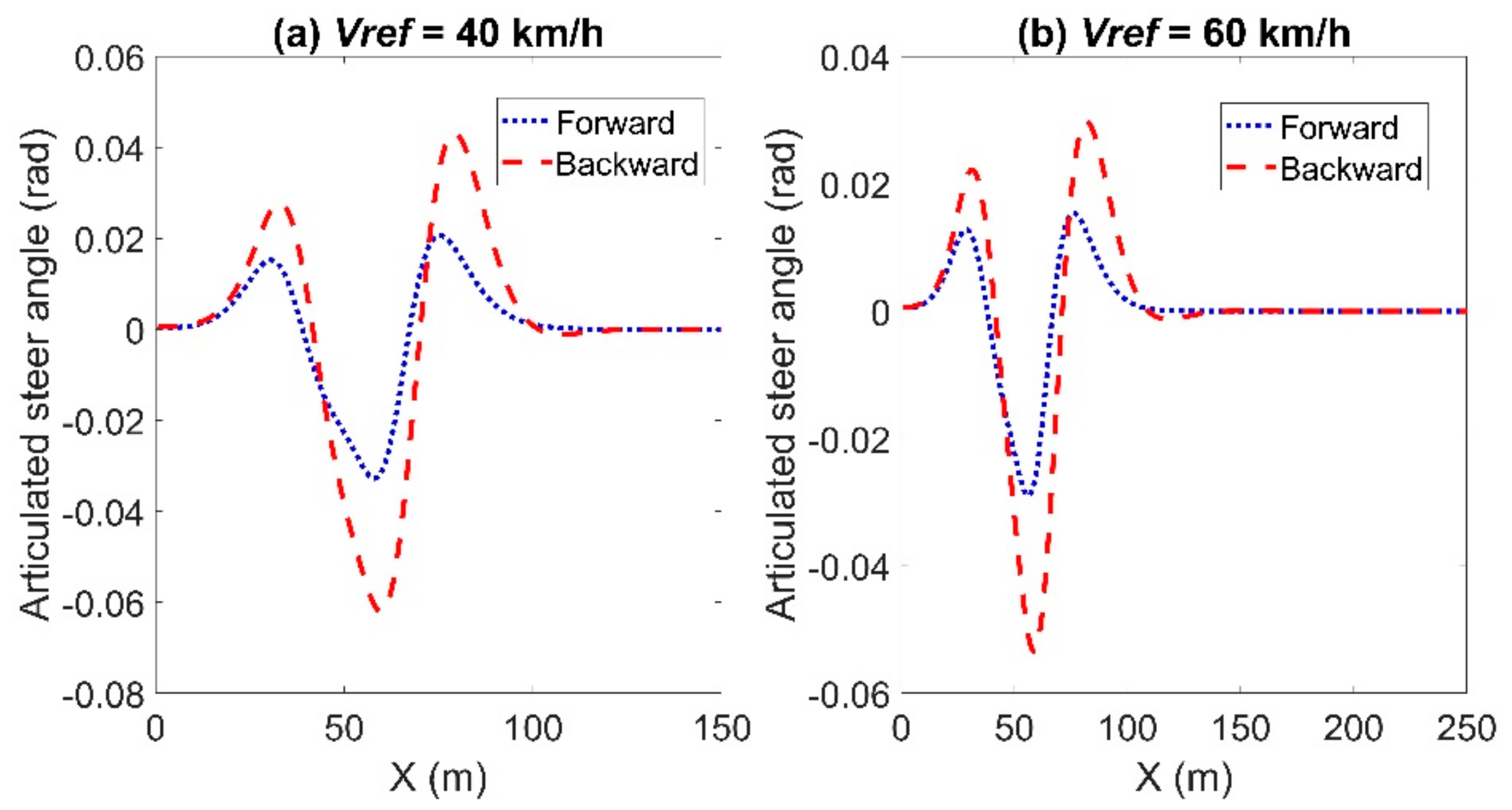

The proposed closed-loop MPC controller was stable and validated by simulation experiments. Compared with the MPC controller using the forward Euler method, the MPC controller using the backward Euler method had a much better accuracy in the lateral error, which is an important indicator to ensure driving safety. The lateral error could be reduced by up to 78%. There is little difference in the longitudinal error between the two controllers. However, the heading error of the MPC controller using the backward Euler method was larger than that of the MPC controller using the forward Euler method. The maximum and average computation times using the backward Euler method were almost the same or slightly larger than those using the forward Euler method. Moreover, the MPC controller using backward Euler method was more robust than that using the forward Euler method. The threshold value of the velocity for the MPC controller using the backward Euler method was larger than that using the forward Euler method (83 km/h versus 67.7 km/h for the sinusoidal trajectory). Overall, the MPC controller using the backward Euler method had a better tracking accuracy at the cost of no or little computation time.

The existence of the discrepancy between the actual trajectory and the reference trajectory is mainly due to the modelling errors, computation errors and disturbance errors. Recent studies on MPC-based controllers mainly focus on the modelling errors and disturbance errors. Few authors investigated the computation errors during the discretization for nonlinear systems. We hope that this paper is instructive and allows researchers new insight into creating MPC-based controllers.

From the perspective of science, our contribution is to give a new way to establish a predictive model, which is a cornerstone of designing a path tracking controller. Besides the forward and backward Euler methods, there are several integration methods, such as the midpoint method and Runge-Kutta methods. Improving the accuracy of the prediction model using other integration methods could be a promising way to get an effective control to maintain a good tracking accuracy.