1. Introduction

Structural health monitoring (SHM) remains a priority in large-scale industrial applications because of its practical advantages, such as the use of sensors permanently attached to the structure for continuous monitoring and the availability of the measurements in a form suited to data-driven approaches for determining the health of the structure under evaluation [1,2]. Structures in industrial processes require special attention, since neglecting them may put their operation at risk. Uncertainty about structural health, in conjunction with variations in the operational or environmental conditions, increases the risk of accidents, which in turn may result in catastrophic events [3]. One example of structures subjected to extreme operational conditions is the furnaces used in the smelting industry, where temperatures, pressures, and flows, among other variables, can vary considerably due to changes in the inputs (e.g., chemical composition of the raw material) or the operational conditions. This implies that operators are obliged to continuously monitor the structural health of the furnace before applying any changes to the operation set points, in order to maintain conditions that allow the safe operation of the system and preserve the health of the structure. SHM is of particular interest for electric arc furnaces (EAF), which are characterized by heating the materials with a covered electric arc for the smelting process. Routine operation causes wear in the wall lining of the furnace; hence, monitoring wall thickness is of special interest to avoid run-outs of the smelting material. One strategy to evaluate the state of the structure is to monitor the wall thickness directly via routine inspection using specialized techniques. However, this is an expensive and difficult task because of the scale of the system. For this reason, it is of interest to use other measurements that can indirectly account for the thickness of the wall lining. As it turns out, the measured temperature in the wall is a good indicator of its health and can be used for continuous (on-line) monitoring.

Recent works that use machine learning techniques to predict temperature variables within the smelting process have addressed the problem from different points of view. Mishra et al. [4] compared five deep learning models for multivariate prediction of time series temperatures. The study found that a deep convolutional network (DCN) performs best with wavelet and fast Fourier transform (FFT). Fernandez et al. [5] developed an online estimation of electric arc furnace tap temperature using fuzzy neural networks, and its application helped reduce the energy consumption of an electric arc furnace. In the work of Fontes et al. [6], the hot metal temperature in a blast furnace was predicted using an approach based on fuzzy c-means (FCM) and an exogenous nonlinear autoregressive model (NARX); the estimate was later implemented as a soft sensor for predicting temperature. Shockaert and Hoyez [7] built a multivariate time series approach with a deep generative CycleGAN model combined with a long short-term memory (LSTM)-based autoencoder (AE). In particular, that approach follows a transfer learning methodology in which data obtained from a source furnace are used to train a model that can then evaluate data from a target furnace. The forecasting of the hot metal temperature in a blast furnace is addressed in the work of Iffat et al. [8]. Specifically, an optimal time lag at which the input variables have an impact on the hot metal temperature is determined. Additionally, an incremental learning methodology that considers changes in raw material composition, process control methods, and aging equipment was developed.

In order to improve the efficiency of a blast furnace, a self-organizing Kohonen neural network approach was developed in [9]. This approach complemented the control of the operating parameters of the blast furnace process. An artificial neural network (ANN) model was applied to predict the slag and metal composition in a ferromanganese production unit with a submerged arc furnace [10]. The advantages of this ANN included the reduction of power and coke consumption. Similarly, in the work of Ducic et al. [11], an ANN was derived as an intelligent soft sensor in the process of white cast iron production. An increase in productivity, as well as in material and energy efficiency, can be translated into a reduction of the environmental impact and cost of the steel-making process in a basic oxygen furnace (BOF); in [12], these objectives were reached through the use of standard machine learning models to predict end-point targets such as the final melt temperature and the upper limits of the carbon and phosphorus content with minimum material loss. A random forest algorithm was used in [13] to improve the quality of steel casting for tire reinforcement; 140 process variables were used as features, and the output takes the value 0 or 1 depending on whether the casting was rejected or not. The best area under the receiver operating characteristic curve (AUROC) in the test set, 0.85, was obtained by the random forest classification method. The problem of predicting the amount of alloying additives needed to obtain the desired chemical composition of white cast iron was solved by applying a neural network model in [14]. A data set from three months of monitoring of the metal melting process was used. The data were split into training and test sets, and the neural network model reached a mean squared error of 3.31% in the test set.

As previous works have shown, different variables can be predicted from historical operation data in order to evaluate the health of furnaces or to predict the behavior of the process when inputs are changed. Therefore, the development of prediction models has become one of the main needs in the areas of operation analysis, control, and maintenance of EAFs, and it remains an open research area. As a contribution, this work presents the development of a deep learning model to predict the temperature in the lining of the EAF. Details about the experimental setup, the data acquisition from sensors, the preprocessing step, the model development, and its validation are also presented. This work was carried out as a joint effort between academia and industry, namely Universidad Nacional de Colombia and Cerro Matoso S.A. (CMSA). The interested reader can find more background on the research process in previous works by the authors, which tackle the problems associated with sensor networks and continuous monitoring in this kind of furnace, including temperature monitoring [15,16], gap monitoring [17], and thickness monitoring [18] using ultrasonic and ground penetrating radar (GPR) methods.

This paper is organized as follows:

Section 2 includes a theoretical background where some concepts about the company, the process, and the methods used in the methodology are briefly introduced.

Section 3 provides information about the developed methodology including a description of the dataset used for the validation.

Section 4 presents the results and discussion; finally, conclusions are included in the last section.

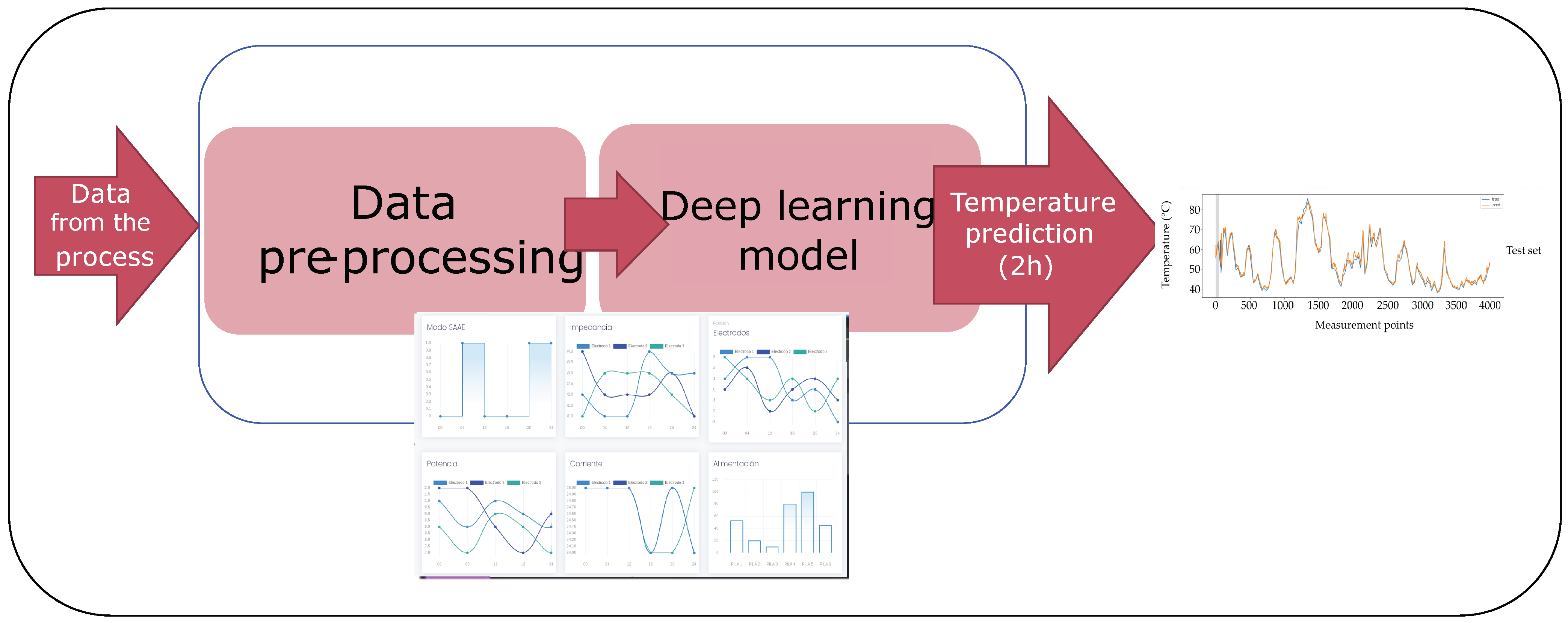

3. Temperature Prediction Methodology for the Furnace Lining

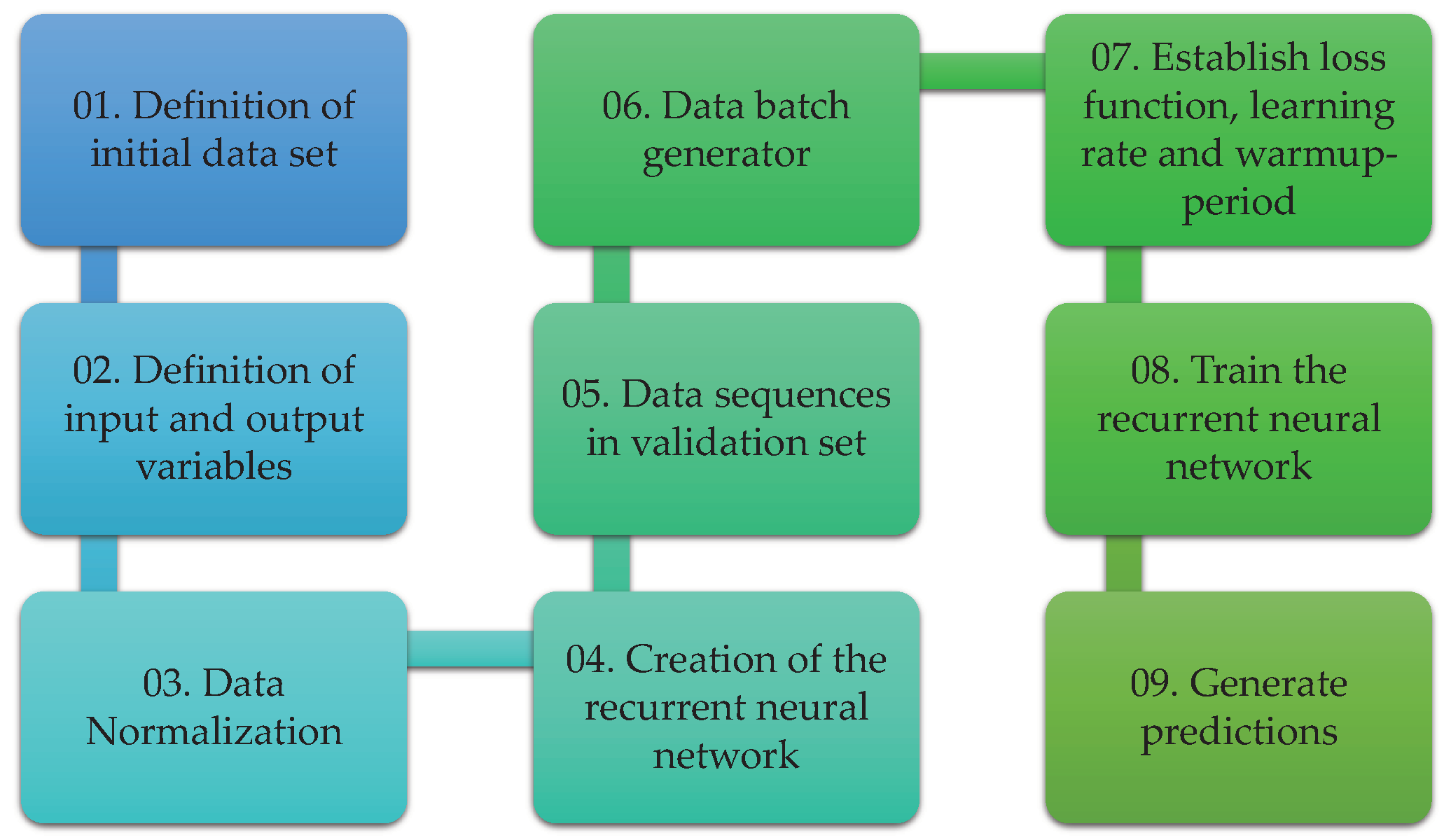

This section introduces the proposed methodology for temperature prediction in the wall of the ferronickel furnace studied. Two general steps are considered after the data acquisition step: the data cleaning process and the development of the deep learning model, as depicted in Figure 5. Although the methodology considers some particular elements and variables of this specific smelting process, it can be generalized to other complex processes where a large number of sensors are used and it is necessary to predict the behavior of a variable.

Before presenting each step of the methodology, some context with regards to the data set obtained from the data acquisition system is given next.

3.1. Dataset for Methodology Validation

The data set corresponds to the measured variables of an electric arc furnace for ferronickel production located at Cerro Matoso SA (South32 company). The data set was sampled every 15 min over a period of 416 days between 11 August 2018 and 30 September 2019 and is composed of a total of 40,000 instances. Regarding the attributes, also called variables or features, an in-depth analysis with a group of expert furnace operators defined a group of 49 variables selected for their importance in the furnace operation. These 49 variables are detailed in Table 1 and serve as input variables to train and test the developed multivariate time series temperature prediction system.

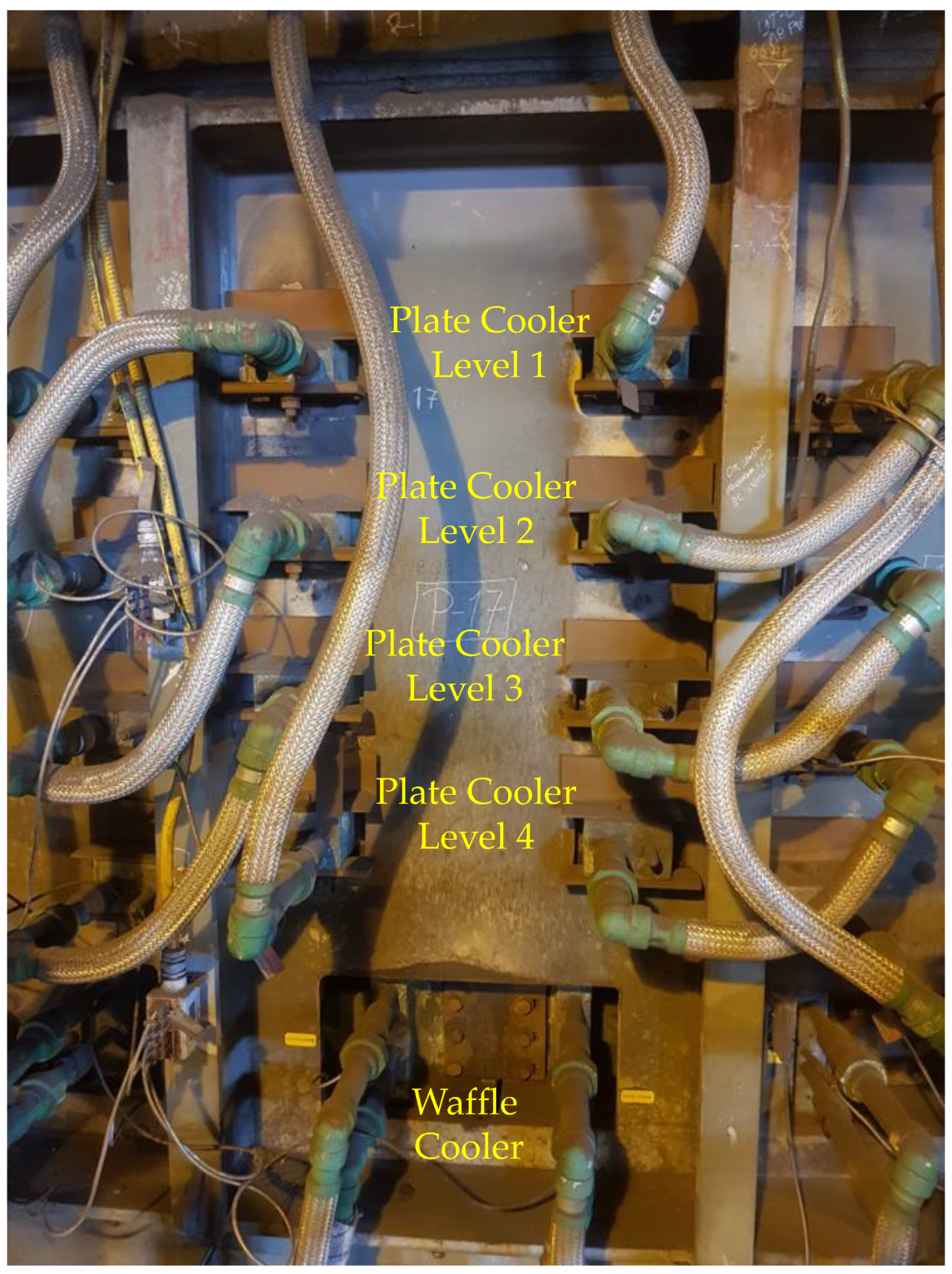

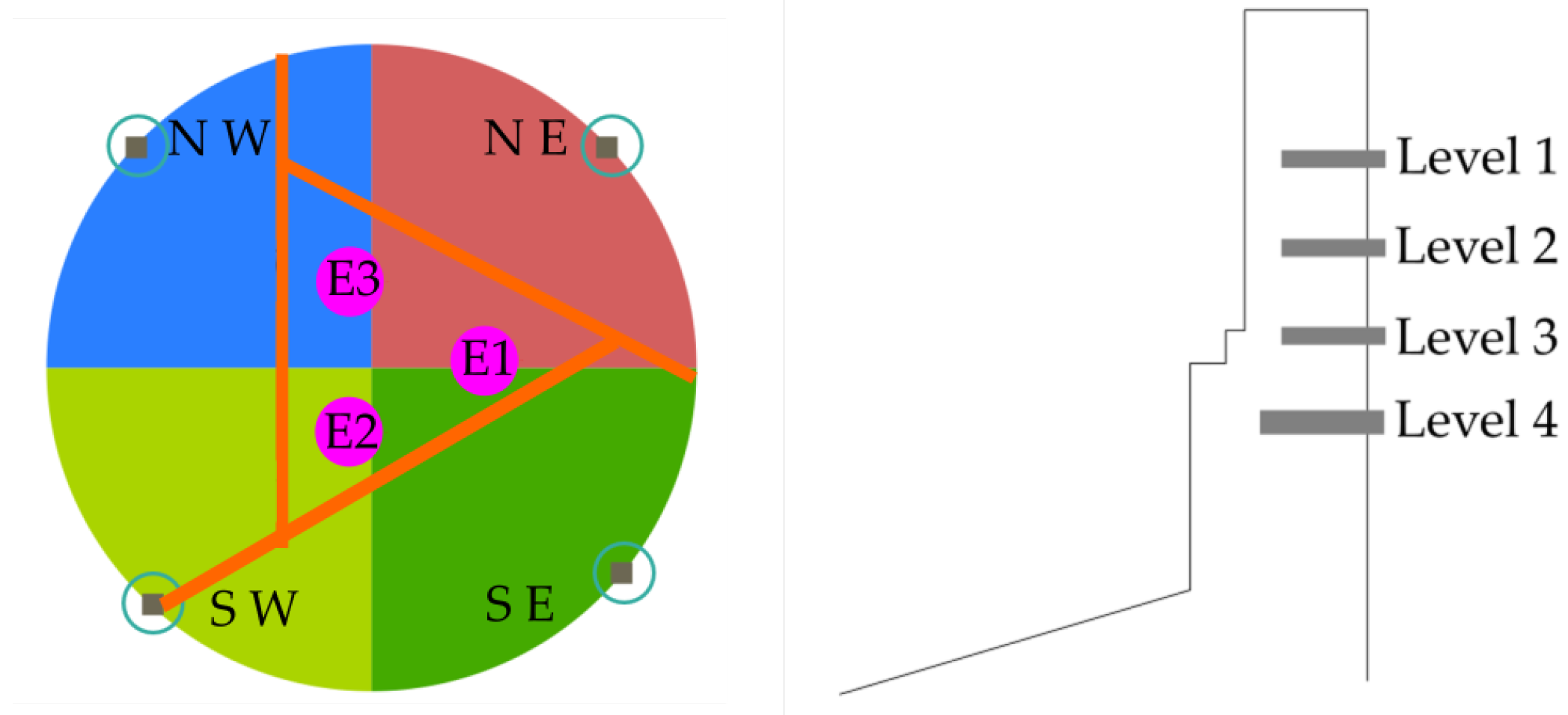

The electric arc furnace is built from 72 panels radially distributed along its perimeter. Each panel is composed of four plate coolers located at four different heights labeled Level 1, Level 2, Level 3, and Level 4, as depicted in Figure 6. Thus, there are 72 × 4 = 288 plate coolers radially distributed along the furnace. Each plate cooler has a thermocouple for lining temperature monitoring.

Figure 6 shows a section of the wall of the furnace where it is possible to observe the refrigeration system composed of 4 levels of plate coolers per panel, where the thermocouples, whose temperature measurement must be predicted, are located.

Due to the high number of plate cooler thermocouples in the furnace lining, a discrete group of 16 thermocouples was selected in this work as the output variables to be predicted. Four panels of the furnace, belonging to the North-West (NW), South-West (SW), South-East (SE), and North-East (NE) quadrants, were selected. Each panel has four thermocouples; thus, 4 × 4 = 16 thermocouples were selected in total. The distribution of these 16 thermocouples is illustrated in Figure 7. The location of the three furnace electrodes (E1, E2, and E3) is detailed in Figure 7 (left).

3.2. Data Pre-Processing Step

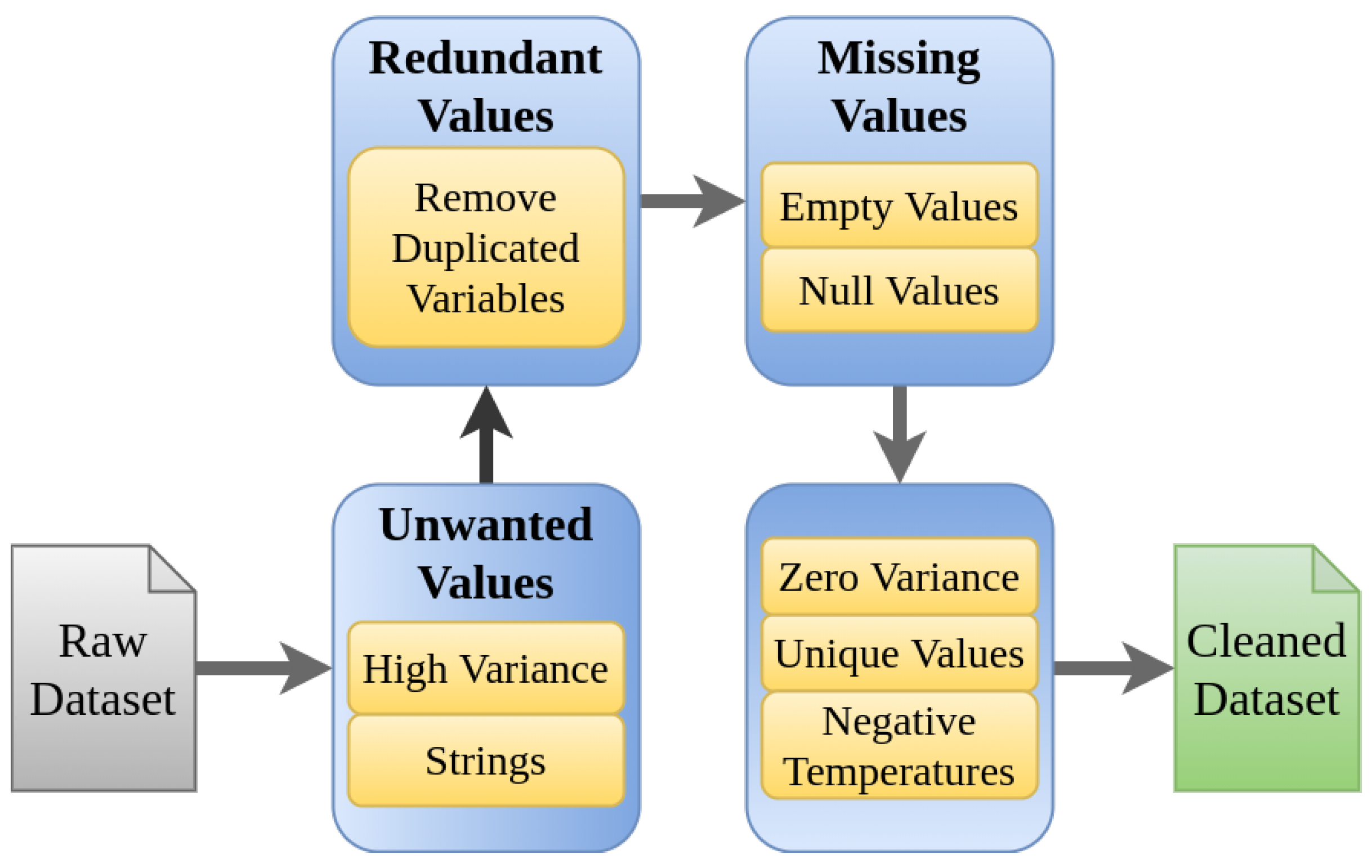

The ferronickel production process carried out at the CMSA facilities involves a large number of variables. Variables are acquired from different sources, including multiple on-line sensors, and collected by a data acquisition system (DAQ). The collected data can contain errors because of sensor failures, noise, or data that the system fails to capture or store. These errors must be reviewed and eliminated in the pre-processing step in order to reduce the errors of the model to be developed. Originally, 1180 variables were provided by CMSA. Together with experts from the process operations area, some considerations about the range of the variables were identified, and the elements shown in Figure 8 were defined as the set of rules to be considered. These elements constitute a workflow with seven steps that covers different types of problems that might be present in the initial data set, for example, strings of characters in numeric variables, negative temperatures, variables that remain at a single value, and variables with null data. With this workflow, it was possible to find variables that consistently presented problems in the data, and these variables were eliminated. Given the large number of variables available, it was not necessary to carry out data restoration, which avoided the appearance of gaps in the data set. Applying the proposed workflow flagged 340 variables with errors, which indicates that approximately 28% of the original 1180 variables had problems.

The following steps in the data cleaning process were considered [15]:

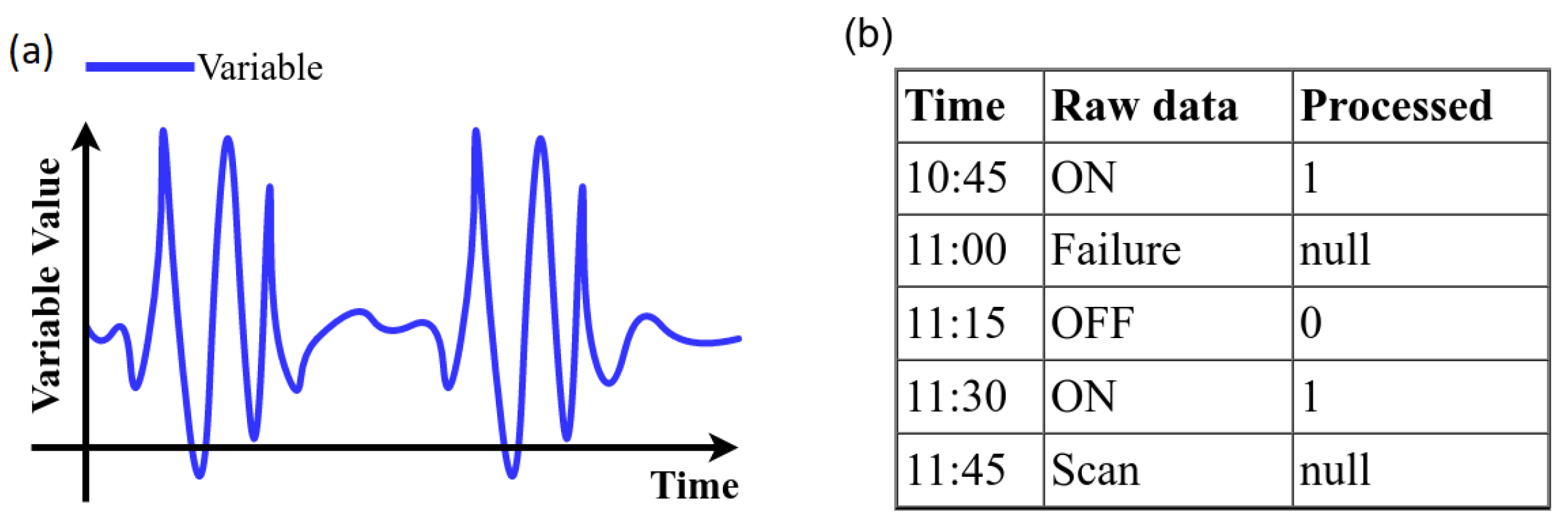

High variance: Data with values outside the operational range should not be considered. Thus, a univariate quality measure based on percentage changes is calculated. A variable exhibiting high variance is shown in Figure 9a.

Strings: Variables that contained non-numeric values were encoded with numerical values (see Figure 9b).

Remove duplicates: When duplicate variables are identified, the duplicates are dropped and a single copy of the variable is kept (see Figure 10a).

Empty and null values: A threshold of 2% was selected; thus, variables in which more than 98% of the values are empty or null are dropped (see Figure 10b).

Zero variance: If more than 50% of the data of a variable does not vary and remains at a constant value, the variable is dropped (see Figure 11a).

Unique values: Because it is desirable to find relationships between variables, those that remain constant over time are dropped (see Figure 11b).

Negative temperatures: The normal operating range of the temperature variables contains only positive values. Variables containing negative temperatures are therefore identified and dropped (see Figure 11c).

The number of variables eliminated in each category of the data cleaning process is given in Table 2. After the cleaning process, a cleaned data set of 840 variables was obtained. Subsequently, based on suggestions from the furnace operators and the judgment of expert engineers at CMSA, the 49 variables of the temperature prediction model developed in this work were selected. These 49 variables are listed in Table 1.
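The column-level rules above can be illustrated with a minimal pandas sketch. It is not the workflow used at CMSA: the function name `clean_variables`, its parameter names, and the order in which rules are checked are assumptions made for illustration. Two simplifications are also assumed: non-numeric entries are coerced to missing values rather than encoded, and the high-variance rule is omitted because its threshold is not stated in the text.

```python
import pandas as pd


def clean_variables(df, temperature_cols=(), max_null_frac=0.98,
                    max_constant_frac=0.50):
    """Apply column-level cleaning rules; return (kept frame, drop reasons)."""
    # Simplification: non-numeric entries become NaN instead of being encoded.
    num = df.apply(pd.to_numeric, errors="coerce")
    # True for every column that repeats an earlier column exactly.
    duplicated = num.T.duplicated()
    reasons = {}
    for col in num.columns:
        series = num[col]
        valid = series.dropna()
        if series.isna().mean() > max_null_frac:
            reasons[col] = "empty or null values"
        elif duplicated[col]:
            reasons[col] = "duplicate"
        elif valid.nunique() <= 1:
            reasons[col] = "unique value"
        elif valid.value_counts(normalize=True).iloc[0] > max_constant_frac:
            # The most frequent value covers more than half of the samples.
            reasons[col] = "zero variance"
        elif col in temperature_cols and (valid < 0).any():
            reasons[col] = "negative temperature"
    # A flagged variable is removed entirely; no values are repaired.
    kept = num.drop(columns=list(reasons))
    return kept, reasons
```

Dropping whole variables rather than individual samples mirrors the policy described in this section, which keeps the remaining time series free of gaps.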

3.3. Development of the Multivariate Time Series Deep Learning Model

This section describes the steps carried out to train the temperature prediction model. First, the steps taken to define a suitable data set are discussed. This is followed by a discussion of the definition and development of the recurrent neural network (RNN) proposed in this paper.

The programming language selected to implement the temperature prediction models is Python. Together with this programming language, several libraries are used for data management, neural network training, and visualization, among other functions.

3.3.1. Definition of the Dataset

The data set has input variables of different magnitudes and ranges. The values are therefore scaled to the interval between −1 and 1. The target data come from the same data set as the input signals, because they are the output thermocouple data shifted in time.

The number of time steps by which the target data are shifted is predefined. The dataset was sampled to have one observation every 15 min; thus, there are 96 observations over 24 h. In particular, the shift is used to predict temperatures two hours into the future.
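As a worked illustration of the scaling and the time shift, the following NumPy sketch scales each column to the interval between −1 and 1 and pairs each input row with the target row two hours later, which at one sample every 15 min corresponds to 8 steps. The function and constant names are hypothetical, and min-max scaling is an assumption, since the text does not name the scaling method.

```python
import numpy as np

SAMPLES_PER_HOUR = 4                 # one observation every 15 min
SHIFT_STEPS = 2 * SAMPLES_PER_HOUR   # 2 h ahead corresponds to 8 steps


def scale_to_range(x, lo=-1.0, hi=1.0):
    """Min-max scale each column of a 2-D array to the interval [lo, hi]."""
    x = np.asarray(x, dtype=float)
    x_min = x.min(axis=0)
    x_max = x.max(axis=0)
    # Constant columns get a span of 1 to avoid division by zero.
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return lo + (x - x_min) * (hi - lo) / span


def make_shifted_pairs(inputs, targets, shift_steps=SHIFT_STEPS):
    """Pair the inputs at time t with the targets at time t + shift_steps."""
    return inputs[:-shift_steps], targets[shift_steps:]
```

After the shift, the last `shift_steps` input rows have no matching target and are discarded, so both arrays have the same length.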

Due to the large number of instances in the dataset (40,000), a conventional division of 70% of the data for training and 30% for testing was considered impractical. Instead, in order to have the greatest possible amount of data for training, the data were divided into 90% for training and the remaining 10% for testing. In addition, the input and output variables for the training and test sets were defined. The dataset must be prepared as two-dimensional NumPy arrays; in this case, there are 49 input signals and 16 output signals.
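The 90/10 division can be sketched as follows. The sketch assumes a chronological split, in which the first 90% of the records are used for training and the final 10% for testing; the text does not state this explicitly, and the function name `chronological_split` is hypothetical.

```python
import numpy as np


def chronological_split(x, y, train_frac=0.9):
    """Split two aligned 2-D arrays into leading train and trailing test parts."""
    n_train = int(round(train_frac * len(x)))
    return (x[:n_train], y[:n_train]), (x[n_train:], y[n_train:])
```

With 40,000 records, 49 inputs, and 16 outputs, this yields training arrays of shape (36000, 49) and (36000, 16) and test arrays of shape (4000, 49) and (4000, 16).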

Instead of training the recurrent neural network on the entire sequence of 40,000 observations, a function is used to create a batch of 250 shorter subsequences randomly selected from the training data. Each sequence has a length of 1152 steps, corresponding to 12 days (12 × 96 = 1152). This period was chosen because it is the interval at which the pile of calcined material in the furnace is changed. The 40,000 records used for training and testing the temperature prediction model were cleaned before the development of the neural network. Thus, each batch contains 250 random sequences of 1152 steps, and all the data in the batch are clean according to the steps described in Section 3.2. There, the data cleaning policy established that a variable showing any of the indicated problems would be eliminated entirely, which was possible given the large amount of information and the number of variables available in the initial data set. Variables with data problems were therefore excluded from the training data set because their information did not represent the real behavior of the furnace. Consequently, no values were partially removed, so no discontinuities were introduced in the time series.
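A minimal sketch of such a batch function is given below, assuming the training data are stored as two-dimensional NumPy arrays with aligned rows. The name `batch_generator` and its parameters are illustrative and are not taken from the authors' code.

```python
import numpy as np


def batch_generator(x_train, y_train, batch_size=250, sequence_length=1152,
                    rng=None):
    """Yield (x_batch, y_batch) built from random contiguous subsequences."""
    rng = np.random.default_rng() if rng is None else rng
    n_steps = len(x_train)
    offsets = np.arange(sequence_length)
    while True:
        # One random start index per sequence; the upper bound is exclusive.
        starts = rng.integers(0, n_steps - sequence_length + 1, size=batch_size)
        idx = starts[:, None] + offsets[None, :]
        # Resulting shapes: (batch, time, signals).
        yield x_train[idx], y_train[idx]
```

Each call to `next()` on the generator returns a fresh batch, so the sequences seen in one epoch generally differ from those seen in the next.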

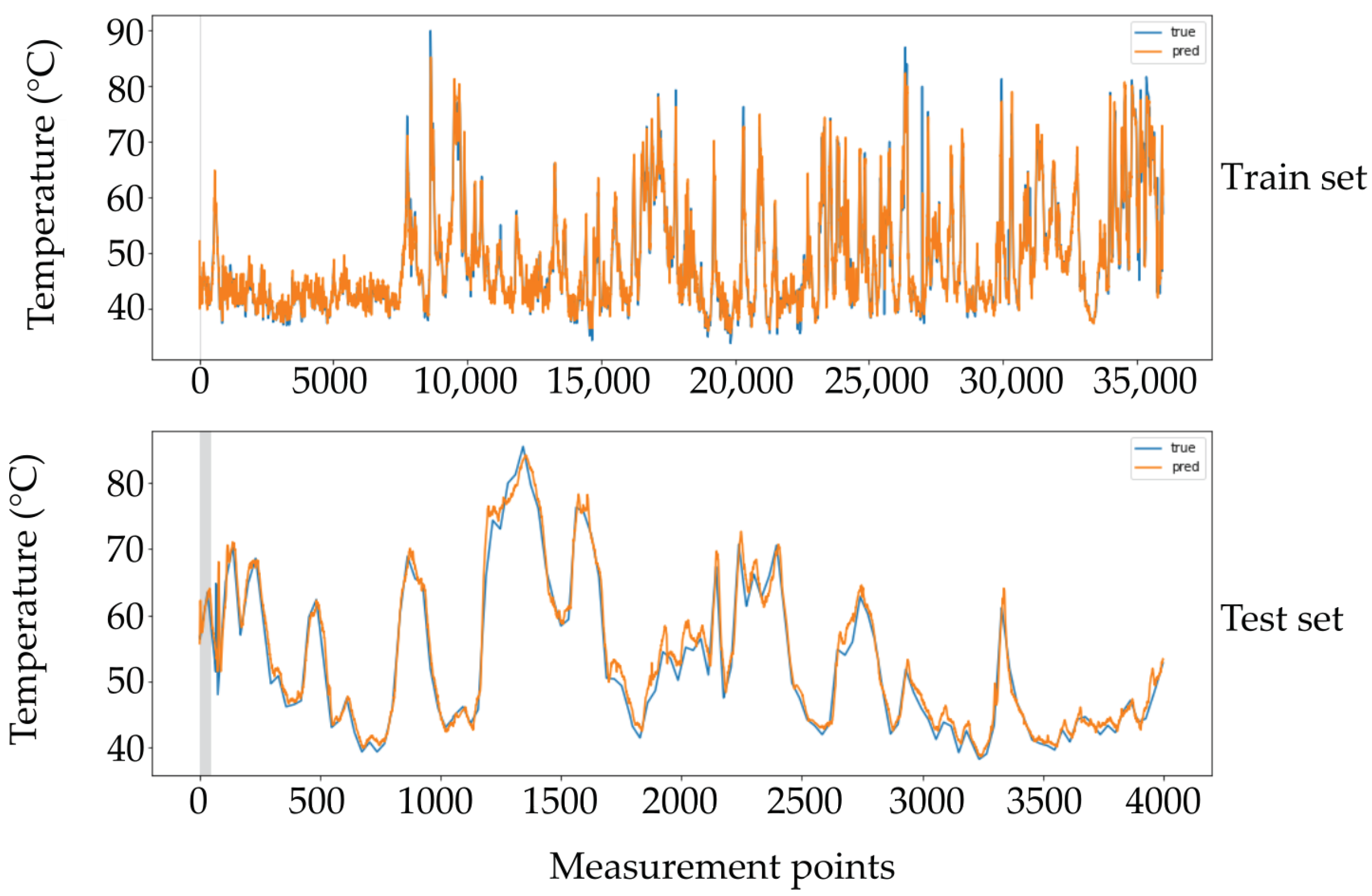

As described, training is performed on 250 random sequences. For testing, however, the complete sequence of the test set, corresponding to 4000 records, is used. These 4000 records are sufficient to provide a good estimate of the model performance. In addition, the model performance on the test set is monitored after each epoch, and the weights of the recurrent neural network are saved for the next epoch only if the performance on the test set has improved.

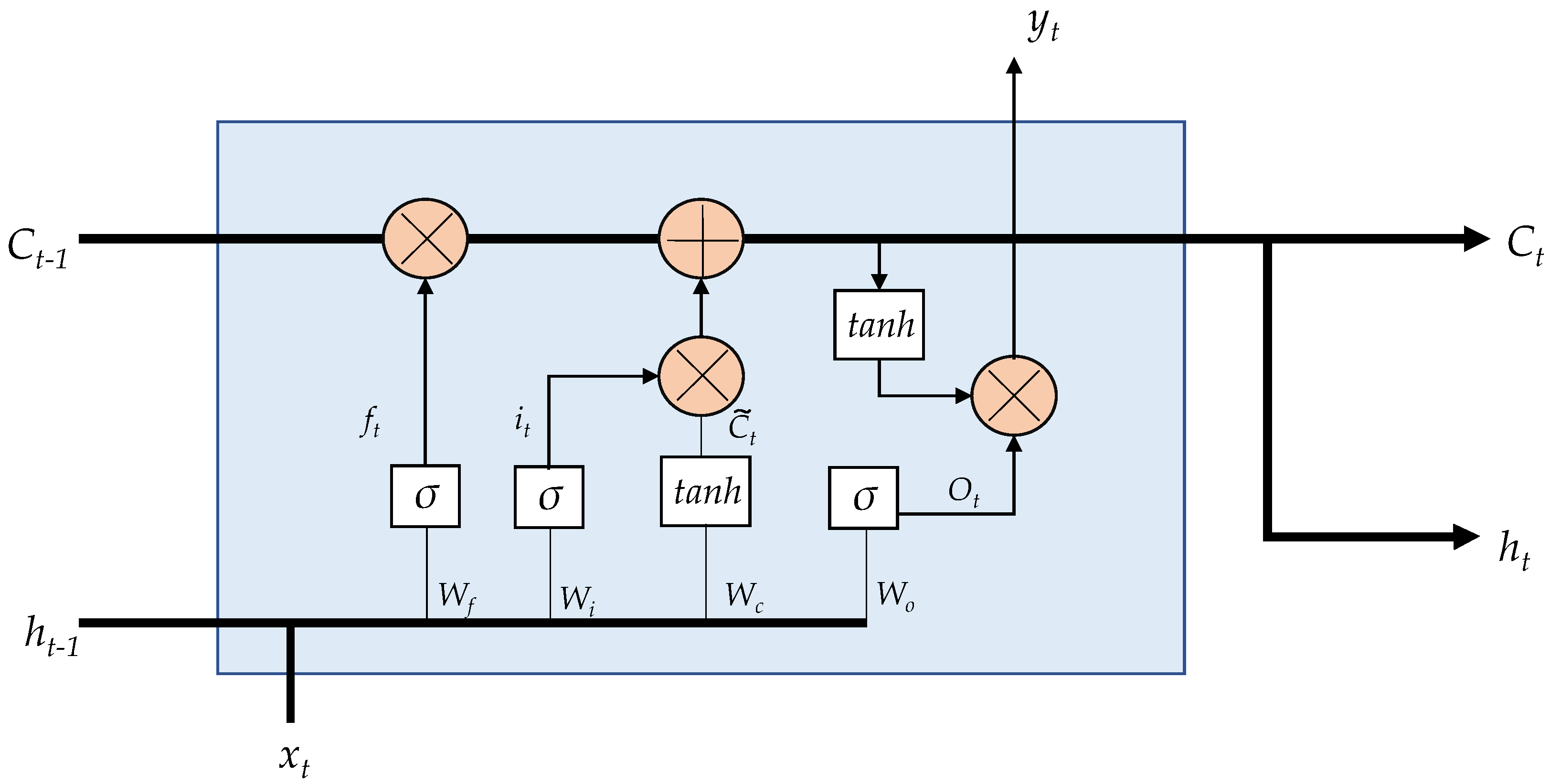

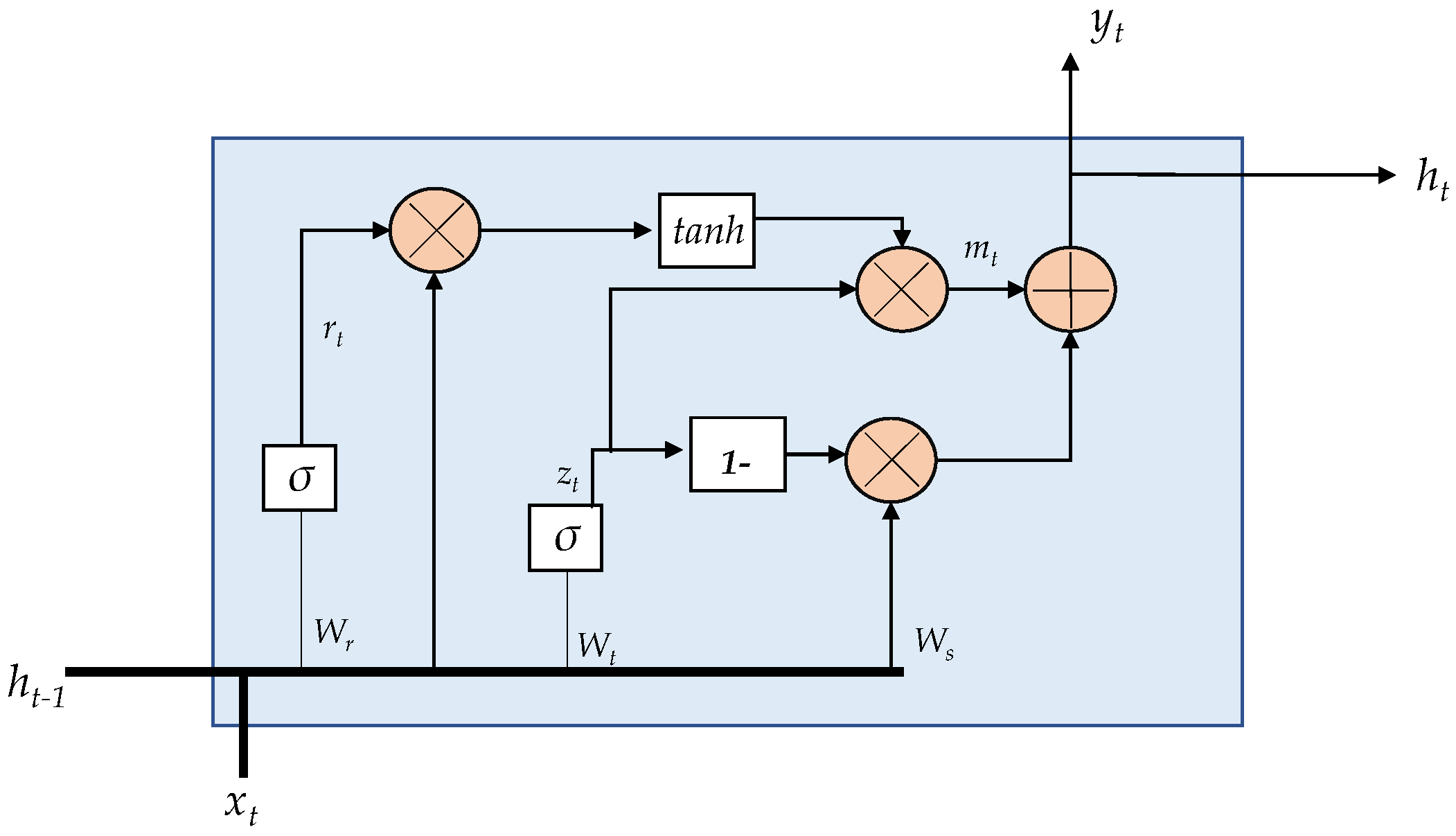

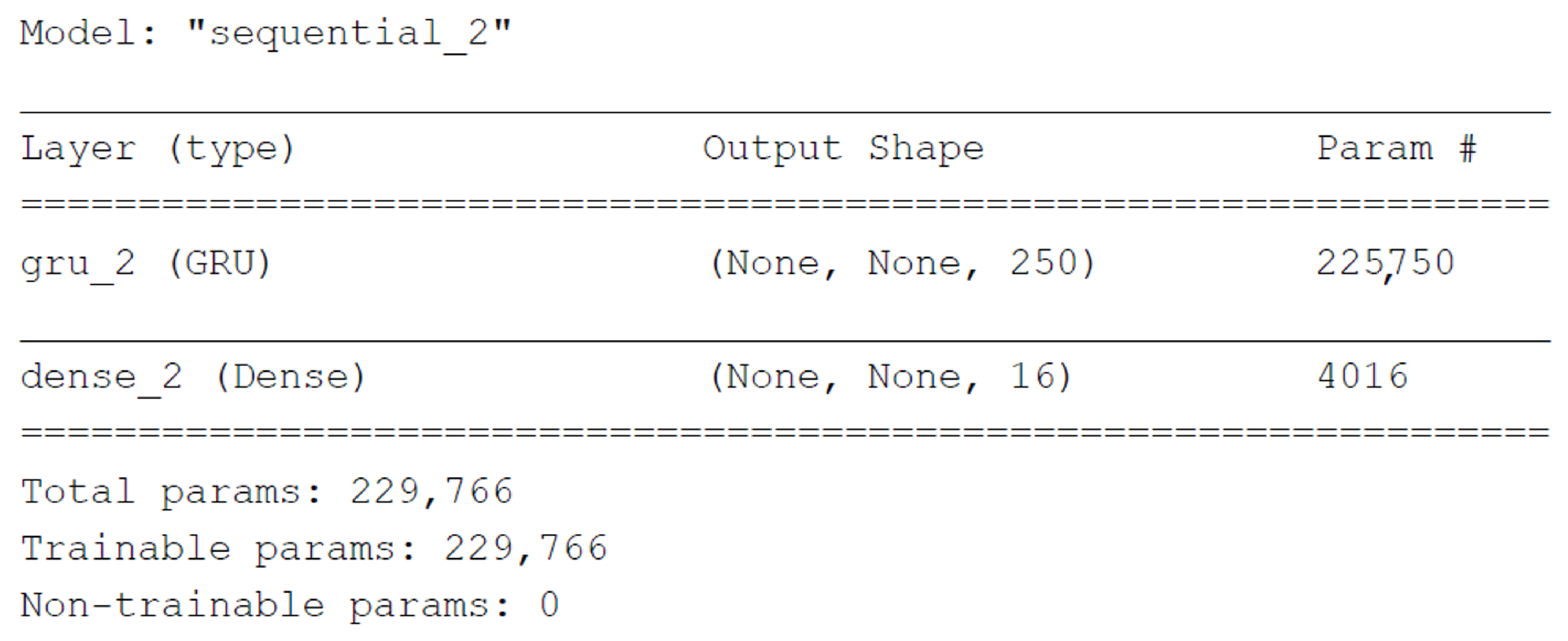

3.3.2. Creation of the Recurrent Neural Network

The neural network and its layers are created using TensorFlow in a sequential model. The first layer uses gated recurrent unit (GRU) cells to create a recurrent neural network. This GRU layer has 250 outputs for each time step in the sequence. The input to this first GRU layer is a batch of sequences of arbitrary length, where each observation has several input signals. The GRU layer generates a batch of sequences of 250 values. Since 16 output signals are to be predicted, a dense layer is added to the deep learning model that maps the 250 values to only 16 output values, corresponding to the 16 thermocouples to be predicted. A sigmoid activation function is used to ensure that the outputs lie within the range of the normalized values.
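The two-layer architecture can be sketched with the Keras API bundled in TensorFlow. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `build_model` and the constant names are hypothetical, and all layer options not mentioned in the text are left at their Keras defaults.

```python
import tensorflow as tf

NUM_INPUTS = 49    # input signals per observation
NUM_OUTPUTS = 16   # thermocouples to predict
GRU_UNITS = 250    # outputs of the GRU layer per time step


def build_model():
    """GRU layer followed by a dense sigmoid layer applied at every time step."""
    return tf.keras.Sequential([
        # None as the time dimension allows sequences of arbitrary length.
        tf.keras.Input(shape=(None, NUM_INPUTS)),
        tf.keras.layers.GRU(GRU_UNITS, return_sequences=True),
        tf.keras.layers.Dense(NUM_OUTPUTS, activation="sigmoid"),
    ])
```

Calling `build_model().summary()` lists the two layers and an output shape of (None, None, 16). Because a sigmoid output lies between 0 and 1, the sketch presumes that the targets are scaled to a compatible range.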

After each epoch of the neural network, the performance of the model in the test set is monitored and the model weights are only saved if the performance improves in the test set. In the training process, a batch of short sequences is randomly selected from the total training data. In this case the training data has 36,000 instances. In contrast, for the validation data, the entire sequence is run from the 4000 instances in the test set and the prediction accuracy is measured on that entire sequence.

It is important to discuss the loss function, learning rate, and warmup period. The loss function that is minimized is the mean squared error (MSE). A warmup period of 50 time steps is assigned to the model so that the accuracy of these first 50 steps is not used in the loss function. The inclusion of this warmup period allows the model to behave better for each of the 16 outputs in terms of root mean squared error (RMSE). Adam [33] was selected as the optimizer and an initial learning rate of

is used. If the validation loss has not improved since the last epoch, the learning rate changes to

. A two-layer model was defined: one GRU layer and one dense layer. The output shape of (None, None, 16) shown in Figure 12 means that the model will generate a batch with an arbitrary number of sequences, each of which has an arbitrary number of observations, and each observation has 16 output signals.
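The effect of the warmup period on the loss can be illustrated with a NumPy sketch that computes the MSE while ignoring the first 50 time steps of every sequence. The function name `mse_after_warmup` is hypothetical, and the sketch works on plain arrays rather than on the tensors used during training.

```python
import numpy as np

WARMUP_STEPS = 50  # leading time steps excluded from the loss


def mse_after_warmup(y_true, y_pred, warmup_steps=WARMUP_STEPS):
    """MSE over (batch, time, outputs) arrays, skipping the warmup steps."""
    y_true = np.asarray(y_true, dtype=float)[:, warmup_steps:, :]
    y_pred = np.asarray(y_pred, dtype=float)[:, warmup_steps:, :]
    return float(np.mean((y_true - y_pred) ** 2))
```

The intuition is that a recurrent network has seen very little input during its first steps, so penalizing those early predictions would add noise to the training signal.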

3.3.3. Training of the Recurrent Neural Network

A single “epoch” does not correspond to one full pass over the training set, because the batch generator randomly selects subsequences from the training set. Instead, the number of “steps-per-epoch” is chosen so that an epoch is processed in a few minutes. In this case, 100 steps-per-epoch are used. The parameters of the joint GRU + Dense model are described in Table 3.

3.3.4. Performance of the On-Line Prediction Model

The final step is to compare the predicted and true output signals. The performance of the time series prediction model is calculated using the root mean squared error (RMSE):

$$\mathrm{RMSE} = \sqrt{\frac{1}{M} \sum_{i=1}^{M} \left( y_i - \hat{y}_i \right)^2}$$

where $M$ is the number of data points in the time series to be estimated, $y_i$ is the actual value of the time series, and $\hat{y}_i$ is the estimated value at time $i$ by the prediction model [34].
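The RMSE can be computed separately for each output signal with a few lines of NumPy. In this sketch the function name `rmse_per_output` is hypothetical, and the inputs are assumed to be two-dimensional arrays with one row per time step and one column per thermocouple.

```python
import numpy as np


def rmse_per_output(y_true, y_pred):
    """Root mean squared error of each column over the time axis."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.sqrt(np.mean((y_true - y_pred) ** 2, axis=0))
```

Averaging the returned vector gives a single figure for all outputs. To express the error in °C, both arrays would first need to be mapped back from the scaled range to physical units.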

The steps followed to develop the different deep learning models for temperature prediction are described in this section and illustrated in Figure 13.

5. Conclusions

In this paper, a multivariate time series deep learning model was developed to predict the temperature behavior in an electric arc furnace. The developed temperature prediction methodology was tested on a dataset of 416 days of an electric arc furnace operation, corresponding to 40,000 instances. Sixteen thermocouples radially distributed in the furnace at four different height levels were selected as output variables. The results yielded by the GRU (250 Cells) + Dense deep learning model showed an average RMSE of 1.19 °C for the test set using a training/test ratio of 90/10. This shows the goodness of the prediction in the SHM system for furnace lining temperature monitoring.

It was found that approximately 28% of the original dataset presented abnormalities; thus, it was very important to carry out a data preprocessing step that included data cleansing, outlier removal, and the removal of redundant, null, and unwanted values.

The developed deep learning model allowed us to predict the temperature in the lining of the furnace 2 h into the future. Consequently, the predicted behavior of the furnace facilitates decision-making associated with possible high temperatures of the furnace hearth due to changes in the operational variables. These predictions contribute to proper structural health monitoring and to preventive control of the erosion of the furnace lining caused by excess temperature.

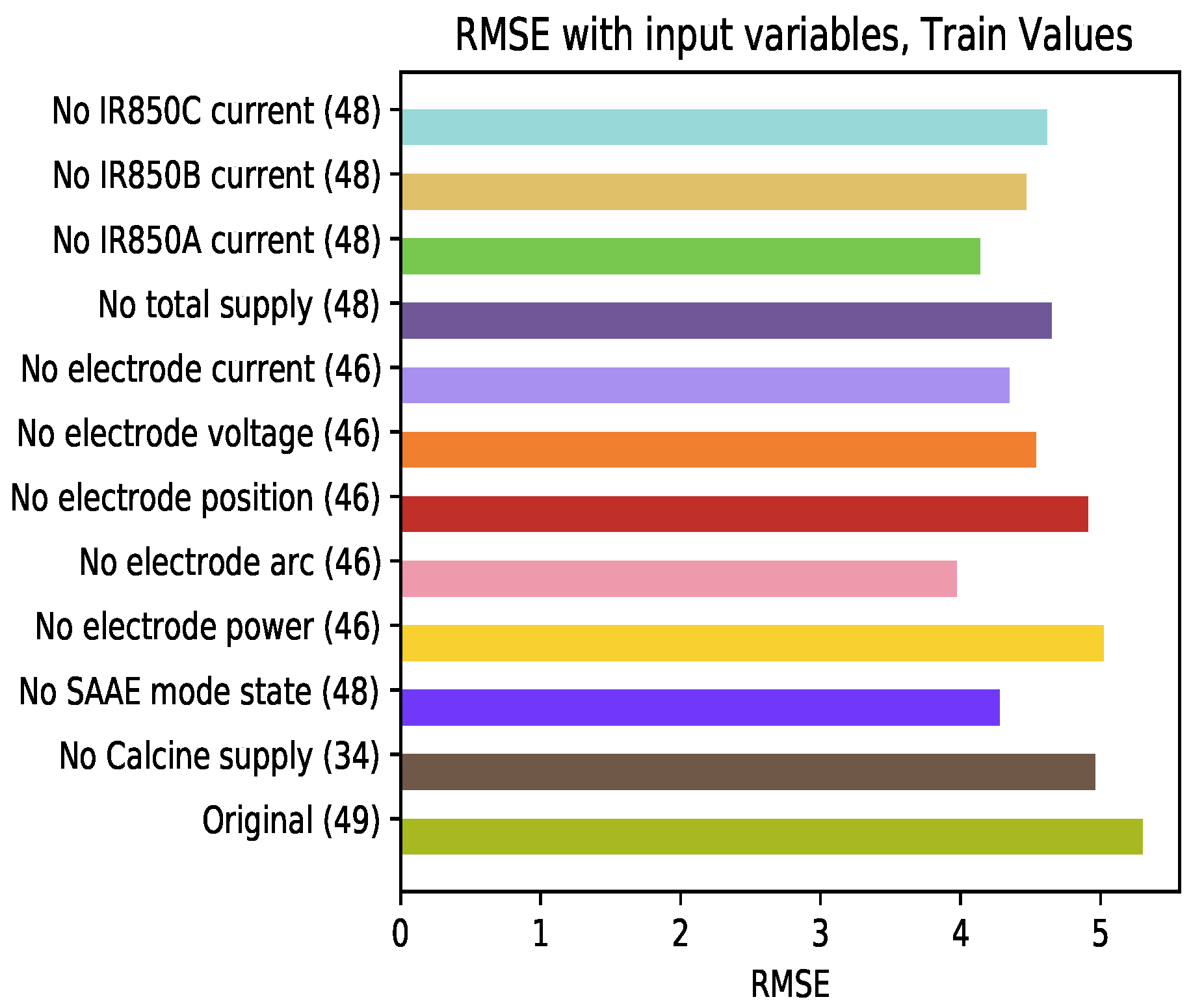

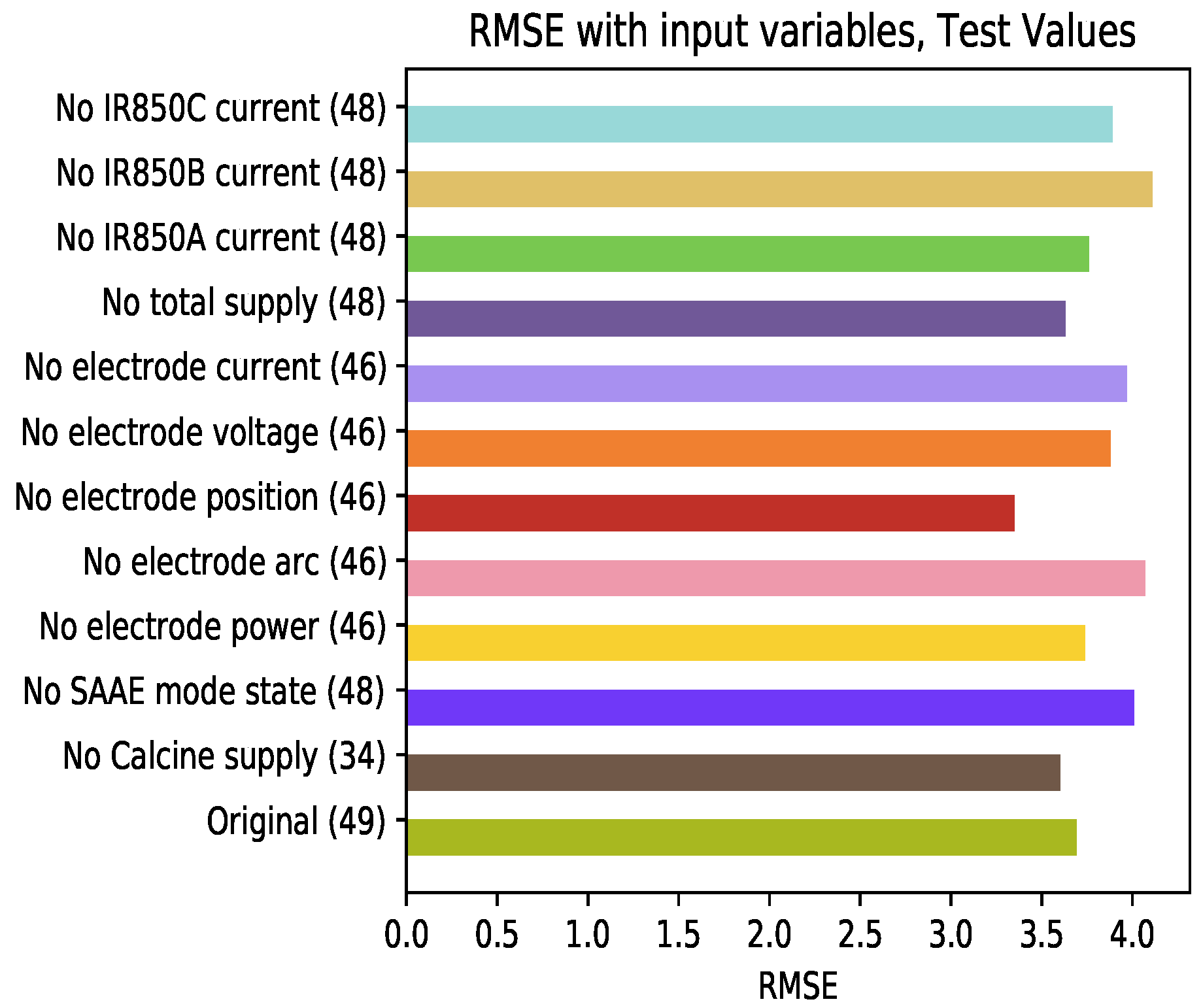

This research allowed us to determine which variables are relevant in the prediction of temperature, confirming the hypothesis about the relationship between each variable and the furnace lining temperature. This was necessary to determine the input variables of the multivariate time series deep learning model.

In future work, an attention-based model inspired by an encoder-decoder approach will be applied to predict the furnace temperature, considering relations between variables over long- and short-term periods of time. Moreover, a more sophisticated architecture for the CNN1D, involving several convolution and max-pooling layers, will be tested in order to assess its capacity to capture more abstract features. In addition, the developed model will be tested on another electric arc furnace, and its ability to predict the lining temperature of that furnace will be compared.