Air-Writing Character Recognition with Ultrasonic Transceivers

Abstract

1. Introduction

2. System Description

2.1. Hardware

2.1.1. Signal Model/Target Detection

- Cross-correlation. The acquired signal is cross-correlated with a template containing the expected echo. The cross-correlation reaches its maximum at the sample where the template and the acquired signal best match.

- Dynamic Threshold. To decide whether an echo is present, the value of the cross-correlated signal must exceed a threshold level. The dynamic threshold used in this step decreases over time to follow the attenuation of the signal with the distance traveled [28]. This parameter can be raised or lowered to fit certain conditions, e.g., ambient noise. The cross-correlated signal obtained in the previous step is filtered to extract its envelope, which is then evaluated to check whether, and where, it crosses the threshold level.

- ToF calculation. All previous calculations are performed in terms of sample indices. Once the crossing point between the cross-correlation envelope and the threshold is found, its sample index can be converted to time by dividing it by the ADC sampling frequency.

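- Distance calculation. Once the ToF is calculated, it can be converted to distance using the following equation [29]:

$$d = \frac{c \cdot t_{\mathrm{ToF}}}{2} \qquad (1)$$

where $d$ is the distance between the hand marker and the transceiver, $t_{\mathrm{ToF}}$ is the calculated ToF, and $c$ is the speed of sound. The factor of 2 accounts for the round trip of the echo from the transceiver to the hand and back.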
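The four detection steps above can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the echo template is assumed to be a plain 6-cycle, 30 kHz sine burst; the envelope is taken as the magnitude of the analytic signal (an FFT-based Hilbert transform), which is one possible envelope filter; the dynamic threshold is assumed to decay exponentially, with hypothetical parameters (`start`, `decay`, `floor`) tuned only for the synthetic echo below; and the speed of sound is assumed to be 343 m/s. The sampling and pulse parameters come from the parameter table.

```python
import numpy as np

FS = 200_000     # ADC sampling frequency (Hz), from the parameter table
FC = 30_000      # actuation pulse frequency (Hz), from the parameter table
N_PULSES = 6     # pulses per burst, from the parameter table
C_SOUND = 343.0  # assumed speed of sound in air at about 20 °C (m/s)


def make_template(fs=FS, fc=FC, n_pulses=N_PULSES):
    """Hypothetical expected-echo template: an n_pulses-cycle sine burst at fc."""
    n = int(round(n_pulses * fs / fc))
    return np.sin(2 * np.pi * fc * np.arange(n) / fs)


def envelope(x):
    """Envelope as the magnitude of the analytic signal (FFT-based Hilbert transform)."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))


def dynamic_threshold(n, start, decay, floor):
    """Assumed exponentially decaying threshold, one value per sample."""
    return floor + start * np.exp(-np.arange(n) / decay)


def estimate_distance(rx, template, start=20.0, decay=300.0, floor=2.0):
    """Return the estimated hand distance in metres, or None if no echo is found."""
    # Cross-correlation; keep only non-negative lags so index k = echo delay in samples.
    xc = np.correlate(rx, template, mode="full")[len(template) - 1:]
    env = envelope(xc)
    thr = dynamic_threshold(len(env), start, decay, floor)
    crossings = np.flatnonzero(env > thr)
    if crossings.size == 0:
        return None
    tof = crossings[0] / FS       # first crossing: sample index -> time of flight (s)
    return C_SOUND * tof / 2.0    # round trip: distance = c * ToF / 2


# Usage: synthetic 20 ms frame with an echo from a hand at 0.30 m plus white noise.
rng = np.random.default_rng(0)
template = make_template()
rx = 0.05 * rng.standard_normal(4000)
delay = int(round(2 * 0.30 / C_SOUND * FS))
rx[delay:delay + len(template)] += 0.5 * template
print(estimate_distance(rx, template))
```

With these assumed values the estimate comes out slightly below 0.30 m, because the first threshold crossing lies on the rising edge of the correlation envelope, a few samples before its peak.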

2.1.2. Object Positioning

2.1.3. Track Definition and Filtering

2.1.4. Track Transformations

3. Character Recognition Algorithms

- Convolutional Neural Network. This DNN model applies a set of convolutional filters sequentially to the input data to generate feature maps. The kernel weights and biases of these filters are learned during the training phase of the model. The extracted features are then used by fully connected layers for classification or prediction, as a traditional Multilayer Perceptron would do. In this work, the CNN classifies each input as one of the studied characters. The inputs fed into the CNN are the final 2D images in which each whole character is represented. Consequently, this DNN classifies each image individually, without taking into account the duration of each character or the temporal distribution of the positions. This DNN structure was selected because of the high accuracy reported in the literature for gesture recognition [3,6,8,9]. These works focus on gesture data recorded with other sensors, such as radar or Wi-Fi, so it is of interest to investigate whether similar results can be achieved with ultrasound data.

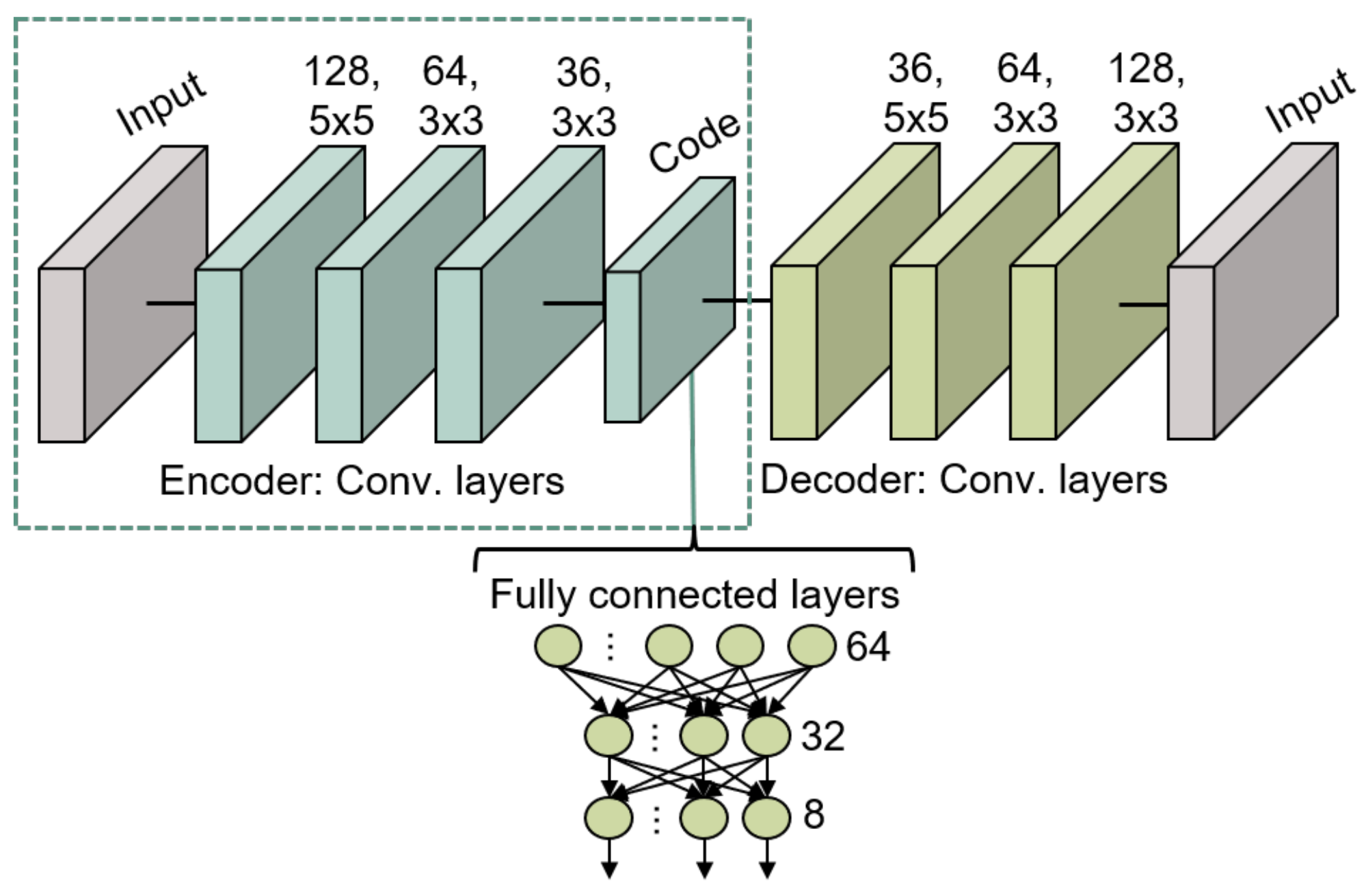

- Convolutional Autoencoder. The convolutional autoencoder can be employed to extract features from data in an unsupervised fashion [34]. This DNN consists of two main parts: an encoder, which maps the images into an embedded representation called the code, and a decoder, which reconstructs the original image from the code. Therefore, the encoder and decoder can be trained using the same data as both input and expected output. Combining the autoencoder with convolutional filters enables efficient data coding when more complex feature extraction is required. As with the CNN, the encoder output can be fed into fully connected layers to classify the features into different categories. Using encoders in classification tasks can bring several benefits, such as dimensionality reduction and improved performance in supervised learning [13,31,32,33]. As with the CNN, the inputs fed into the convolutional autoencoder are the final 2D images in which each whole character is represented; therefore, no time information is considered.

- Long Short-Term Memory (LSTM) DNN. This DNN structure focuses on the temporal features of the input data, studying their evolution over a selected period of time with a window approach, as shown in Figure 5. Its main characteristic is that the output of a hidden layer is transferred, as part of the input, to the hidden layer of the next time step, thereby preserving previous information. After the temporal features are extracted, the data are passed to fully connected layers to perform the classification or prediction, as with the previously explained models. To extract these temporal features, the model maps the input sequence $x = (x_1, \ldots, x_T)$ to a sequence of hidden states $h = (h_1, \ldots, h_T)$, producing a series of output activations according to (3):

$$h_t = \sigma\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right) \qquad (3)$$

where $\sigma$ is the nonlinear activation function of the DNN, $h_t$ represents the hidden parameters of the network at time step $t$, $x_t$ represents the input data, $b_h$ is the bias vector of the hidden layer, and $W$ represents the kernel weights. These weights can be divided into two sets: the input-layer weights $W_{xh}$ and the hidden-layer weights $W_{hh}$. The input data for this model are a time series containing temporal features, which in our case are the 3D coordinate values. These values can be studied to extract the evolution of the movement (direction along the 3 axes as well as speed). This DNN structure has been analyzed in the literature for gesture recognition as well as trajectory prediction, owing to its ability to extract features from movements [4,6,7].

- Convolutional LSTM. This model, often called ConvLSTM, is a variation of the previous LSTM model that includes convolutional layers, which extract non-temporal features in a preliminary step. To do so, this structure executes convolutions at each gate of the LSTM instead of the matrix multiplications of the dense-layer approach used in the traditional LSTM. Because of this, in addition to time series, image series can be studied with this algorithm to extract information about how the images evolve over time. Non-image inputs can also be used when this feature-extraction step is desired, as in this work, where 3D coordinate data are used as the input for this model. Since the ConvLSTM studies the time evolution of the trajectory, zeros were appended to the end of the shorter samples so that all characters have the same length. Like the previously mentioned structures, this DNN was selected because of the high accuracy reported in the literature for gesture recognition and trajectory prediction tasks [6,35]. One possible reason for its good performance is that this model can extract high-level features, such as movement direction, before studying their time evolution, leading to a more logical feature-study pipeline.
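The recurrence in Eq. (3) and the zero-padding applied to the ConvLSTM inputs can be illustrated with a short NumPy sketch. It implements a plain recurrent update, not a full LSTM cell (which adds input, forget and output gates and a separate cell state), and it is not the authors' code; the function names, the tanh activation and the weight shapes are assumptions chosen for illustration.

```python
import numpy as np


def pad_sequences(seqs, length=None):
    """Append zeros to each (T_i, d) trajectory so that all have shape (length, d)."""
    length = max(len(s) for s in seqs) if length is None else length
    if any(len(s) > length for s in seqs):
        raise ValueError("length is shorter than the longest sequence")
    out = np.zeros((len(seqs), length, seqs[0].shape[1]))
    for i, s in enumerate(seqs):
        out[i, :len(s)] = s  # original samples first, zeros at the end
    return out


def recurrent_forward(x, W_xh, W_hh, b_h, activation=np.tanh):
    """Eq. (3): h_t = sigma(W_xh x_t + W_hh h_{t-1} + b_h), starting from h_0 = 0.

    x: (T, d) input sequence; W_xh: (H, d); W_hh: (H, H); b_h: (H,).
    Returns the (T, H) sequence of hidden states.
    """
    h = np.zeros(W_hh.shape[0])
    states = np.empty((x.shape[0], W_hh.shape[0]))
    for t in range(x.shape[0]):
        h = activation(W_xh @ x[t] + W_hh @ h + b_h)  # previous state feeds the next step
        states[t] = h
    return states


# Usage with hypothetical 3D trajectories of different lengths.
rng = np.random.default_rng(0)
trajectories = [rng.standard_normal((n, 3)) for n in (50, 64, 57)]
batch = pad_sequences(trajectories)            # shape (3, 64, 3)
H = 16
W_xh = 0.1 * rng.standard_normal((H, 3))
W_hh = 0.1 * rng.standard_normal((H, H))
states = recurrent_forward(batch[0], W_xh, W_hh, np.zeros(H))  # shape (64, 16)
```

In this sketch the appended zeros are processed like any other time step; deep-learning frameworks typically provide masking so that padded steps can be skipped.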

4. Experiment Definition

4.1. Dataset

- Gesture translation (Figure 8b): The center positions of the initial gestures were not constant, although they were always near the center of the image. To include more positions in the dataset, all the gestures were first translated so that their centers coincide with the center of the XY plane. A random translation was then applied along both the X- and Y-axes or along only one of them.

- Gesture scaling (Figure 8c): The gestures were scaled by a random percentage in the interval 20–50% to generate a more varied dataset. Since the data are represented in two dimensions, each time scaling was applied, a random variable controlled whether it was performed along one axis or both, as well as the scaling factor for each axis. Consequently, both uniformly scaled and anisotropically scaled images are included in the dataset.

- Gesture rotations (Figure 8d): The images were rotated by a random angle in the interval 1–359° to generate orientations different from the original. As a result, all writing directions are included in the generated dataset.
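A minimal NumPy sketch of the three augmentations above, applied to an (N, 2) array of XY points. The text applies them to the character images; working on point coordinates before rasterization is an assumption made here to keep the example short. The translation bound `max_shift`, the treatment of the 20–50% range as a factor 1 ± p (enlarging or shrinking), and the four scaling modes (uniform, independent per axis, X only, Y only) are hypothetical choices, not details from the source.

```python
import numpy as np

# Hypothetical choice of axes for translation: both, X only, or Y only.
AXIS_MASKS = np.array([[1, 1], [1, 0], [0, 1]])


def center(points):
    """Translate an (N, 2) XY trajectory so that its centroid lies at the origin."""
    return points - points.mean(axis=0)


def random_translate(points, rng, max_shift=0.05):
    """Shift along X, Y or both; max_shift is an assumed bound in trajectory units."""
    mask = AXIS_MASKS[rng.integers(len(AXIS_MASKS))]
    return points + rng.uniform(-max_shift, max_shift, size=2) * mask


def random_scale(points, rng, low=0.2, high=0.5):
    """Scale by 1 ± p per axis, with p drawn uniformly from [low, high]."""
    p = rng.uniform(low, high, size=2) * rng.choice([-1.0, 1.0], size=2)
    mode = rng.integers(4)  # 0: uniform, 1: independent per axis, 2: X only, 3: Y only
    if mode == 0:
        factors = np.array([1.0 + p[0], 1.0 + p[0]])
    elif mode == 1:
        factors = 1.0 + p
    elif mode == 2:
        factors = np.array([1.0 + p[0], 1.0])
    else:
        factors = np.array([1.0, 1.0 + p[1]])
    return points * factors  # scaling about the origin (the centroid after center())


def random_rotate(points, rng, low_deg=1.0, high_deg=359.0):
    """Rotate about the origin by a random angle in [low_deg, high_deg] degrees."""
    theta = np.deg2rad(rng.uniform(low_deg, high_deg))
    c, s = np.cos(theta), np.sin(theta)
    return points @ np.array([[c, -s], [s, c]]).T
```

Each function returns a new array, so the augmentations can be applied independently to produce separate samples or chained, e.g., `random_translate(random_rotate(center(points), rng), rng)`.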

4.2. Deep Neural Networks Configuration

4.2.1. Convolutional Neural Network

4.2.2. Convolutional Autoencoder

- The two main components of the autoencoder are trained to reproduce at the output the images provided as input. The encoder consists of three 2D convolutional layers, and the decoder consists of three identical, mirrored layers. In the encoder, the first layer has 128 filters of size 5 × 5, the second has 64 filters of size 3 × 3, and the final convolutional layer has 32 filters of size 3 × 3. The internal code layer, which provides the embedding, consists of a 2D max pooling applied to the output of the 32 filters. All the convolutional layers use the ReLU activation function, except for the last layer of the decoder, which employs a sigmoid activation function for the nonlinear image reconstruction. The loss function is binary cross-entropy, and the optimizer is Adam. The autoencoder reduces the dimensionality from 10,000 values (100 × 100) for an image to only 1296 (6 × 6 × 36) values, which form the embedding space.

- After the autoencoder is trained, the encoder part is extracted and kept frozen, so that it can be used as a feature extractor without further parameter tuning. A flatten layer and two dense layers with 32 and 8 neurons, respectively, are then connected to the encoder. The parameters of these fully connected layers are trained to associate the information extracted by the encoder with the labels of the drawn characters. The first dense layer uses the ReLU activation function, while the second uses the softmax activation function for categorization.
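The classification head and the reconstruction loss described above can be illustrated with a forward-pass-only NumPy sketch. It assumes the frozen encoder outputs an embedding of the shape stated in the text (6 × 6 × 36 = 1296 values) and uses randomly initialized placeholder weights, so it shows the data flow (Flatten, Dense(32, ReLU), Dense(8, softmax)) and the binary cross-entropy loss, not trained behavior. All names are hypothetical; in practice a deep-learning framework would build and train these layers.

```python
import numpy as np

EMBED_SHAPE = (6, 6, 36)  # embedding shape as stated in the text (1296 values)
N_CLASSES = 8             # dense output size given in the text


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def binary_cross_entropy(target, recon, eps=1e-7):
    """Mean BCE between target pixels in [0, 1] and sigmoid reconstructions."""
    recon = np.clip(recon, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(recon) + (1 - target) * np.log(1 - recon)))


def init_head(rng, n_in=int(np.prod(EMBED_SHAPE)), n_hidden=32, n_out=N_CLASSES):
    """Random placeholder weights for Flatten -> Dense(32, ReLU) -> Dense(8, softmax)."""
    return {
        "W1": rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, np.sqrt(1.0 / n_hidden), (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }


def head_forward(params, codes):
    """codes: (batch, 6, 6, 36) embeddings produced by the frozen encoder."""
    x = codes.reshape(codes.shape[0], -1)              # Flatten -> (batch, 1296)
    h = relu(x @ params["W1"] + params["b1"])          # Dense(32, ReLU)
    return softmax(h @ params["W2"] + params["b2"])    # Dense(8, softmax)


def n_params(params):
    return sum(p.size for p in params.values())


# Usage with random stand-ins for the frozen-encoder outputs.
rng = np.random.default_rng(0)
params = init_head(rng)
probs = head_forward(params, rng.random((4, *EMBED_SHAPE)))  # shape (4, 8)
```

With these sizes the head alone has 1296 × 32 + 32 + 32 × 8 + 8 = 41,768 trainable parameters.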

4.2.3. Long Short-Term Memory Neural Network

4.2.4. 1D Convolutional LSTM Neural Network

5. Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Chen, M.; AlRegib, G.; Juang, B.H. Air-writing recognition—Part I: Modeling and recognition of characters, words, and connecting motions. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 403–413.

2. Chen, M.; AlRegib, G.; Juang, B.H. Air-writing recognition—Part II: Detection and recognition of writing activity in continuous stream of motion data. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 436–444.

3. Mukherjee, S.; Ahmed, S.A.; Dogra, D.P.; Kar, S.; Roy, P.P. Fingertip detection and tracking for recognition of air-writing in videos. Expert Syst. Appl. 2019, 136, 217–229.

4. Kumar, P.; Saini, R.; Behera, S.K.; Dogra, D.P.; Roy, P.P. Real-time recognition of sign language gestures and air-writing using leap motion. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017; pp. 157–160.

5. Khan, N.A.; Khan, S.M.; Abdullah, M.; Kanji, S.J.; Iltifat, U. Use hand gesture to write in air recognize with computer vision. IJCSNS 2017, 17, 51.

6. Arsalan, M.; Santra, A. Character recognition in air-writing based on network of radars for human-machine interface. IEEE Sens. J. 2019, 19, 8855–8864.

7. Wang, P.; Lin, J.; Wang, F.; Xiu, J.; Lin, Y.; Yan, N.; Xu, H. A Gesture Air-Writing Tracking Method that Uses 24 GHz SIMO Radar SoC. IEEE Access 2020, 8, 152728–152741.

8. Leem, S.K.; Khan, F.; Cho, S.H. Detecting mid-air gestures for digit writing with radio sensors and a CNN. IEEE Trans. Instrum. Meas. 2019, 69, 1066–1081.

9. Fang, Y.; Xu, Y.; Li, H.; He, X.; Kang, L. Writing in the air: Recognize Letters Using Deep Learning Through WiFi Signals. In Proceedings of the 2020 6th International Conference on Big Data Computing and Communications (BIGCOM), Deqing, China, 24–25 July 2020; pp. 8–14.

10. Wang, H.; Gong, W. RF-Pen: Practical Real-Time RFID Tracking in the Air. IEEE Trans. Mob. Comput. 2020, 20, 3227–3238.

11. Zhang, X.; Ye, Z.; Jin, L.; Feng, Z.; Xu, S. A new writing experience: Finger writing in the air using a kinect sensor. IEEE Multimed. 2013, 20, 85–93.

12. Feng, Z.; Xu, S.; Zhang, X.; Jin, L.; Ye, Z.; Yang, W. Real-time fingertip tracking and detection using Kinect depth sensor for a new writing-in-the air system. In Proceedings of the 4th International Conference on Internet Multimedia Computing and Service, Wuhan, China, 9–11 September 2012; pp. 70–74.

13. Chen, H.; Ballal, T.; Muqaibel, A.H.; Zhang, X.; Al-Naffouri, T.Y. Air Writing via Receiver Array-Based Ultrasonic Source Localization. IEEE Trans. Instrum. Meas. 2020, 69, 8088–8101.

14. Chen, J.; Yu, F.; Yu, J.; Lin, L. A Three-Dimensional Pen-Like Ultrasonic Positioning System Based on Quasi-Spherical PVDF Ultrasonic Transmitter. IEEE Sens. J. 2020, 21, 1756–1763.

15. Holm, S. Ultrasound positioning based on time-of-flight and signal strength. In Proceedings of the 2012 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, NSW, Australia, 13–15 November 2012; pp. 1–6.

16. Kumar, A.; McNames, J. Wideband acoustic positioning with precision calibration and joint parameter estimation. IEEE Trans. Instrum. Meas. 2017, 66, 1946–1953.

17. Saad, M.M.; Bleakley, C.J.; Ballal, T.; Dobson, S. High-accuracy reference-free ultrasonic location estimation. IEEE Trans. Instrum. Meas. 2012, 61, 1561–1570.

18. Ahmed, T.B.K. Angle-of-Arrival Based Ultrasonic 3-D Location for Ubiquitous Computing. Ph.D. Thesis, University College Dublin, Dublin, Ireland, 2010.

19. Ruiz, D.; Ureña, J.; García, J.C.; Pérez, C.; Villadangos, J.M.; García, E. Efficient Trilateration Algorithm Using Time Differences of Arrival; Elsevier: Amsterdam, The Netherlands, 2013; Volume 193, pp. 220–232.

20. Saez-Mingorance, B.; Escobar-Molero, A.; Mendez-Gomez, J.; Castillo-Morales, E.; Morales-Santos, D.P. Object Positioning Algorithm Based on Multidimensional Scaling and Optimization for Synthetic Gesture Data Generation. Sensors 2021, 21, 5923.

21. Anzinger, S.; Bretthauer, C.; Manz, J.; Krumbein, U.; Dehé, A. Broadband acoustical MEMS transceivers for simultaneous range finding and microphone applications. In Proceedings of the 2019 20th International Conference on Solid-State Sensors, Actuators and Microsystems & Eurosensors XXXIII (TRANSDUCERS & EUROSENSORS XXXIII), Berlin, Germany, 23–27 June 2019; pp. 865–868.

22. Digilent. Analog Discovery 2. Available online: https://digilent.com/reference/test-and-measurement/analog-discovery-2/ (accessed on 31 August 2021).

23. Saez, B.; Mendez, J.; Molina, M.; Castillo, E.; Pegalajar, M.; Morales, D.P. Gesture Recognition with Ultrasounds and Edge Computing. IEEE Access 2021, 9, 38999–39008.

24. Hayward, G.; Devaud, F.; Soraghan, J. P1g-3 evaluation of a bio-inspired range finding algorithm (bira). In Proceedings of the 2006 IEEE Ultrasonics Symposium, Vancouver, BC, Canada, 2–6 October 2006; pp. 1381–1384.

25. Huang, K.N.; Huang, Y.P. Multiple-frequency ultrasonic distance measurement using direct digital frequency synthesizers. Sens. Actuators A Phys. 2009, 149, 42–50.

26. Cowell, D.M.; Freear, S. Separation of overlapping linear frequency modulated (LFM) signals using the fractional Fourier transform. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2010, 57, 2324–2333.

27. Jackson, J.C.; Summan, R.; Dobie, G.I.; Whiteley, S.M.; Pierce, S.G.; Hayward, G. Time-of-flight measurement techniques for airborne ultrasonic ranging. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2013, 60, 343–355.

28. Bass, H.E.; Sutherland, L.C.; Zuckerwar, A.J.; Blackstock, D.T.; Hester, D. Atmospheric absorption of sound: Further developments. J. Acoust. Soc. Am. 1995, 97, 680–683.

29. Kim, K.; Choi, H. High-efficiency high-voltage class F amplifier for high-frequency wireless ultrasound systems. PLoS ONE 2021, 16, e0249034.

30. Guiñón, J.L.; Ortega, E.; García-Antón, J.; Pérez-Herranz, V. Moving average and Savitzki-Golay smoothing filters using Mathcad. In Proceedings of the International Conference on Engineering Education (ICEE), Coimbra, Portugal, 3–7 September 2007; Volume 2007.

31. Wang, Y.; Yao, H.; Zhao, S.; Zheng, Y. Dimensionality reduction strategy based on auto-encoder. In Proceedings of the 7th International Conference on Internet Multimedia Computing and Service, Zhangjiajie, China, 19–21 August 2015; pp. 1–4.

32. Perdios, D.; Besson, A.; Arditi, M.; Thiran, J.P. A deep learning approach to ultrasound image recovery. In Proceedings of the 2017 IEEE International Ultrasonics Symposium (IUS), Washington, DC, USA, 6–9 September 2017; pp. 1–4.

33. Karaoğlu, O.; Bilge, H.Ş.; Uluer, İ. Reducing Speckle Noise from Ultrasound Images Using an Autoencoder Network. In Proceedings of the 2020 28th Signal Processing and Communications Applications Conference (SIU), Gaziantep, Turkey, 5–7 October 2020; pp. 1–4.

34. Zeng, X.; Leung, M.R.; Zeev-Ben-Mordehai, T.; Xu, M. A convolutional autoencoder approach for mining features in cellular electron cryo-tomograms and weakly supervised coarse segmentation. J. Struct. Biol. 2018, 202, 150–160.

35. Kim, U.H.; Hwang, Y.; Lee, S.K.; Kim, J.H. Writing in The Air: Unconstrained Text Recognition from Finger Movement Using Spatio-Temporal Convolution. arXiv 2021, arXiv:2104.09021.

| Parameter | Setting |
|---|---|
| Actuation pulse frequency (Fc) | 30 kHz |
| Number of pulses | 6 |
| Pulse repetition interval | 20 ms |
| Sampling frequency (Fs) | 200 kHz |

| Algorithm | Number of Parameters | Latency (ms) | Accuracy |
|---|---|---|---|
| CNN | 1,730,472 | 63.43 | 97.39% |
| ConvAutoencoder | 184,396 | 45.50 | 98.28% |
| LSTM | 56,868 | 71.90 | 83.25% |
| ConvLSTM | 99,960 | 71.01 | 99.51% |

| Studies | No. of Characters | Accuracy | Latency (ms) | Method | Hardware |
|---|---|---|---|---|---|
| ORM Ultrasound [13] | 26 | 96.31% | 1.8 | Order-restricted matching (ORM) classifier | 2 ultrasound arrays |
| Radar DNN [6] | 15 | 98.33% | – | ConvLSTM-CTC | 3 radars |
| Radio CNN [8] | 10 | 99.7% | 52.2 | CNN | 3 radars |
| This work | 8 | 99.51% | 71.01 | ConvLSTM | 1 ultrasound array |
| This work | 8 | 98.28% | 45.5 | ConvAutoencoder | 1 ultrasound array |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Saez-Mingorance, B.; Mendez-Gomez, J.; Mauro, G.; Castillo-Morales, E.; Pegalajar-Cuellar, M.; Morales-Santos, D.P. Air-Writing Character Recognition with Ultrasonic Transceivers. Sensors 2021, 21, 6700. https://doi.org/10.3390/s21206700
