A Camera Intrinsic Matrix-Free Calibration Method for Laser Triangulation Sensor

Abstract

1. Introduction

- (i) A new model that simultaneously optimizes the camera intrinsic matrix and the laser-plane equation, instead of computing the laser-plane equation from a previously known camera intrinsic matrix.

- (ii) A novel, accurate, and computationally efficient closed-form solution for the position of the collinear 1D target.
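Contribution (i) concerns two parameter sets: the camera intrinsics and the laser plane. To show how both enter every measurement, here is a minimal sketch of the standard laser-triangulation model. A laser-stripe pixel is back-projected through the intrinsics to a viewing ray, and that ray is intersected with the laser plane. The sketch rests on several assumptions that are not taken from the paper: a zero-skew pinhole camera without lens distortion, the plane written as p1*x + p2*y + p3*z = 1 in the camera frame, and the hypothetical helper names `back_project` and `triangulate`. The paper's own notation and parameterization may differ.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def back_project(u: float, v: float, intrinsics: Sequence[float]) -> Vec3:
    """Viewing ray of pixel (u, v) for a zero-skew pinhole camera, scaled so that z = 1.

    intrinsics = (fu, fv, u0, v0): focal lengths and principal point in pixels (assumed layout).
    """
    fu, fv, u0, v0 = intrinsics
    return ((u - u0) / fu, (v - v0) / fv, 1.0)


def triangulate(u: float, v: float, intrinsics: Sequence[float], plane: Sequence[float]) -> Vec3:
    """Camera-frame point where the ray of pixel (u, v) meets the plane p1*x + p2*y + p3*z = 1."""
    ray = back_project(u, v, intrinsics)
    denom = sum(p * r for p, r in zip(plane, ray))
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is (nearly) parallel to the laser plane")
    depth = 1.0 / denom  # scale t with plane . (t * ray) = 1; equals the z-coordinate
    x, y, z = (depth * r for r in ray)
    return (x, y, z)
```

Take the hypothetical intrinsics (1000, 1000, 500, 500) and the plane (0, 0.004, 0.004), which is the plane y + z = 250. With these values, `triangulate(500.0, 500.0, ...)` returns approximately (0, 0, 250). Changing either the intrinsics or the plane moves the reconstructed point, so both parameter sets affect each measurement.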

2. Related Works

2.1. Calibrating the LTS Based on the Patterns of the Targets

2.2. Calibrating the LTS Based on Target Geometries

2.3. Calibrating the LTS with Auxiliary Devices

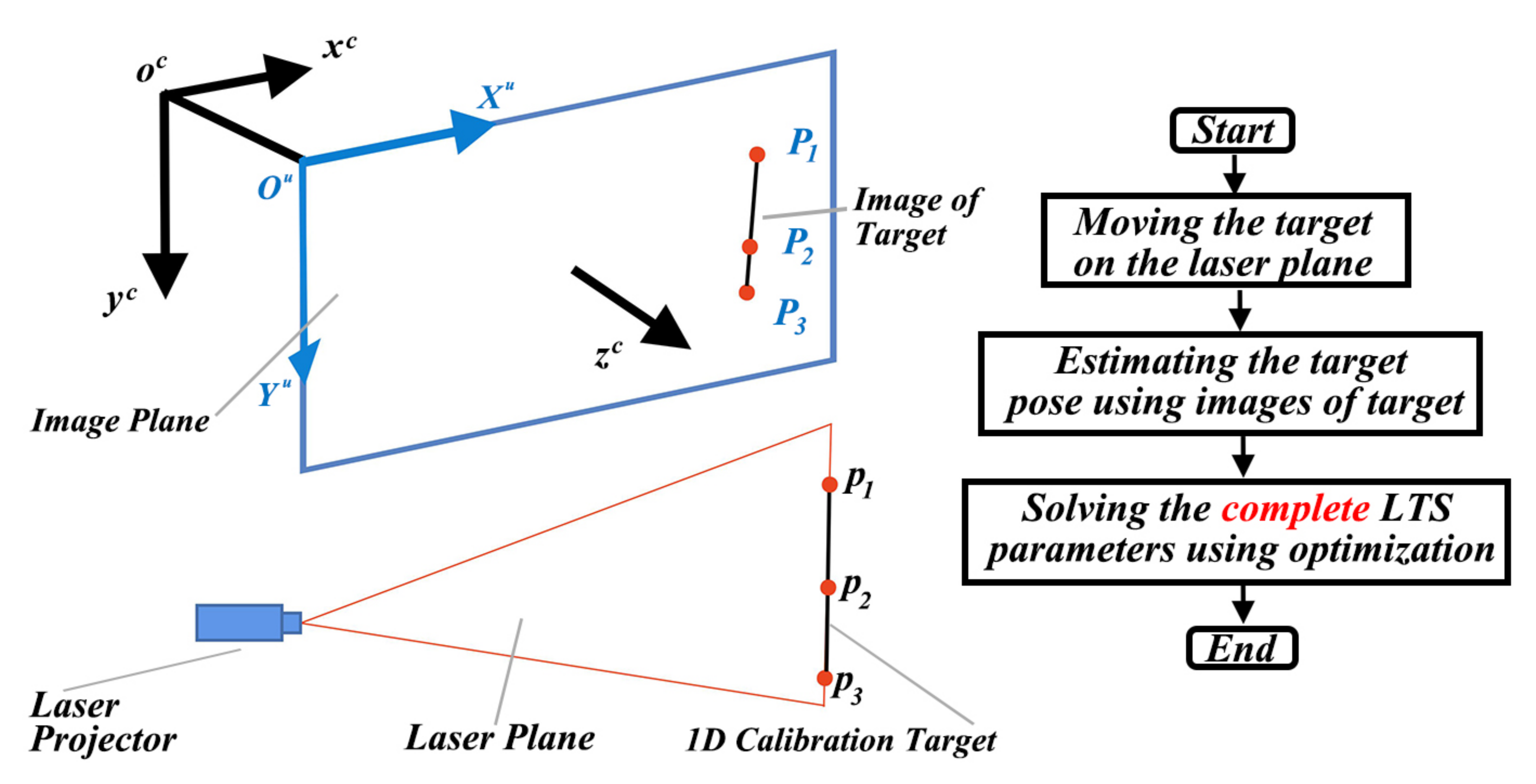

3. Method

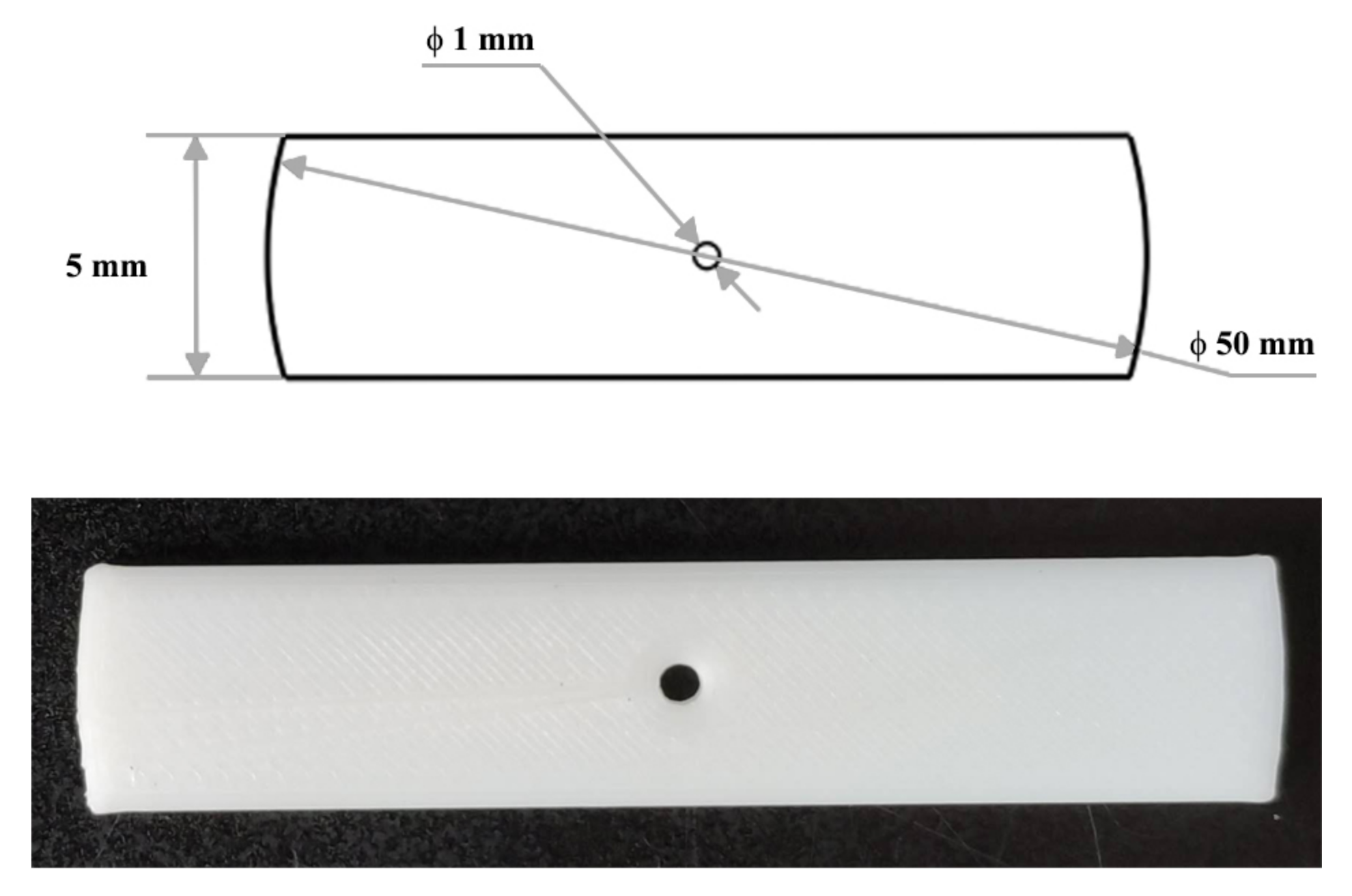

3.1. The Closed-Form Solution for Localizing the 1D Target
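For reference only, the sketch below gives the classic closed form for localizing a collinear three-point target when the intrinsics are already known. It follows the spirit of Zhang's one-dimensional-object calibration. The paper's solution is designed to avoid relying on a known intrinsic matrix, so its derivation may well differ. The sketch makes the following assumptions: points A, B, C are collinear with C = λ_A·A + λ_B·B (λ_A + λ_B = 1); the length L = |A − B| is known; and the camera is a zero-skew pinhole. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def pixel_ray(pixel: Sequence[float], intrinsics: Sequence[float]) -> Vec3:
    """Zero-skew pinhole back-projection; a point on the ray is depth * ray (z-component 1)."""
    fu, fv, u0, v0 = intrinsics
    return ((pixel[0] - u0) / fu, (pixel[1] - v0) / fv, 1.0)


def locate_1d_target(px_a, px_b, px_c, intrinsics, lam_a, lam_b, length):
    """Closed-form camera-frame positions of collinear points A, B, C.

    Assumes C = lam_a * A + lam_b * B with lam_a + lam_b = 1 and |A - B| = length.
    """
    ra, rb, rc = (pixel_ray(p, intrinsics) for p in (px_a, px_b, px_c))
    ac, bc = cross(ra, rc), cross(rb, rc)
    # z_C*rc = lam_a*z_A*ra + lam_b*z_B*rb; crossing with rc eliminates z_C,
    # and projecting onto bc gives the depth ratio k = z_B / z_A.
    k = -lam_a * dot(ac, bc) / (lam_b * dot(bc, bc))
    # |A - B| = z_A * |ra - k*rb| = length fixes the absolute scale.
    diff = [a - k * b for a, b in zip(ra, rb)]
    z_a = length / math.sqrt(dot(diff, diff))
    z_b = k * z_a
    A = tuple(z_a * r for r in ra)
    B = tuple(z_b * r for r in rb)
    C = tuple(lam_a * a + lam_b * b for a, b in zip(A, B))
    return A, B, C
```

Each step is a cross product, a dot product, or a square root. The cost per target pose is therefore constant and needs no iteration.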

3.2. Solving the LTS Parameters Based on the Optimization

4. Experiments

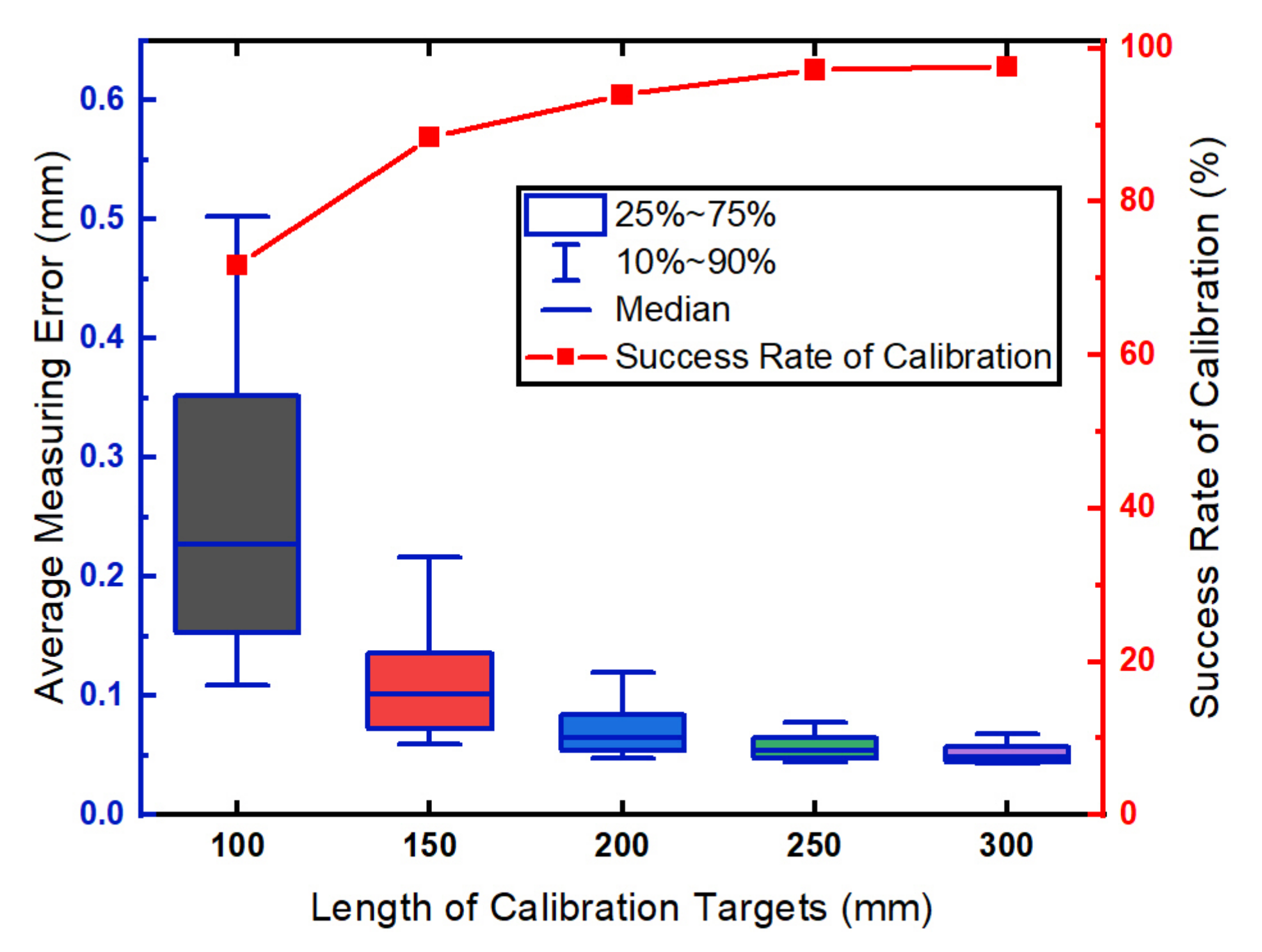

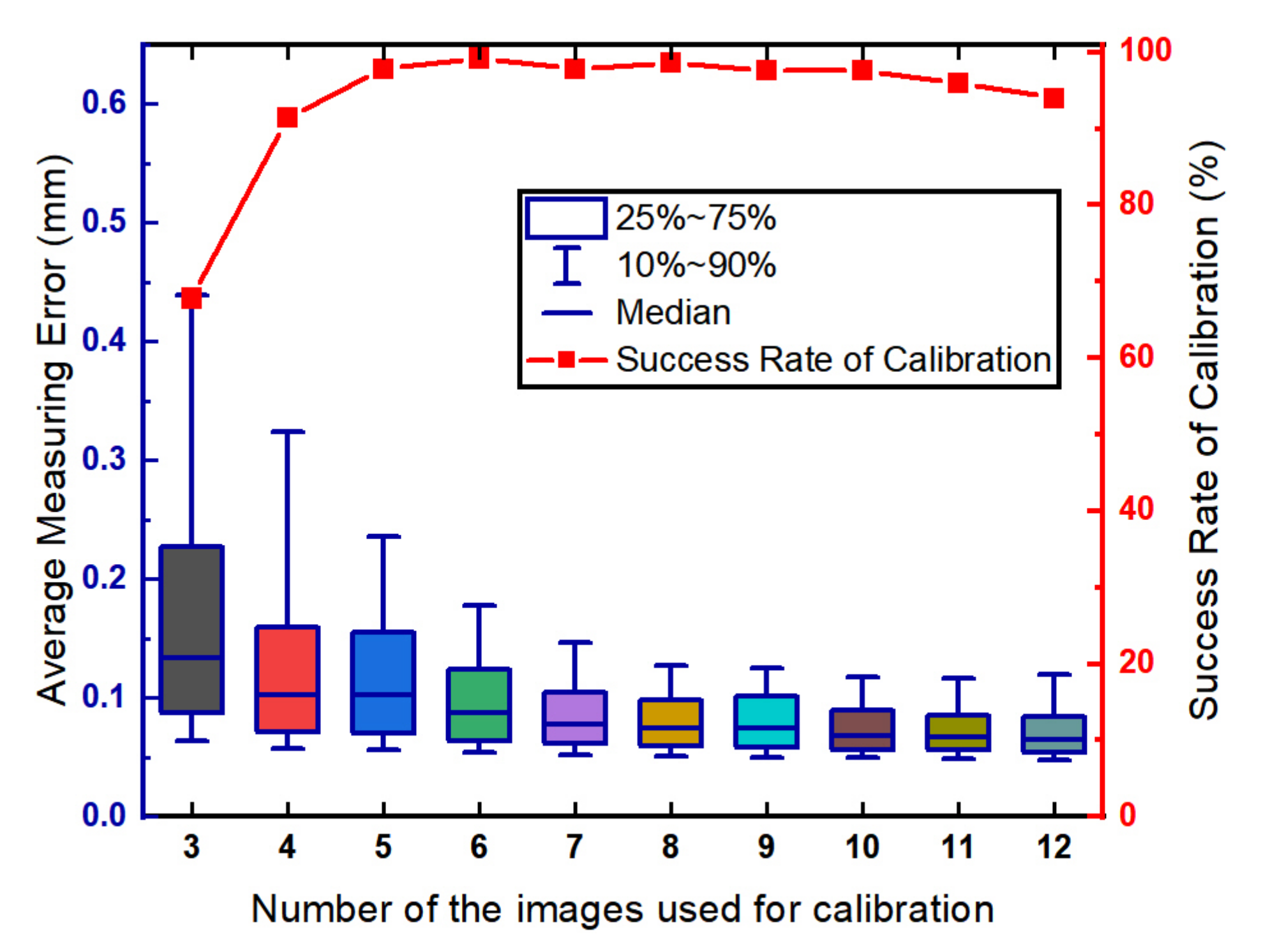

4.1. Simulation of the Proposed LTS Calibration Method

4.2. Real-World Experiment on the Proposed LTS Calibration Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Parameters | Focal length (u) | Focal length (v) | Principal point (u) | Principal point (v) | a | b | c |
|---|---|---|---|---|---|---|---|
| Parameter Specification | 2727.27 | 2727.27 | 1296.00 | 972.00 | 125.622 | −0.134 | 0.312 |
| Parameter No. 1 | 798.15 | 800.87 | 1001.55 | 998.93 | 37.891 | −0.037 | 0.097 |
| Parameter No. 2 | 998.56 | 1000.71 | 1001.57 | 998.91 | 47.305 | −0.047 | 0.121 |
| Parameter No. 3 | 908.88 | 887.68 | 1102.83 | 1095.45 | 43.123 | −0.043 | 0.108 |
| Parameter No. 4 | 858.11 | 838.31 | 1102.88 | 1095.62 | 40.72 | −0.041 | 0.102 |

| Parameters | Parameter Specification | Parameter No. 1 | Parameter No. 2 | Parameter No. 3 | Parameter No. 4 |
|---|---|---|---|---|---|
| Measurement results | 50.23 | 50.14 | 50.14 | 50.13 | 50.14 |
| | 49.66 | 49.87 | 49.86 | 49.87 | 49.87 |
| | 50.34 | 49.94 | 49.94 | 49.94 | 49.94 |
| | 49.79 | 50.11 | 50.11 | 50.11 | 50.12 |
| | 50.25 | 50.17 | 50.16 | 50.17 | 50.17 |
| | 50.77 | 50.01 | 50.03 | 50.03 | 50.02 |
| Average | 50.17 | 50.04 | 50.04 | 50.04 | 50.04 |
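The "Average" row of the measurement table above can be checked against the six measurement results in each column. The short script below copies the tabulated values, recomputes each column mean with Python's standard `statistics.mean`, and prints each mean next to the reported average. The dictionary keys are simply the table's column names.

```python
from statistics import mean

# Six measurement results per column, copied from the table above.
results = {
    "Parameter Specification": [50.23, 49.66, 50.34, 49.79, 50.25, 50.77],
    "Parameter No. 1": [50.14, 49.87, 49.94, 50.11, 50.17, 50.01],
    "Parameter No. 2": [50.14, 49.86, 49.94, 50.11, 50.16, 50.03],
    "Parameter No. 3": [50.13, 49.87, 49.94, 50.11, 50.17, 50.03],
    "Parameter No. 4": [50.14, 49.87, 49.94, 50.12, 50.17, 50.02],
}

# Averages as reported in the table's "Average" row.
reported_average = {
    "Parameter Specification": 50.17,
    "Parameter No. 1": 50.04,
    "Parameter No. 2": 50.04,
    "Parameter No. 3": 50.04,
    "Parameter No. 4": 50.04,
}

averages = {name: mean(values) for name, values in results.items()}

for name, avg in averages.items():
    # Two decimals match the precision used in the table.
    print(f"{name}: recomputed {avg:.2f}, reported {reported_average[name]:.2f}")
```

Every recomputed mean rounds to the reported two-decimal average.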

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, X.; Chen, Y.; Chen, B.; He, Z.; Ma, Y.; Zhang, D.; Najjaran, H. A Camera Intrinsic Matrix-Free Calibration Method for Laser Triangulation Sensor. Sensors 2021, 21, 559. https://doi.org/10.3390/s21020559
