Weakly-Supervised Recommended Traversable Area Segmentation Using Automatically Labeled Images for Autonomous Driving in Pedestrian Environment with No Edges

Abstract

1. Introduction

- An automatic image labeling method based on human knowledge and experience is proposed, together with learning methods that detect appropriate driving directions as recommended traversable areas. Of the learning methods, a data augmentation method and a loss weighting method are examined in particular.

- An evaluation dataset is created and metrics are designed to evaluate the effectiveness of the proposed learning methods. Specifically, the recommended traversable area detection performance is evaluated in environments where roadways and sidewalks coexist.

2. Related Works

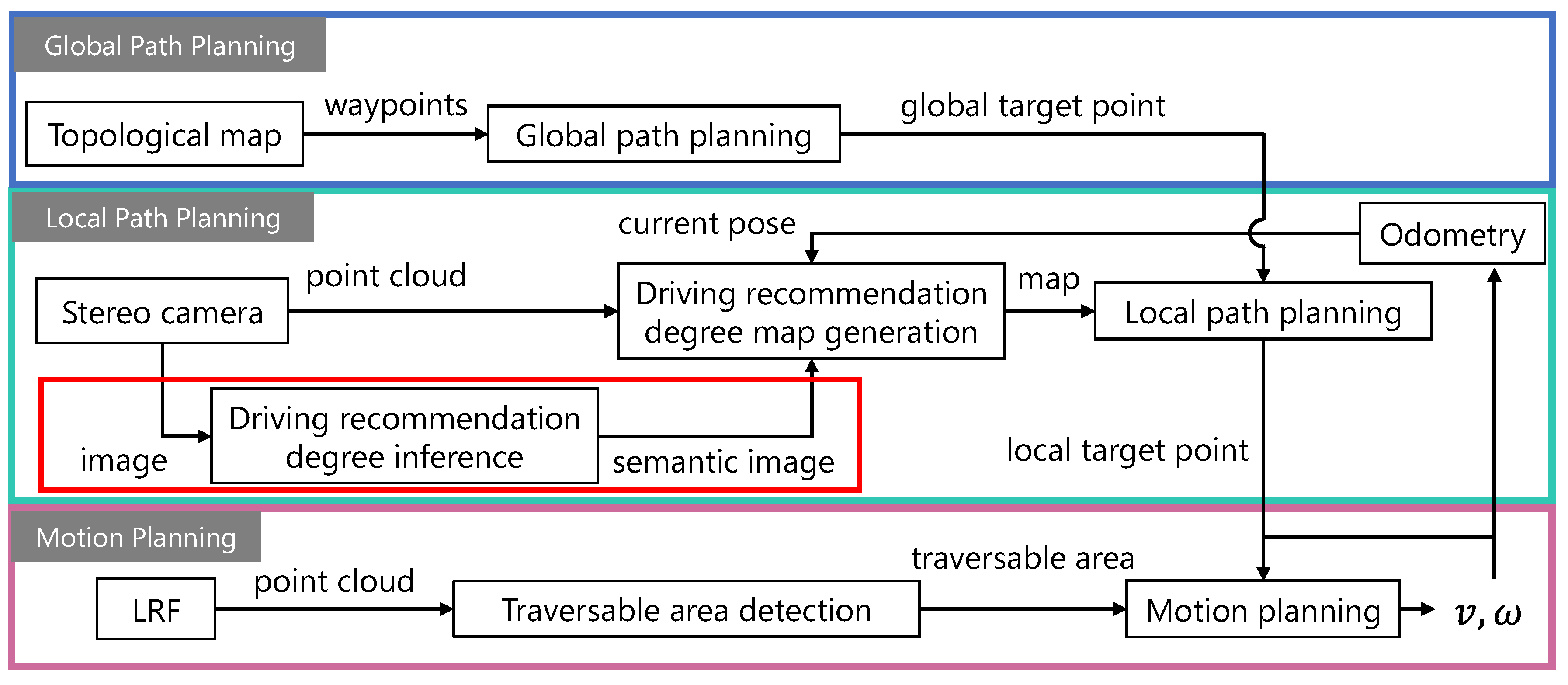

3. Methods

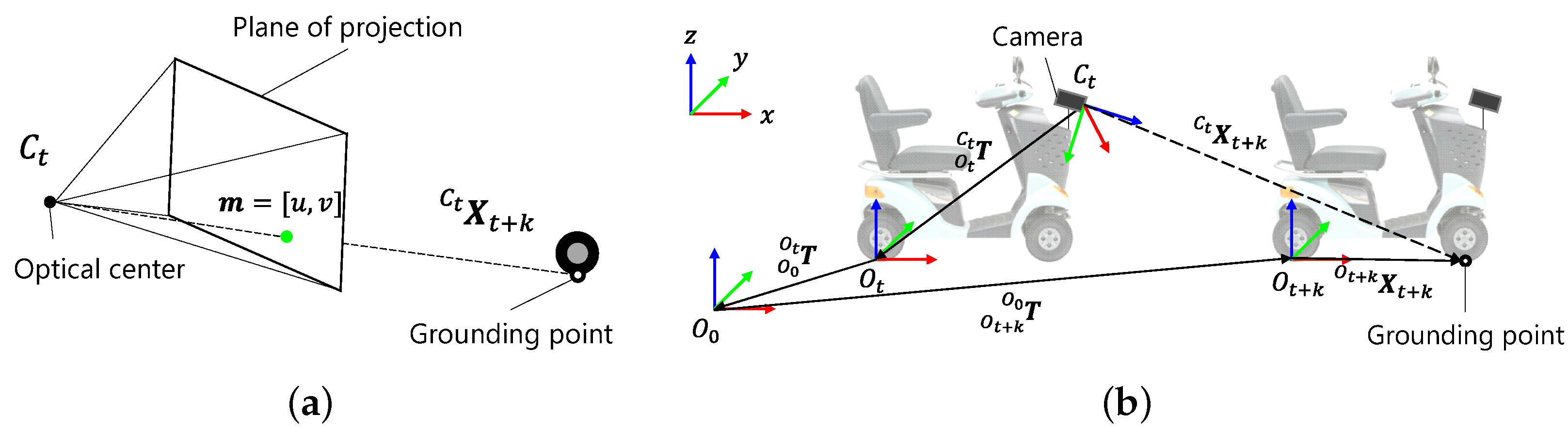

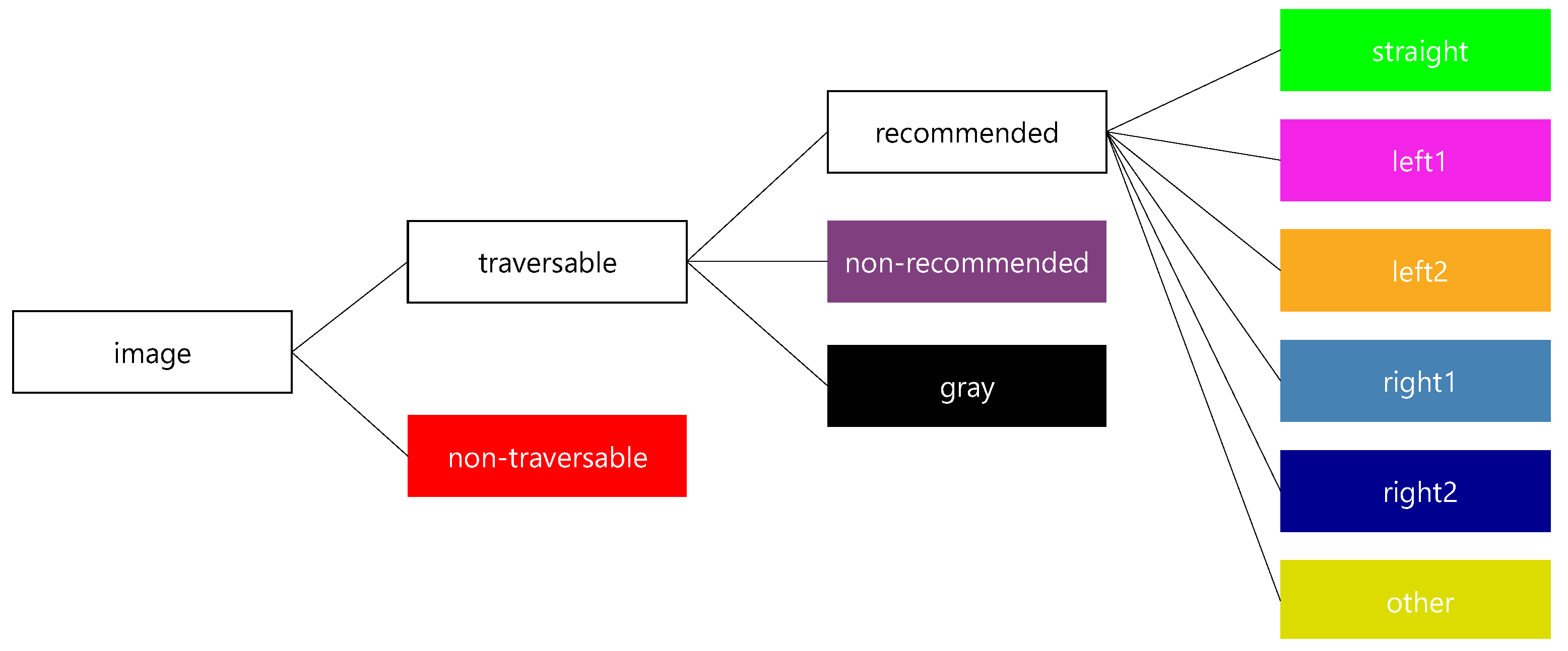

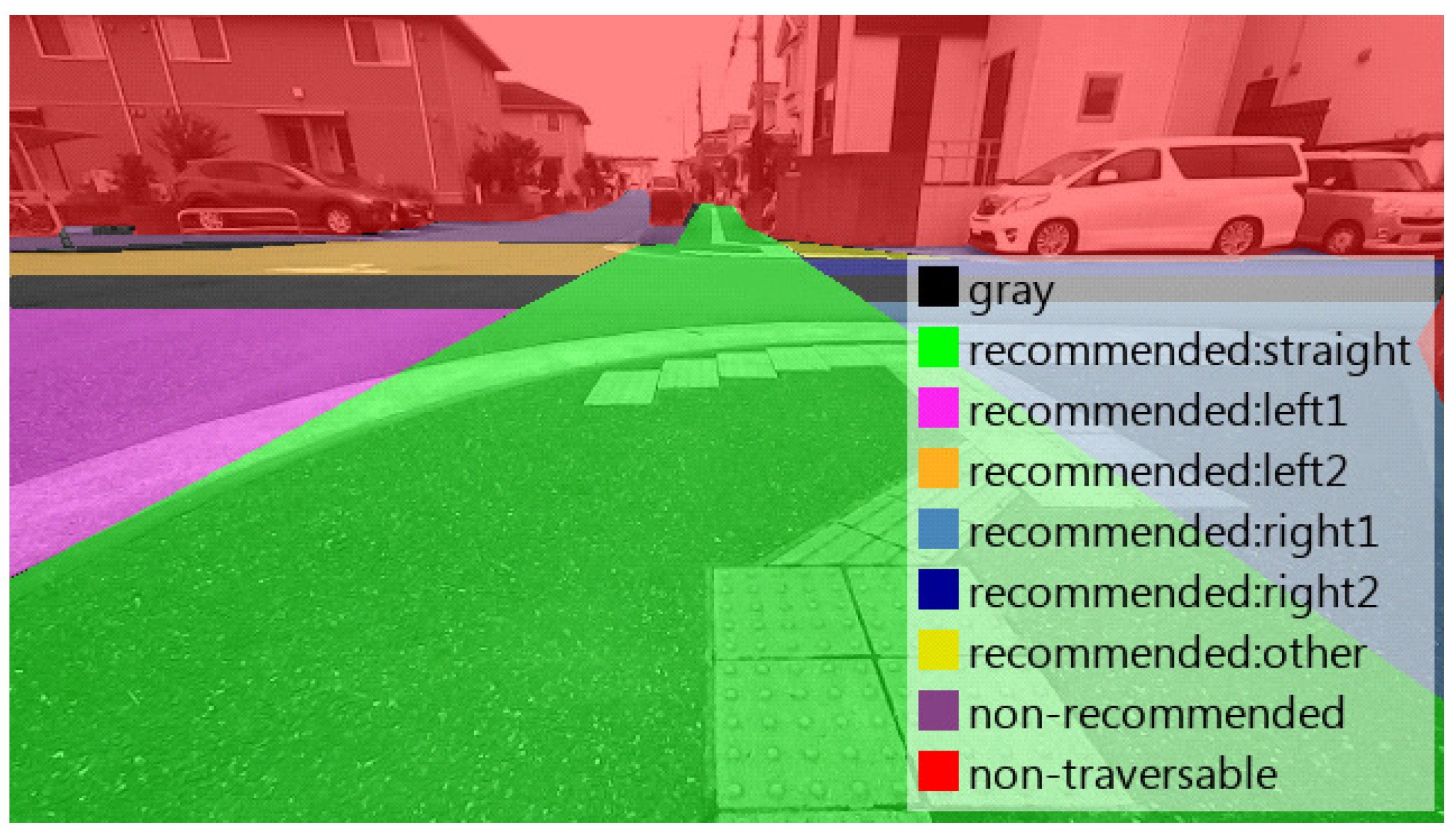

3.1. Automatic Image Labeling Method

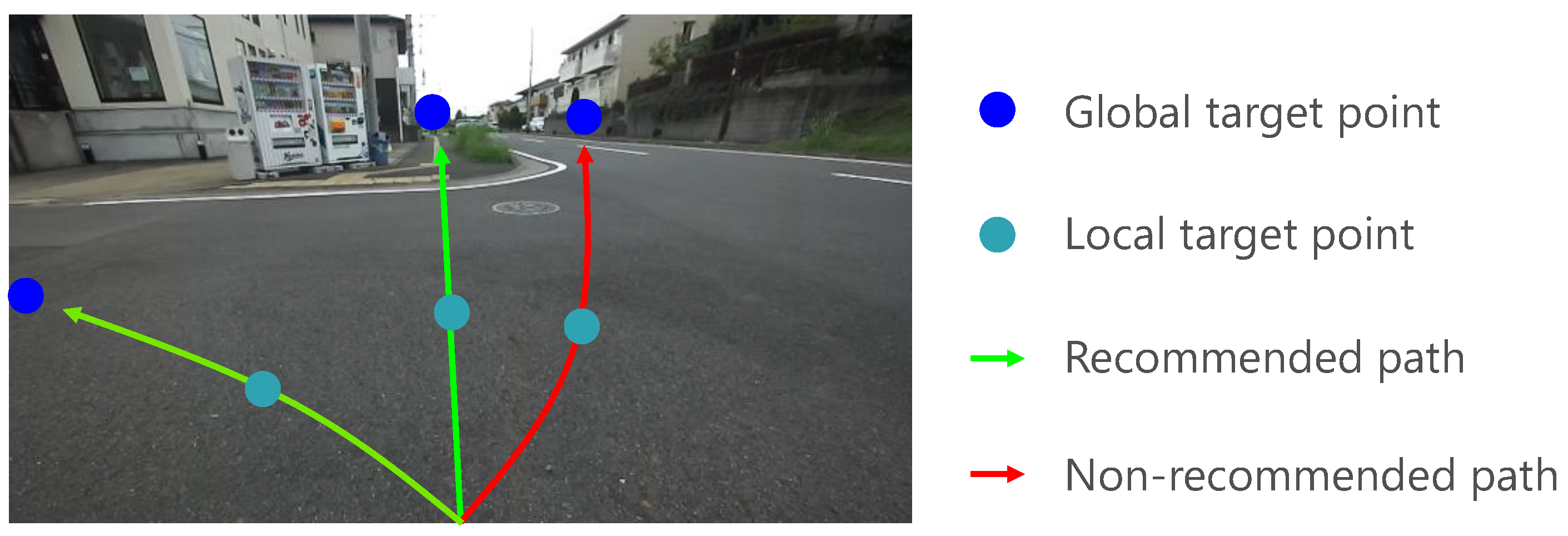

3.1.1. Automatic Recommended Area Labeling

3.1.2. Automatic Non-Traversable Area Labeling

3.1.3. Dataset for Training

- Roadway: The whole trajectory is on areas that are accessible to cars.

- Sidewalk: The whole trajectory is on areas that are inaccessible to cars.

- Roadway and sidewalk: Part of the trajectory is on the roadway and part is on the sidewalk.

- Crosswalk: Part of the trajectory is on the crosswalk.
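The four categories above can be read as a simple rule over the surfaces a trajectory passes through. The following is a minimal sketch under stated assumptions, not the procedure used to build the dataset: the `Surface` enum, the `categorize_trajectory` function, and the per-point surface tags are hypothetical, and the choice that a crosswalk takes precedence over a mixed roadway and sidewalk trajectory is an assumption made only to resolve the overlap between the last two categories.

```python
from enum import Enum


class Surface(Enum):
    """Hypothetical per-point surface tag for one trajectory point."""
    ROADWAY = "roadway"      # area accessible to cars
    SIDEWALK = "sidewalk"    # area inaccessible to cars
    CROSSWALK = "crosswalk"


def categorize_trajectory(surfaces):
    """Map the surface tags of one trajectory to one of the four categories."""
    present = set(surfaces)
    if not present:
        raise ValueError("trajectory has no points")
    # Assumption: a crosswalk anywhere on the trajectory takes precedence.
    if Surface.CROSSWALK in present:
        return "Crosswalk"
    if present == {Surface.ROADWAY}:
        return "Roadway"
    if present == {Surface.SIDEWALK}:
        return "Sidewalk"
    # Remaining case: both roadway and sidewalk points are present.
    return "Roadway and sidewalk"
```

For example, a trajectory tagged `[Surface.SIDEWALK, Surface.ROADWAY]` falls into "Roadway and sidewalk", while adding a single `Surface.CROSSWALK` point moves it to "Crosswalk" under the precedence assumed here.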

3.2. Learning Method for Recommended Traversable Area Detection

- Detect selectable driving directions as recommended areas

- Do not detect non-recommended driving areas as recommended areas

- Detect inaccessible directions as non-traversable areas

- Do not misclassify traversable areas as non-traversable areas

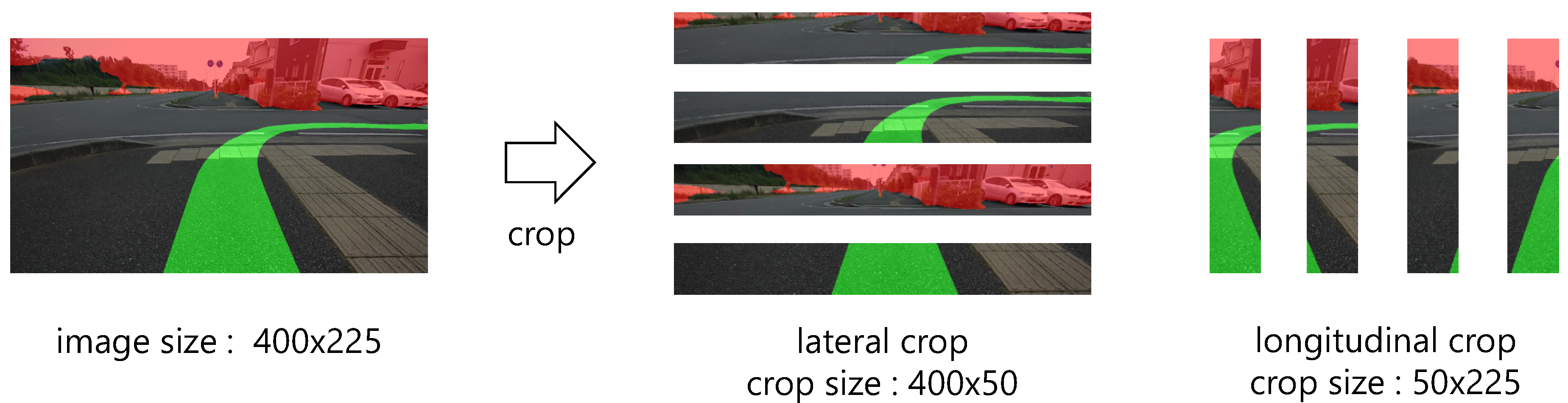

3.2.1. Data Augmentation

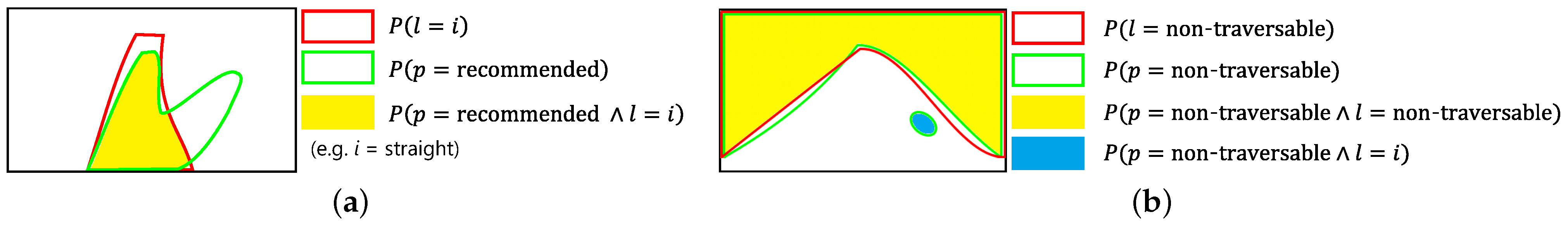

3.2.2. Loss Weighting

3.3. Evaluation Method

3.3.1. Evaluation Dataset

3.3.2. Evaluation Metrics
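The metric definitions themselves are not reproduced in this text, but the abbreviation list includes Intersection over Union (IoU). As a reminder of that standard quantity only, the sketch below computes the IoU of one class between a predicted and a reference label map. The function name `class_iou` and the integer class ids are hypothetical, and whether the paper's metrics are built on IoU in this form is an assumption.

```python
def class_iou(pred, target, cls):
    """IoU of class `cls` between two equally sized 2D label maps (lists of rows)."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            in_pred = p == cls
            in_target = t == cls
            intersection += in_pred and in_target  # pixel is in both maps
            union += in_pred or in_target          # pixel is in at least one map
    # IoU is undefined when the class is absent from both maps.
    return intersection / union if union else float("nan")


# Toy 2 x 3 label maps with hypothetical class ids 0, 1, 2.
pred = [[1, 1, 0],
        [0, 2, 2]]
target = [[1, 0, 0],
          [0, 2, 1]]
iou_class_1 = class_iou(pred, target, 1)  # 1 shared pixel out of 3 covered pixels
iou_class_2 = class_iou(pred, target, 2)  # 1 shared pixel out of 2 covered pixels
```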

4. Experimental Results and Discussion

4.1. Learning Conditions for Baseline

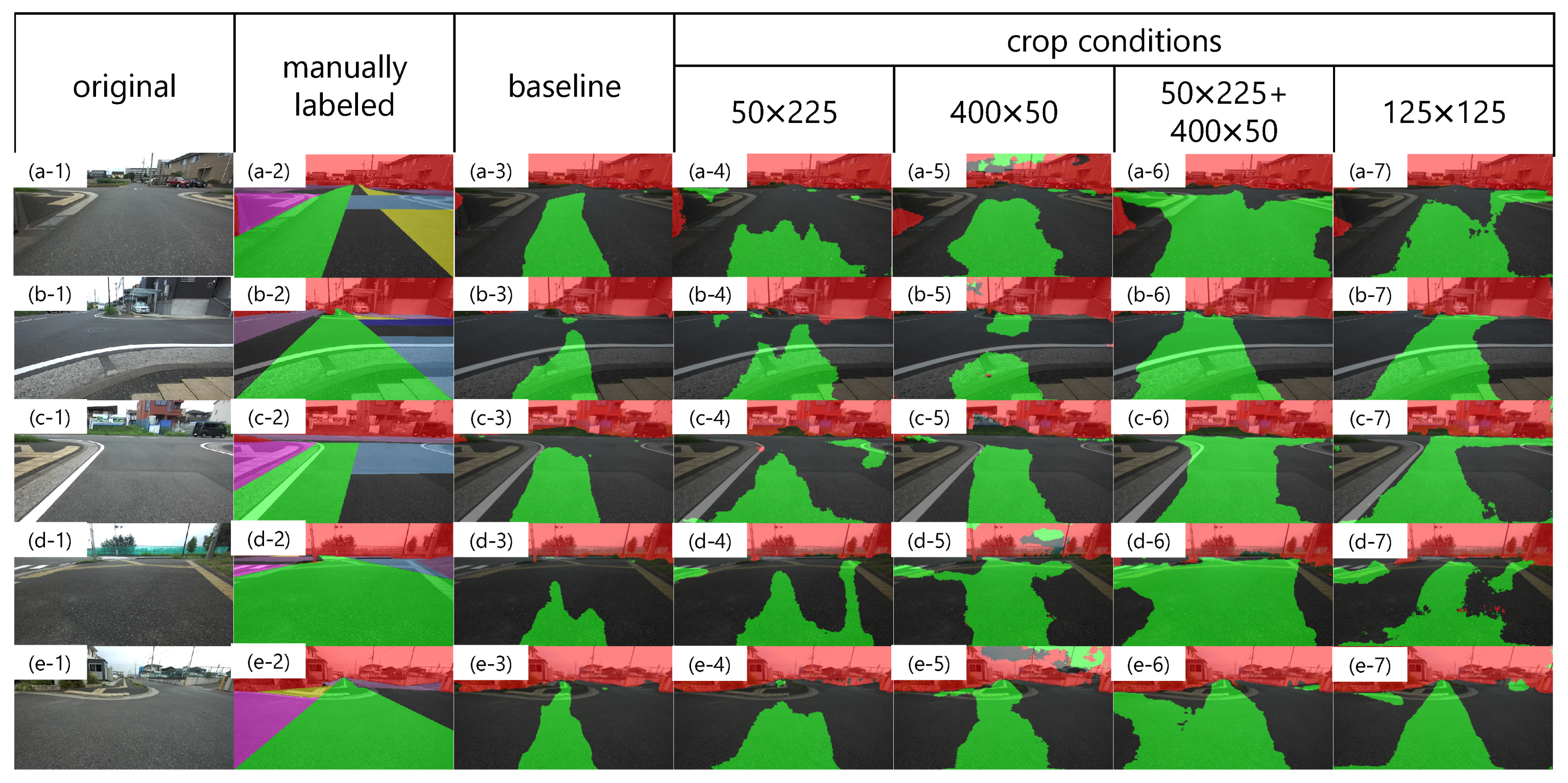

4.2. Experiments for Characterization by Cropping

4.2.1. Results

4.2.2. Discussion

4.3. Experiments for Characterization by Loss Weighting

- Recommended areas and unclassified areas are often adjacent to each other.

- Unclassified areas and non-traversable areas are often adjacent to each other.

- Recommended areas and non-traversable areas are rarely adjacent to each other.
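The adjacency pattern described above can be checked on a label map by counting how often two different classes meet across a pixel border. The sketch below is illustrative only: the function `adjacency_counts`, the class name strings, and the toy label map are hypothetical and are not taken from the dataset.

```python
from collections import Counter


def adjacency_counts(label_map):
    """Count 4-neighbor pixel pairs whose two labels differ, per unordered class pair."""
    counts = Counter()
    for y, row in enumerate(label_map):
        for x, label in enumerate(row):
            # Look only right and down so that each neighboring pair is visited once.
            neighbors = []
            if x + 1 < len(row):
                neighbors.append(row[x + 1])
            if y + 1 < len(label_map):
                neighbors.append(label_map[y + 1][x])
            for other in neighbors:
                if other != label:
                    counts[frozenset((label, other))] += 1
    return counts


R, U, N = "recommended", "unclassified", "non-traversable"
# Toy layout: a recommended strip, flanked by unclassified and then non-traversable pixels.
toy_map = [
    [N, U, R, U, N],
    [N, U, R, U, N],
]
toy_counts = adjacency_counts(toy_map)
```

In this toy layout the recommended and non-traversable classes never touch, while each of them borders the unclassified class four times, which mirrors the three observations listed above.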

4.3.1. Results

4.3.2. Discussion

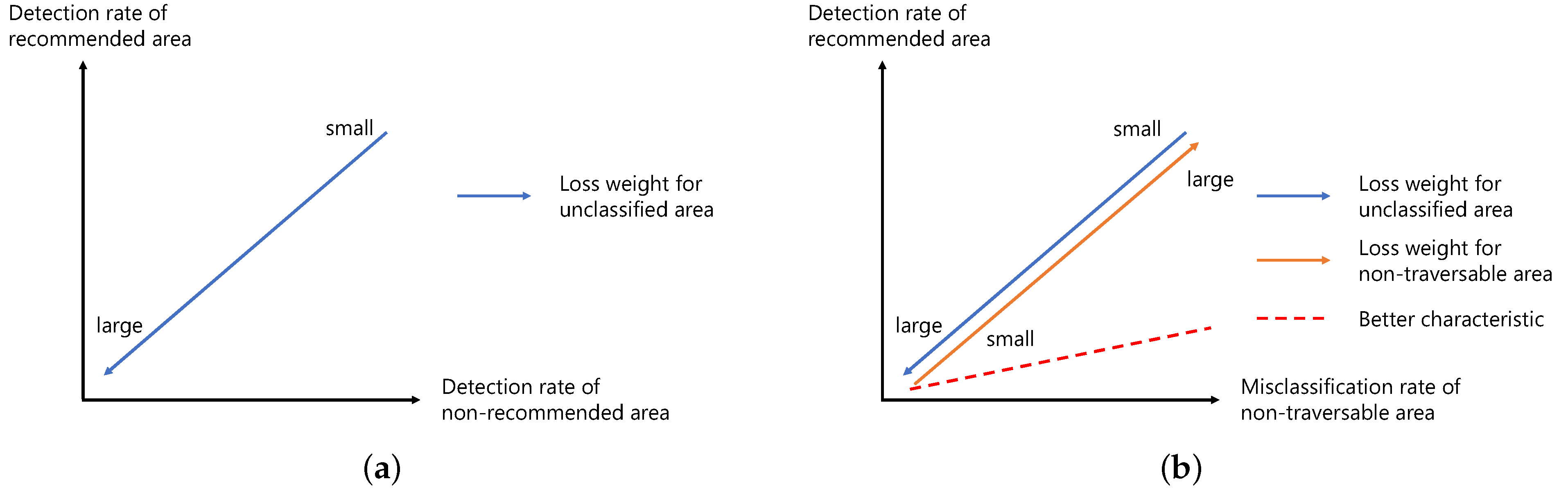

- The loss weight for the unclassified area is first set based on the trade-off between the detection rate of recommended and non-recommended areas.

- The loss weight for the non-traversable area is then adjusted based on the trade-off between the detection rate of recommended areas and the misclassification rate of non-traversable areas.
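To make the role of the two loss weights concrete, the following sketch shows a class-weighted cross-entropy over three classes. It is a minimal illustration under stated assumptions: the exact loss used in the paper is not given in this text, so the weighted-mean form, the function name `weighted_cross_entropy`, the class name strings, and the numeric weights are all hypothetical.

```python
import math


def weighted_cross_entropy(probs, labels, class_weights):
    """Weighted mean of per-pixel negative log-likelihoods.

    probs: one dict per pixel, mapping class name to predicted probability.
    labels: one ground-truth class name per pixel.
    class_weights: dict mapping class name to its loss weight.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for pixel_probs, label in zip(probs, labels):
        weight = class_weights[label]
        weighted_sum += -weight * math.log(pixel_probs[label])
        weight_total += weight
    if weight_total == 0.0:
        raise ValueError("all pixels have zero weight")
    # Assumption: normalize by the summed weights rather than the pixel count.
    return weighted_sum / weight_total


# Two toy pixels; the probabilities and weights are illustrative values only.
probs = [
    {"recommended": 0.5, "unclassified": 0.3, "non_traversable": 0.2},
    {"recommended": 0.5, "unclassified": 0.25, "non_traversable": 0.25},
]
labels = ["recommended", "unclassified"]
uniform = {"recommended": 1.0, "unclassified": 1.0, "non_traversable": 1.0}
reduced = {"recommended": 1.0, "unclassified": 0.5, "non_traversable": 1.0}
loss_uniform = weighted_cross_entropy(probs, labels, uniform)
loss_reduced = weighted_cross_entropy(probs, labels, reduced)
```

Lowering the weight of the unclassified class reduces the contribution of the second pixel's error to the total, which is the kind of lever the two-step procedure above adjusts, first for the unclassified area and then for the non-traversable area.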

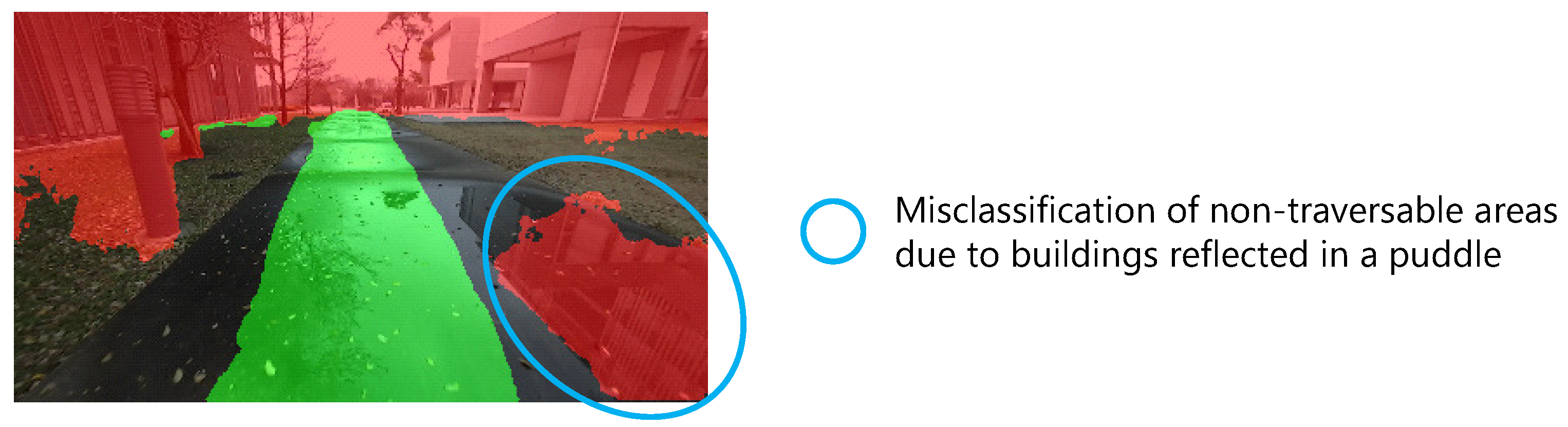

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LRF | Laser Range Finder |
| IoU | Intersection over Union |

References

- Pendleton, S.D.; Andersen, H.; Shen, X.; Eng, Y.H.; Zhang, C.; Kong, H.X.; Leong, W.K.; Ang, M.H.; Rus, D. Multi-class autonomous vehicles for mobility-on-demand service. In Proceedings of the 2016 IEEE/SICE International Symposium on System Integration (SII), Sapporo, Japan, 13–15 December 2016; pp. 204–211.

- Fu, X.; Vernier, M.; Kurt, A.; Redmill, K.; Ozguner, U. Smooth: Improved Short-Distance Mobility for a Smarter City. In Proceedings of the 2nd International Workshop on Science of Smart City Operations and Platforms Engineering (SCOPE), Pittsburgh, PA, USA, 21 April 2017; pp. 46–51.

- Miyamoto, R.; Nakamura, Y.; Adachi, M.; Nakajima, T.; Ishida, H.; Kojima, K.; Aoki, R.; Oki, T.; Kobayashi, S. Vision-Based Road-Following Using Results of Semantic Segmentation for Autonomous Navigation. In Proceedings of the 2019 IEEE 9th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 8–11 September 2019; pp. 174–179.

- Bao, J.; Yao, X.; Tang, H.; Song, A. Outdoor Navigation of a Mobile Robot by Following GPS Waypoints and Local Pedestrian Lane. In Proceedings of the 2018 IEEE 8th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Tianjin, China, 19–23 July 2018; pp. 198–203.

- Watanabe, A.; Bando, S.; Shinada, K.; Yuta, S. Road following based navigation in park and pedestrian street by detecting orientation and finding intersection. In Proceedings of the 2011 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 7–10 August 2011; pp. 1763–1767.

- Ort, T.; Paull, L.; Rus, D. Autonomous Vehicle Navigation in Rural Environments Without Detailed Prior Maps. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2040–2047.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Barnes, D.; Maddern, W.; Posner, I. Find your own way: Weakly-supervised segmentation of path proposals for urban autonomy. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 203–210.

- Deng, F.; Zhu, X.; He, C. Vision-Based Real-Time Traversable Region Detection for Mobile Robot in the Outdoors. Sensors 2017, 17, 2101.

- Lu, K.; Li, J.; An, X.; He, H. Vision Sensor-Based Road Detection for Field Robot Navigation. Sensors 2015, 15, 29594–29617.

- Meyer, A.; Salscheider, N.O.; Orzechowski, P.F.; Stiller, C. Deep Semantic Lane Segmentation for Mapless Driving. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 869–875.

- Mayr, J.; Unger, C.; Tombari, F. Self-Supervised Learning of the Drivable Area for Autonomous Vehicles. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 362–369.

- Wang, H.; Sun, Y.; Liu, M. Self-Supervised Drivable Area and Road Anomaly Segmentation Using RGB-D Data For Robotic Wheelchairs. IEEE Robot. Autom. Lett. 2019, 4, 4386–4393.

- Gao, B.; Xu, A.; Pan, Y.; Zhao, X.; Yao, W.; Zhao, H. Off-Road Drivable Area Extraction Using 3D LiDAR Data. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1505–1511.

- Tang, L.; Ding, X.; Yin, H.; Wang, Y.; Xiong, R. From one to many: Unsupervised traversable area segmentation in off-road environment. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 1–6.

- Mur-Artal, R.; Tardos, J. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Onozuka, Y.; Matsumi, R.; Shino, M. Automatic Image Labeling Method for Semantic Segmentation toward Autonomous Driving in Pedestrian Environment. In Proceedings of the Forum on Information Technology 2020, Hokkaido, Japan, 1–3 September 2020; pp. 151–154. (In Japanese).

- Zhou, W.; Worrall, S.; Zyner, A.; Nebot, E. Automated Process for Incorporating driving Path into Real-Time Semantic Segmentation. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1–6.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Neural Information Processing Systems 2019 (NIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

| Conditions | | Value |
|---|---|---|
| learning rate (lr) | base_lr [20] | 0.01 |
| | power [20] | 0.9 |
| | decoder_lr/encoder_lr | 0.1 |
| optimizer | momentum | 0.9 |
| | weight decay | 0.0001 |
| data loader process | image size | 1024 × 512 |
| | batch size | 2 |
| | max epoch | 200 |
| GPU (NVIDIA GeForce RTX 2060) | memory size (GB) | 6 |
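The `base_lr` and `power` entries in the learning conditions cite [20], which uses a "poly" learning-rate policy of the form base_lr × (1 − step / max_steps)^power. The sketch below evaluates that schedule with the tabulated values. The function name `poly_lr` is hypothetical, and stepping once per epoch with `max_steps` set to the tabulated max epoch is an assumption, since the text does not state whether the rate is updated per iteration or per epoch.

```python
def poly_lr(base_lr, step, max_steps, power):
    """Poly decay: base_lr * (1 - step / max_steps) ** power."""
    if not 0 <= step <= max_steps:
        raise ValueError("step must lie in [0, max_steps]")
    return base_lr * (1.0 - step / max_steps) ** power


BASE_LR = 0.01    # base_lr from the learning conditions
POWER = 0.9       # power from the learning conditions
MAX_EPOCH = 200   # max epoch from the learning conditions (assumed step unit)

lr_start = poly_lr(BASE_LR, 0, MAX_EPOCH, POWER)    # equals BASE_LR
lr_middle = poly_lr(BASE_LR, 100, MAX_EPOCH, POWER)
lr_end = poly_lr(BASE_LR, MAX_EPOCH, MAX_EPOCH, POWER)  # decays to zero
```

With a power below 1, the rate falls slowly at first and more steeply near the end of training, so roughly half of the initial rate is still in use at the midpoint.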

| | Lateral Displacement (ld) | Roadway | Sidewalk | Roadway and Sidewalk | Crosswalk |
|---|---|---|---|---|---|
| training data | ld < 6 | 11,210 (500) | 9144 (500) | 1116 (300) | 926 (300) |
| | ld ≥ 6 | 994 (300) | 938 (300) | 838 (500) | 1034 (500) |
| validation data | ld < 6 | 7466 (50) | 1150 (50) | 604 (50) | 150 (50) |
| | ld ≥ 6 | 306 (50) | 72 (50) | 298 (50) | 184 (50) |

| Class | Definition |
|---|---|
| recommended | |
| non-recommended | |
| gray | |

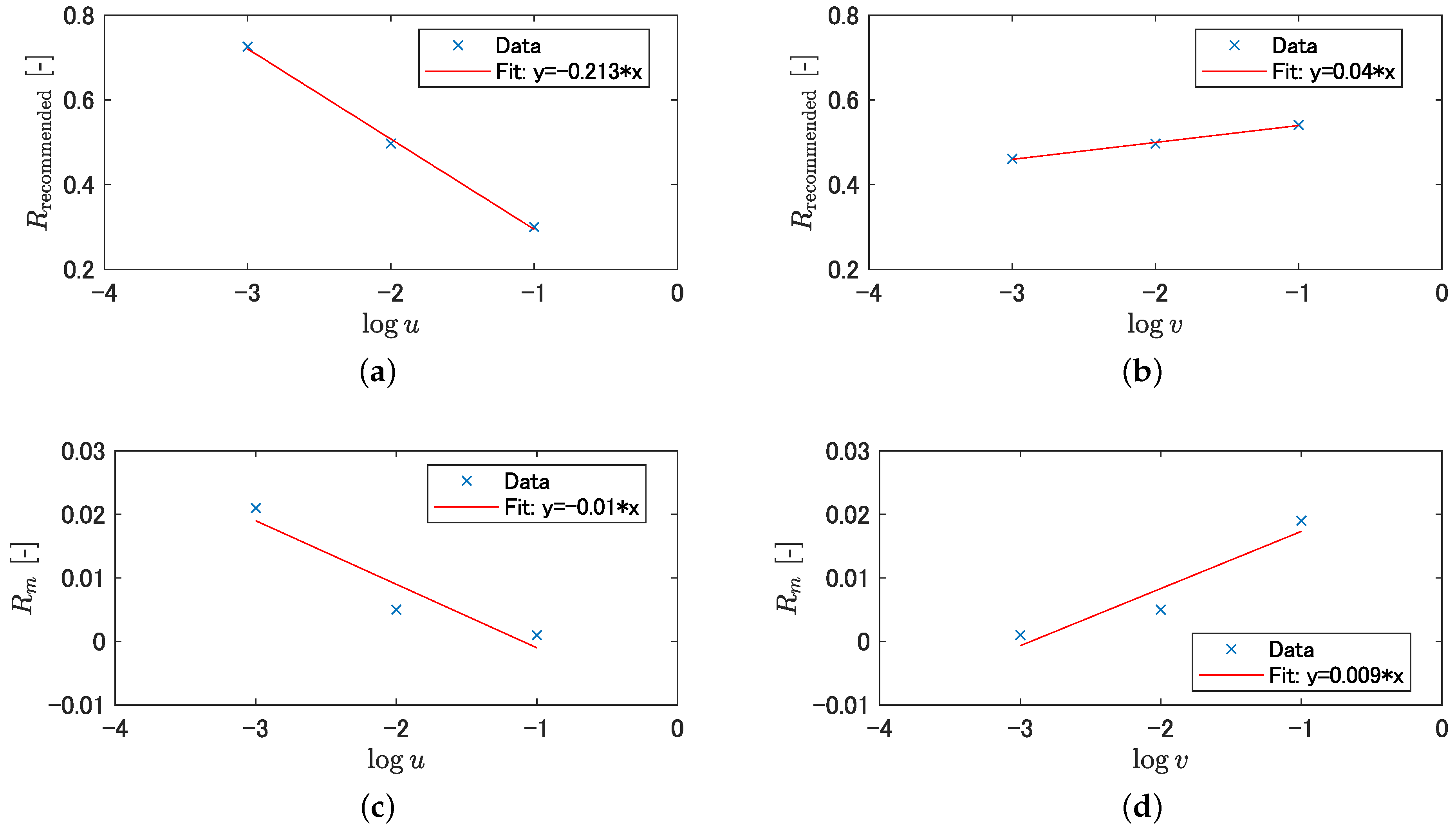

| | | |
|---|---|---|
| −0.213 | −0.01 | 21.3 |
| 0.04 | 0.009 | 4.44 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Onozuka, Y.; Matsumi, R.; Shino, M. Weakly-Supervised Recommended Traversable Area Segmentation Using Automatically Labeled Images for Autonomous Driving in Pedestrian Environment with No Edges. Sensors 2021, 21, 437. https://doi.org/10.3390/s21020437
