Image-Based Automatic Watermeter Reading under Challenging Environments

Abstract

1. Introduction

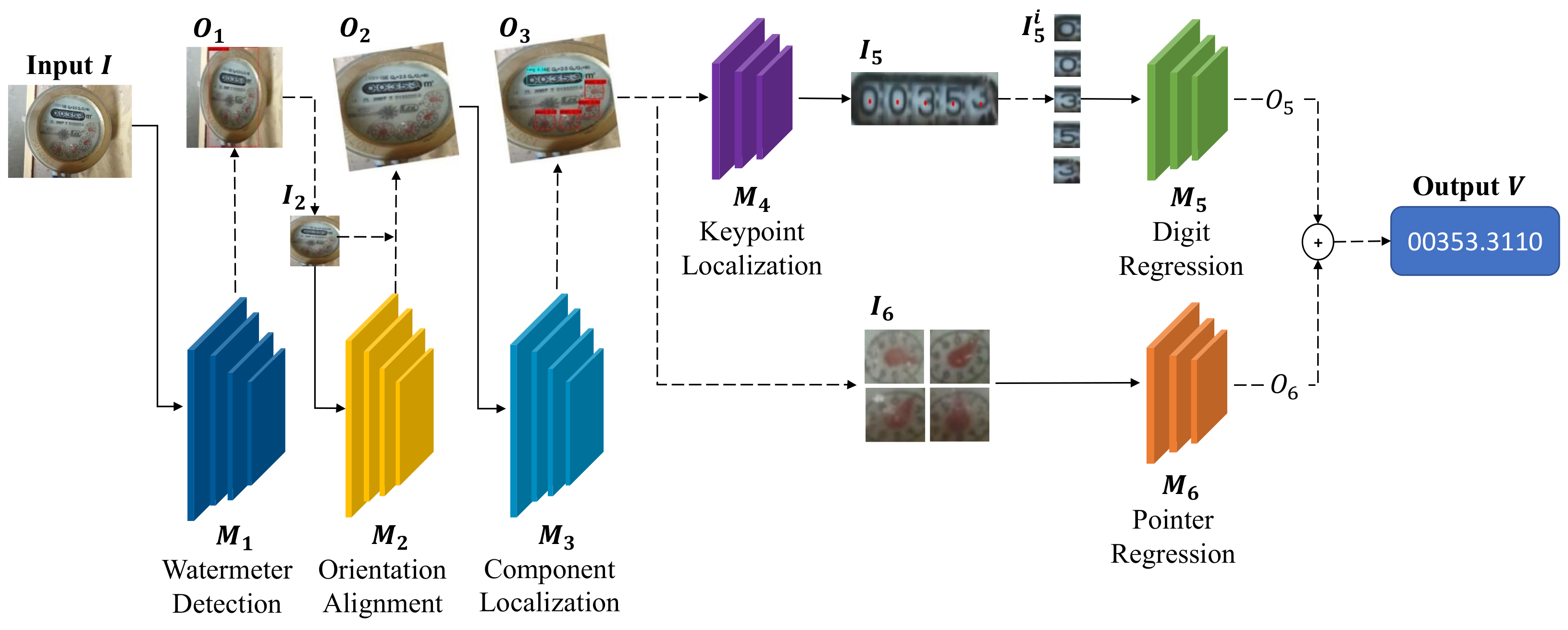

- We propose a robust end-to-end system based on convolutional neural networks for automatic reading of structured watermeter instruments. Our method tailors and combines the latest object detection, feature point location, and novel angle regression techniques.

- We design an orientation alignment algorithm for image correction and propose a spatial layout guidance algorithm to locate digits.

- We carry out a comprehensive experimental analysis that shows that our method effectively meets the challenges of various environmental factors and achieve reliable meter reading performance.

- We build a large-scale watermeter dataset including 9500 training images and 500 test images. To the best of our knowledge, this is the largest watermeter dataset with images taken under different challenging environments. This dataset can further improve the robustness of our automatic readings.

2. Related Work

2.1. Automatic Meter Reading

2.2. Object Detection

2.3. Text Detection

3. Proposed Method

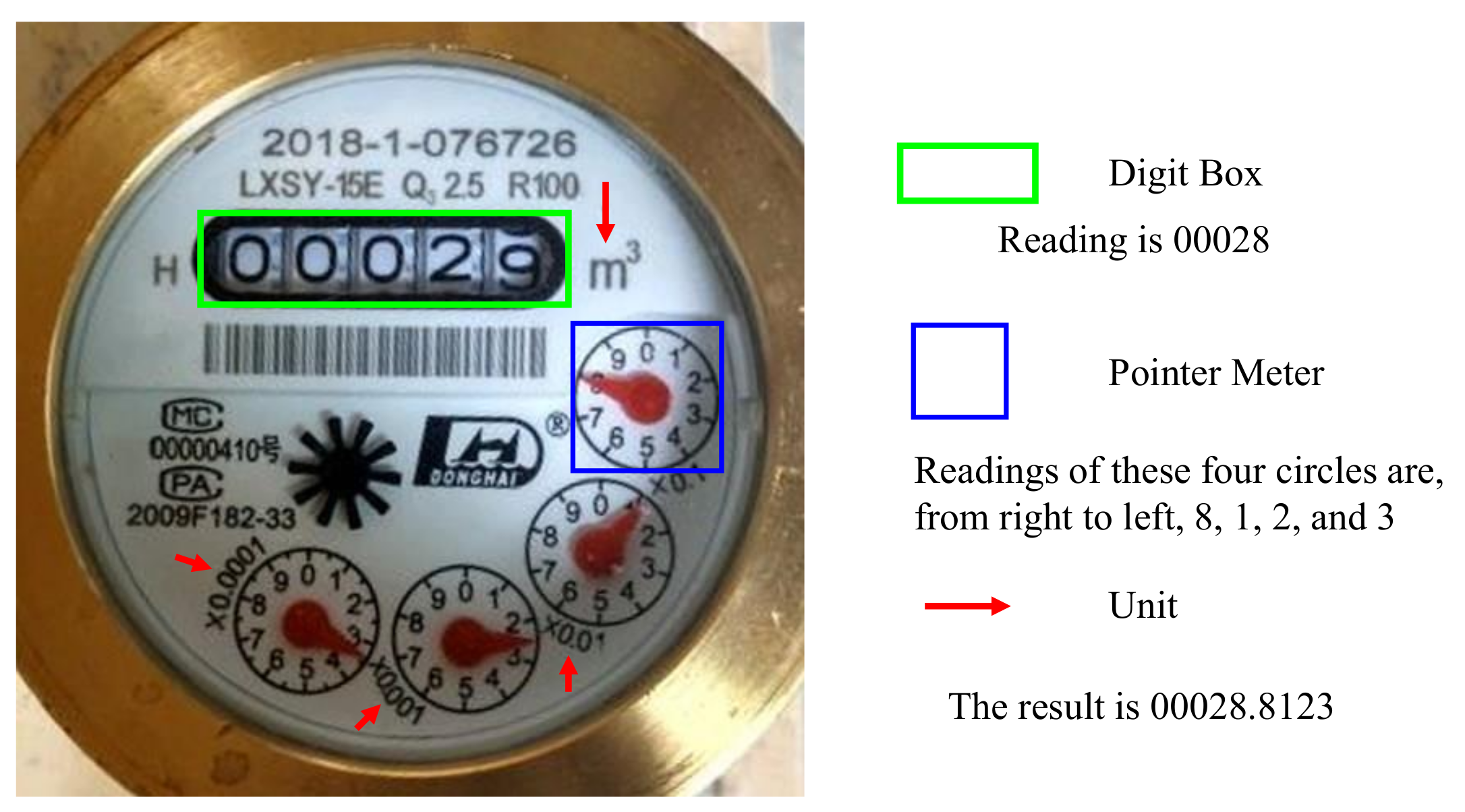

3.1. Reading Rule of Mechanical Watermeters

3.2. Overview

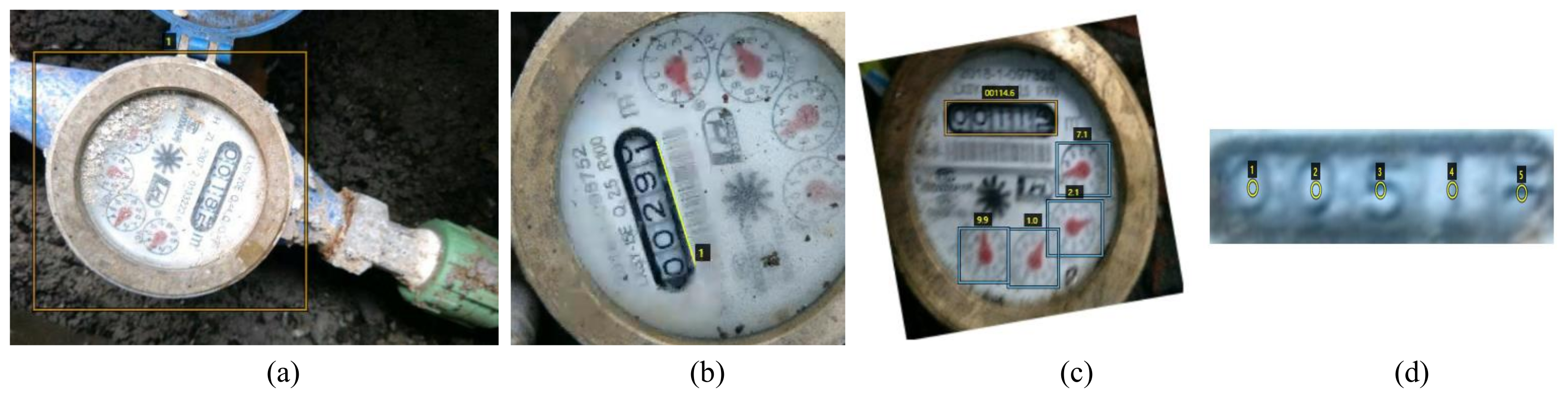

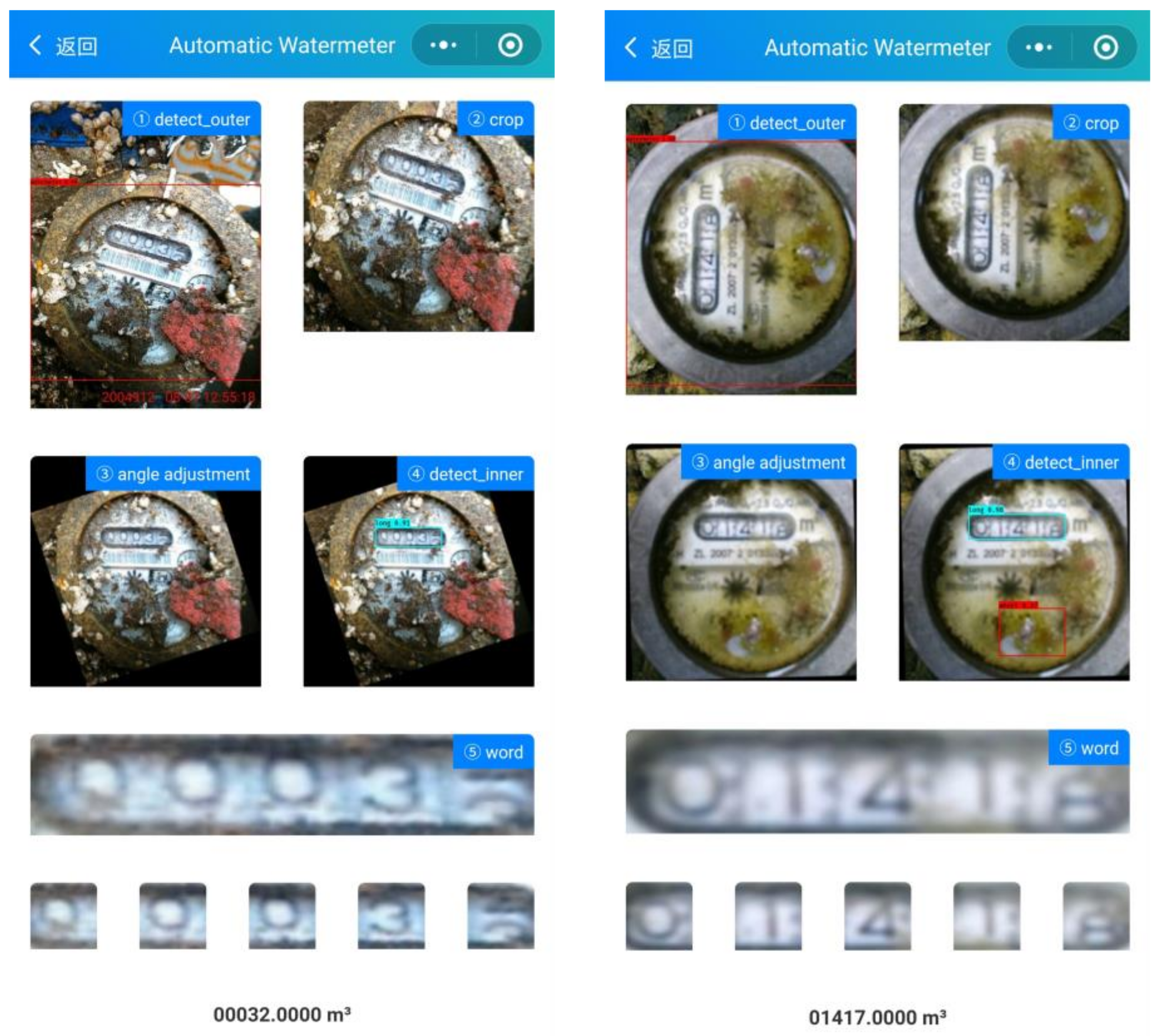

3.3. Watermeter Detection and Rotation Corrected Component Localization

3.3.1. Watermeter Detection

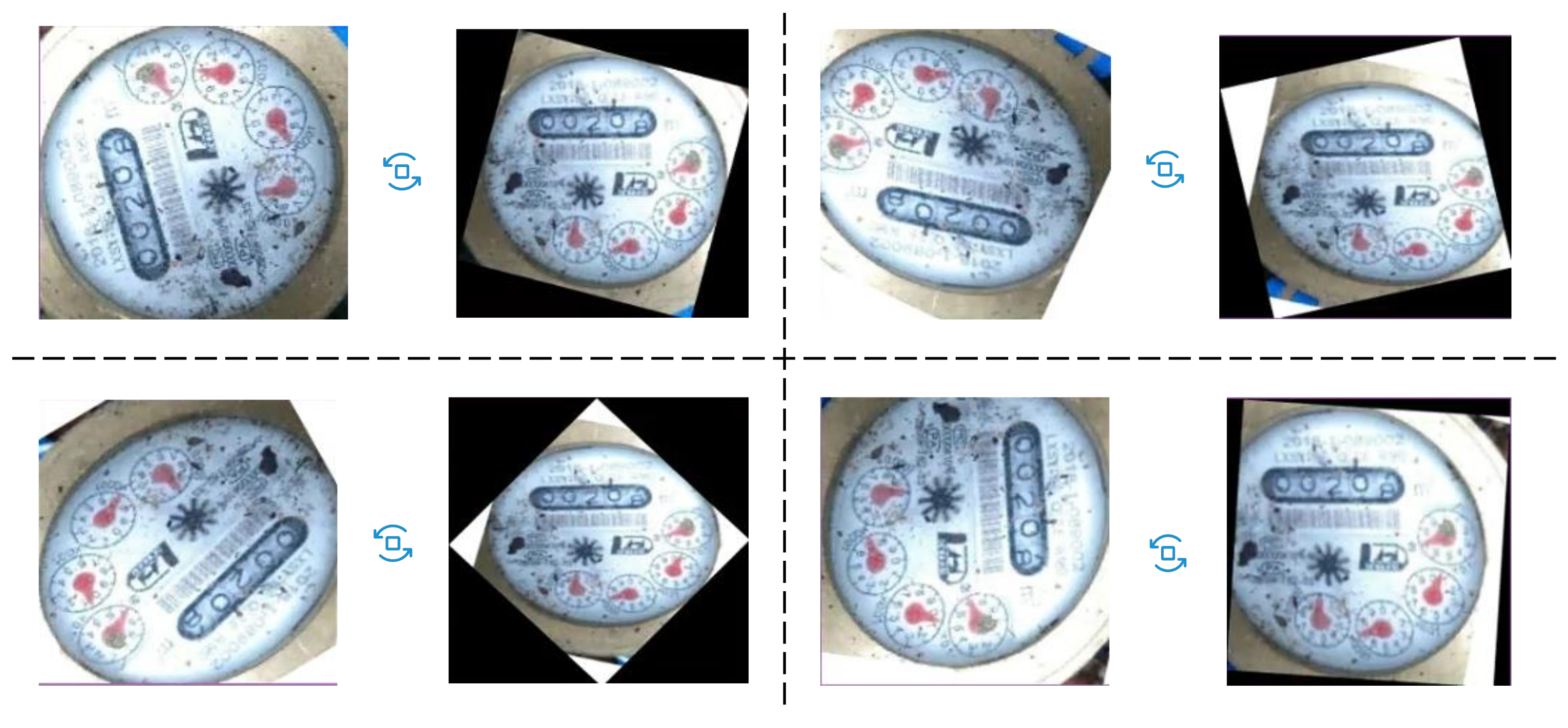

3.3.2. Orientation Alignment

3.3.3. Component Localization

3.4. Regression-Based Digit Reading with Spatial Layout Guidance

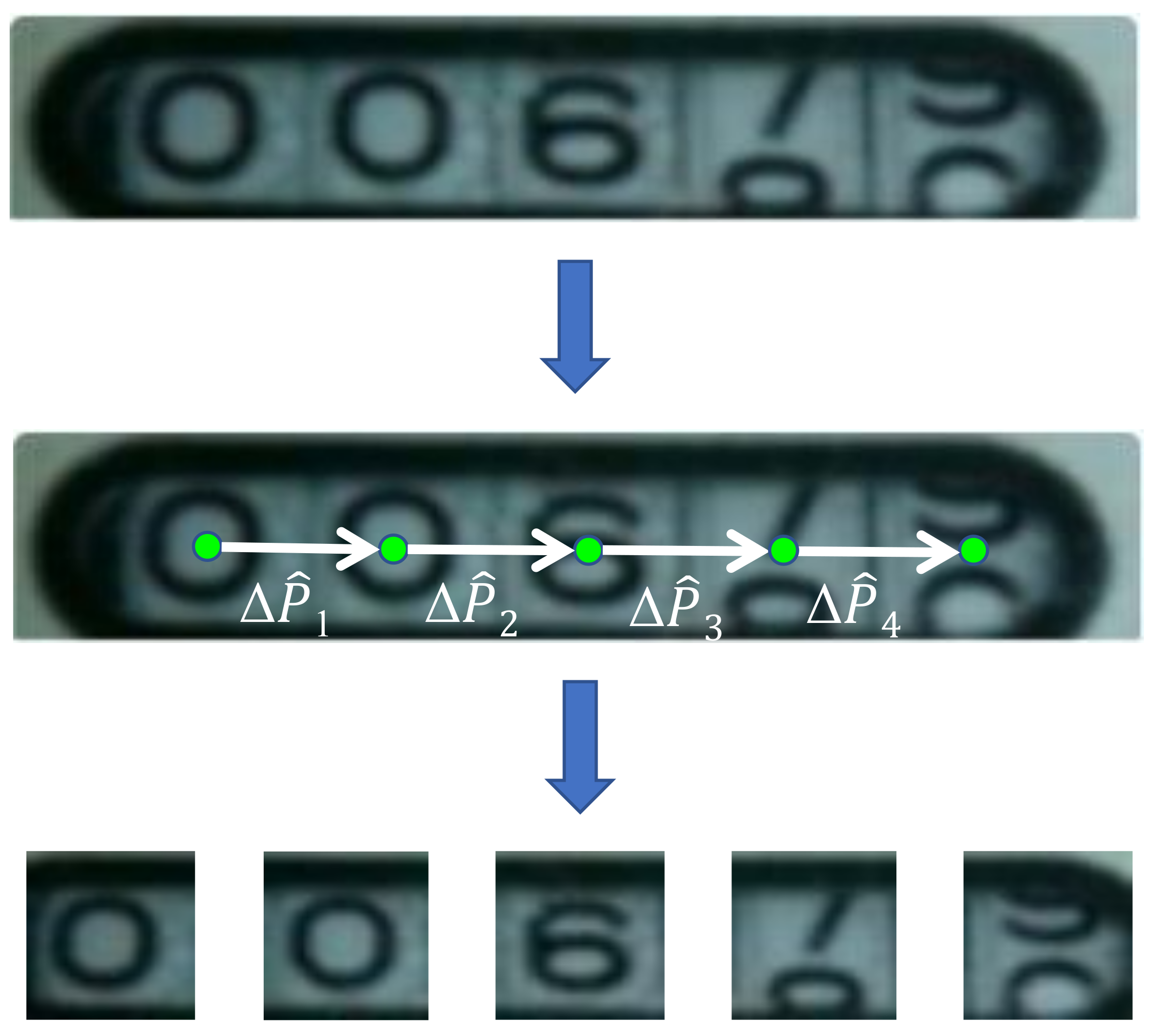

3.4.1. Spatial Layout Guidance for Digit Localization

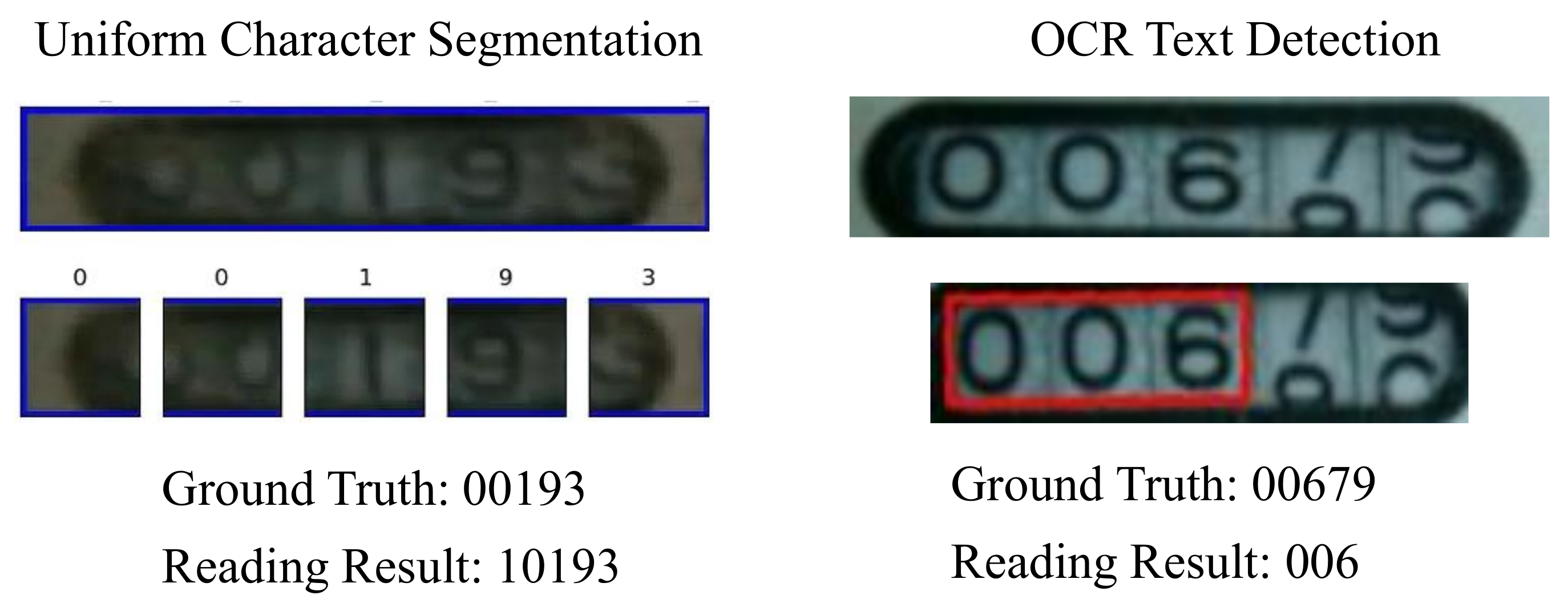

- Given the detected digital region, we uniformly separate each digit and then predict the value for each digit using regression.

- Directly leverage an off-the-shelf OCR module to recognize the digits.

3.4.2. Digit Reading

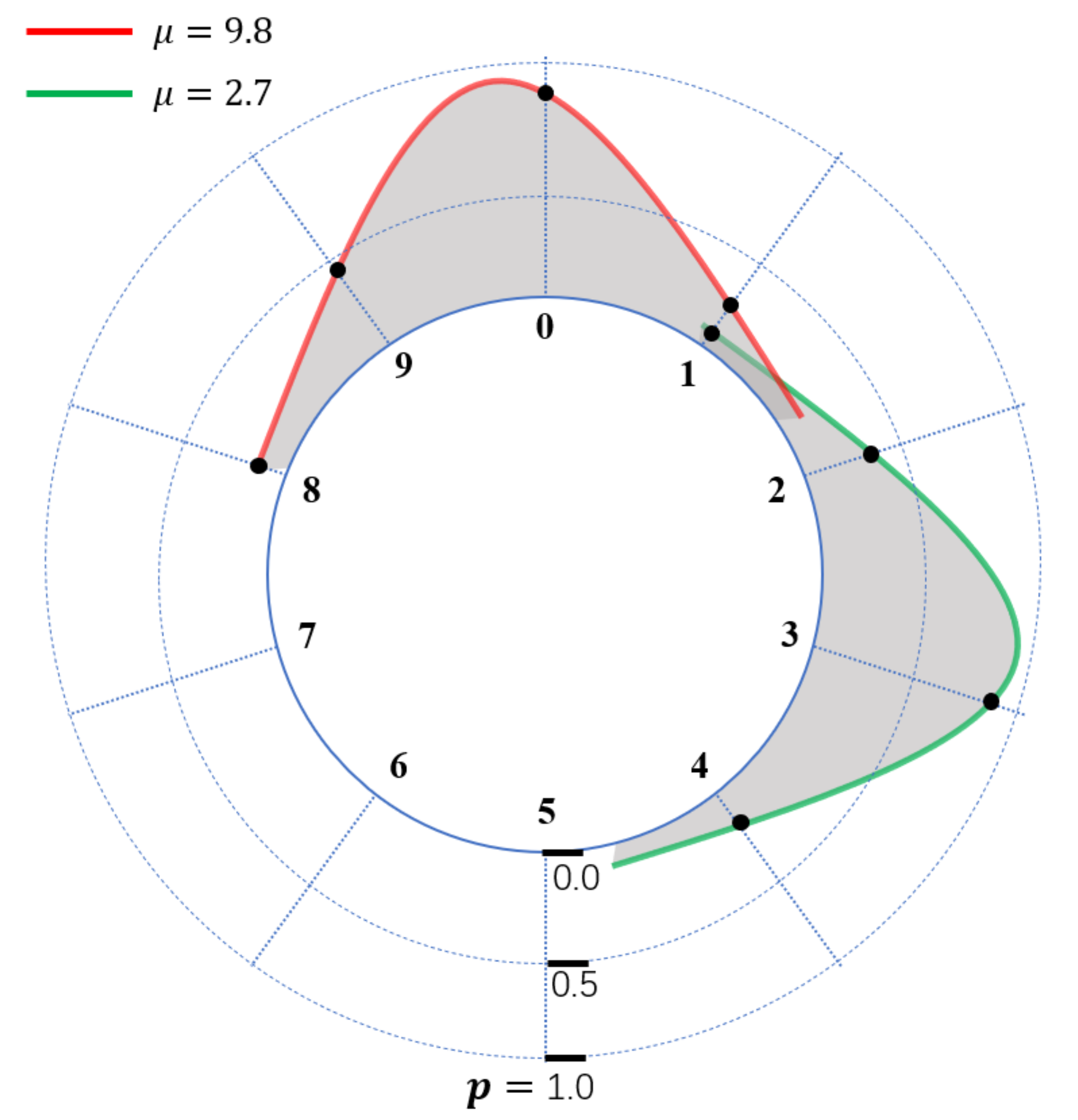

3.5. Regression-Based Pointer Reading

4. Experiments

4.1. Experiment Setup

4.1.1. Data Preparation

4.1.2. Implementation Details

4.1.3. Evaluation Metrics

4.2. Performance Evaluation for Key Modules

4.2.1. Orientation Alignment

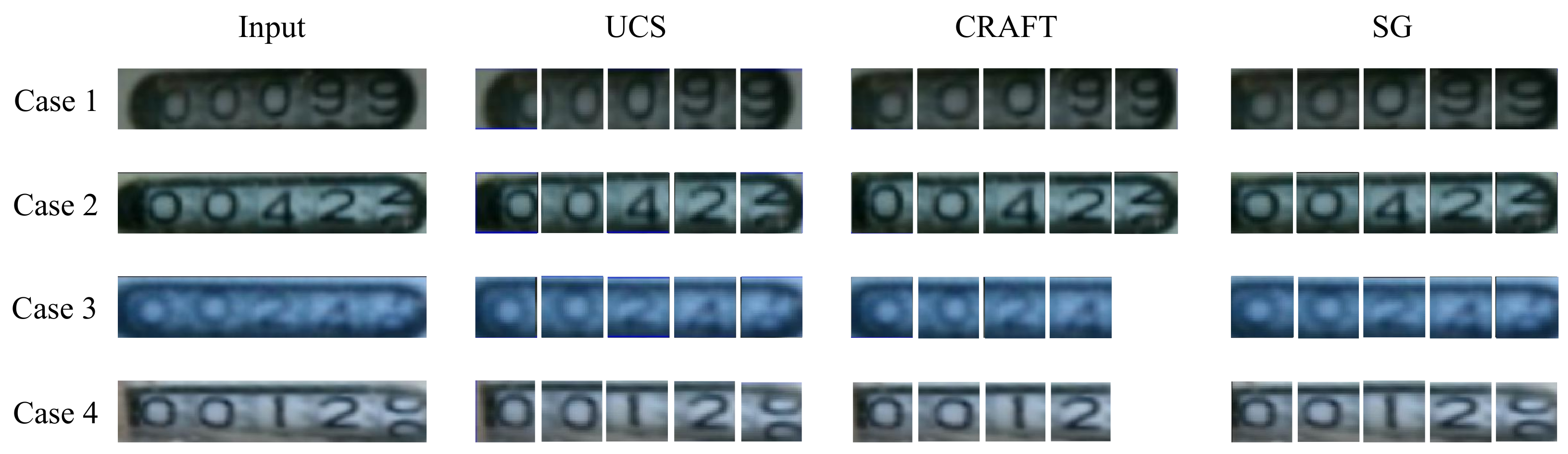

4.2.2. Spatial Layout Guidance for Digit Localization

4.3. Ablation Studies

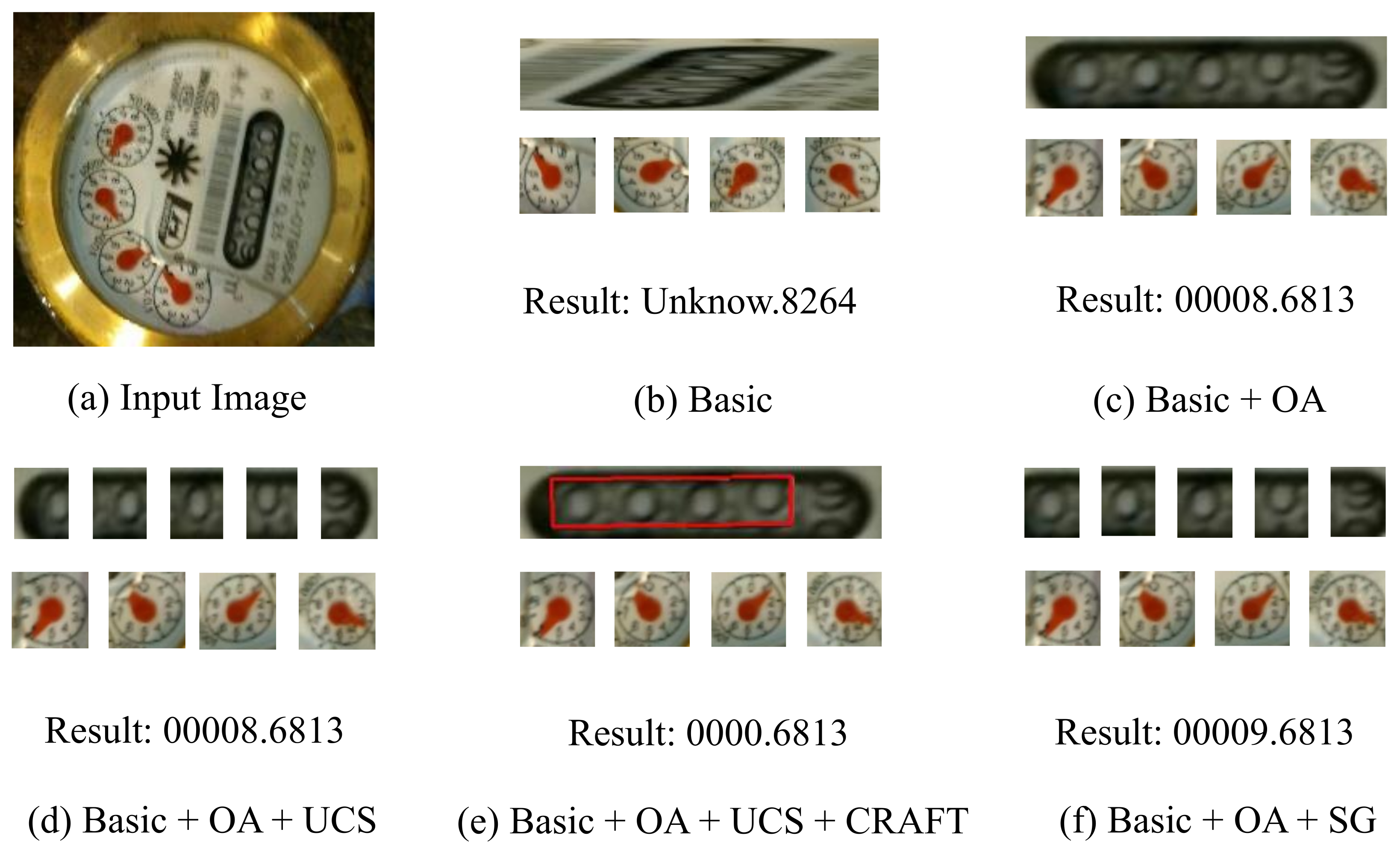

4.3.1. Effectiveness of Orientation Alignment

4.3.2. Effectiveness of Spatial Guidance

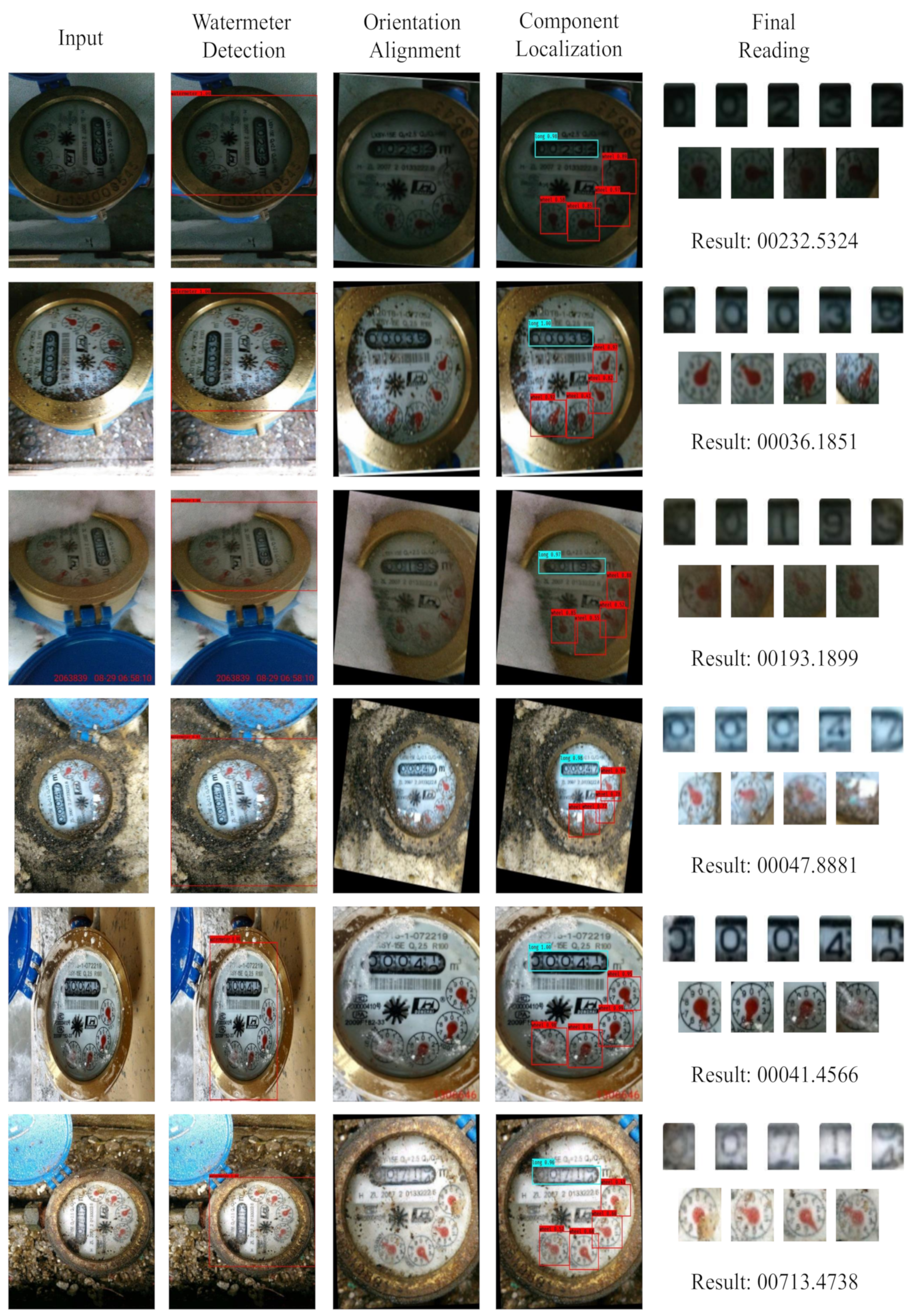

4.4. System Performance

4.4.1. Robustness to Challenging Environments

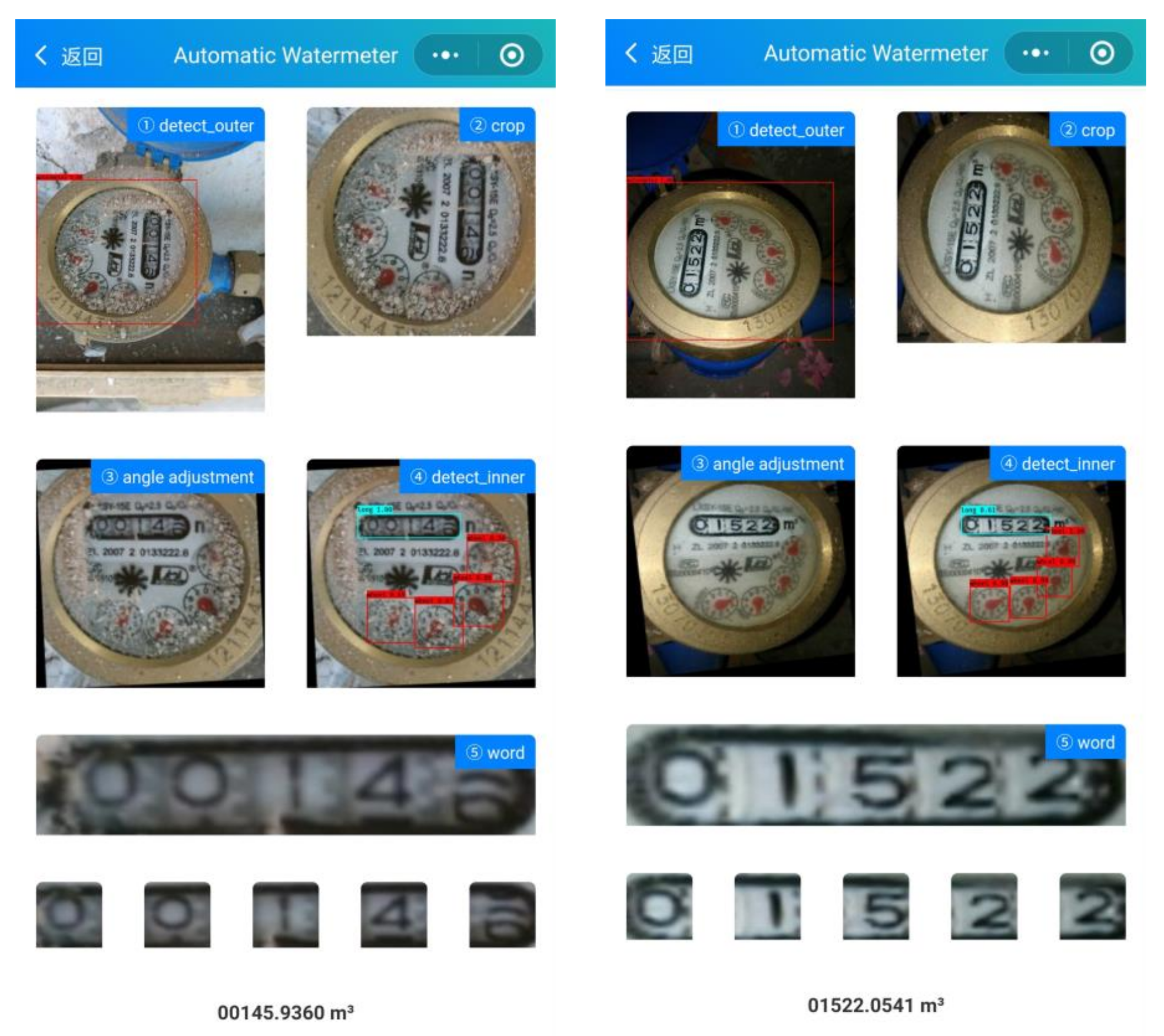

4.4.2. System Deployment

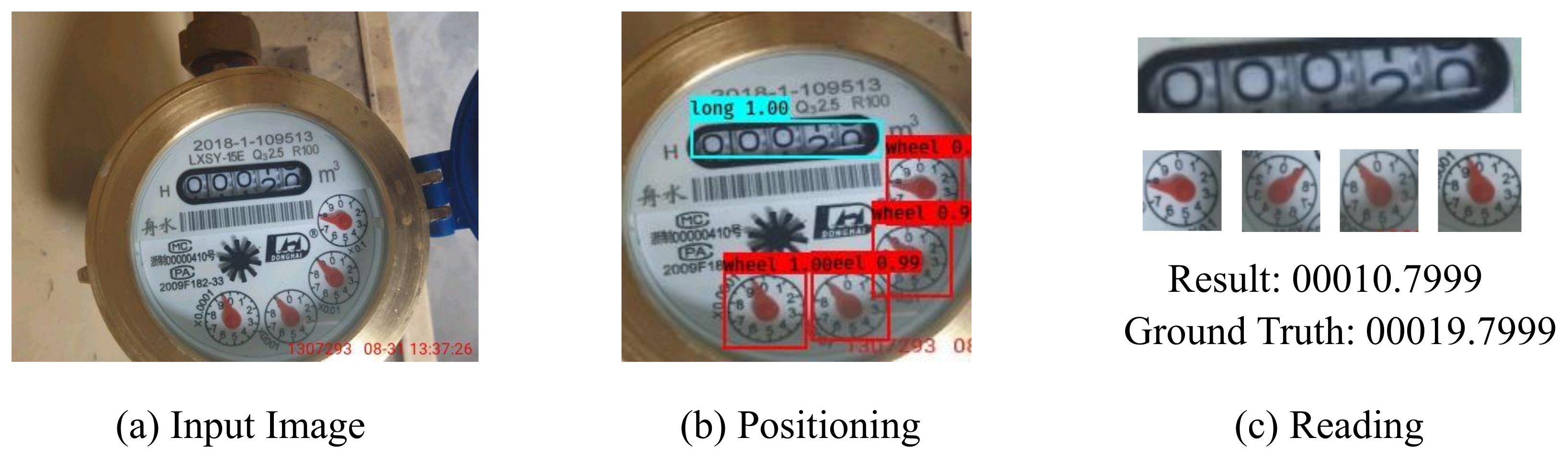

4.4.3. Failure Case

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Goldberg, B.G.; Messinger, G. Remote Meter Reader Using a Network Sensor System and Protocol. U.S. Patent 8,144,027, 27 March 2012. [Google Scholar]

- Jiale, H.; En, L.; Bingjie, T.; Ming, L. Reading recognition method of analog measuring instruments based on improved hough transform. In Proceedings of the IEEE 2011 10th International Conference on Electronic Measurement & Instruments, Chengdu, China, 16–19 August 2011; IEEE: Piscataway, NJ, USA, 2011; Volume 3, pp. 337–340. [Google Scholar]

- Wang, J.; Huang, J.; Cheng, R. Automatic Reading System for Analog Instruments Based on Computer Vision and Inspection Robot for Power Plant. In Proceedings of the 2018 10th International Conference on Modelling, Identification and Control (ICMIC), Guiyang, China, 2–4 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6. [Google Scholar]

- Zheng, W.; Yin, H.; Wang, A.; Fu, P.; Liu, B. Development of an automatic reading method and software for pointer instruments. In Proceedings of the 2017 First International Conference on Electronics Instrumentation & Information Systems (EIIS), Harbin, China, 3–5 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Gastouniotis, C.S.; Bandeira, N.; Wilson, K.C. Automated Remote Water Meter Readout System. U.S. Patent 4,940,976, 10 July 1990. [Google Scholar]

- Mudumbe, M.J.; Abu-Mahfouz, A.M. Smart water meter system for user-centric consumption measurement. In Proceedings of the 2015 IEEE 13th International Conference on Industrial Informatics (INDIN), Cambridge, UK, 22–24 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 993–998. [Google Scholar]

- Li, X.J.; Chong, P.H.J. Design and Implementation of a Self-Powered Smart Water Meter. Sensors 2019, 19, 4177. [Google Scholar] [CrossRef] [PubMed]

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef] [PubMed]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Cohen, G.; Afshar, S.; Tapson, J.; Van Schaik, A. EMNIST: Extending MNIST to handwritten letters. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2921–2926. [Google Scholar]

- Deng, L. The mnist database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Process. Mag. 2012, 29, 141–142. [Google Scholar] [CrossRef]

- Jian, S.; Dong, Z.; Jianguo, H. Design of remote meter reading method for pointer type chemical instruments. Process Autom. Instrum. 2014, 35, 77–79. [Google Scholar]

- Gang, N.; Bin, Y. Pointer instrument image recognition based on priori characteristics of instrument structure. Electron. Sci. Technol. 2013, 26, 10–12. [Google Scholar]

- Zuo, L.; He, P.; Zhang, C.; Zhang, Z. A Robust Approach to Reading Recognition of Pointer Meters Based on Improved Mask-RCNN. Neurocomputing 2020, 388, 90–101. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Anis, A.; Khaliluzzaman, M.; Yakub, M.; Chakraborty, N.; Deb, K. Digital electric meter reading recognition based on horizontal and vertical binary pattern. In Proceedings of the 2017 3rd International Conference on Electrical Information and Communication Technology (EICT), Khulna, Bangladesh, 7–9 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Laroca, R.; Barroso, V.; Diniz, M.A.; Gonçalves, G.R.; Schwartz, W.R.; Menotti, D. Convolutional neural networks for automatic meter reading. J. Electron. Imaging 2019, 28, 013023. [Google Scholar]

- LeCun, Y.; Jackel, L.; Bottou, L.; Brunot, A.; Cortes, C.; Denker, J.; Drucker, H.; Guyon, I.; Muller, U.; Sackinger, E.; et al. Comparison of learning algorithms for handwritten digit recognition. In Proceedings of the International Conference on Artificial Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 60, pp. 53–60. [Google Scholar]

- Bottou, L.; Cortes, C.; Denker, J.S.; Drucker, H.; Guyon, I.; Jackel, L.D.; LeCun, Y.; Muller, U.A.; Sackinger, E.; Simard, P.; et al. Comparison of classifier methods: A case study in handwritten digit recognition. In Proceedings of the 12th IAPR International Conference on Pattern Recognition, Vol. 3-Conference C: Signal Processing (Cat. No. 94CH3440-5), Jerusalem, Israel, 9–13 October 1994; IEEE: Piscataway, NJ, USA, 1994; Volume 2, pp. 77–82. [Google Scholar]

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading digits in natural images with unsupervised feature learning. Reading digits in natural images with unsupervised feature learning. In Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning 2011, Granada, Spain, 15–16 December 2011. [Google Scholar]

- Niu, X.X.; Suen, C.Y. A novel hybrid CNN–SVM classifier for recognizing handwritten digits. Pattern Recognit. 2012, 45, 1318–1325. [Google Scholar] [CrossRef]

- Edward, V.C.P. Support vector machine based automatic electric meter reading system. In Proceedings of the 2013 IEEE International Conference on Computational Intelligence and Computing Research, Enathi, India, 26–28 December 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–5. [Google Scholar]

- Elrefaei, L.A.; Bajaber, A.; Natheir, S.; AbuSanab, N.; Bazi, M. Automatic electricity meter reading based on image processing. In Proceedings of the 2015 IEEE Jordan Conference on Applied Electrical Engineering and Computing Technologies (AEECT), Amman, Jordan, 3–5 November 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–5. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99. [Google Scholar]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 379–387. [Google Scholar]

- Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra r-cnn: Towards balanced learning for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 821–830. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar]

- Li, Z.; Zhou, F. FSSD: Feature fusion single shot multibox detector. arXiv 2017, arXiv:1712.00960. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Epshtein, B.; Ofek, E.; Wexler, Y. Detecting text in natural scenes with stroke width transform. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2963–2970. [Google Scholar]

- Matas, J.; Chum, O.; Urban, M.; Pajdla, T. Robust wide-baseline stereo from maximally stable extremal regions. Image Vis. Comput. 2004, 22, 761–767. [Google Scholar] [CrossRef]

- Liao, M.; Shi, B.; Bai, X.; Wang, X.; Liu, W. Textboxes: A fast text detector with a single deep neural network. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Liu, Y.; Jin, L. Deep matching prior network: Toward tighter multi-oriented text detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1962–1969. [Google Scholar]

- He, P.; Huang, W.; He, T.; Zhu, Q.; Qiao, Y.; Li, X. Single shot text detector with regional attention. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3047–3055. [Google Scholar]

- Long, S.; Ruan, J.; Zhang, W.; He, X.; Wu, W.; Yao, C. Textsnake: A flexible representation for detecting text of arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 20–36. [Google Scholar]

- Baek, Y.; Lee, B.; Han, D.; Yun, S.; Lee, H. Character region awareness for text detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 9365–9374. [Google Scholar]

- Lyu, P.; Liao, M.; Yao, C.; Wu, W.; Bai, X. Mask textspotter: An end-to-end trainable neural network for spotting text with arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 67–83. [Google Scholar]

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255. [Google Scholar]

- Dutta, A.; Gupta, A.; Zissermann, A. VGG image annotator (VIA). 2016. Available online: https://www.robots.ox.ac.uk/~vgg/software/via/ (accessed on 1 September 2020).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

| Angle Distribution | Max Ang. Err. | Min Ang. Err. | Average Ang. Err. |

|---|---|---|---|

| U(−10°, 10°) | 3.488° | 0.001° | 0.688° |

| U(−20°, 20°) | 3.736° | 0.001° | 0.713° |

| U(−30°, 30°) | 4.690° | 0.004° | 0.700° |

| U(−40°, 40°) | 4.363° | 0.003° | 0.822° |

| U(−50°, 50°) | 3.131° | 0.001° | 0.735° |

| U(−60°, 60°) | 4.791° | 0.001° | 0.753° |

| U(−70°, 70°) | 3.982° | 0.001° | 0.750° |

| U(−80°, 80°) | 3.585° | 0.003° | 0.726° |

| U(−90°, 90°) | 4.026° | 0.001° | 0.709° |

| Orientation Alignment | Average IOU | AP@0.5 |

|---|---|---|

| 0.51 | 42.11 | |

| ✓ | 0.92 | 98.92 |

| Approach | Basic | OA | UCS | CRAFT | SG | Digit Err. |

|---|---|---|---|---|---|---|

| Basic | ✓ | 24.32% | ||||

| Basic + OA | ✓ | ✓ | 13.20% | |||

| Basic + OA + UCS | ✓ | ✓ | ✓ | 8.52% | ||

| Basic + OA + UCS + CRAFT | ✓ | ✓ | ✓ | ✓ | 5.72% | |

| Basic + OA + SG | ✓ | ✓ | ✓ | 3.79% | ||

| * Basic | ✓ | 35.86% (+47%) | ||||

| * Basic + OA | ✓ | ✓ | 17.76% (+34%) | |||

| * Basic + OA + UCS | ✓ | ✓ | ✓ | 10.84 (+27%) | ||

| * Basic + OA + UCS + CRAFT | ✓ | ✓ | ✓ | ✓ | 6.76% (+18%) | |

| * Basic + OA + SG | ✓ | ✓ | ✓ | 4.04% (+6%) |

| Digit Err. | Pointer Err. | ||||

|---|---|---|---|---|---|

| Base | Base + OA + SG | Base | Base + OA + SG | ||

| Cleanness | Normal | 15.0% | 3.4% | 1.0% | 1.0% |

| Dirty | 19.2% | 3.6% | 7.0% | 3.0% | |

| Lighting | Normal | 11.4% | 3.4% | 2.0% | 1.0% |

| Bright | 13.9% | 3.6% | 4.0% | 2.0% | |

| Dark | 14.2% | 3.8% | 4.0% | 2.0% | |

| Clarity | Normal | 13.8% | 2.0% | 1.0% | 0.0% |

| Down × 2 | 14.6% | 2.2% | 2.0% | 2.0% | |

| Down × 3 | 16.4% | 4.0% | 7.0% | 3.0% | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hong, Q.; Ding, Y.; Lin, J.; Wang, M.; Wei, Q.; Wang, X.; Zeng, M. Image-Based Automatic Watermeter Reading under Challenging Environments. Sensors 2021, 21, 434. https://doi.org/10.3390/s21020434

Hong Q, Ding Y, Lin J, Wang M, Wei Q, Wang X, Zeng M. Image-Based Automatic Watermeter Reading under Challenging Environments. Sensors. 2021; 21(2):434. https://doi.org/10.3390/s21020434

Chicago/Turabian StyleHong, Qingqi, Yiwei Ding, Jinpeng Lin, Meihong Wang, Qingyang Wei, Xianwei Wang, and Ming Zeng. 2021. "Image-Based Automatic Watermeter Reading under Challenging Environments" Sensors 21, no. 2: 434. https://doi.org/10.3390/s21020434

APA StyleHong, Q., Ding, Y., Lin, J., Wang, M., Wei, Q., Wang, X., & Zeng, M. (2021). Image-Based Automatic Watermeter Reading under Challenging Environments. Sensors, 21(2), 434. https://doi.org/10.3390/s21020434