1. Introduction

In robotics, vision-based simultaneous localization and mapping (SLAM) is a geometric problem of mapping an unknown environment while tracking the camera pose simultaneously. Vision-based SLAM systems have a wide range of applications, such as autonomous navigation, search and rescue in natural disasters, and undersea exploration. Thus, the most important characteristics required in the SLAM system are to be accurate and robust. Liu et al. [

1] show that a camera with a wide field of view provides more accurate camera localization and more robust mapping. In [

2], it is also explained the advantages of a wide field of view in the problem of place recognition and geometric SLAM.

One of the solutions to obtain a wide field of view is to use a single camera with an omnidirectional lens. Yet, in [

3], it shows that images taken with an omnidirectional lens suffer from wide image scale variation and low angular resolution. Thus, a pixel measurement of an omnidirectional camera is less accurate than that of a perspective camera. Another solution for enlarging the field of view is using multiple cameras. Approaches in [

4,

5] noted that using multi-cameras has many advantages in terms of providing more efficient localization and higher error tolerance compared to single camera usage. As in our previous study [

6], we aim to use multiple cameras in this paper.

The primary challenge to be discussed in the use of multi-camera SLAM is the calibration of extrinsic parameters between cameras. For efficient localization and mapping, a SLAM system requires the integration of each information from cameras. For this reason, the available methods are reviewed depending on the necessity of multi-camera calibration.

SLAM systems where all cameras work as a single unit require precalibrated extrinsic parameters. The first example of this approach is the multi-camera parallel tracking and mapping with a nonoverlapping field of view (MCPTAM) [

7], which is the multi-camera version of PTAM. To prevent tracking loss as much as possible, the authors used a large field of view cameras (omnidirectional). Thus, the camera model has been replaced by a polynomial model, and this leads to making some changes such as the epipolar correspondence search. Another example that requires precalibrated extrinsic parameters is Multicol-SLAM [

8]. Multicol-SLAM extended the ORB-SLAM to a multiple fisheye camera usages and modified some pipelines of the ORB-SLAM in several aspects. First, they introduced a multi-keyframe concept. Second, the authors proposed the hypergraph formulas in an optimization process. Lastly, a loop closure thread revised in the multi-camera scenario is also one of their contributions. One of the recent examples of a multi-camera SLAM system is proposed by Yang et al. [

9]. The authors draw attention to the difficulties of offroad navigation for SLAM systems and develop a new vision-based SLAM system. Their main contributions are listed as follows: building a spatial sensing model of a multi-camera system considering the system’s imaging principle, designing a multi-camera collaborative SLAM framework using the advantages of a large field of view for recovering the scale, and using deep learning for detection of repetitive scenes that provides more accurate loop detection. Lately, Kuo et al. also proposed another method of a multi-camera SLAM system that requires precalibrated extrinsic parameters [

10]. The authors aim to develop a SLAM system that is compatible with arbitrary multi-camera configurations automatically. For this purpose, they come up with three main contributions that are an adaptive initialization system, a sensor-agnostic, an information-theoretic keyframe selection method, and a scalable voxel-based map management approach.

On the other hand, some approaches do not require the extrinsic parameters between cameras in advance. For instance, Heng et al. [

5] developed a self-calibrated SLAM system with four cameras mounted on a micro aerial vehicle (MAV). On the MAV, they set the back and the front camera pairs up according to the stereo configuration. For online self-calibration, at least one stereo camera must be calibrated in advance. To recover the relative motion of the MAV, the authors proposed the minimal and linear 3-point algorithm that uses the information from the 3-axis gyroscope. Another self-calibrated multi-camera slam system is proposed by Carrera et al. [

11]. In this approach, it is not necessary to have an overlapping region between cameras. However, there is a requirement that the robot must rotate the spot 360 degrees to ensure that all cameras see the same features. Each camera is localized according to the other cameras in the system. Then, the extracted maps from each camera are merged using 3D alignment, and all the map components are optimized. Still, the requirement of the robot turning 360 degrees is a constraint, and this could be considered as a precalibration method. A recent example of an autocalibration method for a multi-camera system is proposed by Feng et al. [

12]. Their approach is calibrating a multi-camera system without any overlapping regions using SLAM. For this purpose, they first reconstruct the 3D model of the environment using SLAM and calibrate each camera through 2D-3D correspondences between the image sequences and the extracted 3D model.

Whether a multi-camera SLAM system precalibrates or calculates the extrinsic parameters online, slight errors in extrinsic parameters may cause significant errors in the reprojection of map points, and this causes failure during initialization and tracking. To overcome this issue, we present a novel solution to an uncalibrated multi-camera SLAM system. We aim to develop a robust multi-camera SLAM system in which each camera is tracked independently on a single map, and the extrinsic parameters are estimated online. In the camera tracking, we do not use the extrinsic parameters explicitly to avoid the tracking loss by the error in the parameters which affect robustness. However, in the mapping stage, we still estimate and refine the extrinsic parameters to gather and utilize all the observations from the multiple cameras that improve the map accuracy.

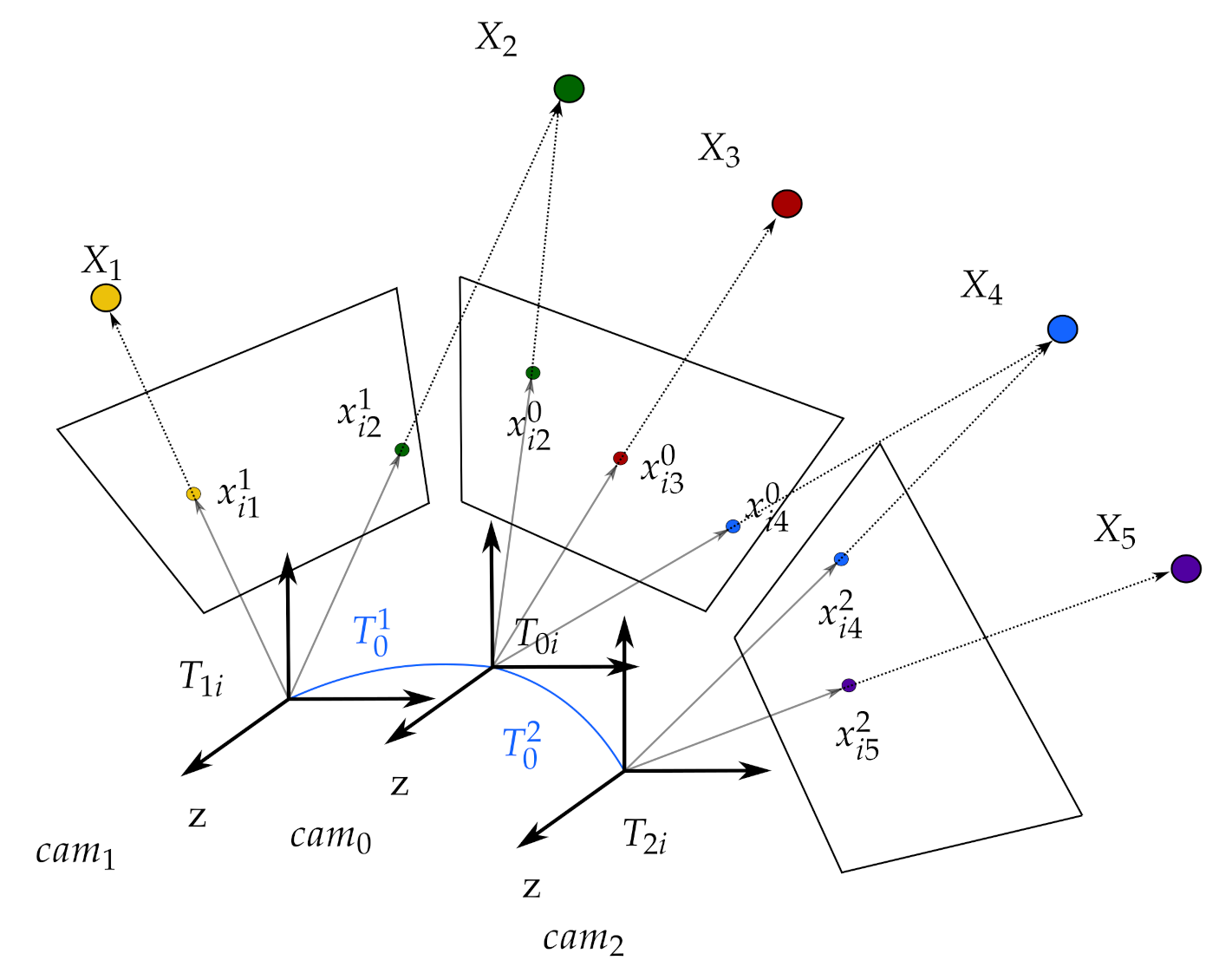

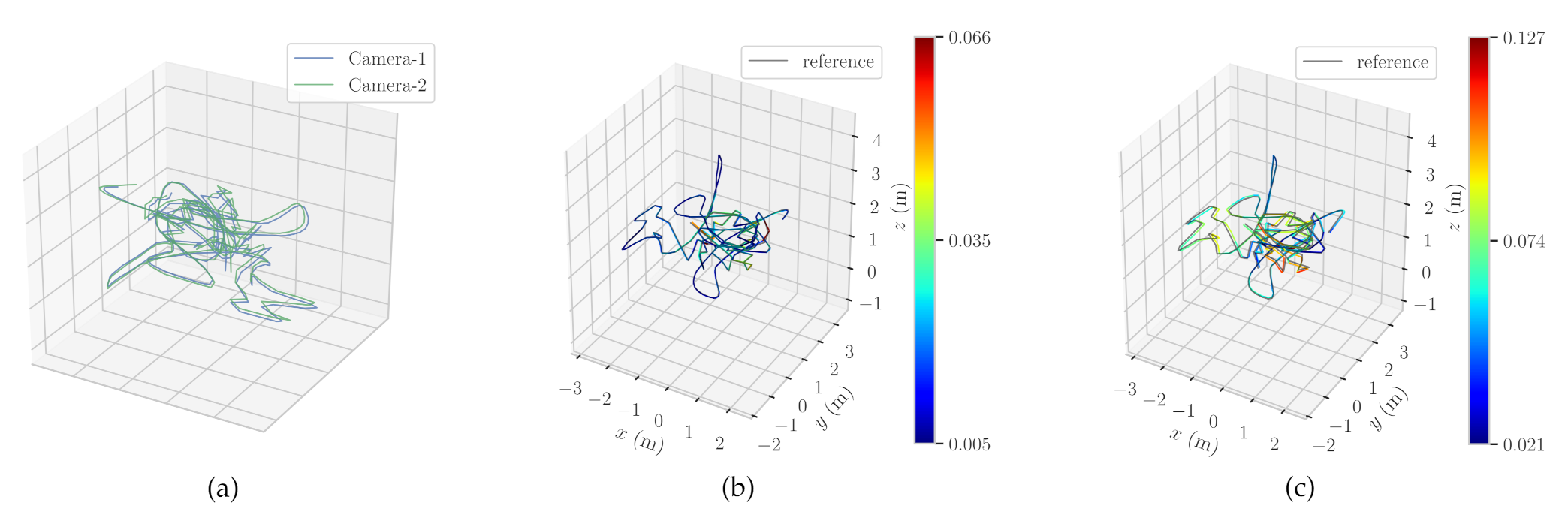

Figure 1 presents an example of a multi-camera rig.

Like the monocular SLAM systems, our system has a scale ambiguity problem for RGB cameras. RGB cameras are not able to set the scale factor (length of translational movement) concerning corresponding feature matching. Even though the proposed system uses multiple cameras, it is not possible to solve the scale ambiguity since the extrinsic parameter calculation is not in the Euclidean space. For resolving the scale ambiguity problem, RGB-D camera usage is one solution. RGB-D sensors provide both the Euclidean geometry and the image texture of an environment by integrating a depth map measured by a depth sensor, and color information comes from an RGB camera. We resolve the scale ambiguity problem since a depth sensor presents the scale information, and the proposed system is compatible with RGB-D cameras.

Since each camera performs its tracking, the proposed method might be considered similar to the collaborative SLAM systems. However, there are some significant differences. In collaborative SLAM systems like [

13,

14], multiple agents create individual maps, and a server merges those maps for a global map. Each camera runs independent processes such as tracking, local mapping and loop closing. Compared to this, the proposed method has a single shared map for all the cameras, while the cameras are tracked independently on the same map. In addition, there are single local mapping and single loop closing threads for all the cameras by utilizing the fact that all the cameras are fixed in the whole sequence.

2. Framework of the Proposed the Multi-Camera SLAM System

Our method extends the state-of-the-art monocular ORB-SLAM2 [

15] algorithm to an uncalibrated multi-camera SLAM system.

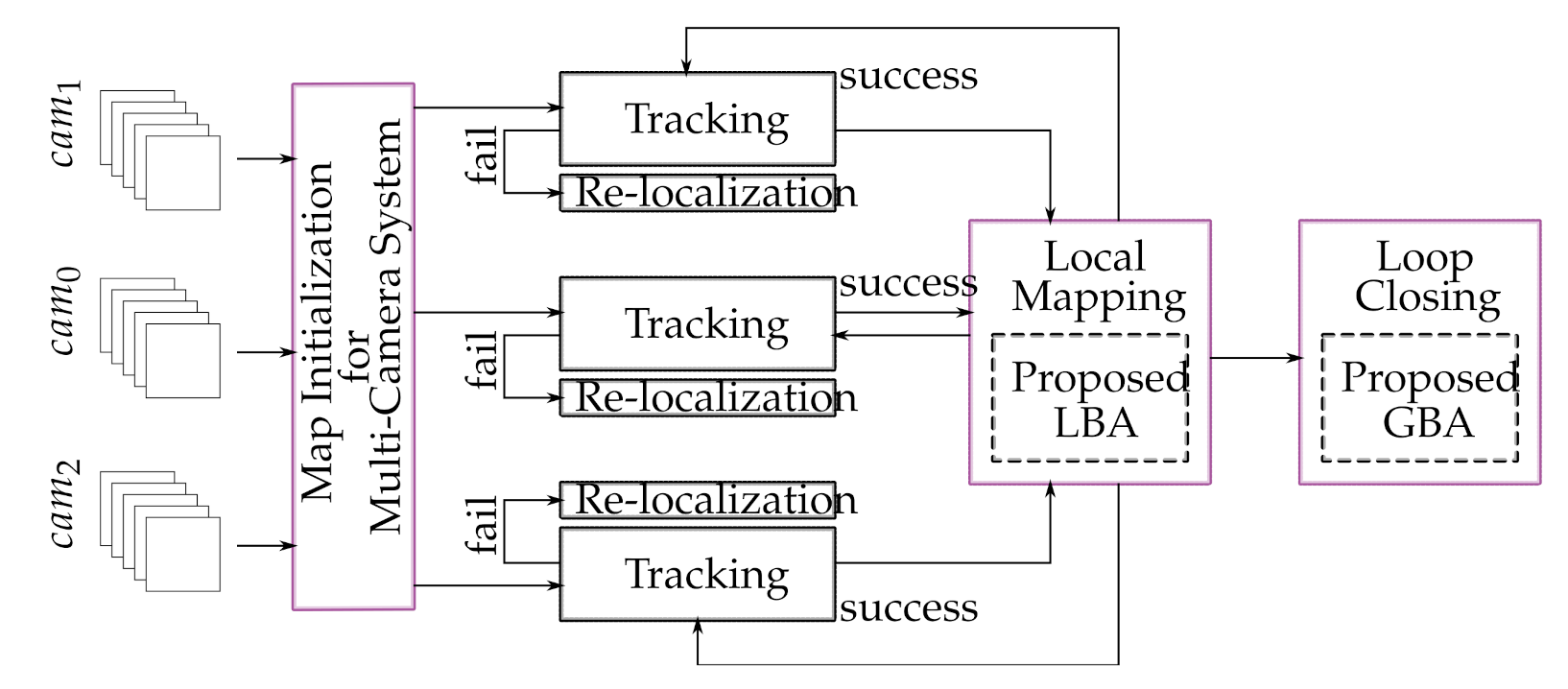

Figure 2 represents the block diagram of the proposed multi-camera SLAM system, and our contributions are in the blocks in purple. The main contributions that make the proposed method different from conventional multi-camera SLAM systems can be summarized as follows:

SLAM framework for an uncalibrated multi-camera system in which each camera is tracked independently on a shared map.

Online calibration of extrinsic parameters in the multi-camera system

Initialization of multi-camera configuration.

Simultaneous refinement of the SLAM components and extrinsic parameters by a multi-edge based graph optimization.

The SLAM components are described in the

Table 1 and illustrated in

Figure 1 for a better understanding of the proposed method.

2.1. TIMA SLAM

In conventional multi-camera SLAM systems, all cameras work as a single unit. To work as a single unit, such systems use given extrinsic parameters between cameras from the first frame to the last frame. In cases where the extrinsic parameters are not accurate, the system may fail on the map initialization or camera tracking due to the wrong map point projection from multiple cameras. As a solution to this problem, our method introduces two new concepts. The first one is an uncalibrated multi-camera system in which each camera is tracked independently on a single map. The second one is self-calibration and refinement of the extrinsic parameters for better localization and mapping.

When each camera works independently, the system does not need to use precalculated extrinsic parameters, which could be inaccurate. Unlike conventional multi-camera SLAM systems, the proposed independent tracking of each camera is not affected by the incorrect extrinsic parameters, even though they observe the same map points. Furthermore, in case a multi-camera SLAM system that operates as a single unit loses its track, it cannot relocalize itself unless it revisits the places previously observed. However, in the proposed system, as long as just one camera keeps tracking, the system can relocalize the other cameras by obtaining the reference keyframe tracking camera.

In the mapping process, we collect observations from all the cameras and estimate the map points and keyframe poses. We also estimate the extrinsic parameters simultaneously for more accurate mapping. Once the system calculates the initial extrinsic parameters online, it updates them iteratively to estimate a more accurate map. The optimization process will be explained in detail in

Section 2.5.

Considering the advantages of the proposed method, we call it ‘TIMA SLAM–Tracking Independent and Mapping Altogether for an uncalibrated multi-camera system’. This is because all the cameras in the system are being tracked independently while a map is built by observations from all cameras.

2.2. Multi-Camera Initialization

In the TIMA SLAM, despite operating multiple cameras, the initial map is generated by a camera selected as a reference. For that purpose, the system calculates the relative poses between the consecutive frames and triangulates the map points. For the relative pose estimation, two geometric models are evaluated in parallel. According to the assumption of planar and nonplanar scenes, the system calculates a homography and the fundamental matrix respectively. The selection of the appropriate model is determined by the equation given in [

15]. Additionally, the system rearranges the associated motion hypotheses. At last, the system performs the global bundle adjustment (GBA) for the initial map optimization.

After the reference camera generates the initial map, each nonreference camera in the system localizes itself using the reference keyframe and the map points sent by the reference camera. For the nonreference camera localization, the system estimates each camera pose by performing RANSAC iterations with the perspective-n-point(PNP) algorithm [

16]. If enough inliers support the pose of a camera, the system optimizes the pose and searches for more matches. Lastly, if there are still enough inliers, the system performs one more camera pose optimization and registers the camera. The same process applies to relocalize any camera in case it loses its tracking.

In the next stage, the extrinsic parameters (the relative poses between the cameras and the reference camera) are calculated upon the moment each camera localizes itself because of all cameras in the system being fixed to each other. Let

and

be the coordinate systems of the cameras and let

and

be their respective poses in world coordinate system

where

and

are the rotation matrices, and

and

are the translation vectors for

and

respectively. The relative pose between

and

is denoted as following:

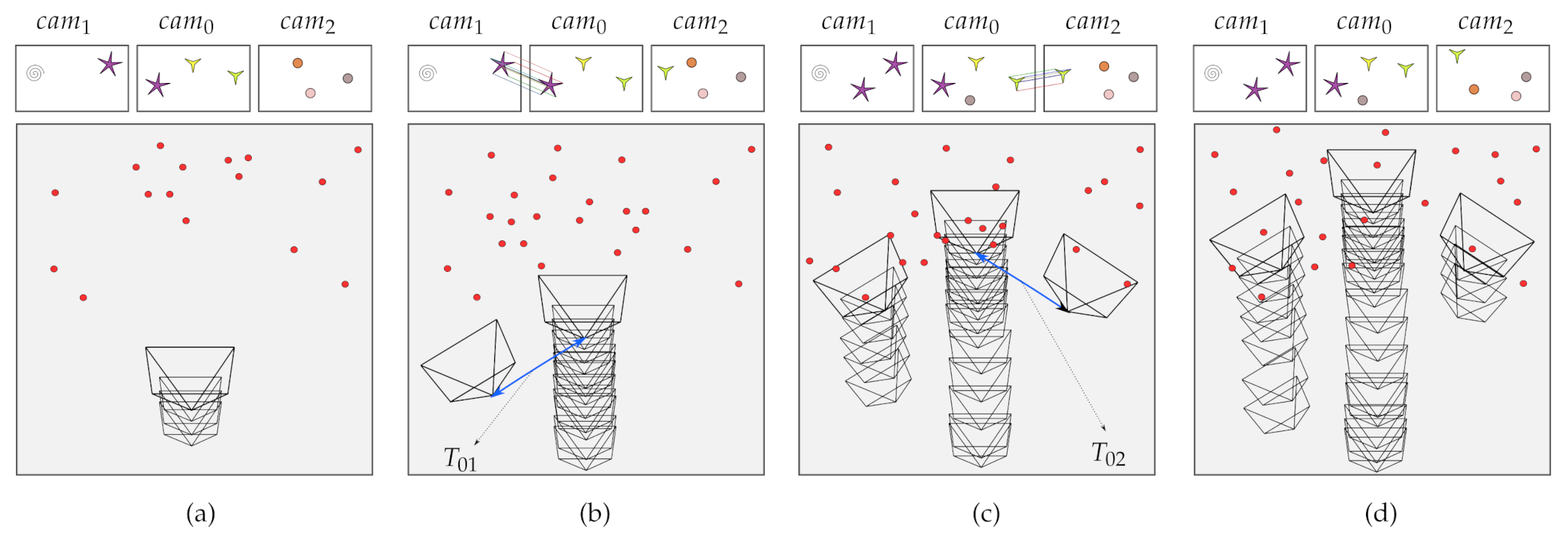

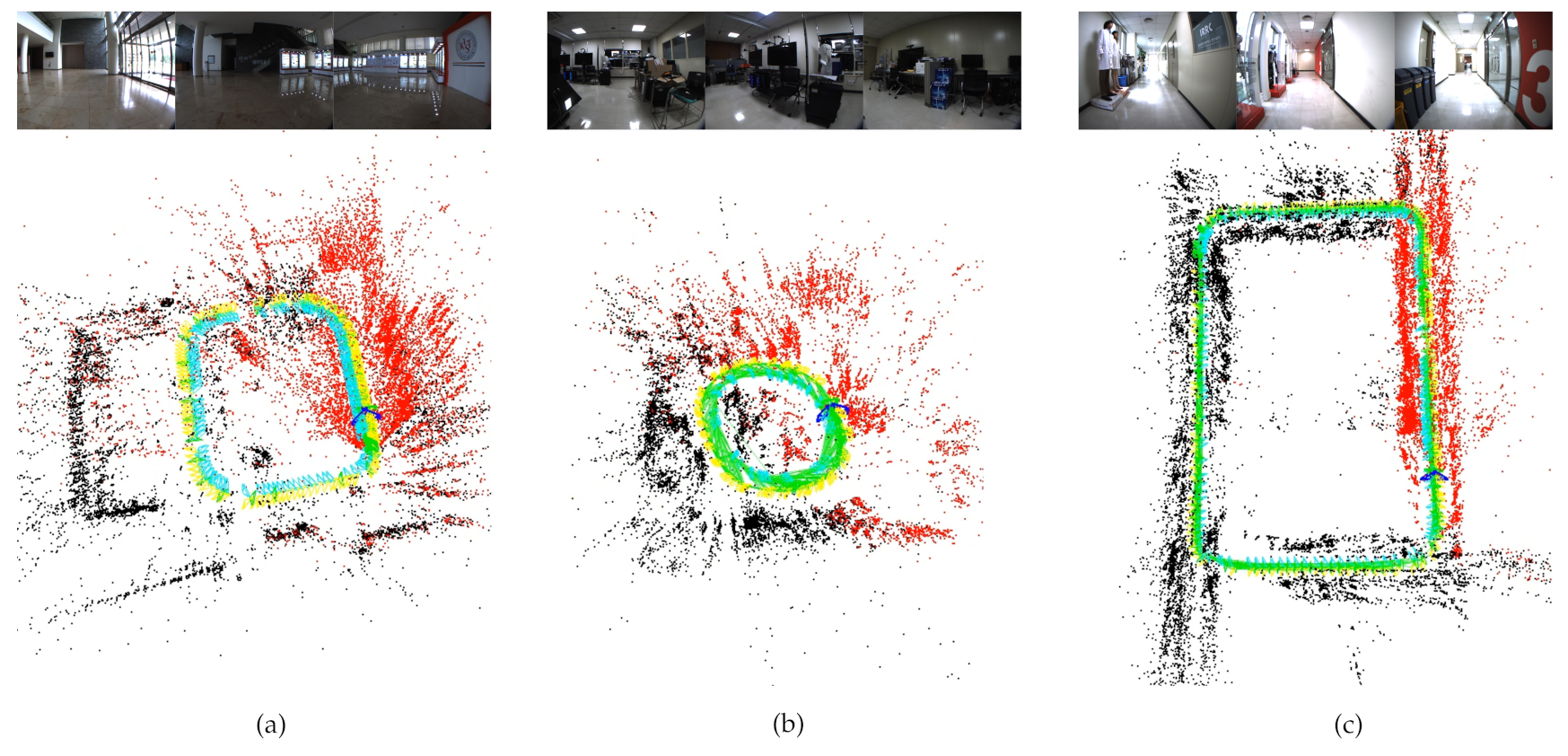

Figure 3 presents the whole process of the multi-camera initialization with the initial estimation of extrinsic parameters. In this figure, the scenario shows the camera rig goes straight and the nonreference cameras are registered respectively. The big boxes represent the sparse map of the SLAM at different timestamps. Each big-box include three small boxes that illustrate the current frames of the corresponding cameras. The map on each timestamp shows the camera trajectory with created keyframes (pyramids) and the map points (red circles). For the localization of nonreference cameras, we look for ORB correspondences between the reference keyframe and the current keyframe of the nonreference camera. As soon as the nonreference camera completes the localization, we calculate the relative poses between the reference and the nonreference camera (blue lines) and complete the multi-camera initialization process.

2.3. Camera Tracking

Since each camera performs independent tracking, we use the original ORB-SLAM2’s tracking thread for the proposed system [

15]. The tracking thread estimates the camera pose and makes a decision when to add a new keyframe. The system extracts FAST corners using the construction of a pyramid. A pyramid represents a group of the same signal or image at different scales, containing upsampling and downsampling by a constant scale factor. The tracking thread uses 8 scale level between pyramid levels with the scale factor 1.2. The extracted FAST corners are then described with oriented FAST and rotated BRIEF (ORB) descriptor. Next, it matches the features between the previous frame and the current frame and performs motion-only bundle adjustment that optimizes only the camera pose with fixed map points. The place recognition gets involved in the case of tracking loss. After the camera pose estimation with a group of feature matches, the system projects the created map onto the current frame and looks for further map point correspondences. In case the system detects all the map points in the frame, the system performs a camera pose optimization. For the making decision of keyframe insertion, the tracking thread works with the local mapping thread. Since the local mapping drops the redundant keyframes, the tracking thread attempts to add new keyframes concerning certain conditions. These conditions are listed as follows:

There should be more than 20 frames processed since the last global relocalization.

There should be more than 20 frames processed since the latest keyframe addition and local mapping should be idle.

There should be at least 50 points tracked on the current frame.

The current frame should track less than points of the reference keyframe.

2.4. Cooperative Local Mapping

The local mapper primarily manages the covisibility between the keyframes via the map points and optimizes the local keyframe poses and the map points using local bundle adjustment (LBA). For that purpose, it follows several steps, and these steps are keyframe insertion, map point culling, new map point insertion, local bundle adjustment. and local keyframe culling.

In the processing of a new keyframe, the local mapper updates the covisibility graph and adds a new node for the current keyframe. Then, it links a current keyframe to another keyframe that shares the most map points in common via updating the spanning-tree. Lastly, it calculates a bag of words (BoW) representation (benefits for the data association to triangulate new map points) of the current keyframe.

The local mapper eliminates the low-quality map points repetitively considering two conditions. The first condition is that a tracking thread must see the map point in more than 25% of the frames predicted to be visible. The second one is that at least three keyframes should observe the map point.

The local mapper creates new map points through triangulating ORB from associated keyframes in the covisibility graph. In case there is an unmatched ORB in the current keyframe, the system looks for a match with another unmatched ORB in other keyframes. The ORB match should meet the epipolar constraint to be accepted. To insert a new map point, the local mapper triangulates ORB pairs and checks both frames if they have a positive depth. Additionally, it checks the reprojection error, parallax, and scale consistency.

The LBA optimizes the local keyframes and all the map points observed by the local keyframes. The local keyframes consist of the current keyframe and the other keyframes which are connected to the current keyframe by the covisibility graph [

15]. Unlike the original ORB-SLAM2, our modified LBA module optimizes the local keyframes and all the map points observed by the local keyframes and the relative poses between cameras simultaneously. Our LBA module gathers information from all of the cameras and optimizes every map component at once. By this means, the system updates the relative poses between the cameras all the time.

Lastly, to keep a functional simplicity, a local mapper searches for the redundant keyframes and eliminates them from the map. Keyframes, where more than 90% of the map points are observable to three other keyframes with the same scale level, are deleted from the map.

2.5. Optimization for an Online Extrinsic Calibration

The proposed system merges the information that comes from multiple cameras to improve the accuracy of the map by an optimization. One possible way is to apply a conventional bundle adjustment which optimizes the map points and all the keyframes registered, even though the keyframes belong to different cameras. However, this requires more degrees of freedom to be optimized than the actual system, because each camera in the rigidly fixed camera system cannot move independently. We utilize the fact that the cameras are rigidly mounted, and thus, the extrinsic parameters must be fixed, while they are not known in advance.

We keep estimating the extrinsic parameters explicitly online by optimizing them along with the other SLAM parameters in the whole sequence. To achieve this, we propose to modify the SLAM formulation in the bundle adjustment. The initial estimates for the extrinsic parameters are obtained by the multi-camera initialization explained in

Section 2.2. We convert the SLAM problem of multiple independent cameras into that of the reference camera with additional observations which are observed by the other cameras by using hypotheses of the extrinsic parameters.

To convert the problem, all the keyframes of nonreference cameras are transformed into the corresponding keyframe of the reference camera, as if all the map points are observed from the reference camera. To transform the keyframes of nonreference cameras into those of the reference camera, the system uses the current estimation of the extrinsic parameters. If there are no corresponding keyframes of the reference camera, the system creates sets of

virtual keyframes for the reference camera. This problem conversion ensures the minimum degree-of-freedom (DOF) parameterization of the unknowns for keyframes to be optimized.

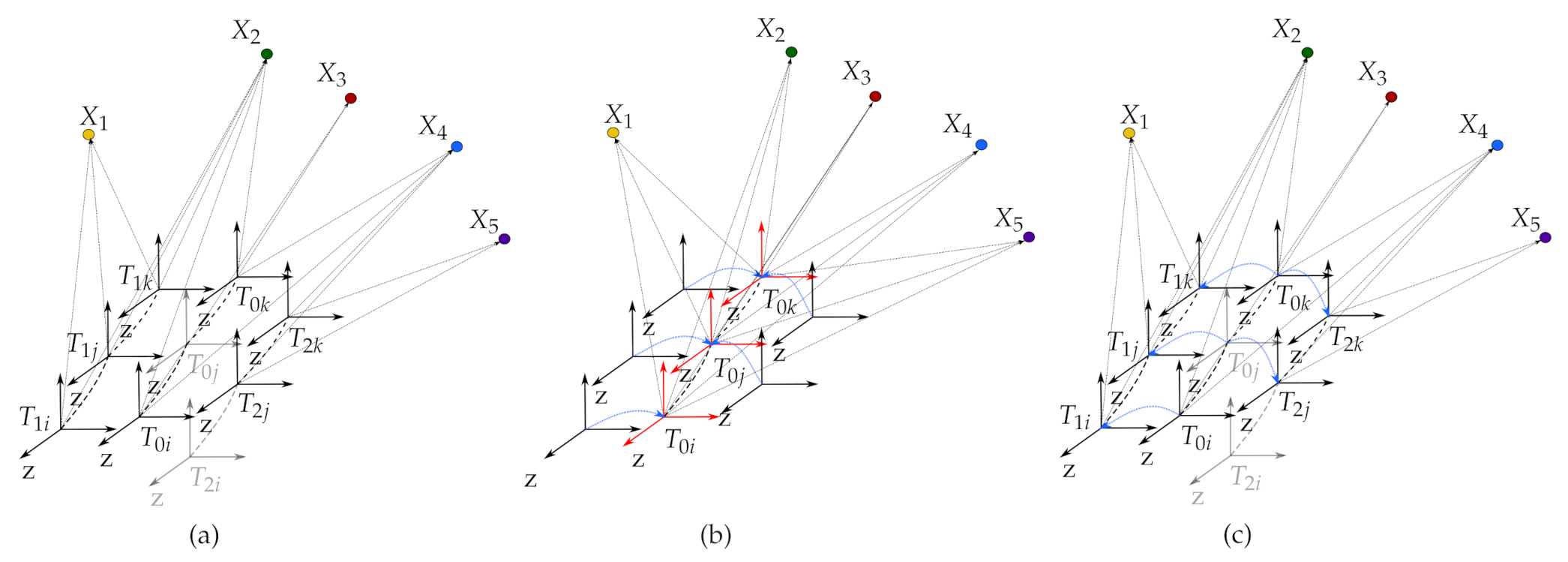

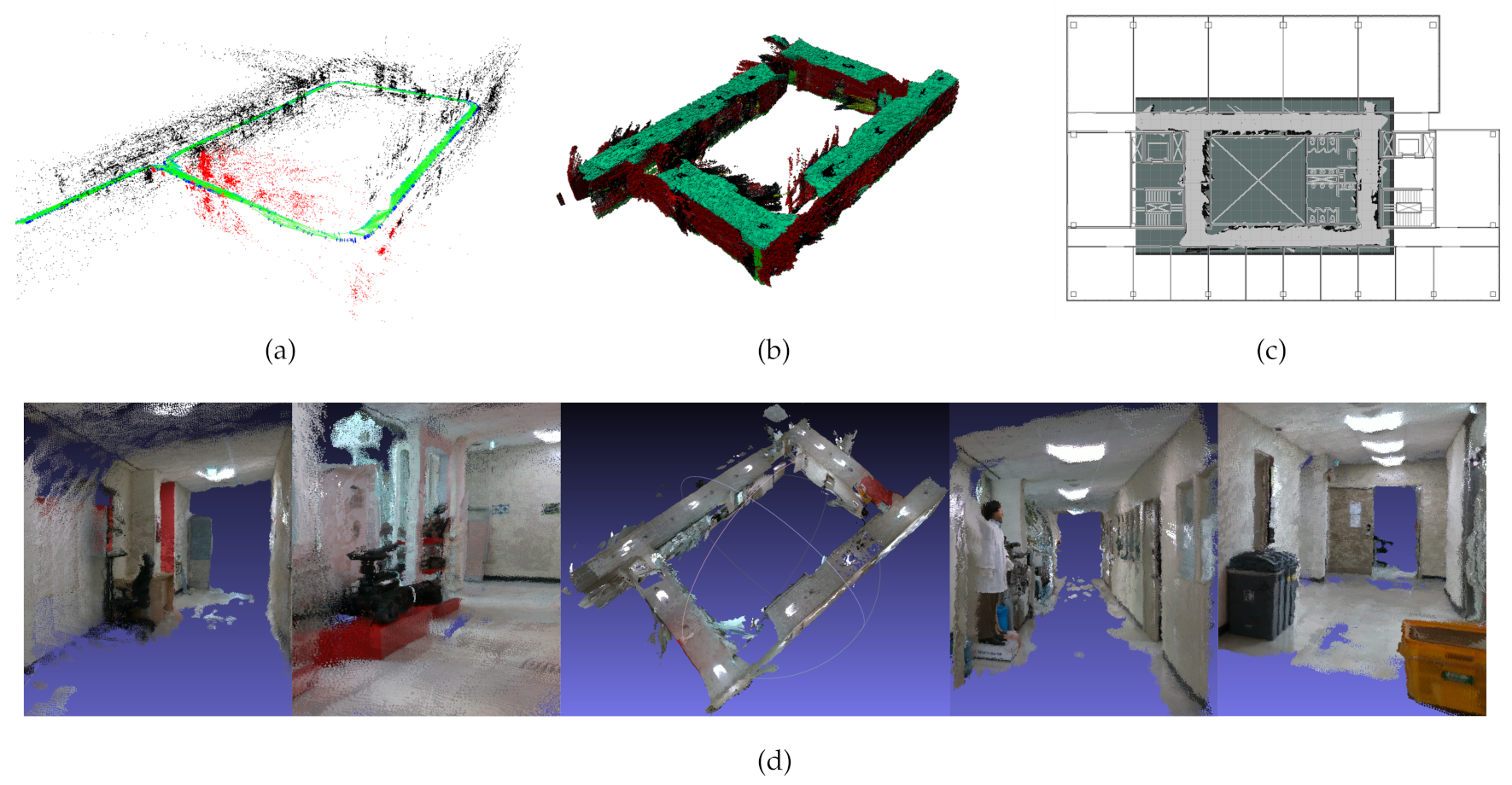

Figure 4 presents how the proposed method lowers the degree of freedom to be optimized. As seen in

Figure 4a, the number of keyframe parameters to be optimized is

=

6DOF), since there are seven keyframes. However, once all the keyframes are transformed into the corresponding keyframe of the reference camera, the number of the keyframe related parameters becomes

=

6DOF +

6DOF), where there are three keyframes with two extrinsic parameters between cameras. This difference becomes bigger as the sequence is longer, and the representation with fewer degrees of freedom utilizes more and stronger constraints that limit the solution space. This would help with the stability of the whole system.

We solve the converted problem as a nonlinear minimization and represent a nonlinear cost function describing reprojection errors. A 3D point is observed by the camera c corresponding reference keyframe k whose pose is represented as in the world coordinate, and its observation is given as .

The cost function is to be minimized is the sum of the squared reprojection errors denoted as:

The projection function

is represented as:

where

and

represent the rotation and translation part of the matrix derived from the following equation:

where

and

represent the rotation and translation of the reference camera’s keyframe while

and

represent the rotation and translation of the extrinsic parameters between the reference camera and the nonreference camera, respectively. The focal length

of the camera with associated principle points

are known in advance. Note that the relative transforms

are also parameters to be optimized as well as the map points

and the keyframe poses

.

Like the ORB-SLAM, all optimizations are solved by a graph-based approach in this study. However, in the proposed method, it is not possible to represent the graph by the binary edge that connects two vertices. The main reason is that all the keyframes of nonreference cameras are transformed into the corresponding keyframe of the reference camera. Thus, the extrinsic parameters as unknowns require multi-edges that make connections to two or more vertices, as shown in

Figure 5. Even when any two cameras do not see a shared map point, we need to use the multi-edges due to the extrinsic parameters. The representation of the multi-edge based graph-based optimization is shown in

Figure 5 for two consecutive timestamps. In this figure, vertices (parameters) are illustrated by circles, and the edges (measurements) are shown as boxes. The scenario presented in

Figure 5 is illustrated based on the

Figure 4 for timestamp

i and

j.

2.6. Loop Closing

Each time the system adds a new keyframe, loop closer searches for possible corresponding keyframes in the map to detect a loop. If a loop is detected, the loop closer first calculates a similarity transformation between the current keyframe and the loop candidate keyframe to check the drift in the loop. Then, the system fixes the pose of the current keyframe and covisible keyframes that are connected to the current keyframe to align both sides of the loop using the computed similarity transformation. Next, it merges the duplicated map points by checking the inliers that match both with the current keyframe and the loop keyframe with its neighbors. In the last step of the loop closing, the conventional global bundle adjustment (GBA) fixes all keyframe poses and map points from the initial keyframe whose id is zero. Unlike the original GBA, we use our GBA module based on the multi-edge-based graph optimization to update the extrinsic parameters between cameras, all the keyframes’ poses, and all the map points in the map. Since LBA optimizes the SLAM components locally, the system needs to update everything from scratch using the proposed GBA with the updated extrinsic parameters.