Investigating the Usability of a Head-Mounted Display Augmented Reality Device in Elementary School Children

Abstract

1. Introduction

1.1. Theoretical and Empirical Background for the Use of HMD-AR in Education

1.2. Usability of HMD-AR Devices in Education

1.3. The Microsoft HoloLens 2 and Its Potential for Education

1.4. Ethics of Using HMD-AR Devices in Elementary Education

1.5. Aim of the Study

- Evaluation of the overall usability of the HoloLens 2 as an HMD-AR device;

- Comparison of the provided AR-interaction modes concerning their efficiency;

- Assessment of the children’s interaction preference in HMD-AR;

- Examination of the change in activity-related achievement emotions.

2. Materials and Methods

2.1. Sample

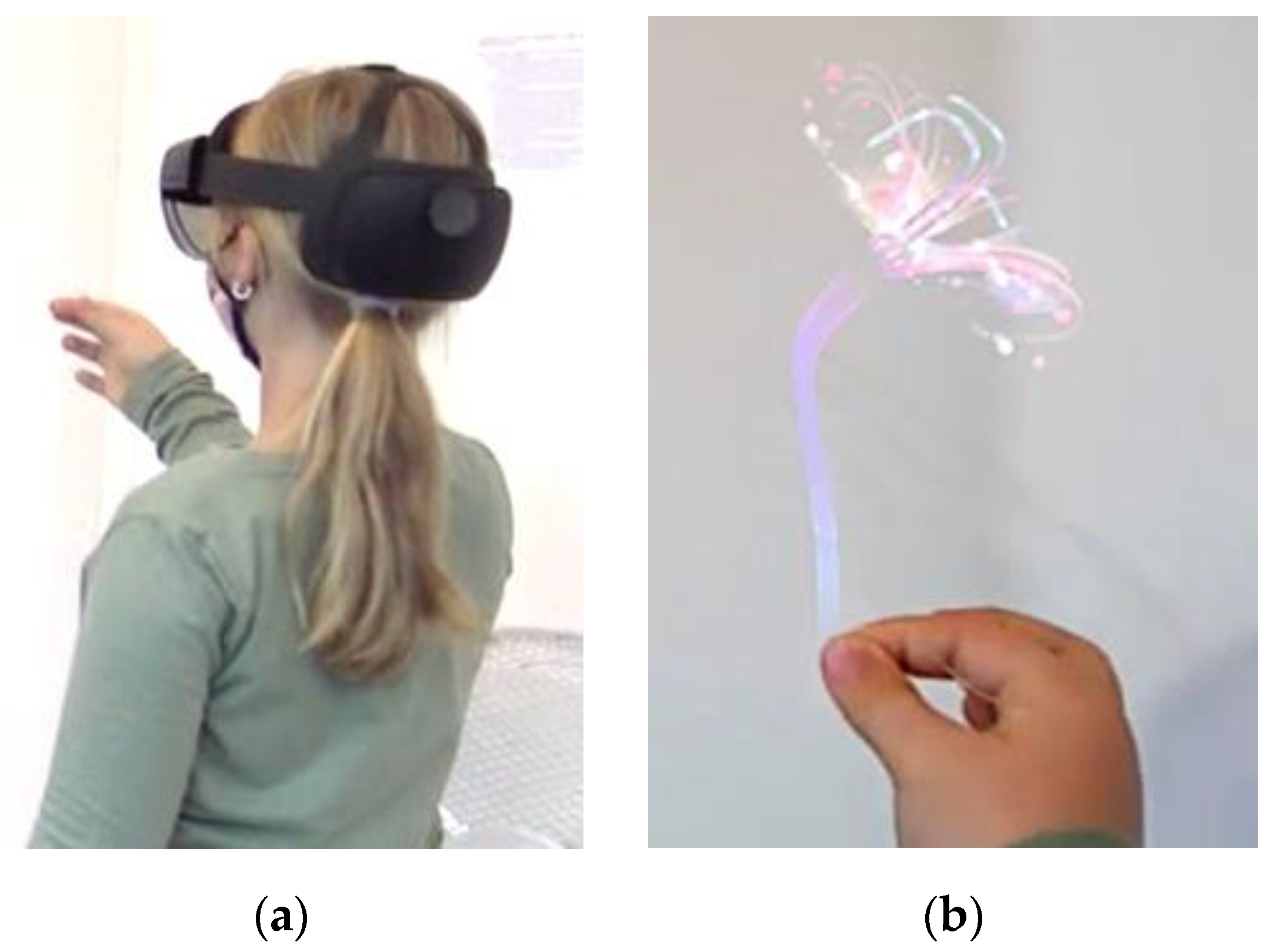

2.2. Study Design

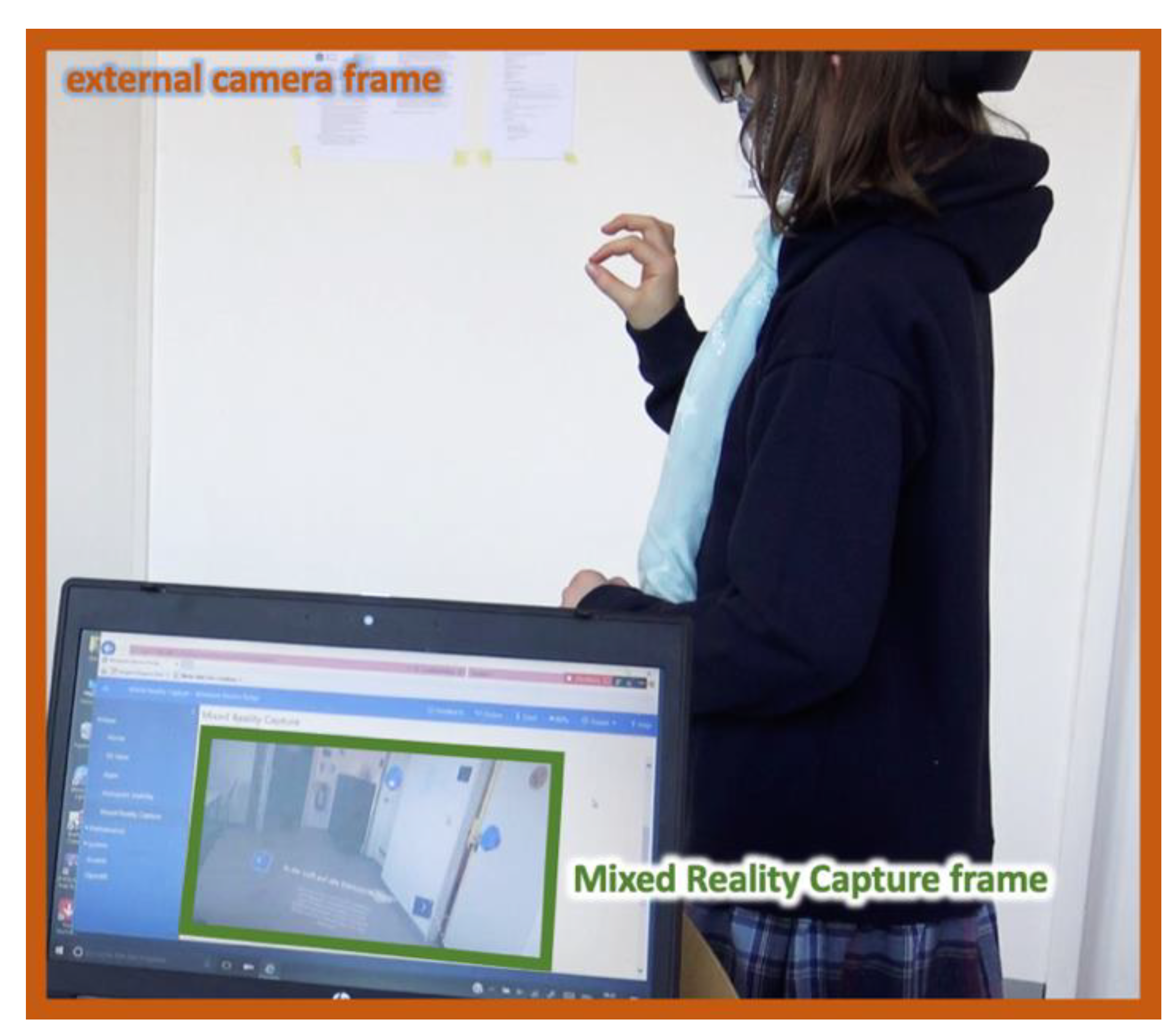

2.3. Procedure and Data Collection

2.4. Data Analysis

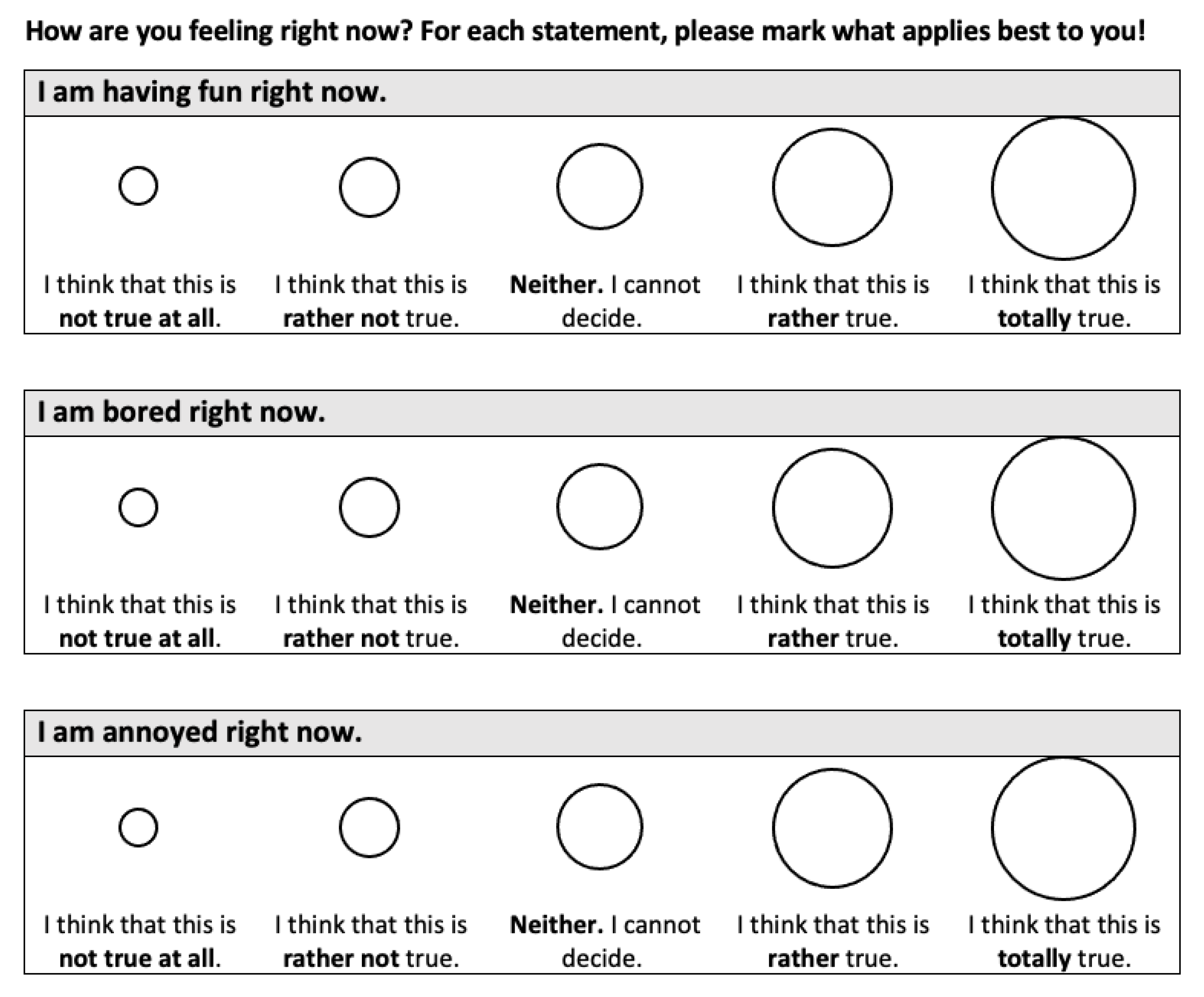

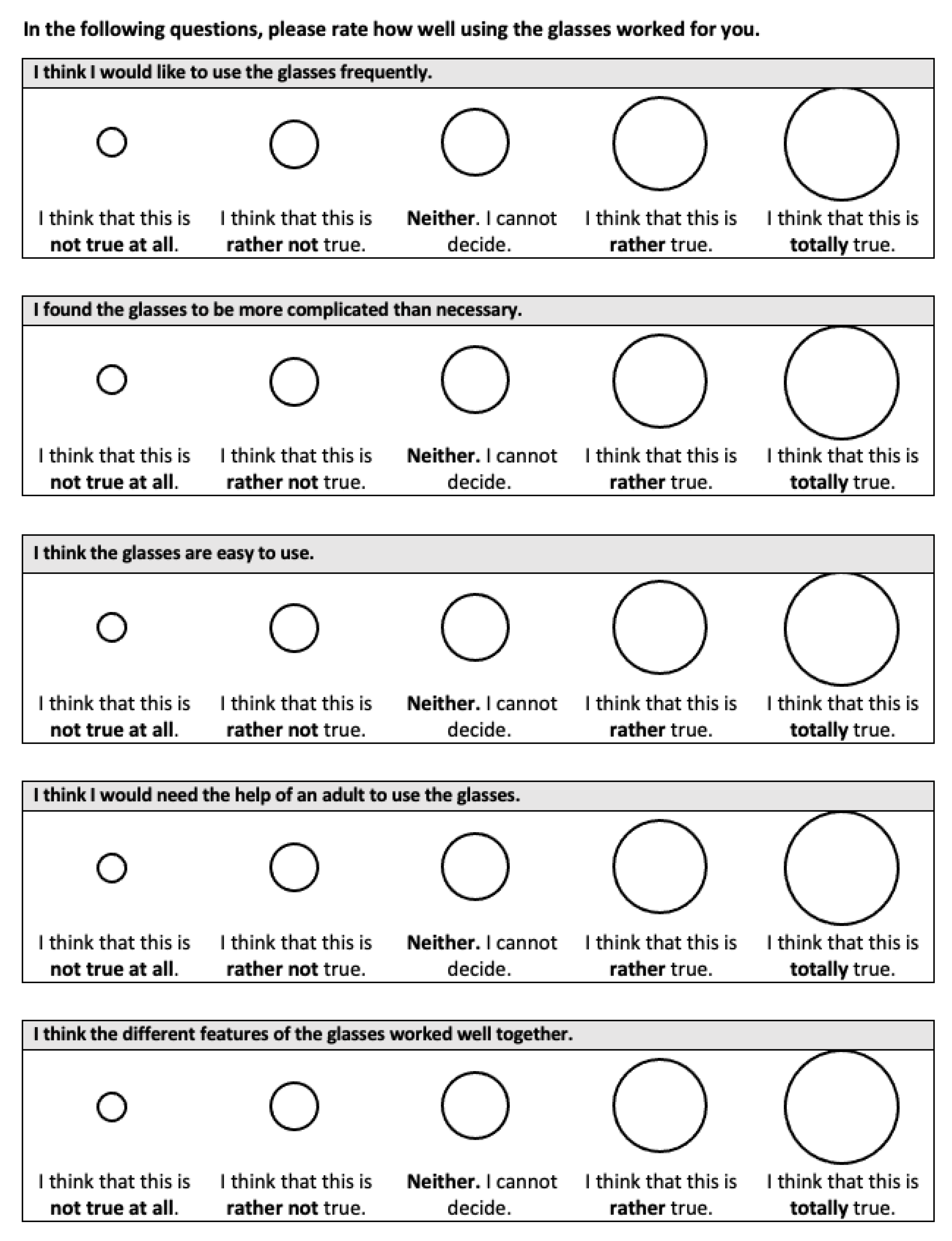

2.4.1. Overall Device Usability

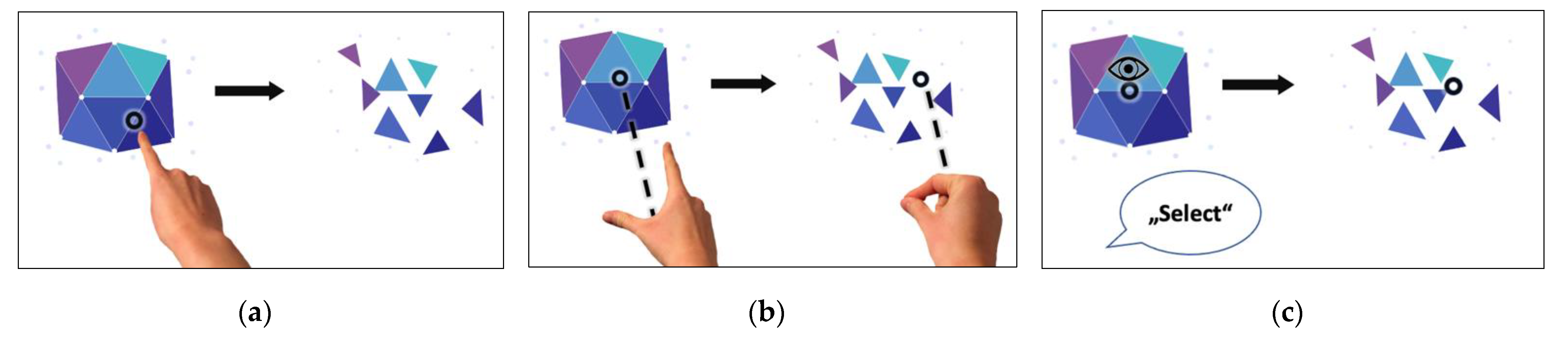

2.4.2. Efficiency of the AR-Interaction Modes

2.4.3. Interaction Preference in AR

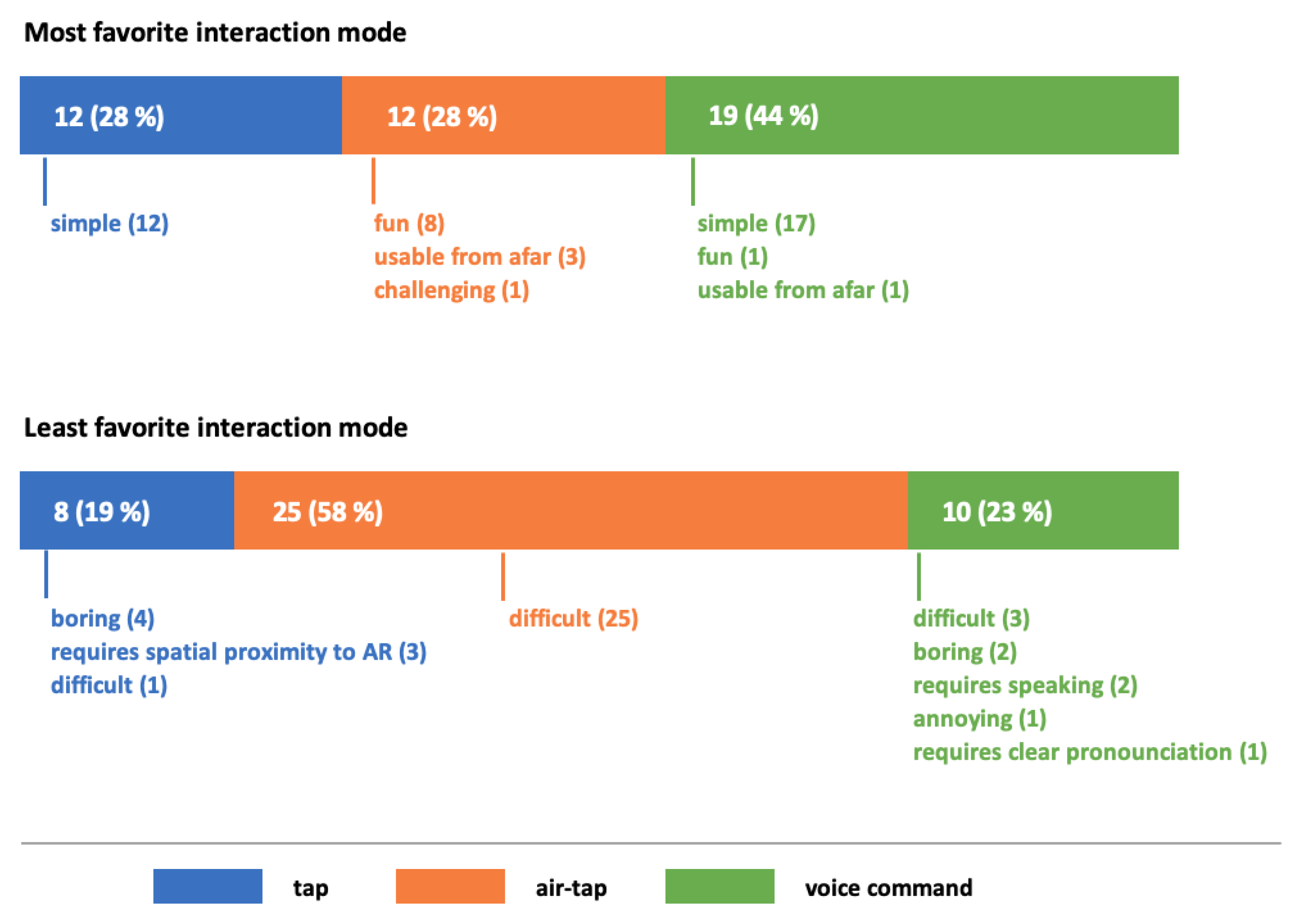

2.4.4. Changes in Activity-Related Achievement Emotions

3. Results

3.1. Overall Device Usability

3.2. Efficiency of the AR-Interaction Modes

3.3. Interaction Preferences in AR

3.4. Changes in Activity-Related Achievement Emotions

4. Discussion

4.1. Overall Device Usability

4.2. Efficiency of the AR-Interaction Modes

4.3. Interaction Preferences in AR

4.4. Activity-Related Achievement Emotions

4.5. General Limitations of the Study

4.6. Practical Application

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Pre-test item, e.g., ‘I am having fun right now.’ | | | | | |
|---|---|---|---|---|---|
| Answer | ‘Totally disagree’ | ‘Rather disagree’ | ‘Neither’ | ‘Rather agree’ | ‘Totally agree’ |
| Pre-Score X | 3 | 4 | 5 | 6 | 7 |

| Post-test item, e.g., ‘How much fun are you having right now compared to before?’ | | | | | |
|---|---|---|---|---|---|
| Answer | ‘Much less’ | ‘A little less’ | ‘Unchanged’ | ‘A little more’ | ‘Much more’ |
| Post-Score Y | X − 2 | X − 1 | X | X + 1 | X + 2 |
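The scoring rule in the Appendix A table can be written as a small function: the pre-test answer fixes X, and the post-test answer shifts it by −2 to +2. This is a minimal sketch, assuming answers are identified by their label text; the function and constant names are hypothetical and not taken from the study materials.

```python
# Answer labels in scale order (left to right in the Appendix A table).
PRE_ANSWERS = ("Totally disagree", "Rather disagree", "Neither", "Rather agree", "Totally agree")
POST_ANSWERS = ("Much less", "A little less", "Unchanged", "A little more", "Much more")


def pre_score(answer: str) -> int:
    """Map a pre-test answer to its score X (3 to 7).

    tuple.index raises ValueError for an unknown label.
    """
    return PRE_ANSWERS.index(answer) + 3


def post_score(x: int, answer: str) -> int:
    """Map a post-test answer to Y = X + delta, where delta runs from -2 to +2."""
    if not 3 <= x <= 7:
        raise ValueError("pre-score X must lie between 3 and 7")
    return x + POST_ANSWERS.index(answer) - 2
```

For example, a child who answers ‘Rather agree’ before (X = 6) and ‘A little more’ afterwards receives Y = 7. Because X spans 3 to 7 and the shift spans −2 to +2, post-scores range from 1 to 9, so a change in either direction remains representable even at the ends of the pre-test scale.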

Appendix B


| Dependent Variable | Mean (SD) |
|---|---|
| mean number of attempts for ‘tap’ | 1.001 (0.508) |
| mean number of attempts for ‘air-tap’ | 2.763 (1.549) |
| mean number of attempts for ‘voice command’ | 1.194 (0.771) |
| mean time [s] for ‘tap’ | 1.200 (0.346) |
| mean time [s] for ‘air-tap’ | 16.047 (13.443) |
| mean time [s] for ‘voice command’ | 3.672 (6.007) |

| Dependent Variable | Compared Interaction Modes | Z | p (Two-Tailed) | r (Cohen) |
|---|---|---|---|---|
| mean number of attempts | tap vs. air-tap | −1.49 | <0.001 *** | 0.227 |
| | tap vs. voice command | 0.22 | 0.306 | |
| | air-tap vs. voice command | 1.27 | <0.001 *** | 0.193 |
| mean time | tap vs. air-tap | −1.95 | <0.001 *** | 0.298 |
| | tap vs. voice command | −1.047 | <0.001 *** | 0.215 |
| | air-tap vs. voice command | 0.907 | <0.001 *** | 0.138 |
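The r column in the table of pairwise comparisons matches the common conversion of a Wilcoxon signed-rank Z statistic into an effect size, r = |Z| / √N. The sketch below assumes that formula and N = 43, the number of children implied by the sign counts (positive + negative + ties) in the emotion table; neither assumption is stated in this section. Under them, four of the five reported r values are reproduced to within rounding of Z, while the mean-time comparison of tap and voice command (r = 0.215) would require a different Z or N.

```python
import math


def wilcoxon_effect_size(z: float, n: int) -> float:
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon signed-rank test."""
    if n <= 0:
        raise ValueError("n must be positive")
    return abs(z) / math.sqrt(n)


# Z values as reported in the table; N = 43 is an assumption (see lead-in).
REPORTED_Z = {
    ("attempts", "tap vs air-tap"): -1.49,
    ("attempts", "air-tap vs voice command"): 1.27,
    ("time", "tap vs air-tap"): -1.95,
    ("time", "tap vs voice command"): -1.047,
    ("time", "air-tap vs voice command"): 0.907,
}

for (variable, pair), z in REPORTED_Z.items():
    print(f"{variable:8} {pair:26} r = {wilcoxon_effect_size(z, 43):.3f}")
```

Taking the absolute value of Z keeps r non-negative, so the sign of Z (which only reflects the order in which the two modes were compared) does not affect the effect size.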

| Dependent Variable | Mean 1 (SD) | Pos. Signs | Neg. Signs | Ties |
|---|---|---|---|---|
| enjoyment-pre | 6.350 (0.613) | 36 | 0 | 7 |
| enjoyment-post | 7.880 (0.981) | | | |
| boredom-pre | 3.370 (0.817) | 2 | 30 | 11 |
| boredom-post | 2.090 (1.250) | | | |
| frustration-pre | 3.050 (0.213) | 1 | 27 | 15 |
| frustration-post | 1.840 (1.022) | | | |
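The sign columns in the emotion table count, for each emotion, how many children's post-score was higher than, lower than, or equal to their pre-score; each row pair sums to 43. A minimal sketch of that count follows, together with an exact two-sided sign test as one simple way to test such counts. The function names are hypothetical, and the sign test is an illustration only: it is not necessarily the procedure used in the study, whose reported Z statistics suggest a Wilcoxon signed-rank test.

```python
from math import comb


def sign_counts(pre, post):
    """Count positive, negative, and tied paired differences (post - pre)."""
    if len(pre) != len(post):
        raise ValueError("pre and post scores must be paired")
    diffs = [b - a for a, b in zip(pre, post)]
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    return pos, neg, len(diffs) - pos - neg


def sign_test_p(pos, neg):
    """Exact two-sided sign test under H0: P(increase) = 0.5; ties are discarded."""
    n = pos + neg
    if n == 0:
        return 1.0
    k = min(pos, neg)
    # Probability of a split at least as extreme as k in one tail.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)
```

Applied to the enjoyment row (36 positive, 0 negative, 7 ties), `sign_test_p(36, 0)` returns 2 / 2³⁶, roughly 2.9 × 10⁻¹¹. Discarding ties follows the usual sign-test convention and mirrors how the table reports ties separately from the signed differences.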

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lauer, L.; Altmeyer, K.; Malone, S.; Barz, M.; Brünken, R.; Sonntag, D.; Peschel, M. Investigating the Usability of a Head-Mounted Display Augmented Reality Device in Elementary School Children. Sensors 2021, 21, 6623. https://doi.org/10.3390/s21196623
