Confidence-Calibrated Human Activity Recognition

Abstract

1. Introduction

2. Related Works

2.1. Wearable Sensor-Based HAR Using Deep Learning

2.2. Calibration of Neural Networks

2.3. Ensembling

3. Methods

3.1. Ensembles and Model Calibration

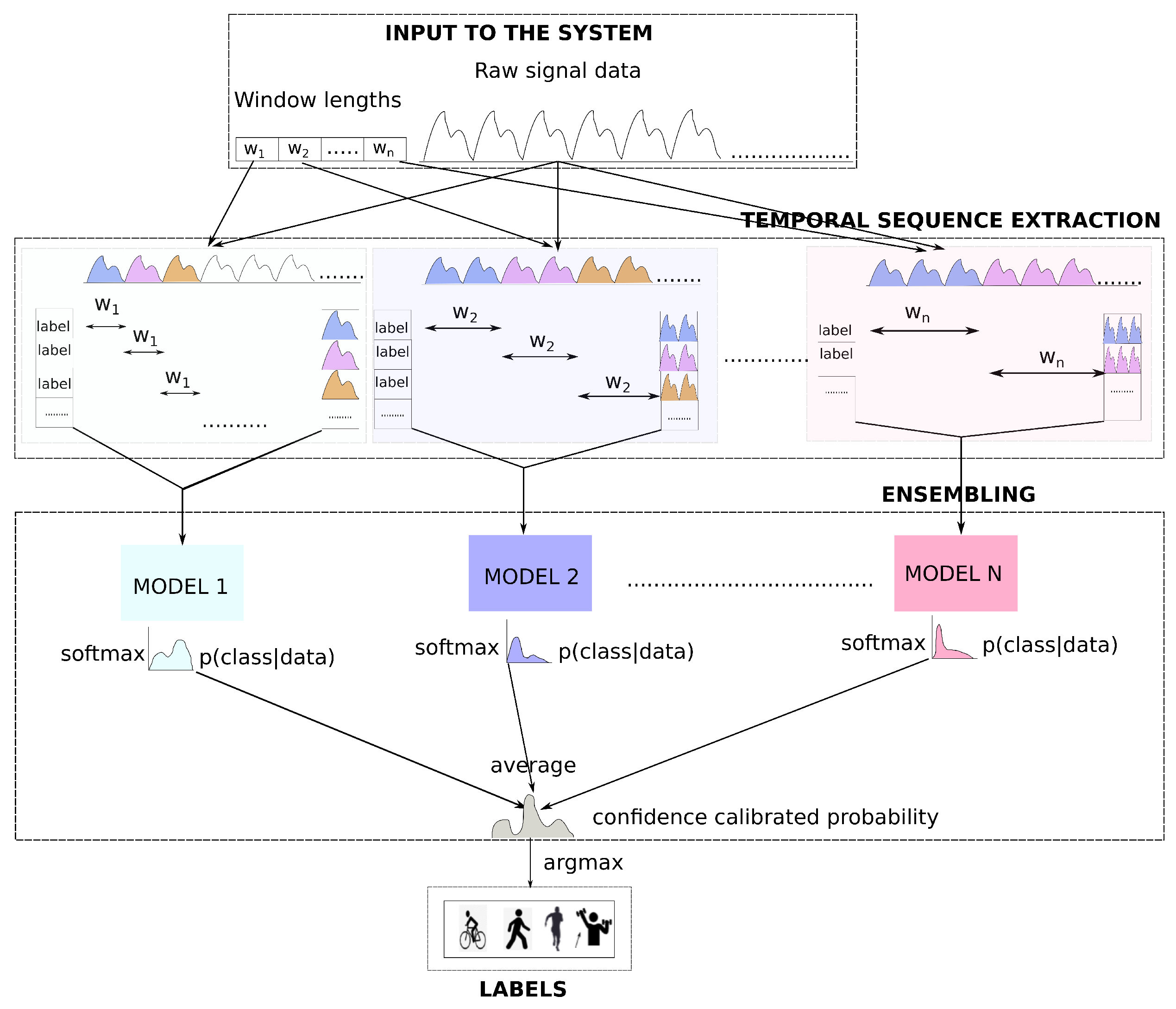

3.2. Deep Time Ensembles

- Extracting temporal sequences from the time series (See temporal sequence extraction module of Figure 1) based on a set of different window sizes.

- Training ensembles based on extracted temporal sequences (See ensembling module of Figure 1).
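The first step above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the helper names `extract_windows` and `extract_multi_scale`, the 20 Hz sampling rate, the 50% overlap, and the random toy signal are all assumptions made for the example.

```python
import numpy as np


def extract_windows(signal, window_len, step):
    """Slice a (time, channels) array into windows of shape (n, window_len, channels)."""
    n_steps, n_channels = signal.shape
    if window_len > n_steps:
        # Signal is shorter than one window: return an empty batch.
        return np.empty((0, window_len, n_channels), dtype=signal.dtype)
    starts = np.arange(0, n_steps - window_len + 1, step)
    return np.stack([signal[s:s + window_len] for s in starts])


def extract_multi_scale(signal, sampling_rate_hz, window_sizes_s, overlap=0.5):
    """Return one batch of temporal sequences per window size (in seconds)."""
    sequences = {}
    for size_s in window_sizes_s:
        window_len = int(round(size_s * sampling_rate_hz))
        # Hypothetical choice: consecutive windows share `overlap` of their length.
        step = max(1, int(round(window_len * (1.0 - overlap))))
        sequences[size_s] = extract_windows(signal, window_len, step)
    return sequences


# Toy tri-axial signal: 1000 time steps, 3 channels (values are random, not real data).
rng = np.random.default_rng(0)
toy_signal = rng.normal(size=(1000, 3))
windows_by_size = extract_multi_scale(
    toy_signal, sampling_rate_hz=20, window_sizes_s=[6, 7, 8, 9, 10]
)
for size_s, batch in windows_by_size.items():
    print(size_s, batch.shape)
```

Each entry of `windows_by_size` would then feed one ensemble member, so shorter windows produce more training sequences than longer ones from the same recording.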

Algorithm 1. Deep Time Ensemble: Training

Algorithm 2. Deep Time Ensemble: Evaluation/Prediction

- Dissimilar activities are associated with different time windows rather than the single fixed window used in most earlier works. This observation led to DTE, which ensembles different temporal representations of the same input signal.

- The ensembled model also calibrates the predictive output. This in turn results in predicted confidences that better represent the true likelihood.
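As a rough illustration of how an ensemble can soften overconfident members, the sketch below averages per-member softmax outputs, as is common for deep ensembles [35]. Averaging is an assumption here, since the combination rule is not spelled out in this text; the function name `ensemble_predict` and the toy probabilities are hypothetical.

```python
import numpy as np


def ensemble_predict(member_probs):
    """Combine member outputs of shape (members, samples, classes) by averaging.

    Returns the predicted labels, the confidence of each prediction,
    and the averaged class probabilities.
    """
    probs = np.asarray(member_probs, dtype=float)
    mean_probs = probs.mean(axis=0)  # average over ensemble members
    return mean_probs.argmax(axis=1), mean_probs.max(axis=1), mean_probs


# Toy example: three members, two samples, two classes (made-up numbers).
toy_member_probs = [
    [[0.9, 0.1], [0.4, 0.6]],
    [[0.6, 0.4], [0.7, 0.3]],
    [[0.9, 0.1], [0.1, 0.9]],
]
toy_labels, toy_confidence, toy_mean = ensemble_predict(toy_member_probs)
print(toy_labels, toy_confidence)
```

In the toy example the members disagree on the second sample, and the averaged confidence (0.6) is lower than that of the most confident member (0.9), which is the kind of moderation that tends to help calibration.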

4. Evaluation

- How does DTE fare in calibrating neural network models?

- How does DTE compare with the popular temperature-scaling [14] method of calibration?

- How does DTE compare with the previous work in the downstream classification task?
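The calibration questions above are measured with the Expected Calibration Error (ECE). The sketch below shows the usual equal-width binning estimate described in [14]; the bin count of 10, the function name, and the toy inputs are assumptions for illustration, not the settings used in the experiments.

```python
import numpy as np


def expected_calibration_error(confidences, predictions, labels, n_bins=10):
    """Weighted average of |accuracy - confidence| over equal-width confidence bins."""
    confidences = np.asarray(confidences, dtype=float)
    correct = (np.asarray(predictions) == np.asarray(labels)).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lower, upper in zip(edges[:-1], edges[1:]):
        in_bin = (confidences > lower) & (confidences <= upper)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.mean() * gap  # in_bin.mean() is the fraction of samples in the bin
    return ece


# Toy example with four predictions (made-up values).
toy_ece = expected_calibration_error(
    confidences=[0.95, 0.95, 0.55, 0.55],
    predictions=[1, 1, 0, 0],
    labels=[1, 1, 0, 1],
)
print(round(toy_ece, 4))
```

A perfectly calibrated model would give an ECE of zero; lower values indicate that confidence tracks accuracy more closely.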

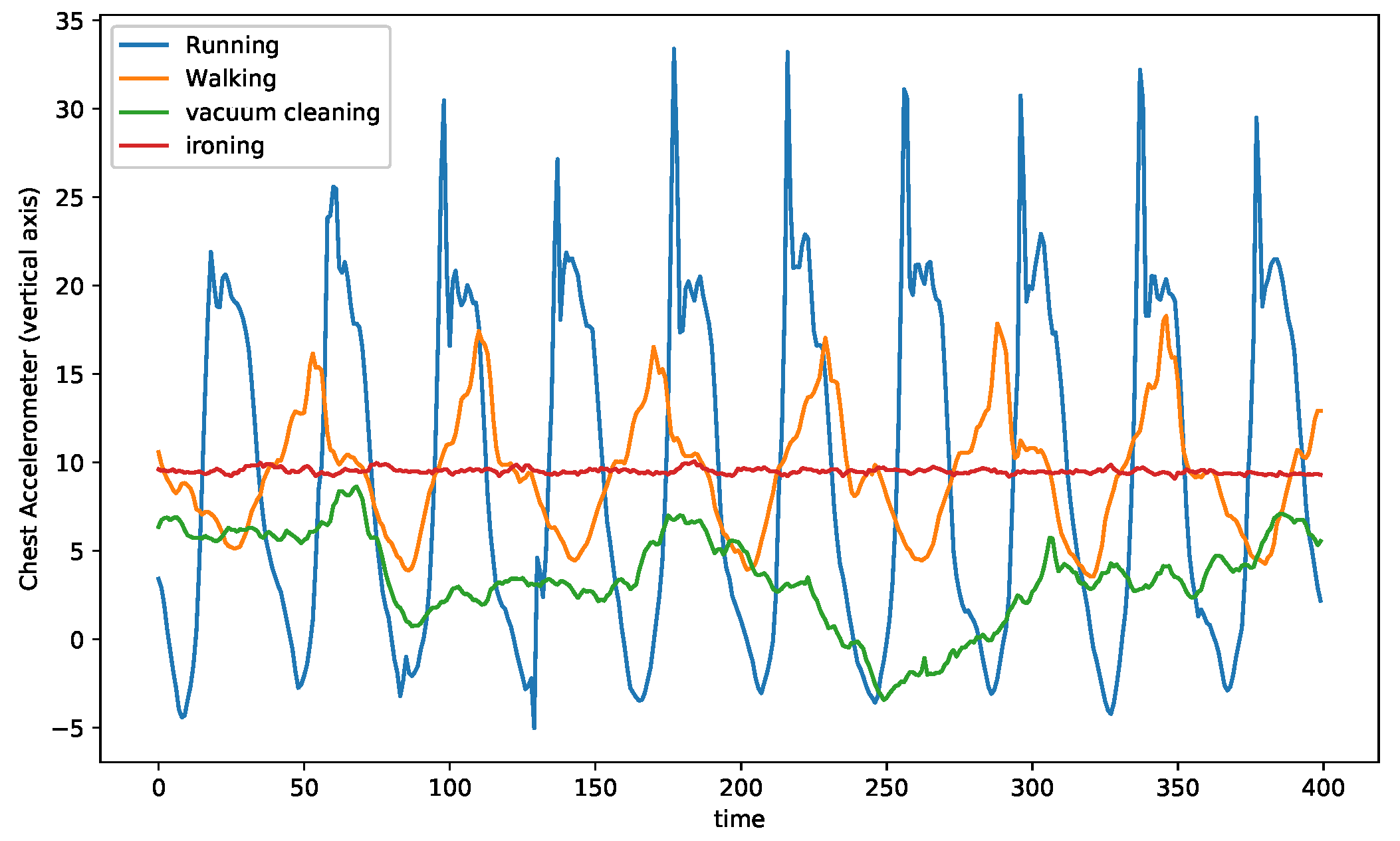

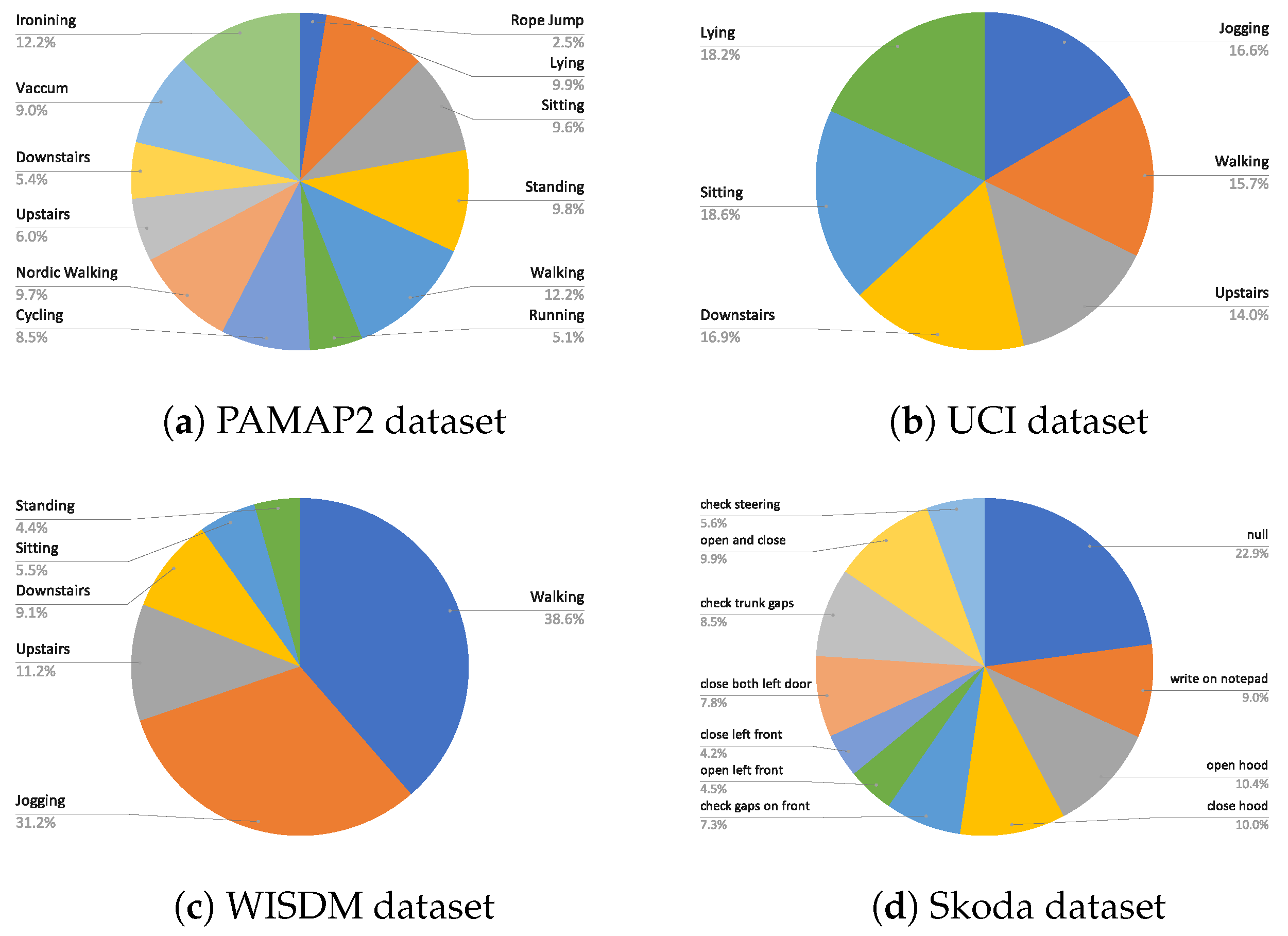

4.1. Datasets

- WISDM dataset: The WISDM dataset [10] consists of 36 subjects and 6 activities, namely standing, sitting, downstairs, upstairs, walking, and jogging. The activities were recorded with a triaxial accelerometer. The training, validation, and evaluation splits for the WISDM dataset are adopted from [20,29]. Users 1–24 form the training data, users 24 and 25 form the validation data, and users 26–36 are used for testing. After preprocessing and windowing of the test split, 3026 test samples are obtained for evaluation.

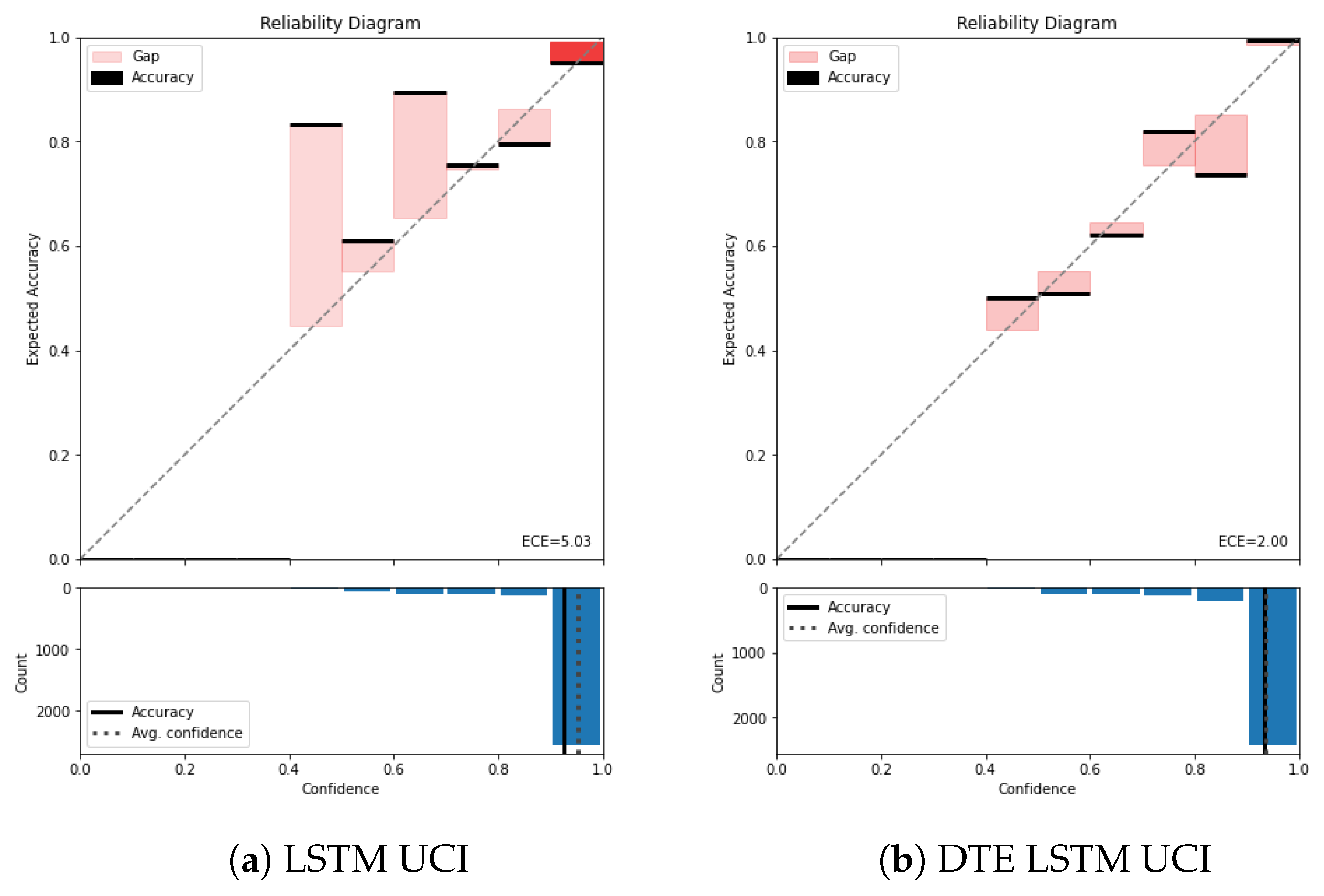

- UCI dataset: The UCI dataset [11] is a public dataset consisting of six activities (lying, standing, sitting, downstairs, upstairs, and walking) recorded from 30 subjects. The dataset was recorded with a triaxial accelerometer and a triaxial gyroscope, resulting in six dimensions. As with the WISDM dataset, the training, validation, and testing split of this dataset was adopted from [20]. After preprocessing and windowing, the number of test samples for evaluation is 2993.

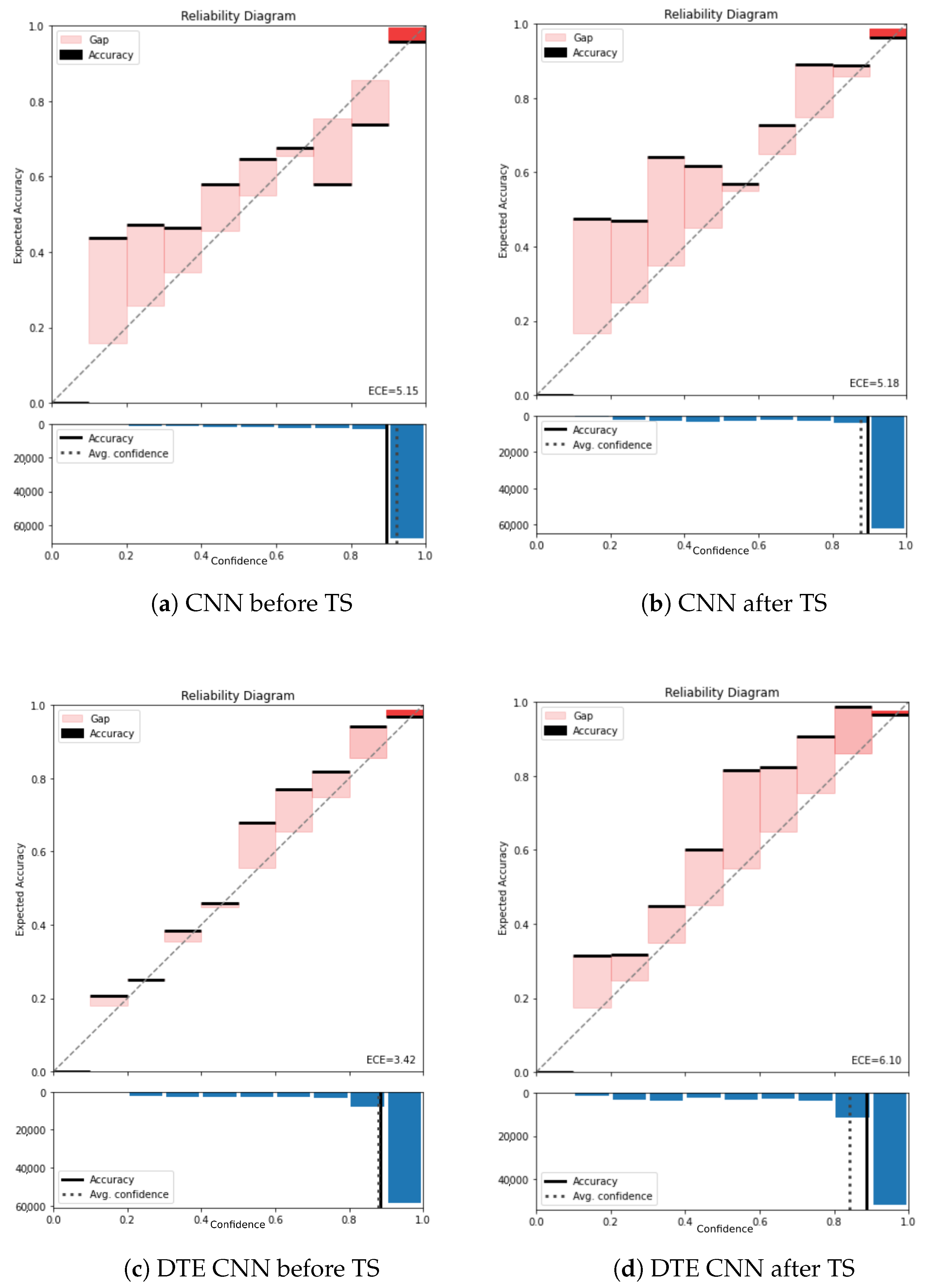

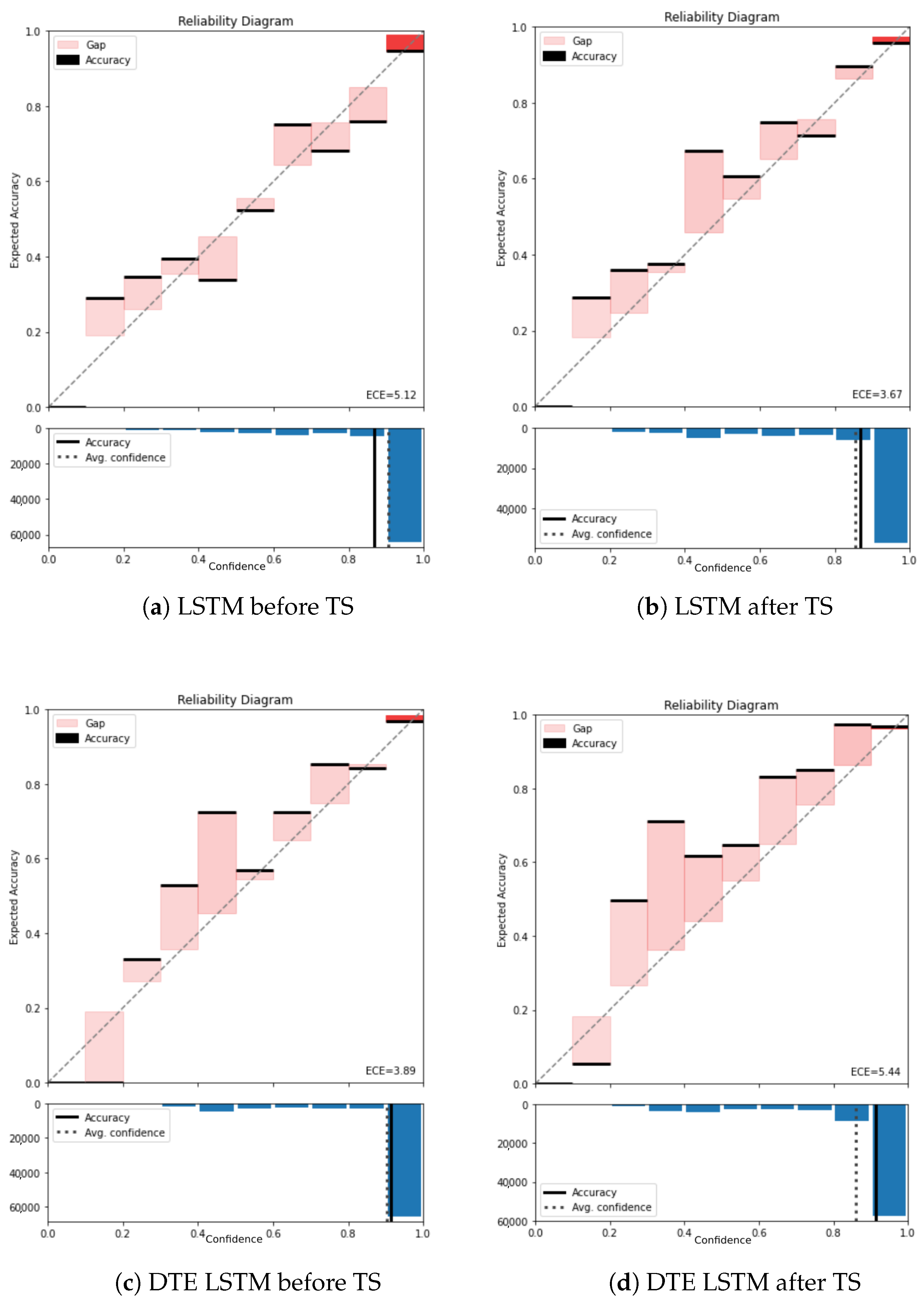

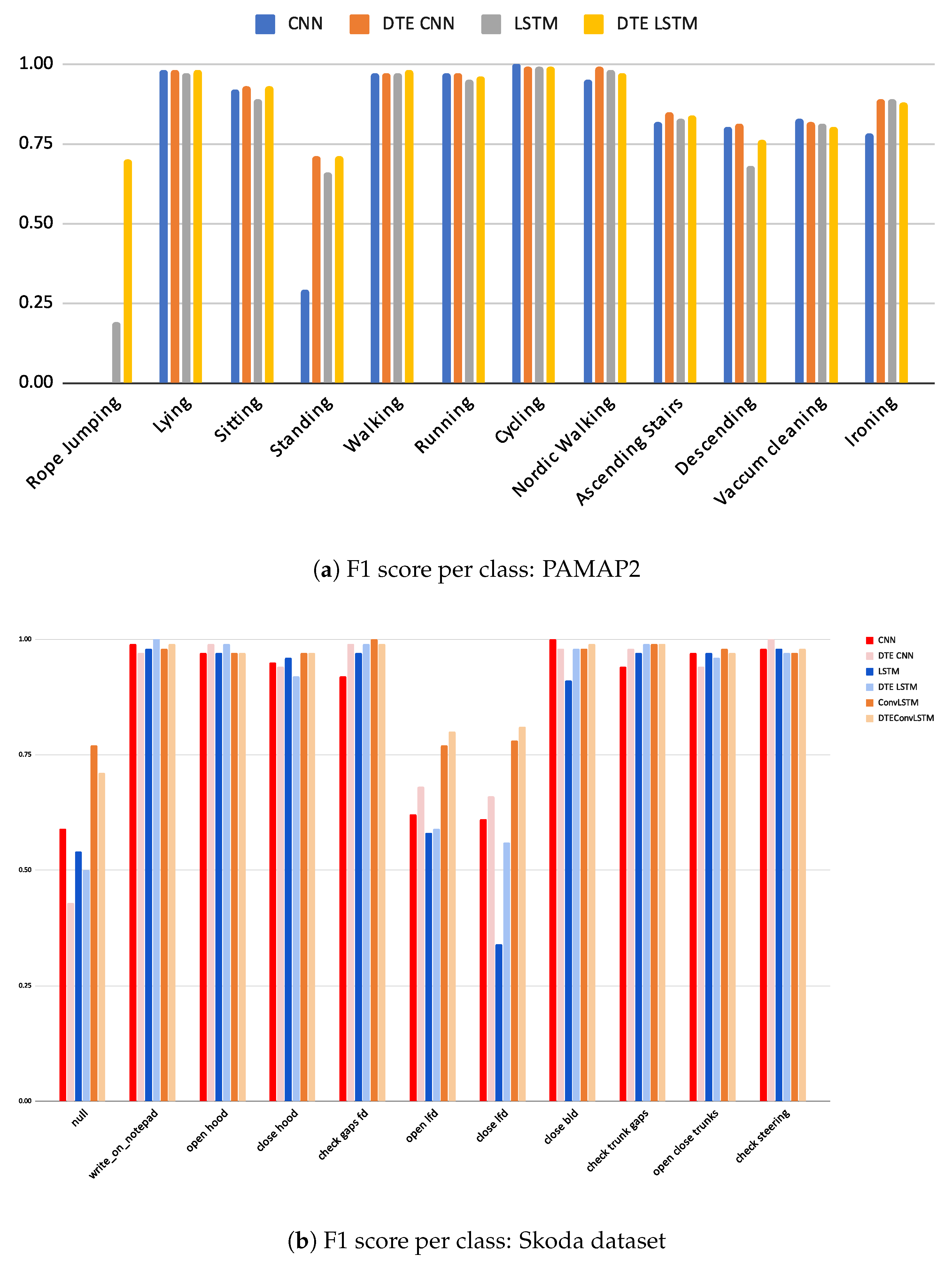

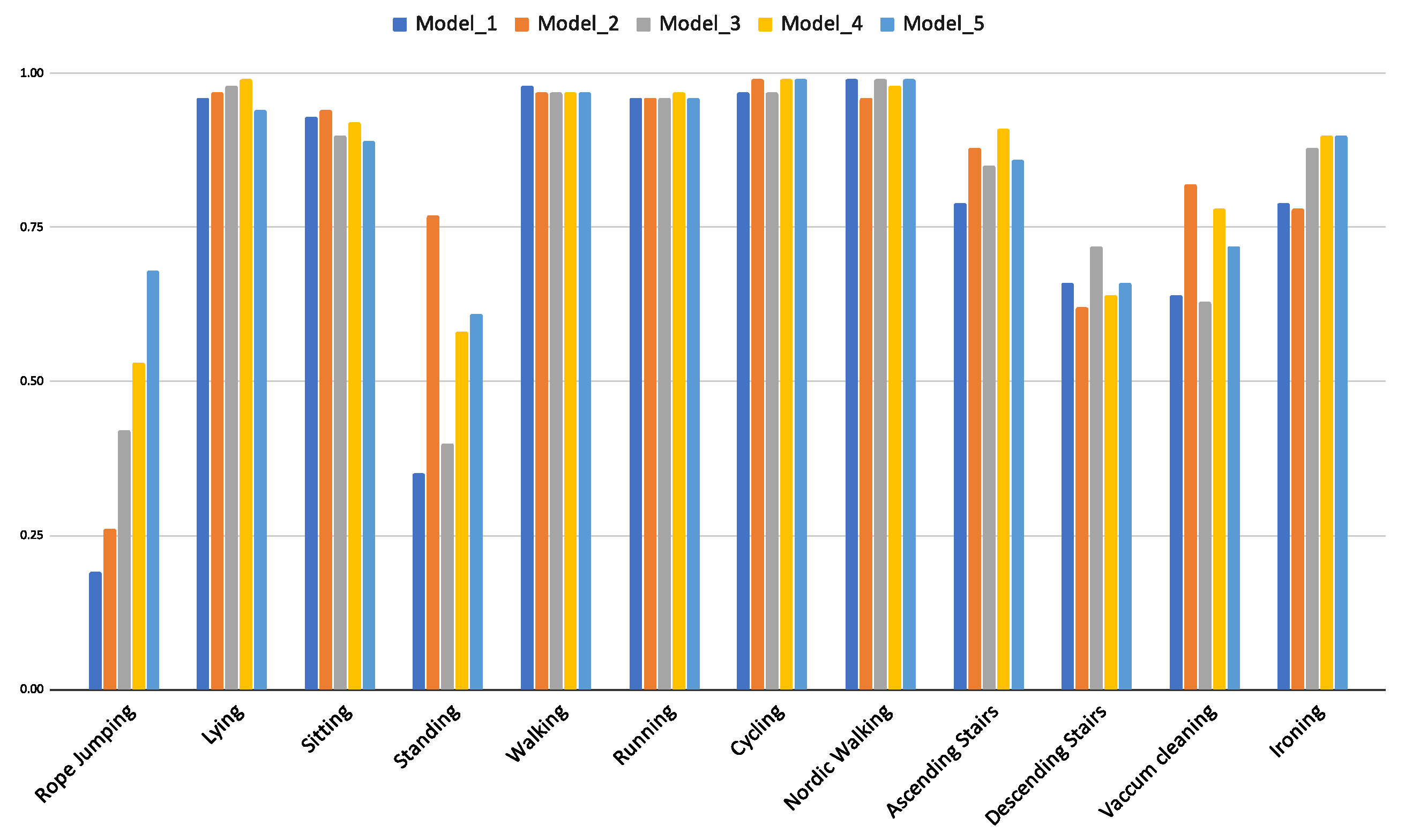

- PAMAP2 dataset: The PAMAP2 dataset [12] consists of 12 activities recorded from nine subjects for over 10 h. It covers sporting activities, activities of daily life, and other domestic activities. It includes a wide array of multivariate sensor data (accelerometer, gyroscope, magnetometer, heart rate, etc.), resulting in 52 dimensions. The training, testing, and validation sets were extracted following the protocol of [30]. Runs 1 and 2 from subject 5 form the validation set, and runs 1 and 2 from subject 6 form the testing set. The rest of the data were used for training. Guan et al. [6] performed a thorough sample-wise evaluation on the PAMAP2 dataset in their work. To accommodate a similar evaluation strategy, testing samples with complete overlap are extracted from the testing set. This gives 83 K samples for testing. These samples are used to compare our method against both [6] and [30].

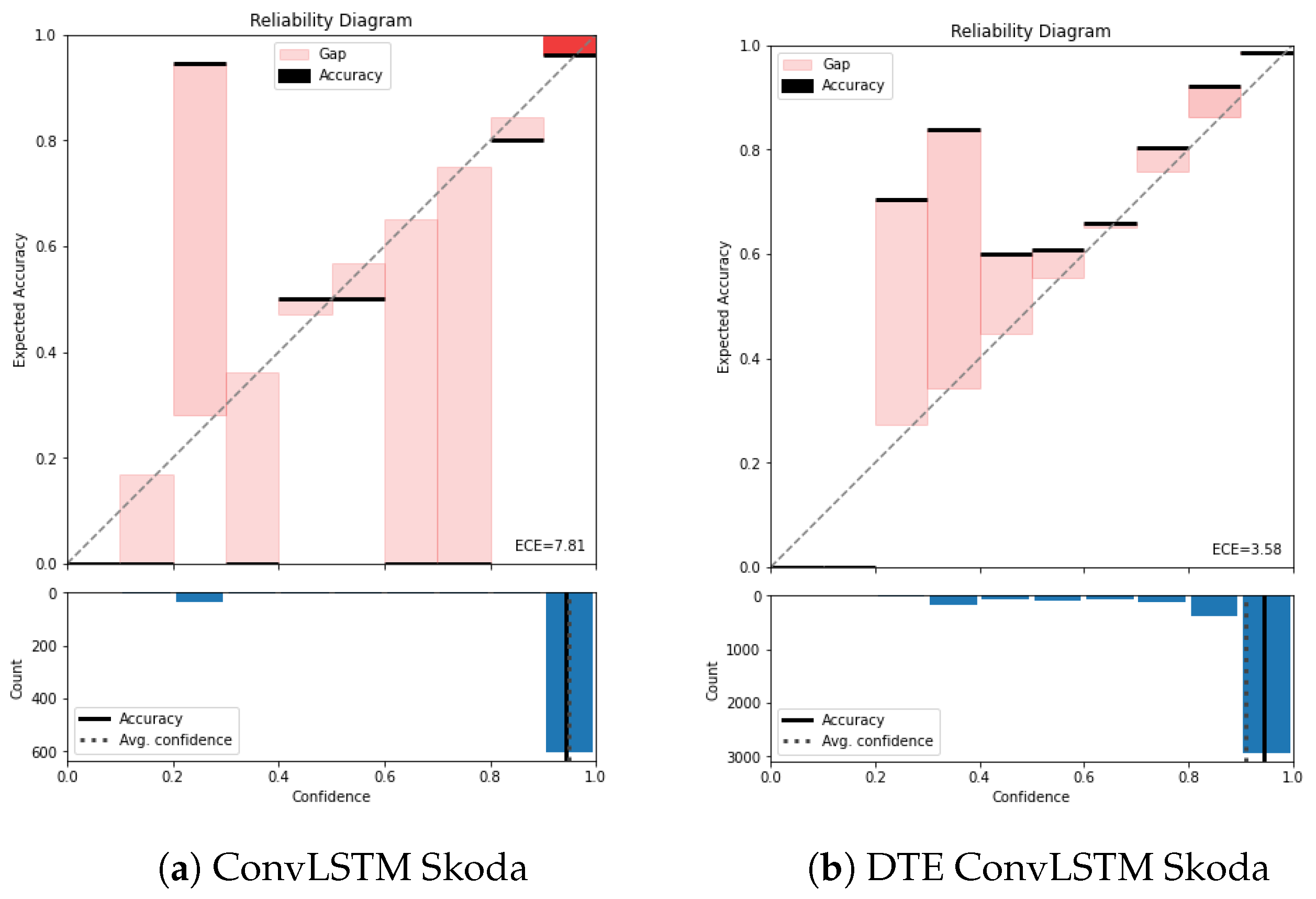

- Skoda dataset: The Skoda dataset [13] comprises 10 manipulative gestures/activities of a factory worker on the assembly line of a car manufacturing process. The worker wore 20 3D accelerometer sensors. The training/validation/testing splits of the Skoda dataset are adopted from [21]. To create test samples, the same overlap as in training is assumed.
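The subject-wise splits described for the datasets above can be expressed as a small helper. This is a sketch under stated assumptions: the function name `split_by_subject` and the toy subject IDs are hypothetical, and the disjointness check is an added safeguard rather than part of the cited protocols.

```python
import numpy as np


def split_by_subject(subject_ids, train_subjects, val_subjects, test_subjects):
    """Return sample indices per split so that no subject appears in two splits."""
    groups = {
        "train": set(train_subjects),
        "val": set(val_subjects),
        "test": set(test_subjects),
    }
    names = list(groups)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            shared = groups[first] & groups[second]
            if shared:
                raise ValueError(
                    f"subjects {sorted(shared)} appear in both {first} and {second}"
                )
    subject_ids = np.asarray(subject_ids)
    return {
        name: np.flatnonzero(np.isin(subject_ids, sorted(members)))
        for name, members in groups.items()
    }


# Toy example: six samples recorded from four subjects (made-up IDs).
toy_subject_ids = [1, 1, 2, 3, 3, 4]
toy_split = split_by_subject(
    toy_subject_ids, train_subjects=[1, 2], val_subjects=[3], test_subjects=[4]
)
print({name: idx.tolist() for name, idx in toy_split.items()})
```

Splitting by subject rather than by sample keeps windows from the same person out of both training and testing, which would otherwise inflate the reported scores.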

4.2. Model Configuration

4.3. Calibration Results

4.3.1. ECE and Reliability Diagrams

4.3.2. Binwise Calibration

4.3.3. Comparison with Temperature Scaling

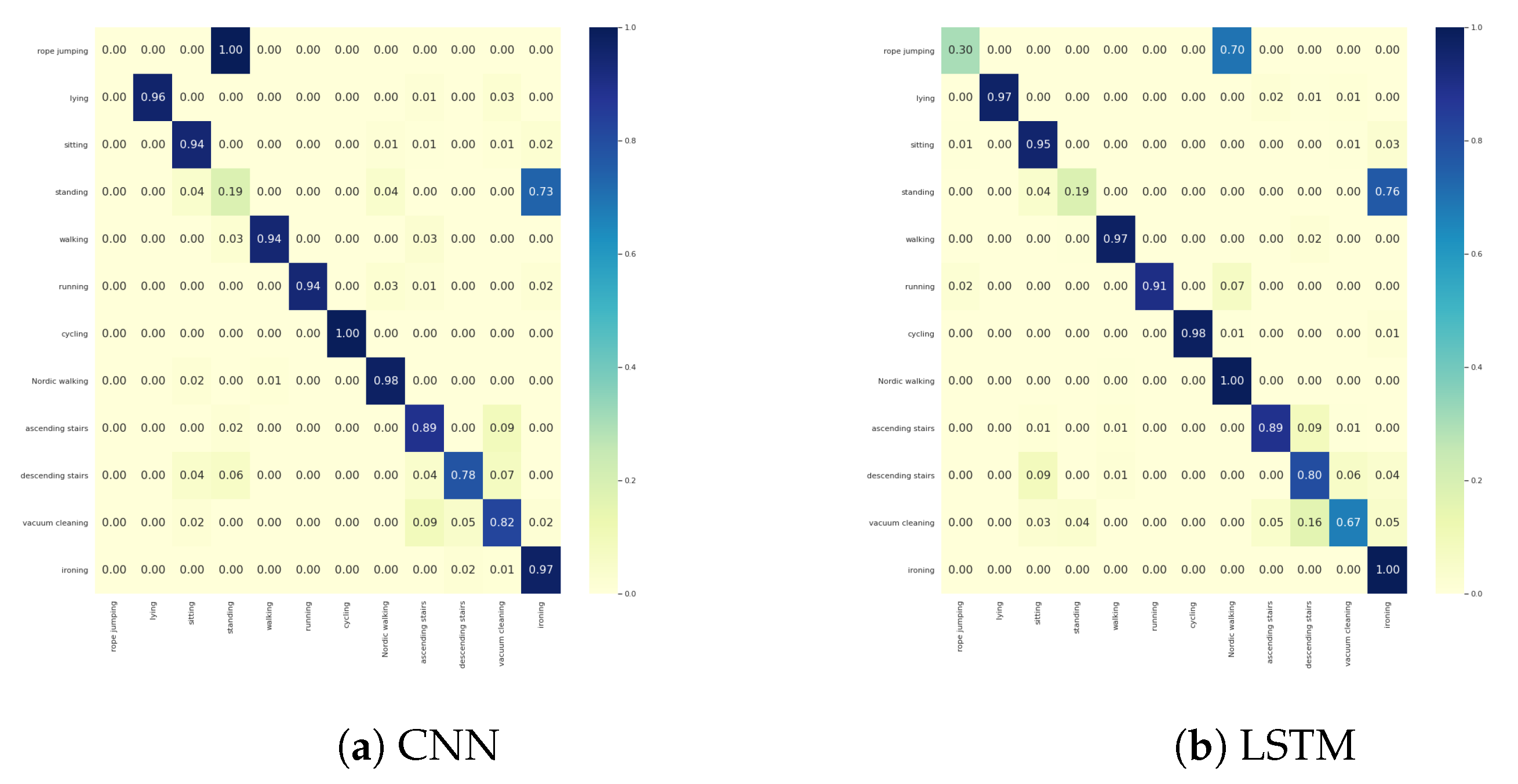
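For reference, temperature scaling [14] divides the logits by a single scalar T fitted on validation data before the softmax. The sketch below fits T by a simple grid search over the validation negative log-likelihood; the grid search is a simplification chosen for brevity (the original method uses gradient-based optimization), and the function names, grid range, and toy logits are assumptions.

```python
import numpy as np


def softmax(logits, temperature=1.0):
    """Row-wise softmax of logits divided by a temperature."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=1, keepdims=True)  # subtract the row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def negative_log_likelihood(logits, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    labels = np.asarray(labels)
    probs = softmax(logits, temperature)
    return -np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12))


def fit_temperature(val_logits, val_labels, grid=None):
    """Pick the temperature on a grid that minimizes validation NLL."""
    if grid is None:
        grid = np.linspace(0.05, 10.0, 200)  # hypothetical search range
    losses = [negative_log_likelihood(val_logits, val_labels, t) for t in grid]
    return float(grid[int(np.argmin(losses))])


# Toy validation set: an overconfident model that is right 3 times out of 4.
toy_logits = np.array([[8.0, 0.0], [8.0, 0.0], [8.0, 0.0], [0.0, 8.0]])
toy_labels = np.array([0, 0, 0, 0])
fitted_t = fit_temperature(toy_logits, toy_labels)
calibrated_confidence = softmax(toy_logits, fitted_t).max(axis=1)
print(fitted_t, calibrated_confidence)
```

Because dividing by T does not change the ordering of the logits, temperature scaling leaves the predicted class, and therefore accuracy, unchanged; only the confidence values move.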

4.4. Classification Results

4.5. Comparison with Standard Ensemble Models

4.6. Window-Size Selection

5. Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AR | Activity Recognition |

| HAR | Human Activity Recognition |

| DTE | Deep Time Ensembles |

| CNN | Convolutional Neural Networks |

| LSTM | Long Short Term Memory |

| ECE | Expected Calibration Error |

Appendix A. Details of Implementation

| Category | Configuration |

|---|---|

| Window-sizes (s) | [5, 6, 7, 8, 9] |

| Batch size | 64 |

| Optimizer | Adam |

| Learning rate | 0.00001 |

| L2 regularization | 0.005 |

| Convolutional layer 1 | filters: 128 filter size: 9 stride: 1 padding: valid activation: ReLU |

| Pooling layer | function: max size: 2 stride: 2 |

| Dropout rate | 0.1 |

| Convolutional layer 2 | filters: 128 filter size: 5 stride: 1 padding: valid activation: ReLU |

| Pooling layer | function: max size: 2 stride: 2 |

| Dropout rate | 0.25 |

| Flatten and Dense layers | neurons: 512 activation: ReLU weight reg: L2 |

| Dropout rate | 0.5 |

| Fully connected layer | neurons: number of classes activation: Softmax |
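The layer settings in the table above fix how the temporal length shrinks through the network, since a convolution or pooling layer without padding maps an input of length n to floor((n - k) / s) + 1 for kernel size k and stride s. The sketch below applies that arithmetic to the two convolution and pooling stages listed; the input length of 165 time steps is a made-up example, and treating the pooling layers as unpadded is an assumption, as the table does not state it.

```python
def valid_output_length(input_length, kernel_size, stride=1):
    """Output length of an unpadded ('valid') 1D convolution or pooling layer."""
    if input_length < kernel_size:
        raise ValueError("input is shorter than the kernel")
    return (input_length - kernel_size) // stride + 1


def cnn_feature_length(input_length):
    """Temporal length after conv(9) -> pool(2, 2) -> conv(5) -> pool(2, 2)."""
    n = valid_output_length(input_length, kernel_size=9, stride=1)  # convolutional layer 1
    n = valid_output_length(n, kernel_size=2, stride=2)             # max pooling
    n = valid_output_length(n, kernel_size=5, stride=1)             # convolutional layer 2
    n = valid_output_length(n, kernel_size=2, stride=2)             # max pooling
    return n


# Hypothetical window of 165 time steps; 128 filters in the last convolutional layer.
toy_length = cnn_feature_length(165)
flattened_units = toy_length * 128
print(toy_length, flattened_units)
```

Since each ensemble member sees a different window size, the flattened feature size, and hence the size of the first dense layer's weight matrix, differs from member to member.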

| Category | Configuration |

|---|---|

| Window-sizes (s) | [5, 6, 7, 8, 9] |

| Batch size | 64 |

| Optimizer | Adam |

| Learning rate | 0.001 |

| Dropout rate (before LSTM and Dense layers) | 0.5 |

| 2 LSTM layers | neurons: 256 |

| Fully connected layer | neurons: number of classes activation: Softmax |

| Category | Configuration |

|---|---|

| Window sizes (s) | [1.5, 2, 2.5, 3, 3.5] |

| Batch size | 200 |

| Optimizer | Adam |

| Learning rate | 0.0005 |

| L2 regularization | 0.0005 |

| Dropout rate | 0.25 |

| Convolutional layer | filters: 196 filter size: 16 stride: 1 padding: valid activation: ReLU bias init: 0.01 kernel init: TruncNorm(stddev = 0.01) |

| Pooling layer | function: max size: 4 |

| Fully connected layer | neurons: 1024 activation: Softmax bias init: 0.01 weight init: TruncNorm(stddev = 0.01) kernel reg: L2 |

| Category | Configuration |

|---|---|

| Window sizes (s) | [1.5, 2, 2.5, 3, 3.5] |

| Batch size | 200 |

| Optimizer | Adam |

| Learning rate | 0.001 |

| LSTM layer 1 | neurons: 128 |

| LSTM layer 2 | neurons: 128 return sequence: True |

| Fully connected layer | neurons: number of classes activation: Softmax |

| Category | Configuration |

|---|---|

| Window sizes (s) | [6, 7, 8, 9, 10] |

| Batch size | 200 |

| Optimizer | Adam |

| Learning rate | 0.0005 |

| L2 regularization | 0.0005 |

| Dropout rate | 0.25 |

| Convolutional layer | filters: 196 filter size: 16 stride: 1 padding: valid activation: ReLU bias init: 0.01 kernel init: TruncNorm(stddev = 0.01) |

| Pooling layer | function: max size: 4 |

| Fully connected layer | neurons: 1024 activation: Softmax bias init: 0.01 weight init: TruncNorm(stddev = 0.01) kernel reg: L2 |

| Category | Configuration |

|---|---|

| Window sizes (s) | [6, 7, 8, 9, 10] |

| Batch size | 64 |

| Optimizer | Adam |

| Learning rate | 0.0025 |

| Learning loss rate | 0.0015 |

| 2 LSTM layers | neurons: 30 bias init: 1 |

| Fully connected layer | neurons: number of classes activation: Softmax |

Skoda DTE ConvLSTM model configuration

| Parameter | Configuration |
|---|---|
| Window sizes (s) | [4, 4.5, 5, 5.5, 6] |
| Batch size | 100 |
| Optimizer | RMSProp |
| Learning rate | 0.001 |
| Decay | 0.9 |
| Dropout rate (before LSTM and Dense layers) | 0.5 |
| 3 Convolutional layers | filters: 64 filter size: 5 activation: ReLU |
| 2 LSTM layers | neurons: 128 |
| Fully connected layer | neurons: number of classes activation: Softmax |

Appendix B. Calibration Results

Appendix B.1. Calibration Results with Temperature Scaling

Appendix B.2. Classification Results

Appendix C. Number of Ensemble Models

References

1. Rassem, A.; El-Beltagy, M.; Saleh, M. Cross-country skiing gears classification using deep learning. arXiv 2017, arXiv:1706.08924.
2. Avci, A.; Bosch, S.; Marin-Perianu, M.; Marin-Perianu, R.; Havinga, P. Activity recognition using inertial sensing for healthcare, wellbeing and sports applications: A survey. In Proceedings of the 23th International Conference on Architecture of Computing Systems 2010, VDE, Hannover, Germany, 22–23 February 2010; pp. 1–10.
3. Zhang, M.; Sawchuk, A.A. USC-HAD: A daily activity dataset for ubiquitous activity recognition using wearable sensors. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 1036–1043.
4. Hong, Y.J.; Kim, I.J.; Ahn, S.C.; Kim, H.G. Activity recognition using wearable sensors for elder care. In Proceedings of the 2008 Second International Conference on Future Generation Communication and Networking, Hainan, China, 13–15 December 2008; Volume 2, pp. 302–305.
5. Gal, Y.; Ghahramani, Z. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 1050–1059.
6. Guan, Y.; Plötz, T. Ensembles of deep lstm learners for activity recognition using wearables. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–28.
7. Kumar, A.; Liang, P.; Ma, T. Verified uncertainty calibration. arXiv 2019, arXiv:1909.10155.
8. Dietterich, T.G. Ensemble methods in machine learning. In Lecture Notes in Computer Science, Proceedings of the International Workshop on Multiple Classifier Systems, Cagliari, Italy, 21–23 June 2000; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.
9. Seo, S.; Seo, P.H.; Han, B. Learning for single-shot confidence calibration in deep neural networks through stochastic inferences. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9030–9038.
10. Weiss, G.M.; Yoneda, K.; Hayajneh, T. Smartphone and smartwatch-based biometrics using activities of daily living. IEEE Access 2019, 7, 133190–133202.
11. Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A public domain dataset for human activity recognition using smartphones. Esann 2013, 3, 3.
12. Reiss, A.; Stricker, D. Introducing a new benchmarked dataset for activity monitoring. In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; pp. 108–109.
13. Stiefmeier, T.; Roggen, D.; Ogris, G.; Lukowicz, P.; Tröster, G. Wearable activity tracking in car manufacturing. IEEE Pervasive Comput. 2008, 7, 42–50.
14. Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1321–1330.
15. Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Lecture Notes in Computer Science, Proceedings of the International Conference on Pervasive Computing, Linz/Vienna, Austria, 21–23 April 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1–17.
16. Casale, P.; Pujol, O.; Radeva, P. Human activity recognition from accelerometer data using a wearable device. In Lecture Notes in Computer Science, Proceedings of the Iberian Conference on Pattern Recognition and Image Analysis, Las Palmas de Gran Canaria, Spain, 8–10 June 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 289–296.
17. Feng, Z.; Mo, L.; Li, M. A Random Forest-based ensemble method for activity recognition. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5074–5077.
18. He, Z.; Jin, L. Activity recognition from acceleration data based on discrete consine transform and SVM. In Proceedings of the 2009 IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 11–14 October 2009; pp. 5041–5044.
19. He, Z.Y.; Jin, L.W. Activity recognition from acceleration data using AR model representation and SVM. In Proceedings of the 2008 International Conference on Machine Learning and Cybernetics, Kunming, China, 12–15 July 2008; Volume 4, pp. 2245–2250.
20. Ignatov, A. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. 2018, 62, 915–922.
21. Ordóñez, F.J.; Roggen, D. Deep convolutional and lstm recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.
22. Yao, S.; Hu, S.; Zhao, Y.; Zhang, A.; Abdelzaher, T. Deepsense: A unified deep learning framework for time-series mobile sensing data processing. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 351–360.
23. Ishimaru, S.; Hoshika, K.; Kunze, K.; Kise, K.; Dengel, A. Towards reading trackers in the wild: Detecting reading activities by EOG glasses and deep neural networks. In Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 11–15 September 2017; pp. 704–711.
24. Jiang, W.; Yin, Z. Human activity recognition using wearable sensors by deep convolutional neural networks. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 1307–1310.
25. Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
26. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.
27. Murad, A.; Pyun, J.Y. Deep recurrent neural networks for human activity recognition. Sensors 2017, 17, 2556.
28. Wang, L. Recognition of human activities using continuous autoencoders with wearable sensors. Sensors 2016, 16, 189.
29. Agarwal, P.; Alam, M. A lightweight deep learning model for human activity recognition on edge devices. Procedia Comput. Sci. 2020, 167, 2364–2373.
30. Hammerla, N.Y.; Halloran, S.; Plötz, T. Deep, convolutional, and recurrent models for human activity recognition using wearables. arXiv 2016, arXiv:1604.08880.
31. Platt, J. Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Adv. Large Margin Classif. 1999, 10, 61–74.
32. Zadrozny, B.; Elkan, C. Obtaining calibrated probability estimates from decision trees and naive bayesian classifiers. ICML Citeseer 2001, 1, 609–616.
33. Zadrozny, B.; Elkan, C. Transforming classifier scores into accurate multiclass probability estimates. In Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Edmonton, AB, Canada, 23–26 July 2002; pp. 694–699.
34. Naeini, M.P.; Cooper, G.; Hauskrecht, M. Obtaining well calibrated probabilities using bayesian binning. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29.
35. Lakshminarayanan, B.; Pritzel, A.; Blundell, C. Simple and scalable predictive uncertainty estimation using deep ensembles. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6402–6413.
36. Song, Y.; Viventi, J.; Wang, Y. Diversity encouraged learning of unsupervised LSTM ensemble for neural activity video prediction. arXiv 2016, arXiv:1611.04899.
37. Beluch, W.H.; Genewein, T.; Nürnberger, A.; Köhler, J.M. The power of ensembles for active learning in image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9368–9377.
38. DeGroot, M.H.; Fienberg, S.E. The comparison and evaluation of forecasters. J. R. Stat. Soc. Ser. D (The Stat.) 1983, 32, 12–22.
39. Reliability Diagrams. Available online: https://github.com/hollance/reliability-diagrams (accessed on 30 April 2021).
40. Naftaly, U.; Intrator, N.; Horn, D. Optimal ensemble averaging of neural networks. Netw. Comput. Neural Syst. 1997, 8, 283–296.
41. Malinin, A.; Mlodozeniec, B.; Gales, M. Ensemble distribution distillation. arXiv 2019, arXiv:1905.00076.
42. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 4489–4497.
43. Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6450–6459.

| Dataset | Previous Works | Architecture | Evaluation | F1-Score (Macro) |
|---|---|---|---|---|
| PAMAP2 | Guan et al. [6] | LSTM | 7 training/2 testing (and validation) | 0.85 |
| PAMAP2 | Hammerla et al. [30] | CNN | 7 training/2 testing (and validation) | 0.83 |
| WISDM | Ignatov et al. [20] | CNN | 26 training/10 testing | 0.90 |
| WISDM | Agarwal et al. [29] | LSTM | 0.7 training/0.3 testing | 0.95 |
| UCI | Ignatov et al. [20] | CNN | 26 training/10 testing | 0.93 |
| Skoda | Adopted from Hammerla et al. [30] | CNN | 0.8 training/0.2 testing | 0.86 |
| Skoda | LSTM-s model of Hammerla et al. [30] | LSTM | 0.8 training/0.2 testing | 0.84 |
| Skoda | Ordonez et al. [21] | ConvLSTM | 0.8 training/0.2 testing | 0.92 |

| Dataset | Time Window (s) | No. of Temporal Sequences (Train) | No. of Test Samples |
|---|---|---|---|
| PAMAP2 | 9 | 9658 | 83,031 |
| PAMAP2 | 8 | 11,268 | 83,031 |
| PAMAP2 | 7 | 13,523 | 83,031 |
| PAMAP2 | 6 | 16,904 | 83,031 |
| PAMAP2 | 5 | 22,541 | 83,031 |
| UCI | 3.5 | 4124 | 2993 |
| UCI | 3 | 5184 | 2993 |
| UCI | 2.5 | 6818 | 2993 |
| UCI | 2 | 8677 | 2993 |
| UCI | 1.5 | 11,774 | 2993 |
| WISDM | 10 | 7367 | 3026 |
| WISDM | 9 | 8230 | 3026 |
| WISDM | 8 | 9303 | 3026 |
| WISDM | 7 | 10,703 | 3026 |
| WISDM | 6 | 12,554 | 3026 |
| Skoda | 6 | 4780 | 23,157 |
| Skoda | 5.5 | 5039 | 23,157 |
| Skoda | 5 | 5327 | 23,157 |
| Skoda | 4.5 | 5484 | 23,157 |
| Skoda | 4 | 5650 | 23,157 |

| Dataset | Architecture | F1 | F1 | Accuracy | ECE |
|---|---|---|---|---|---|
| PAMAP2 | CNN (from Hammerla et al. [30]) | 0.79 ± 0.04 | 0.85 ± 0.03 | 0.86 ± 0.02 | 0.06 ± 0.01 |
| PAMAP2 | DTE CNN | 0.83 ± 0.03 | 0.89 ± 0.01 | 0.89 ± 0.01 | 0.03 ± 0.005 |
| PAMAP2 | LSTM (from Guan et al. [6]) | 0.84 ± 0.02 | 0.85 ± 0.02 | 0.85 ± 0.02 | 0.08 ± 0.02 |
| PAMAP2 | DTE LSTM | 0.89 ± 0.01 | 0.89 ± 0.01 | 0.9 ± 0.009 | 0.04 ± 0.008 |
| UCI | CNN (from Ignatov et al. [20]) | 0.93 ± 0.004 | 0.93 ± 0.004 | 0.94 ± 0.004 | 0.04 ± 0.005 |
| UCI | DTE CNN | 0.94 ± 0.003 | 0.94 ± 0.003 | 0.95 ± 0.003 | 0.02 ± 0.004 |
| UCI | LSTM | 0.91 ± 0.02 | 0.92 ± 0.009 | 0.92 ± 0.008 | 0.04 ± 0.003 |
| UCI | DTE LSTM | 0.94 ± 0.003 | 0.94 ± 0.004 | 0.94 ± 0.004 | 0.02 ± 0.001 |
| WISDM | CNN (from Ignatov et al. [20]) | 0.87 ± 0.01 | 0.91 ± 0.01 | 0.91 ± 0.01 | 0.09 ± 0.007 |
| WISDM | DTE CNN | 0.88 ± 0.01 | 0.93 ± 0.01 | 0.92 ± 0.01 | 0.04 ± 0.008 |
| WISDM | LSTM (from Agarwal et al. [29]) | 0.89 ± 0.01 | 0.9 ± 0.01 | 0.89 ± 0.01 | 0.05 ± 0.009 |
| WISDM | DTE LSTM | 0.91 ± 0.008 | 0.93 ± 0.006 | 0.93 ± 0.007 | 0.03 ± 0.01 |
| Skoda | LSTM (LSTM-s model from [30]) | 0.83 ± 0.02 | 0.88 ± 0.01 | 0.89 ± 0.01 | 0.06 ± 0.003 |
| Skoda | DTE LSTM | 0.86 ± 0.004 | 0.89 ± 0.001 | 0.89 ± 0.001 | 0.03 ± 0.002 |
| Skoda | CNN (from Hammerla et al. [30]) | 0.85 ± 0.01 | 0.88 ± 0.01 | 0.89 ± 0.01 | 0.06 ± 0.003 |
| Skoda | DTE CNN | 0.86 ± 0.002 | 0.89 ± 0.001 | 0.90 ± 0.001 | 0.04 ± 0.002 |
| Skoda | ConvLSTM (from Ordonez et al. [21]) | 0.92 ± 0.02 | 0.95 ± 0.01 | 0.93 ± 0.01 | 0.08 ± 0.003 |
| Skoda | DTE ConvLSTM | 0.93 ± 0.003 | 0.94 ± 0.01 | 0.94 ± 0.004 | 0.03 ± 0.003 |

| Dataset | Architecture | F1 | F1 | Accuracy | ECE |
|---|---|---|---|---|---|
| PAMAP2 | Ensemble CNN | 0.78 ± 0.04 | 0.85 ± 0.04 | 0.86 ± 0.03 | 0.04 ± 0.002 |
| PAMAP2 | DTE CNN | 0.83 ± 0.03 | 0.89 ± 0.01 | 0.89 ± 0.01 | 0.03 ± 0.005 |
| PAMAP2 | Ensemble LSTM | 0.83 ± 0.01 | 0.89 ± 0.02 | 0.88 ± 0.04 | 0.04 ± 0.004 |
| PAMAP2 | DTE LSTM | 0.89 ± 0.01 | 0.89 ± 0.01 | 0.9 ± 0.009 | 0.04 ± 0.008 |
| UCI | Ensemble CNN | 0.93 ± 0.03 | 0.93 ± 0.005 | 0.93 ± 0.004 | 0.03 ± 0.009 |
| UCI | DTE CNN | 0.94 ± 0.003 | 0.94 ± 0.003 | 0.95 ± 0.003 | 0.02 ± 0.004 |
| UCI | Ensemble LSTM | 0.93 ± 0.005 | 0.93 ± 0.007 | 0.93 ± 0.006 | 0.04 ± 0.009 |
| UCI | DTE LSTM | 0.94 ± 0.003 | 0.94 ± 0.004 | 0.94 ± 0.004 | 0.02 ± 0.001 |
| WISDM | Ensemble CNN | 0.86 ± 0.01 | 0.9 ± 0.08 | 0.91 ± 0.06 | 0.04 ± 0.003 |
| WISDM | DTE CNN | 0.88 ± 0.01 | 0.93 ± 0.01 | 0.92 ± 0.01 | 0.04 ± 0.008 |
| WISDM | Ensemble LSTM | 0.9 ± 0.06 | 0.92 ± 0.03 | 0.92 ± 0.04 | 0.04 ± 0.002 |
| WISDM | DTE LSTM | 0.91 ± 0.008 | 0.93 ± 0.006 | 0.93 ± 0.007 | 0.03 ± 0.01 |
| Skoda | Ensemble LSTM | 0.84 ± 0.03 | 0.89 ± 0.01 | 0.89 ± 0.02 | 0.03 ± 0.003 |
| Skoda | DTE LSTM | 0.86 ± 0.004 | 0.89 ± 0.001 | 0.89 ± 0.001 | 0.03 ± 0.002 |
| Skoda | Ensemble CNN | 0.87 ± 0.03 | 0.91 ± 0.02 | 0.91 ± 0.03 | 0.04 ± 0.003 |
| Skoda | DTE CNN | 0.86 ± 0.002 | 0.89 ± 0.001 | 0.90 ± 0.001 | 0.04 ± 0.002 |
| Skoda | Ensemble ConvLSTM | 0.93 ± 0.02 | 0.94 ± 0.01 | 0.95 ± 0.01 | 0.04 ± 0.004 |
| Skoda | DTE ConvLSTM | 0.93 ± 0.003 | 0.94 ± 0.01 | 0.94 ± 0.004 | 0.03 ± 0.003 |

| Dataset | Time-Window Sets (s) | Accuracy | F1 (Macro) | F1 (Average) |
|---|---|---|---|---|
| PAMAP2 | [3, 4, 5, 6, 7] | 0.9 | 0.86 | 0.88 |
| PAMAP2 | [5, 6, 7, 8, 9] | 0.9 | 0.89 | 0.9 |
| PAMAP2 | [8, 9, 10, 11, 12] | 0.87 | 0.82 | 0.85 |
| UCI | [1.5, 2, 2.5, 3, 3.5] | 0.94 | 0.94 | 0.95 |
| UCI | [2, 2.5, 3, 3.5, 4] | 0.93 | 0.91 | 0.92 |
| UCI | [3.5, 4, 4.5, 5, 5.5] | 0.89 | 0.87 | 0.9 |
| WISDM | [3, 4, 5, 6, 7] | 0.89 | 0.86 | 0.89 |
| WISDM | [6, 7, 8, 9, 10] | 0.92 | 0.9 | 0.92 |
| WISDM | [8, 9, 10, 11, 12] | 0.86 | 0.82 | 0.85 |
| Skoda | [2, 2.5, 3, 3.5, 4] | 0.91 | 0.86 | 0.9 |
| Skoda | [4, 4.5, 5, 5.5, 6] | 0.93 | 0.93 | 0.94 |
| Skoda | [6, 6.5, 7, 7.5, 8] | 0.88 | 0.85 | 0.88 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Roy, D.; Girdzijauskas, S.; Socolovschi, S. Confidence-Calibrated Human Activity Recognition. Sensors 2021, 21, 6566. https://doi.org/10.3390/s21196566
