An Improvement of the Fire Detection and Classification Method Using YOLOv3 for Surveillance Systems

Abstract

1. Introduction

- (1) We created a large fire detection dataset covering various fire and flame scenarios (day and night), which will be made publicly available on the Internet. A deep CNN learns important features from large datasets, which helps it predict accurately and mitigates overfitting.

- (2) We propose an improved YOLOv3-based fire detection approach to increase the level of robustness and eliminate the time-consuming process.

- (3)

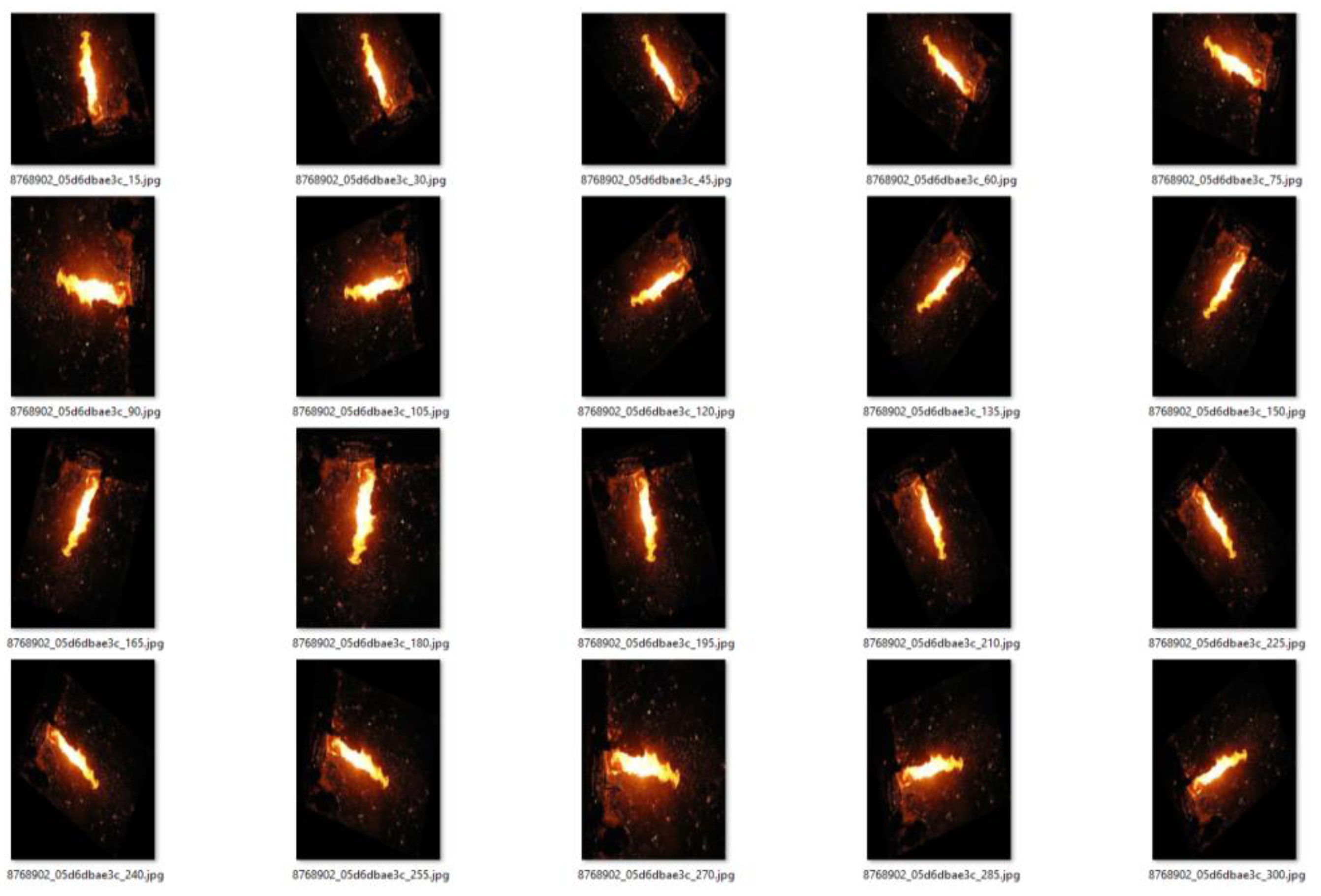

- A method was developed to automatically move labeled bounded boxes when the fire dataset is turned to 15° every time.

- (4) We used independent logistic classifiers and binary cross-entropy loss in YOLOv3 for class predictions during training. YOLOv3 has the advantage of being much faster than other detection networks with comparable performance.

- (5)

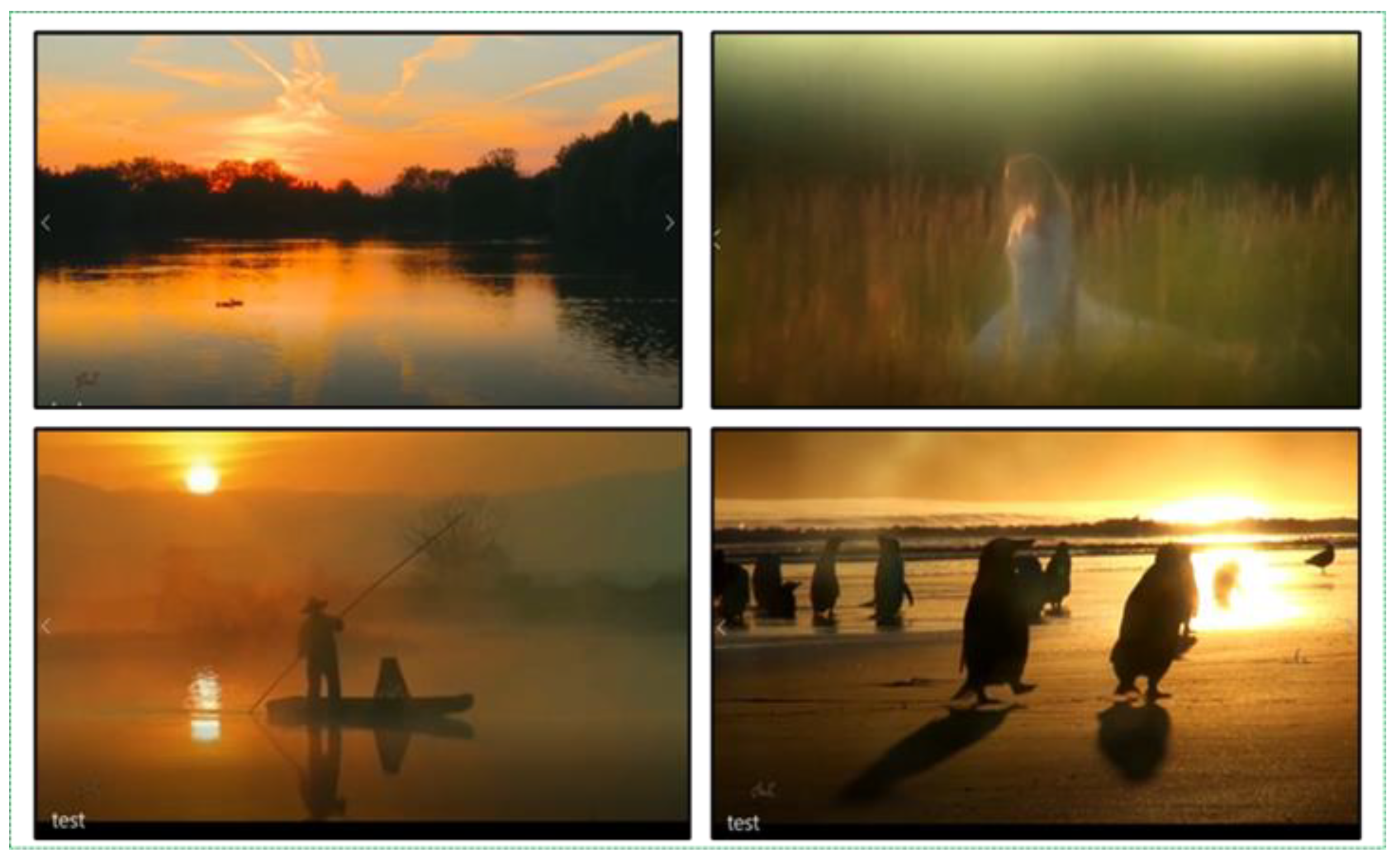

- We reduced the number of false positives in the fire detection process by using fire-like images and removing low-resolution images from the dataset. In addition, it significantly decreased the average precision rate of inaccurately detecting small fire regions.
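Contribution (3) describes moving the labeled bounding boxes whenever an image is rotated in 15° steps. The section does not give the authors' procedure, so the following is only a minimal sketch under stated assumptions: the image is rotated about its centre without changing the canvas size, boxes are stored as `(x_min, y_min, x_max, y_max)` in pixels, and the new label is the axis-aligned box that encloses the four rotated corners. The names `rotate_bbox` and `rotation_angles` are hypothetical.

```python
import math


def rotate_bbox(box, angle_deg, img_w, img_h):
    """Return the axis-aligned box enclosing `box` after rotating it about the image centre.

    Assumptions (not taken from the paper): the canvas keeps its size, and the
    angle uses the standard rotation matrix, which appears clockwise on screen
    because the image y-axis points down. Use the same convention as the
    routine that rotates the image itself.
    """
    x_min, y_min, x_max, y_max = box
    cx, cy = img_w / 2.0, img_h / 2.0
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    corners = [(x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)]
    xs, ys = [], []
    for x, y in corners:
        dx, dy = x - cx, y - cy
        xs.append(cx + dx * cos_t - dy * sin_t)
        ys.append(cy + dx * sin_t + dy * cos_t)

    def clamp(value, upper):
        # Keep the label inside the image after rotation.
        return max(0.0, min(float(upper), value))

    return (
        clamp(min(xs), img_w),
        clamp(min(ys), img_h),
        clamp(max(xs), img_w),
        clamp(max(ys), img_h),
    )


def rotation_angles(step_deg=15):
    """Non-zero rotation angles below 360 degrees for a given step."""
    return list(range(step_deg, 360, step_deg))


if __name__ == "__main__":
    for angle in rotation_angles()[:3]:
        print(angle, rotate_bbox((120, 80, 220, 160), angle, 608, 608))
```

An enclosing box grows for angles that are not multiples of 90°, so the rotated labels are looser than the originals. A 15° step gives 23 non-zero angles; the section does not say how many rotated copies were kept per image.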

2. Related Work

2.1. Computer Vision and Image Processing Approaches for Fire and Smoke Detection

2.2. Deep Learning Approaches for Fire and Smoke Detection

3. Proposed Fire Detection Architecture

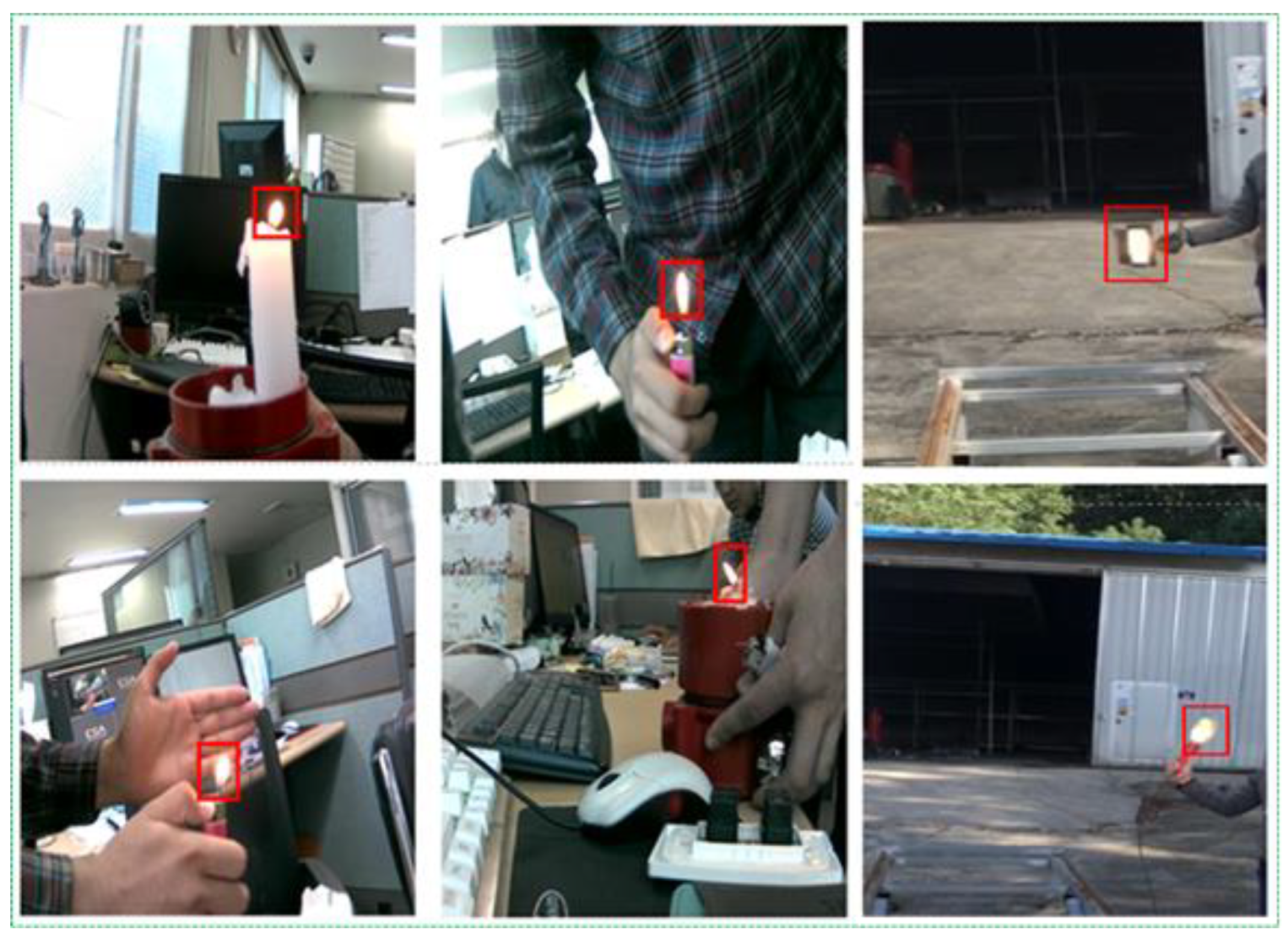

3.1. Dataset

3.2. System Overview

3.3. Fire Detection Process
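Contribution (4) in the Introduction mentions independent logistic classifiers trained with binary cross-entropy for class prediction. As a rough illustration of that idea (not the authors' training code), the sketch below scores each class with its own sigmoid instead of a shared softmax and sums the per-class binary cross-entropy. The function names, the summation over classes, and the clipping constant `eps` are assumptions made for this example.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def class_bce_loss(logits, targets, eps=1e-7):
    """Sum of per-class binary cross-entropy terms.

    Each class gets an independent sigmoid, so the class probabilities are
    not forced to sum to one (unlike a softmax).
    """
    total = 0.0
    for z, t in zip(logits, targets):
        p = min(max(sigmoid(z), eps), 1.0 - eps)  # avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total


if __name__ == "__main__":
    # Two hypothetical classes; the first is the true class.
    print(class_bce_loss([2.0, -1.5], [1.0, 0.0]))
```

Because every class is scored independently, a box can in principle be assigned more than one label, which a softmax would not allow.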

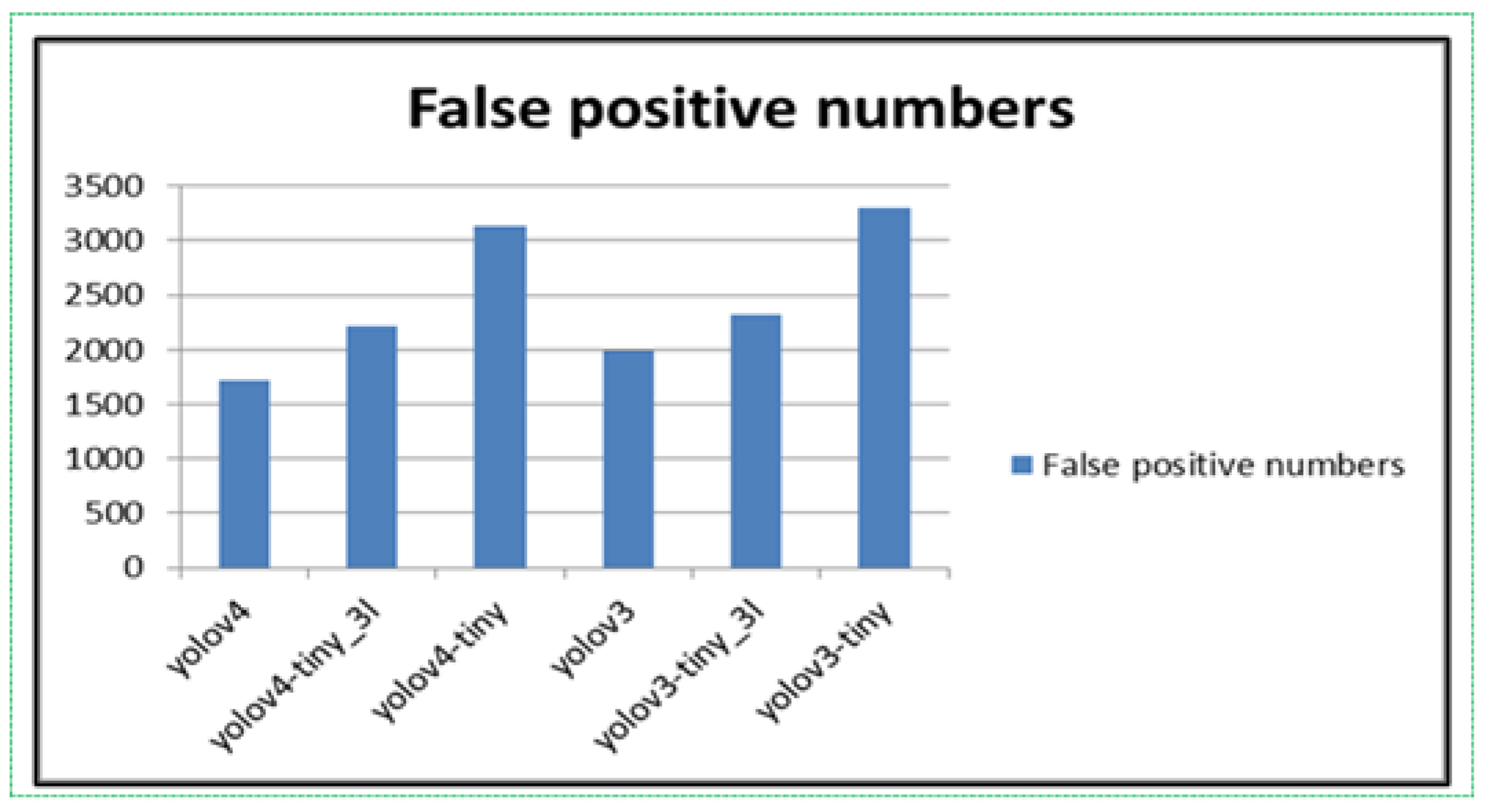

4. Experimental Results and Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Korean Statistical Information Service. Available online: http://kosis.kr (accessed on 10 August 2021).

2. Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.

3. Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351.

4. Valikhujaev, Y.; Abdusalomov, A.; Cho, Y.I. Automatic Fire and Smoke Detection Method for Surveillance Systems Based on Dilated CNNs. Atmosphere 2020, 11, 1241.

5. Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177.

6. Lu, G.; Gilabert, G.; Yan, Y. Vision based monitoring and characterization of combustion flames. J. Phys. Conf. Ser. 2005, 15, 194–200.

7. Gagliardi, A.; Saponara, S. AdViSED: Advanced Video SmokE Detection for Real-Time Measurements in Antifire Indoor and Outdoor Systems. Energies 2020, 13, 2098.

8. Toulouse, T.; Rossi, L.; Celik, T.; Akhloufi, M. Automatic fire pixel detection using image processing: A comparative analysis of rule-based and machine learning-based methods. SIViP 2016, 10, 647–654.

9. Jiang, Q.; Wang, Q. Large space fire image processing of improving canny edge detector based on adaptive smoothing. In Proceedings of the 2010 International Conference on Innovative Computing and Communication and 2010 Asia-Pacific Conference on Information Technology and Ocean Engineering, Macao, China, 30–31 January 2010; pp. 264–267.

10. Zhang, Z.; Zhao, J.; Zhang, D.; Qu, C.; Ke, Y.; Cai, B. Contour based forest fire detection using FFT and wavelet. Proc. Int. Conf. CSSE 2008, 1, 760–763.

11. Celik, T.; Demirel, H.; Ozkaramanli, H.; Uyguroglu, M. Fire detection using statistical color model in video sequences. J. Vis. Commun. Image Represent. 2007, 18, 176–185, ISSN 1047-3203.

12. Prema, C.E.; Vinsley, S.S.; Suresh, S. Efficient flame detection based on static and dynamic texture analysis in forest fire detection. Fire Technol. 2018, 54, 255–288.

13. Luo, Y.; Zhao, L.; Liu, P.; Huang, D. Fire smoke detection algorithm based on motion characteristic and convolutional neural networks. Multimed. Tools Appl. 2018, 77, 15075–15092.

14. Park, M.; Ko, B.C. Two-Step Real-Time Night-Time Fire Detection in an Urban Environment Using Static ELASTIC-YOLOv3 and Temporal Fire-Tube. Sensors 2020, 20, 2202.

15. Sharma, J.; Granmo, O.C.; Goodwin, M. Emergency Analysis: Multitask Learning with Deep Convolutional Neural Networks for Fire Emergency Scene Parsing. In Advances and Trends in Artificial Intelligence. Artificial Intelligence Practices; IEA/AIE 2021. Lecture Notes in Computer Science; Fujita, H., Selamat, A., Lin, J.C.W., Ali, M., Eds.; Springer: Cham, Switzerland, 2021; Volume 12798.

16. Li, P.; Zhao, W. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19, 100625, ISSN 2214-157X.

17. Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional Neural Networks Based Fire Detection in Surveillance Videos. IEEE Access 2018, 6, 18174–18183.

18. Pan, H.; Badawi, D.; Cetin, A.E. Computationally Efficient Wildfire Detection Method Using a Deep Convolutional Network Pruned via Fourier Analysis. Sensors 2020, 20, 2891.

19. Li, T.; Zhao, E.; Zhang, J.; Hu, C. Detection of Wildfire Smoke Images Based on a Densely Dilated Convolutional Network. Electronics 2019, 8, 1131.

20. Kim, B.; Lee, J. A Video-Based Fire Detection Using Deep Learning Models. Appl. Sci. 2019, 9, 2862.

21. Lee, S.J.; Kim, B.H.; Kim, M.Y. Multi-Saliency Map and Machine Learning Based Human Detection for the Embedded Top-View Imaging System. IEEE Access 2021, 9, 70671–70682.

22. Szeliski, R. Computer Vision Algorithms and Applications; Springer: London, UK, 2011.

23. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

24. Redmon, J. Darknet: Open-Source Neural Networks in C. 2013–2016. Available online: http://pjreddie.com/darknet/ (accessed on 22 August 2021).

25. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

26. Wu, S.; Zhang, L. Using popular object detection methods for real time forest fire detection. In Proceedings of the 11th International Symposium on Computational Intelligence and Design (SCID), Hangzhou, China, 8–9 December 2018; pp. 280–284.

27. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25; Pereira, F., Burges, C.J.C., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

28. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

29. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

30. Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A Forest Fire Detection System Based on Ensemble Learning. Forests 2021, 12, 217.

31. Shi, F.; Qian, H.; Chen, W.; Huang, M.; Wan, Z. A Fire Monitoring and Alarm System Based on YOLOv3 with OHEM. In Proceedings of the 39th Chinese Control Conference, Shenyang, China, 27–29 July 2020; pp. 7322–7327.

32. Cao, C.; Tan, X.; Huang, X.; Zhang, Y.; Luo, Z. Study of Flame Detection based on Improved YOLOv4. J. Phys. 2021, 1952, 022016.

33. Niu, W.; Xia, K.; Pan, Y. Contiguous Loss for Motion-Based, Non-Aligned Image Deblurring. Symmetry 2021, 13, 630.

| Dataset | Open Source Datasets | Video Frames | Total |
|---|---|---|---|
| Fire Images | 4336 | 4864 | 9200 |

| Dataset | Training Images | Testing Images | Total |
|---|---|---|---|
| Fire Images | 205,717 | 5883 | 211,600 |
| Fire-like Images | 20,000 | 0 | 20,000 |

| Algorithm | Input Size | Training Accuracy (AP50) | Testing Accuracy (AP50) | Weight Size | Iteration Number | Training Time |
|---|---|---|---|---|---|---|
| YOLOv4 | 608 × 608 | 81.1% | 74.3% | 245 MB | 50,000 | 98 h |
| YOLOv4-tiny_3l | 608 × 608 | 77.8% | 71.8% | 23 MB | 50,000 | 22 h |
| YOLOv4-tiny | 608 × 608 | 69.02% | 62.9% | 23 MB | 50,000 | 21 h |
| YOLOv3 | 608 × 608 | 82.4% | 77.8% | 236 MB | 50,000 | 57 h |
| YOLOv3-tiny_3l | 608 × 608 | 75.6% | 72.4% | 33.7 MB | 50,000 | 26.5 h |
| YOLOv3-tiny | 608 × 608 | 70.9% | 64.2% | 33.7 MB | 50,000 | 22 h |

| Before | After Filtering | After Contrast Increase (Double) | After Contrast Decrease (Half) |
|---|---|---|---|
| 211,600 | 208,300 | 208,300 | 208,300 |
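The table above lists image counts after filtering and after doubling and halving the contrast. The section does not say how contrast was changed, so the sketch below shows one common approach as an assumption: scale each 8-bit pixel's distance from mid-gray by a factor and clip the result to the valid range. The function name `adjust_contrast` and the pivot value of 128 are hypothetical choices for this example.

```python
def adjust_contrast(pixels, factor, pivot=128):
    """Scale contrast of an 8-bit grayscale image given as a list of rows.

    factor=2.0 doubles the contrast and factor=0.5 halves it. Values are
    rounded and clipped to [0, 255]. This linear rule is an assumption,
    not the procedure stated in the paper.
    """
    adjusted = []
    for row in pixels:
        adjusted.append(
            [max(0, min(255, round(pivot + factor * (p - pivot)))) for p in row]
        )
    return adjusted


if __name__ == "__main__":
    image = [[128, 138, 0, 228]]
    print(adjust_contrast(image, 2.0))  # higher-contrast copy
    print(adjust_contrast(image, 0.5))  # lower-contrast copy
```

A photometric change like this leaves the geometry untouched, so the bounding-box labels of the source image can be reused for each contrast-adjusted copy.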

| Algorithms | Input Size | Training Accuracy (AP50) | Testing Accuracy (AP50) | Weight Size | Iteration Number | Training Time |
|---|---|---|---|---|---|---|
| YOLOv4 | 608 × 608 | 81.1% | 74.3% | 245 MB | 50,000 | 98 h |
| YOLOv4-tiny_3l | 608 × 608 | 77.8% | 71.8% | 23 MB | 50,000 | 22 h |
| YOLOv4-tiny | 608 × 608 | 69.02% | 62.9% | 23 MB | 50,000 | 21 h |
| YOLOv3 | 608 × 608 | 98.3% | 97.8% | 236 MB | 50,000 | 85 h |
| YOLOv3-tiny_3l | 608 × 608 | 75.6% | 72.4% | 33.7 MB | 50,000 | 26.5 h |
| YOLOv3-tiny | 608 × 608 | 70.9% | 64.2% | 33.7 MB | 50,000 | 22 h |

| Algorithms | Input Size | Training Accuracy (AP50) | Testing Accuracy (AP50) | Weight Size | Iteration Number | Training Time |
|---|---|---|---|---|---|---|
| YOLOv4 | 608 × 608 | 96.1% | 95.3% | 245 MB | 50,000 | 103 h |
| YOLOv4-tiny_3l | 608 × 608 | 94.2% | 89.9% | 23 MB | 50,000 | 37 h |
| YOLOv4-tiny | 608 × 608 | 88.3% | 85.1% | 23 MB | 50,000 | 33 h |
| YOLOv3 | 608 × 608 | 98.3% | 97.8% | 236 MB | 50,000 | 85 h |
| YOLOv3-tiny_3l | 608 × 608 | 95.6% | 91.4% | 33.7 MB | 50,000 | 39 h |
| YOLOv3-tiny | 608 × 608 | 85.3% | 82.7% | 33.7 MB | 50,000 | 37.5 h |

| Algorithms | Precision (P, %) | Recall (R, %) | F-Measure (FM, %) | IoU (%) | Average (%) |
|---|---|---|---|---|---|
| ELASTIC-YOLOv3 [14] | 98.5 | 96.9 | 97.7 | 96.9 | 97.7 |
| YOLOv3-incremental [25] | 97.9 | 91.2 | 94.3 | 93.8 | 94.4 |
| Faster R-CNN [26] | 81.7 | 94.5 | 87.2 | 89.2 | 88.2 |
| Dilated CNNs [4] | 98.9 | 97.4 | 98.2 | 98.7 | 98.1 |
| AlexNet [27] | 73.3 | 61.3 | 75.1 | 85.2 | 79.9 |
| ResNet [28] | 94.8 | 93.6 | 94.2 | 95.8 | 94.3 |
| VGG16 [29] | 97.5 | 87.9 | 92.7 | 91.9 | 92.6 |
| YOLOv5 [30] | 98.5 | 96.7 | 98.0 | 97.1 | 97.9 |
| YOLOv3+OHEM [31] | 86.6 | 77.8 | 89.2 | 86.3 | 84.5 |
| YOLOv4 [32] | 95.9 | 96.7 | 98.3 | 97.1 | 96.9 |
| Our Method (Improved YOLOv3) | 98.1 | 99.2 | 99.5 | 98.7 | 98.9 |
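The comparison above reports precision (P), recall (R), F-measure (FM), and IoU. For readers unfamiliar with these metrics, here is a minimal sketch of their conventional definitions, assuming boxes in `(x_min, y_min, x_max, y_max)` form and counts of true positives, false positives, and false negatives. The section does not state how the reported FM and Average columns were derived, so this sketch should not be expected to reproduce the table values; the function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F-measure) from counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f_measure = 2.0 * precision * recall / denom if denom else 0.0
    return precision, recall, f_measure


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
    print(precision_recall_f(tp=90, fp=10, fn=30))
```

In a detection setting, a prediction usually counts as a true positive when its IoU with a ground-truth box exceeds a threshold; AP50 corresponds to a threshold of 0.5.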

| Criterion | YOLOv3 + OHEM [31] | Dilated CNNs [4] | ELASTIC-YOLOv3 [14] | Our Method (Improved YOLOv3) |
|---|---|---|---|---|
| Scene Independence | normal | powerful | normal | powerful |
| Object Independence | normal | powerful | powerful | normal |
| Fire Independence | not strong | powerful | normal | powerful |
| Robust to Color | normal | normal | not strong | powerful |
| Robust to Noise | powerful | normal | powerful | powerful |
| Fire Spread Detection | normal | not strong | not strong | powerful |
| Computational Load | not strong | powerful | normal | powerful |

| Input Resolution | Number of Frames (fps) | Processing Time (s) |
|---|---|---|
| 608 × 608 | 1 | 0.26 |
| 416 × 416 | 1 | 0.24 |
| 320 × 320 | 1 | 0.23 |
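Reading the table above as one frame processed in the listed time (an interpretation, since the column header is ambiguous), the per-frame times can be converted to an approximate throughput by dividing the frame count by the processing time. The dictionary and function names below are hypothetical.

```python
# Processing time in seconds for one frame, copied from the table above.
PROCESSING_TIME_S = {
    "608x608": 0.26,
    "416x416": 0.24,
    "320x320": 0.23,
}


def frames_per_second(num_frames, seconds):
    """Throughput implied by processing `num_frames` frames in `seconds`."""
    return num_frames / seconds


if __name__ == "__main__":
    for resolution, seconds in PROCESSING_TIME_S.items():
        print(resolution, round(frames_per_second(1, seconds), 2))
```

Under this reading, all three input resolutions correspond to roughly 4 frames per second.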

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abdusalomov, A.; Baratov, N.; Kutlimuratov, A.; Whangbo, T.K. An Improvement of the Fire Detection and Classification Method Using YOLOv3 for Surveillance Systems. Sensors 2021, 21, 6519. https://doi.org/10.3390/s21196519
