Abstract

With the advancing miniaturization of electronics and the ubiquity of micro-electro-mechanical systems (MEMS) in applications including computing, sensing, and medical devices, increasing production yields and ensuring product quality have become important priorities in manufacturing. Hence, the need for accurate, automatic defect detection in the early phases of MEMS production has been recognized. Such detection not only eliminates human involvement in the defect detection process but also saves raw material and labor. This research developed an automated defect recognition (ADR) system that uses a unique plenoptic camera and a machine-learning approach to detect surface defects on MEMS wafers. The developed algorithm can be applied at any stage of the production process to detect defects at both the entire-MEMS-wafer and single-component scale. The developed system achieved an average F1 score of 0.81 for true-positive defect detection, with a processing time of 18 s per image, based on 6 validation sample images containing 371 labels.

1. Introduction

Modern electronic devices, such as smartphones, consumer electronics, healthcare devices, and surveillance and safety assistance systems, combine a huge variety of functions and offer a high level of comfort and functionality in a reduced space. To ensure the high quality of miniaturized products, micro-technology (dealing with features smaller than 1 mm) offers techniques, tools, and process configurations for the reliable high-volume production of micro-components, supporting the continuing trend towards miniaturization and multi-functionality. In recent decades, micro- and nano-manufacturing and metrology have been driven by micro-electro-mechanical systems (MEMS), where well-established manufacturing methods based on semiconductor technologies can produce structures with micrometer dimensions [1]. To produce mechanical micro parts (e.g., from metals or polymers), classical manufacturing technologies such as forming have to be downscaled from the macro to the micro scale (e.g., micro deep drawing). When process parameters are scaled between the macro and micro scales, size effects might occur that lead to unexpected process behavior.

There have been several attempts to detect surface defects in steel production, as well as in micro-fabricated devices, wafers, and MEMS, using non-learning, non-destructive testing (NDT) approaches [2,3,4,5]. For instance, in [6], a new technique based on transient infrared thermography in transmission mode was used to inspect multi-layered MEMS for defects. Using finite element analysis (FEA) to simulate defects, the study calculated the differences in sample surface temperature between defective and healthy regions. It concluded that, with this technique, defect size could be estimated more consistently from the surface temperature gradient in transmission-mode thermography than in reflection mode. Nonetheless, this technique can only detect defects such as delamination and voids, and resolves them down to a few hundred microns only if the camera is equipped with a micro-lens, which might introduce distortion.

In another study, Li et al. [7] applied a high-speed image super-resolution algorithm based on sparse representation to MEMS defect detection. Unlike traditional super-resolution algorithms that process the whole image at once, their approach divided the image into several blocks according to their categories and features and processed the blocks individually, which significantly sped up the mapping between the high- and low-resolution dictionary pairs. As a result, different defects in MEMS could be detected with a significantly lower processing time, at the cost of slightly lower image quality. The goal of that research was not to detect defects in MEMS directly, but to improve the detail and quality of the sample images so that defects could be more easily identified, either by an operator or by an ADR tool.

In [8], a customized deep-learning convolutional neural network (CNN) model was developed to detect defects in the semiconductor wafer manufacturing process. The model was trained to detect four types of defects (circles, clusters, scratches, and spots) and was limited to defect patterns at the whole-wafer level. It achieved 84% accuracy in mixed-defect detection cases.

In the work carried out by Chen et al. [9], a K-means clustering algorithm was employed to detect defects on the grain surface of silicon wafers. Because K-means clustering is sensitive to noise and grain surfaces are very noisy, a traditional K-means algorithm alone would not achieve a high detection accuracy. The authors therefore implemented a pre-processing step based on morphological operations (closing and opening) to reduce the noise. The developed algorithm achieved 99.02% accuracy in detecting grain surface defects.
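The denoise-then-cluster idea described for Chen et al. [9] can be illustrated with a minimal NumPy sketch. It is not their implementation: the 3 × 3 structuring element, the choice of k = 2, and the assumption that defects form the brighter intensity cluster are our own illustrative choices.

```python
import numpy as np


def _neighbourhood(img: np.ndarray) -> np.ndarray:
    """Stack the 3x3 neighbourhood of every pixel (edge-padded) along a new axis 0."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def erode(img: np.ndarray) -> np.ndarray:
    """Grayscale erosion: 3x3 minimum filter."""
    return _neighbourhood(img).min(axis=0)


def dilate(img: np.ndarray) -> np.ndarray:
    """Grayscale dilation: 3x3 maximum filter."""
    return _neighbourhood(img).max(axis=0)


def open_close(img: np.ndarray) -> np.ndarray:
    """Opening (removes small bright specks) followed by closing (fills small dark gaps)."""
    opened = dilate(erode(img))
    return erode(dilate(opened))


def kmeans_1d(values: np.ndarray, k: int = 2, iters: int = 20):
    """Plain Lloyd's K-means on scalar intensities; returns (labels, centroids)."""
    centroids = np.linspace(values.min(), values.max(), k)
    labels = np.zeros(values.shape[0], dtype=int)
    for _ in range(iters):
        # Assign each pixel to its nearest centroid.
        labels = np.argmin(np.abs(values[:, None] - centroids[None, :]), axis=1)
        # Move each centroid to the mean of its members (empty clusters stay put).
        for j in range(k):
            members = values[labels == j]
            if members.size:
                centroids[j] = members.mean()
    return labels, centroids


def segment_defects(image: np.ndarray) -> np.ndarray:
    """Morphological denoising followed by K-means; returns a boolean defect mask."""
    filtered = open_close(image.astype(float))
    labels, centroids = kmeans_1d(filtered.ravel(), k=2)
    # Assumption: defects form the brighter of the two intensity clusters.
    defect_cluster = int(np.argmax(centroids))
    return (labels == defect_cluster).reshape(image.shape)
```

Because opening runs before clustering, isolated bright pixels smaller than the structuring element are removed and cannot pull a centroid towards noise, which addresses the sensitivity of K-means mentioned above.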

In a study by Shankar and Zhong [10], a template-based vision system for wafer die surface defect inspection was implemented. The system was capable of detecting defects with sizes ranging from 12.7 microns up to around 20 cm. Unlike Devika et al. [8], the researchers treated each die as one part of the whole wafer, and a flawless die served as the template against which every die in the wafer was compared. Image processing was used to subtract the reference image (healthy die) from each die; based on the mean square error of the pixel values in the resulting image, it was possible to determine whether a die was defective, as long as the defect size was within the detectable limit of the algorithm. Moreover, Tien et al. [11] implemented an automatic positioning and wafer defect detection system based on image processing and fuzzy inference. A charge-coupled device (CCD) camera was used together with pre-processing steps, including noise filtering and edge detection, and a defective template was defined in order to infer its characteristic points and use them as the reference input to the fuzzy inference system. The research adopted a heuristic approach to detecting, localizing, and classifying defects in a wafer. The results showed a 97.4% average true-positive detection accuracy for two defect types (scratches and stains) across 153 die samples. In general, a heuristic approach yields higher accuracy on a limited dataset because it does not depend on the amount of training data. Moreover, heuristic algorithms are very efficient for discrete and simple detection tasks [12]. In defect detection, as long as the detection case is simple enough to be implemented algorithmically, a heuristic approach coupled with image-processing-based enhancement techniques is adequate.
Nonetheless, as the complexity of the defects' shapes and characteristics increases, more robust techniques such as machine learning (ML) are needed [12].
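The template comparison described for Shankar and Zhong [10] reduces to a pixel-wise subtraction followed by a mean square error (MSE) test. The sketch below assumes the dies have already been cropped and aligned to the template; the function names and the `threshold` parameter are hypothetical, since the exact decision rule is not given here.

```python
import numpy as np


def die_mse(die: np.ndarray, template: np.ndarray) -> float:
    """Mean square error between an aligned die image and the flawless template die."""
    if die.shape != template.shape:
        raise ValueError("die and template must have the same shape (assumed pre-aligned)")
    diff = die.astype(float) - template.astype(float)  # pixel-wise subtraction
    return float(np.mean(diff ** 2))


def flag_defective_dies(dies, template: np.ndarray, threshold: float) -> list:
    """Return the indices of dies whose MSE against the template exceeds the threshold."""
    return [i for i, die in enumerate(dies) if die_mse(die, template) > threshold]
```

A single global threshold is what makes such heuristics simple and data-independent; it is also why they struggle once defect appearance varies widely, as noted above.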

In recent years, there have been several attempts to develop ML-based surface defect detection, resulting in more robust and versatile automatic defect detection algorithms [13,14,15,16,17].

Tello et al. [18] applied ML, using a randomized general regression network (RGRN) model, to the recognition of mixed-defect patterns during semiconductor fabrication. The work extended the authors' previous research, in which the developed model detected 99.8% of the defects in the single-pattern scenario, where only one defect category was present. However, that model performed poorly when a wafer had mixed-defect patterns. The paper therefore aimed to increase the detection accuracy for mixed-defect patterns by implementing a deep-structured ML model and a novel information gain (IG)-based splitter. In addition, a spatial filter was applied to eliminate random noise and reduce model bias during the training phase. The results showed an improved detection accuracy of 86.17% for mixed-defect patterns.

Li et al. [19] developed a crack detection algorithm based on the YOLOv4 target detection method for silicon wafer surface defect detection. Although the model detected over 98% of the true-positive defects, it targeted only a single defect type (surface cracks). Nonetheless, it could detect cracks of different shapes and lengths, from short to long, on the surface of silicon wafers.

In another study, Chen et al. [20] developed a lightweight CNN model dubbed ‘WDD-Net’ for silicon wafer structural defect detection with a very high detection accuracy (99%). The research evaluated WDD-Net against two established CNN models, one based on VGG-16 and the other on MobileNet-v2, and the experimental results showed that WDD-Net was five times faster than the 307 KB models, hence the term ‘lightweight’. Nonetheless, the model requires a moderate pre-processing stage, since the silicon wafer image must be divided into thousands of sub-images for the model to work, and it does not localize the detected defects.

This research was directed towards developing an ML model for surface defect detection, based on a proven neural network architecture (CNN), that is effective in terms of both accuracy and detection speed. As discussed, some of the aforementioned surface defect detection techniques have shortcomings, including the need for computationally expensive pre-processing; a lack of available real data, which requires a side model such as a generative adversarial network (GAN) to compensate [21]; the absence of defect localization; and a limited variety of detectable defect types.

2. Materials and Methods

To develop the ADR algorithm, the authors explored several neural network models and techniques in order to identify an algorithm that could not only detect and classify different defects but also localize them with region of interest (ROI) bounding boxes. The convolutional neural network (CNN) is a deep learning model that, in its basic form, recognizes single items and has been used in applications including image and video recognition, recommender systems, image classification, medical image analysis, natural language processing, and financial time series [22]. Due to the nature of the MEMS defects, a plain CNN could not be used as the core ML algorithm, since each input image contained several defects that had to be localized in addition to being detected and classified. Thus, a CNN derivative called Region-CNN (R-CNN) was chosen, as it delivers all three requirements for the input images, i.e., detection, classification, and localization [23]. Specifically, the authors used Faster R-CNN, which dramatically improves the overall performance of R-CNN [24]. As the name suggests, Faster R-CNN is faster than R-CNN and Fast R-CNN [25] while achieving comparable detection accuracy. The basic concept is to break object detection down into two separate stages. In the first stage, a region proposal network identifies regions within the image that are likely to contain an object of interest. In the second stage, the detector processes each proposed region and outputs the object category score and the corresponding bounding box coordinates [24].
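Both stages of Faster R-CNN produce many overlapping candidate boxes, which are pruned with non-maximum suppression (NMS) based on intersection over union (IoU). The following pure-Python sketch illustrates only that pruning step; it is not code from TensorFlow or from the authors' system. The default threshold of 0.7 is the value Ren et al. [24] report for pruning region proposals; other thresholds are common for final detections.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.7) -> List[int]:
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a higher-scoring kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

For example, two boxes offset by one pixel on a 10 × 10 defect overlap with an IoU of about 0.68, so they both survive at 0.7 but collapse to the higher-scoring one at 0.5.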

To work with sufficiently high accuracy, CNNs, and by extension R-CNNs, require significant amounts of training data [26]. To compensate for the limited data, the authors used a publicly available model pre-trained on the Common Objects in Context (COCO) dataset, which contains a very large number of images and labels, and applied transfer learning by training their model on top of the pre-trained one. This considerably reduced the amount of data needed compared with training from scratch, while allowing the model to be tailored to the specific defects the task required. COCO is a large-scale object detection, segmentation, and captioning dataset that contains 330,000 images (more than 200,000 labelled) of everyday objects, from people to chairs and cakes. It contains 1.5 million object instances in 80 object categories, 91 stuff categories, 5 captions per image, and 250,000 people with keypoints [27].
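In the TensorFlow Object Detection API, adapting a COCO-pretrained detector to new categories typically involves a label map listing the new classes and setting `num_classes` in the pipeline configuration (90 for the COCO checkpoints) to the new count. The helper below is a hypothetical sketch that renders such a label map; `whiteSpot` is an assumed name for the white-spot category, while `blackSpot`, `hair`, and `crack` are the names used in this paper.

```python
from typing import Sequence

# Hypothetical class list: "whiteSpot" is an assumed name; the other three appear in the text.
DEFECT_CLASSES = ("whiteSpot", "blackSpot", "hair", "crack")


def label_map_pbtxt(class_names: Sequence[str]) -> str:
    """Render a label map in the text format read by the TF Object Detection API.

    IDs start at 1 because ID 0 is reserved for the background class.
    """
    items = []
    for idx, name in enumerate(class_names, start=1):
        items.append(f"item {{\n  id: {idx}\n  name: '{name}'\n}}\n")
    return "".join(items)
```

Writing `label_map_pbtxt(DEFECT_CLASSES)` to a `.pbtxt` file and setting `num_classes` to `len(DEFECT_CLASSES)` replaces only the classification head; the pre-trained feature extractor weights are what transfer from COCO.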

An ADR neural network model based on the TensorFlow Faster R-CNN Inception v2 COCO model was developed for this project. Several base models are available, each with advantages and disadvantages. Faster R-CNN Inception v2 COCO, in particular, has a benchmark detection speed of 58 ms and a COCO mean average precision (mAP) of 28 (Table 1). Higher-accuracy models require more computational resources, might not run on mid-range systems, and can take a significant amount of time to process one image. Essentially, there is a trade-off between speed and accuracy, and in industrial environments speed is a strong constraint. Faster R-CNN Inception v2 COCO was therefore chosen, as it offers high accuracy with a reasonable processing time per input image. CNN-based models have previously been used successfully in surface defect detection [28], making them a suitable choice for this research. Additionally, the authors used a residual learning framework (ResNet)-based CNN, as its error rate was among the lowest on the ImageNet validation set [29].

Table 1.

Comparison between different Faster R-CNN models [30].

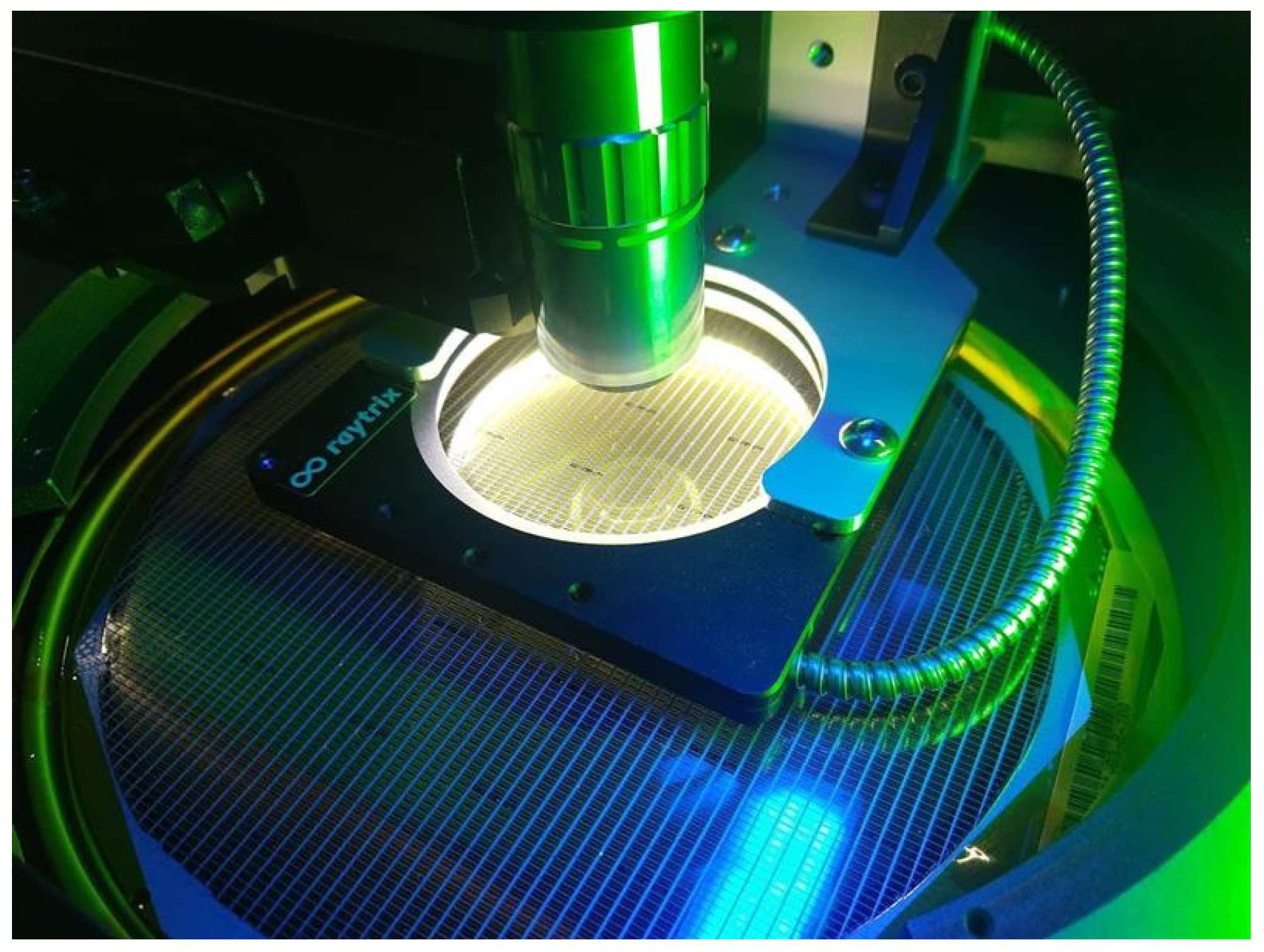

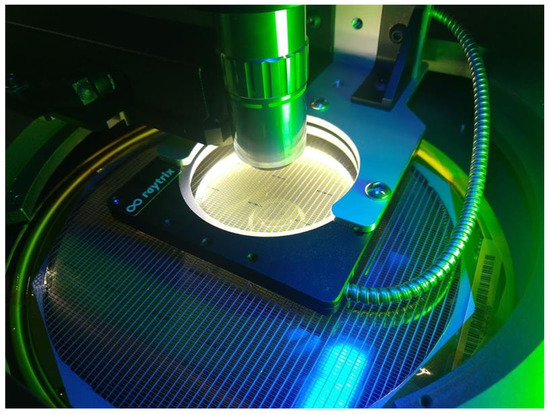

An inspection system consisting of a conventional micro-electrical wafer prober with a plenoptic camera developed by Raytrix GmbH was retrofitted by aixACCT Systems GmbH (Figure 1). Plenoptic cameras capture information about the origin of light rays in 3D space via an array of micro-lenses installed closely in front of a conventional photosensitive chip. Once the camera has been calibrated, the imaged object is computationally reconstructed, resulting in a fully focused image with a 3D depth map. The chosen cameras are compact and, because the micro-lenses have various focal lengths, offer an extended depth of focus compared with microscope cameras of similar optical properties. Together, these advantages make the technology well suited to MEMS inspection.

Figure 1.

A close-up view of the developed plenoptic camera.

Data Preparation of Plenoptic Images and Training

The images were collected using the conventional micro-electrical wafer probe retrofitted by aixACCT Systems GmbH with the plenoptic camera developed by Raytrix GmbH. After calibration, each imaged object was computationally reconstructed into a fully focused image with 3D depth map information.

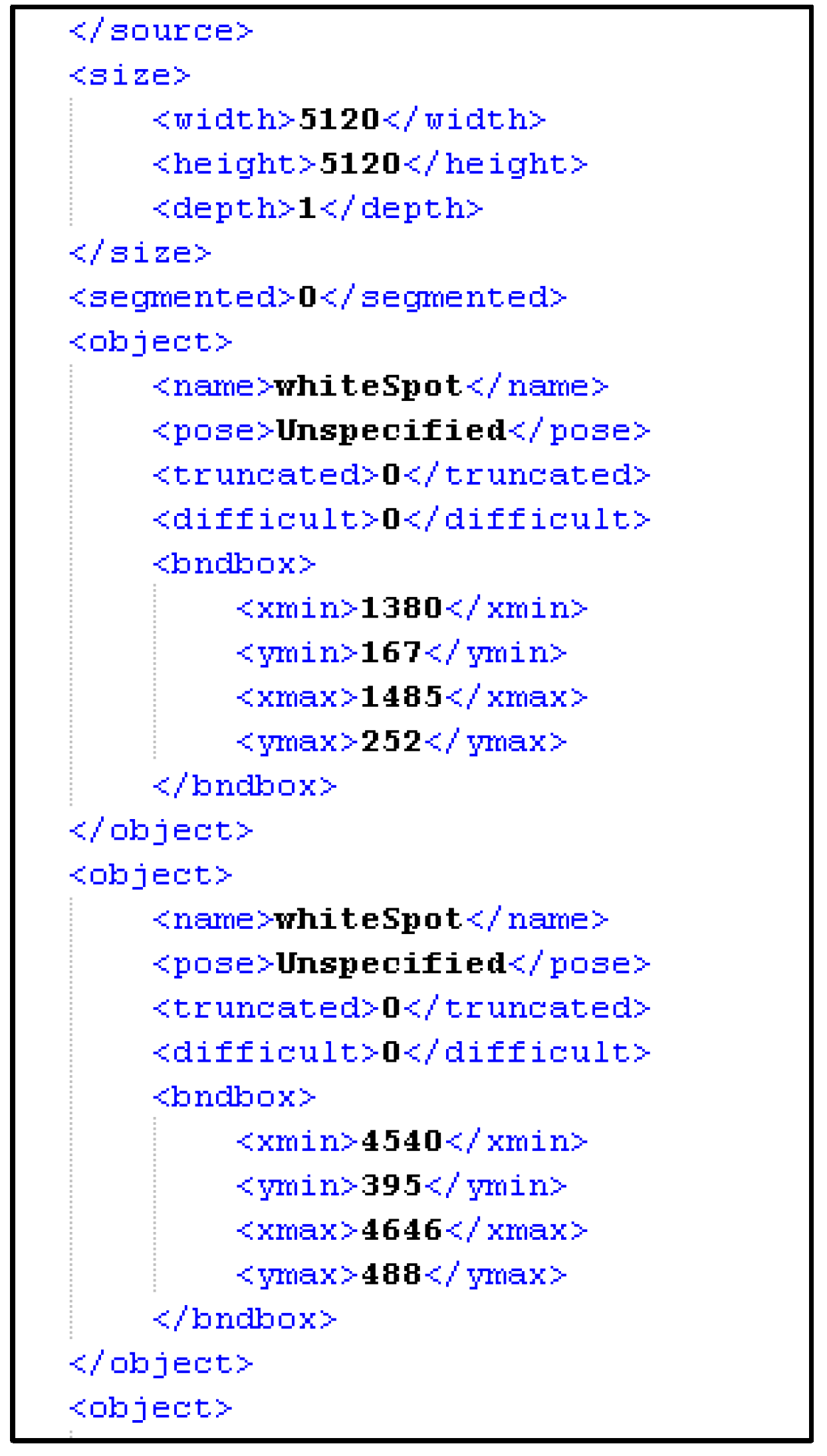

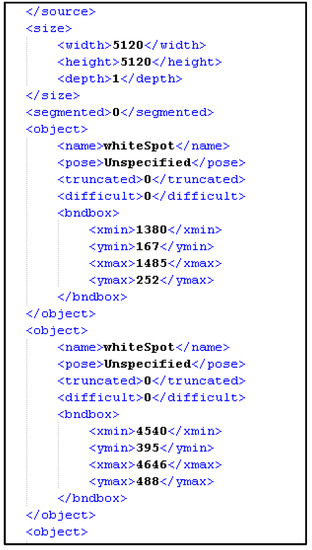

Every image in the dataset was labelled manually by creating a corresponding XML file (Figure 2) containing the X and Y coordinates of every defect in that image alongside its defect type. A team of experts familiar with the end user's wafer defect types defined and selected the defects in the sample images. The defects were annotated manually using the ‘LabelImg’ software.
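LabelImg saves annotations in Pascal VOC XML format by default, with one `<object>` element per labelled box holding the class `name` and the `bndbox` corner coordinates. Assuming the companion files follow that layout, they can be read with the Python standard library as sketched below; the sample file name and coordinates are invented for illustration.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

Label = Tuple[str, int, int, int, int]  # (class name, xmin, ymin, xmax, ymax)


def read_labelimg_xml(xml_text: str) -> List[Label]:
    """Extract (name, xmin, ymin, xmax, ymax) tuples from a Pascal VOC annotation."""
    root = ET.fromstring(xml_text)
    labels: List[Label] = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        box = obj.find("bndbox")
        coords = [int(float(box.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        labels.append((name, *coords))
    return labels


# Hypothetical annotation for one 5120 x 5120 plenoptic image.
SAMPLE = """<annotation>
  <filename>wafer_001.png</filename>
  <size><width>5120</width><height>5120</height><depth>3</depth></size>
  <object>
    <name>blackSpot</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>130</xmax><ymax>228</ymax></bndbox>
  </object>
</annotation>"""
```

Calling `read_labelimg_xml(SAMPLE)` yields one tuple per labelled defect, a convenient form for counting instances per class before training.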

Figure 2.

An example of a companion XML file for plenoptic images.

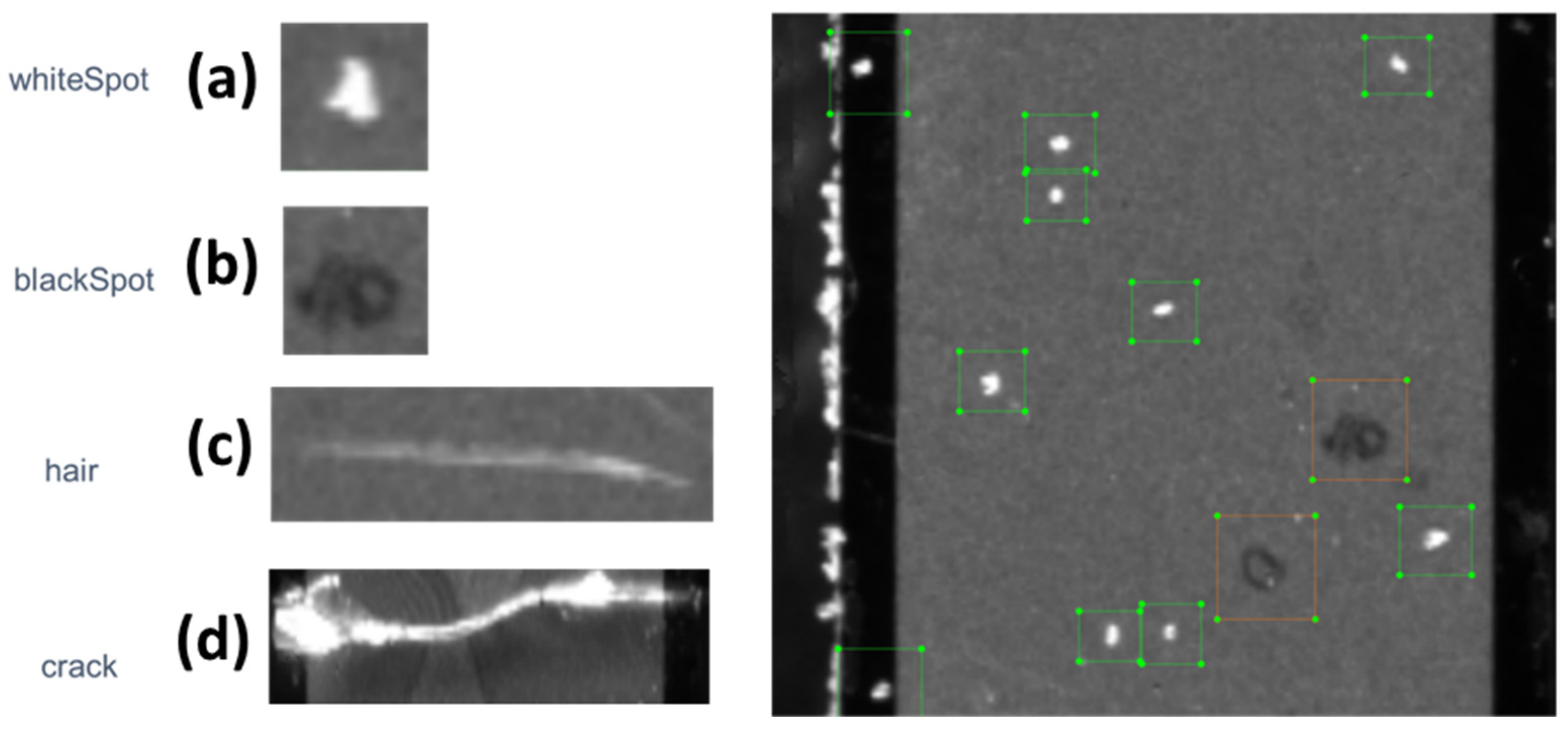

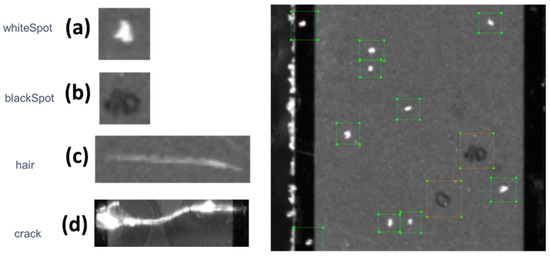

Based on the initial analysis of the input dataset, four different types of defect (Figure 3) were identified among the collected images. Those defect types were the basis of the labelling process.

Figure 3.

Different defects in plenoptic images. (a) and (b) show small white and black spots, respectively, caused by the accumulation of dust and dirt; (c), as the label suggests, is a human hair; and (d) shows physical cracks on the surface of the specimen due to tension. The right side of the figure shows defects (a) and (b) labelled with green and orange rectangles, respectively.

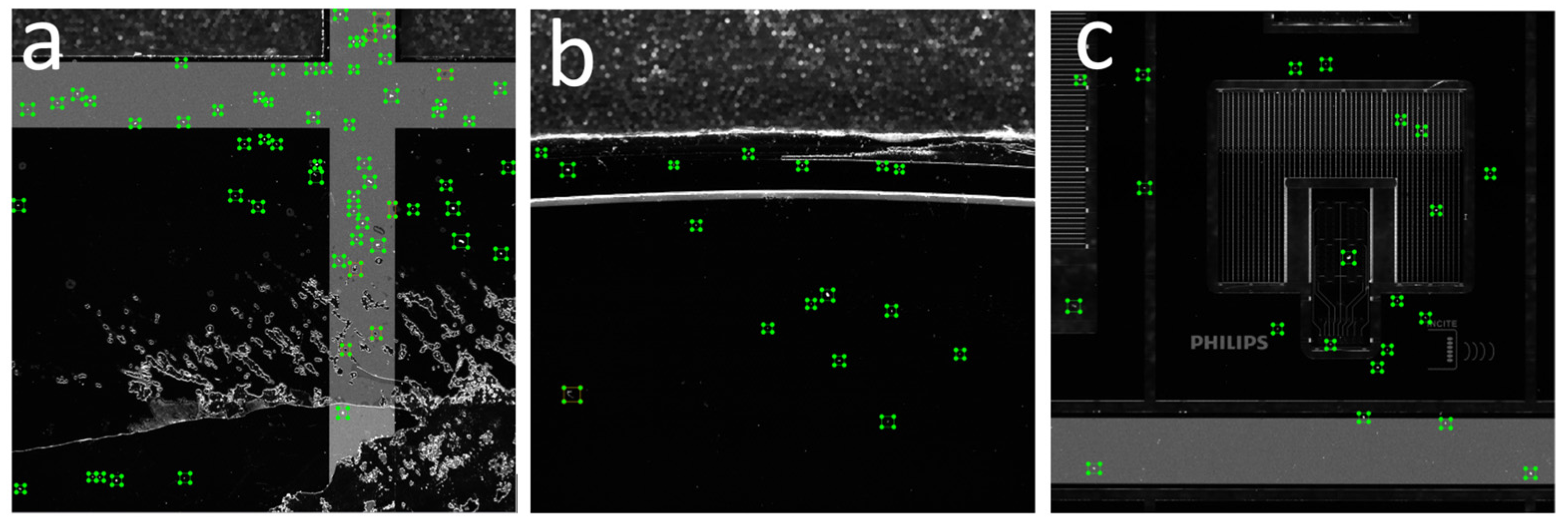

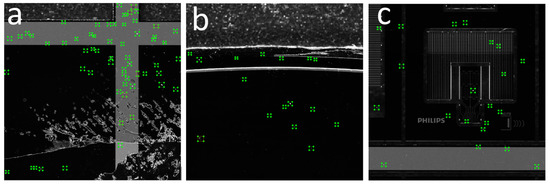

Figure 4 shows an example of a labelled image.

Figure 4.

An example of the labelling process. (a–c) show a shattered area, the upper edge, and the middle part of a wafer, respectively.

At the end of the labelling process, the data were fed into the developed ADR model. Overall, 415 images were used, divided into three separate sets: 319 for training, 90 for testing, and 6 for validation (Table 2). In general, the quality of the model depends on four equally important criteria: the quality of the pre-trained model, the quality of the machine learning architecture, the quantity and variability of the training data, and the quality of the labelling. The machine learning architecture and the library were selected after careful analysis of the state of the art, as explained above. Although the amount of data was limited by the available dataset, the 415 images represent a suitable quantity of data, considering that each image contained on average around 50 defects to be labelled. Of the four criteria, labelling is the only one that relies on a manual human process. A total of 20,683 individual labels covering the four defect types illustrated in Figure 3 were created. The input image resolution was 5120 × 5120 pixels, with an average file size of ~35 MB per image. The number of instances per defect type varied significantly, with “crack” and “hair” having the lowest occurrence in the entire dataset. The computer used to train and test the ADR system had 2 × Intel® Xeon® Gold 6152 CPUs (22 cores, 44 threads, 30.25 MB L3 cache, up to 3.7 GHz with Intel® Turbo Boost Technology), an NVIDIA Tesla V100 PCIe 32 GB HBM2 GPU (900 GB/s bandwidth; 7 TFLOPS double precision, 14 TFLOPS single precision, 112 TFLOPS deep learning), and 640 GB of penta-channel DDR4 memory at 2666 MHz.
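How the 415 images were assigned to the three sets is not stated. One reproducible option, sketched below purely as an assumption, is a seeded random shuffle cut into 319/90/6 images; the image IDs and the seed are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(image_ids: Sequence[str], n_train: int = 319, n_test: int = 90,
                  n_val: int = 6, seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle image IDs reproducibly and cut them into disjoint train/test/validation sets."""
    if n_train + n_test + n_val != len(image_ids):
        raise ValueError("split sizes must add up to the number of images")
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # fixed seed -> the same split on every run
    return {
        "train": ids[:n_train],
        "test": ids[n_train:n_train + n_test],
        "validation": ids[n_train + n_test:],
    }
```

Given the strong class imbalance described above, a refinement would be to check the per-class label counts in each set, so that rare classes such as “crack” and “hair” are not absent from the test or validation images.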

Table 2.

The number of images and labels used in training, testing, and validation for the plenoptic ADR system.

3. Results

Training the plenoptic ADR model based on Faster R-CNN Inception v2 COCO took 130 h for 200,000 training steps. The average detection time was around 18 s per image, and the total loss of the trained model was around 0.07 (Table 3).

Table 3.

The Faster R-CNN Inception v2 COCO trained model performance result.

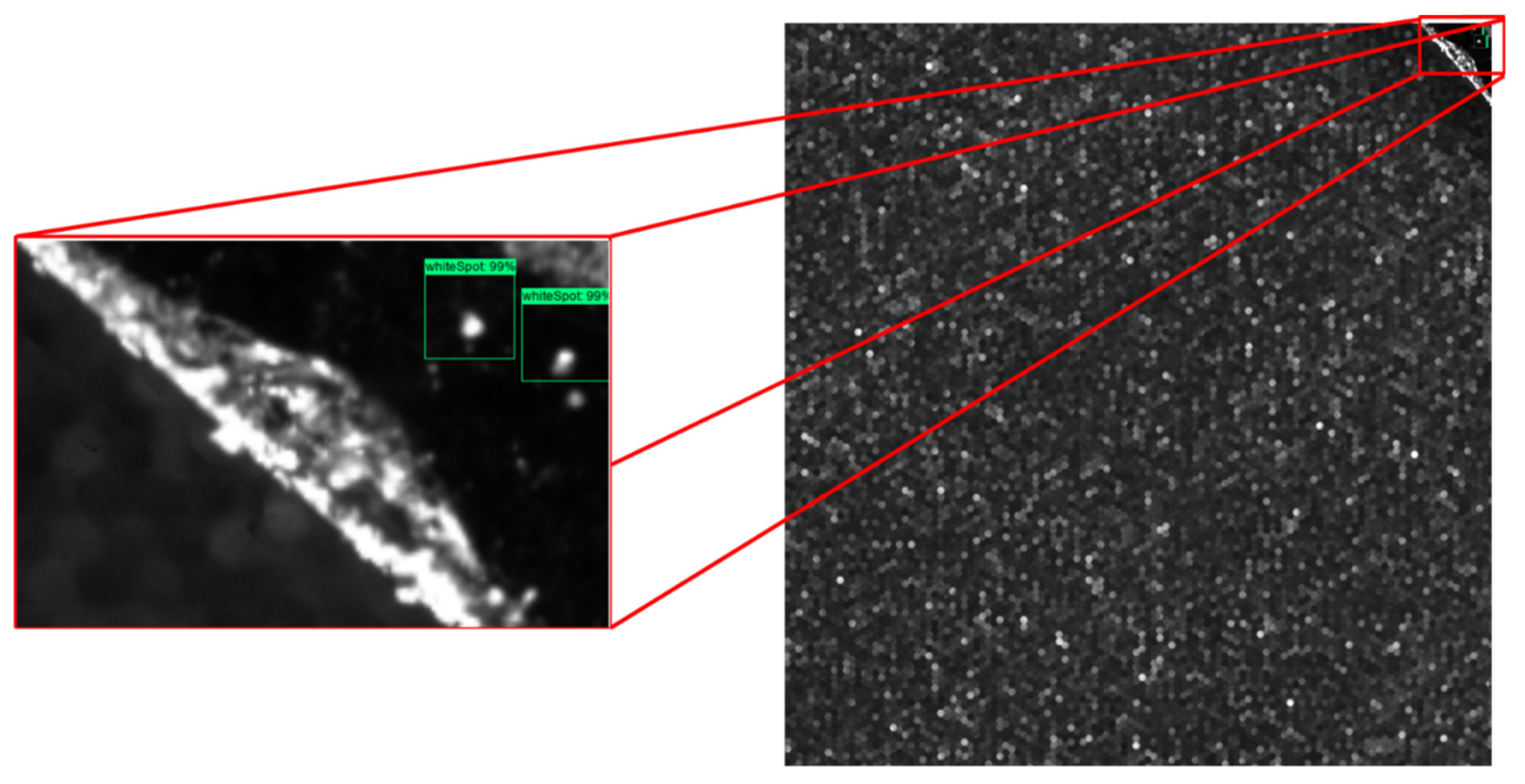

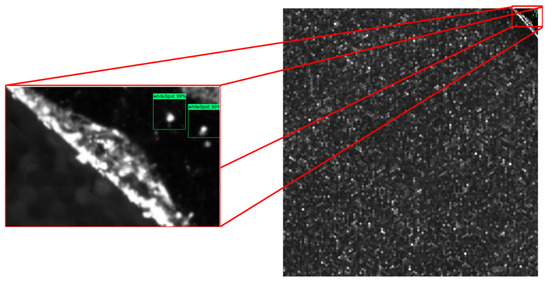

Additionally, a measurement was taken to test and validate the accuracy of the model in terms of the minimum detectable particle size and the model's ability to distinguish defects from the background. The model's accuracy for the minimum detectable particle size was found to be 16 µm along the long semi-axis. Figure 5 shows three plenoptic images fed to the ADR system for defect detection, classification, and localization. As can be seen, the model detected most of the defects with high confidence (>98% on average). The ADR model was designed so that a user could manually change the minimum confidence level (detection sensitivity). As the minimum confidence level is lowered, the model detects more defects, although with lower accuracy.
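The user-adjustable minimum confidence level amounts to a score filter applied to the detector's output. The sketch below uses a hypothetical `Detection` record and function name; it is not the interface of the developed ADR software.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str                               # defect class name
    score: float                             # detection confidence in [0, 1]
    box: Tuple[float, float, float, float]   # (xmin, ymin, xmax, ymax)


def filter_by_confidence(detections: List[Detection], min_score: float) -> List[Detection]:
    """Keep only detections at or above the user-selected minimum confidence."""
    if not 0.0 <= min_score <= 1.0:
        raise ValueError("min_score must lie in [0, 1]")
    return [d for d in detections if d.score >= min_score]
```

Lowering `min_score` can only add detections to the returned list, never remove them, which matches the trade-off described above: higher sensitivity, but more low-confidence (and potentially false) detections.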

Figure 5.

Detected defects of a plenoptic image by the ADR system.

Table 4 shows the confusion matrix for the developed model across all four defect categories. The model achieved high average precision and average recall. The lower recall values for the “blackSpot” and “hair” defects are attributed to the imbalanced distribution of instances for these two categories in the dataset.
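Precision, recall, and F1 follow from the true-positive (TP), false-positive (FP), and false-negative (FN) counts of a confusion matrix: precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 is their harmonic mean. Whether the reported average F1 of 0.81 is macro- or micro-averaged, and which IoU threshold matches a detection to a ground-truth box, is not specified; the sketch below assumes a macro average (each defect class weighted equally).

```python
from typing import Dict, Tuple


def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(counts: Dict[str, Tuple[int, int, int]]) -> float:
    """Unweighted mean of per-class F1 scores; counts maps class -> (TP, FP, FN)."""
    return sum(prf1(*c)[2] for c in counts.values()) / len(counts)
```

Under macro averaging, a rare class with low recall pulls the average down as strongly as a frequent one, so the low recall of under-represented classes directly limits the overall score.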

Table 4.

Confusion matrix for the developed ML model based on Faster R-CNN Inception v2 COCO in detection of medical MEMS defects.

4. Discussion

The development of an ML algorithm based on the Faster R-CNN Inception v2 COCO model to detect and localize surface defects in medical MEMS wafers proved to be an effective and accurate approach. Even though some ADR research using heuristic approaches has shown promising results, the complexity of the defects and the signal-to-noise ratio (SNR) directly affect the accuracy of these approaches. The effectiveness of the machine learning approach, however, depends greatly on the number of training samples. Correct and accurate data labelling plays a significant role in reducing latency and increasing accuracy. Nevertheless, the overall success rate of a machine learning algorithm trained on a relatively limited dataset can be increased by implementing and combining more confidence factors.

Overall, the machine learning approach is ideal for detection tasks that are sophisticated in terms of shape and color, require extensive thresholding, or involve complex defects. For simpler cases, however, such as standard object detection with very limited datasets, its disadvantages outweigh its benefits, mainly because of its need for significant system resources (e.g., CPU and memory) to process information beforehand. Moreover, data labelling is painstaking and time-consuming. The developed defect detection model was designed so that a user could manually change the minimum confidence level; as this threshold decreases, the model detects more defects, at the expense of lower confidence. The results also showed a statistically significant true-positive detection rate across the four identified categories. The model scored high in both average precision and average recall, although the low occurrence frequency of two defect categories, “blackSpot” and “hair”, resulted in lower recall values for them. Overall, the model detected true-positive defects across all four defect categories with an average F1 score of 0.81 and a processing time of 18 s per input image.

5. Conclusions

This research sought to develop an automated defect recognition (ADR) system and measurement software capable of detecting surface defects of MEMS using a deep learning approach. The developed algorithm can be applied at any stage of the production and assembly process to detect defects at both the entire-MEMS-wafer and single-component scale. The developed system achieved an average F1 score of 0.81 for true-positive defect detection, with a processing time of 18 s per image, based on six validation sample images containing 371 labels.

An ADR system, including the software and data-processing algorithms, was developed for the identification and quantification of imperfections in molded parts and assemblies, and their relation to part functionality, using plenoptic MEMS images. The ADR software was developed to obtain image data from the plenoptic system. A deep learning neural network algorithm was developed based on Faster R-CNN Inception v2 COCO using a transfer learning approach. The defects' features were then evaluated, labelled, and fed into the developed ML model for training. The efficacy of the ADR system in detecting, localizing, and classifying defects in MEMS was subsequently evaluated and tested. Upon detection, bounding boxes with information about the defects, alongside their detection confidence percentage, were added to the input images, which could be sent to a central system for further analysis and monitoring. The research approach proved to be a reliable and high-accuracy method for detecting surface defects in medical MEMS, and the results could be applied to similar detection scenarios, including solar panel cells, micro-fractures, etc. The researchers aim to use the findings to develop a fully automatic sub-surface defect detection system for medical MEMS.

The findings of this study could significantly reduce manufacturing costs by offering a system with automated knowledge and inspection-data-based process feedback, enabling the detection and traceability of faults that may occur in MEMS production, especially for critical applications such as aerospace, space, and healthcare. This could provide a technological and competitive advantage in the growing manufacturing and production industry.

Author Contributions

Conceptualization, A.A. and J.K.; methodology, A.A. and J.K.; software, A.A.; validation, A.A.; formal analysis, A.A.; investigation, A.A.; resources, A.A.; data curation, A.A.; writing—original draft preparation, A.A. and J.K.; writing—review and editing, all; visualization, A.A.; supervision, J.K. and T.-H.G. All authors have read and agreed to the published version of the manuscript.

Funding

This project has received funding from the European Union’s HORIZON 2020 research and innovation program under Grant Agreement no. 768883.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The work presented in this paper is part of the collaborative research project A Complimentary Inspection Technique based on Computer Tomography and Plenoptic Camera for MEMS Components (CITCOM), funded by the European Collaborative Project Horizon 2020, with support from CSEM, aixACCT Systems GmbH, Raytrix GmbH, and Microsemi.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Weimer, D.; Thamer, H.; Fellmann, C.; Lütjen, M.; Thoben, K.D.; Scholz-Reiter, B. Towards 100% in-situ 2D/3D quality inspection of metallic micro components using plenoptic cameras. Procedia CIRP 2014, 17, 847–852.

2. Li, M.; Wan, S.; Deng, Z.; Wang, Y. Fabric defect detection based on saliency histogram features. Comput. Intell. 2019, 35, 517–534.

3. Ashour, M.W.; Khalid, F.; Abdul Halin, A.; Abdullah, L.N.; Darwish, S.H. Surface Defects Classification of Hot-Rolled Steel Strips Using Multi-directional Shearlet Features. Arab. J. Sci. Eng. 2019, 44, 2925–2932.

4. Luo, Q.; Sun, Y.; Li, P.; Simpson, O.; Tian, L.; He, Y. Generalized Completed Local Binary Patterns for Time-Efficient Steel Surface Defect Classification. IEEE Trans. Instrum. Meas. 2019, 68, 667–679.

5. Luo, Q.; Fang, X.; Sun, Y.; Liu, L.; Ai, J.; Yang, C.; Simpson, O. Surface defect classification for hot-rolled steel strips by selectively dominant local binary patterns. IEEE Access 2019, 7, 1–10.

6. Wang, X.; Whalley, D.C.; Silberschmidt, V.V. Non-destructive defect detection for MEMS devices using transient thermography. In Proceedings of the 2016 6th Electronic System-Integration Technology Conference (ESTC), Grenoble, France, 13–15 September 2016.

7. Li, X.; Zhao, Y.; Hu, T.; Zhang, Q.; Li, Y. A high-speed image super-resolution algorithm based on Sparse Representation for Mems Defect Detection. In Proceedings of the 11th IEEE Annual International Conference on Nano/Micro Engineered and Molecular Systems (NEMS), Matsushima Bay and Sendai MEMS City, Japan, 17–20 April 2016; pp. 17–20.

8. Devika, B.; George, N. Convolutional Neural Network for Semiconductor Wafer Defect Detection. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 6–11.

9. Chen, X.; Zhao, C.; Chen, J.; Zhang, D.; Zhu, K.; Su, Y. K-means clustering with morphological filtering for silicon wafer grain defect detection. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; pp. 1251–1255.

10. Shankar, N.G.; Zhong, Z.W. Defect detection on semiconductor wafer surfaces. Microelectron. Eng. 2005, 77, 337–346.

11. Tien, C.L.; Lai, Q.H.; Lin, C.S. Development of optical automatic positioning and wafer defect detection system. Meas. Sci. Technol. 2016, 27, 025205.

12. Amini, A.; Banitsas, K.; Cosmas, J. A comparison between heuristic and machine learning techniques in fall detection using Kinect v2. In Proceedings of the 2016 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Benevento, Italy, 15–18 May 2016; pp. 1–6.

13. Chen, H.; Pang, Y.; Hu, Q.; Liu, K. Solar cell surface defect inspection based on multispectral convolutional neural network. J. Intell. Manuf. 2020, 31, 453–468.

14. Cheng, K.C.C.; Chen, L.L.Y.; Li, J.W.; Li, K.S.M.; Tsai, N.C.Y.; Wang, S.J.; Huang, A.Y.A.; Chou, L.; Lee, C.S.; Chen, J.E.; et al. Machine Learning-Based Detection Method for Wafer Test Induced Defects. IEEE Trans. Semicond. Manuf. 2021, 34, 161–167.

15. Jizat, J.A.M.; Majeed, A.P.P.A.; Ahmad, A.F.; Taha, Z.; Yuen, E. Evaluation of the machine learning classifier in wafer defects classification. ICT Express 2021.

16. Zheng, X.; Zheng, S.; Kong, Y.; Chen, J. Recent advances in surface defect inspection of industrial products using deep learning techniques. Int. J. Adv. Manuf. Technol. 2021, 113, 35–58.

17. Schlosser, T.; Beuth, F.; Friedrich, M.; Kowerko, D. A Novel Visual Fault Detection and Classification System for Semiconductor Manufacturing Using Stacked Hybrid Convolutional Neural Networks. In Proceedings of the 2019 24th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Zaragoza, Spain, 10–13 September 2019; pp. 1511–1514.

18. Tello, G.; Al-Jarrah, O.Y.; Yoo, P.D.; Al-Hammadi, Y.; Muhaidat, S.; Lee, U. Deep-structured machine learning model for the recognition of mixed-defect patterns in semiconductor fabrication Processes. IEEE Trans. Semicond. Manuf. 2018, 31, 315–322.

19. Li, X.; Duan, C.; Zhi, Y.; Yin, P. Wafer crack detection based on yolov4 target detection method. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Chengdu, China, 11 July 2021; Volume 1802, pp. 1–7.

20. Chen, X.; Chen, J.; Han, X.; Zhao, C.; Zhang, D.; Zhu, K.; Su, Y. A light-weighted CNN model for wafer structural defect detection. IEEE Access 2020, 8, 24006–24018.

21. Chen, S.H.; Kang, C.H.; Perng, D.B. Detecting and measuring defects in wafer die using gan and yolov3. Appl. Sci. 2020, 10, 8725.

22. Valueva, M.V.; Nagornov, N.N.; Lyakhov, P.A.; Valuev, G.V.; Chervyakov, N.I. Application of the residue number system to reduce hardware costs of the convolutional neural network implementation. Math. Comput. Simul. 2020, 177, 232–243.

23. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

24. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

25. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

26. Brain, D.; Webb, G.I. On the Effect of Data Set Size on Bias And Variance in Classification Learning. In Proceedings of the Fourth Australian Knowledge Acquisition Workshop, Sydney, Australia, 5–6 December 1999; pp. 117–128.

27. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 8–11 September 2014; 8693 LNCS. Springer: Cham, Switzerland, 2014; pp. 740–755.

28. Cheon, S.; Lee, H.; Kim, C.O.; Lee, S.H. Convolutional Neural Network for Wafer Surface Defect Classification and the Detection of Unknown Defect Class. IEEE Trans. Semicond. Manuf. 2019, 32, 163–170.

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

30. TensorFlow 1 Detection Model Zoo-GitHub. Available online: https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf1_detection_zoo.md (accessed on 3 September 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).