An Efficient and Precise Remote Sensing Optical Image Matching Technique Using Binary-Based Feature Points

Abstract

1. Introduction

2. Materials and Methods

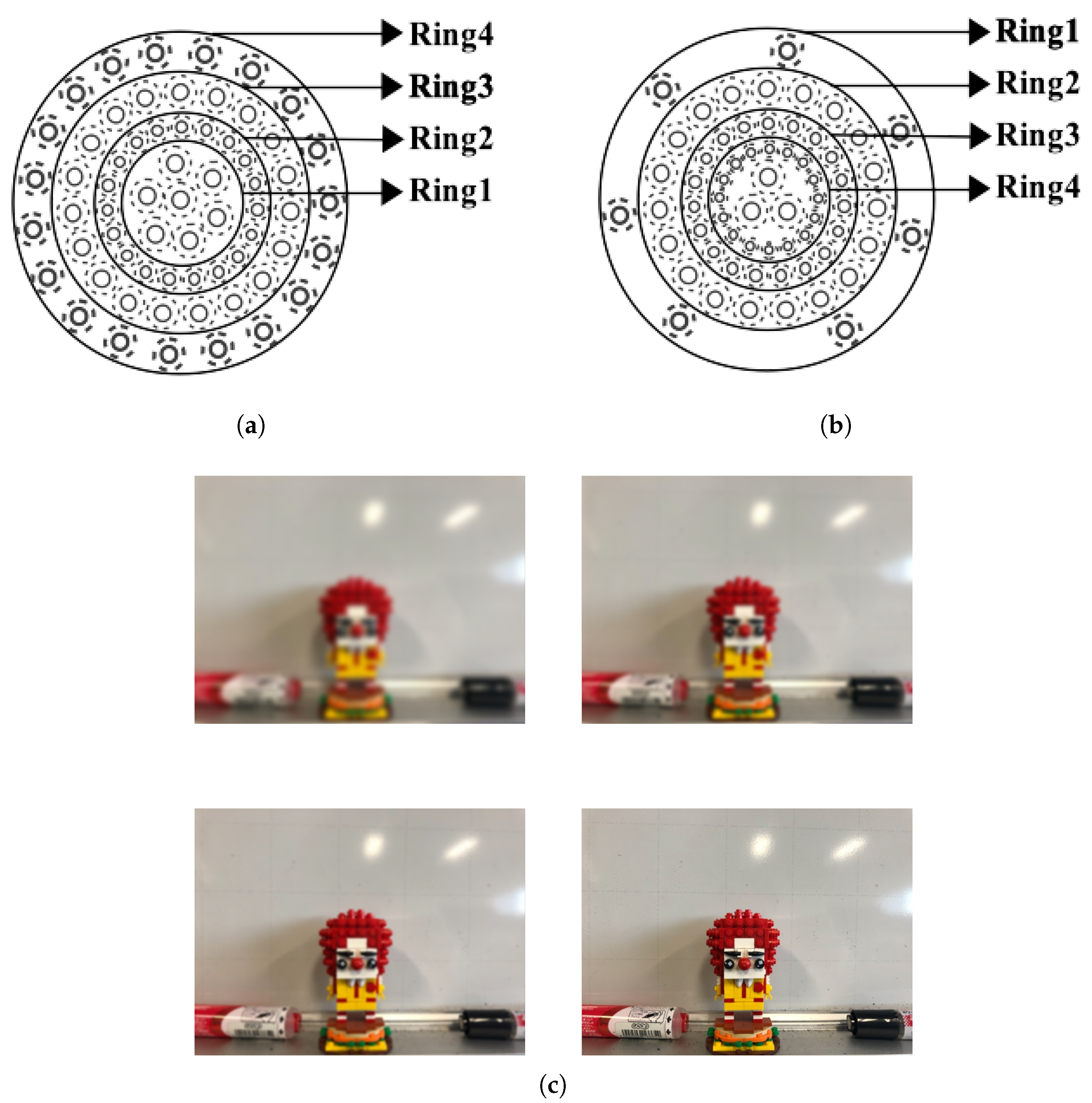

2.1. Enhanced Accelerated BRISK Algorithm

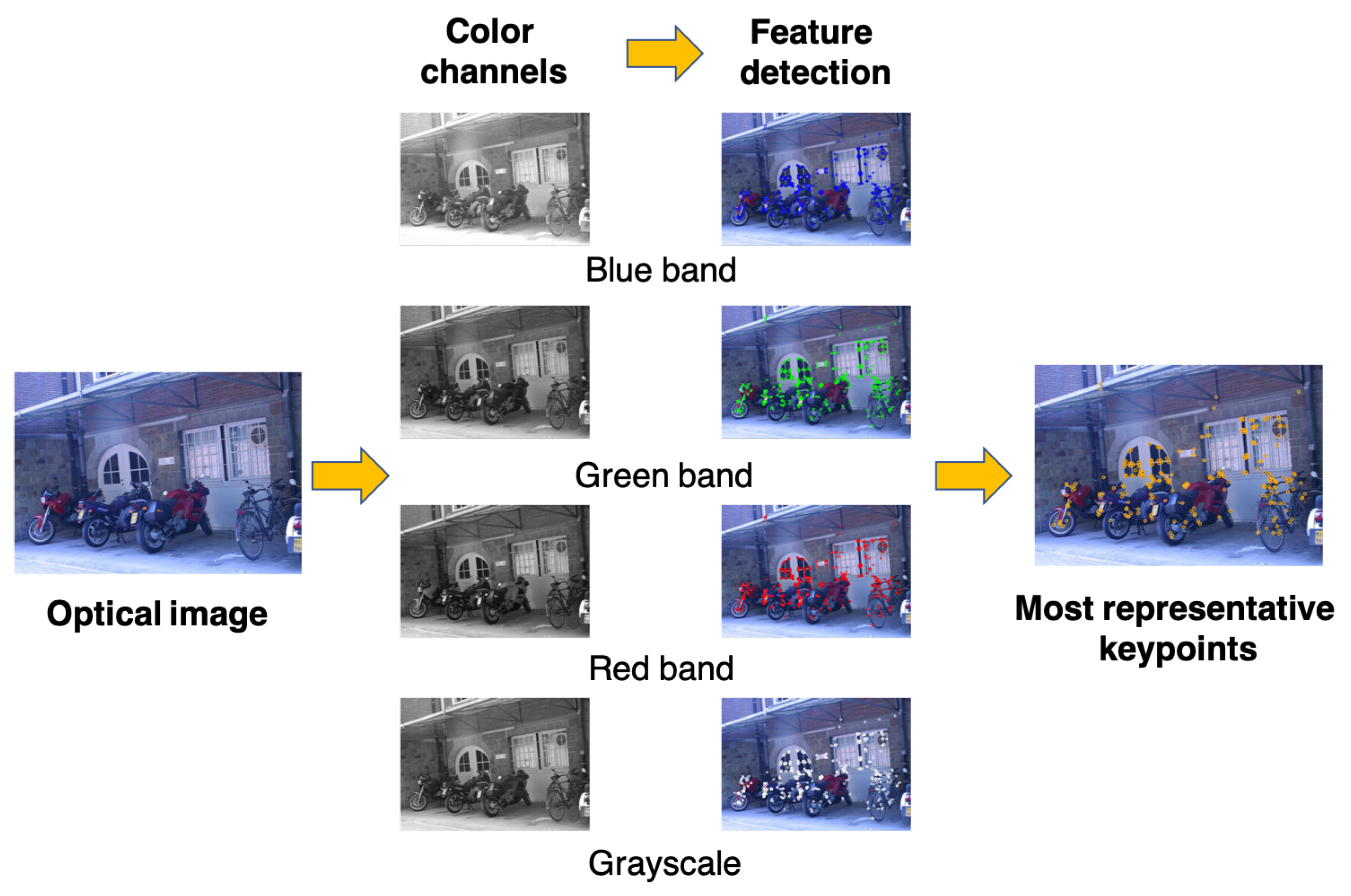

2.2. Synthetic-Colored Feature Descriptors

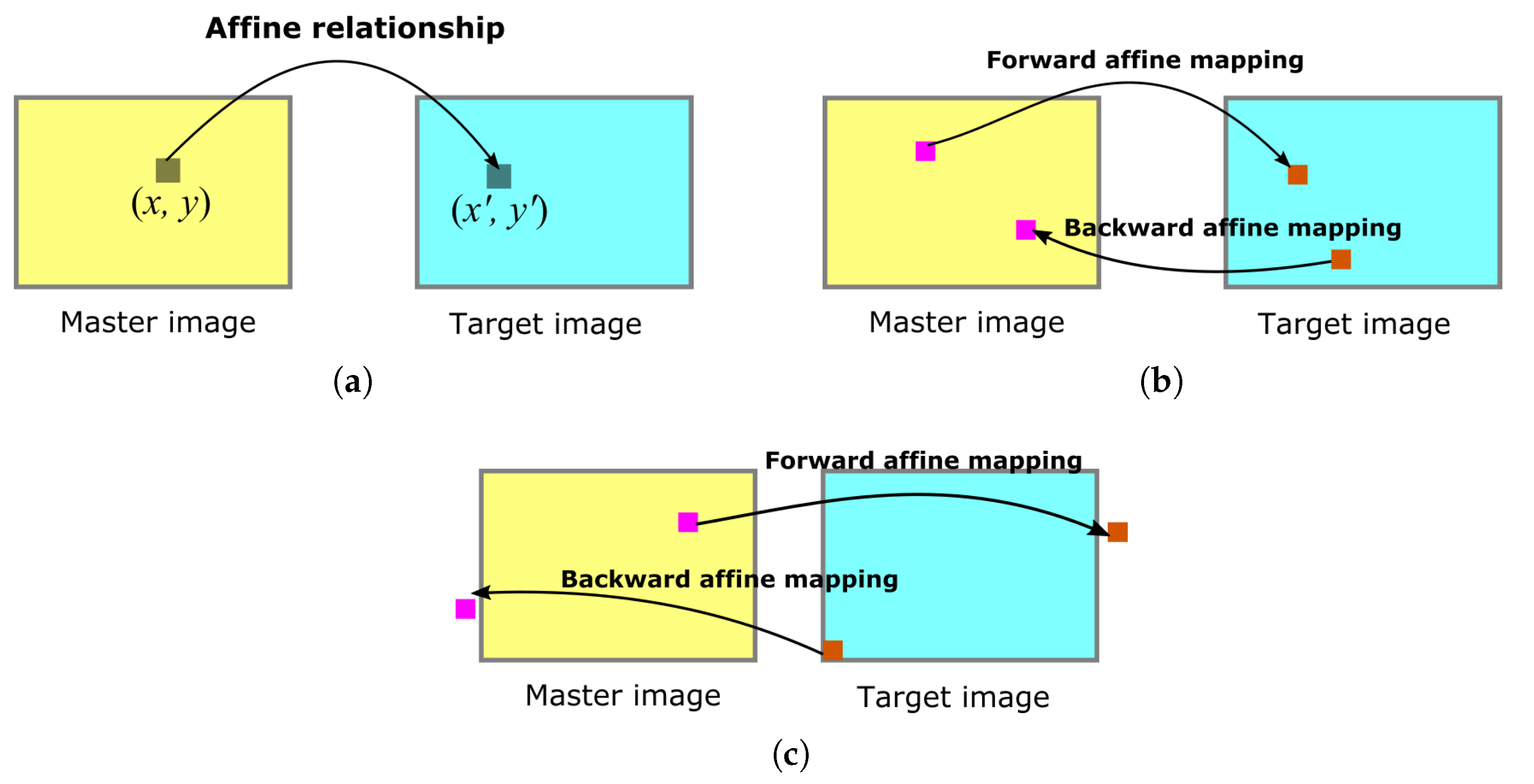

2.3. Geometric Mapping for Additional Matches

2.4. Outlier Removal and Evaluation Indicators

3. Experimental Results and Analysis

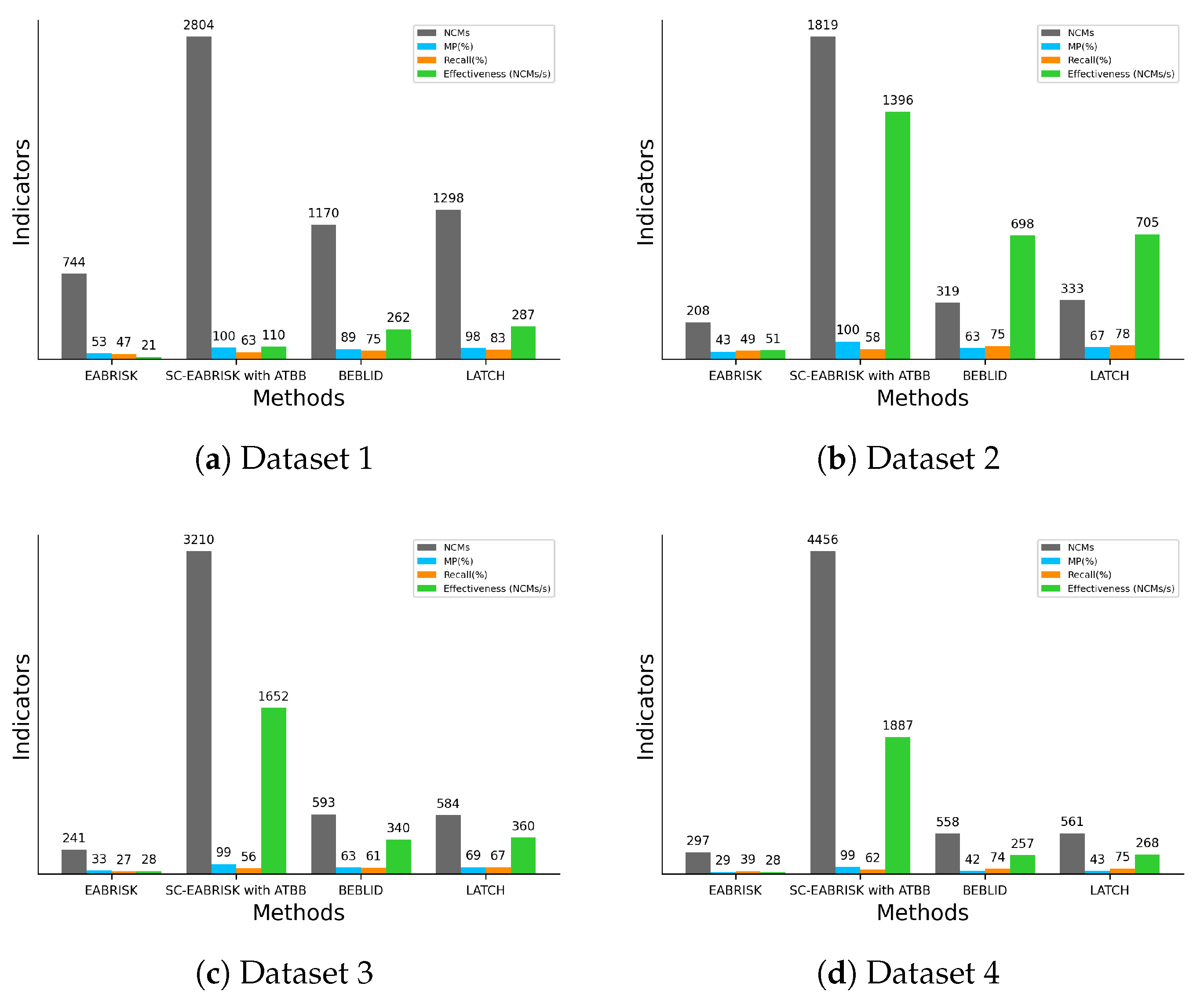

3.1. Experiments and Analyses on Benchmark Imagery Datasets

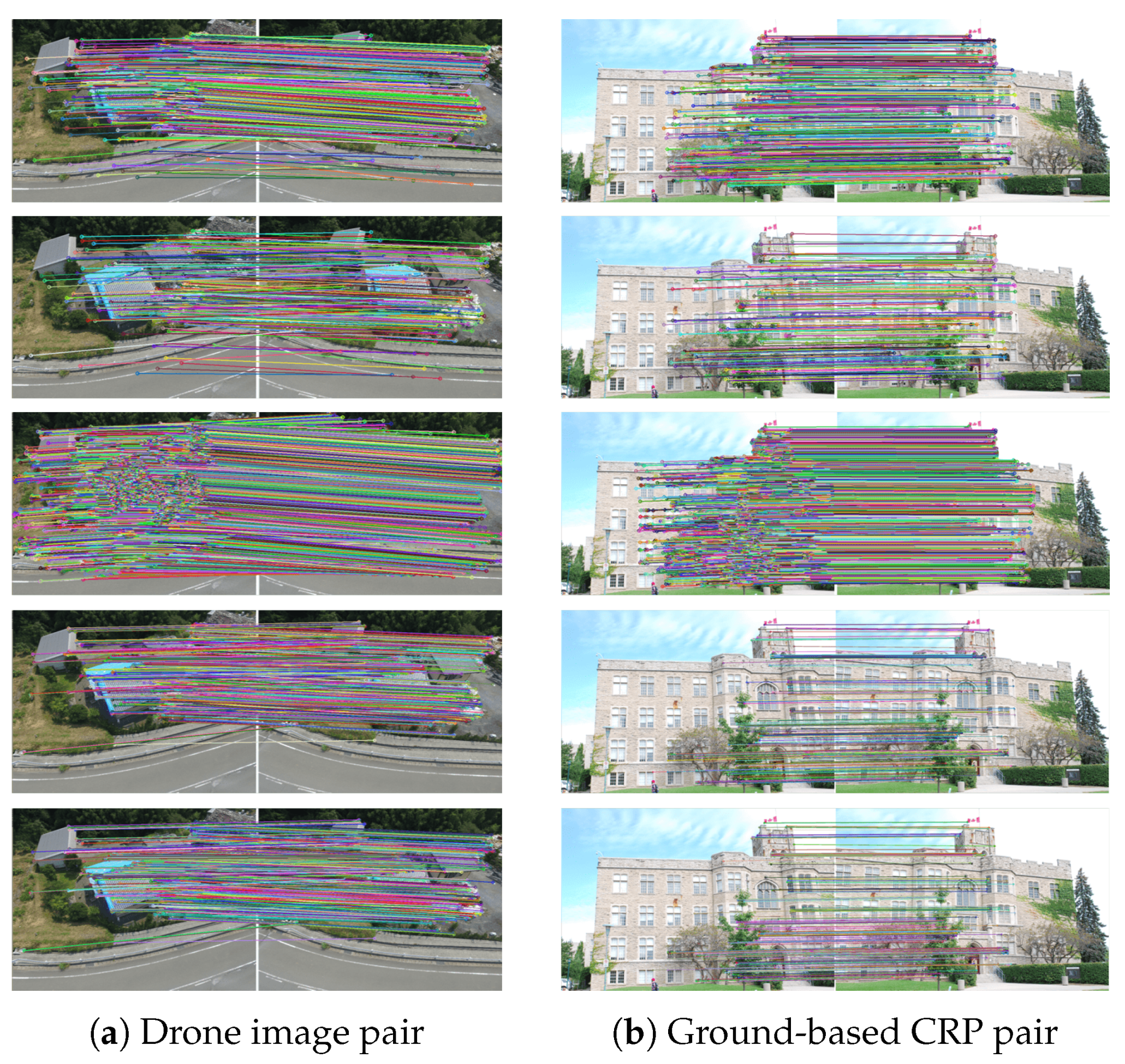

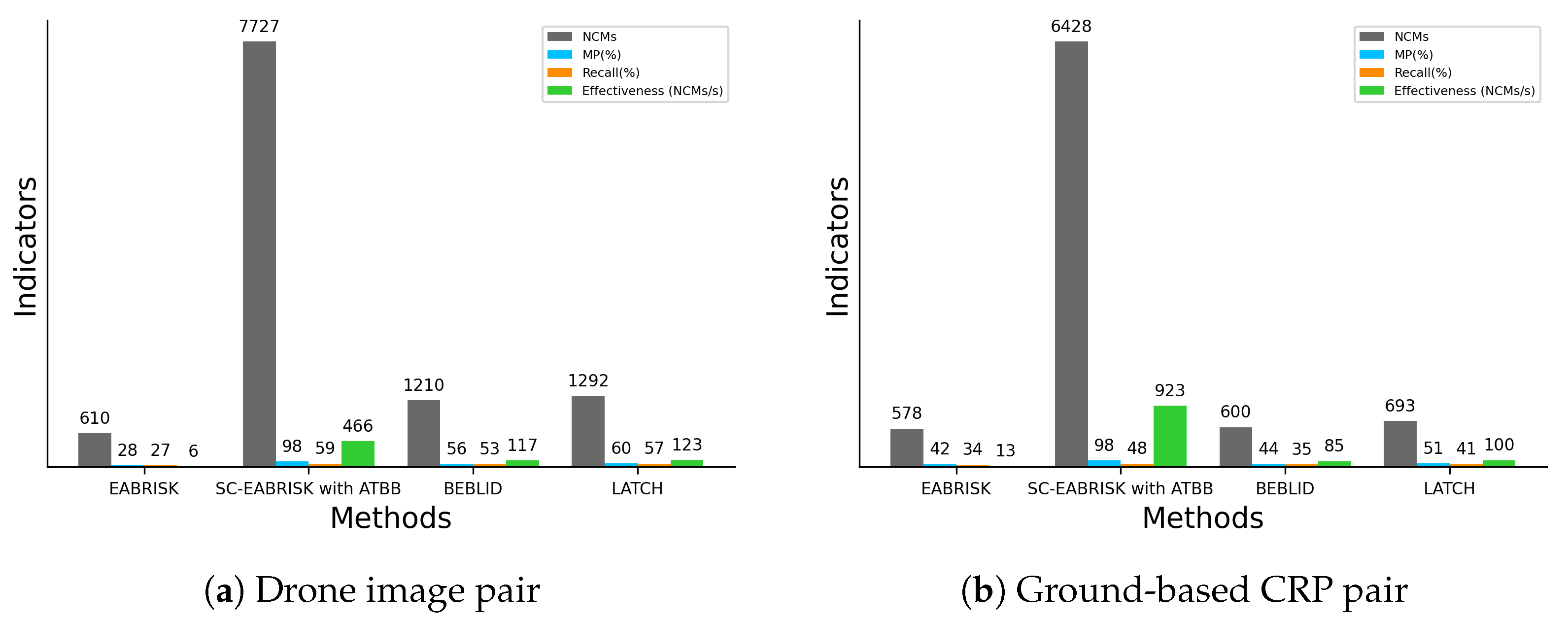

3.2. Experiments and Analyses on CRPs

3.3. Experiments and Analyses on Aerial and Satellite Images

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Color Space | Grayscale | Red | Green | Blue | SC Keypoints |
|---|---|---|---|---|---|
| Image pair: Dataset 1 | | | | | |
| Keypoints (master / target) | 1651 / 1568 | 1654 / 1576 | 1653 / 1577 | 1635 / 1564 | 1396 / 1407 |
| FPs | 2588768 | - | - | - | 1964172 |
| Image pair: Dataset 2 | | | | | |
| Keypoints (master / target) | 625 / 427 | 613 / 413 | 651 / 439 | 741 / 547 | 241 / 196 |
| FPs | 266875 | - | - | - | 47236 |
| Image pair: Dataset 3 | | | | | |
| Keypoints (master / target) | 1062 / 878 | 1034 / 804 | 1120 / 958 | 1013 / 913 | 363 / 311 |
| FPs | 932436 | - | - | - | 112893 |
| Image pair: Dataset 4 | | | | | |
| Keypoints (master / target) | 1644 / 753 | 1426 / 597 | 1819 / 926 | 2142 / 935 | 731 / 290 |
| FPs | 1237932 | - | - | - | 211990 |
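
Every FPs value in the table above equals the master keypoint count multiplied by the target keypoint count for the same color space. That is consistent with FPs counting the candidate keypoint pairs an exhaustive comparison would visit, but the table itself does not define the term, so treat that reading as an assumption. The sketch below only checks the arithmetic; the names `TABLE`, `candidate_pairs`, and `check_table` are illustrative and not taken from the paper.

```python
# Values copied from the table above.
# Each entry: (master keypoints, target keypoints, reported FPs).
TABLE = {
    "Dataset 1": {"Grayscale": (1651, 1568, 2588768), "SC": (1396, 1407, 1964172)},
    "Dataset 2": {"Grayscale": (625, 427, 266875), "SC": (241, 196, 47236)},
    "Dataset 3": {"Grayscale": (1062, 878, 932436), "SC": (363, 311, 112893)},
    "Dataset 4": {"Grayscale": (1644, 753, 1237932), "SC": (731, 290, 211990)},
}


def candidate_pairs(master: int, target: int) -> int:
    """Count the master-target keypoint pairs (assumed meaning of FPs)."""
    return master * target


def check_table(table: dict) -> bool:
    """Return True if every reported FPs value equals master * target."""
    return all(
        candidate_pairs(master, target) == fps
        for spaces in table.values()
        for (master, target, fps) in spaces.values()
    )


print("FPs == master * target in every row:", check_table(TABLE))

# Share of the grayscale pair count that remains with the SC keypoints.
for name, spaces in TABLE.items():
    gray_fps = spaces["Grayscale"][2]
    sc_fps = spaces["SC"][2]
    print(f"{name}: SC / Grayscale = {sc_fps / gray_fps:.3f}")
```

Because the pair count grows with the product of the two keypoint counts, a moderate drop in keypoints per image produces a much larger drop in FPs, which is what the SC Keypoints column shows for Datasets 2 to 4.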

| Algorithm | EABRISK | SC-EABRISK | SC-EABRISK with ATBB |
|---|---|---|---|
| Dataset 1 | 33.709 | 24.651 | 25.686 |
| Dataset 2 | 4.180 | 0.637 | 1.398 |
| Dataset 3 | 8.417 | 1.007 | 1.916 |
| Dataset 4 | 10.686 | 1.773 | 2.362 |
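
The table above does not state what its figures measure or in which unit, so the following sketch makes no claim about either. It only computes, for each dataset, the ratio of the EABRISK value to each of the two SC-EABRISK values, which makes the row-wise comparison easier to read. `RESULTS` and `ratios` are hypothetical names introduced for illustration.

```python
# Values copied from the table above:
# (EABRISK, SC-EABRISK, SC-EABRISK with ATBB).
RESULTS = {
    "Dataset 1": (33.709, 24.651, 25.686),
    "Dataset 2": (4.180, 0.637, 1.398),
    "Dataset 3": (8.417, 1.007, 1.916),
    "Dataset 4": (10.686, 1.773, 2.362),
}


def ratios(row: tuple) -> tuple:
    """Return EABRISK divided by SC-EABRISK and by SC-EABRISK with ATBB."""
    eabrisk, sc, sc_atbb = row
    return eabrisk / sc, eabrisk / sc_atbb


for name, row in RESULTS.items():
    r_sc, r_atbb = ratios(row)
    print(f"{name}: EABRISK/SC-EABRISK = {r_sc:.2f}, "
          f"EABRISK/(SC-EABRISK with ATBB) = {r_atbb:.2f}")
```

In every row the SC-EABRISK value is the smallest of the three, and adding ATBB raises it slightly while staying below the EABRISK value.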

| Color Space | Grayscale | Red | Green | Blue | SC Keypoints |
|---|---|---|---|---|---|
| Image pair: Drone image | | | | | |
| Keypoints (master / target) | 2688 / 2270 | 2692 / 2509 | 2713 / 2327 | 2429 / 1997 | 1253 / 992 |
| FPs | 6101760 | - | - | - | 1242976 |
| Image pair: Ground-based CRPs | | | | | |
| Keypoints (master / target) | 1712 / 2399 | 1999 / 2695 | 1621 / 2332 | 2200 / 2857 | 633 / 851 |
| FPs | 4107088 | - | - | - | 538683 |

| Algorithm | EABRISK | SC-EABRISK | SC-EABRISK with ATBB |
|---|---|---|---|
| Drone image pair | 94.177 | 14.561 | 16.595 |
| Ground-based CRP pair | 44.54 | 4.677 | 6.834 |

| Color Space | Grayscale | Red | Green | Blue | SC Keypoints |
|---|---|---|---|---|---|
| Image pair: Aerial image | | | | | |
| Keypoints (master / target) | 3361 / 2105 | 3594 / 2279 | 4013 / 2489 | 1591 / 1100 | 609 / 383 |
| FPs | 7074905 | - | - | - | 233247 |
| Image pair: Satellite image | | | | | |
| Keypoints (master / target) | 2821 / 1093 | 2754 / 1094 | 2910 / 1102 | 2756 / 1097 | 1745 / 698 |
| FPs | 3083353 | - | - | - | 1218010 |

| Algorithm | EABRISK | SC-EABRISK | SC-EABRISK with ATBB |
|---|---|---|---|
| Aerial image pair | 89.743 | 2.38 | 4.982 |
| Satellite image pair | 31.78 | 11.647 | 12.903 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cheng, M.-L.; Matsuoka, M. An Efficient and Precise Remote Sensing Optical Image Matching Technique Using Binary-Based Feature Points. Sensors 2021, 21, 6035. https://doi.org/10.3390/s21186035