Artificial Intelligence Surgery: How Do We Get to Autonomous Actions in Surgery?

Abstract

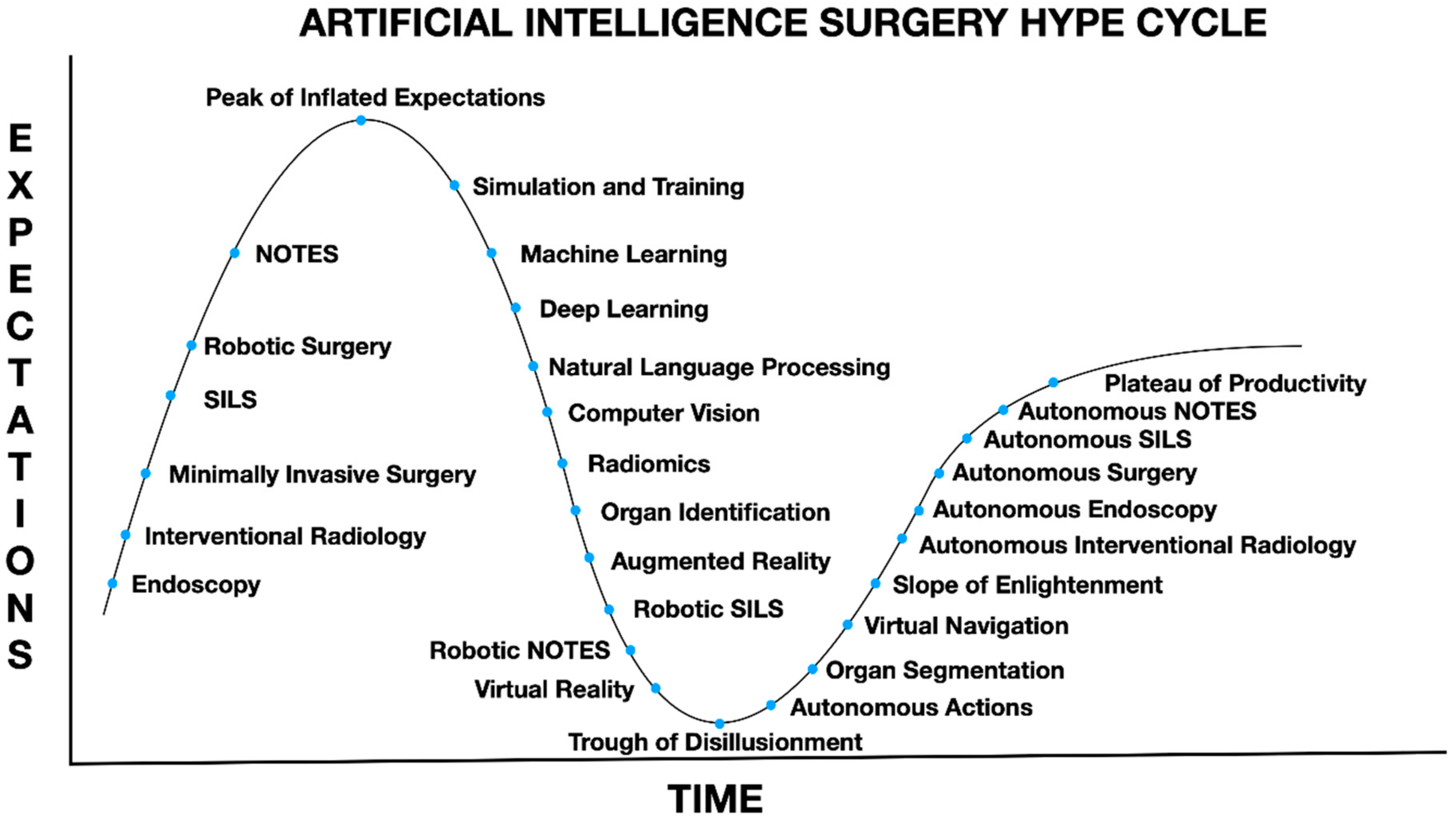

1. Introduction

2. Machine Learning

3. Natural Language Processing

4. Deep Learning and Computer Vision

4.1. Traditional vs. Deep Models

4.2. Advances in Computer Vision

4.3. CV in AIS

4.4. Reinforcement Learning

4.5. Challenges in AIS

4.5.1. Data Availability

4.5.2. Data Annotation

4.5.3. Ethics of Technological Advancements in AIS

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gumbs, A.A.; Frigerio, I.; Spolverato, G.; Croner, R.; Illanes, A.; Chouillard, E.; Elyan, E. Artificial Intelligence Surgery: How Do We Get to Autonomous Actions in Surgery? Sensors 2021, 21, 5526. https://doi.org/10.3390/s21165526