The Device–Object Pairing Problem: Matching IoT Devices with Video Objects in a Multi-Camera Environment

Abstract

1. Introduction

2. Related Work

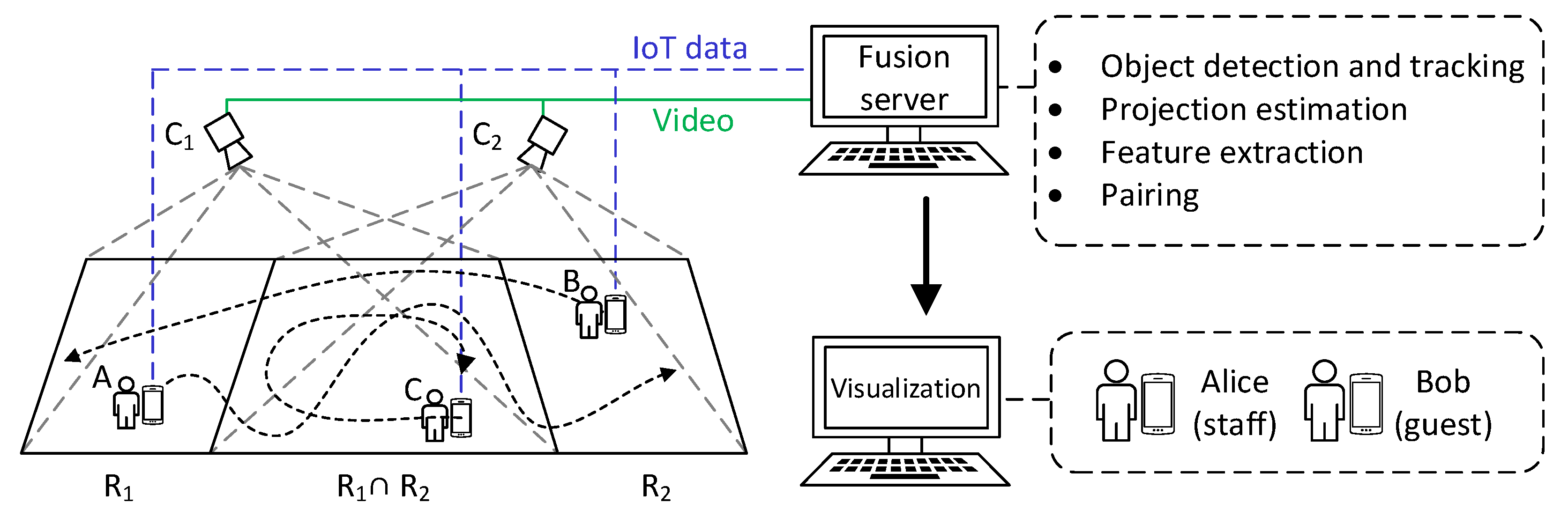

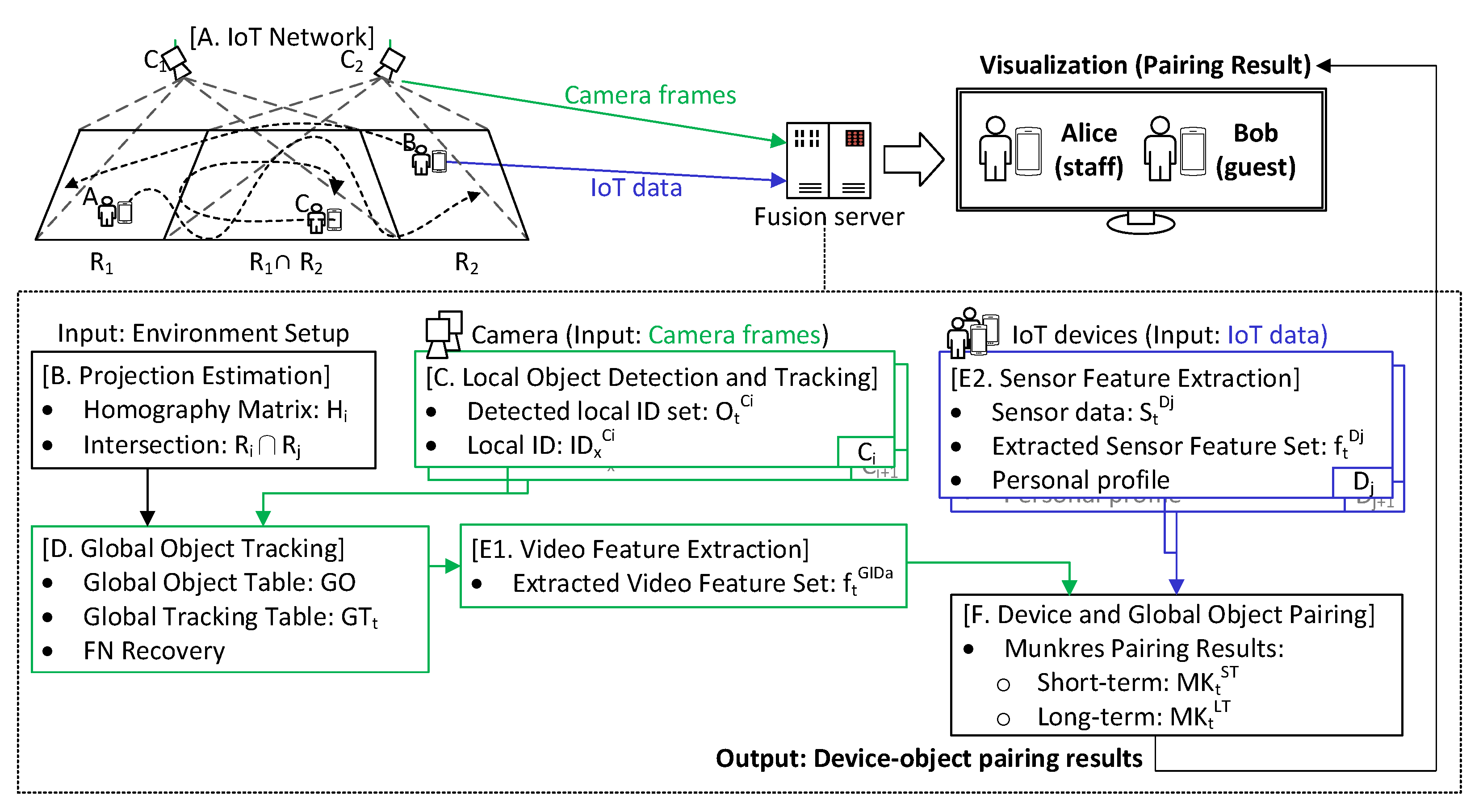

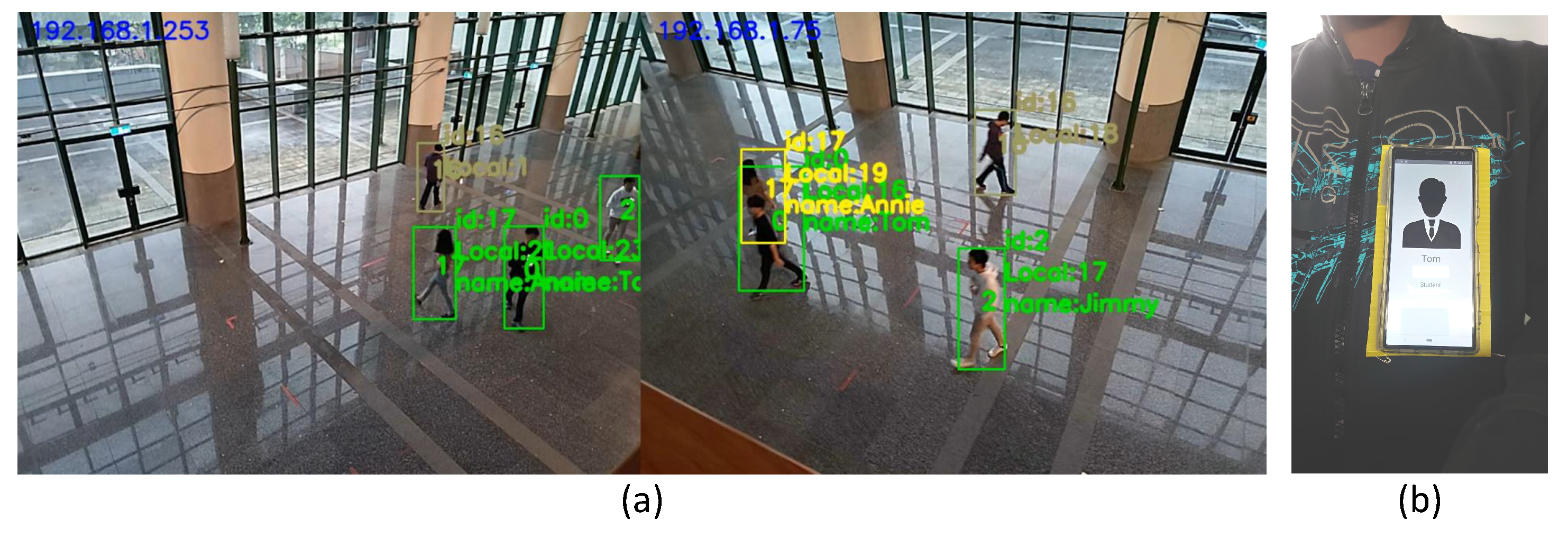

3. Fusion-Based Device–Object Pairing

3.1. IoT Network

3.2. Projection Estimation

3.3. Local Object Detection and Tracking

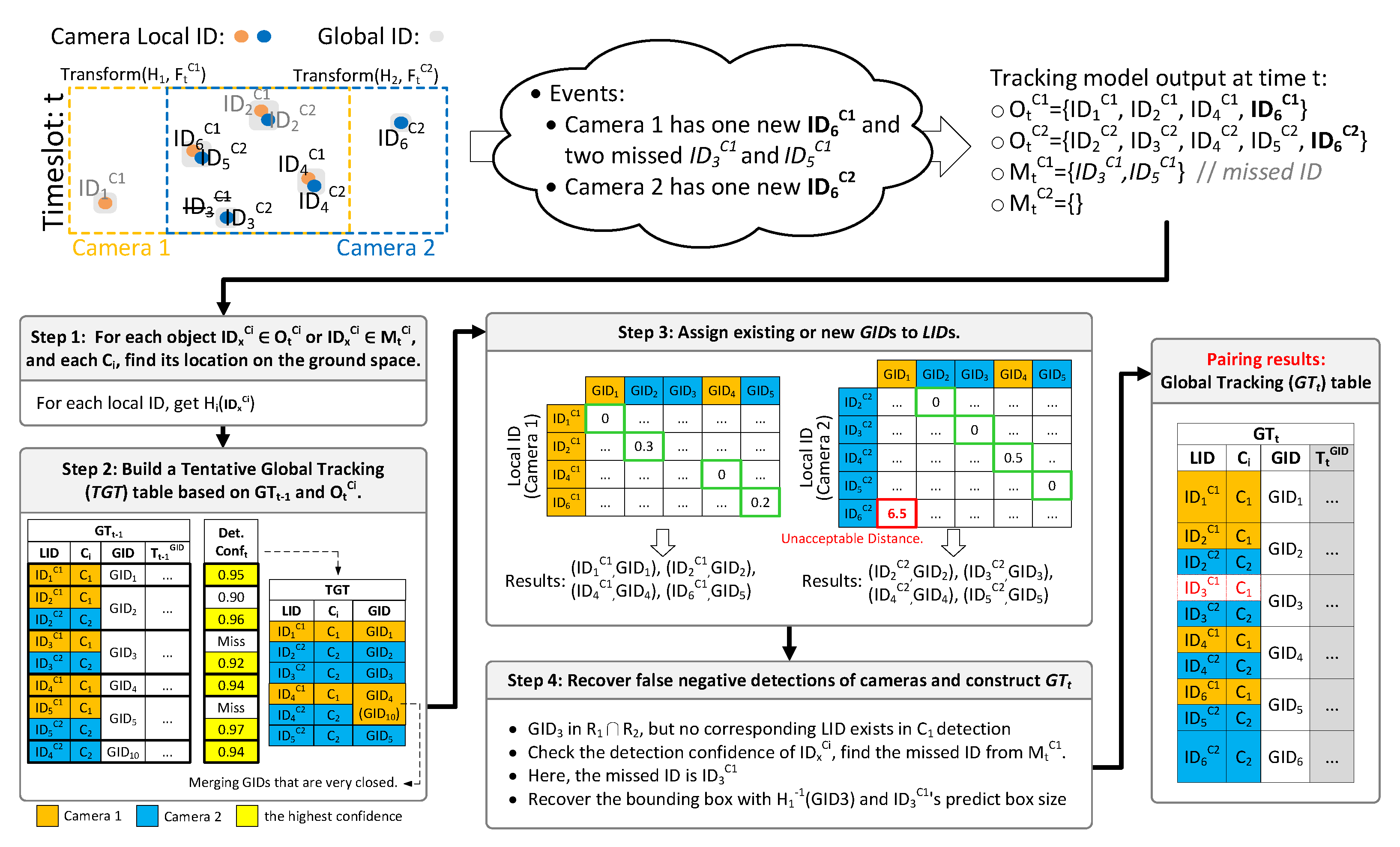

3.4. Global Object Tracking

- For each object or , and each , find x’s location on the ground space.

- Build a Tentative Global Tracking table (TGT).

- Assign existing or new s to s.

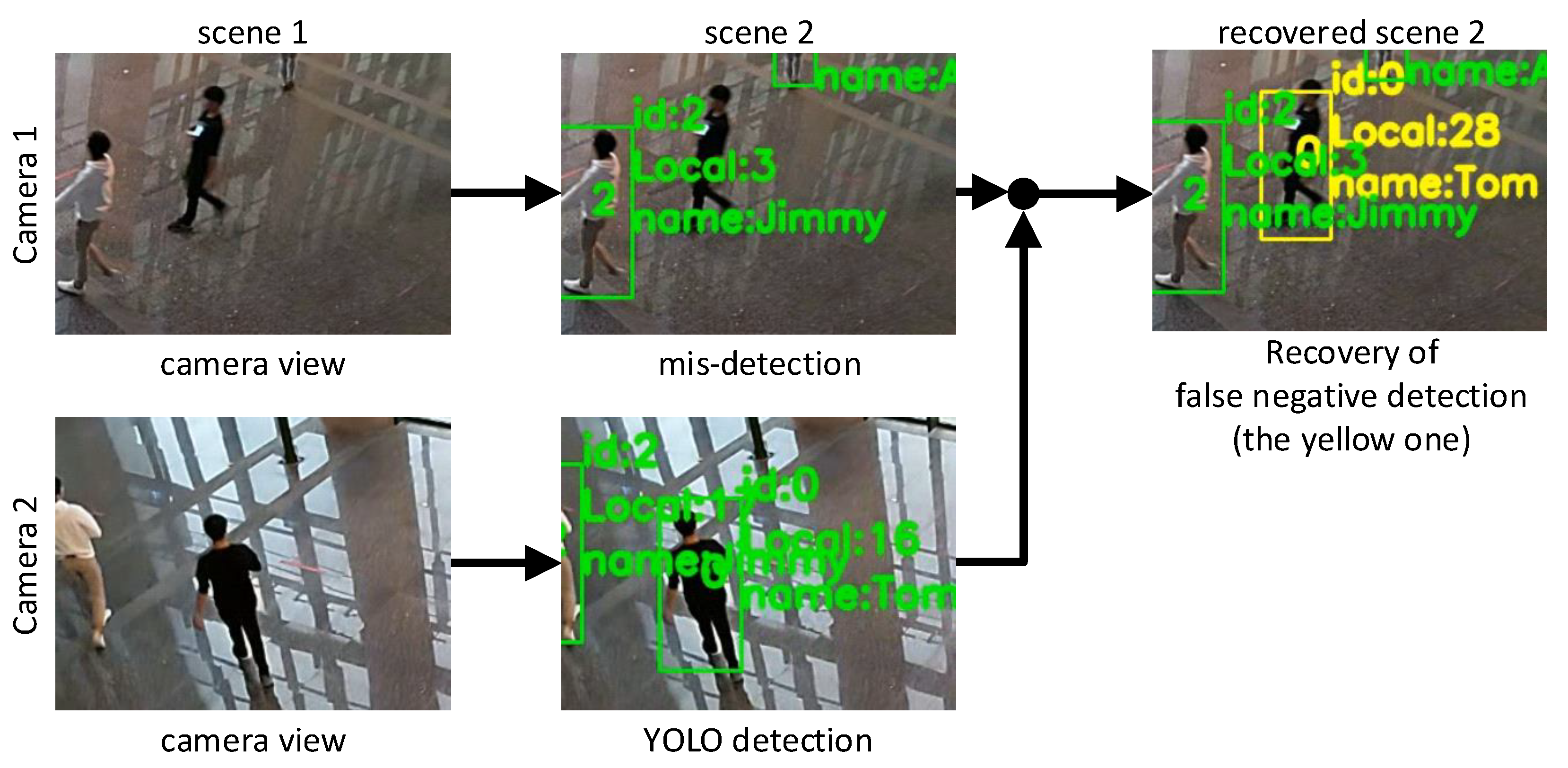

- Recover the cameras' false-negative detections and construct the Global Tracking table (GT).

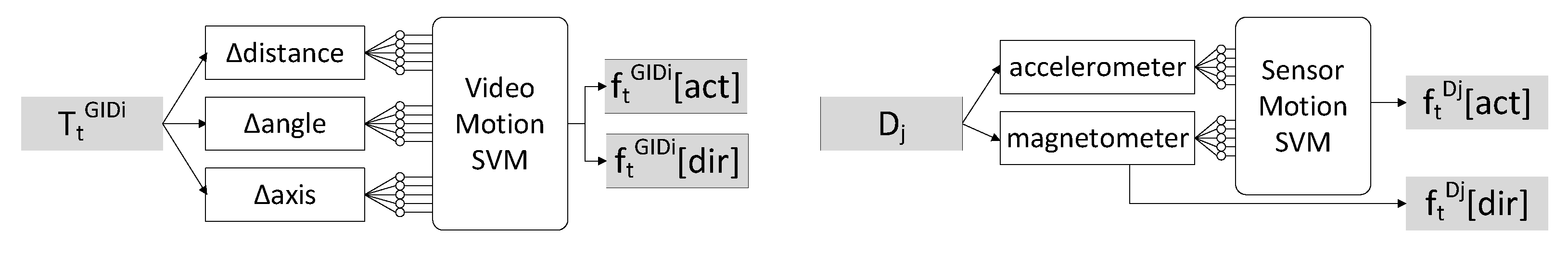
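The ground-projection and ID-assignment steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes each camera has a known 3×3 image-to-ground homography (as estimated in Section 3.2), takes the bottom-centre of a bounding box as the object's foot point on the ground, and, in place of the Hungarian method listed in the references, brute-forces the minimum-cost assignment, which is only practical for a handful of objects. The function names and the `max_dist` gating parameter are hypothetical.

```python
from __future__ import annotations

from itertools import permutations
from math import hypot, inf


def project_to_ground(h: list[list[float]], u: float, v: float) -> tuple[float, float]:
    """Map image pixel (u, v) to ground-plane coordinates with a 3x3 homography h."""
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    return x / w, y / w  # divide out the homogeneous coordinate


def foot_point(box: tuple[float, float, float, float]) -> tuple[float, float]:
    """Bottom-centre of an (x1, y1, x2, y2) box, assumed to touch the ground."""
    x1, _, x2, y2 = box
    return (x1 + x2) / 2, y2


def assign_gids(tracks: dict[int, tuple[float, float]],
                detections: list[tuple[float, float]],
                max_dist: float = 1.0) -> dict[int, int]:
    """Return {detection index: GID}, minimising total ground-plane distance.

    A detection may only join an existing GID within max_dist; opening a new
    GID costs max_dist. Brute force over all injective choices (a stand-in
    for the Hungarian method), so keep inputs small.
    """
    gids = list(tracks)
    slots = gids + [None] * len(detections)  # None means "start a new GID"
    best_choice: tuple = ()
    best_cost = inf
    for choice in permutations(slots, len(detections)):
        cost = 0.0
        for (x, y), gid in zip(detections, choice):
            if gid is None:
                cost += max_dist
                continue
            gx, gy = tracks[gid]
            d = hypot(x - gx, y - gy)
            if d > max_dist:
                cost = inf  # too far apart: this pairing is not allowed
                break
            cost += d
        if cost < best_cost:
            best_cost, best_choice = cost, choice
    next_gid = max(gids, default=0) + 1
    result: dict[int, int] = {}
    for i, gid in enumerate(best_choice):
        if gid is None:  # allocate a fresh GID for an unmatched detection
            gid, next_gid = next_gid, next_gid + 1
        result[i] = gid
    return result


# Usage with a hypothetical scaling homography and two existing global tracks.
h = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 1.0]]
print(project_to_ground(h, *foot_point((100, 50, 140, 250))))  # (60.0, 125.0)
print(assign_gids({1: (0.0, 0.0), 2: (5.0, 5.0)},
                  [(5.2, 5.0), (0.1, 0.0), (20.0, 20.0)]))  # {0: 2, 1: 1, 2: 3}
```

Charging `max_dist` for a new GID makes "open a new track" the fallback whenever no existing track is close enough, which mirrors the "assign existing or new" step; a real system would replace the brute-force loop with a polynomial-time assignment solver.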

3.5. Feature Extraction

- : distance between consecutive sampling points in a time slot.

- : angle between consecutive sampling points in a time slot.

- : axis between consecutive sampling points in a time slot.

- Three-axis accelerometer reading.

- Three-axis magnetometer reading.
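A minimal sketch of how features like those listed above might be computed, assuming the video-side samples in one time slot are ground-plane (x, y) positions (for example, from the projection in Section 3.4) and interpreting the "angle" feature as the heading change between successive steps. The "axis" feature is not reconstructed because its definition is not recoverable from this text, and the function names are hypothetical.

```python
from __future__ import annotations

from math import atan2, degrees, hypot, sqrt


def step_features(points: list[tuple[float, float]]) -> tuple[list[float], list[float]]:
    """Distances and turning angles between consecutive ground-plane samples.

    Returns (distances, turns): distances[i] is the length of step i, and
    turns[i] is the heading change in degrees from step i to step i + 1,
    wrapped to [-180, 180); positive means counter-clockwise (a left turn
    in an x-right, y-up frame).
    """
    steps = list(zip(points, points[1:]))
    distances = [hypot(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in steps]
    headings = [atan2(y1 - y0, x1 - x0) for (x0, y0), (x1, y1) in steps]
    turns = [(degrees(h1 - h0) + 180.0) % 360.0 - 180.0
             for h0, h1 in zip(headings, headings[1:])]
    return distances, turns


def magnitudes(samples: list[tuple[float, float, float]]) -> list[float]:
    """Euclidean norm of each three-axis reading (accelerometer or magnetometer)."""
    return [sqrt(x * x + y * y + z * z) for x, y, z in samples]


# Usage: a square path that turns left twice, and one accelerometer sample.
print(step_features([(0, 0), (1, 0), (1, 1), (0, 1)]))
print(magnitudes([(3.0, 4.0, 0.0)]))  # [5.0]
```

Wrapping the heading difference keeps a small turn across the ±180° boundary small instead of reporting it as a near-full rotation.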

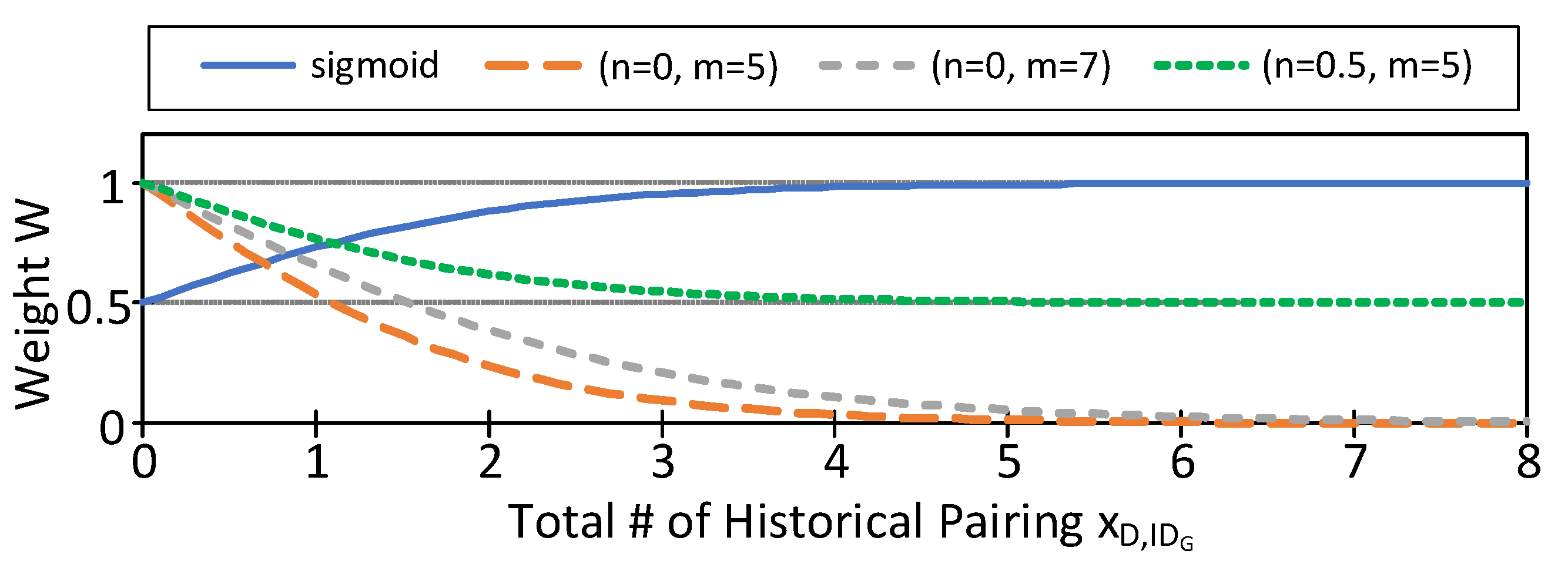

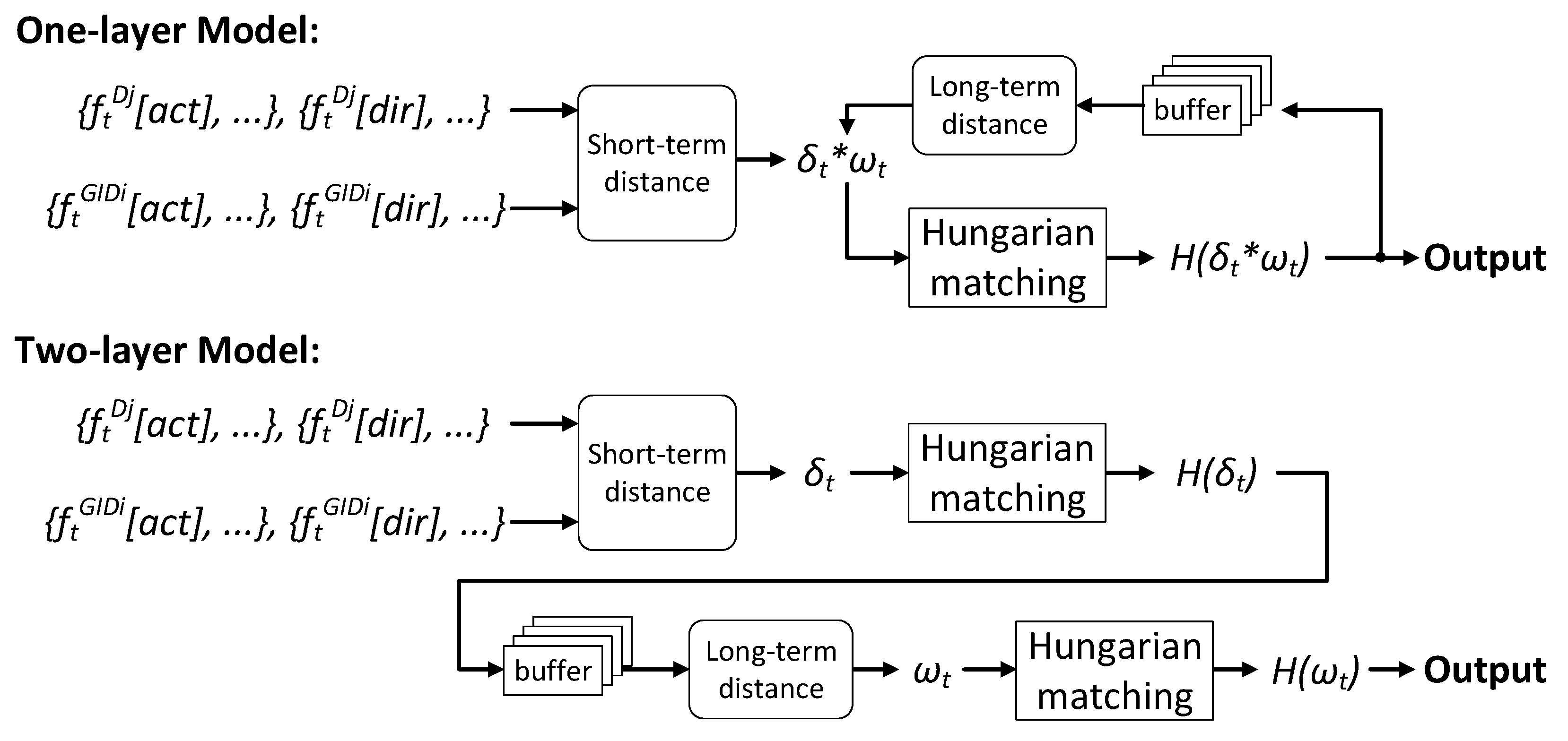

3.6. Device and Global Object Pairing

4. Performance Evaluation and Discussion

4.1. Experimental Setting

4.2. Pairing Accuracy

4.3. False Negative Recovery Capability

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| RMPE | Regional Multi-person Pose Estimation |
| FPS | Frames Per Second |
| MOT | Multiple Object Tracking |
| RSSI | Received Signal Strength Indicator |
| IMU | Inertial Measurement Unit |
| JSON | JavaScript Object Notation |
| SORT | Simple Online and Realtime Tracking |
| GT | Global Tracking table |
| LID | Local ID |
| GID | Global ID |
| TGT | Tentative Global Tracking table |
| SVM | Support Vector Machine |
| V-SVM | video motion SVM |
| S-SVM | sensor motion SVM |
| PITA | Person Identification and Tracking Accuracy |
| IDP | Identification Precision |
| IDR | Identification Recall |
| IDF1 | Identification F-score 1 |
| TSync | Time Synchronization |
| DTW | Dynamic Time Warping |
| IoU | Intersection over Union |
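For reference, the identification metrics abbreviated above are conventionally defined in multi-target tracking evaluation in terms of identity true positives (IDTP), identity false positives (IDFP), and identity false negatives (IDFN), counted under the best one-to-one matching between predicted and ground-truth identities. This is the common convention; the paper's exact counting rules may differ:

$$
\mathrm{IDP} = \frac{\mathrm{IDTP}}{\mathrm{IDTP} + \mathrm{IDFP}}, \qquad
\mathrm{IDR} = \frac{\mathrm{IDTP}}{\mathrm{IDTP} + \mathrm{IDFN}}, \qquad
\mathrm{IDF1} = \frac{2\,\mathrm{IDTP}}{2\,\mathrm{IDTP} + \mathrm{IDFP} + \mathrm{IDFN}} = \frac{2\,\mathrm{IDP}\cdot\mathrm{IDR}}{\mathrm{IDP} + \mathrm{IDR}}
$$

The last equality shows that IDF1 is the harmonic mean of IDP and IDR.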

References

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

- Fang, H.; Xie, S.; Tai, Y.; Lu, C. RMPE: Regional Multi-person Pose Estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2353–2362.

- Ceccato, V. Eyes and Apps on the Streets: From Surveillance to Sousveillance Using Smartphones. Crim. Justice Rev. 2019, 44, 25–41.

- Kostikis, N.; Hristu-Varsakelis, D.; Arnaoutoglou, M.; Kotsavasiloglou, C.; Baloyiannis, S. Towards remote evaluation of movement disorders via smartphones. In Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 5240–5243.

- Bramberger, M.; Doblander, A.; Maier, A.; Rinner, B.; Schwabach, H. Distributed Embedded Smart Cameras for Surveillance Applications. IEEE Comput. 2006, 39, 68–75.

- Vasuhi, S.; Vaidehi, V. Target Tracking using Interactive Multiple Model for Wireless Sensor Network. Inf. Fusion 2016, 27, 41–53.

- Dai, J.; Zhang, P.; Wang, D.; Lu, H.; Wang, H. Video Person Re-Identification by Temporal Residual Learning. IEEE Trans. Image Process. 2019, 28, 1366–1377.

- Rathod, V.; Katragadda, R.; Ghanekar, S.; Raj, S.; Kollipara, P.; Rani, I.A.; Vadivel, A. Smart Surveillance and Real-Time Human Action Recognition Using OpenPose. In ICDSMLA 2019; Springer: Singapore, 2019; pp. 504–509.

- Lienhart, R.; Maydt, J. An Extended Set of Haar-like Features for Rapid Object Detection. Proc. Int. Conf. Image Process. 2002, 1, 900–903.

- Berclaz, J.; Fleuret, F.; Turetken, E.; Fua, P. Multiple Object Tracking Using K-Shortest Paths Optimization. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1806–1819.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Yu, F.; Li, W.; Li, Q.; Liu, Y.; Shi, X.; Yan, J. POI: Multiple Object Tracking with High Performance Detection and Appearance Feature. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 36–42.

- Eshel, R.; Moses, Y. Homography based Multiple Camera Detection and Tracking of People in a Dense Crowd. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008.

- Spanhel, J.; Bartl, V.; Juranek, R.; Herout, A. Vehicle Re-Identification and Multi-Camera Tracking in Challenging City-Scale Environment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Neff, C.; Mendieta, M.; Mohan, S.; Baharani, M.; Rogers, S.; Tabkhi, H. REVAMP2T: Real-Time Edge Video Analytics for Multicamera Privacy-Aware Pedestrian Tracking. IEEE Internet Things J. 2020, 7, 2591–2602.

- Kokkonis, G.; Psannis, K.E.; Roumeliotis, M.; Schonfeld, D. Real-time Wireless Multisensory Smart Surveillance with 3D-HEVC Streams for Internet-of-Things (IoT). J. Supercomput. 2017, 73, 1044–1062.

- Zhang, T.; Chowdhery, A.; Bahl, P.; Jamieson, K.; Banerjee, S. The Design and Implementation of a Wireless Video Surveillance System. In Proceedings of the ACM International Conference on Mobile Computing and Networking (MobiCom), Paris, France, 7–11 September 2015; pp. 426–438.

- Tsai, R.Y.C.; Ke, H.T.Y.; Lin, K.C.J.; Tseng, Y.C. Enabling Identity-Aware Tracking via Fusion of Visual and Inertial Features. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Kao, H.W.; Ke, T.Y.; Lin, K.C.J.; Tseng, Y.C. Who Takes What: Using RGB-D Camera and Inertial Sensor for Unmanned Monitor. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Ruiz, C.; Pan, S.; Bannis, A.; Chang, M.P.; Noh, H.Y.; Zhang, P. IDIoT: Towards Ubiquitous Identification of IoT Devices through Visual and Inertial Orientation Matching During Human Activity. In Proceedings of the IEEE/ACM International Conference on Internet-of-Things Design and Implementation (IoTDI), Sydney, NSW, Australia, 21–24 April 2020; pp. 40–52.

- Cabrera-Quiros, L.; Tax, D.M.J.; Hung, H. Gestures In-The-Wild: Detecting Conversational Hand Gestures in Crowded Scenes Using a Multimodal Fusion of Bags of Video Trajectories and Body Worn Acceleration. IEEE Trans. Multimed. 2020, 22, 138–147.

- Nguyen, L.T.; Kim, Y.S.; Tague, P.; Zhang, J. IdentityLink: User-Device Linking through Visual and RF-Signal Cues. In Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing, Seattle, WA, USA, 13–17 September 2014; pp. 529–539.

- Van, L.D.; Zhang, L.Y.; Chang, C.H.; Tong, K.L.; Wu, K.R.; Tseng, Y.C. Things in the air: Tagging wearable IoT information on drone videos. Discov. Internet Things 2021, 1, 6.

- Tseng, Y.Y.; Hsu, P.M.; Chen, J.J.; Tseng, Y.C. Computer Vision-Assisted Instant Alerts in 5G. In Proceedings of the 2020 29th International Conference on Computer Communications and Networks (ICCCN), Honolulu, HI, USA, 3–6 August 2020; pp. 1–9.

- Zhang, L.Y.; Lin, H.C.; Wu, K.R.; Lin, Y.B.; Tseng, Y.C. FusionTalk: An IoT-Based Reconfigurable Object Identification System. IEEE Internet Things J. 2021, 8, 7333–7345.

- MQTT Version 5.0. Available online: http://docs.oasis-open.org/mqtt/mqtt/v5.0/mqtt-v5.0.html (accessed on 3 August 2021).

- Standard ECMA-404 The JSON Data Interchange Syntax. Available online: https://www.ecma-international.org/publications-and-standards/standards/ecma-404/ (accessed on 3 August 2021).

- Romero, A. Keyframes, InterFrame and Video Compression. Available online: https://blog.video.ibm.com/streaming-video-tips/keyframes-interframe-video-compression/ (accessed on 3 August 2021).

- Camera Calibration with OpenCV. Available online: https://docs.opencv.org/2.4/doc/tutorials/calib3d/camera_calibration/camera_calibration.html (accessed on 3 August 2021).

- Basic Concepts of the Homography Explained with Code. Available online: https://docs.opencv.org/master/d9/dab/tutorial_homography.html (accessed on 3 August 2021).

- The Shapely User Manual. Available online: https://shapely.readthedocs.io/en/latest/manual.html (accessed on 3 August 2021).

- Clementini, E.; Di Felice, P.; van Oosterom, P. A Small Set of Formal Topological Relationships Suitable for End-User Interaction. In International Symposium on Spatial Databases; Springer: Berlin/Heidelberg, Germany, 1993; pp. 277–295.

- Moore, K.; Landman, N.; Khim, J. Hungarian Maximum Matching Algorithm. Available online: https://brilliant.org/wiki/hungarian-matching/ (accessed on 3 August 2021).

- Kuhn, H.W. The Hungarian Method for the Assignment Problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

- Berndt, D.J.; Clifford, J. Using Dynamic Time Warping to Find Patterns in Time Series. In Proceedings of the KDD Workshop, Seattle, WA, USA, 31 July–1 August 1994; pp. 359–370.

| | YOLOv3 (2 Cameras) | SORT | GID Tracking | Device Pairing | Overall |
|---|---|---|---|---|---|
| Average Time Consumption (s) | 0.058 | 0.003 | 0.014 | 0.002 | 0.081 |
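Assuming the times in the table above are averages per processed frame (the table itself does not state the unit of work), the overall figure translates into a rough throughput estimate:

$$
\text{throughput} \approx \frac{1}{0.081\ \text{s}} \approx 12.3\ \text{FPS}
$$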

| | Train # | Test # | Accuracy |
|---|---|---|---|
| V-SVM | 11,020 | 2,756 | 0.975 |
| S-SVM | 143,164 | 35,792 | 0.996 |

| | Stop (Precision) | Stop (Recall) | Straight (Precision) | Straight (Recall) | Turn Left (Precision) | Turn Left (Recall) | Turn Right (Precision) | Turn Right (Recall) |
|---|---|---|---|---|---|---|---|---|
| V-SVM | 0.997 | 0.997 | 0.948 | 0.981 | 0.964 | 0.981 | 0.991 | 0.968 |
| S-SVM | 0.989 | 0.999 | 0.994 | 0.989 | 0.999 | 0.996 | 0.999 | 0.996 |

| | Case 1a | Case 1b | Case 2a | Case 2b | Case 3 |
|---|---|---|---|---|---|
| User 1 | 0.937 | 0.972 | 0.932 | 0.88 | 0.992 |
| User 2 | 0.915 | 0.910 | 0.857 | 0.855 | 0.867 |
| User 3 | 0.940 | 0.975 | 0.837 | 0.853 | 0.557 |
| Unknown | 0.927 | 0.882 | 0.725 | 0.71 | 0.508 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tong, K.-L.; Wu, K.-R.; Tseng, Y.-C. The Device–Object Pairing Problem: Matching IoT Devices with Video Objects in a Multi-Camera Environment. Sensors 2021, 21, 5518. https://doi.org/10.3390/s21165518
