Edge Caching Based on Collaborative Filtering for Heterogeneous ICN-IoT Applications

Abstract

1. Introduction

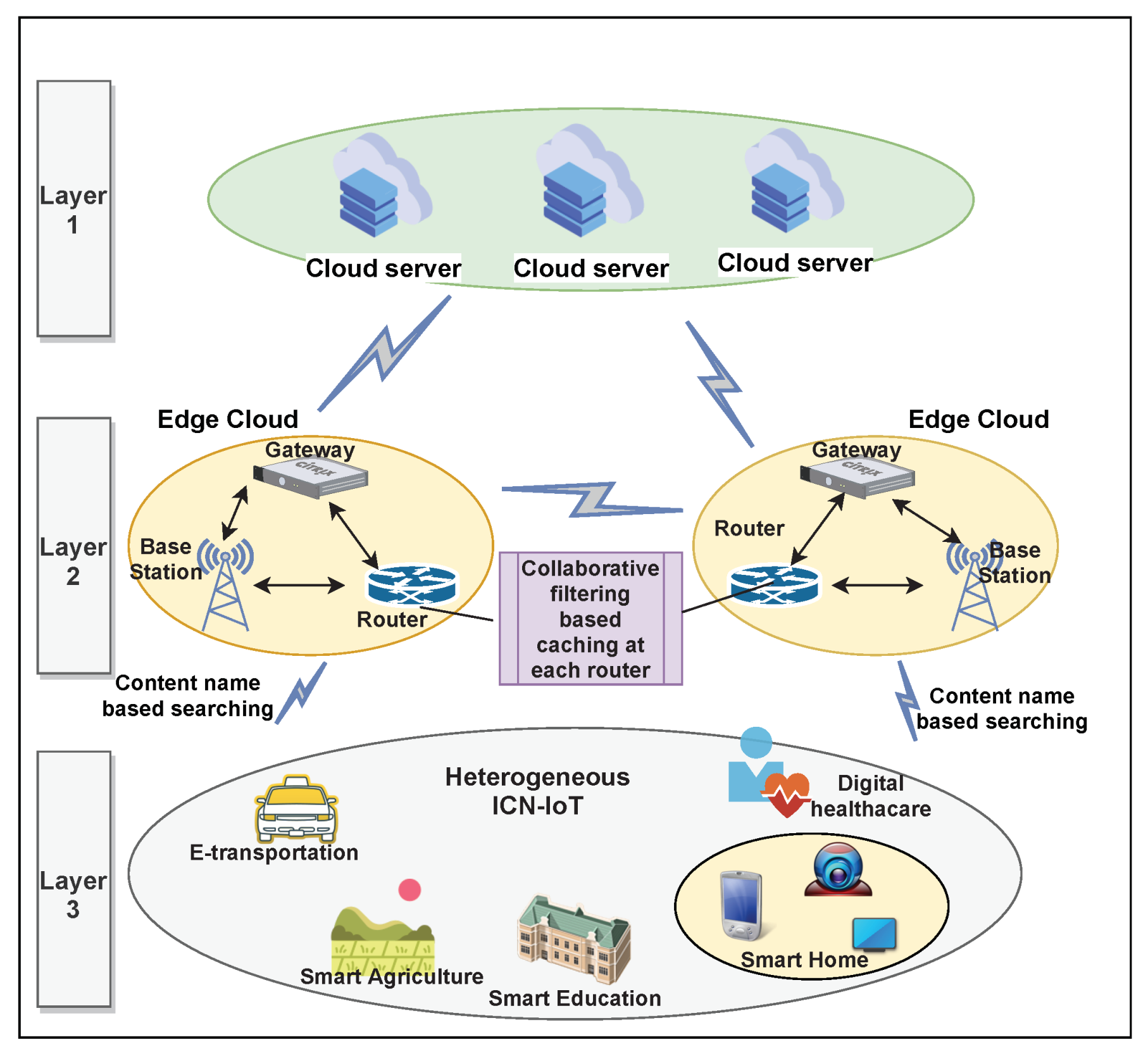

- We propose an enhanced ICN-IoT content caching strategy by enabling AI’s collaborative filtering within edge cloud to support heterogeneous IoT architectures for traffic management at conventional cloud computing model. An architecture is designed by combining ICN-IoT, edge, cloud, and AI for heterogeneous IoT applications to provide an enhanced hardware model which support user’s QoE.

- We propose a content-based collaborative filtering caching technique for intelligently caching content on edge nodes. Through the combination of a wireless communication model, and collaborative filtering caching model of edge nodes, a content fetching algorithm is designed to retrieve user’s data efficiently.

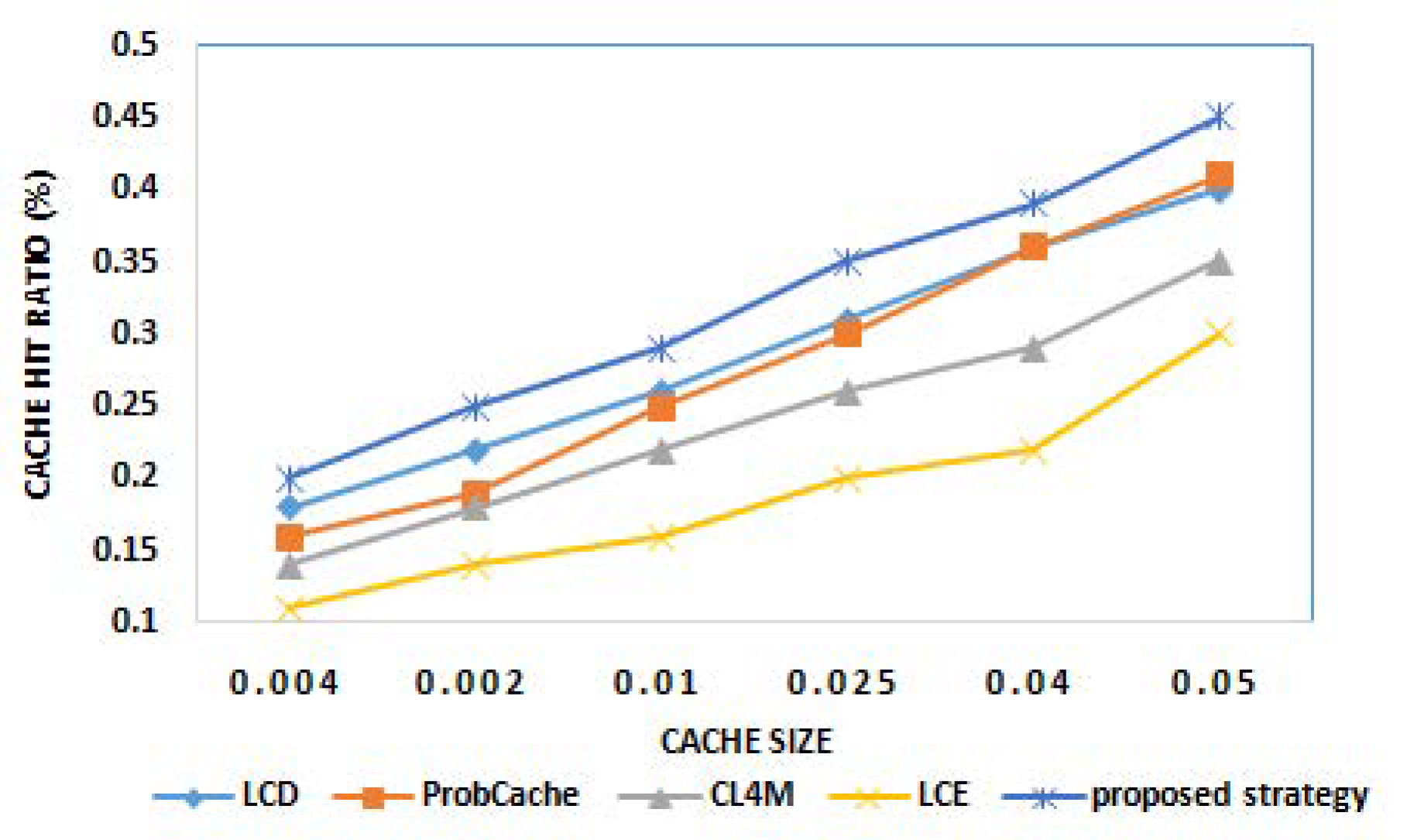

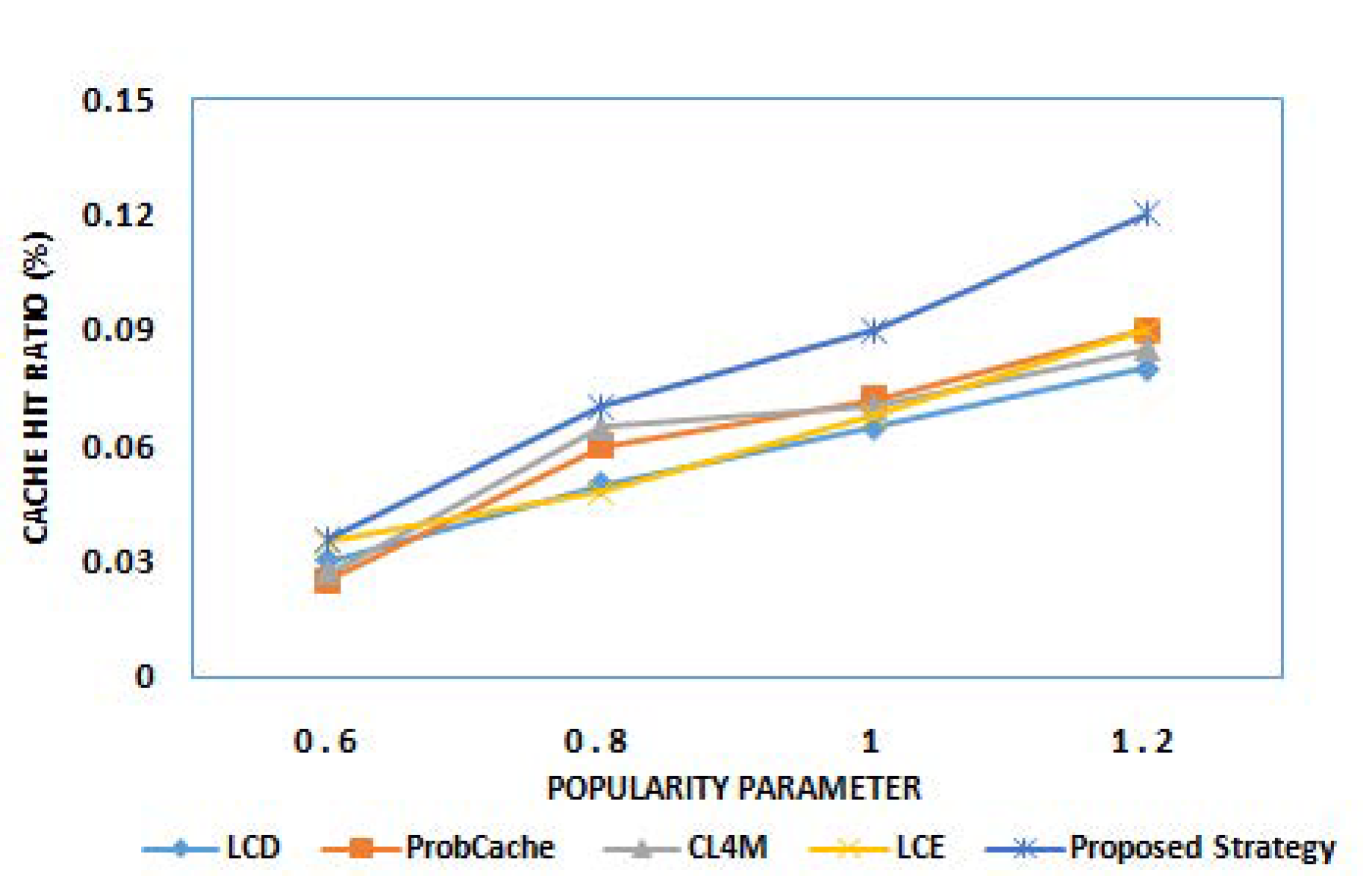

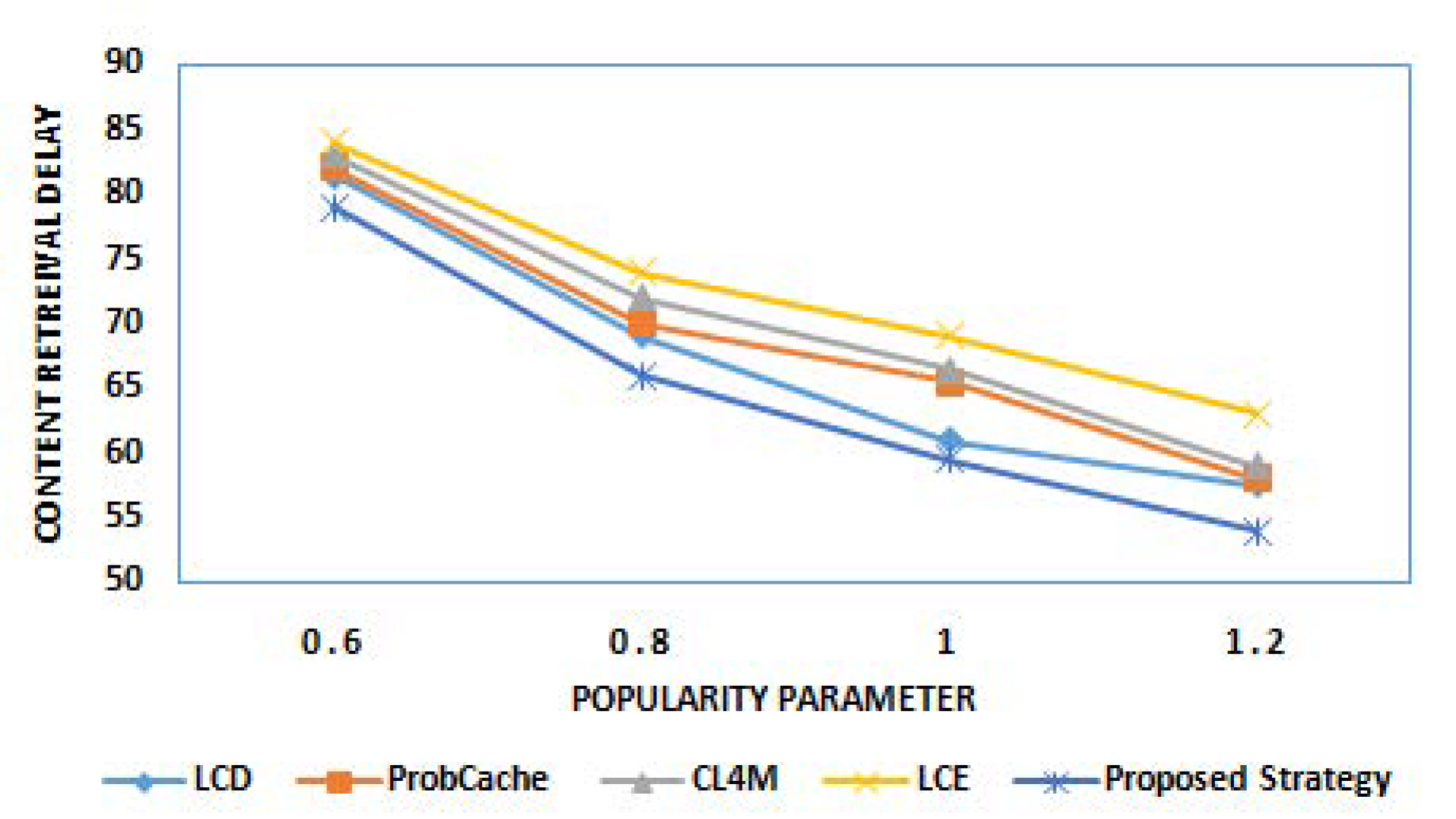

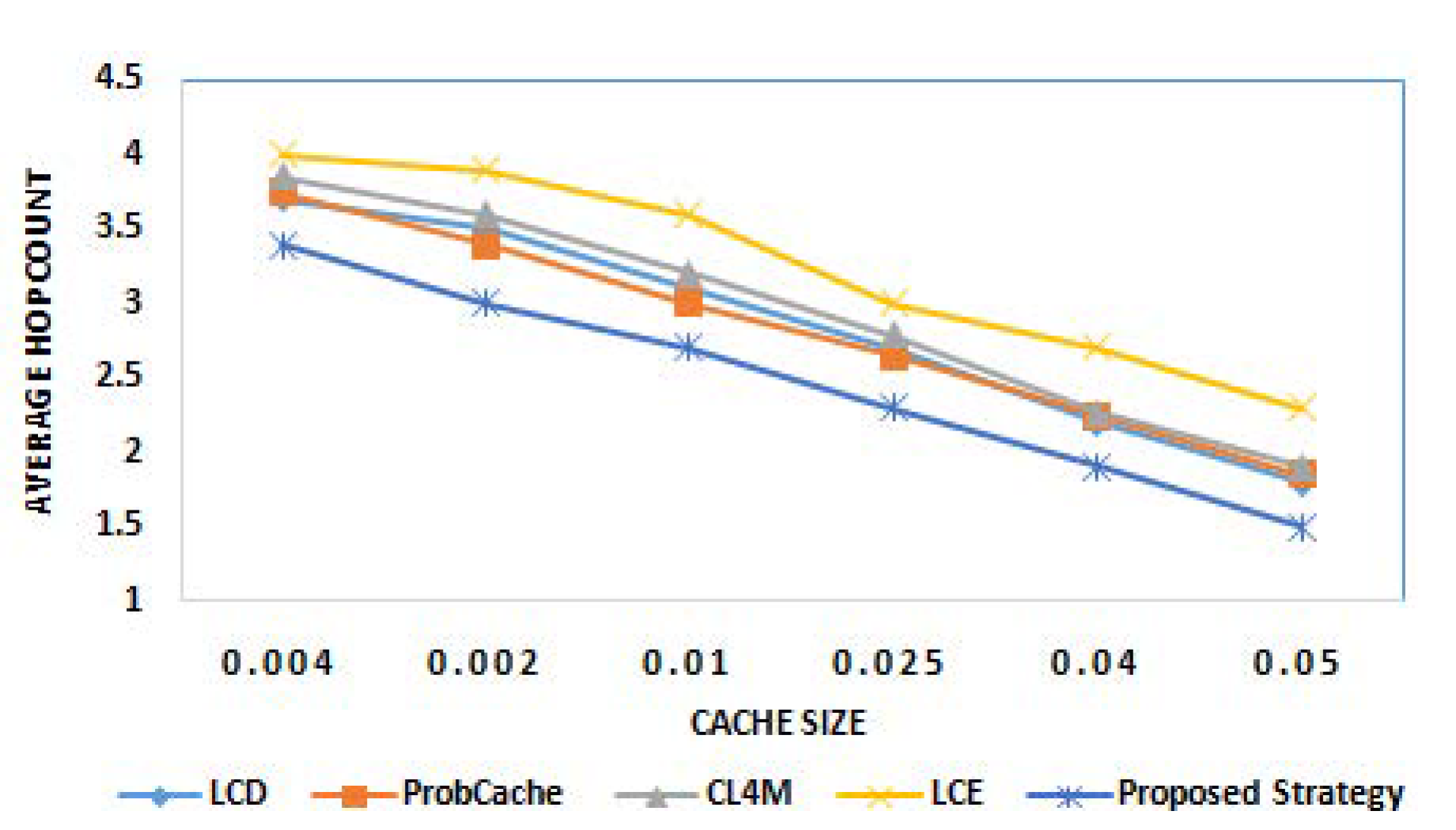

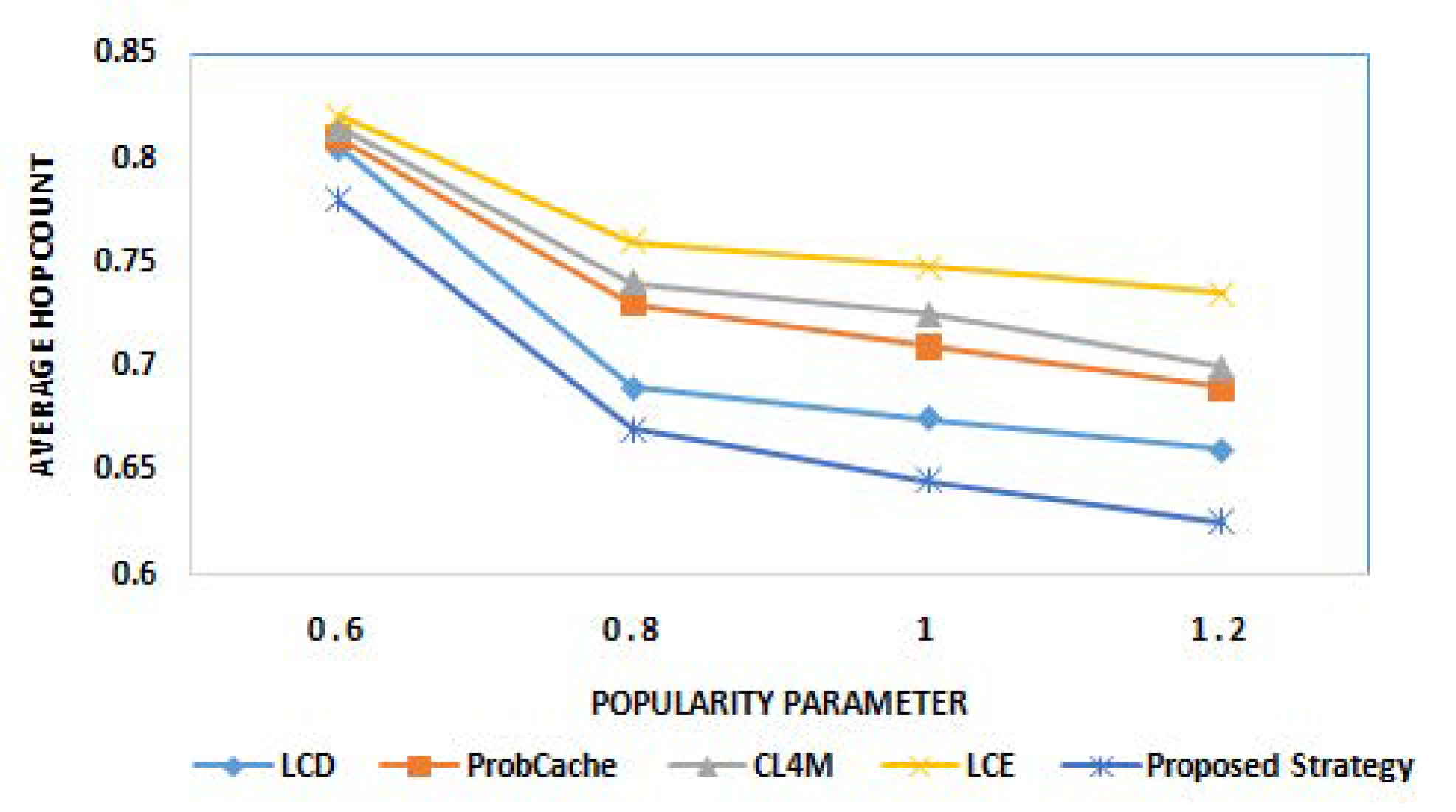

- We perform extensive simulations to validate the effectiveness of our proposed scheme over state-of-the-art caching strategies, such as LCE, LCD, ProbCache, and CL4M. The results obtained from experimentation prove that our proposed scheme significantly achieves higher cache hit ratio. In addition, the proposed scheme is efficient to achieve lower content delay and reduced path stretch when compared to these strategies.

2. Related Work

2.1. IoT and ICN

2.2. IoT and Edge Computing

2.3. Artificial Intelligence in Edge Caching

3. System Model

4. Proposed Framework

4.1. Edge Clustering

4.2. Edge Caching

4.3. Content Fetching

| Algorithm 1: Content Searching |
|---|
| Input: associated with |
| Output: |
| Begin: |
| 1 For each requested by do |
| 2 Check in ’s cache |
| 3 if ((() ← check () ) ≠ 1) |
| 4 if(( ← check ()) ≠ 1) |
| 5 cloud Database ← check() |
| 6 caches |
| 7 return to |
| 8 else |
| 9 return to |
| 10 endif |
| 11 else |
| 12 return to |
| 13 endif |
| End |
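Algorithm 1 checks three places in order: the requesting edge node's cache (step 2), a second-level store (step 4), and finally the cloud database (step 5), caching the content on a cloud hit (step 6) before returning it. The symbols that named these stores were lost in extraction, so the following is a minimal sketch under stated assumptions: the second level is taken to be the other edge nodes of the same cluster (Section 4.1), and the requesting edge node is assumed to be the one that caches. The dictionaries and `fetch_content` are hypothetical stand-ins, not the authors' implementation.

```python
from typing import Dict, Optional, Tuple

# Hypothetical stores, each mapping a content name to its payload:
#   local   -> the requesting edge node's own cache
#   cluster -> content held by other edge nodes in the same cluster (assumed)
#   cloud   -> the cloud database (origin)

def fetch_content(name: str,
                  local: Dict[str, bytes],
                  cluster: Dict[str, bytes],
                  cloud: Dict[str, bytes]) -> Optional[Tuple[bytes, str]]:
    """Return (payload, source) following the lookup order of Algorithm 1."""
    if name in local:                  # step 2: hit in the edge node's cache
        return local[name], "local"
    if name in cluster:                # step 4: hit at the second level
        return cluster[name], "cluster"
    if name in cloud:                  # step 5: fall back to the cloud database
        local[name] = cloud[name]      # step 6: cache at the edge (assumed node)
        return cloud[name], "cloud"
    return None                        # content not found anywhere
```

After the first cloud fetch of a content, later requests for it at the same edge node are served locally, which is the mechanism by which edge caching cuts retrieval delay and hop count.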

5. Evaluation Scenario

5.1. Performance Metrics

5.1.1. Cache Hit Ratio (CHR)

5.1.2. Content Retrieval Delay (CRD)

5.1.3. Average Hop Count (AHC)
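The three metrics can be computed from a per-request simulation log. The sketch below uses the common definitions (CHR as the fraction of requests served from an edge cache, CRD and AHC as per-request means); the paper's exact formulas are not reproduced in this section, and the `Request` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Request:
    """One simulated request (field names are hypothetical)."""
    hit: bool        # True if an edge cache served the request
    delay_ms: float  # time from sending the request to receiving the content
    hops: int        # hops between the requester and the node that served it

def cache_hit_ratio(log: Sequence[Request]) -> float:
    # CHR: fraction of requests served from an in-network (edge) cache.
    return sum(r.hit for r in log) / len(log)

def content_retrieval_delay(log: Sequence[Request]) -> float:
    # CRD: mean retrieval delay per request.
    return sum(r.delay_ms for r in log) / len(log)

def average_hop_count(log: Sequence[Request]) -> float:
    # AHC: mean number of hops travelled per request.
    return sum(r.hops for r in log) / len(log)

log = [Request(True, 10.0, 1), Request(False, 30.0, 3),
       Request(True, 20.0, 2), Request(False, 40.0, 4)]
print(cache_hit_ratio(log), content_retrieval_delay(log), average_hop_count(log))
# prints 0.5 25.0 2.5
```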

5.2. Simulation Results

5.2.1. Cache Hit Ratio (CHR)

5.2.2. Content Retrieval Delay (CRD)

5.2.3. Average Hop Count (AHC)

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- [1] Afzal, B.; Umair, M.; Shah, G.A.; Ahmed, E. Enabling IoT platforms for social IoT applications: Vision, feature mapping, and challenges. Future Gener. Comput. Syst. 2019, 92, 718–731.

- [2] Aldowah, H.; Rehman, S.U.; Umar, I. Trust in IoT Systems: A Vision on the Current Issues, Challenges, and Recommended Solutions. Adv. Smart Soft Comput. 2021, 1188, 329–339.

- [3] Hajjaji, Y.; Boulila, W.; Farah, I.R.; Romdhani, I.; Hussain, A. Big data and IoT-based applications in smart environments: A systematic review. Comput. Sci. Rev. 2021, 39, 100318.

- [4] Hao, Y.; Miao, Y.; Hu, L.; Hossain, M.S.; Muhammad, G.; Amin, S.U. Smart-Edge-CoCaCo: AI-enabled smart edge with joint computation, caching, and communication in heterogeneous IoT. IEEE Netw. 2019, 33, 58–64.

- [5] Forestiero, A.; Mastroianni, C.; Papuzzo, G.; Spezzano, G. A proximity-based self-organizing framework for service composition and discovery. In Proceedings of the 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, Melbourne, Australia, 17–20 May 2010; pp. 428–437.

- [6] Zhao, L.; Wang, J.; Liu, J.; Kato, N. Optimal edge resource allocation in IoT-based smart cities. IEEE Netw. 2019, 33, 30–35.

- [7] Markakis, E.K.; Karras, K.; Sideris, A.; Alexiou, G.; Pallis, E. Computing, caching, and communication at the edge: The cornerstone for building a versatile 5G ecosystem. IEEE Commun. Mag. 2017, 55, 152–157.

- [8] Naveen, S.; Kounte, M.R. Key technologies and challenges in IoT edge computing. In Proceedings of the 2019 Third International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 12–14 December 2019; pp. 61–65.

- [9] Huh, J.H.; Seo, Y.S. Understanding edge computing: Engineering evolution with artificial intelligence. IEEE Access 2019, 7, 164229–164245.

- [10] Arshad, S.; Azam, M.A.; Rehmani, M.H.; Loo, J. Recent advances in information-centric networking-based Internet of Things (ICN-IoT). IEEE Internet Things J. 2018, 6, 2128–2158.

- [11] Hua, Y.; Guan, L.; Kyriakopoulos, K.G. A Fog caching scheme enabled by ICN for IoT environments. Future Gener. Comput. Syst. 2020, 111, 82–95.

- [12] Bittencourt, L.F.; Diaz-Montes, J.; Buyya, R.; Rana, O.F.; Parashar, M. Mobility-aware application scheduling in fog computing. IEEE Cloud Comput. 2017, 4, 26–35.

- [13] Wang, M.; Wu, J.; Li, G.; Li, J.; Li, Q.; Wang, S. Toward mobility support for information-centric IoV in smart city using fog computing. In Proceedings of the 2017 IEEE International Conference on Smart Energy Grid Engineering (SEGE), Oshawa, ON, Canada, 14–17 August 2017; pp. 357–361.

- [14] Sinky, H.; Khalfi, B.; Hamdaoui, B.; Rayes, A. Adaptive edge-centric cloud content placement for responsive smart cities. IEEE Netw. 2019, 33, 177–183.

- [15] Sinky, H.; Khalfi, B.; Hamdaoui, B.; Rayes, A. Responsive content-centric delivery in large urban communication networks: A LinkNYC use-case. IEEE Trans. Wirel. Commun. 2017, 17, 1688–1699.

- [16] Vallero, G.; Deruyck, M.; Meo, M.; Joseph, W. Base Station switching and edge caching optimisation in high energy-efficiency wireless access network. Comput. Netw. 2021, 192, 108100.

- [17] Niu, Y.; Gao, S.; Liu, N.; Pan, Z.; You, X. Clustered small base stations for cache-enabled wireless networks. In Proceedings of the 2017 9th International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 11–13 October 2017; pp. 1–6.

- [18] Yu, C.; Tang, Q.; Liu, Z.; Dong, B.; Wei, Z. A recommender system for ordering platform based on an improved collaborative filtering algorithm. In Proceedings of the 2018 International Conference on Audio, Language and Image Processing (ICALIP), Shanghai, China, 16–17 July 2018; pp. 298–302.

- [19] Cui, Z.; Xu, X.; Fei, X.; Cai, X.; Cao, Y.; Zhang, W.; Chen, J. Personalized recommendation system based on collaborative filtering for IoT scenarios. IEEE Trans. Serv. Comput. 2020, 13, 685–695.

- [20] Wang, W.; Chen, J.; Wang, J.; Chen, J.; Liu, J.; Gong, Z. Trust-enhanced collaborative filtering for personalized point of interests recommendation. IEEE Trans. Ind. Inform. 2019, 16, 6124–6132.

- [21] Song, F.; Ai, Z.Y.; Li, J.J.; Pau, G.; Collotta, M.; You, I.; Zhang, H.K. Smart collaborative caching for information-centric IoT in fog computing. Sensors 2017, 17, 2512.

- [22] Baccelli, E.; Mehlis, C.; Hahm, O.; Schmidt, T.C.; Wählisch, M. Information centric networking in the IoT: Experiments with NDN in the wild. In Proceedings of the 1st ACM Conference on Information-Centric Networking, Paris, France, 24–26 September 2014; pp. 77–86.

- [23] JSM, L.M.; Lokesh, V.; Polyzos, G.C. Energy efficient context based forwarding strategy in named data networking of things. In Proceedings of the 3rd ACM Conference on Information-Centric Networking, Kyoto, Japan, 26–28 September 2016; pp. 249–254.

- [24] Datta, S.K.; Bonnet, C. Interworking of NDN with IoT architecture elements: Challenges and solutions. In Proceedings of the 2016 IEEE 5th Global Conference on Consumer Electronics, Kyoto, Japan, 11–14 October 2016; pp. 1–2.

- [25] Siris, V.A.; Thomas, Y.; Polyzos, G.C. Supporting the IoT over integrated satellite-terrestrial networks using information-centric networking. In Proceedings of the 2016 8th IFIP International Conference on New Technologies, Mobility and Security (NTMS), Larnaca, Cyprus, 21–23 November 2016; pp. 1–5.

- [26] Hahm, O.; Adjih, C.; Baccelli, E.; Schmidt, T.; Wählisch, M. Time Slotted Channel Hopping and Information-Centric Networking for IoT. Available online: https://reposit.haw-hamburg.de/handle/20.500.12738/4227 (accessed on 21 July 2021).

- [27] Dong, L.; Wang, G. Support context-aware IoT content request in Information Centric networks. In Proceedings of the 2016 25th Wireless and Optical Communication Conference (WOCC), Chengdu, China, 21–23 May 2016; pp. 1–4.

- [28] Quevedo, J.; Corujo, D.; Aguiar, R. A case for ICN usage in IoT environments. In Proceedings of the 2014 IEEE Global Communications Conference, Austin, TX, USA, 8–12 December 2014; pp. 2770–2775.

- [29] Jacobson, V.; Smetters, D.K.; Thornton, J.D.; Plass, M.F.; Briggs, N.H.; Braynard, R.L. Networking named content. In Proceedings of the 5th International Conference on Emerging Networking Experiments and Technologies, Rome, Italy, 1–4 December 2009; pp. 1–12.

- [30] Laoutaris, N.; Che, H.; Stavrakakis, I. The LCD interconnection of LRU caches and its analysis. Perform. Eval. 2006, 63, 609–634.

- [31] Psaras, I.; Chai, W.K.; Pavlou, G. Probabilistic in-network caching for information-centric networks. In Proceedings of the Second Edition of the ICN Workshop on Information-Centric Networking, Helsinki, Finland, 17 August 2012; pp. 55–60.

- [32] Cho, K.; Lee, M.; Park, K.; Kwon, T.T.; Choi, Y.; Pack, S. WAVE: Popularity-based and collaborative in-network caching for content-oriented networks. In Proceedings of the 2012 Proceedings IEEE INFOCOM Workshops, Orlando, FL, USA, 25–30 March 2012; pp. 316–321.

- [33] Li, J.; Wu, H.; Liu, B.; Lu, J.; Wang, Y.; Wang, X.; Zhang, Y.; Dong, L. Popularity-driven coordinated caching in named data networking. In Proceedings of the 2012 ACM/IEEE Symposium on Architectures for Networking and Communications Systems (ANCS), Austin, TX, USA, 29–30 October 2012; pp. 15–26.

- [34] Ren, J.; Qi, W.; Westphal, C.; Wang, J.; Lu, K.; Liu, S.; Wang, S. Magic: A distributed max-gain in-network caching strategy in information-centric networks. In Proceedings of the 2014 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Toronto, ON, Canada, 27 April–2 May 2014; pp. 470–475.

- [35] Zhang, Z.; Lung, C.H.; St-Hilaire, M.; Lambadaris, I. Smart proactive caching: Empower the video delivery for autonomous vehicles in ICN-based networks. IEEE Trans. Veh. Technol. 2020, 69, 7955–7965.

- [36] Khelifi, H.; Luo, S.; Nour, B.; Sellami, A.; Moungla, H.; Naït-Abdesselam, F. An optimized proactive caching scheme based on mobility prediction for vehicular networks. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6.

- [37] Sarkar, S.; Misra, S. Theoretical modelling of fog computing: A green computing paradigm to support IoT applications. IET Netw. 2016, 5, 23–29.

- [38] Yannuzzi, M.; Milito, R.; Serral-Gracià, R.; Montero, D.; Nemirovsky, M. Key ingredients in an IoT recipe: Fog Computing, Cloud computing, and more Fog Computing. In Proceedings of the 2014 IEEE 19th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), Athens, Greece, 1–3 December 2014; pp. 325–329.

- [39] Lee, K.; Kim, D.; Ha, D.; Rajput, U.; Oh, H. On security and privacy issues of fog computing supported Internet of Things environment. In Proceedings of the 2015 6th International Conference on the Network of the Future (NOF), Montreal, QC, Canada, 30 September–2 October 2015; pp. 1–3.

- [40] Sarkar, S.; Chatterjee, S.; Misra, S. Assessment of the Suitability of Fog Computing in the Context of Internet of Things. IEEE Trans. Cloud Comput. 2015, 6, 46–59.

- [41] Salman, O.; Elhajj, I.; Kayssi, A.; Chehab, A. Edge computing enabling the Internet of Things. In Proceedings of the 2015 IEEE 2nd World Forum on Internet of Things (WF-IoT), Milan, Italy, 14–16 December 2015; pp. 603–608.

- [42] Aazam, M.; Huh, E.N. Fog computing micro datacenter based dynamic resource estimation and pricing model for IoT. In Proceedings of the 2015 IEEE 29th International Conference on Advanced Information Networking and Applications, Gwangju, Korea, 24–27 March 2015; pp. 687–694.

- [43] Abedin, S.F.; Alam, M.G.R.; Tran, N.H.; Hong, C.S. A Fog based system model for cooperative IoT node pairing using matching theory. In Proceedings of the 2015 17th Asia-Pacific Network Operations and Management Symposium (APNOMS), Busan, Korea, 19–21 August 2015; pp. 309–314.

- [44] Aazam, M.; Huh, E.N. Fog computing and smart gateway based communication for cloud of things. In Proceedings of the 2014 International Conference on Future Internet of Things and Cloud, Barcelona, Spain, 27–29 August 2014; pp. 464–470.

- [45] Markakis, E.; Negru, D.; Bruneau-Queyreix, J.; Pallis, E.; Mastorakis, G.; Mavromoustakis, C.X. A p2p home-box overlay for efficient content distribution. In Emerging Innovations in Wireless Networks and Broadband Technologies; IGI Global: Hershey, PA, USA, 2016; pp. 199–220.

- [46] Wei, Y.; Yu, F.R.; Song, M.; Han, Z. Joint optimization of caching, computing, and radio resources for fog-enabled IoT using natural actor–critic deep reinforcement learning. IEEE Internet Things J. 2018, 6, 2061–2073.

- [47] Luo, S.; Chen, X.; Zhou, Z. F3C: Fog-enabled Joint Computation, Communication and Caching Resource Sharing for Energy-Efficient IoT Data Stream Processing. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 1019–1028.

- [48] Abkenar, F.S.; Khan, K.S.; Jamalipour, A. Smart Cluster-based Distributed Caching for Fog-IoT Networks. IEEE Internet Things J. 2020, 8, 3875–3884.

- [49] Doan, K.N.; Van Nguyen, T.; Quek, T.Q.; Shin, H. Content-aware proactive caching for backhaul offloading in cellular network. IEEE Trans. Wirel. Commun. 2018, 17, 3128–3140.

- [50] Thar, K.; Tran, N.H.; Oo, T.Z.; Hong, C.S. DeepMEC: Mobile edge caching using deep learning. IEEE Access 2018, 6, 78260–78275.

- [51] Zhang, C.; Ren, P.; Du, Q. Learning-to-rank based strategy for caching in wireless small cell networks. In International Conference on Internet of Things as a Service; Springer: Berlin/Heidelberg, Germany, 2018; pp. 111–119.

- [52] Baştuğ, E.; Bennis, M.; Debbah, M. Proactive caching in 5G small cell networks. In Towards 5G: Applications, Requirements and Candidate Technologies; Wiley: Hoboken, NJ, USA, 2016; pp. 78–98.

- [53] Bastug, E.; Bennis, M.; Debbah, M. Living on the edge: The role of proactive caching in 5G wireless networks. IEEE Commun. Mag. 2014, 52, 82–89.

- [54] Chang, Z.; Lei, L.; Zhou, Z.; Mao, S.; Ristaniemi, T. Learn to cache: Machine learning for network edge caching in the big data era. IEEE Wirel. Commun. 2018, 25, 28–35.

- [55] Wang, W.; Lan, R.; Gu, J.; Huang, A.; Shan, H.; Zhang, Z. Edge caching at base stations with device-to-device offloading. IEEE Access 2017, 5, 6399–6410.

- [56] Xiang, H.; Yan, S.; Peng, M. A deep reinforcement learning based content caching and mode selection for slice instances in fog radio access networks. In Proceedings of the 2019 IEEE 90th Vehicular Technology Conference (VTC2019-Fall), Honolulu, HI, USA, 22–25 September 2019; pp. 1–5.

- [57] Jiang, F.; Yuan, Z.; Sun, C.; Wang, J. Deep Q-learning-based content caching with update strategy for fog radio access networks. IEEE Access 2019, 7, 97505–97514.

- [58] Liu, Y.; Ma, Z.; Yan, Z.; Wang, Z.; Liu, X.; Ma, J. Privacy-preserving federated k-means for proactive caching in next generation cellular networks. Inf. Sci. 2020, 521, 14–31.

- [59] De Amorim, R.C.; Hennig, C. Recovering the number of clusters in data sets with noise features using feature rescaling factors. Inf. Sci. 2015, 324, 126–145.

- [60] Thinsungnoen, T.; Kaoungku, N.; Durongdumronchai, P.; Kerdprasop, K.; Kerdprasop, N. The clustering validity with silhouette and sum of squared errors. Learning 2015, 3.

- [61] Ali, A.S.; Mahmoud, K.R.; Naguib, K.M. Optimal caching policy for wireless content delivery in D2D networks. J. Netw. Comput. Appl. 2020, 150, 102467.

| Ref | Machine Learning Technique | Algorithm | Objective | Caching Strategy | Caching Location | Network |
|---|---|---|---|---|---|---|
| [49] | Supervised | CNN | High computation offloading ratio | Proactive | Base station | Wireless cellular |
| [50] | Supervised | DNN | Reduced content retrieval delay | Proactive | Base station | Mobile edge computing |
| [51] | Supervised and unsupervised | Learn-to-rank | Improved cache hit ratio | Reactive | Small base station | Small cell network |
| [52,53] | Supervised | NA | High user satisfaction ratio | Proactive | Base station, user equipment | Small cell network |
| [54] | Unsupervised | DNN | Minimum latency, high data rate | Proactive | Mobile base station | HetNet |
| [55] | Reinforcement learning | Q-learning | Minimum cache replacement transmission cost | Proactive | Base station, user equipment | Macro cellular |
| [56] | Reinforcement learning | Deep RL | Maximum cache hit ratio | Reactive | V2I | Radio access networks |
| [57] | Reinforcement learning | Deep Q-learning | Maximum cache hit ratio | Proactive | Base station, user equipment, access point | Radio access networks |

| Edge Nodes/Features | f | f | ⋯ | f |
|---|---|---|---|---|
| EN | ⋯ | ⋯ | ⋯ | ⋯ |
| EN | ⋯ | ⋯ | ⋯ | ⋯ |
| ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| EN | ⋯ | ⋯ | ⋯ | ⋯ |
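Section 4.1 groups edge nodes into clusters, and the matrix above describes each edge node by a feature vector. The clustering algorithm is not spelled out in this section; because the reference list includes k-means [58] and silhouette/SSE cluster validity [60], the sketch below assumes plain Lloyd's k-means over the rows of this matrix. The feature values and the `kmeans` helper are hypothetical, and centroids are initialised from the first k rows only to keep the example deterministic (k-means++ is the usual choice).

```python
import math
from typing import List, Sequence

def kmeans(points: Sequence[Sequence[float]], k: int, iters: int = 100) -> List[int]:
    """Lloyd's k-means over edge-node feature vectors; returns one label per node."""
    centroids = [list(p) for p in points[:k]]  # deterministic init (assumption)
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each node joins its nearest centroid (Euclidean).
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the clustering has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its member nodes.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

# Four hypothetical edge nodes described by two features each.
nodes = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
print(kmeans(nodes, k=2))
# prints [0, 0, 1, 1]
```

In practice k would be chosen by comparing silhouette or SSE values across several candidate k, as the cited validity work suggests.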

| Features/Contents | C | C | ⋯ | C |
|---|---|---|---|---|
| f | ⋯ | ⋯ | ⋯ | ⋯ |
| f | ⋯ | ⋯ | ⋯ | ⋯ |
| ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| f | ⋯ | ⋯ | ⋯ | ⋯ |

| Edge Nodes/Contents | C | C | ⋯ | C |
|---|---|---|---|---|
| ED | ⋯ | ⋯ | ⋯ | ⋯ |
| ED | ⋯ | ⋯ | ⋯ | ⋯ |
| ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| ED | ⋯ | ⋯ | ⋯ | ⋯ |
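The preceding matrices relate edge nodes to features, features to contents, and edge nodes to contents. One plausible reading, which this section does not state explicitly, is that a content-based node/content score is the product of the first two: a node scores a content highly when the content's features match the node's feature profile. The sketch below assumes that reading; `node_content_scores` and the numbers are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def node_content_scores(node_features: Matrix, feature_content: Matrix) -> Matrix:
    """Multiply an (edge nodes x features) matrix by a (features x contents) matrix."""
    if any(len(row) != len(feature_content) for row in node_features):
        raise ValueError("number of features must match in both matrices")
    # Entry (u, j) sums, over features, node u's weight times content j's weight.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*feature_content)]
            for row in node_features]

node_features = [[1.0, 0.0],        # node 1 cares only about feature 1
                 [0.5, 0.5]]        # node 2 weighs both features equally
feature_content = [[1.0, 0.0, 0.5],  # feature 1 present in contents 1 and 3
                   [0.0, 1.0, 0.5]]  # feature 2 present in contents 2 and 3
print(node_content_scores(node_features, feature_content))
# prints [[1.0, 0.0, 0.5], [0.5, 0.5, 0.5]]
```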

| Similarity | C | C | ⋯ | C | ⋯ | C | ⋯ | C |
|---|---|---|---|---|---|---|---|---|
| C | 0 | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| C | ⋯ | 0 | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| ⋯ | ⋯ | ⋯ | 0 | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| C | ⋯ | ⋯ | ⋯ | 0 | ⋯ | ⋯ | ⋯ | ⋯ |
| ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | 0 | ⋯ | ⋯ | ⋯ |
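The similarity matrix compares contents pairwise and has zeros on its diagonal, so a content is never counted as similar to itself. A common way to build such a matrix from an edge-node/content matrix is cosine similarity between content columns; the section does not name its measure, so the sketch below assumes cosine similarity, and `content_similarity` is a hypothetical helper.

```python
import math
from typing import List

Matrix = List[List[float]]

def content_similarity(ratings: Matrix) -> Matrix:
    """Cosine similarity between content columns of an (edge nodes x contents) matrix."""
    cols = list(zip(*ratings))  # one vector per content, across all edge nodes
    n = len(cols)
    sim = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # diagonal stays 0, matching the table
            dot = sum(a * b for a, b in zip(cols[i], cols[j]))
            norm = math.hypot(*cols[i]) * math.hypot(*cols[j])
            sim[i][j] = dot / norm if norm else 0.0  # unrequested content -> 0
    return sim

# Three hypothetical edge nodes; contents 1 and 2 are requested together.
ratings = [[1, 1, 0],
           [1, 1, 0],
           [0, 0, 1]]
sim = content_similarity(ratings)
```

Contents 1 and 2 come out (almost exactly) 1.0 similar, and content 3 is 0.0 similar to both, because no edge node requests it alongside them.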

| Prediction | C | C | ⋯ | C | ⋯ | C | ⋯ | C |

|---|---|---|---|---|---|---|---|---|

| ED | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |

| ED | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |

| ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |

| ED | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
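The prediction matrix assigns every edge node a score for every content. The section does not reproduce its prediction formula, so the sketch below assumes the standard item-based collaborative filtering rule: node u's predicted score for content j is the similarity-weighted average of u's existing scores, r̂(u, j) = Σᵢ s(i, j)·r(u, i) / Σᵢ |s(i, j)|, summed over contents i that u has requested. It then ranks contents so that the top k could be cached at that node. The helpers, k, and all numbers are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def predict_scores(ratings: Matrix, sim: Matrix) -> Matrix:
    """Item-based CF: similarity-weighted average of each node's known scores."""
    n = len(sim)
    preds = []
    for row in ratings:
        known = [i for i in range(n) if row[i] > 0]  # contents this node requested
        out = []
        for j in range(n):
            num = sum(sim[i][j] * row[i] for i in known)
            den = sum(abs(sim[i][j]) for i in known)
            out.append(num / den if den else 0.0)  # no similar evidence -> 0
        preds.append(out)
    return preds

def top_k_to_cache(pred_row: List[float], k: int) -> List[int]:
    # Rank all contents by predicted score; a real policy would also skip
    # contents already cached and respect the node's storage capacity.
    return sorted(range(len(pred_row)), key=lambda j: pred_row[j], reverse=True)[:k]

ratings = [[5, 1, 0]]        # one edge node's scores for three contents
sim = [[0.0, 0.8, 0.1],      # content/content similarity, zero diagonal
       [0.8, 0.0, 0.2],
       [0.1, 0.2, 0.0]]
preds = predict_scores(ratings, sim)
print(top_k_to_cache(preds[0], k=2))
# prints [1, 2]
```

With a zero diagonal, a content's own score never feeds its prediction, so the ranking reflects what similar contents suggest rather than simply repeating past requests.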

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gupta, D.; Rani, S.; Ahmed, S.H.; Verma, S.; Ijaz, M.F.; Shafi, J. Edge Caching Based on Collaborative Filtering for Heterogeneous ICN-IoT Applications. Sensors 2021, 21, 5491. https://doi.org/10.3390/s21165491
