Texture Recognition Based on Perception Data from a Bionic Tactile Sensor

Abstract

1. Introduction

2. Materials and Methods

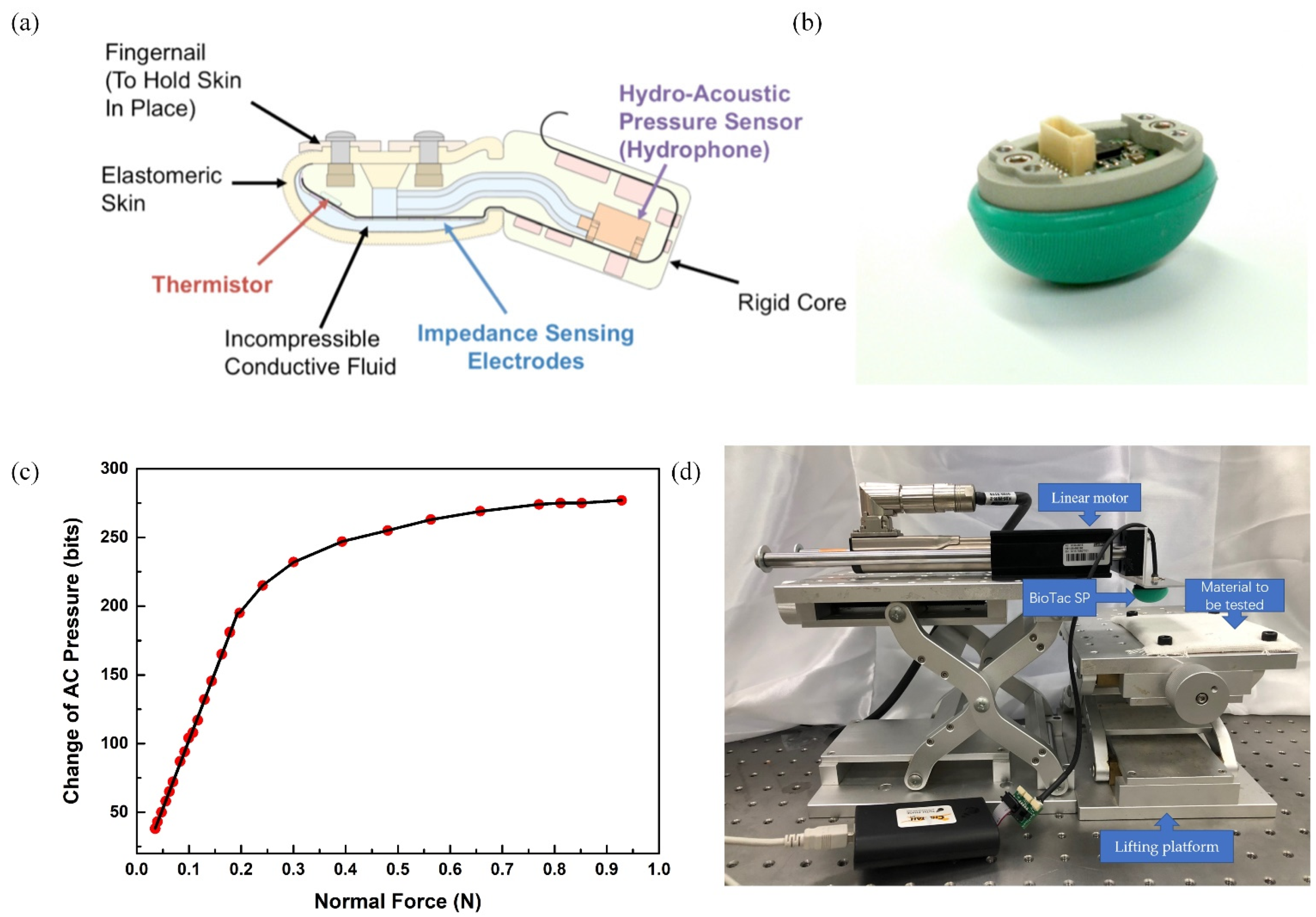

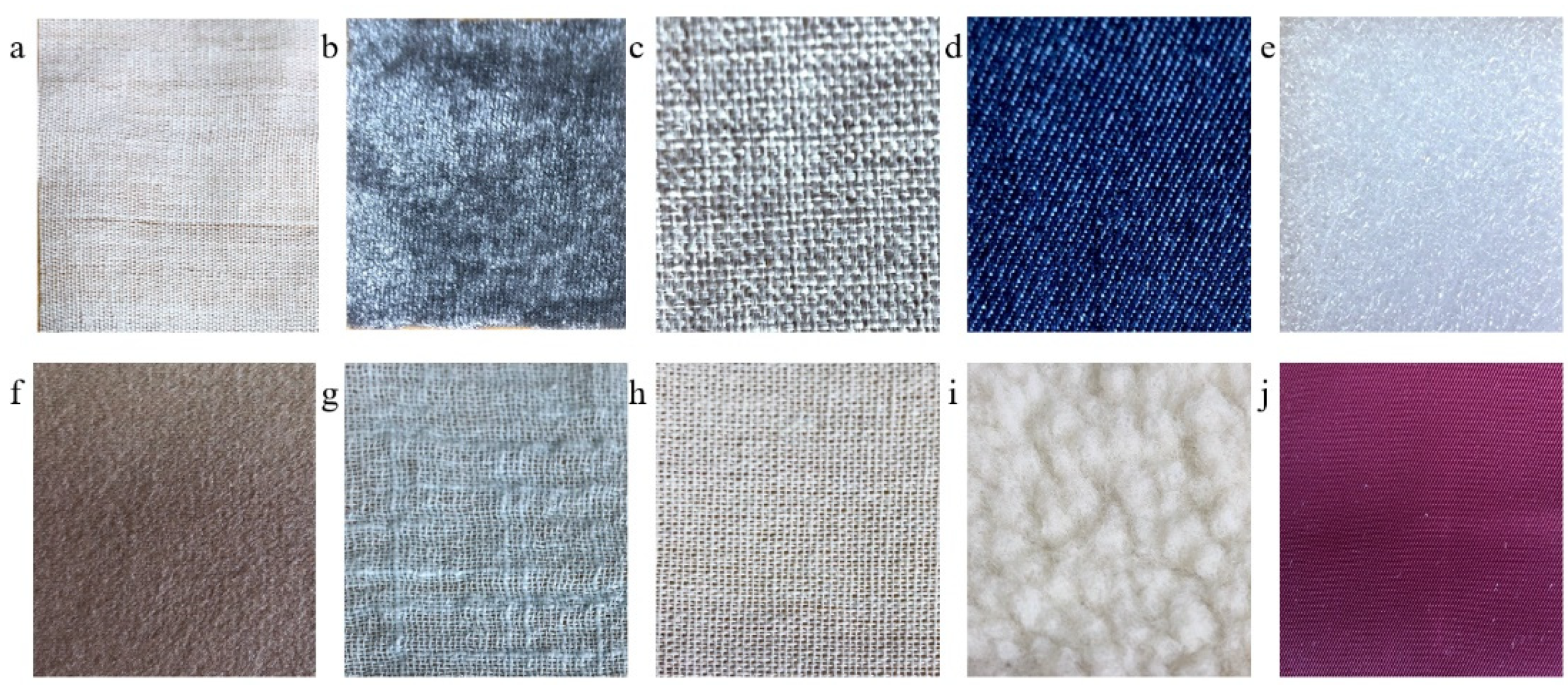

2.1. Experimental Device and Data Collection

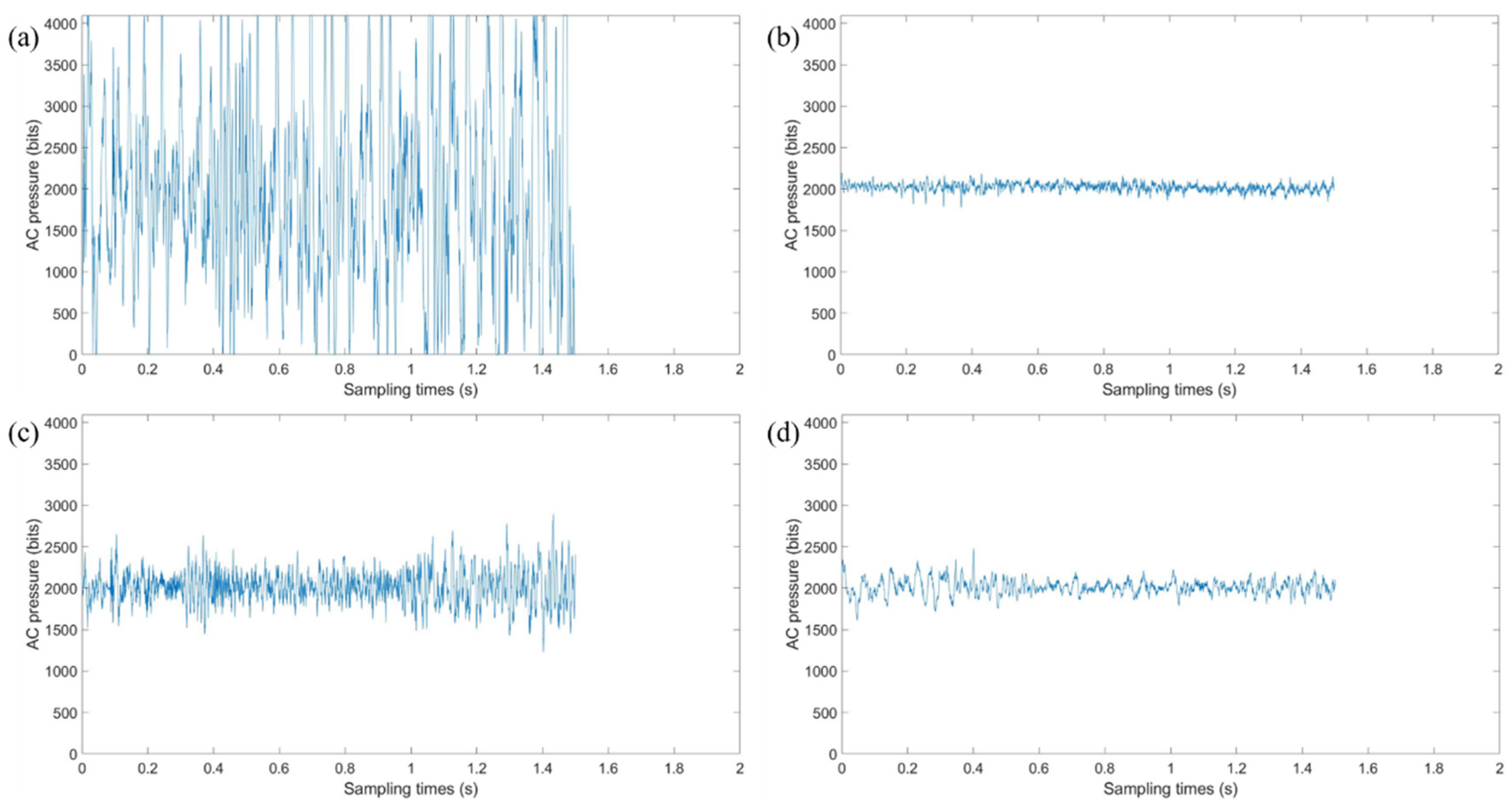

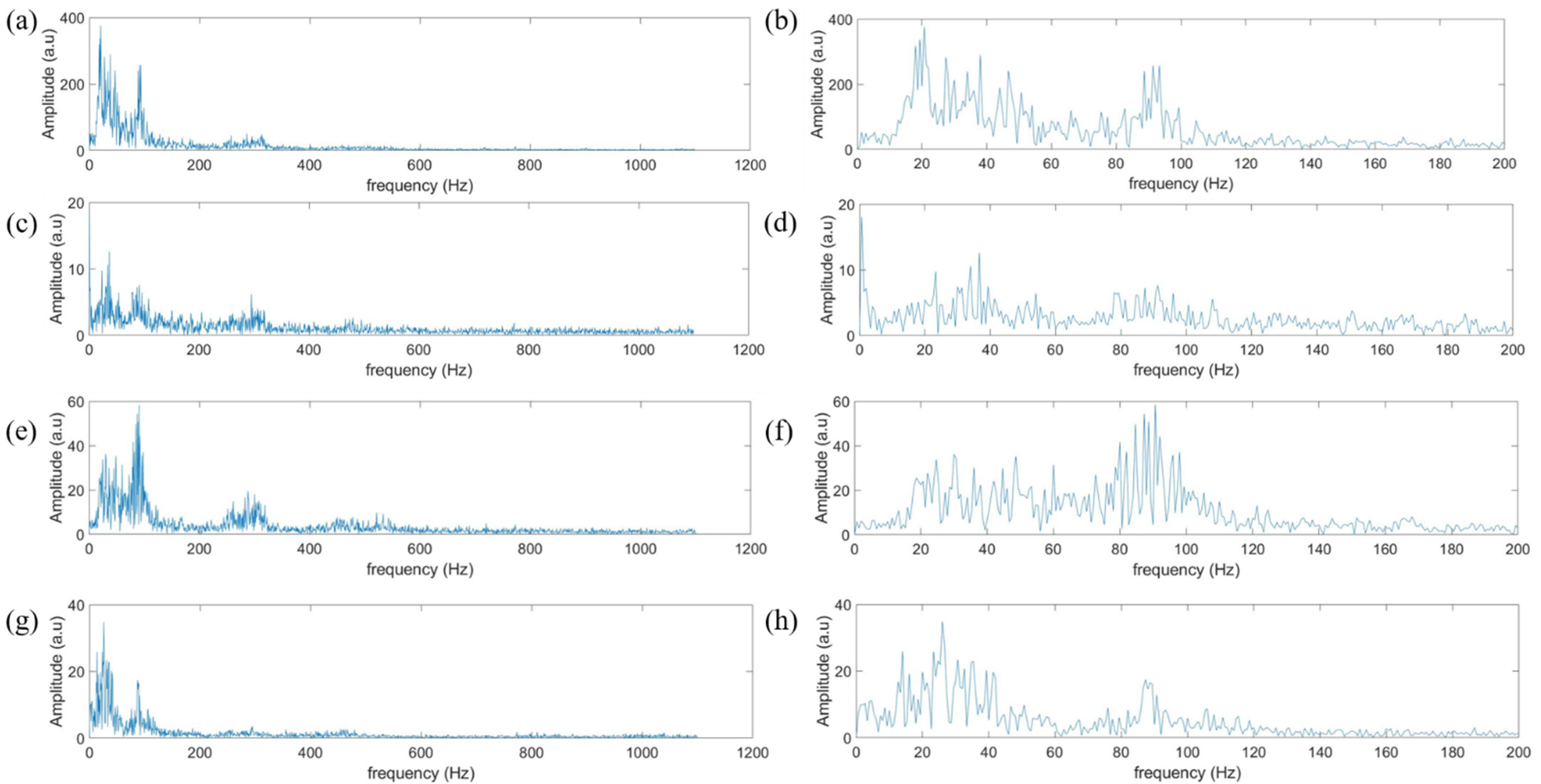

2.2. Feature Extraction of Vibration Data

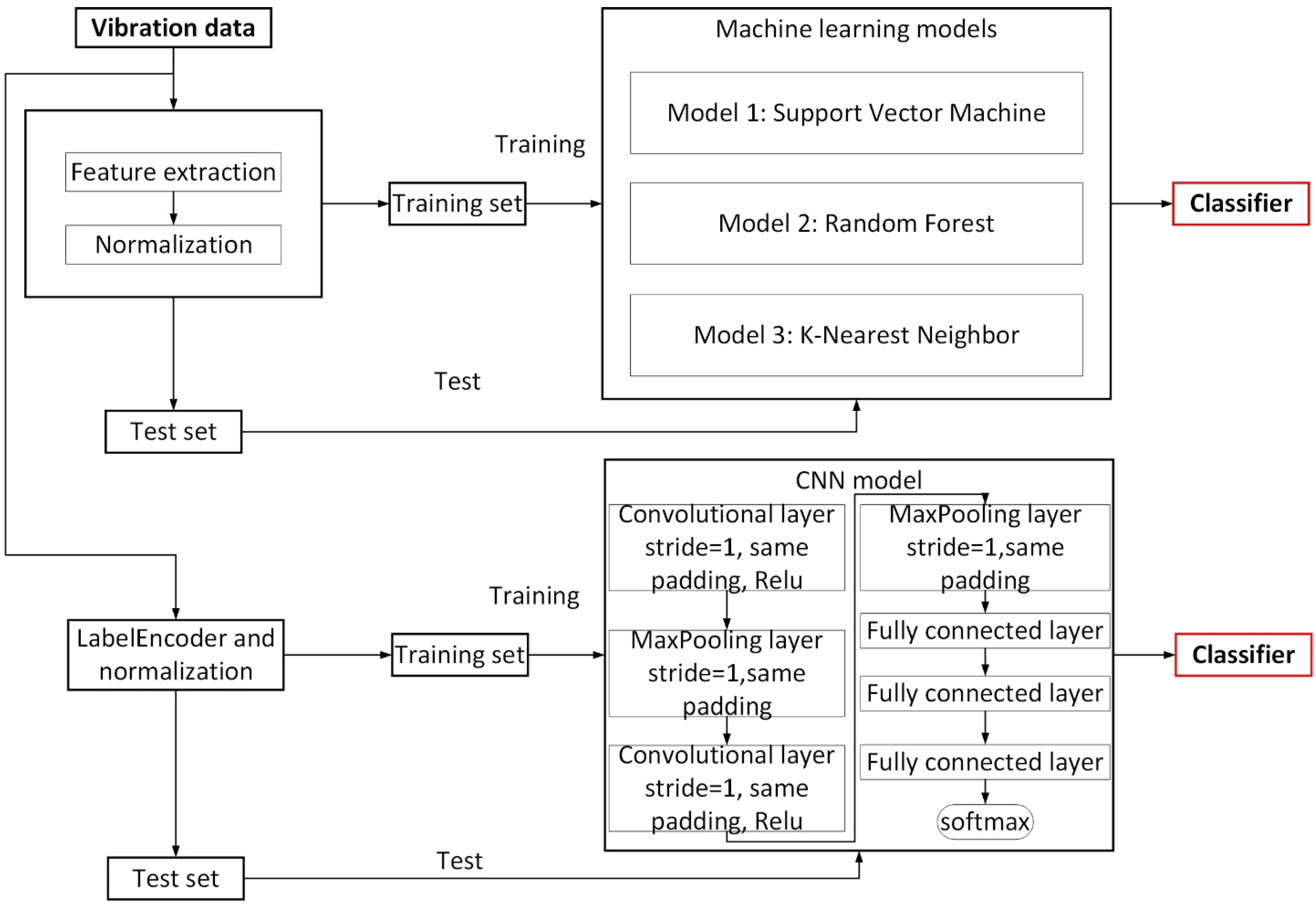

2.3. Texture Recognition

2.4. Performance Measures

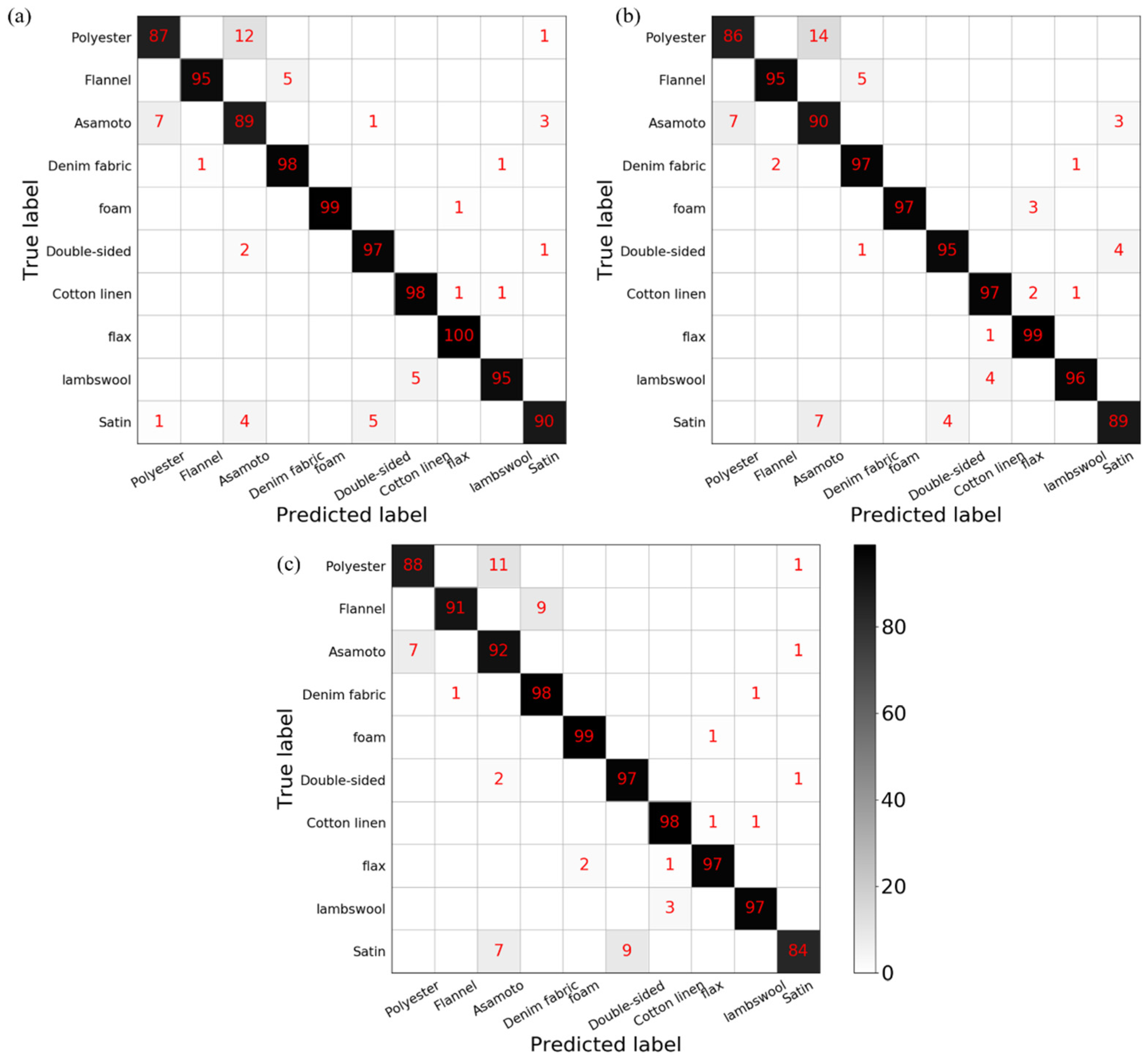

3. Results and Discussion

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kalisch, T.; Kattenstroth, J.C.; Kowalewski, R.; Tegenthoff, M.; Dinse, H.R. Cognitive and Tactile Factors Affecting Human Haptic Performance in Later Life. PLoS ONE 2012, 7, e30420.
2. Liu, C.K.; Qiao, H.; Su, J.H.; Zhang, P. Vision-Based 3-D Grasping of 3-D Objects with a Simple 2-D Gripper. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 605–620.
3. Bekiroglu, Y.; Kragic, D.; Kyrki, V. Learning Grasp Stability Based on Tactile Data and HMMs. In Proceedings of the 19th IEEE International Conference on Robot and Human Interactive Communication, Viareggio, Italy, 13–15 September 2010; pp. 132–137.
4. Cheng, Y.; Su, C.Z.; Jia, Y.Y.; Xi, N. Data Correlation Approach for Slippage Detection in Robotic Manipulations Using Tactile Sensor Array. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–2 October 2015; pp. 2717–2722.
5. Zhang, Y.F.; Liu, H. Tactile Sensor Based Varying Contact Point Manipulation Strategy for Dexterous Robot Hand Manipulating Unknown Objects. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 4756–4761.
6. Taira, R.; Saga, S.; Okatani, T.; Deguchi, K. 3D Reconstruction of Reflective Surface on Reflection Type Tactile Sensor Using Constraints of Geometrical Optics. In Proceedings of the Society of Instrument and Control Engineers of Japan (SICE), Taipei, Taiwan, 18–21 August 2010; pp. 3144–3149.
7. Yao, G.; Xu, L.; Cheng, X.W.; Li, Y.Y.; Huang, X.; Guo, W.; Liu, S.Y.; Wang, Z.L.; Wu, H. Bioinspired Triboelectric Nanogenerators as Self-Powered Electronic Skin for Robotic Tactile Sensing. Adv. Funct. Mater. 2020, 30, 1907312.
8. Wu, H.; Yang, G.G.; Zhu, K.H.; Liu, S.Y.; Guo, W.; Jiang, Z.; Li, Z. Materials, Devices, and Systems of On-Skin Electrodes for Electrophysiological Monitoring and Human–Machine Interfaces. Adv. Sci. 2020, 8, 2001938.
9. Zhang, C.; Liu, S.Y.; Huang, X.; Guo, W.; Li, Y.Y.; Wu, H. A stretchable dual-mode sensor array for multifunctional robotic electronic skin. Nano Energy 2019, 62, 164–170.
10. Luo, S.; Bimbo, J.; Dahiya, R.; Liu, H. Robotic tactile perception of object properties: A review. Mechatronics 2017, 48, 54–67.
11. Tsuji, S.; Kohama, T. Using a Convolutional Neural Network to Construct a Pen-type Tactile Sensor System for Roughness Recognition. Sens. Actuators A Phys. 2019, 291, 7–12.
12. Romano, J.M.; Kuchenbecker, K.J. Methods for Robotic Tool-mediated Haptic Surface Recognition. In Proceedings of the IEEE Haptics Symposium, Houston, TX, USA, 23–26 February 2014; pp. 49–56.
13. Yuan, W.; Zhu, C.; Owens, A.; Srinivasan, M.A.; Adelson, E.H. Shape-independent Hardness Estimation Using Deep Learning and a GelSight Tactile Sensor. In Proceedings of the IEEE International Conference on Robotics and Automation, Marina Bay Sands, Singapore, 29 May–3 June 2017; pp. 951–958.
14. An, B.W.; Heo, S.; Ji, S.; Bien, F.; Park, J.U. Transparent and flexible fingerprint sensor array with multiplexed detection of tactile pressure and skin temperature. Nat. Commun. 2018, 9, 2458.
15. Chen, H.; Bai, L.; Li, T.; Zhao, C.; Zhang, J.S.; Zhang, N.; Song, G.F.; Gan, Q.Q.; Xu, Y. Wearable and robust triboelectric nanogenerator based on crumpled gold films. Nano Energy 2018, 46, 73–80.
16. Wang, X.M.; Tao, L.Q.; Yuan, M.; Wang, Z.P.; Yu, J.B.; Xie, D.L.; Luo, F.; Chen, X.P.; Wong, C.P. Sea urchin-like microstructure pressure sensors with an ultra-broad range and high sensitivity. Nat. Commun. 2021, 12, 1776.
17. Wang, H.S.; Hong, S.K.; Han, J.H.; Jung, Y.H.; Jeong, H.K.; Im, T.H.; Jeong, C.K.; Lee, B.Y.; Kim, G.; Yoo, C.D.; et al. Biomimetic and flexible piezoelectric mobile acoustic sensors with multiresonant ultrathin structures for machine learning biometrics. Sci. Adv. 2021, 7, eabe5683.
18. Kroemer, O.; Lampert, C.H.; Peters, J. Learning Dynamic Tactile Sensing with Robust Vision-Based Training. IEEE Trans. Robot. 2011, 27, 545–557.
19. Fox, C.W.; Evans, M.; Pearson, M.; Prescott, T.J. Tactile SLAM with a Biomimetic Whiskered Robot. In Proceedings of the IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012; pp. 4925–4930.
20. Kuchenbecker, K.J.; Fiene, J.; Niemeyer, G. Improving Contact Realism through Event-Based Haptic Feedback. IEEE Trans. Vis. Comput. Graph. 2006, 12, 219–230.
21. Mitchinson, B.; Pearson, M.J.; Pipe, A.G.; Prescott, T.J. Biomimetic Tactile Target Acquisition, Tracking and Capture. Robot. Auton. Syst. 2014, 62, 366–375.
22. Huet, L.A.; Rudnicki, J.W.; Hartmann, M.J. Tactile Sensing with Whiskers of Various Shapes: Determining the Three-Dimensional Location of Object Contact Based on Mechanical Signals at The Whisker Base. Soft Robot. 2017, 4, 88–102.
23. Giguere, P.; Dudek, G. A Simple Tactile Probe for Surface Identification by Mobile Robots. IEEE Trans. Robot. 2011, 27, 534–544.
24. Kaboli, M.; Mittendorfer, P.; Hugel, V.; Cheng, G. Humanoids Learn Object Properties from Robust Tactile Feature Descriptors via Multi-modal Artificial Skin. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Seoul, Korea, 3–5 November 2015; pp. 187–192.
25. Winstone, B.; Griffiths, G.; Pipe, T.; Melhuish, C.; Rossiter, J. TACTIP - Tactile Fingertip Device, Texture Analysis through Optical Tracking of Skin Features. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, London, UK, 29 July–2 August 2013; pp. 323–334.
26. Hoelscher, J.; Peters, J.; Hermans, T. Evaluation of Tactile Feature Extraction for Interactive Object Recognition. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Seoul, Korea, 3–5 November 2015; pp. 310–317.
27. Jamali, N.; Sammut, C. Majority Voting: Material Classification by Tactile Sensing Using Surface Texture. IEEE Trans. Robot. 2011, 27, 508–521.
28. Gandarias, J.M.; Gómez-de-Gabriel, J.M.; García-Cerezo, A.J. Enhancing Perception with Tactile Object Recognition in Adaptive Grippers for Human–Robot Interaction. Sensors 2018, 18, 692.
29. Xie, H.; Liu, H.; Luo, S.; Seneviratne, L.D.; Althoefer, K. Fiber Optics Tactile Array Probe for Tissue Palpation during Minimally Invasive Surgery. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2539–2544.
30. Tenzer, Y.; Jentoft, L.P.; Howe, R. The Feel of MEMS Barometers: Inexpensive and Easily Customized Tactile Array Sensors. Robot. Autom. Mag. 2014, 21, 89–95.
31. Chorley, C.; Melhuish, C.; Pipe, T.; Rossiter, J. Development of a Tactile Sensor Based on Biologically Inspired Edge Encoding. In Proceedings of the International Conference on Advanced Robotics, Munich, Germany, 22–26 June 2009; pp. 1–6.
32. Sato, K.; Kamiyama, K.; Kawakami, N.; Tachi, S. Finger-Shaped Gelforce: Sensor for Measuring Surface Traction Fields for Robotic Hand. IEEE Trans. Haptics 2010, 3, 37–47.
33. Johnson, M.K.; Cole, F.; Raj, A.; Adelson, E.H. Microgeometry Capture Using an Elastomeric Sensor. ACM Trans. Graph. 2011, 30, 46–53.
34. Yamaguchi, A.; Atkeson, C.G. Combining Finger Vision and Optical Tactile Sensing: Reducing and Handling Errors while Cutting Vegetables. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Cancun, Mexico, 15–17 November 2016; pp. 1045–1051.
35. Funabashi, S.; Morikuni, S.; Geier, A.; Schmitz, A.; Ogasa, S.; Tomo, T.P.; Somlor, S.; Sugano, S. Object Recognition Through Active Sensing Using a Multi-Fingered Robot Hand with 3D Tactile Sensors. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 2589–2595.
36. Li, R.; Adelson, E.H. Sensing and Recognizing Surface Textures Using a GelSight Sensor. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 25–27 June 2013; pp. 1241–1247.
37. Garcia-Garcia, A.; Zapata-Impata, B.S.; Orts-Escolano, S.; Gil, P.; Garcia-Rodriguez, J. Tactile GCN: A Graph Convolutional Network for Predicting Grasp Stability with Tactile Sensors. In Proceedings of the 2019 International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019; pp. 1–8.
38. Gandarias, J.M.; Gómez-de-Gabriel, J.M.; García-Cerezo, A.J. Human and Object Recognition with a High-resolution Tactile Sensor. In Proceedings of the 2017 IEEE Sensors, Glasgow, UK, 29 October–1 November 2017; pp. 1–3.
39. Polat, E.O.; Balci, O.; Kakenov, N.; Uzlu, H.B.; Kocabas, C.; Dahiya, R. Synthesis of Large Area Graphene for High Performance in Flexible Optoelectronic Devices. Sci. Rep. 2015, 5, 16744.
40. Hoffmann, M.; Straka, Z.; Farkas, I.; Vavrecka, M.; Metta, G. Robotic Homunculus: Learning of Artificial Skin Representation in a Humanoid Robot Motivated by Primary Somatosensory Cortex. IEEE Trans. Cogn. Dev. Syst. 2017, 10, 163–176.
41. Muscari, L.; Seminara, L.; Mastrogiovanni, F.; Valle, M.; Capurro, M.; Cannata, G. Real-Time Reconstruction of Contact Shapes for Large Area Robot Skin. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 2360–2366.
42. Mittendorfer, P.; Cheng, G. Humanoid Multimodal Tactile-Sensing Modules. IEEE Trans. Robot. 2011, 27, 401–410.
43. Bartolozzi, C.; Natale, L.; Nori, F.; Metta, G. Robots with a Sense of Touch. Nat. Mater. 2016, 15, 921–925.
44. Kaboli, M.; Long, A.; Cheng, G. Humanoids Learn Touch Modalities Identification via Multi-modal Robotic Skin and Robust Tactile Descriptors. Adv. Robot. 2015, 29, 1411–1425.
45. Fishel, J.A.; Loeb, G.E. Bayesian Exploration for Intelligent Identification of Textures. Front. Neurorobot. 2012, 6, 4.
46. Ho, V.A.; Araki, T.; Makikawa, M.; Hirai, S. Experimental investigation of surface identification ability of a low-profile fabric tactile sensor. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–11 October 2012; pp. 4497–4504.
47. Dallaire, P.; Giguere, P.; Emond, D.; Chaib-draa, B. Autonomous Tactile Perception: A Combined Improved Sensing and Bayesian Nonparametric Approach. Robot. Auton. Syst. 2014, 62, 422–435.
48. Liu, H.; Song, X.; Bimbo, J.; Seneviratne, L.; Althoefer, K. Surface Material Recognition through Haptic Exploration Using an Intelligent Contact Sensing Finger. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–11 October 2012; pp. 52–57.
49. Mao, S.; Rajan, D.; Chia, L.T. Deep Residual Pooling Network for Texture Recognition. Pattern Recognit. 2021, 112, 107817.
50. Florindo, J.B. DSTNet: Successive applications of the discrete Schroedinger transform for texture recognition. Inf. Sci. 2020, 507, 356–364.
51. Yuan, W.; Mo, Y.; Wang, S.; Adelson, E.H. Active clothing material perception using tactile sensing and deep learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4842–4849.
52. Polic, M.; Krajacic, I.; Lepora, N.; Orsag, M. Convolutional autoencoder for feature extraction in tactile sensing. IEEE Robot. Autom. Lett. 2019, 4, 3671–3678.
53. Su, Z.; Hausman, K.; Chebotar, Y.; Molchanov, A.; Loeb, G.E.; Sukhatme, G.S.; Schaal, S. Force estimation and slip detection/classification for grip control using a biomimetic tactile sensor. In Proceedings of the IEEE-RAS 15th International Conference on Humanoid Robots, Seoul, Korea, 3–5 November 2015; pp. 297–303.
54. Jimenez, M.C.; Fishel, J.A. Evaluation of force, vibration and thermal tactile feedback in prosthetic limbs. In Proceedings of the IEEE International Conference on Haptic Interfaces for Virtual Environment and Teleoperator Systems (Haptics), Houston, TX, USA, 23–26 February 2014; pp. 437–441.
55. SynTouch. Available online: https://syntouchinc.com (accessed on 18 July 2021).
56. Strese, M.; Lee, J.Y.; Schuwerk, C.; Han, Q.; Kim, H.G.; Steinbach, E. A haptic texture database for tool-mediated texture recognition and classification. In Proceedings of the 2014 IEEE International Symposium on Haptic, Audio and Visual Environments and Games (HAVE), Richardson, TX, USA, 10–11 October 2014; pp. 118–123.
57. Orii, H.; Tsuji, S.; Kouda, T.; Kohama, T. Tactile texture recognition using convolutional neural networks for time-series data of pressure and 6-axis acceleration sensor. In Proceedings of the 2017 IEEE International Conference on Industrial Technology (ICIT), Toronto, ON, Canada, 22–25 March 2017; pp. 1076–1080.
58. Kerr, E.; McGinnity, T.M.; Coleman, S. Material recognition using tactile sensing. Expert Syst. Appl. 2018, 94, 94–111.
59. Gandarias, J.M.; Pastor, F.; García-Cerezo, A.J.; Gómez-de-Gabriel, J.M. Active tactile recognition of deformable objects with 3d convolutional neural networks. In Proceedings of the 2019 IEEE World Haptics Conference (WHC), Tokyo, Japan, 9–12 July 2019; pp. 551–555.

| Layer Connection | Input Size | Operation | Kernel Size / Units | Output Size |
|---|---|---|---|---|
| 0–1 | 3300 × 1 | Convolution | 25 × 1 × 8 | 3300 × 8 |
| 1–2 | 3300 × 8 | Max pooling | 15 × 1 | 220 × 8 |
| 2–3 | 220 × 8 | Convolution | 25 × 1 × 16 | 220 × 16 |
| 3–4 | 220 × 16 | Max pooling | 15 × 1 | 14 × 16 |
| 4–5 | 14 × 16 | Fully connected layer | 224 | 224 |
| 5–6 | 224 | Fully connected layer | 128 | 128 |
| 6–7 | 128 | Fully connected layer (softmax) | 10 | 10 |
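
The sizes in the architecture table can be checked by pushing one 3300-sample window through a NumPy forward pass. This is a sketch under stated assumptions, not the authors' implementation: the table gives no activations, padding or weights, so ReLU activations, zero "same" padding for the convolutions (which keeps the lengths at 3300 and 220), non-overlapping pooling windows with the remainder dropped (which turns 220 into 14) and random untrained weights are all assumptions. Layer 4–5 is read here as flattening the 14 × 16 map into 224 features followed by a 224-unit dense layer. The helper names (`conv1d_same`, `maxpool1d`, `init_params`, `forward`) are hypothetical.

```python
import numpy as np


def conv1d_same(x, w, b):
    """1-D convolution (cross-correlation, as in most CNN libraries) with zero
    'same' padding. x: (length, in_ch), w: (k, in_ch, out_ch), b: (out_ch,)."""
    k = w.shape[0]
    left = (k - 1) // 2
    xp = np.pad(x, ((left, k - 1 - left), (0, 0)))
    # windows[i] holds the k input samples centred on position i: (length, k, in_ch)
    windows = np.stack([xp[i:i + k] for i in range(x.shape[0])])
    return np.einsum("lki,kio->lo", windows, w) + b


def maxpool1d(x, size):
    """Non-overlapping max pooling (stride = size); leftover samples are dropped."""
    n = x.shape[0] // size
    return x[: n * size].reshape(n, size, x.shape[1]).max(axis=1)


def relu(x):
    return np.maximum(x, 0.0)  # assumed activation; the table does not state one


def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()


def init_params(rng, n_classes=10):
    """Random, untrained weights with the shapes listed in the table."""
    def dense(n_in, n_out):
        return rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_out)), np.zeros(n_out)

    return {
        "conv1": (rng.normal(0.0, 0.1, (25, 1, 8)), np.zeros(8)),
        "conv2": (rng.normal(0.0, 0.1, (25, 8, 16)), np.zeros(16)),
        "fc1": dense(14 * 16, 224),
        "fc2": dense(224, 128),
        "fc3": dense(128, n_classes),
    }


def forward(params, signal):
    """Map one (3300, 1) vibration window to 10 class probabilities."""
    h = relu(conv1d_same(signal, *params["conv1"]))  # layers 0-1: (3300, 8)
    h = maxpool1d(h, 15)                              # layers 1-2: (220, 8)
    h = relu(conv1d_same(h, *params["conv2"]))       # layers 2-3: (220, 16)
    h = maxpool1d(h, 15)                              # layers 3-4: (14, 16)
    h = h.reshape(-1)                                 # flatten: 14 * 16 = 224
    w, b = params["fc1"]
    h = relu(h @ w + b)                               # layers 4-5: (224,)
    w, b = params["fc2"]
    h = relu(h @ w + b)                               # layers 5-6: (128,)
    w, b = params["fc3"]
    return softmax(h @ w + b)                         # layers 6-7: (10,)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    probs = forward(init_params(rng), rng.normal(size=(3300, 1)))
    print(probs.shape, float(probs.sum()))
```

With untrained weights the probabilities themselves carry no meaning; the sketch only confirms that the sizes in the table chain together (3300 → 220 → 14, and 14 × 16 = 224). Training would need a framework with automatic differentiation.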

| Algorithm | Material | TP | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|
| SVM | Polyester | 87 | 8 | 13 | 0.92 | 0.87 | 0.89 |
| | Flannel | 95 | 1 | 5 | 0.99 | 0.95 | 0.97 |
| | Asamoto | 89 | 18 | 11 | 0.86 | 0.89 | 0.87 |
| | Denim fabric | 98 | 5 | 2 | 0.95 | 0.98 | 0.97 |
| | Foam | 99 | 0 | 1 | 1.0 | 0.99 | 0.99 |
| | Double-sided | 97 | 6 | 3 | 0.94 | 0.97 | 0.96 |
| | Cotton linen | 98 | 5 | 2 | 0.95 | 0.98 | 0.97 |
| | Flax | 100 | 2 | 0 | 0.98 | 1.0 | 0.99 |
| | Lambswool | 95 | 2 | 5 | 0.98 | 0.95 | 0.96 |
| | Satin | 90 | 5 | 10 | 0.95 | 0.90 | 0.92 |
| RF | Polyester | 86 | 7 | 14 | 0.92 | 0.86 | 0.89 |
| | Flannel | 95 | 2 | 5 | 0.98 | 0.95 | 0.96 |
| | Asamoto | 90 | 21 | 10 | 0.81 | 0.90 | 0.85 |
| | Denim fabric | 97 | 6 | 3 | 0.94 | 0.97 | 0.96 |
| | Foam | 97 | 0 | 3 | 1.0 | 0.97 | 0.98 |
| | Double-sided | 95 | 4 | 5 | 0.96 | 0.95 | 0.95 |
| | Cotton linen | 97 | 5 | 3 | 0.95 | 0.97 | 0.96 |
| | Flax | 99 | 5 | 1 | 0.95 | 0.99 | 0.97 |
| | Lambswool | 96 | 2 | 4 | 0.98 | 0.96 | 0.97 |
| | Satin | 89 | 7 | 11 | 0.93 | 0.89 | 0.91 |
| KNN | Polyester | 88 | 7 | 12 | 0.93 | 0.88 | 0.90 |
| | Flannel | 91 | 1 | 9 | 0.99 | 0.91 | 0.95 |
| | Asamoto | 92 | 20 | 8 | 0.82 | 0.92 | 0.87 |
| | Denim fabric | 98 | 9 | 2 | 0.92 | 0.98 | 0.95 |
| | Foam | 99 | 2 | 1 | 0.98 | 0.99 | 0.99 |
| | Double-sided | 97 | 9 | 3 | 0.92 | 0.97 | 0.94 |
| | Cotton linen | 98 | 4 | 2 | 0.96 | 0.98 | 0.97 |
| | Flax | 97 | 2 | 3 | 0.98 | 0.97 | 0.97 |
| | Lambswool | 97 | 2 | 3 | 0.98 | 0.97 | 0.97 |
| | Satin | 84 | 3 | 16 | 0.97 | 0.84 | 0.90 |
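
Each Precision, Recall and F1 entry follows from the TP, FP and FN counts in the same row under the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is the harmonic mean of the two. A minimal Python sketch that reproduces three of the SVM rows; the helper name `prf1` is illustrative and not taken from the paper.

```python
def prf1(tp, fp, fn):
    """Per-class precision, recall and F1 from confusion-matrix counts.
    Empty denominators are mapped to 0.0 instead of raising."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# (TP, FP, FN) copied from three SVM rows of the table above.
svm_rows = {
    "Polyester": (87, 8, 13),
    "Flannel": (95, 1, 5),
    "Flax": (100, 2, 0),
}

for name, counts in svm_rows.items():
    p, r, f = prf1(*counts)
    print(f"{name}: precision={p:.2f} recall={r:.2f} F1={f:.2f}")
```

Each class in these three models has TP + FN = 100 test samples, so recall can be read directly from the TP column.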

| Algorithm | Material | TP | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|
| CNN | Polyester | 14 | 0 | 0 | 1.0 | 1.0 | 1.0 |
| | Flannel | 11 | 0 | 0 | 1.0 | 1.0 | 1.0 |
| | Asamoto | 25 | 0 | 0 | 1.0 | 1.0 | 1.0 |
| | Denim fabric | 24 | 0 | 0 | 1.0 | 1.0 | 1.0 |
| | Foam | 28 | 0 | 0 | 1.0 | 1.0 | 1.0 |
| | Double-sided | 16 | 1 | 0 | 0.94 | 1.0 | 0.97 |
| | Cotton linen | 16 | 0 | 2 | 1.0 | 0.89 | 0.94 |
| | Flax | 23 | 0 | 0 | 1.0 | 1.0 | 1.0 |
| | Lambswool | 21 | 2 | 0 | 0.91 | 1.0 | 0.95 |
| | Satin | 19 | 0 | 1 | 1.0 | 0.95 | 0.97 |
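
The 98.5% CNN test accuracy can be recovered from these per-class counts. In single-label classification every test sample of a class is counted either as a TP or as an FN of that class, so the number of test samples per class is TP + FN and the overall accuracy is ΣTP / Σ(TP + FN). Each misclassified sample is also an FP of exactly one other class, so the FP and FN columns must have equal totals, which gives a quick consistency check on the table. A plain-Python sketch of both calculations; the names `cnn_counts`, `overall_accuracy` and `fp_fn_balanced` are illustrative.

```python
# (TP, FP, FN) per material, copied from the CNN rows of the table above.
cnn_counts = {
    "Polyester": (14, 0, 0),
    "Flannel": (11, 0, 0),
    "Asamoto": (25, 0, 0),
    "Denim fabric": (24, 0, 0),
    "Foam": (28, 0, 0),
    "Double-sided": (16, 1, 0),
    "Cotton linen": (16, 0, 2),
    "Flax": (23, 0, 0),
    "Lambswool": (21, 2, 0),
    "Satin": (19, 0, 1),
}


def overall_accuracy(counts):
    """Correct predictions divided by test samples; the number of test
    samples of each class is TP + FN."""
    correct = sum(tp for tp, _, _ in counts.values())
    total = sum(tp + fn for tp, _, fn in counts.values())
    return correct / total


def fp_fn_balanced(counts):
    """Every error is an FN of its true class and an FP of the predicted
    class, so the two column totals must agree."""
    fp_total = sum(fp for _, fp, _ in counts.values())
    fn_total = sum(fn for _, _, fn in counts.values())
    return fp_total == fn_total


print(f"accuracy = {overall_accuracy(cnn_counts):.1%}")  # 197 correct of 200
print("FP/FN totals consistent:", fp_fn_balanced(cnn_counts))
```

The same function applied to the SVM, RF and KNN rows gives 948/1000, 941/1000 and 941/1000 correct predictions.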

| Model | Training Accuracy (%) | Test Accuracy (%) | Time (s) |
|---|---|---|---|
| SVM | 95.88 ± 1.48 | 95 ± 2.28 | 53.9 |
| RF | 95.59 ± 1.29 | 94 ± 2.24 | 273 |
| KNN | 96.75 ± 1.68 | 94 ± 2.24 | 3.76 |
| CNN | 98.5 | 98.5 | 25.9 |

| Reference | Method | Sensor | Accuracy (%) | Run Time per Training Fold (s) | Description |
|---|---|---|---|---|---|
| Strese et al. [56], 2014 | Gaussian mixture model | Accelerometer | 80.2 | / | Classification of 43 kinds of objects |
| Orii et al. [57], 2017 | CNN | Pressure sensor and 6-axis accelerometer | 70.7 | / | Classification of 4 kinds of objects |
| Gandarias et al. [38], 2017 | SURF | High-resolution pressure sensor | 80 | 0.01 | Classification of 8 kinds of objects |
| Gandarias et al. [38], 2017 | DCNN | High-resolution pressure sensor | 91.7 | 0.7 | Classification of 8 kinds of objects |
| Kerr et al. [58], 2018 | SVM | BioTac | 86.19 | 0.48 | Classification of 14 kinds of objects |
| Gandarias et al. [59], 2019 | 3D CNN with 3D input data | High-resolution pressure sensor | 96.3 | / | Classification of 9 kinds of deformable objects |
| Our method | CNN | BioTac SP | 98.5 | 0.032 | Classification of 10 kinds of objects |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, S.; Wu, H. Texture Recognition Based on Perception Data from a Bionic Tactile Sensor. Sensors 2021, 21, 5224. https://doi.org/10.3390/s21155224


Chicago/Turabian style: Huang, Shiyao, and Hao Wu. 2021. "Texture Recognition Based on Perception Data from a Bionic Tactile Sensor." Sensors 21, no. 15: 5224. https://doi.org/10.3390/s21155224
