Appendix B

An artificial neural network (ANN) is a mathematical model inspired by the structure of the nervous system in the human brain [43]. The simplest ANN (i.e., a simple perceptron) consists of an input layer and an output layer of nodes, which are fully connected to each other. A multilayer perceptron is built from multiple layers, each consisting of multiple connected nodes. When a signal (i.e., data) is given to the input layer, the ANN produces an output. The ANN contains weights that are adjusted to produce the desired output (i.e., prediction) for a given input.

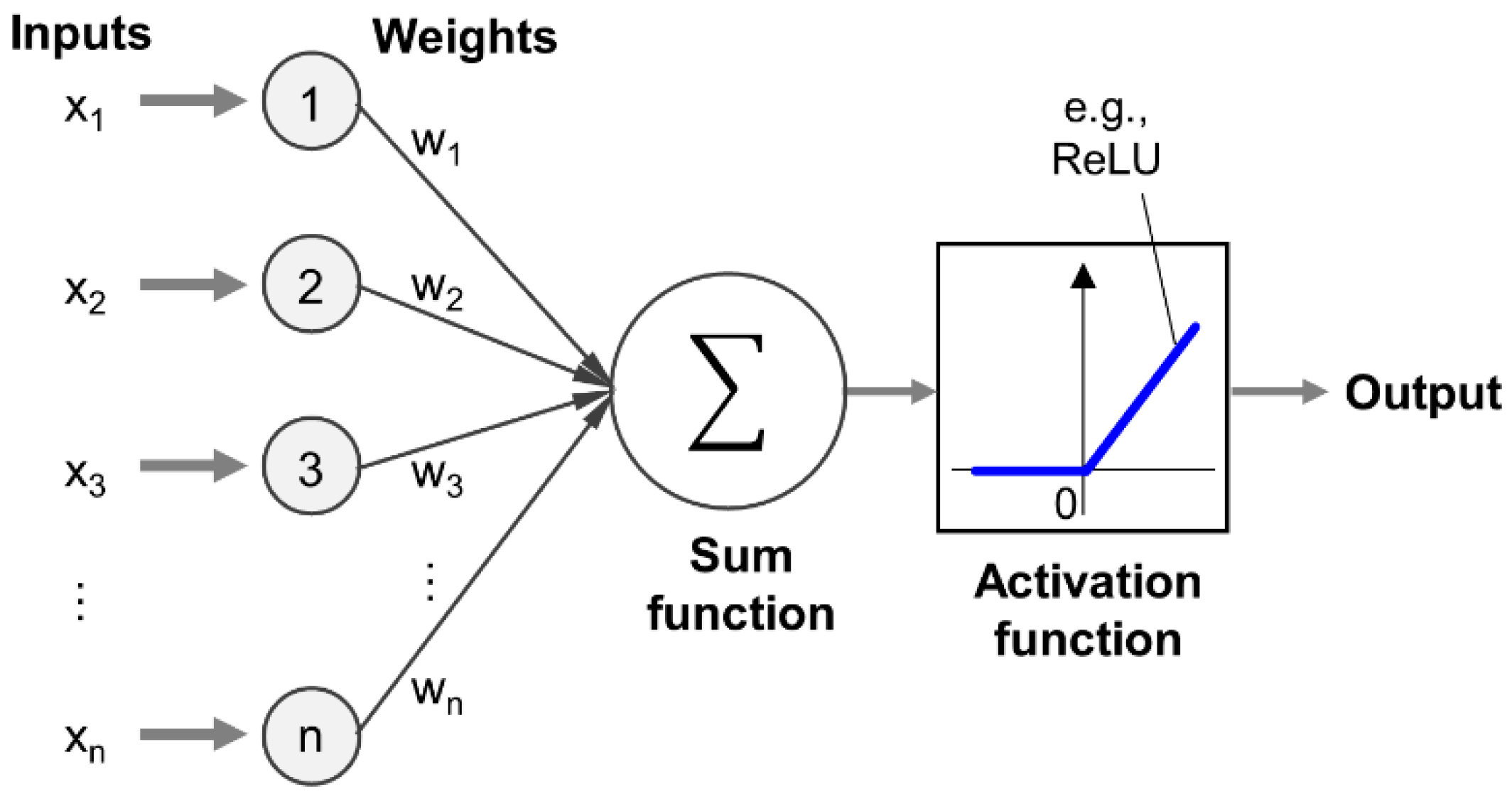

Figure A1 shows a simple ANN that consists of inputs, an output, and the internal components of the ANN: weights, biases, a sum function, and an activation function. The input is supplied by another system. A weight is a value that represents the influence of an input from the previous layer, or from another processing element, on the current processing element. The sum function calculates the net effect of the inputs and weights on the processing element (i.e., the weighted sum of the nodes). The weighted sum contains a coefficient, w, as the weight and a constant term called the bias. The activation function determines the output value from the weighted sum and introduces a nonlinear effect into the output. The rectified linear unit (ReLU) is typically used as the activation function for nonlinear regression analysis [38].

Figure A1. Simple neural network. ReLU: rectified linear unit.
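The processing element described above (weighted sum, bias, and ReLU activation) can be illustrated with a minimal sketch. The function names and the numeric values below are hypothetical and chosen only for illustration; they are not taken from the present study.

```python
def relu(x):
    """Rectified linear unit: keeps positive values, clamps negatives to zero."""
    return max(0.0, x)


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through ReLU."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(weighted_sum)


# Hypothetical inputs, weights, and bias (illustration only).
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.25]
bias = 0.5

# Weighted sum: 0.5*1.0 - 0.25*2.0 + 0.25*3.0 + 0.5 = 1.25, which ReLU leaves unchanged.
output = neuron_output(inputs, weights, bias)
print(output)
```

A full layer repeats this calculation once per node, each node with its own weights and bias.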

Predictions using an ANN require training, because the ANN learns from the training dataset. Using the back-propagation method [44], a constructed ANN architecture is trained to determine the weights and biases that minimize the error between the ANN output (i.e., predicted values) and the training dataset (i.e., observed values). A loss function is used to quantitatively evaluate the error. While various loss functions are available for ANN training, the mean squared error (MSE) is commonly used to minimize the L2 norm of the error. The gradient descent method is used to find the weights and biases that minimize the error between the ANN output and the training dataset [45]. The gradients of the loss function with respect to each weight and bias are calculated using the chain rule and partial derivatives. The gradients determine the directions in which the weights and biases are updated to reduce the loss function. A commonly used gradient descent method is Adam [39]. During training, the weights are updated until the partial derivatives of the loss function approach zero. The size of each weight update is scaled by the learning rate. A larger learning rate yields faster convergence; however, the training can become unstable. A lower learning rate stabilizes the training but requires a larger number of iterations to converge. The trained ANN is then used as an ANN model to make predictions for a given input. The prediction performance of the ANN model is evaluated using metrics such as the MSE, mean absolute error (MAE), mean absolute percentage error (MAPE), coefficient of determination (R²), and root mean squared error (RMSE), depending on the focus of the assessment. Further details on ANNs are reviewed in references [26,46,47,48,49].
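The training loop described above can be sketched for the smallest possible case: a single linear node fitted by plain gradient descent on the MSE loss. This is an illustrative simplification, not the procedure used in the present study; it uses fixed-step gradient descent rather than Adam, and the data, function names, and learning rates are assumptions made for the example.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error between predictions w*x + b and observed values."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradients(w, b, xs, ys):
    """Partial derivatives of the MSE with respect to w and b (chain rule)."""
    n = len(xs)
    dw = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def train(xs, ys, learning_rate=0.05, n_iterations=2000):
    """Move w and b against the gradient, scaled by the learning rate."""
    w, b = 0.0, 0.0
    for _ in range(n_iterations):
        dw, db = gradients(w, b, xs, ys)
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b


# Hypothetical training data generated from y = 2x + 1 (illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train(xs, ys)
print(round(w, 3), round(b, 3), mse_loss(w, b, xs, ys))
```

For this particular dataset, calling `train(xs, ys, learning_rate=0.2, n_iterations=50)` makes the loss grow instead of shrink, which illustrates the instability that a learning rate set too large can cause.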

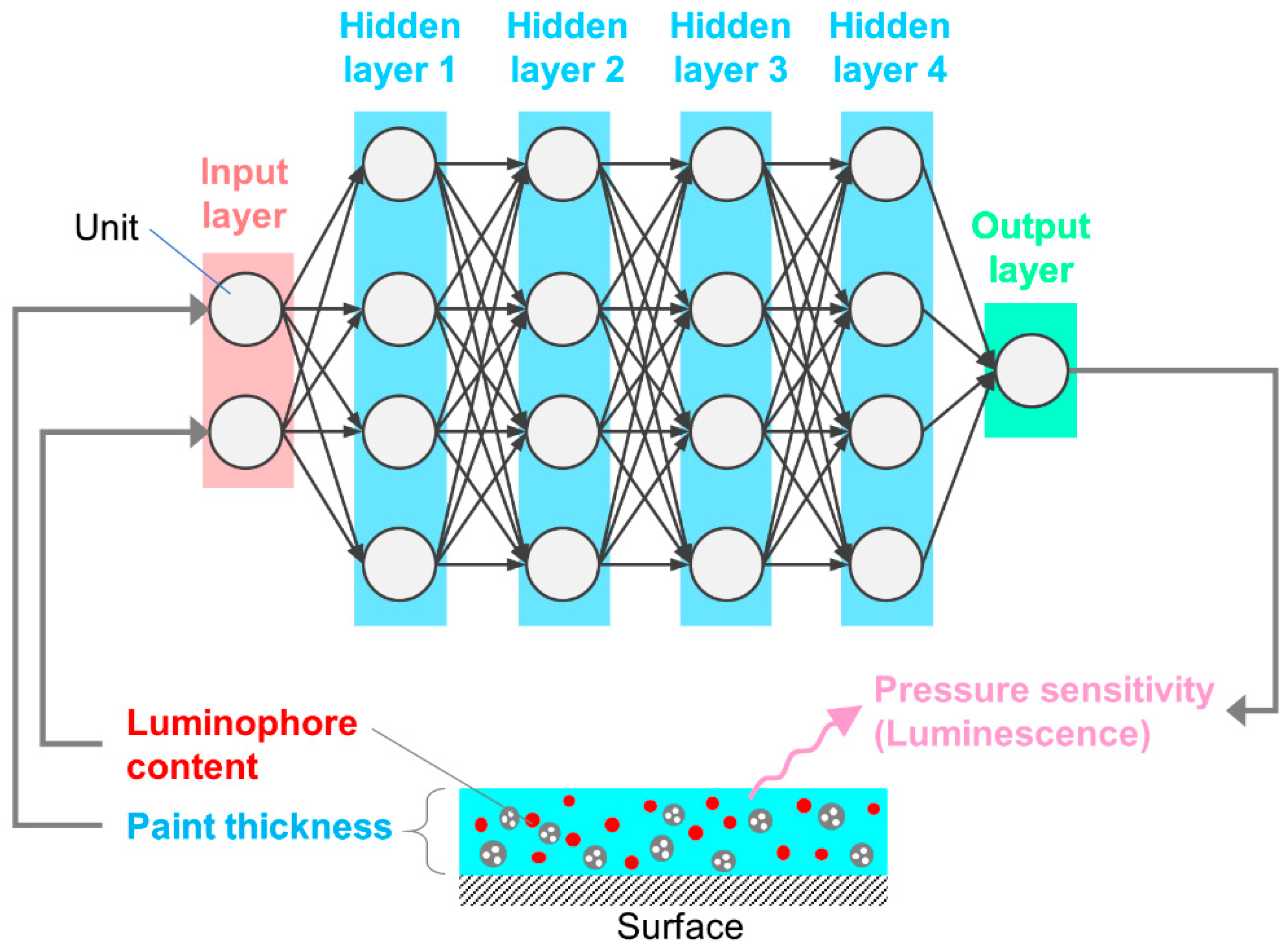

An ANN architecture must be constructed to develop an ANN model for prediction. A preliminary parametric study was performed to determine the ANN architecture (i.e., the number of hidden layers and the number of units per layer) used in the present study. ANNs were constructed with different numbers of layers and units, and these were varied to find a suitable architecture that minimizes the error between the predictions and the training dataset, as well as the bias and variance of the error. The ANN was trained using the Keras Regressor from Keras, an open-source ANN library running on TensorFlow, in the Python programming language. The augmented dataset described in Section 2 was used for training, with the MSE as the loss function. The MSE is calculated using the following equation:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $n$ is the number of entries in the dataset, $y_i$ is the $i$-th observed value, and $\hat{y}_i$ is the corresponding predicted value.

The variance of the MSE was also assessed because a lower MSE does not necessarily indicate better generalization, that is, a model that captures the dominant trend between inputs and outputs and keeps its predictions within that trend [32].
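As a minimal sketch, the MSE loss and the other evaluation metrics named in this appendix (MAE, MAPE, R², and RMSE) can be computed from observed and predicted values as follows. The function name and the sample values are hypothetical; the definitions are the standard ones and are assumed, not confirmed, to match those used in the present study.

```python
import math


def regression_metrics(observed, predicted):
    """Standard regression metrics for paired observed and predicted values."""
    n = len(observed)
    errors = [y - y_hat for y, y_hat in zip(observed, predicted)]

    mse = sum(e ** 2 for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when an observed value is zero.
    mape = 100.0 * sum(abs(e / y) for e, y in zip(errors, observed)) / n

    mean_observed = sum(observed) / n
    ss_res = sum(e ** 2 for e in errors)
    ss_tot = sum((y - mean_observed) ** 2 for y in observed)
    r2 = 1.0 - ss_res / ss_tot

    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape, "R2": r2}


# Hypothetical observed and predicted values (illustration only).
observed = [1.0, 2.0, 4.0]
predicted = [1.0, 2.5, 3.5]

metrics = regression_metrics(observed, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The MSE and RMSE penalize large errors more heavily than the MAE, while the MAPE and R² are scale-free, which is why the choice of metric depends on the focus of the assessment.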

The ANN was trained using augmented datasets of 100, 1000, and 10,000 entries. Table A1, Table A2, and Table A3 show the MSE of the ANN for different numbers of layers and units. Table A4, Table A5, and Table A6 show the variance of the MSE of the ANN for different numbers of layers and units. The number of hidden layers was varied from 1 to 5, and the number of units in each hidden layer was set to 2, 4, 8, or 16. The criteria for selecting the ANN architecture were as follows: (1) the MSE is less than 0.1; (2) the variance is as small as possible. Based on these criteria, the number of hidden layers was set to 4 and the number of units per layer was set to 4.
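To give a sense of the size of the search space described above, the following sketch enumerates the 20 candidate architectures (1 to 5 hidden layers, each with 2, 4, 8, or 16 units) and counts the trainable weights and biases of a fully connected network for each. The input and output dimensions (`N_INPUTS`, `N_OUTPUTS`) are hypothetical placeholders, since the appendix does not state them, and the helper name is an assumption.

```python
from itertools import product

HIDDEN_LAYER_COUNTS = [1, 2, 3, 4, 5]
UNITS_PER_LAYER = [2, 4, 8, 16]

# Hypothetical dimensions (not stated in the appendix).
N_INPUTS = 3
N_OUTPUTS = 1


def count_parameters(n_inputs, n_hidden_layers, n_units, n_outputs):
    """Weights plus biases of a fully connected network with equal-width hidden layers."""
    sizes = [n_inputs] + [n_units] * n_hidden_layers + [n_outputs]
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


# Every (hidden layers, units per layer) combination in the parametric study.
grid = list(product(HIDDEN_LAYER_COUNTS, UNITS_PER_LAYER))

for n_layers, n_units in grid:
    n_params = count_parameters(N_INPUTS, n_layers, n_units, N_OUTPUTS)
    print(f"layers={n_layers}, units={n_units}, parameters={n_params}")
```

Under these assumed dimensions, the selected architecture of 4 hidden layers with 4 units each has 81 trainable parameters.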

Table A1. Mean squared error (MSE) of ANN for different layers and units in training for 100 entries of the augmented dataset.

| Layer | Unit = 2 | Unit = 4 | Unit = 8 | Unit = 16 |
|---|---|---|---|---|
| 1 | 0.414 | 0.304 | 0.171 | 0.146 |
| 2 | 0.390 | 0.133 | 0.107 | 0.031 |
| 3 | 0.504 | 0.145 | 0.053 | 0.030 |
| 4 | 0.266 | 0.100 | 0.103 | 0.013 |
| 5 | 0.197 | 0.178 | 0.029 | 0.052 |

Table A2. MSE of ANN for different layers and units in training for 1000 entries of the augmented dataset.

| Layer | Unit = 2 | Unit = 4 | Unit = 8 | Unit = 16 |
|---|---|---|---|---|
| 1 | 0.412 | 0.412 | 0.147 | 0.140 |
| 2 | 0.290 | 0.109 | 0.057 | 0.038 |
| 3 | 0.502 | 0.183 | 0.040 | 0.054 |
| 4 | 0.306 | 0.084 | 0.052 | 0.032 |
| 5 | 0.189 | 0.157 | 0.052 | 0.012 |

Table A3. MSE of ANN for different layers and units in training for 10,000 entries of the augmented dataset.

| Layer | Unit = 2 | Unit = 4 | Unit = 8 | Unit = 16 |
|---|---|---|---|---|
| 1 | 0.503 | 0.189 | 0.188 | 0.132 |
| 2 | 0.389 | 0.094 | 0.026 | 0.030 |
| 3 | 0.177 | 0.147 | 0.147 | 0.011 |
| 4 | 0.190 | 0.071 | 0.042 | 0.052 |
| 5 | 0.190 | 0.071 | 0.043 | 0.028 |

Table A4. Variance of ANN for different layers and units in training for 100 entries of the augmented dataset.

| Layer | Unit = 2 | Unit = 4 | Unit = 8 | Unit = 16 |
|---|---|---|---|---|
| 1 | 0.577 | 0.683 | 0.801 | 0.836 |
| 2 | 0.590 | 0.853 | 0.874 | 0.947 |
| 3 | 0.481 | 0.834 | 0.939 | 0.962 |
| 4 | 0.734 | 0.889 | 0.876 | 0.963 |
| 5 | 0.788 | 0.803 | 0.940 | 0.923 |

Table A5. Variance of ANN for different layers and units in training for 1000 entries of the augmented dataset.

| Layer | Unit = 2 | Unit = 4 | Unit = 8 | Unit = 16 |
|---|---|---|---|---|
| 1 | 0.575 | 0.575 | 0.817 | 0.845 |
| 2 | 0.696 | 0.876 | 0.932 | 0.956 |
| 3 | 0.482 | 0.802 | 0.939 | 0.947 |
| 4 | 0.682 | 0.896 | 0.936 | 0.958 |
| 5 | 0.797 | 0.828 | 0.947 | 0.989 |

Table A6. Variance of ANN for different layers and units in training for 10,000 entries of the augmented dataset.

| Layer | Unit = 2 | Unit = 4 | Unit = 8 | Unit = 16 |
|---|---|---|---|---|
| 1 | 0.484 | 0.798 | 0.797 | 0.841 |
| 2 | 0.598 | 0.886 | 0.960 | 0.953 |
| 3 | 0.812 | 0.835 | 0.838 | 0.988 |
| 4 | 0.797 | 0.909 | 0.947 | 0.958 |
| 5 | 0.798 | 0.911 | 0.929 | 0.975 |