Abstract

We report the results of a study on the learnability of the locations of haptic icons on smartphones. The aim was to study the influence of the use of complex and different vibration patterns associated with haptic icons compared to the use of simple and equal vibrations on commercial location-assistance applications. We studied the performance of users with different visual capacities (visually impaired vs. sighted) in terms of the time taken to learn the icons’ locations and the icon recognition rate. We also took into consideration the users’ satisfaction with the application developed to perform the study. The experiments concluded that the use of complex and different instead of simple and equal vibration patterns obtains better recognition rates. This improvement is even more noticeable for visually impaired users, who obtain results comparable to those achieved by sighted users.

1. Introduction

Our interaction with mobile phones has changed in the last few years. At first, that interaction was conducted using physical keyboards integrated into the phone; since then, those keyboards have been replaced by on-screen keyboards, and we now interact with applications through touchscreens. In fact, the touchscreen is the only way to control modern smartphones. This is a great handicap for visually impaired (VI) people in terms of accessibility and usability [1], mainly because there are no physical keys or reference points, which makes it difficult to reach a specific area of the screen or activate a certain function quickly [2].

Smartphones’ user interfaces (UIs) are composed of many visual elements (icons, buttons, bars, etc.) that allow for a fast understanding of the content structure with a quick view. This is a parallel process that allows quick reading (skimming) of the whole content of the UI [3]. However, VI people lack a quick overview of the screen, and hence, they have to explore the screen randomly or use sequential techniques to scan the whole UI in order to make a mental model of the location of the items of the UI. Consequently, VI people use a much slower process to operate a smartphone [4], one requiring a high cognitive load [5,6], which has a negative impact on the user experience.

In the last few years, researchers have been developing accessible UIs for VI people (accessibility-driven blind-friendly user interfaces) [7,8,9,10,11,12,13], and some solutions have been proposed to explore the touchscreen with the aim of improving the user experience. Those proposals can be classified into three types: screen readers, logical partitions with adaptive UIs and vibrotactile feedback.

1.1. Screen Readers

Screen readers are the most widely used technique to manipulate a smartphone screen using a set of predefined gestures. When a finger swipes the screen to navigate sequentially over a list or matrix of elements, the system reads out (using text-to-speech) the name of each element on the list when the finger is over it. Since 2009, there have been commercial applications such as VoiceOver for iOS [14] and TalkBack for Android [15] that allow this type of interaction.

1.2. Logical Partitions

This technique uses logical partitions, ordered menus, guiding techniques (access overlays) and adaptive UI to improve the user experience. With the aim of accelerating navigation tasks, logical partition techniques have been proposed to organize the UI elements in a predefined or customizable scheme [16,17], to use alphabetically ordered menus [18], to form adaptive UIs that change according to the context and user preferences [1] or to form guidance techniques with access overlays [8] based on physical reference points (edge projections), the voice (neighborhood browsing and touch and speak) or data sonification. Edge projection locates all the elements on the edges of the touchscreen. The neighborhood browsing technique reads out the distance to the nearest element on the screen as the user touches it. Finally, in touch-and-speak, users have to touch the screen and say aloud system commands to obtain the direction to an objective element on the screen.

Other methods have been proposed using sounds to guide users via auditory scroll bars [19] or using stereo sound in order for the user to remember where an element is located in the menu [20] or to support them with learning gestures [21].

A constraint of the techniques based on voice or data sonification is that they are not suitable for certain environments such as noisy locations or moments that require some kind of intimacy [22].

1.3. Vibrotactile Feedback

Vibrotactile interaction is widely employed on touchscreen-based smartphones to generate alerts and messages and to support a secondary feedback channel that highly improves the user experience [14,23], allowing users to perform common tasks much faster (scrolling, inputting, etc.) [24,25,26]. This technique is widely used on current screen readers [27]. In addition, new technologies allow users to develop a great variety of distinguishable vibrotactile patterns that can be used to identify icons without seeing or hearing them, which is suitable for visually impaired people [28,29,30].

The research in [31] used only vibrations to move across a list and demonstrated that it helped users to memorize the list order. The results showed that the method obtained improvements compared to VoiceOver in terms of the selection time, error rate and user satisfaction. The study published in [32] combined data sonification and vibrations in order to allow visually impaired people to learn the spatial layout of the graphic information shown on a screen. The work in [33] used haptic and speech feedback to build a digital map over a touchscreen in order to make it more accessible for VI people. The study in [22] proposed a logical partition of the user interface where each partition generated a different vibration pattern.

In previous work [29], we demonstrated that assigning different vibrotactile patterns to mobile application alerts, in conjunction with reinforcement while learning the association, improved the alert recognition rate. In addition, we found that the improvement was more significant for VI users.

As an evolution of our previous work, this study uses a screen reader that makes a logical partition of the touchscreen similar to the one presented in [22], but instead of using areas of the screen, we use icons with different assigned vibration patterns. Hence, the aim of this work is to assess whether the improvement in alert recognition obtained by using different vibrotactile patterns also applies to learning the locations of icons on a touchscreen.

In this study, a mobile application was designed and developed with the aim of assisting users in the process of learning the locations of a set of haptic icons scattered on a smartphone screen.

Considering the literature reviewed, we stated our hypotheses as:

- The use of complex vibration patterns (vibration patterns with different intensities, durations and numbers of pulses) associated with smartphone application haptic icons instead of simple and equal vibrations (the same vibration pattern that consists of a simple vibration pulse) improves the user experience in terms of the recognition ratio and the memorization of the haptic icons’ locations.

- This improvement also applies to visually impaired users.

2. Materials and Methods

2.1. Participants

Forty-six participants aged 18–60 years took part in the experiments. Eighteen of them had a visual disability ranging from 80% to 100%, and consequently, they were unable to use graphical user interfaces. However, they used accessibility applications such as the Android Accessibility Suite to interact with their smartphones. They were volunteers who worked at ONCE (Organización Nacional de Ciegos Españoles, the Spanish National Blind Association). The only requirement was that they had to be smartphone users, to ensure that they knew how to use both the devices and their accessibility applications. The remaining 28 participants were fully sighted users. They were student and teacher volunteers from the Electronic Technology faculty of the University of Málaga.

2.2. Experimental Design

The users’ visual capacities (VI vs. sighted) and the vibration types of the haptic icons (simple and equal vs. complex and different vibration patterns) were considered as independent variables in a 2 × 2 design. On the other hand, we considered the time needed to solve the test, the haptic icon recognition rate and the evaluation of the usability questionnaire as dependent variables.

All participants completed a preliminary learning stage in which they navigated the screen and received acoustic information about the touched haptic icon (a voice telling them the name of the related application) while the vibration associated with that haptic icon was played.

Half of the participants (23) used the developed application with simple vibrations assigned to each haptic icon (14 sighted and 9 VI) and the other half used the application with complex vibrations assigned to each haptic icon (14 sighted and 9 VI). Table 1 summarizes the subjects’ distribution.

Table 1.

Distribution of subjects according to their visual capacity and the vibration type used in the EXT application.

Informed written consent was given by all participants in the study. The data obtained were analyzed anonymously.

2.3. Stimuli and Devices

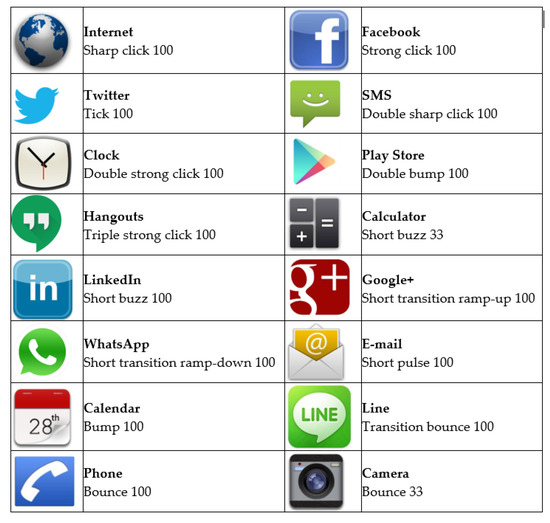

An Android application named EXT (Enhanced eXplore by Touch) was developed as a tool to implement the experiments. The source code can be freely accessed online (https://github.com/Equinoxe-fgc/EXT (accessed on 23 July 2021)). The EXT haptic icons were chosen from those predefined in the gallery of the Haptic Effect Preview tool [34]. No conceptual meanings could be associated with the applications they represented (Figure 1).

Figure 1.

Haptic icons and their related vibrations. The vibrations have been chosen from the Haptic Effect Preview application. The numbers represent the vibration intensities as percentages with 100 being the maximum vibration intensity.

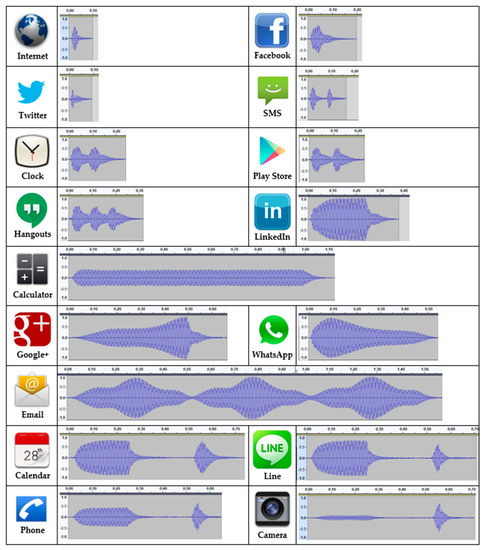

To perform the tests, a Samsung Galaxy S3 smartphone was employed because this is one of the recommended models to use with the Immersion SDK for Mobile Haptics [35]. As the vibrations were in the audio range of frequencies, they were recorded using a microphone attached to the smartphone and post-processed in order to obtain a good quality graphical representation of the vibrations. After analyzing the recorded waves, the vibration frequency was determined to be 200 Hz. Consequently, a bandpass filter from 100 to 300 Hz was applied to the signals to remove artifacts caused by screen touches or environmental noise. Afterward, the amplitudes were normalized in order to be comparable. The signal waveforms of the EXT haptic icon are shown in Figure 2, where the y-axis represents the relative intensity, and the x-axis is measured in seconds. The audio vibration patterns are available as supplementary materials.

Figure 2.

Haptic icons and their related vibration waveforms.
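The post-processing described above (band-pass filtering between 100 and 300 Hz followed by amplitude normalization) can be illustrated with a minimal sketch. The text does not state the filter type, its order or the sampling rate, so everything specific here is an assumption made for illustration: a second-order band-pass in the widely used audio-EQ "cookbook" form, a hypothetical sampling rate of 8000 Hz, and the hypothetical function names `bandpass_biquad`, `normalize_peak`, `rms` and `tone`.

```python
import math

def bandpass_biquad(samples, fs, f_low=100.0, f_high=300.0):
    """Second-order band-pass with unity peak gain (assumed filter, not the authors')."""
    f0 = math.sqrt(f_low * f_high)        # geometric centre of the pass band
    q = f0 / (f_high - f_low)             # quality factor derived from the bandwidth
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0, b2 = alpha / a0, -alpha / a0      # b1 is zero for this filter
    a1, a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in samples:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

def normalize_peak(samples):
    """Scale a signal so that its largest absolute value is 1."""
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples] if peak > 0 else list(samples)

def rms(samples):
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def tone(freq, fs, seconds=0.5):
    """Synthetic unit-amplitude sine used only to exercise the filter."""
    n = int(fs * seconds)
    return [math.sin(2.0 * math.pi * freq * i / fs) for i in range(n)]

if __name__ == "__main__":
    fs = 8000  # hypothetical sampling rate
    for freq in (20, 200, 2000):
        filtered = bandpass_biquad(tone(freq, fs), fs)
        steady = filtered[len(filtered) // 2:]   # skip the start-up transient
        print(freq, "Hz ->", round(rms(steady), 3))
```

With these assumed settings, a 200 Hz component passes almost unchanged, while components far outside the band, such as low-frequency touch artifacts, are strongly attenuated before the peak normalization is applied.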

According to the type of vibration, the haptic icons used can be categorized into seven classes:

- Single click: They are very short vibrations (0.1 s). The vibration is so short that the vibration intensity does not have enough time to reach the maximum specified value. They are used by the Internet, Facebook and Twitter haptic icons.

- Double-click: They are two consecutive short vibrations. In the case of the SMS haptic icon, the two vibrations do not overlap, as the first vibration ends before the second one starts. In contrast, the Clock and Play Store haptic icon vibrations do overlap; they differ from each other in the time between clicks.

- Triple-click: It is composed of three consecutive, overlapping clicks (Hangouts).

- Buzz: They are symmetrical vibrations that hit the peak of the effect’s vibration amplitude somewhere in the middle, sustaining it for a period of time. Calculator and LinkedIn use this type of vibration with different amplitudes and durations.

- Ramp: They vary the vibration amplitude, either increasing it over time (ramp-up) or starting with a high amplitude and decreasing it gradually until it stops (ramp-down). Google+ uses a ramp-up vibration with a rise time of 0.5 s and a release time of 0.15 s. Meanwhile, WhatsApp uses a ramp-down with a very short rise time of 0.08 s and a release time of 0.5 s.

- Pulse: They are smooth ramps up and down combined. The Email haptic icon uses three consecutive pulses of 0.5 s for each one.

- Buzz-bump: They are haptic icons with a combination of a buzz and a bump. A bump is a softer click. Calendar and Line use the same buzz but a different bump duration, with the Calendar bump lasting twice as long as the one used by Line. Calendar, Phone and Camera share the bump characteristics but differ in the bump intensity.
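The classification above can be written down as a small lookup table, which also makes it easy to confirm that the seven classes cover all sixteen icons. This is only an illustrative sketch: the names `PATTERN_CLASSES`, `ICON_TO_CLASS` and `pattern_class` are hypothetical and are not taken from the EXT source code.

```python
# Vibration classes and the haptic icons assigned to each, as listed in the text.
PATTERN_CLASSES = {
    "single click": ["Internet", "Facebook", "Twitter"],
    "double-click": ["SMS", "Clock", "Play Store"],
    "triple-click": ["Hangouts"],
    "buzz": ["Calculator", "LinkedIn"],
    "ramp": ["Google+", "WhatsApp"],
    "pulse": ["Email"],
    "buzz-bump": ["Calendar", "Line", "Phone", "Camera"],
}

# Reverse index: icon name -> vibration class.
ICON_TO_CLASS = {
    icon: cls for cls, icons in PATTERN_CLASSES.items() for icon in icons
}

def pattern_class(icon):
    """Return the vibration class of a haptic icon."""
    return ICON_TO_CLASS[icon]

if __name__ == "__main__":
    print(len(ICON_TO_CLASS), "icons in", len(PATTERN_CLASSES), "classes")
    print("WhatsApp:", pattern_class("WhatsApp"))
```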

2.4. Experimental Procedure

Before the experiment, the subjects had to memorize the locations of sixteen icons related to applications and their associated vibrotactile stimuli. The aim of the experiment was to establish whether the use of different and complex vibrations led to a better success ratio in terms of the users’ ability to memorize the locations of haptic icons on the smartphone screen. Additionally, the experiment sought to determine whether the improvement applied to visually impaired people. If so, this improvement could be employed to increase smartphone accessibility and navigation for people with visual disabilities. Accessibility applications such as TalkBack for Android-based smartphones use simple and equal vibrations for all the icons shown on-screen. Hence, these could be improved using different and complex vibrations if it can be demonstrated that this type of vibration for haptic icons obtains a better recognition rate.

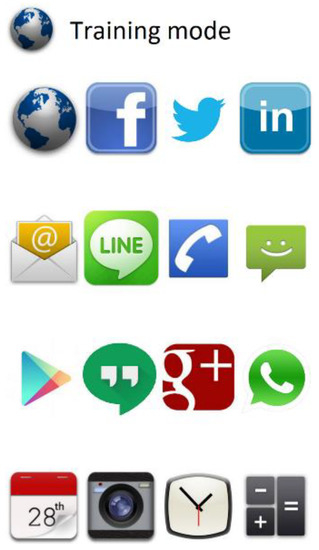

The application is divided into two sections:

- Training section: In this section, the screen shows sixteen different haptic icons in a 4 × 4 grid. Every icon corresponds to an application (Facebook, Line, Camera, etc.). When an icon is pressed, the smartphone plays a recording with the application name, and the vibration associated with the haptic icon is also played (Figure 3). The user can have as many trials and time as they would like to learn the locations and associated vibration patterns of all haptic icons.

Figure 3. Training section screen of the EXT application. A set of sixteen haptic icons is shown in a 4 × 4 grid.

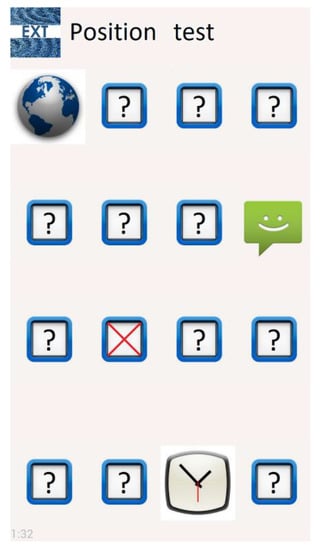

- Test section: This section shows the same sixteen haptic icons as the training section, with the same distribution, although they are hidden by “?” symbols (Figure 4). The subject can explore the screen, touching it to find the icons. When a haptic icon is touched, a ‘beep’ sound is played. The menu key of the smartphone is programmed to play the name of the application the subject must find on the screen, which they are to do in the quickest amount of time possible. When the subject has selected the haptic icon to use as a response, the EXT application informs them whether the answer is correct. If it is correct, the application’s visual icon is shown and remains visible for the remainder of the test. In addition, if the icon is touched again, a voice will be played with the name of the application. However, if the answer is wrong, the application’s visual icon will be hidden again by the icon with the “?” symbol and the user will have to continue searching for the requested icon without any limit on the number of attempts, although this particular icon’s recognition will be considered as failed. Every time a haptic icon has been correctly found, the name of the next application to be found will be played until the sixteen icons are located.

Figure 4. Test section screen. The visible icons have been correctly recognized and the “?” icons hide the haptic icons to be recognized. The numbers on the bottom-left corner measure the time taken to make the test.
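The scoring rule of the test section (an icon counts as recognized only if it is found without any wrong answer, although the search continues until it is located) can be sketched as follows. The log format, a mapping from each requested application to the number of wrong answers given before it was found, and the function name `recognition_rate` are assumptions made for illustration; they do not describe how the EXT application stores its data.

```python
def recognition_rate(wrong_answers_per_icon):
    """Fraction of requested icons located with no wrong answer.

    wrong_answers_per_icon: dict mapping an application name to the number of
    wrong selections made before the icon was finally found (hypothetical format).
    """
    if not wrong_answers_per_icon:
        raise ValueError("at least one requested icon is needed")
    recognized = sum(1 for wrong in wrong_answers_per_icon.values() if wrong == 0)
    return recognized / len(wrong_answers_per_icon)

if __name__ == "__main__":
    # Made-up session covering four of the sixteen icons, for illustration only.
    session = {"Email": 0, "SMS": 1, "Line": 0, "Phone": 2}
    print(recognition_rate(session))
```

Any number of wrong answers greater than zero marks the icon as failed, so the rate depends only on whether the first answer was correct.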

The application allows two modes of operation: using simple or complex vibration patterns. This feature allows us to determine whether the use of complex haptic icon vibrations improves the users’ ability to remember the locations of icons on the smartphone screen compared with the simple vibration patterns used by accessibility applications such as TalkBack.

- Simple vibration patterns: Every haptic icon uses the same simple vibration pattern to provide feedback to the subject when exploring the smartphone screen using the sense of touch, as with the TalkBack accessibility application. The Facebook vibration pattern (Figure 2) was selected for this as it is a short and intense vibration.

After the test was completed, all the subjects had to fill out a six-question usability questionnaire. The first three questions were adapted from the System Usability Scale (SUS) and Computer System Usability Questionnaire (CSUQ) [36] usability forms. The last three questions were related to the perception, differentiation and recognition [37] of the haptic icons of the developed application. A seven-level Likert scale was given, ranging from totally disagree (value 1) to totally agree (value 7). Table 2 shows the questions used for evaluation.

Table 2.

Usability form.

3. Results

This section presents the results of the experiments, focusing on the statistically significant results (p < 0.05).

3.1. Test Time

The main descriptive statistics of the test time, differentiating VI and sighted subjects, are shown in Table 3. The mean execution time of the test section was 7 min 22 s (5 min 52 s for sighted subjects and 9 min 42 s for VI subjects). The minimum test time for both types of subjects was 3 min. The maximum test time was 12 min for sighted subjects and twice that, 24 min, for VI subjects. The mean test time for those subjects that used simple vibrations was 8 min 30 s, while the mean time taken when using complex vibrations was 6 min 14 s.

Table 3.

Descriptive statistics for the test time (in minutes:seconds), grouping VI and sighted subjects as a function of the vibration patterns used.
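As a consistency check on the reported means, the overall mean test time can be recovered as the weighted mean of the group means, using the group sizes given in Section 2.2 (28 sighted and 18 VI subjects; 23 subjects per vibration type). The helper names `to_seconds`, `weighted_mean` and `fmt` below are hypothetical and serve only this illustration.

```python
def to_seconds(minutes, seconds):
    """Convert a min:s duration to seconds."""
    return 60 * minutes + seconds

def weighted_mean(groups):
    """groups: list of (group_size, group_mean) pairs."""
    total = sum(size for size, _ in groups)
    return sum(size * mean for size, mean in groups) / total

def fmt(seconds):
    """Format a duration in seconds as 'M min S s'."""
    minutes, rest = divmod(round(seconds), 60)
    return f"{minutes} min {rest} s"

# Group sizes and mean test times as reported in the text.
by_vision = [(28, to_seconds(5, 52)), (18, to_seconds(9, 42))]
by_vibration = [(23, to_seconds(8, 30)), (23, to_seconds(6, 14))]

if __name__ == "__main__":
    print(fmt(weighted_mean(by_vision)))     # 7 min 22 s
    print(fmt(weighted_mean(by_vibration)))  # 7 min 22 s
```

Both groupings give the same overall mean of 7 min 22 s, in agreement with the value reported above.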

A two-way ANOVA (ANalysis Of VAriance) was conducted to examine the effect of the visual capacity and the type of vibration on the time taken to perform the test (Table 4).

Table 4.

Two-way ANOVA study of the test time as a function of the visual capacity of the subjects and the vibration type used (df denotes the degrees of freedom).

There was no statistically significant interaction between the effects of both parameters on the time taken (F (2.46) = 0.465, significance = 0.499 > 0.05). However, simple main effects analysis showed that the vibration type significantly affects the test time (F (1.46) = 4.672 and significance = 0.036 < 0.05). The same behavior is observed regarding the visual capacity of the subject, which also affects the test time (F (1.46) = 11.583, significance = 0.01 < 0.05). In fact, the visual capacity has the main influence on the test time, as VI subjects need more time than sighted subjects to complete the test.

3.2. Recognition Rate

The two-way ANOVA test shows a statistically significant interaction between the effects of the visual capacity of the subjects and the type of vibration (simple or complex) on the recognition rate (Table 5; F (2.54) = 8.397, significance = 0.006 < 0.05).

Table 5.

Two-way ANOVA test of the recognition rate as a function of the visual capacity of the subjects and the vibration type used.

Simple main effects analysis shows that significant differences exist between subjects with different visual capacities (F (2.46) = 15.15, significance = 0.0 < 0.05) and the type of vibrations (F (2.46) = 30.96, significance = 0.0 < 0.05).

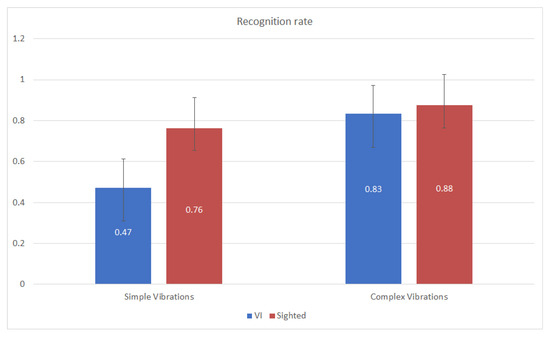

As shown in Figure 5, the recognition rate is considerably improved when using complex and different vibration patterns for VI subjects, increasing from 0.47 to 0.83. Moreover, the recognition rate is slightly increased (0.12) for sighted subjects when using complex vibrations. Consequently, it can be deduced that the use of different and complex vibration patterns associated with the icons of applications significantly improves (0.36) the recognition rate for VI users, while it slightly increases the recognition rate of sighted subjects. This conclusion agrees with the results obtained by the one-way ANOVA test of the influence of the use of simple or complex vibrations on VI (F (1.18) = 29.641, significance = 0.0 < 0.05) and sighted (F (1.28) = 4.521, significance = 0.043 < 0.05) subjects.

Figure 5.

Application recognition rate. For all the subjects, this figure shows the recognition rate as a function of the use of simple and equal vibrations patterns or complex and different vibration patterns while differentiating between VI and sighted subjects.
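For readers unfamiliar with the one-way ANOVA used above to compare simple and complex vibrations within each group of subjects, the F statistic is the ratio between the between-group and within-group mean squares. The sketch below computes it in plain Python; the function name `one_way_anova_f` is hypothetical and the sample values are made up for illustration, so they are not the study data. Obtaining the significance value would additionally require the F distribution, which is omitted here.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of samples (lists of numbers)."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

if __name__ == "__main__":
    # Made-up per-subject recognition rates, for illustration only.
    simple_vibrations = [0.44, 0.50, 0.38, 0.56]
    complex_vibrations = [0.81, 0.88, 0.75, 0.88]
    print(one_way_anova_f([simple_vibrations, complex_vibrations]))
```

With two groups, this F statistic equals the square of the pooled-variance t statistic for the same comparison.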

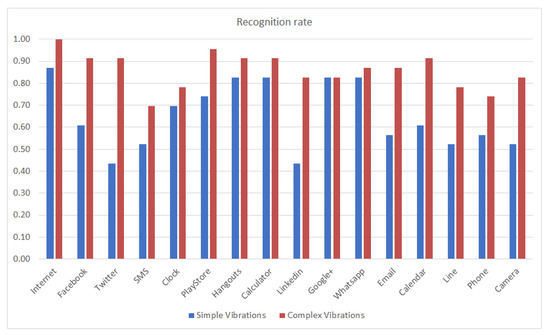

The recognition rate per haptic icon as a function of the type of vibration pattern (unique and simple vibrations compared to complex and different vibrations), without considering the type of subject (VI or sighted), is shown in Figure 6.

Figure 6.

Recognition rate per haptic icon for simple vs. complex vibrations for the whole population.

The recognition rate increases for all haptic icons when using complex vibration patterns compared to the results obtained using simple vibrations. This is the expected behavior, as complex vibrations carry more information than simple vibrations. However, in spite of this predictable result, assistive applications such as TalkBack for Android do not exploit this advantage. The most noticeable improvements are for the Internet (reaching 1.0), Facebook and Twitter, which have single-click vibrations with different intensities and durations, as well as the icons with pulse and buzz-bump vibrations. Regarding the single-click vibrations, these icons are expected to be more distinguishable than when they all share the simple vibration (the Facebook vibration pattern), as their distinct patterns provide a way to differentiate them. On the other hand, the buzz-bump vibration patterns (Calendar, Line, Phone and Camera) are very different from the rest of the vibration patterns; hence, they are expected to be more discernible. Finally, the Email haptic icon also improves, as the associated pulse vibration pattern is the most different from the rest of the vibration patterns in terms of duration and structure.

Figure 7 compares the recognition rate of sighted subjects using simple and complex vibration patterns. The figure shows that for most of the haptic icons, the recognition rates increase slightly, except for the Internet, Facebook and Twitter, where the increase is more noticeable, as in Figure 6. The LinkedIn (buzz) and Email (pulses) haptic icons also perform much better. However, the recognition rates of the SMS (double-click) and Google+ (ramp-up) icons decrease slightly.

Figure 7.

Recognition rate per haptic icon for sighted people using simple and complex vibration patterns.

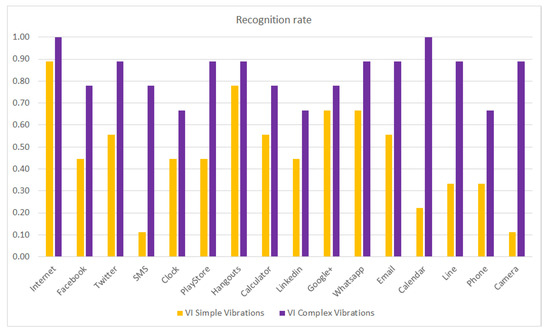

The same study (results presented in Figure 7) is repeated but considering the recognition rate per haptic icon of the VI subjects as a function of the use of simple or complex vibration patterns, with the results shown in Figure 8. It can be observed that the recognition rate is considerably increased when using complex vibration patterns, especially for the SMS, Calendar and Camera haptic icons. It is worth mentioning that the Calendar haptic icon obtained only a 0.2 recognition rate when using simple vibrations and obtained a 1.0 recognition rate (it is always recognized) when using complex vibrations. In previous work [29], some VI subjects pointed out that they had more problems recognizing the haptic icons when they were associated with applications they did not use regularly. Consequently, it is expected that applications such as SMS (that has marginal use nowadays) or the Camera will obtain low recognition rates for VI people.

Figure 8.

Recognition rate per haptic icon for VI people using simple and complex vibration patterns.

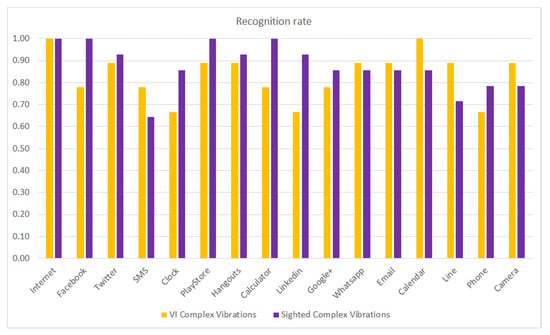

Figure 9 compares the haptic icon recognition rates of VI and sighted subjects when using complex and different vibration patterns. It can be observed that the recognition rates obtained by VI subjects are very close to or even better than those obtained by the sighted subjects for most of the haptic icons. For VI subjects, the Internet and Calendar haptic icons are fully recognized; the same behavior is observed for sighted subjects for the Internet (sharp click), Facebook (strong click), Play Store (double bump click) and Calculator (long buzz) haptic icons. Sighted people obtain better results than VI people at recognizing the Facebook (strong click), Calculator (long buzz) and LinkedIn (short buzz) haptic icons. On the other hand, VI subjects better recognize the SMS (double sharp click), Calendar (transition bump), Line (transition bounce 100) and Camera (transition bounce 33) haptic icons.

Figure 9.

Recognition rate per haptic icon for VI and sighted people using complex vibration patterns.

In our experiment, the haptic icons’ locations were fixed and, hence, it is possible that the memorization of their positions was related to their relative locations. That is, haptic icons situated in certain locations could have been more easily remembered because of their position instead of their vibration patterns. Figure 10 represents, using a heatmap, the same information shown in Figure 9 but taking into consideration the haptic icons’ positions. The center of the figure shows the haptic icons’ locations, while the left and right graphs show the recognition rates for VI and sighted people, respectively, using complex vibration patterns. The colors represent the recognition rate according to the legend shown in the right part of the figure, with colors close to green for values close to 1.0 and colors close to red for values close to 0.0. The displayed values for VI people do not show any pattern that would suggest a relationship between the recognition rate and the haptic icon location. Nevertheless, sighted people seem to better recognize or remember the first row of haptic icons. The Internet haptic icon is perfectly recognized by both types of users because it is the first one and it is thus easier to remember.

Figure 10.

Heatmap recognition rate per haptic icon for VI (left) and sighted (right) people using complex vibration patterns. The haptic icons’ locations are represented in the (center) of the figure.

Finally, Figure 11 represents the recognition rates of VI and sighted people considering the types of vibration patterns classified in Section 2.3, that is, click, double-click, triple-click, buzz, ramp, pulse and buzz-bump vibrations. There was no significant difference between the VI and sighted users’ performances. Sighted users usually obtained a better recognition rate, except for pulses and buzz-bumps, for which VI users outperformed sighted users, but these variations were insignificant. The only noticeable difference was for buzz vibrations (Calculator and LinkedIn), where sighted users outperformed VI people by more than 0.20. Unfortunately, with the collected data, we cannot infer which vibration was selected when an icon was incorrectly recognized, only whether it was recognized.

Figure 11.

Recognition rate per type of vibration pattern for VI and sighted people.

3.3. Usability Form

We used nonparametric Mann-Whitney U tests to compare the answers to the usability form given by VI and sighted subjects when using simple and equal vibration patterns or complex and different ones.
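As a brief illustration of the test statistic, the Mann-Whitney U value can be obtained from the ranks of the pooled answers, with tied answers (frequent on a seven-level Likert scale) sharing their average rank. The sketch below computes only the U statistics; the function name `mann_whitney_u` is hypothetical, the answers are made up for illustration, and the significance value, which requires the distribution of U, is not computed.

```python
def mann_whitney_u(sample_a, sample_b):
    """Return (U_a, U_b) for two independent samples, using average ranks for ties."""
    pooled = sorted(
        (value, index) for index, value in enumerate(list(sample_a) + list(sample_b))
    )
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0   # ranks are 1-based
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = average_rank
        i = j + 1
    n_a, n_b = len(sample_a), len(sample_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2.0
    u_b = n_a * n_b - u_a
    return u_a, u_b

if __name__ == "__main__":
    # Made-up Likert answers (1 to 7) to one question, for illustration only.
    simple_group = [3, 4, 2, 5, 3]
    complex_group = [5, 6, 4, 6, 7]
    print(mann_whitney_u(simple_group, complex_group))
```

The smaller of the two U values is the one usually compared against the critical value for the given sample sizes.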

Table 6 shows the usability answers from the EXT application. There is a clear difference between sighted and VI subjects, as sighted people stated that vibrations were not clearly perceived, while VI people answered that they were very clearly perceived (Question 1). This difference is the same for simple and complex vibrations. However, all the subjects perceived that the vibrations were not clearly distinguishable (Question 2) or that it was not easy or was difficult to recognize the meaning of each haptic icon (Question 3), even though the recognition rates were high, especially when using complex vibration patterns. According to the answers, VI people would like to assign vibrations to assist their tactile exploration of the mobile phone screen (Question 4), although sighted subjects would not take up this option. All the sighted subjects affirm that they would need more practice to remember the icons’ locations (Question 5). Finally, all the subjects agree that the EXT application is very easy to use (Question 6).

Table 6.

Median results of the EXT application usability form.

4. Discussion

In this paper, we have presented a group of experiments aimed at evaluating the learning of the locations of a set of haptic icons on a smartphone screen. Considering the time taken to learn the icons’ locations, it was noticeable that they could be learned in less than ten minutes on average, even though this time differed depending on the visual capacity of the subjects.

4.1. Effect of the Vibration Type

Our first hypothesis was that complex vibration patterns associated with smartphone application haptic icons could improve the user experience in terms of icon location on a smartphone screen. The experiments showed that the time needed to memorize and remember the icons’ locations was reduced compared to the use of simple and equal vibrations. In addition, the experiments also demonstrated that the icon location recognition rate was also improved, as the subjects considered it easier to associate different locations with different vibrations. This improvement was consistent with the one obtained in our previous work [29], which demonstrated that the use of different vibration patterns also improved the recognition of alerts associated with mobile applications.

However, assistance applications such as TalkBack for Android do not use different vibration patterns but rather use simple and equal ones. Hence, it would be a great improvement in terms of usability to adapt this type of application by using different vibration patterns and even allowing the users to associate the vibrations with certain application icons.

Considering the types of vibration patterns, the single-click applications (Internet, Facebook and Twitter) are much better distinguished than when all of them use simple vibrations (the Facebook vibration waveform). The same behavior is observed for the buzz-bump and pulse vibration patterns.

4.2. Effect of the Subjects’ Visual Condition

Our second hypothesis was that the possible improvements obtained by using complex and different vibration patterns also applied to visually impaired users. The conducted experiments showed that complex vibration patterns reduced the time that VI subjects took to learn the icons’ locations, and that this reduction was more noticeable than the one observed for sighted subjects. This behavior suggests that the learning process is highly supported by the different vibrotactile feedback obtained. In addition, the icon location recognition rate was also highly improved when using different and complex vibration patterns, allowing VI subjects to obtain similar results to sighted subjects, who can also use their vision to learn the icon locations. This improvement was even more noticeable for some types of applications (those that are not usually employed by VI people). Considering the recognition rate as a function of the type of vibration pattern, the results are consistent with the previously stated results, and hence, the selected vibration patterns do not significantly affect the results. However, a deeper study of the distinguishability of the selected vibration patterns could be performed as future work.

Consequently, the use of complex and different vibration patterns associated with application icons is highly recommended for VI users, as it allows them to achieve a user experience very similar to that of sighted users.

5. Conclusions

In this paper, we proposed associating complex and different vibration patterns with different haptic icons on a smartphone, in contrast to the simple and equal vibrations used by commercial assistance applications. The experiments took the visual capability of the subjects into account in order to study whether the user experience of visually impaired users on smartphones could also be improved.

An Android smartphone application was developed to perform the experiments. The application consists of two stages: a learning stage where users memorize the locations of haptic icons and the associated vibrations, and a test stage where users recognize the locations of haptic icons using vibrotactile feedback. The application tracks the time taken by subjects to learn the locations of the haptic icons presented on the screen and the recognition rate of each haptic icon.
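The two measurements described above (learning time and per-icon recognition rate) can be sketched as follows. This is a minimal illustration in Python rather than the Android application itself; the `Session` class, its method names and the icon names are hypothetical and chosen only for this example.

```python
from typing import Dict, List, Tuple


class Session:
    """Measurements for one subject (hypothetical structure).

    learning_seconds: time spent in the learning stage.
    trials: (target_icon, selected_icon) pairs collected in the test stage.
    """

    def __init__(self, learning_seconds: float = 0.0) -> None:
        self.learning_seconds = learning_seconds
        self.trials: List[Tuple[str, str]] = []

    def record(self, target: str, selected: str) -> None:
        """Store one test-stage trial."""
        self.trials.append((target, selected))

    def recognition_rate(self) -> Dict[str, float]:
        """Fraction of correctly located trials for each target icon."""
        totals: Dict[str, int] = {}
        correct: Dict[str, int] = {}
        for target, selected in self.trials:
            totals[target] = totals.get(target, 0) + 1
            if selected == target:
                correct[target] = correct.get(target, 0) + 1
        return {icon: correct.get(icon, 0) / n for icon, n in totals.items()}

    def overall_rate(self) -> float:
        """Fraction of correctly located trials over all icons."""
        if not self.trials:
            return 0.0
        hits = sum(1 for target, selected in self.trials if target == selected)
        return hits / len(self.trials)


# Example with made-up data: "Mail" is located correctly once out of two trials.
session = Session(learning_seconds=42.5)
session.record("Mail", "Mail")
session.record("Mail", "Camera")
session.record("Camera", "Camera")
print(session.recognition_rate())  # {'Mail': 0.5, 'Camera': 1.0}
```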

The obtained results show that the use of different and complex vibration patterns associated with haptic icons reduces the time needed to learn the icons’ locations. This reduction is more noticeable for VI people. The recognition rate is also improved compared to the results obtained by using simple and equal vibration patterns. The improvement for VI users is so great that they achieved almost the same recognition rate as sighted users. This leads us to conclude that the use of complex vibration patterns improves the user experience, especially for VI people. It can also offer new possibilities for sighted people, who could easily navigate “non-visually” on their phone while it is in their pocket or in a backpack, or while they are looking at another screen.

For future work, we propose testing the EXT application on different smartphone models to determine whether the recognition rate changes, as not all smartphone models have the same vibration accuracy and capacity. Some users proposed adding a feature that lets them configure which vibration pattern is assigned to each application. A new experiment could then be performed to measure whether the recognition rate improves. Another improvement to the EXT application would be to store the user’s selection whenever a haptic icon is incorrectly located. This would provide information about which vibration patterns are confused with one another.
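The last proposal, storing the user’s selection on incorrect trials, amounts to building a confusion count between vibration patterns. A minimal sketch is given below, assuming that each trial is logged as a (target icon, selected icon) pair and that a hypothetical `pattern_of` table maps icons to pattern names; none of these names or data come from the EXT application.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def confusion_counts(
    trials: Iterable[Tuple[str, str]],
    pattern_of: Dict[str, str],
) -> Counter:
    """Count (target_pattern, selected_pattern) pairs over incorrect trials only."""
    counts: Counter = Counter()
    for target, selected in trials:
        if target != selected:
            counts[(pattern_of[target], pattern_of[selected])] += 1
    return counts


# Hypothetical icon-to-pattern table and made-up trial log.
pattern_of = {"Mail": "single_click", "Camera": "buzz_bump", "Music": "pulses"}
trials = [
    ("Mail", "Camera"),   # error: single_click taken for buzz_bump
    ("Mail", "Mail"),     # correct, ignored
    ("Mail", "Camera"),   # same error again
    ("Music", "Mail"),    # error: pulses taken for single_click
]

counts = confusion_counts(trials, pattern_of)
print(counts.most_common(1))  # [(('single_click', 'buzz_bump'), 2)]
```

Pairs with high counts would indicate the patterns that are hardest to tell apart and are therefore candidates for redesign.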

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/s21155024/s1, Audio S1: Vibration patterns.

Author Contributions

F.J.G.-C., investigation, formal analysis and writing—original draft; J.L.L.-R., software and investigation; P.M.G., conceptualization and investigation; A.D.-E., conceptualization, writing—review and editing, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research was part of the Spanish project “Interfaces de relación entre el entorno y las personas con discapacidad” (Relationship interfaces between the environment and people with disabilities) led by Technosite and funded by Centro para el Desarrollo Tecnológico Industrial (CDTI). Project code: 8.06/5.58.2941.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Ethics Committee of the University of Málaga (with number 561 and register code CEUMA: 87-2019-H) on 3 February 2020.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available for privacy reasons.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khan, A.; Khusro, S.; Alam, I. BlindSense: An Accessibility-inclusive Universal User Interface for Blind People. Eng. Technol. Appl. Sci. Res. 2018, 8, 2775–2784.

- Csapó, Á.; Wersényi, G.; Nagy, H.; Stockman, T. A survey of assistive technologies and applications for blind users on mobile platforms: A review and foundation for research. J. Multimodal User Interfaces 2015, 9, 275–286.

- Ahmed, F.; Borodin, Y.; Soviak, A.; Islam, M.; Ramakrishnan, I.; Hedgpeth, T. Accessible skimming: Faster screen reading of web pages. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology (UIST’12), Cambridge, MA, USA, 7–10 October 2012; pp. 367–378.

- Oliveira, J.; Guerreiro, T.; Nicolau, H.; Jorge, J.; Gonçalves, D. Blind people and mobile touch-based text-entry: Acknowledging the need for different flavors. In Proceedings of the 13th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS’11), Dundee, UK, 24–26 October 2011; pp. 179–186.

- Hu, B.; Ning, X. Effects of Touch Screen Interface Parameters on User Task Performance. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 820–824.

- Zhang, L.; Liu, Y. On methods of designing smartphone interface. In Proceedings of the 2010 IEEE International Conference on Software Engineering and Service Sciences (ICSESS), Beijing, China, 16–18 July 2010; pp. 584–587.

- Akkara, J.; Kuriakose, A. Smartphone apps for visually impaired persons. Kerala J. Ophthalmol. 2019, 31, 242.

- Kane, S.; Morris, M.; Zolyomi, A.; Wigdor, D.; Ladner, R.E.; Wobbrock, J. Access overlays: Improving non-visual access to large touch screens for blind users. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology (UIST’11), Santa Barbara, CA, USA, 16–19 October 2011; pp. 273–282.

- Karkar, A.; Al-Maadeed, S. Mobile Assistive Technologies for Visual Impaired Users: A Survey. In Proceedings of the 2018 International Conference on Computer and Applications (ICCA), Beirut, Lebanon, 25–26 July 2018; pp. 427–433.

- Khan, A.; Khusro, S. An insight into smartphone-based assistive solutions for visually impaired and blind people—Issues, challenges and opportunities. Univers. Access Inf. Soc. 2020, 19, 1–25.

- Khan, A.; Khusro, S. Blind-friendly user interfaces—A pilot study on improving the accessibility of touchscreen interfaces. Multimed. Tools Appl. 2019, 78, 17495–17519.

- Rodrigues, A.; Nicolau, H.; Montague, K.; Guerreiro, J.; Guerreiro, T. Open Challenges of Blind People Using Smartphones. Int. J. Hum. Comput. Interact. 2020, 36, 1605–1622.

- Zhang, D.; Zhou, L.; Uchidiuno, J.; Kilic, I.D. Personalized Assistive Web for Improving Mobile Web Browsing and Accessibility for Visually Impaired Users. ACM Trans. Access Comput. 2017, 10, 1–22.

- Apple. Haptics: User Interaction. 2019. Available online: https://developer.apple.com/design/human-interface-guidelines/ios/user-interaction/haptics/ (accessed on 14 September 2020).

- Chen, C.; Ganov, S.; Raman, T.V. TalkBack: An Open Source Screenreader for Android. 2009. Available online: https://opensource.googleblog.com/2009/10/talkback-open-source-screenreader-for.html (accessed on 14 September 2020).

- Khusro, S.; Niazi, B.; Khan, A.; Alam, I. Evaluating smartphone screen divisions for designing blind-friendly touch-based interfaces. In Proceedings of the 2019 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 16–18 December 2019; pp. 328–333.

- Vidal, S.; Lefebvre, G. Gesture based interaction for visually-impaired people. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries (NordiCHI), New York, NY, USA, 16–20 October 2010; pp. 809–812.

- Jeon, M.; Walker, B. Spindex (Speech Index) Improves Auditory Menu Acceptance and Navigation Performance. TACCESS 2011, 3, 10.

- Yalla, P.; Walker, B.N. Advanced auditory menus: Design and evaluation of auditory scroll bars. In Proceedings of the 10th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS’08), Halifax, NS, Canada, 13–15 October 2008; pp. 105–112.

- Zhao, S.; Dragicevic, P.; Chignell, M.; Balakrishnan, R.; Baudisch, P. Earpod: Eyes-free menu selection using touch input and reactive audio feedback. In Proceedings of the Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 1395–1404.

- Oh, U.; Kane, S.; Findlater, L. Follow that sound: Using sonification and corrective verbal feedback to teach touchscreen gestures. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS), Bellevue, WA, USA, 21–23 October 2013.

- Buzzi, M.C.; Buzzi, M.; Leporini, B.; Paratore, M.T. Vibro-tactile enrichment improves blind user interaction with mobile touchscreens. In Proceedings of the 14th IFIP Conference on Human-Computer Interaction, Cape Town, South Africa, 2–6 September 2013; pp. 641–648.

- Choi, S.; Kuchenbecker, K. Vibrotactile Display: Perception, Technology, and Applications. Proc. IEEE 2013, 101, 2093–2104.

- Brewster, S.; Chohan, F.; Brown, L. Tactile feedback for mobile interactions. In Proceedings of the Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 159–162.

- Poupyrev, I.; Maruyama, S.; Rekimoto, J. Ambient touch: Designing tactile interfaces for handheld devices. In Proceedings of the ACM Symposium on UIST (User Interface Software and Technology), Paris, France, 27–30 October 2002; pp. 51–60.

- Yatani, K.; Truong, K. SemFeel: A user interface with semantic tactile feedback for mobile touch-screen devices. In Proceedings of the 22nd Annual ACM Symposium on User Interface Software and Technology, Victoria, BC, Canada, 4–7 October 2009; pp. 111–120.

- Grussenmeyer, W.; Folmer, E. Accessible touchscreen technology for people with visual impairments: A survey. ACM Trans. Access Comput. 2017, 9, 1–31.

- María Galdón, P.; Ignacio Madrid, R.; De La Rubia-Cuestas, E.J.; Diaz-Estrella, A.; Gonzalez, L. Enhancing mobile phones for people with visual impairments through haptic icons: The effect of learning processes. Assist. Technol. 2013, 25, 80–87.

- González-Cañete, F.J.; Rodríguez, J.L.L.; Galdón, P.M.; Díaz-Estrella, A. Improvements in the learnability of smartphone haptic interfaces for visually impaired users. PLoS ONE 2019, 14, e0225053.

- Kokkonis, G.; Minopoulos, G.; Psannis, K.E.; Ishibashi, Y. Evaluating Vibration Patterns in HTML5 for Smartphone Haptic Applications. In Proceedings of the 2019 2nd World Symposium on Communication Engineering (WSCE), Nagoya, Japan, 20–23 December 2019; pp. 122–126.

- Pielot, M.; Hesselmann, T.; Heuten, W.; Kazakova, A.; Boll, S. PocketMenu: Non-visual menus for touch screen devices. In Proceedings of the 14th International Conference on Human Computer Interaction with Mobile Devices and Services (MobileHCI’12), San Francisco, CA, USA, 21–24 September 2012; pp. 327–330.

- Giudice, N.; Palani, H.; Brenner, E.; Kramer, K. Learning non-visual graphical information using a touch-based vibro-audio interface. In Proceedings of the 14th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS’12), Boulder, CO, USA, 22–24 October 2012.

- Magnusson, C.; Molina, M.; Rassmus-Gröhn, K.; Szymczak, D. Pointing for non-visual orientation and navigation. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries (NordiCHI), Reykjavik, Iceland, 16–20 October 2010; pp. 735–738.

- Immersion. Immersion Releases Haptic Muse Effect Preview App for Android Game Developers. 2013. Available online: https://ir.immersion.com/news-releases/news-release-details/immersion-releases-haptic-muse-effect-preview-app-android-game (accessed on 15 September 2020).

- Immersion. Immersion SDK for Mobile Haptics. 2016. Available online: https://www.immersion.com/meet-immersions-new-haptic-sdk-mobile-apps/ (accessed on 6 July 2021).

- Tullis, T.; Albert, W. Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics; Morgan Kaufmann Publisher: Burlington, MA, USA, 2013.

- Swerdfeger, B.; Fernquist, J. Exploring melodic variance in rhythmic haptic stimulus design. In Proceedings of the Graphics Interface, Kelowna, BC, Canada, 25–27 May 2009; pp. 133–140.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).