Reasons and Strategies for Privacy Features in Tracking and Tracing Systems—A Systematic Literature Review

Abstract

1. Introduction

- Evaluating existing ATTS against established evaluation guidelines [3] to highlight their pros and cons concerning the privacy features of the systems.

- Discussing in detail the different guidelines and standards that can be applied to indoor localization systems, with a special focus on privacy.

- Outlining the pros and cons of different frameworks and highlighting their suitability and challenges for employee tracking.

- Presenting challenges and opportunities for future research and discussions at the end of the paper.

2. Relevance & Background

2.1. Indoor Positioning Systems and Asset Tracking

Asset Tracking and Tracing Systems (ATTS)

2.2. Privacy by Design in the Working Environment

2.3. Related Work

3. Research Method

- RQ1: What are the most common reasons to implement privacy features in ATTS?

- RQ2: Which approaches exist to evaluate the privacy of users in ATTS?

- RQ3: What are the most frequently used strategies for implementing privacy features in ATTS?

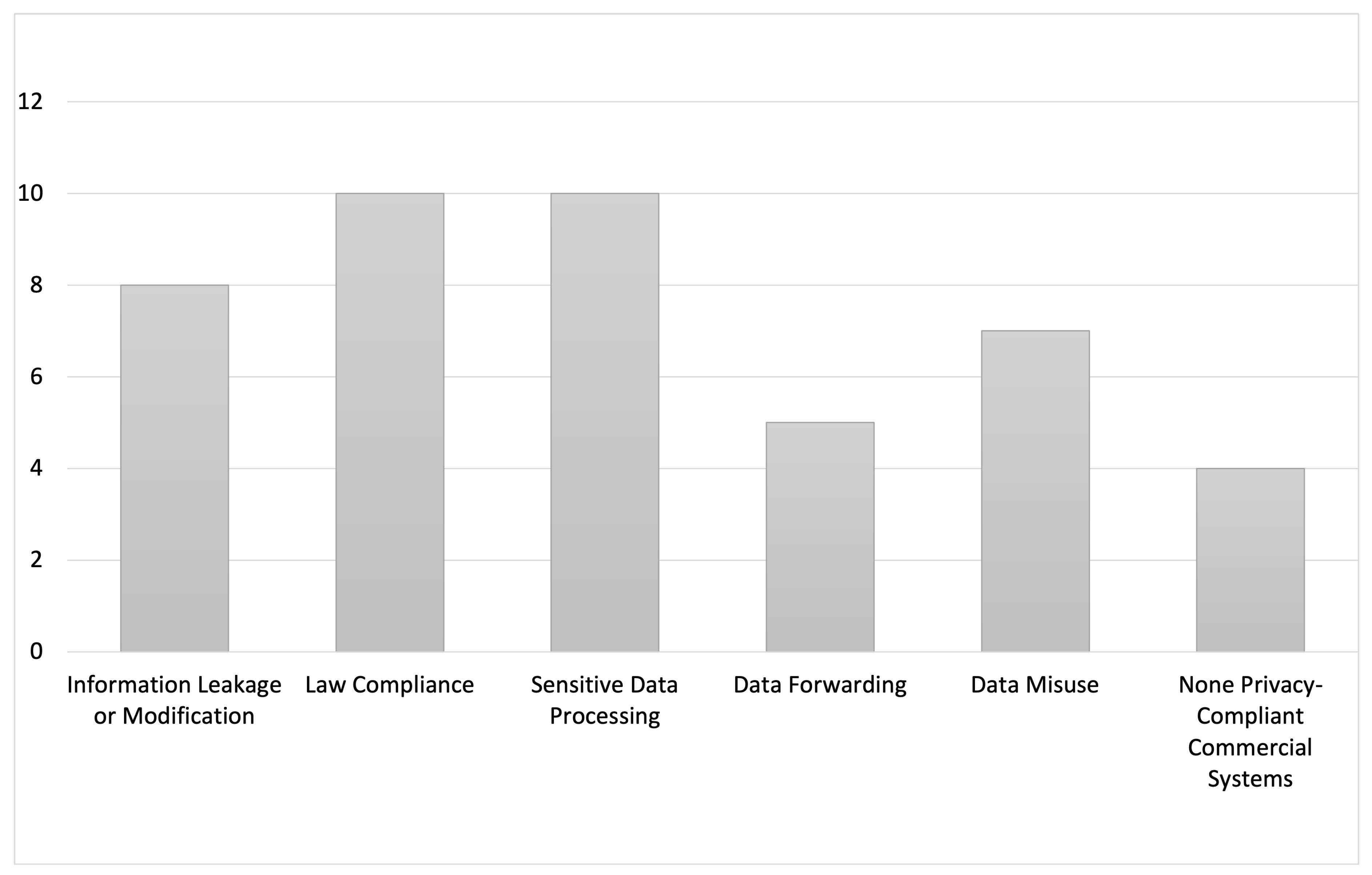

4. Reason and Need for Privacy Features in Tracking Systems

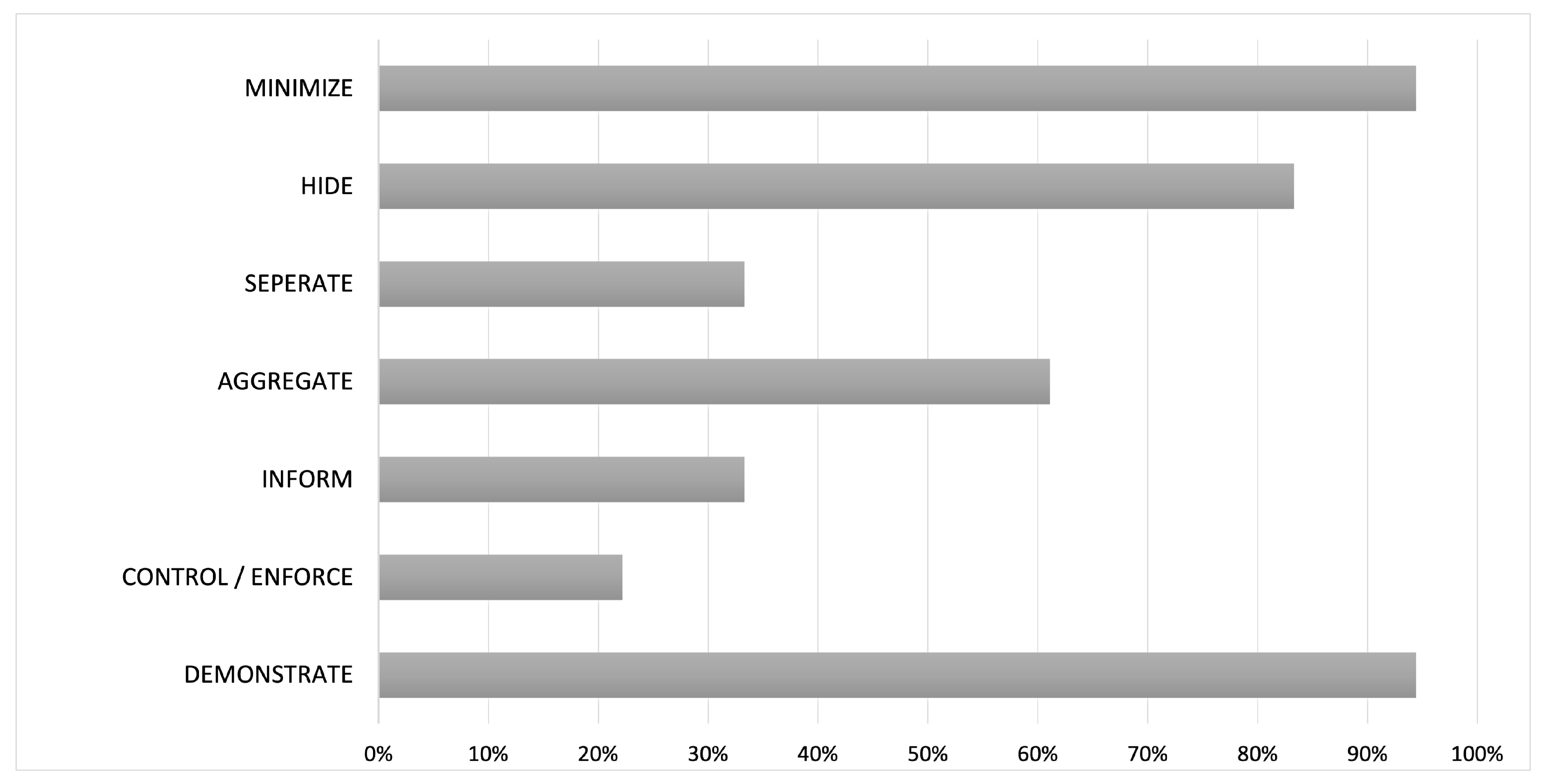

5. Privacy Strategies

5.1. Data-Oriented Strategies

5.1.1. Strategy 1—MINIMIZE

5.1.2. Strategy 2—HIDE

5.1.3. Strategy 3—SEPARATE

5.1.4. Strategy 4—AGGREGATE

5.2. Process-Oriented Strategies

5.2.1. Strategy 5—INFORM

5.2.2. Strategy 6—CONTROL

5.2.3. Strategy 7—ENFORCE

- Create: respect the value of privacy and decide on policies that reflect that value [64].

- Maintain: respect the policies when designing or modifying features, especially after the policies have been updated to better protect personal information [65].

- Uphold: ensure compliance with these policies, treating personal information as a valuable asset and privacy as a critical goal to be incentivized [66].
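As a minimal illustration of the Uphold step, the sketch below enforces one hypothetical policy, a maximum retention period for location records, by discarding records older than the configured limit. The record fields (`tag_id`, `zone`, `timestamp`), the `RetentionPolicy` type and the seven-day limit are assumptions made for this example, not part of any system reviewed here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class LocationRecord:
    # Hypothetical record layout for a single position fix.
    tag_id: str          # pseudonymous tag identifier
    zone: str            # coarse location label
    timestamp: datetime  # time of the fix (timezone-aware)


@dataclass(frozen=True)
class RetentionPolicy:
    # Hypothetical policy parameter: how long records may be kept.
    max_age: timedelta


def enforce_retention(records, policy, now):
    """Keep only records strictly younger than policy.max_age.

    Records at or beyond the cutoff are dropped; a real system would
    delete them from storage rather than merely filter them out.
    """
    cutoff = now - policy.max_age
    return [r for r in records if r.timestamp > cutoff]


# Usage: a two-hour-old record is kept, a ten-day-old record is dropped.
now = datetime(2021, 5, 1, 12, 0, tzinfo=timezone.utc)
records = [
    LocationRecord("t1", "hall-A", now - timedelta(hours=2)),
    LocationRecord("t2", "hall-B", now - timedelta(days=10)),
]
kept = enforce_retention(records, RetentionPolicy(max_age=timedelta(days=7)), now)
print([r.tag_id for r in kept])  # ['t1']
```

Running such a check periodically, and again whenever the policy changes, would be one way to tie the Maintain and Uphold steps together.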

5.2.4. Strategy 8—DEMONSTRATE

- Information disclosure means that data subjects should be informed precisely about the acquisition, collection, storage and processing of their personal data.

- A privacy-preserving ATTS should log all events where personal information is gathered, stored, processed or disseminated.

- A systematic and independent examination of logs, procedures, processes, software and hardware specifications should be performed.

- Releasing the system as open source is one form of compliance demonstration.

- Data flow diagrams make it possible for interested parties to see the flows of data within an IoT application.

- Certification by a neutral institution increases the trustworthiness of IoT applications.

- To demonstrate privacy protection, a good practice is to use industry-wide standards that have privacy protection capabilities built in.

- Depending on the country and region, different guidelines, laws and regulations must be observed for IoT applications.

- Openness, transparency and notice: the data controller should disclose to data subjects the choices it offers for accessing, correcting and removing their information.

- Information security: access to personal data shall be granted only to those who need it to perform their duties.

- Privacy compliance: applicable laws may designate supervisory authorities that are responsible for monitoring compliance with data protection law [54].
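The logging and auditing points above can be made concrete with a small sketch: an append-only log that records each event in which personal information is gathered, stored, processed or disseminated, and chains every entry to the previous one with a SHA-256 hash so that later modification can be detected during an audit. The hash chaining, the field names and the example actors are assumptions for illustration; none of the reviewed systems is claimed to work this way.

```python
import hashlib
import json

# The four event types named in the logging guideline.
EVENT_TYPES = {"gathered", "stored", "processed", "disseminated"}
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    # Hash a canonical JSON encoding so that key order does not matter.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of personal-data events (sketch)."""

    def __init__(self):
        self.entries = []

    def record(self, event, actor, subject, purpose):
        if event not in EVENT_TYPES:
            raise ValueError(f"unknown event type: {event}")
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"event": event, "actor": actor, "subject": subject,
                "purpose": purpose, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Return True if the chain is intact.

        Detects edited entries, reordering and deletion of non-final
        entries; silently dropping the newest entries is not detected.
        """
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


# Usage: an intact log verifies; editing an earlier entry breaks the chain.
log = AuditLog()
log.record("gathered", "gateway-1", "tag-42", "zone occupancy")
log.record("processed", "analytics", "tag-42", "hourly aggregation")
print(log.verify())  # True
log.entries[0]["purpose"] = "performance review"
print(log.verify())  # False
```

To also detect truncation, the latest hash would need to be stored or published outside the log, for example with the independent auditor.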

6. Discussion

6.1. Research Questions

- What are the most common reasons to implement privacy features in ATTS?

- Which approaches exist to evaluate the privacy of users in ATTS?

- What are the most frequently used strategies for implementing privacy features in ATTS?

6.2. Limitations

6.3. Future Perspectives

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Abad, E.; Palacio, F.; Nuin, M.; Zárate, A.G.D.; Juarros, A.; Gómez, J.; Marco, S. RFID smart tag for traceability and cold chain monitoring of foods: Demonstration in an intercontinental fresh fish logistic chain. J. Food Eng. 2009, 93, 394–399.

- Schlund, S.; Pokorni, B. Industrie 4.0—Wo Steht Die Revolution der Arbeitsgestaltung? Ingenics AG: Ulm, Germany, 2016.

- Perera, C.; McCormick, C.; Bandara, A.K.; Price, B.A.; Nuseibeh, B. Privacy-by-Design Framework for Assessing Internet of Things Applications and Platforms. In Proceedings of the 6th International Conference on the Internet of Things, Stuttgart, Germany, 7–9 November 2016; pp. 83–92.

- Kaplan, E.; Hegarty, C. Understanding GPS: Principles and Applications; Artech House: London, UK, 2005.

- Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of Wireless Indoor Positioning Techniques and Systems. IEEE Trans. Syst. Man Cybern. C 2007, 37, 1067–1080.

- Zhang, Z.; Mehmood, A.; Shu, L.; Huo, Z.; Zhang, Y.; Mukherjee, M. A Survey on Fault Diagnosis in Wireless Sensor Networks. IEEE Access 2018, 6, 11349–11364.

- Jandl, C.; Nurgazina, J.; Schöffer, L.; Reichl, C.; Wagner, M.; Moser, T. SensiTrack–A Privacy by Design Concept for industrial IoT Applications. In Proceedings of the 2019 24th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Zaragoza, Spain, 10–13 September 2019.

- Pešić, S.; Tošić, M.; Iković, O.; Radovanović, M.; Ivanović, M.; Bošković, D. Bluetooth Low Energy Microlocation Asset Tracking (BLEMAT) in a Context-Aware Fog Computing System. In Proceedings of the 8th International Conference on Web Intelligence, Mining and Semantics, Novi Sad, Serbia, 25–27 June 2018; pp. 1–11.

- Pavithra, T.; Sreenivasa Ravi, K. Anti-Loss Key Tag Using Bluetooth Smart. Indian J. Sci. Technol. 2017, 10.

- Salman, A.; El-Tawab, S.; Yorio, Z.; Hilal, A. Indoor Localization Using 802.11 WiFi and IoT Edge Nodes. In Proceedings of the 2018 IEEE Global Conference on Internet of Things (GCIoT), Alexandria, Egypt, 5–7 December 2018; pp. 1–5.

- Fadzilla, M.A.; Harun, A.; Shahriman, A.B. Wireless Signal Propagation Study in an Experiment Building for Optimized Wireless Asset Tracking System Development. In Proceedings of the 2018 International Conference on Computational Approach in Smart Systems Design and Applications (ICASSDA), Kuching, Malaysia, 15–17 August 2018; pp. 1–5.

- Moniem, S.A.; Taha, S.; Hamza, H.S. An anonymous mutual authentication scheme for healthcare RFID systems. In Proceedings of the 2017 IEEE SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI, San Francisco, CA, USA, 4–8 August 2017; pp. 1–6.

- Zafari, F.; Gkelias, A.; Leung, K.K. A Survey of Indoor Localization Systems and Technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Alkhawaja, F.; Jaradat, M.; Romdhane, L. Techniques of Indoor Positioning Systems (IPS): A Survey. In Proceedings of the 2019 Advances in Science and Engineering Technology International Conferences (ASET), Dubai, United Arab Emirates, 26 March–11 April 2019; pp. 1–8.

- Ziegeldorf, J.H.; Morchon, O.G.; Wehrle, K. Privacy in the Internet of Things: Threats and challenges. Secur. Commun. Netw. 2014, 7, 2728–2742.

- Martin, J.; Mayberry, T.; Donahue, C.; Foppe, L.; Brown, L.; Riggins, C.; Rye, E.C.; Brown, D. A Study of MAC Address Randomization in Mobile Devices and When it Fails. Proc. Priv. Enhancing Technol. 2017, 2017, 365–383.

- Becker, J.K.; Li, D.; Starobinski, D. Tracking Anonymized Bluetooth Devices. Proc. Priv. Enhancing Technol. 2019, 2019.

- Mautz, R. Indoor Positioning Technologies; ETH Zurich: Zurich, Switzerland, 2012.

- Zhu, H.; Tsang, K.F.; Liu, Y.; Wei, Y.; Wang, H.; Wu, C.K.; Chi, H.R. Extreme RSS Based Indoor Localization for LoRaWAN With Boundary Autocorrelation. IEEE Trans. Ind. Inform. 2021, 17, 4458–4468.

- Heinrich, C.E. Real World Awareness (RWA) —Nutzen von RFID und anderen RWA-Technologien. In Herausforderungen in der Wirtschaftsinformatik; Springer: Berlin/Heidelberg, Germany, 2006; pp. 157–161.

- Ahmad, S.; Lu, R.; Ziaullah, M. Bluetooth an Optimal Solution for Personal Asset Tracking: A Comparison of Bluetooth, RFID and Miscellaneous Anti-lost Traking Technologies. Int. J. Serv. Sci. Technol. 2015, 8, 179–188.

- Langheinrich, M. Privacy by Design—Principles of Privacy-Aware Ubiquitous Systems; Springer: Berlin/Heidelberg, Germany, 2001.

- Regard, H. Recommendation of the Council Concerning Guidelines Governing the Protection of Privacy and Transborder Flows of Personal Data; OECD Guidelines. 2013. Available online: https://www.oecd.org/sti/ieconomy/2013-oecd-privacy-guidelines.pdf (accessed on 10 April 2021).

- Cavoukian, A. Privacy by Design: The 7 Foundational Principles; Information and Privacy Commissioner: Toronto, ON, Canada, 2009; Volume 5.

- Custers, B.; Ursic, H. Worker Privacy in a digitalized World under European Law. Comp. Labour Law Policy J. Forthcom. 2018, 39, 22.

- Wu, H.; Hung, T.; Wang, S.; Wang, J. Development of a shoe-based dementia patient tracking and rescue system. In Proceedings of the 2018 IEEE International Conference on Applied System Invention (ICASI), Chiba, Japan, 13–17 April 2018; pp. 885–887.

- Wang, R.R.; Ye, R.R.; Xu, C.F.; Wang, J.Z.; Xue, A.K. Hardware design of a localization system for staff in high-risk manufacturing areas. J. Zhejiang Univ. Sci. 2013, 14, 1–10.

- Schauer, L. Analyzing the Digital Society by Tracking Mobile Customer Devices. In Digital Marketplaces Unleashed; Springer: Berlin/Heidelberg, Germany, 2018; pp. 467–478.

- Aleisa, N.; Renaud, K. Privacy of the Internet of Things: A Systematic Literature Review. In Proceedings of the 50th Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA, 4–7 January 2017; p. 10.

- Kumar, J.S.; Patel, D.R. A Survey on Internet of Things: Security and Privacy Issues. Int. J. Comput. Appl. 2014.

- Rushanan, M.; Rubin, A.D.; Kune, D.F.; Swanson, C.M. SoK: Security and Privacy in Implantable Medical Devices and Body Area Networks. In Proceedings of the 2014 IEEE Symposium on Security and Privacy, Berkeley, CA, USA, 18–21 May 2014; pp. 524–539.

- Hong, Y.; Vaidya, J.; Wang, S. A Survey of Privacy-Aware Supply Chain Collaboration: From Theory to Applications. J. Inf. Syst. 2014, 28, 243–268.

- Loukil, F.; Ghedira-Guegan, C.; Benharkat, A.N.; Boukadi, K.; Maamar, Z. Privacy-Aware in the IoT Applications: A Systematic Literature Review. In Proceedings of the On the Move to Meaningful Internet Systems. OTM 2017 Conference, Rhodes, Greece, 23–27 October 2017; Volume 10573, pp. 552–569.

- Lin, J.; Yu, W.; Zhang, N.; Yang, X.; Zhang, H.; Zhao, W. A Survey on Internet of Things: Architecture, Enabling Technologies, Security and Privacy, and Applications. IEEE Internet Things J. 2017, 4, 1125–1142.

- Kitchenham, B.; Charters, S. Guidelines for performing Systematic Literature Reviews in Software Engineering. 2007. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 15 April 2021).

- Hoepman, J.H. Privacy Design Strategies. In ICT Systems Security and Privacy Protection; Springer: Berlin/Heidelberg, Germany, 2014; Volume 428, pp. 446–459.

- Buccafurri, F.; Lax, G.; Nicolazzo, S.; Nocera, A. A Privacy-Preserving Solution for Tracking People in Critical Environments. In Proceedings of the 2014 IEEE 38th International Computer Software and Applications Conference Workshops, Vasteras, Sweden, 21–25 July 2014; pp. 146–151.

- Baslyman, M.; Rezaee, R.; Amyot, D.; Mouttham, A.; Chreyh, R.; Geiger, G.; Stewart, A.; Sader, S. Real-time and location-based hand hygiene monitoring and notification. Pers. Ubiquitous Comput. 2015, 19, 667–688.

- Stone, S. Design and Implementation of an Automatic Staff Availability Tracking System. In Proceedings of the 2015 International Conference on Computing, Communication and Security (ICCCS), Pointe aux Piments, Mauritius, 4–5 December 2015.

- Kim, J.; Jang, J.J.; Jung, I.Y. Near Real-Time Tracking of IoT Device Users. In Proceedings of the 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Chicago, IL, USA, 23–27 May 2016; pp. 1085–1088.

- Martin, P.D.; Rushanan, M.; Tantillo, T.; Lehmann, C.U.; Rubin, A.D. Applications of Secure Location Sensing in Healthcare. In Proceedings of the 7th ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics, Seattle, WA, USA, 2–5 October 2016; pp. 58–67.

- Fernández-Ares, A.; Mora, A.; Arenas, M.; García-Sanchez, P.; Romero, G.; Rivas, V.; Castillo, P.; Merelo, J. Studying real traffic and mobility scenarios for a Smart City using a new monitoring and tracking system. Future Gener. Comput. Syst. 2016, 76, 163–179.

- Rahman, F.; Bhuiyan, M.Z.A.; Ahamed, S.I. A privacy preserving framework for RFID based healthcare systems. Future Gener. Comput. Syst. 2017, 72, 339–352.

- Ziegeldorf, J.H.; Henze, M.; Bavendiek, J.; Wehrle, K. TraceMixer: Privacy-preserving crowd-sensing sans trusted third party. In Proceedings of the 2017 13th Annual Conference on Wireless On-demand Network Systems and Services (WONS), Jackson, WY, USA, 21–24 February 2017; pp. 17–24.

- Ashur, T.; Delvaux, J.; Lee, S.; Maene, P.; Marin, E.; Nikova, S.; Reparaz, O.; Rožić, V.; Singelée, D.; Yang, B.; et al. A Privacy-Preserving Device Tracking System Using a Low-Power Wide-Area Network. In Cryptology and Network Security; Capkun, S., Chow, S.S.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11261, pp. 347–369.

- Hepp, T.; Wortner, P.; Schönhals, A.; Gipp, B. Securing Physical Assets on the Blockchain: Linking a novel Object Identification Concept with Distributed Ledgers. In Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services, Munich, Germany, 15 June 2018; pp. 60–65.

- Anandhi, S.; Anitha, R.; Sureshkumar, V. IoT Enabled RFID Authentication and Secure Object Tracking System for Smart Logistics. Wirel. Pers. Commun. 2019, 104, 543–560.

- Maouchi, M.E.; Ersoy, O.; Erkin, Z. DECOUPLES: A decentralized, unlinkable and privacy-preserving traceability system for the supply chain. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, Limassol, Cyprus, 8–12 April 2019; pp. 364–373.

- Buccafurri, F.; Lax, G.; Nicolazzo, S.; Nocera, A. A Privacy-Preserving Localization Service for Assisted Living Facilities. IEEE Trans. Serv. Comput. 2020, 13, 16–29.

- Faramondi, L.; Bragatto, P.; Fioravanti, C.; Gnoni, M.G.; Guarino, S.; Setola, R. A Privacy-Oriented Solution for the Improvement of Workers Safety. In Proceedings of the 43rd International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 28 September–2 October 2020; pp. 1789–1794.

- Zhou, S.; Zhang, Z.; Luo, Z.; Wong, E.C. A lightweight anti-desynchronization RFID authentication protocol. Inf. Syst. Front. 2010, 12, 521–528.

- Mitrokotsa, A.; Rieback, M.R.; Tanenbaum, A.S. Classifying RFID attacks and defenses. Inf. Syst. Front. 2010, 12, 491–505.

- Backhaus, N. Review zur Wirkung elektronischer Überwachung am Arbeitsplatz und Gestaltung kontextsensitiver Assistenzsysteme; BAuA: Dortmund, Germany, 2018.

- International Organization for Standardization. ISO: Information Technology Security Techniques Privacy Framework; International Organization for Standardization: Geneva, Switzerland, 2011.

- Colesky, M.; Hoepman, J.; Hillen, C. A Critical Analysis of Privacy Design Strategies. In Proceedings of the 2016 IEEE Security and Privacy Workshops (SPW), San Jose, CA, USA, 22–26 May 2016; pp. 33–40.

- Pfitzmann, A.; Hansen, M. Anonymity, Unlinkability, Unobservability, Pseudonymity, and Identity Management—A Consolidated Proposal for Terminology. 2005. Available online: https://dud.inf.tu-dresden.de/literatur/Anon_Terminology_v0.28.pdf (accessed on 22 April 2021).

- Sweeney, L. k-Anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002, 10, 557–570.

- Fan, K.; Jiang, W.; Li, H.; Yang, Y. Lightweight RFID Protocol for Medical Privacy Protection in IoT. IEEE Trans. Ind. Inform. 2018, 14, 1656–1665.

- Duckham, M.; Kulik, L. A Formal Model of Obfuscation and Negotiation for Location Privacy. In Pervasive Computing; Springer: Berlin/Heidelberg, Germany, 2005; pp. 152–170.

- Góes, R.M.; Rawlings, B.C.; Recker, N.; Willett, G.; Lafortune, S. Demonstration of Indoor Location Privacy Enforcement using Obfuscation. IFAC-PapersOnline 2018, 51, 145–151.

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the internet of things. In Proceedings of the ACM SIGCOMM 2012 Conference, Helsinki, Finland, 17 August 2012; p. 3.

- Beresford, A.; Stajano, F. Location privacy in pervasive computing. IEEE Pervasive Comput. 2003, 2, 46–55.

- Peguera, M. Monograph “VII International Conference on Internet, Law & Politics. Net Neutrality and other challenges for the future of the Internet”. Rev. Internet Derecho PolíT 2012, 21.

- Baraki, H.; Geihs, K.; Hoffmann, A.; Voigtmann, C.; Kniewel, R.; Macek, B.E.; Zirfas, J. Towards Interdisciplinary Design Patterns for Ubiquitous Computing Applications; Kassel University Press GmbH: Kassel, Germany, 2014; p. 46.

- Kelbert, F.; Pretschner, A. Towards a policy enforcement infrastructure for distributed usage control. In Proceedings of the 17th ACM Symposium on Access Control Models and Technologies, Newark, NJ, USA, 20–22 June 2012; p. 119.

- Iachello, G.; Hong, J. End-User Privacy in Human-Computer Interaction. FNT Hum. Comput. Interact. 2007, 1, 1–137.

- Perera, C.; Barhamgi, M.; Bandara, A.K.; Ajmal, M.; Price, B.; Nuseibeh, B. Designing privacy-aware internet of things applications. Inf. Sci. 2020, 512, 238–257.

- Hong, J.I.; Ng, J.D.; Lederer, S.; Landay, J.A. Privacy Risk Models for Designing Privacy-Sensitive Ubiquitous Computing Systems. In Proceedings of the 5th Conference on Designing Interactive Systems: Processes, Practices, Methods, and Techniques, Cambridge, MA, USA, 1–4 August 2004; pp. 91–100.

- Holcer, S.; Torres-Sospedra, J.; Gould, M.; Remolar, I. Privacy in Indoor Positioning Systems: A Systematic Review. In Proceedings of the 2020 International Conference on Localization and GNSS (ICL-GNSS), Tampere, Finland, 2–4 June 2020; pp. 1–6.

| Radio Standard | Indoor Range | Power Consumption | Location Accuracy | Privacy Risks | Advantages | Disadvantages |
|---|---|---|---|---|---|---|
| WiFi | 35 m | moderate | m [14] | Smartphones or fitness wristbands with activated WiFi could also be tracked [15,16] | Widely available, high accuracy, no extra hardware | Prone to noise, requires complex processing algorithms |
| UWB | 10–20 m | moderate | cm–m [14] | Low risk because of separate hardware | Immune to interference, very high accuracy | Shorter range, requires specific hardware, high costs |
| RFID | 200 m | low | dm–m [14] | Employees' smartcards could be tracked [15,17] | Low power, different range options (active, passive) | Low localization accuracy |
| Bluetooth | 100 m | low | 5–10 m [18] | Smartphones or fitness wristbands with activated Bluetooth could also be tracked [15] | High throughput, reception range, low energy consumption | Low localization accuracy, prone to noise |
| LoRa | 500 m | extremely low | 10–20 m [19] | Low risk because of separate hardware | Wide reception range, low energy consumption | Long distance between base station and device, severe outdoor-to-indoor signal attenuation due to building walls |

| Inclusion Criteria | Exclusion Criteria |
|---|---|

| Author | Year | Publication Type | Conference/Journal | Publisher | Technology |
|---|---|---|---|---|---|
| Buccafurri et al. | 2014 | Conference | Int. Computer Software and Applications Conf. | IEEE | RFID |
| Baslyman et al. | 2015 | Journal | Personal and Ubiquitous Computing | Springer Link | Wifi |
| Stone and Spies | 2015 | Conference | Int. Conf. on Computing, Communication and Security (ICCCS) | IEEE | Presence Sensors |
| Kim et al. | 2016 | Conference | Int. Parallel and Distributed Processing Symp. | IEEE | Wifi |
| Martin et al. | 2016 | Conference | Int. Conf. on Bioinformatics, Computational Biology, and Health Informatics | ACM | Bluetooth |
| Fernandez-Ares et al. | 2016 | Journal | Future Generation Computer Systems | Science Direct | Wifi, Bluetooth |
| Moniem et al. | 2017 | Conference | Ubiquitous, Autonomic and Trusted Computing, UIC-ATC | IEEE | RFID |
| Rahman et al. | 2017 | Journal | Future Generation Computer Systems | Science Direct | RFID |
| Ziegeldorf et al. | 2017 | Conference | Conf. on Wireless On-demand Network Systems and Services (WONS) | IEEE | Wifi/Bluetooth |
| Ashur et al. | 2018 | Conference | Int. Conf. on Cryptology and Network Security | Springer Link | LoRa, Bluetooth |
| Hepp et al. | 2018 | Conference | Workshop on Cryptocurrencies and Blockchains for Distributed Systems | ACM | Blockchain, RFID |
| Pešić et al. | 2018 | Conference | Int. Conf. on Web Intelligence, Mining and Semantics | ACM | Bluetooth |
| Salman et al. | 2018 | Conference | Glob. Conf. on Internet of Things (GCIoT) | IEEE | Wifi |
| Anandhi et al. | 2019 | Journal | Wireless Personal Communications | Springer Link | RFID |
| Jandl et al. | 2019 | Conference | Int. Conf. on emerging tech. and factory automation | IEEE | Bluetooth |
| Maouchi et al. | 2019 | Conference | Symp. on Applied Computing | ACM | Blockchain |
| Buccafurri et al. | 2020 | Journal | Trans. on Services Computing | IEEE | RFID |
| Faramondi et al. | 2020 | Conference | Int. Convention on Information, Communication and Electronic Technology | IEEE | Wifi, Bluetooth |

Legend (symbols for the Tracked Items and Application Domain rows): Objects, Persons, Healthcare, University, Smart Home, Industry, Logistics.

| | [37] | [38] | [39] | [40] | [41] | [42] | [12] | [43] | [44] | [45] | [46] | [8] | [10] | [47] | [7] | [48] | [49] | [50] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Tracked Items |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Tracking Technology | RFID | WiFi | - | WiFi | BT | WiFi/BT | RFID | RFID | WiFi/BT | BT | Blockchain | BT | WiFi | RFID | BT | RFID | RFID | WiFi/BT |
| Application Domain |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Reasons for Privacy Features | ||||||||||||||||||
| Leakage or Modification | - | ✓ | - | - | ✓ | - | ✓ | ✓ | - | ✓ | ✓ | ✓ | - | ✓ | - | - | - | - |
| Law Compliance | ✓ | ✓ | ✓ | - | - | ✓ | - | - | ✓ | - | - | - | ✓ | - | ✓ | ✓ | ✓ | ✓ |
| Sensitive Data Processing | - | - | - | - | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | - | ✓ | - | - | ✓ | - | - | ✓ |
| Data Forwarding | - | - | - | - | - | - | - | ✓ | ✓ | - | ✓ | ✓ | - | - | - | ✓ | - | - |
| Data Misuse | - | - | ✓ | - | ✓ | - | - | ✓ | ✓ | - | ✓ | - | - | - | ✓ | - | - | ✓ |
| Non-Privacy Comm. System | ✓ | ✓ | - | - | - | - | - | - | - | - | - | - | - | - | ✓ | - | ✓ | - |

| | [37] | [38] | [39] | [40] | [41] | [42] | [12] | [43] | [44] | [45] | [46] | [8] | [10] | [47] | [7] | [48] | [49] | [50] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MINIMIZE | ||||||||||||||||||
| Minimize Data Acquisition | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓ | ✓✓ | - | ✓✓ | - | ✓✓ | ✓ | ✓✓ | ✓✓ |
| Minimize Number of Data Sources | ✓ | ✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✗ | ✓ | ✓ | ✓✓ | - | ✓✓ | ✗ |
| Minimize Raw Data Intake | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | - | ✓✓ | - | ✓✓ | ✓✓ | - | ✓✓ | - | ✓✓ | - | ✓✓ | ✓✓ |
| Minimize Knowledge Discovery | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✗ | ✓✓ | ✓✓ | ✓✓ | - | ✓✓ | ✓✓ |
| Minimize Data Storage | - | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓ | ✓✓ | - | - | ✓✓ | ✓✓ | ✓✓ | ✓ | ✗ | ✓ | - | - | ✓✓ |
| Minimize Data Retention Period | - | ✓✓ | ✓ | - | ✗ | ✗ | ✓✓ | ✗ | ✗ | ✓ | ✗ | - | ✓✓ | ✗ | ✓ | ✗ | - | ✓✓ |
| Query Answering | - | ✗ | ✓✓ | ✓✓ | ✓✓ | ✓ | - | ✓✓ | ✓✓ | - | - | - | - | - | - | - | ✓✓ | ✓✓ |
| Repeated Query Blocking | - | ✗ | ✗ | ✗ | ✓ | - | - | - | - | ✓✓ | - | - | - | - | - | - | - | - |
| HIDE | ||||||||||||||||||
| Hidden Data Routing | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓✓ | ✓✓ | ✓ | - | ✗ | ✗ | ✗ | ✗ | ✓✓ | ✓✓ | - |
| Data Anonymization | ✓✓ | ✓ | ✗ | ✗ | ✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✗ | ✓✓ | ✗ | ✓✓ | ✓✓ | ✓✓ | ✓✓ |
| Encrypted Data Communication | ✓✓ | ✗ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓ | ✗ | ✓✓ | ✗ | ✗ | ✓✓ | ✓✓ | ✓✓ |
| Encrypted Data Processing | ✓✓ | ✗ | ✗ | ✗ | ✓✓ | ✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✗ | ✓✓ | ✗ | - | ✓✓ | ✓✓ | ✗ |
| Encrypted Data Storage | - | ✗ | ✗ | ✗ | ✓✓ | ✓✓ | ✗ | - | - | ✓✓ | ✓✓ | ✗ | ✓✓ | ✗ | - | ✓✓ | - | ✓✓ |
| SEPARATE | ||||||||||||||||||
| Distributed Data Processing | ✓ | ✓ | ✗ | ✗ | ✗ | ✓✓ | ✗ | - | ✗ | ✗ | ✓✓ | ✓✓ | ✗ | ✗ | ✓✓ | ✓✓ | - | ✓✓ |
| Distributed Data Storage | - | ✓ | ✗ | ✗ | ✓✓ | ✓✓ | ✗ | - | ✗ | ✗ | ✓✓ | ✓✓ | ✗ | ✗ | ✗ | ✓✓ | - | ✓✓ |
| AGGREGATE | ||||||||||||||||||
| Knowledge Discovery Based Aggregation | - | ✗ | ✗ | ✗ | ✓✓ | ✓✓ | - | ✓✓ | ✓✓ | - | - | ✗ | - | - | ✓✓ | ✓✓ | - | - |
| Geography Based Aggregation | - | ✗ | - | - | ✓✓ | ✓✓ | ✗ | ✓✓ | ✓✓ | ✓✓ | - | ✓ | - | - | ✓✓ | ✗ | - | ✓✓ |
| Chain Aggregation | - | - | - | - | ✓✓ | ✓✓ | ✗ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | - | ✗ | ✓✓ | - | - | - |
| Time Period Based Aggregation | - | ✗ | - | - | ✗ | ✓ | ✓ | ✓✓ | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✓✓ | - | - | - |
| Category Based Aggregation | - | ✗ | - | - | ✓ | ✓ | ✓✓ | ✓✓ | ✓✓ | ✗ | - | ✗ | ✓ | - | ✓✓ | - | - | - |
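To illustrate the geography-based and time-period-based aggregation rows of the table above, the sketch below turns individual position fixes into occupancy counts per coarse zone and per hour, and suppresses any bucket with fewer than `k` distinct persons. The 10 m grid used as the zone function, the threshold `k = 3` and the `(person_id, x, y, timestamp)` tuple layout are assumptions chosen for the example. The small-count suppression is a simple threshold rule and does not by itself provide a formal guarantee such as k-anonymity.

```python
from collections import defaultdict
from datetime import datetime


def grid_zone(x, y, cell_size=10.0):
    # Hypothetical zone function: map metric coordinates to a square grid cell.
    return f"cell-{int(x // cell_size)}-{int(y // cell_size)}"


def aggregate(fixes, zone_of=grid_zone, k=3):
    """Aggregate (person_id, x, y, timestamp) fixes into (zone, hour) counts.

    Each person is counted at most once per bucket, and buckets with fewer
    than k distinct persons are suppressed from the output.
    """
    buckets = defaultdict(set)
    for person_id, x, y, ts in fixes:
        hour = ts.replace(minute=0, second=0, microsecond=0)  # time-period aggregation
        buckets[(zone_of(x, y), hour)].add(person_id)          # geography-based aggregation
    return {key: len(ids) for key, ids in buckets.items() if len(ids) >= k}


# Usage: three people in the same cell within one hour are reported as a count;
# the single person in another cell is suppressed.
t = datetime(2021, 5, 3, 9, 15)
fixes = [
    ("a", 1.0, 2.0, t),
    ("b", 3.5, 4.0, t.replace(minute=40)),
    ("c", 8.0, 9.9, t.replace(minute=5)),
    ("a", 2.0, 2.5, t.replace(minute=50)),  # repeat sighting of "a"
    ("d", 25.0, 2.0, t),
]
counts = aggregate(fixes)
print(counts)
```

Coarser zones or longer time windows generally lower the risk of singling out individuals, at the cost of analytical detail.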

| | | [37] | [38] | [39] | [40] | [41] | [42] | [12] | [43] | [44] | [45] | [46] | [8] | [10] | [47] | [7] | [48] | [49] | [50] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| INFORM | Information Disclosure | ✗ | ✓ | ✓ | ✓ | ✓✓ | ✗ | - | - | ✓ | - | - | ✓ | ✓ | - | - | - | ✓ | ✓✓ |
| CONTROL/ENFORCE * | Control | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓✓ |
| DEMONSTRATE | Logging | ✓ | ✓✓ | ✗ | ✗ | ✓✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓✓ | ✗ | - | ✗ | ✓✓ | ✓✓ | - | ✓✓ |
| | Auditing | - | - | ✓✓ | ✗ | - | ✗ | ✗ | ✗ | ✗ | ✗ | - | ✗ | - | ✗ | - | - | - | - |
| | Open Source | ✓ | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| | Data Flow Diagrams | ✗ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✓✓ | ✗ | ✗ | ✓✓ | ✓✓ |
| | Certification | - | ✗ | ✗ | ✗ | ✓ | - | ✗ | - | - | ✗ | - | ✓✓ | ✗ | ✗ | ✗ | ✓✓ | - | ✗ |
| | Standardization | - | ✗ | ✗ | ✗ | - | ✓ | ✗ | - | ✓ | ✗ | ✓ | ✓ | ✗ | ✗ | - | ✗ | - | - |
| | Compliance | - | ✗ | ✗ | ✗ | ✓ | ✓✓ | ✗ | ✓✓ | ✓✓ | ✗ | - | ✗ | ✗ | ✗ | ✓✓ | ✗ | ✓✓ | ✓✓ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jandl, C.; Wagner, M.; Moser, T.; Schlund, S. Reasons and Strategies for Privacy Features in Tracking and Tracing Systems—A Systematic Literature Review. Sensors 2021, 21, 4501. https://doi.org/10.3390/s21134501