Abstract

An earlier study carried out in 2010 at the archaeological site of L’Almoina (Valencia, Spain) found marked daily fluctuations of temperature, especially in summer. Such pronounced gradient is due to the design of the museum, which includes a skylight as a ceiling, covering part of the remains in the museum. In this study, it was found that the thermal conditions are not homogeneous and vary at different points of the museum and along the year. According to the European Standard EN10829, it is necessary to define a plan for long-term monitoring, elaboration and study of the microclimatic data, in order to preserve the artifacts. With the aforementioned goal of extending the study and offering a tool to monitor the microclimate, a new statistical methodology is proposed. For this propose, during one year (October 2019–October 2020), a set of 27 data-loggers was installed, aimed at recording the temperature inside the museum. By applying principal component analysis and k-means, three different microclimates were established. In order to characterize the differences among the three zones, two statistical techniques were put forward. Firstly, Sparse Partial Least Squares Discriminant Analysis (sPLS-DA) was applied to a set of 671 variables extracted from the time series. The second approach consisted of using a random forest algorithm, based on the same functions and variables employed by the first methodology. Both approaches allowed the identification of the main variables that best explain the differences between zones. According to the results, it is possible to establish a representative subset of sensors recommended for the long-term monitoring of temperatures at the museum. The statistical approach proposed here is very effective for discriminant time series analysis and for explaining the differences in microclimate when a net of sensors is installed in historical buildings or museums.

1. Introduction

The environmental conditions of historical buildings, exhibition facilities and storage areas in museums have been shown to be the most crucial factor in the preservation of collections and artifacts. Temperature, humidity and lighting can potentially deteriorate or even destroy historical or cultural objects that are kept, protected and displayed in collections [1]. A continuous monitoring of the indoor environment can provide information about the microclimatic conditions affecting the works of art. Monitoring is an essential tool for developing a preventive control program aimed at maintaining the optimal microclimatic conditions for preservation. As a consequence, long-term monitoring has to be applied to prevent the deterioration of artworks [2]. Furthermore, it is necessary to find practical solutions and tools for the incorporation of climate change adaptation in the preservation and management of cultural heritage [3]. In particular, in archaeological sites, temperature differences between various minerals in block surfaces and alternative surfaces cause thermal stress. Humidity and thermal stresses are important causes of microfractures between the mineral grains of blocks [2].

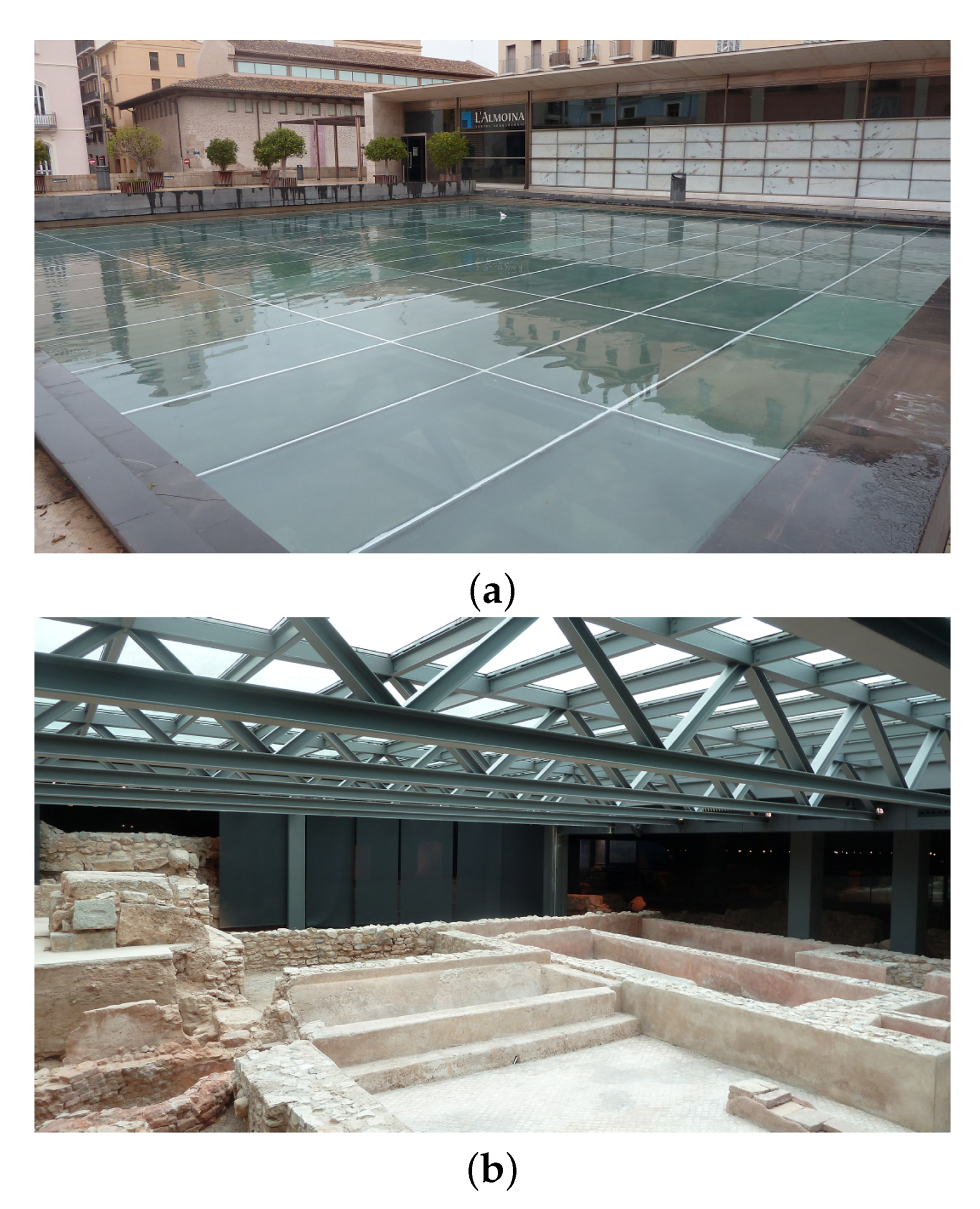

In L’Almoina museum (Valencia, Spain), a pronounced gradient of temperature was found [4] due to a skylight which was included in the architectonical design of the museum (Figure 1). A thermo-hygrometric monitoring study carried out in this museum in 2010 [4] discussed the significant effect of the skylight on the variations in T and RH. A pronounced greenhouse effect was noted, as a consequence of the skylight and the high temperatures reached in summer in Valencia. In 2011 the layer of water was removed due to a leak that had to be repaired. To replace the beneficial effect of the water layer, a provisional canvas cover was installed directly over the skylight, in order to avoid the overheating of the archaeological site below, by preventing direct sunlight. A second thermo-hygrometric monitoring study was performed in 2011 to assess the effect of different corrective measures and changes implemented in the museum [5]. The microclimatic data of RH and T recorded in 2010 before laying the canvas cover was compared with air conditions in 2013, after its installation. It was found that the presence of the canvas covering the skylight improved the T and RH conditions, so that the microclimate was in accordance with the international standards [6,7].

Given the marked detrimental influence of the skylight, a long-term monitoring is required for the control of thermal conditions. Thus, it is necessary to find practical solutions and tools for the preservation and management of the ruins. For this purpose, a statistical methodology for classifying different time series of temperature that are very similar is of interest. Such methodology can help to characterize different zones in the museum and to provide guidelines for monitoring the thermal conditions.

Some studies have been carried out in order to propose a plan for monitoring either temperature (T) or relative humidity (RH) for art conservation. Three reported studies proposed a methodology for classifying different time series using either observations of time series, or features from time series. García-Diego and Zarzo [8] and Zarzo et al. [9] applied Principal Component Analysis (PCA) in order to study the values of RH from sensors installed in different positions at the apse vault of Valencia’s Cathedral (Spain). Zarzo et al. [9] reported that the first and second principal components could be estimated according to a linear combination of the average RH values and the moving range of RH. Based on the two first components, the differences between the time series of RH in different positions in the apse were discussed. Ramírez et al. [10] proposed a statistical methodology in order to classify different time series of RH, which pointed to those zones with moisture problems in the apse vault of the Cathedral of Valencia. Merello et al. [11] analyzed 26 different time series of RH and T, recorded at Ariadne’s house (Pompeii, Italy), using graphical descriptive methods and Analysis of Variance (ANOVA), in order to assess the risks for long-term conservation of the mural paintings in the house. The work provided guidelines about the type, calibration, number and position of thermo-hygrometric sensors in outdoor or semi-confined environments.

The proposed methodology in this article has an adequate capability for discriminating time series with similar features. In addition, it can help to obtain parsimonious models with a small subset of variables leading to a satisfactory discrimination. As a consequence, its results can be easily interpreted and can help to select a subset of representative sensors for the long-term monitoring of indoor air conditions inside the museum. Finally, this methodology can be effective in order to establish the different zones in the archaeological site and to discriminate the microclimate of these areas.

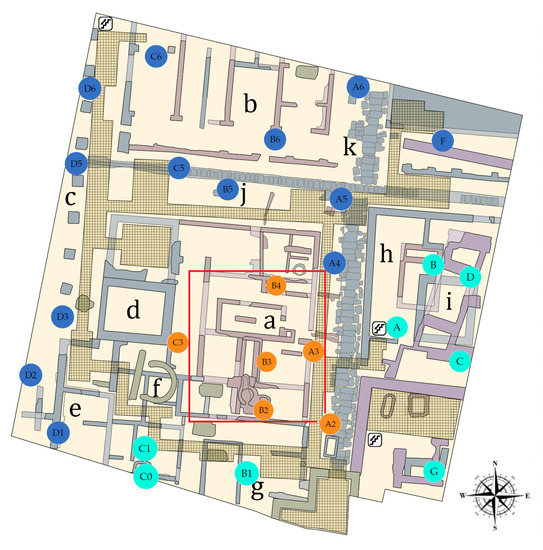

Figure 1.

Plan of the L’Almoina archaeological site, indicating the position of 27 data-loggers for monitoring the air conditions inside the museum. Based on the multivariate analysis of temperatures (see Section 3.3.6), three zones were established: North West (NW, in blue), South East (SE, in green) and Skylight (Sk, in orange). The different observable structures and the construction phases in the museum are indicated: (a) Roman baths; (b) Imperial granary; (c) Portico of the imperial forum; (d) Imperial chapel; (e) Imperial basilica; (f) Byzantine apse and tombs; (g) Byzantine Cathedral Baptistery; (h) Republican and Imperial Asklepieion; (i) Alcázar Aldalusí; (j) Decumani; and (k) Cardus [12].

Figure 1.

Plan of the L’Almoina archaeological site, indicating the position of 27 data-loggers for monitoring the air conditions inside the museum. Based on the multivariate analysis of temperatures (see Section 3.3.6), three zones were established: North West (NW, in blue), South East (SE, in green) and Skylight (Sk, in orange). The different observable structures and the construction phases in the museum are indicated: (a) Roman baths; (b) Imperial granary; (c) Portico of the imperial forum; (d) Imperial chapel; (e) Imperial basilica; (f) Byzantine apse and tombs; (g) Byzantine Cathedral Baptistery; (h) Republican and Imperial Asklepieion; (i) Alcázar Aldalusí; (j) Decumani; and (k) Cardus [12].

Aimed at better understanding the differences of microclimate in L’Almoina archaeological site (Figure 2), a set of 27 autonomous data-loggers was installed at different points of the museum (Figure 1). The time period under study was of about one year, from 22 October 2019 to 20 October 2020. The main goal of this research was to identify different microclimates at the museum and to characterize the differences in temperature between such zones. The purpose was to classify the sensors according to features and variables extracted from the time series of T. Another target was to identify those variables that best discriminate the different time series per zone. For this purpose, a methodology was applied based on sparse partial least squares discriminant analysis (sPLS-DA) and random forest (RF) with models and functions of time series [10,13]. Another target was to identify a subset of representative sensors for a long-term monitoring of thermic air conditions in the museum, as well as to determine the best location recommended for these sensors. The proposed methodology is rather new in the context of clustering of time series applied to cultural heritage. Furthermore, this methodology can be useful for defining different zones in the museum, according to features of the time series of T, as well as to achieve a correct classification of all sensors in such zones.

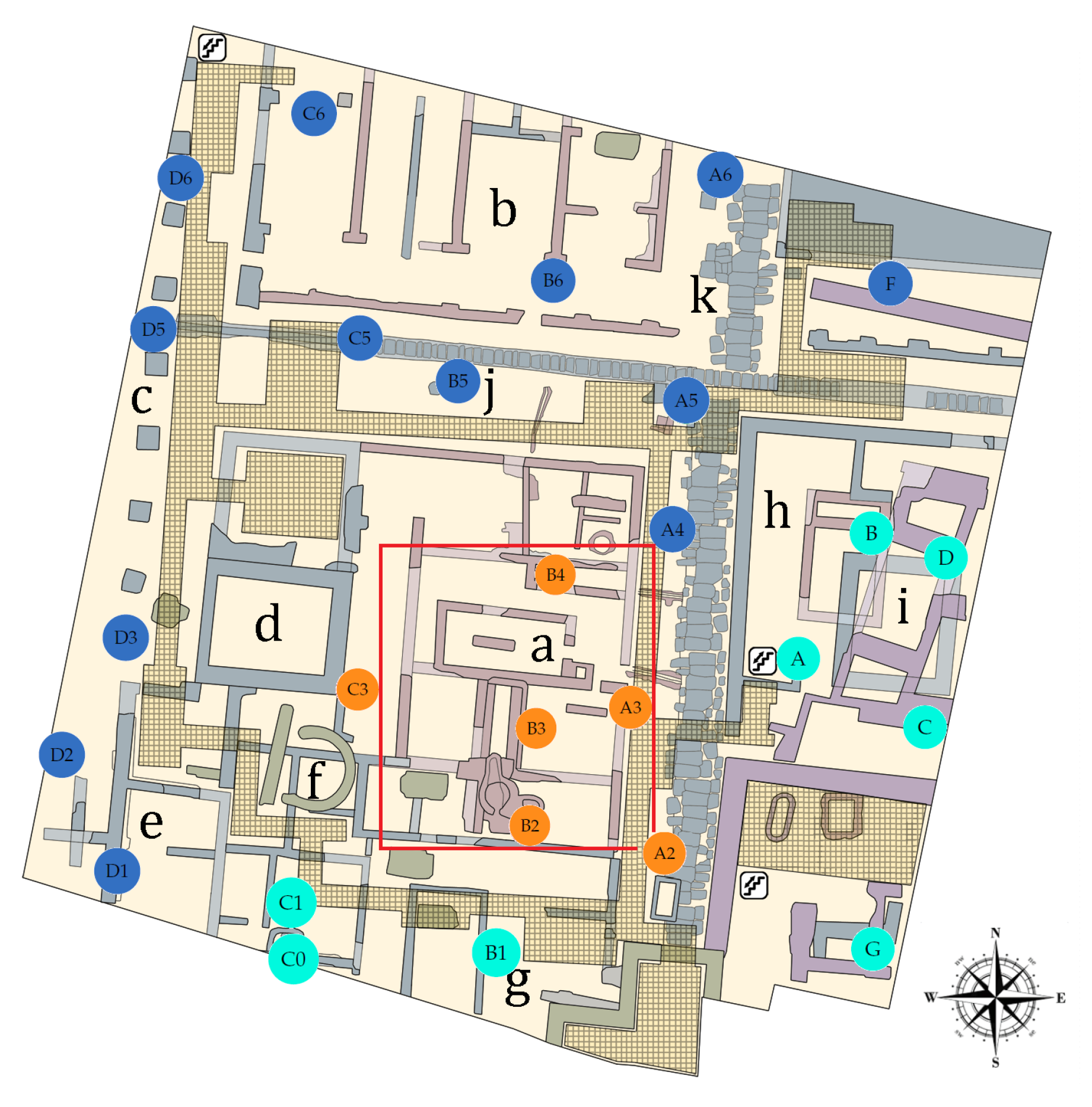

Figure 2.

View of the skylight covering part of L’Almoina archaeological site: (a) external view from the pedestrian plaza; and (b) internal view.

This article is structured as follows. In Section 2, a short background related to art conservation is presented. Characteristics of the dataset and the sensors, criteria for determining the stages of the time series of T, methods for calculating features of the time series and strategies for classifying time series are introduced in Section 3. The most notable results of the different analyses and their discussion are presented in Section 4. Finally, conclusions appear in Section 5.

2. Background

2.1. Studies for the Long-Term Preservation of Artworks

Many studies have been conducted in recent years which monitor the climatic parameters for the long-term preservation of cultural heritage. Frasca et al. [14] studied the impact of T, RH and carbon dioxide (CO) on the organic and hygroscopic artworks in the church of Mogiła Abbey. They found that artworks were at high risk of mechanical damage for approximately 15% of the time under study, due to an excessive variability of RH. Huijbregts et al. [15] proposed a method for evaluating the damage risk of long-term climate changes on artifacts in museums or historic buildings. This method was applied for two historic museums in the Netherlands and Belgium. For the examined case studies, they found that the expected climate change would significantly increase both indoor T and RH, with the increase of the latter having the highest impact on the damage potential for artifacts in museums. Zítek and Vyhlídal [16] proposed a novel air humidity control technique for preventing the moisture sensitive materials from varying the equilibrium of their moisture content, maintaining desirable environmental conditions for the preventive conservation of cultural heritage. Angelini et al. [17] designed and installed a wireless network of sensors for monitoring T and RH, aimed at establishing a correlation between the environmental conditions and the conservation state of artifacts. In addition, Lourenço et al. [18] studied air T and RH, among other parameters, in the historical city center of Bragaça (Portugal). Other researchers have studied the variation of some environmental parameters, in order to identify the main factors involved in the deterioration of certain remains, e.g., exposed and buried remains at the fourth century Roman villa of Chedworth in Gloucester, England [19].

2.2. European Standards

Experts suggest that it is necessary to investigate the actual environmental dynamics in a museum before any structural intervention. Furthermore, it is important to define the compatibility between the climate control potentials and the preservation requirements [2]. Several European Standards [20,21,22,23,24,25,26] have been developed for the monitoring, elaboration and study of the microclimatic data, as supporting actions for the preservation of artifacts. Long-term monitoring is required, as well as an appropriate statistical approach for the data management.

A large economical investment is being provided by governments within the European Union to preserve artworks in museums. Different research projects have monitored the indoor microclimate within museums, in order to analyze the relationship between thermo-hygrometric conditions and the degradation of materials, from which works of art are made. For example, with the goal of preserving artwork and artifacts, the CollectionCare Project is currently working on the development of an innovative system for making decisions about the preventive conservation of artworks in small- to medium-sized museums and private collections [27].

2.3. Characteristics of the L’Almoina Museum

The archaeological site of L’Almoina in Valencia (Spain) is an underground museum at about 3 m below the city floor level. It occupies an area of about 2500 . The archaeological remains are covered by a concrete structure, which forms an elevated plaza above the ruins. This cover connects with walkways and steps at different heights along its perimeter. There is no vertical retaining wall inside the museum to isolate the remains and prevent water diffusion through capillarity from the surrounding areas. An external glass skylight (225 ) was adapted to the museum so that part of the ruins could be observed from the pedestrian plaza. Nowadays, the skylight is protected by a layer of water (see Figure 2) to prevent high temperatures of the glass.

3. Materials and Methods

3.1. Materials: Description of the Datasets

In total, 27 data-loggers were installed for the monitoring of T and RH at L’Almoina museum. Technical details are as follows: data-logger model WS-DS102-1, with a temperature range between −40 and and an accuracy according to the MI-SOL manufacturer of , under 0–50 [28]. The data acquisition rate was of one recording every five minutes, so that a manual download of data was necessary every two months. The monitoring experiment started on 22 October 2019 and ended on 20 October 2020. The initial number of available observations of T was approximately 104,832 per sensor (i.e., 364 days·24 h/day·12 values/h), which were arranged as a matrix containing 27 columns (i.e., temperatures recorded by each sensor) by 104,832 rows. Daily cycles are clearly marked, which implies a repetitive pattern every 288 values, but such a data sequence seems too long. Thus, it was decided to calculate the median of values recorded per hour, which leads to daily cycles every 24 values. This frequency seems more convenient for the use of seasonal methods of time series analysis. Thus, a new matrix was arranged comprising 8730 observations by 27 sensors. This dataset did not contain missing values.

3.2. Data Calibration

All sensors were calibrated prior to their installation by means of an experiment carried out inside a climatic chamber, model alpha 990-40H from Design Environmental Ltd. (Gwent, UK). The temperature was maintained at three different levels: 5, 23 and . For each stage, the RH was 75%, 50% and 30%, respectively. Each stage of temperature was maintained for 2 h, so that the total calibration experiment lasted for 6 h. The frequency of temperature recorded was one datum per five minutes from each sensor. Next, the median of T per hour was calculated by sensor. Linear regression was applied to obtain calibration functions, one per sensor, relating the measured median of T as a function of the real temperature inside the chamber. Finally, the temperature matrix () was modified by correcting the bias of each sensor, according to the resulting calibration functions, which leads to a corrected matrix containing the median temperature per hour, after the calibration.

3.3. Statistical Methods

The statistical methodology comprises the following steps: (1) Identify structural breaks in all time series. (2) Extract features directly from the time series. (3) Compute classification variables using the additive seasonal Holt–Winters approach. (4) Compute classification variables by means of seasonal ARIMA. (5) Compute classification variables according to the Wold decomposition. (6) Determine the optimum number of classes and establish the class for each sensor, using PCA and k-means algorithm. (7) Check the classification of sensors using sPLS-DA and identify the optimal subset of variables that best discriminate between classes, per method. (8) Check the classification of sensors by means of the RF algorithm and identify the optimal set of variables calculated from each method, that best discriminate between the classes. (9) Propose a methodology for selecting a subset of representative sensors for future long-term monitoring experiments in the museum.

The main R software packages [29] (version 4.3) used to carry out the statistical analyses were: mixOmics [30,31], aTSA [32], forecast [33,34], strucchange [35], tseries [36], moments [37], PerformanceAnalytics [38], NbClust [39] and QuantTools [40].

3.3.1. Identification of Structural Breaks in the Time Series

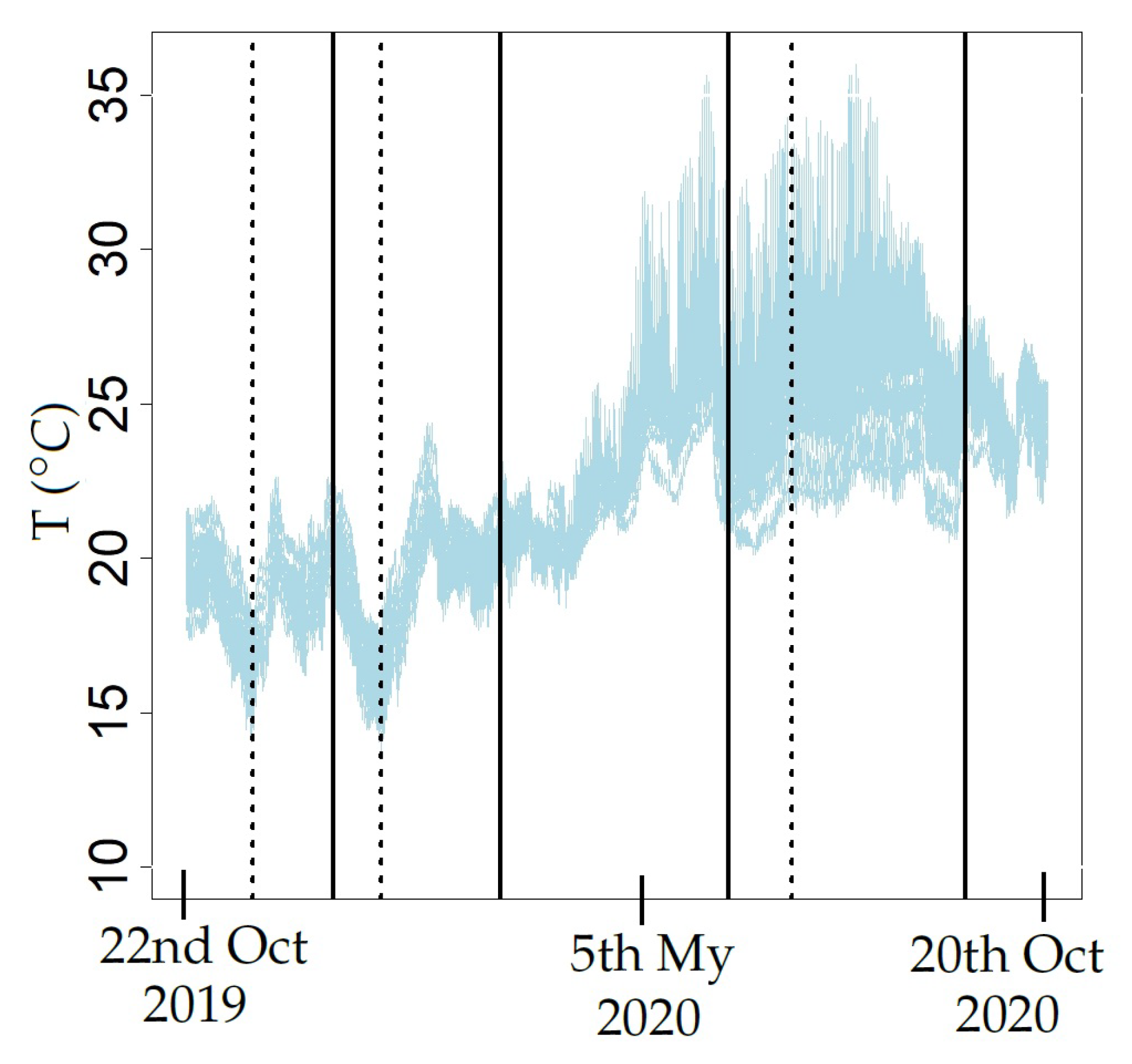

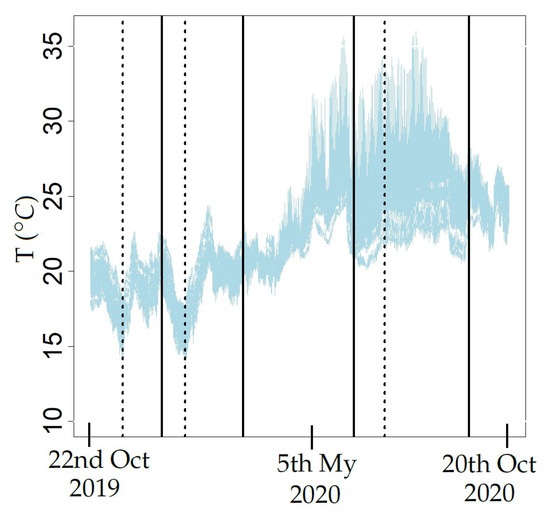

In real conditions, time series can undergo sudden shifts or changes in the slope of a linear trend. Such events are known as structural breaks [41]. The CUSUM and supF tests, among others, can be used to detect structural breaks in a time series [42,43]. By carefully inspecting the evolution of all observed time series of temperature () over time (Figure 3), certain potential structural breaks can be observed. Both the CUSUM and supF tests were applied after computing the logarithmic transformation and one regular differentiation to the time series. Such logarithmic transformation was intended to stabilize the variance, while the regular differentiation was used to remove the trend of the different time series [44]. The notation used throughout this paper is the following: refers to the logarithmic transformation of and denotes one regular differentiation of the logarithmic transformation of . Thus, each value of corresponds to , being . The tests were computed with functions Fstats and efp from the strucchange package [35]. Initially, 5 stages were tentatively established: warm 1 (Wr1, comprising n = 1490 observations), cold (Cd, n = 1703), transition (Tr, n = 2303), hot (Ht, n = 2327) and warm 2 (Wr2, n = 903) (see Figure 3). Wr1 corresponds to 22 October–23 December 2019, Cd to 24 December–3 March 2020, Tr to 4 March–7 June, Ht to 8 June–12 September and Wr2 to 13 September–20 October 2020.

Figure 3.

Trajectories of the different time series of T from the 27 sensors located in the museum. Values were recorded between 22 October 2019 and 20 October 2020. The separation of different stages (Wr1, Cd, Tr, Ht and Wr2) is indicated by means of solid vertical lines. Dashed vertical lines indicate the structural breaks identified within the stages Wr1, Cd and Ht.

3.3.2. Calculation of Classification Variables—Method M1

This method consists of computing features such as mean, median and maximum, among others, from estimates of the Auto Correlation Function (ACF), Partial Auto Correlation Function (PACF), spectral density and Moving Range (MR) [45,46]. The ACF and PACF correlograms of the observed time series are commonly used to fit Auto Regressive Moving Average (ARMA) models. Firstly, a set of variables denoted as Type 1 comprised the mean, MR and PACF, which were estimated for the 8 stages of values. The average of was calculated to capture the level or position in each stage of the time series. The mean of MR with order 2 (i.e., average range over 2 past values) was computed to identify sudden shifts or increases in the level of . For each stage of , the sample PACF parameters ( at lag l) were estimated for the first four lags (l = 1, 2, 3, 4), which are usually the most important ones for capturing the relevant information in time series.

Secondly, another set of variables called Type 2 comprised spectral density and ACF, which were estimated for values after applying the logarithm transformation and regular differencing. The objective of using this transformation and differencing was to stabilize the variances and remove the trend of , so that the computed variables provide information about the seasonal component of the time series. Spectral density was estimated using the periodogram of observed time series ( of signals w). The maximum peak of the periodogram and its frequency were identified. Values of ACF ( at lag l ) were estimated to analyze the correlation between values with the lagged values of the same observed time series at each lag, for the first 72 lags. This criterion was used because the values of the ACF correlogram for further lags were comprised within the limits of a 95% confidence interval in the correlogram.

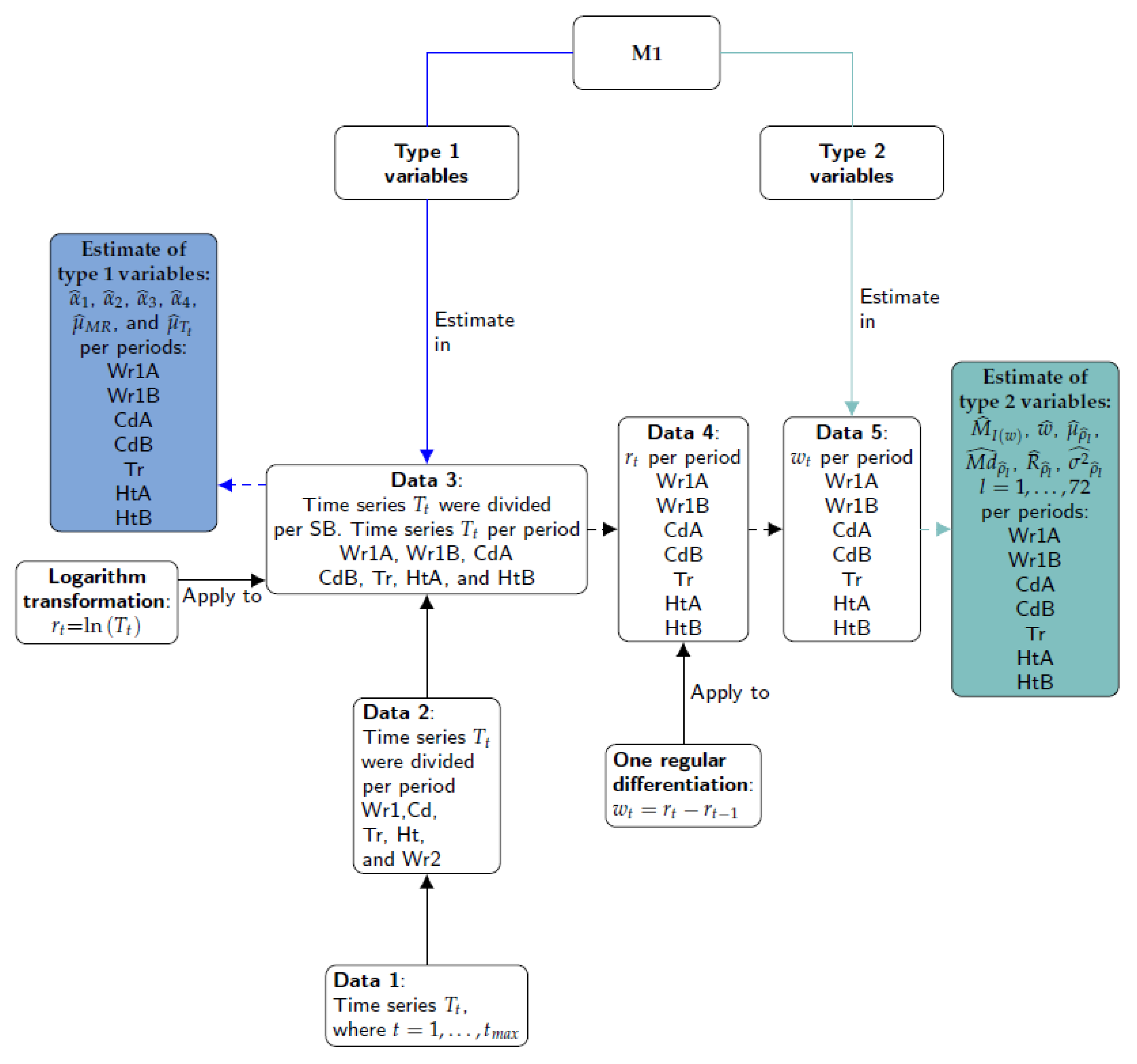

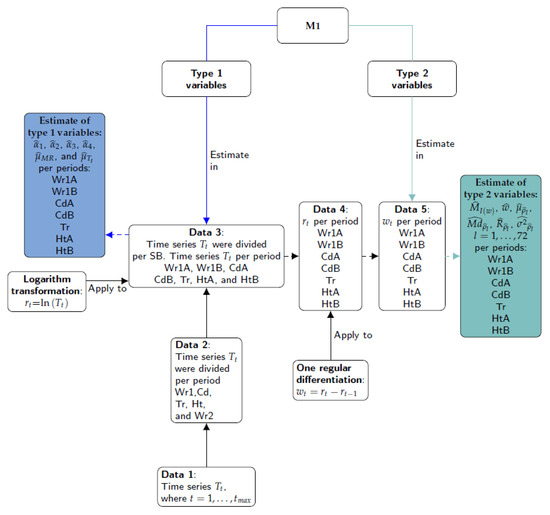

The different steps involved in M1 are depicted in Figure 4. Firstly, the 27 time series were split according to the climatic stages observed: Wr1, Cd, Tr, Ht and Wr2 (Data 2). Secondly, some of the main stages (Data 2) were subdivided according to the structural breaks (SB) identified in Wr1, Cd and Ht (Data 3). In the third step (Data 4), the logarithm transformation was applied and, next, one regular differentiation (Data 5). The fifth step consists of applying the formulas of Type 2 variables to : maximum of periodogram () and its frequency (w), as well as mean, median, range and variance of the sample ACF for the first 72 lags (, , and , with ). Finally, the formulas of Type 1 variables were applied to values (Data 3): mean of (), mean of MR of order 2 () and PACF for the first four lags (, , and ).

Figure 4.

Summary of steps involved in method M1: blue lines, Type 1 classification variables; green lines, Type 2 variables; solid lines, process; dashed line, results. Different boxes contain the name of the stages (i.e., Wr1A, Wr1B, CdA, CdB, Tr, HtA, HtB and Wr2) to indicate that the procedure was applied to all parts of the time series. A structural break was found in Wr1, Cd and Ht, so that the suffixes A and B denote the substages before and after the break, respectively.

The estimates of MR values were computed with the R software according to the function rollrange from the QuantTools package [40]. The sample ACF and sample PACF values were calculated with the function acf (stats) [47] and pacf (tseries) [36], respectively. The values of the periodogram and their frequencies were obtained with the function spectrum (stats).

3.3.3. Calculation of Classification Variables—Method M2

The Holt–Winters method (H-W) [48] is a type of exponential smoothing that is used for forecasting in time series analysis. The seasonal H-W (SH-W) method uses a smoothing equation for each component of a given time series: the level, slope and seasonality, which are denoted at time as , and , respectively. The additive SH-W prediction function of a time series of T is given by Equation (1), where p denotes the number of observations per period and k is the integer part of [49,50]. This equation was implemented with the conditions: , , and . When the algorithm converges, a corresponds to the last level of , b is the last slope of and – are the last seasonal contributions of each . A forecast of based on all the data up to time is denoted by ; a simpler notation for is .

The method M2 consists of fitting additive SH-W equations in two steps. Firstly, for each stage of the 27 time series of T, the classification variables are the last level of smoothing components of the additive SH-W method per sensor: level (a), slope (b) and seasonal components (). The method was fitted by considering as the number of observations per day. These smoothing components were called as Type 3 variables. Secondly, by considering the complete time series, the first 24 predictions of for each unique additive SH-W model per sensor were regarded as additional classification variables, which were denoted as Type 5 variables.

Although a residual analysis is not necessary when using SH-W method, estimates of the features from residuals were also computed per stage of the time series (Type 4 variables): sum of squared estimate of errors (SSE), maximum of periodogram () and its frequency (w) and several parameters (mean, median, range and variance) of sample ACF for 72 lags (, , and , with ). Moreover, the Kolmogorov–Smirnov (KS) normality test [51] and Shapiro–Wilk test (SW) [52,53,54] were applied in order to extract further information from the different stages of the observed time series. The statistic of the KS test (denoted as Dn) was used to compare the empirical distribution function of the residuals with the cumulative distribution function of the normal model. Likewise, the statistic of the SW test (Wn) was employed to detect deviations from normality, due to skewness and/or kurtosis. The statistics of both tests were also used as classification variables because they provide information about deviation from normality for the residuals derived from the SH-W method.

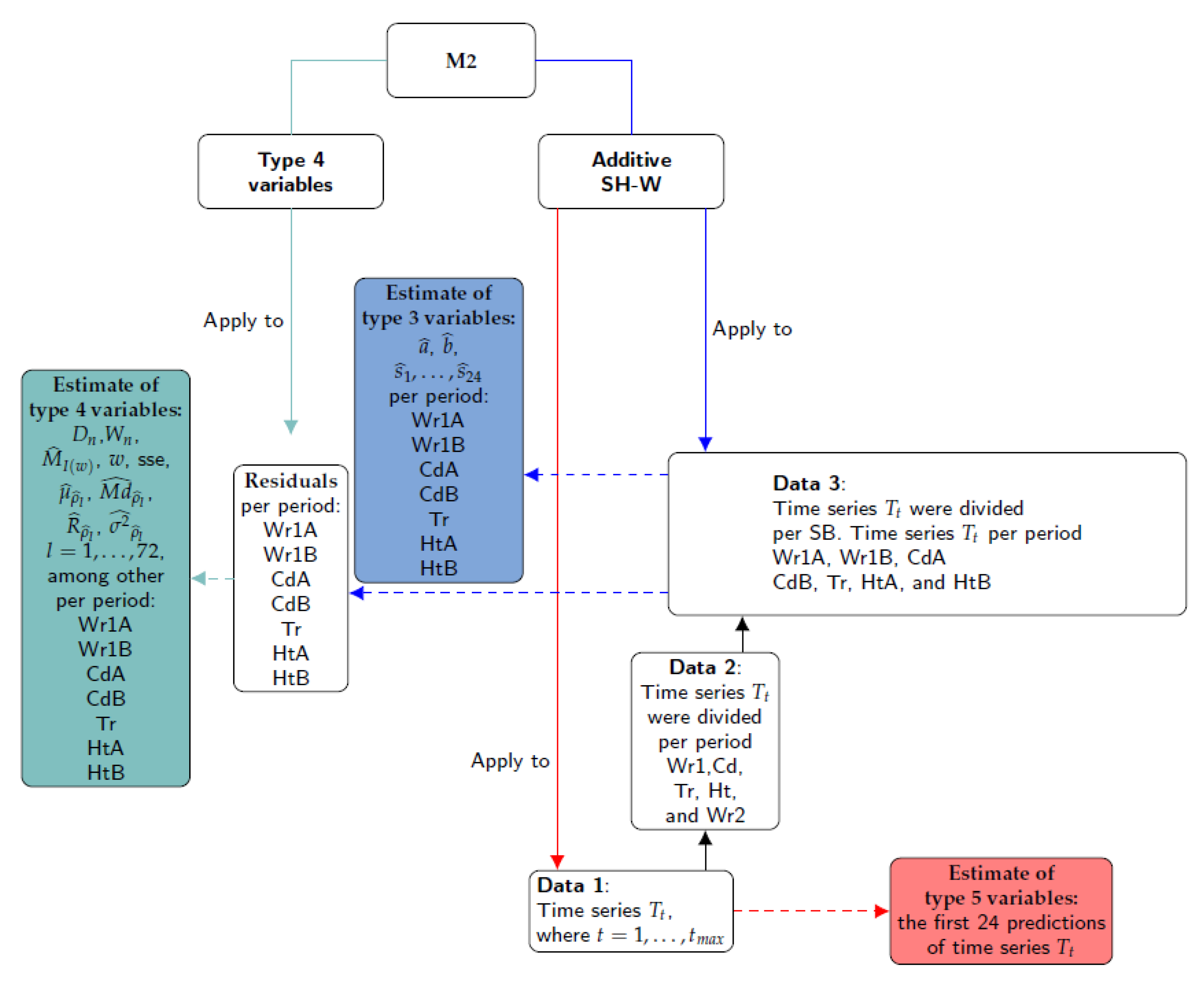

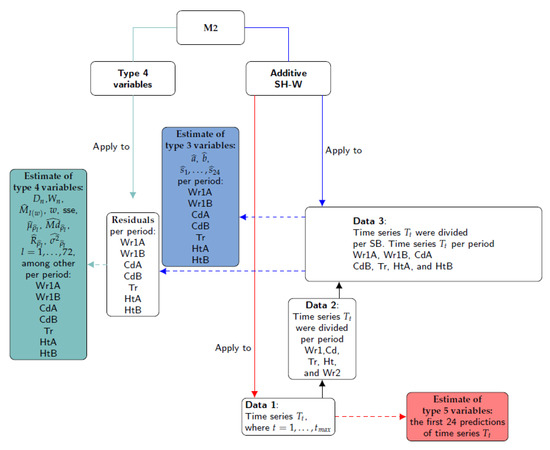

The steps involved in M2 are depicted in Figure 5. Firstly, the different time series were split according to the climatic stages observed (Data 2) and the structural breaks (SB) identified (Data 3). Secondly, the seasonal H-W method was applied to Data 3 in order to obtain the last level of smoothing components (Type 3 variables) and then the model residuals. The third step consisted of applying the formulas of Type 4 variables to the residuals. Finally, the seasonal H-W method was applied to Data 1 in order to obtain the first 24 predictions of T (Type 5 variables).

Figure 5.

Summary of steps in method M2: blue lines, Type 3 classification variables; green lines, Type 4 variables; red lines, Type 5 variables; solid lines, process; dashed line, results.

The HoltWinters function (stats) was used to fit the Additive SH-W method. The shapiro.test (stats) and ks.test (dgof) [55] were used to apply the normality tests. Values of the sample ACF and sample PACF were computed with the functions acf (stats) and pacf (tseries), respectively. Values of the periodogram and their frequencies were calculated with the function spectrum (stats).

3.3.4. Calculation of Classification Variables—Method M3

The ARMA model is also known as the Box–Jenkins approach, which focuses on the conditional mean of the time series and assumes that it is stationary [44]. By contrast, the ARIMA model can be used when a time series is not stationary. It employs regular differencing of the time series prior to fitting an ARMA model. ARIMA models employ p Auto Regressive (AR) terms, d regular differences and q Moving Average (MA) terms. Parameters of the AR component are denoted as () and parameters of the MA component as (). The error terms are assumed to be a sequence of data not autocorrelated with a null mean, which is called White Noise (WN) [44]. In addition, if a given time series is assumed to follow an ARIMA process, the conditional variance of residuals is supposed to be constant. If this is not the case, then it is assumed that an ARCH effect exists in the time series. Two of the most important models for capturing such changing conditional variance are the ARCH and Generalized ARCH (GARCH) models [41].

Seasonal ARIMA models are more appropriate in this case given the marked daily cycles. P refers to the number of seasonal AR (SAR) terms, D to the number of difference necessary to obtain a stationary time series, Q to the number of seasonal MA (SMA) terms and S to the number of observations per period ( in this case). Parameters of the SAR component are denoted as () and the SMA component as (). The error terms are assumed to be a WN sequence [44].

A seasonal ARIMA model is given by Equation (2), where the polynomial is the regular AR operator of order p, is the regular MA operator of order q, is the seasonal AR operator (SAR) of order P, is the seasonal MA operator (SMA) of order Q and B is the backshift operator (i.e., , for and , for ). Furthermore, represents the seasonal differences while accounts for the regular differences, so that is defined as and as [41]. In this study, , which was obtained by differentiating the series once regularly () and once seasonally (). Thus, .

For each stage of the time series, a common seasonal ARIMA model was fitted for the 27 observed time series (see Table 1). Firstly, each observed stage of the time series was checked to determine if it could be regarded as stationary, which implies that the mean and variance are constant over time t and the covariance between one observation and another (in lagged l steps) from the same time series does not depend on t [44]. The ACF and PACF correlograms were used to examine this condition. Furthermore, the Augmented Dickey–Fuller (ADF) test [56] was applied for checking the null hypothesis of non stationarity, as well as the Lagrange multiplier (LM) test [57] for examining the null hypothesis about the absence of ARCH effect. In addition, the autocorrelation Ljung–Box Q (LBQ) test [58] was applied for inspecting the null hypothesis of independence in a given time series. This LBQ test was carried out on the different lags from to , where is the sum of the number of AR, MA, SAR and SMA terms of the seasonal ARIMA models. It was applied to the time series of the model residuals and the squared residuals.

Table 1.

The most successful models per stage of the different observed time series are presented in the second column. Column 3 presents the percentages of the LBQ test on the different lags from to from the 27 sensors that fulfill the assumptions of independence. Column 4 presents the percentages of the LM test from the 27 sensors that fulfill the assumptions of the absence of Arch effect. The significance level used was 0.01.

The condition of stationarity is necessary when fitting an ARMA model. For this purpose, logarithmic transformation, one regular differentiation () and one seasonal differentiation () were applied to all time series of , in order to stabilize the variance and remove both the trend in mean and seasonal trend [59]. Seasonal differentiation was applied to the observed time series , and the results were denoted as (), being .

In order to determine the appropriate values of and , the corrected Akaike’s Information Criterion () [49] was used, which checks how well a model fits the time series using the restriction and . The most successful model for each stage of the different observed time series was chosen according to the lowest value. Next, the maximum likelihood estimation method was used to estimate the parameters of the seasonal ARIMA models [44]. Different tests were used to determine whether model assumptions were fulfilled. After computing the model residuals, ADF and LBQ [58] tests were applied to the residuals and their squared values, for 48 lags, in order to evaluate the condition of WN process. The ACF and PACF correlograms were also used. The next step was to evaluate the absence of Arch effects in the residuals. For this purpose, the LM test was applied to the residuals and their squared values [60,61]. Although the normality of errors is not an assumption for fitting ARIMA models, the distribution of residuals derived from the fitted models were compared with the normal distribution by means of the Q-Q normal scores plots, as well as the SW and KS normality tests.

Given that the errors of all models cannot be regarded as WN in this case, it is possible that the model residuals contain useful information about the performance of the different time series. In order to extract further information from the residuals, some features were calculated using ACF, PACF and statistics of normality tests, among others. They were used as additional classification variables.

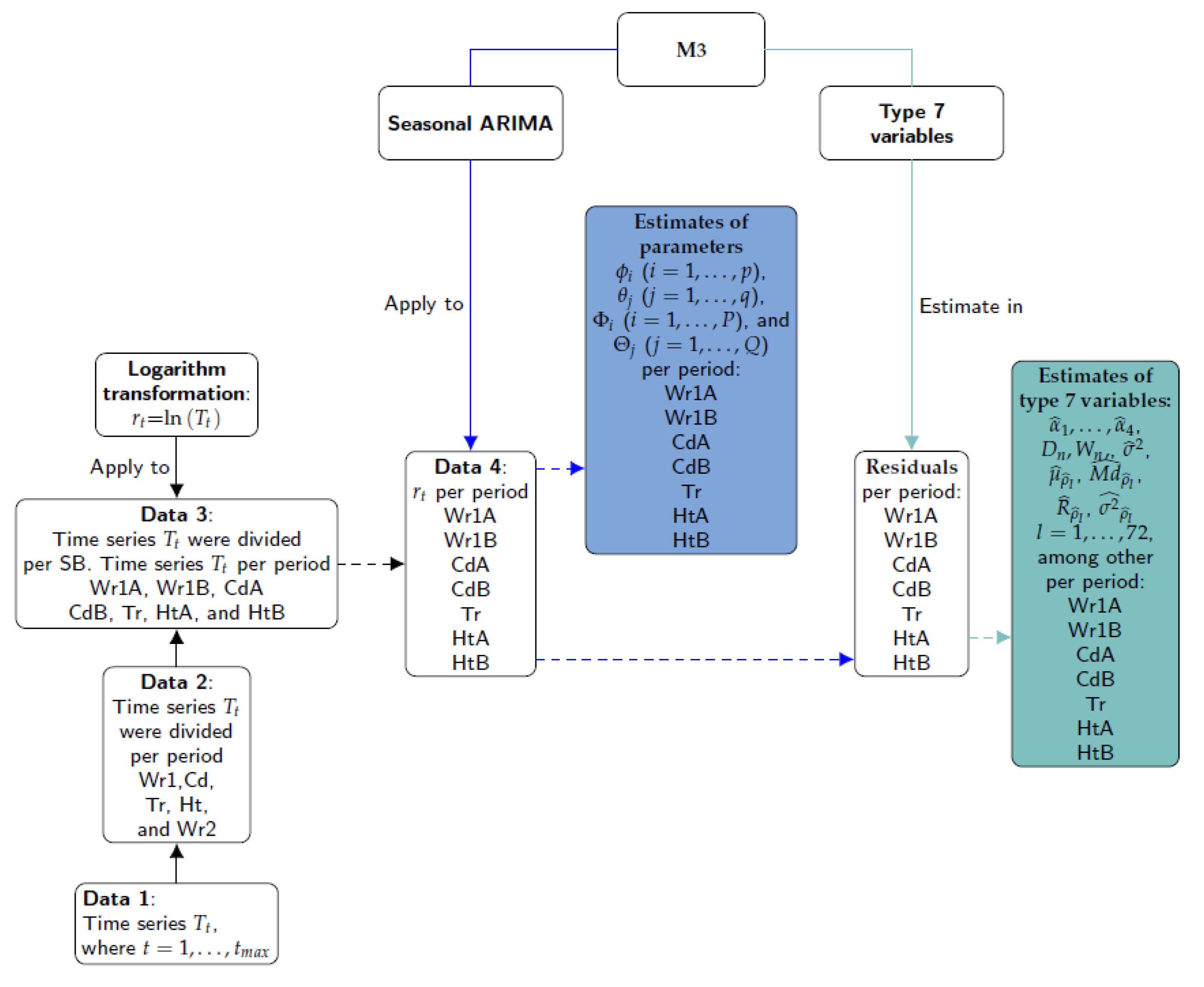

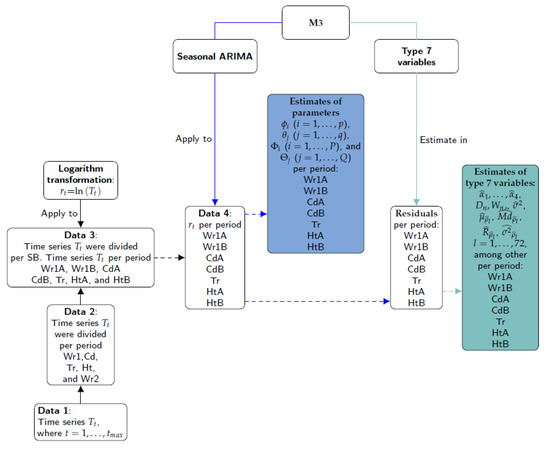

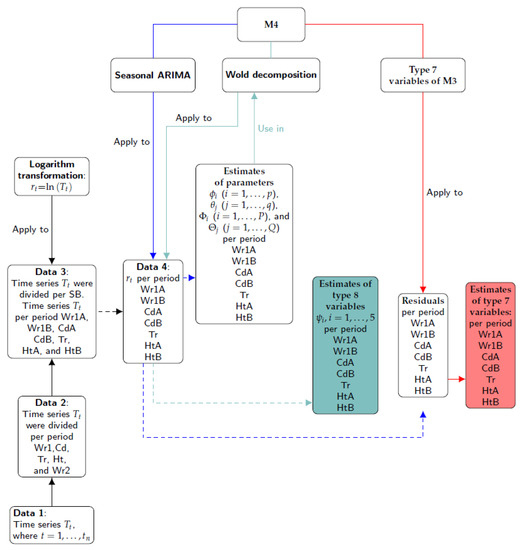

The steps involved in Method 3 are illustrated in Figure 6. Firstly, the different time series were split according to the climatic stages observed (Data 2) and the structural breaks (SB) identified (Data 3). Secondly, the logarithm transformation was applied to Data 3 (the result is denoted as Data 4). The third step consisted of applying the seasonal ARIMA model to Data 4 in order to obtain the estimates of model coefficients: , , and . These parameters were denoted as Type 6 variables. Next, different features (Type 7 variables) were computed from the residuals: variance (), maximum of periodogram () and its frequency w. For the set of 72 lags, additional features were computed from sample ACF values: mean (), median (), variance () and range (), with . Finally, the first four values of sample PACF (, , and ) were computed, as well as the statistics of the KS normality (Dn) and SW (Wn) tests.

Figure 6.

Summary of steps in method M3: blue lines, Type 6 classification variables; green lines, Type 7 variables; solid lines, process; dashed line, results.

In order to choose a seasonal ARIMA model and the estimations of the model parameters for each sensor, the arima (stats) and auto.arima (forecast) functions [33,34] were used. The ADF test was computed using the adf.test (aTSA) [32]. The LBQ test was applied by means of the Box.test function (stats). The LM test was carried out using the arch.test function (aTSA). The SW and KS normality tests were applied using the shapiro.test (stats) and ks.test functions (dgof), respectively.

3.3.5. Calculation of Classification Variables—Method M4

The Wold decomposition establishes that any covariance stationary process can be written as the sum of a non-deterministic and deterministic process. This decomposition, which is unique, is a linear combination of lags of a WN process and a second process whose future values can be predicted exactly by some linear functions of past observations. If the time series is purely non-deterministic, then it can be written as a linear combination of lagged values of a WN process (MA(∞) representation), that is, , where , and is a WN [41]. Although the Wold decomposition depends on an infinite number of parameters, the values of coefficients of the decomposition decay rapidly to zero.

For Method 3, a unique model was fitted for the 27 sensors in the same stage of the time series because it is necessary to have the same number of classification variables per sensor, in order to apply later the sPLS-DA method. It is not possible to work with ’the best model’ per sensor in each stage. In order to obtain the same number of variables using the ’best model’ per sensor, the Wold decomposition was applied to each sensor. Hence, Method 4 consists of obtaining the Wold decomposition for the ARMA models. Firstly, different seasonal ARIMA models were fitted iteratively to time series per sensor and stage of the time series, and the most successful model was determined.

As an illustration, consider that a time series follows a seasonal ARIMA process. Now, consider that and , with . Then, the time series follows an ARMA process, which can be decomposed according to the Wold approach by obtaining the polynomials and that determine the best ARMA model. In summary, for each seasonal ARIMA , it was possible to find the best ARMA model and its Wold decomposition.

The analysis of residuals of the different models fitted suggests that the condition of not autocorrelation is not fulfilled in all cases. Nonetheless, the Wold decomposition of each model was fitted independently, in order to have the ’best seasonal ARIMA model’ per sensor and the same number of parameters per sensor. For each model, the first five coefficients of the Wold decomposition were calculated and used as classification variables. In all cases, the most successful seasonal ARIMA model per sensor used and .

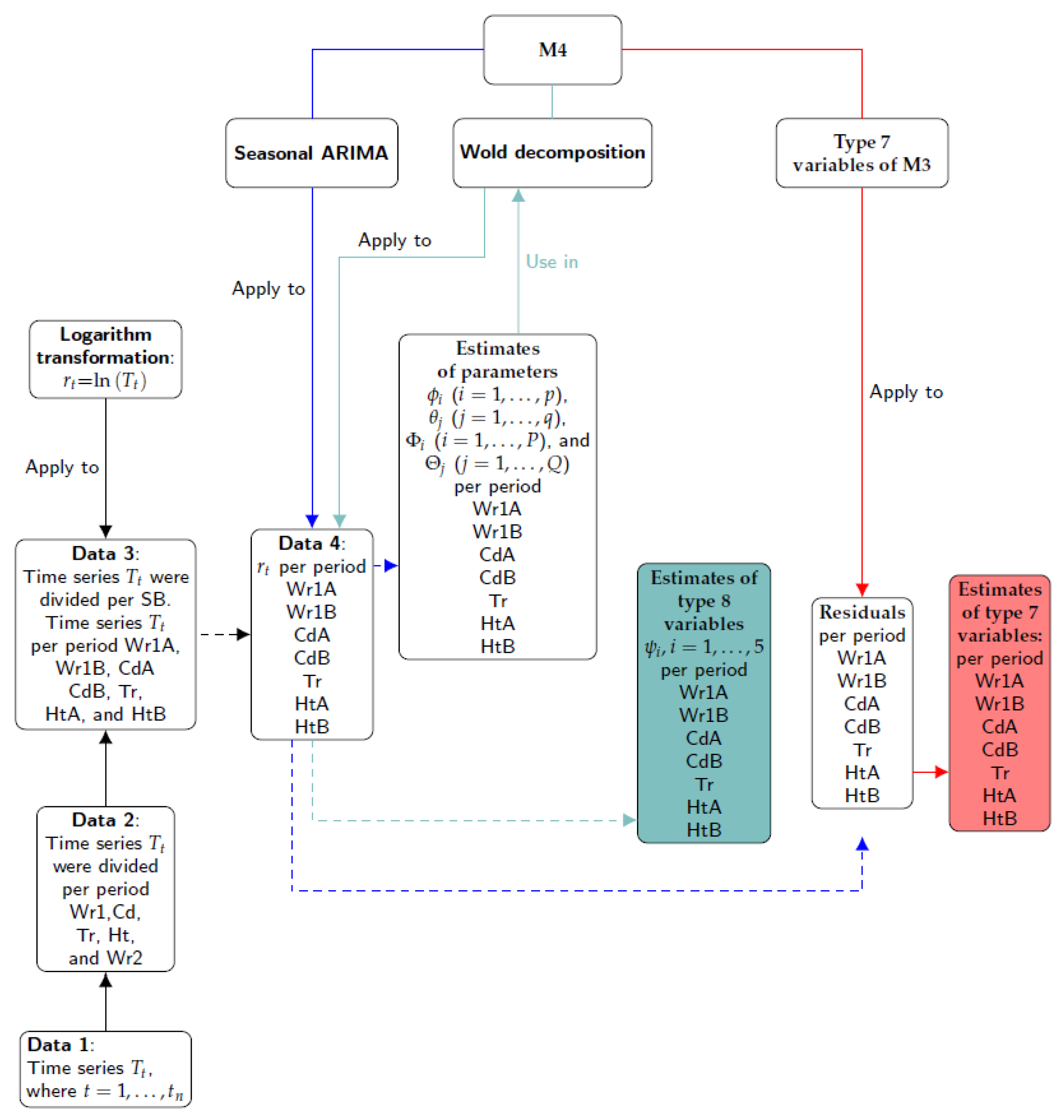

The steps involved in Method 4 are illustrated in Figure 7. Firstly, the different time series were split according to the climatic stages observed (Data 2) and the structural breaks (SB) identified (Data 3). Secondly, the logarithm transformation was applied to Data 3 (the result is denoted as Data 4). The third step consisted of applying the seasonal ARIMA model to Data 4 in order to obtain the estimates of parameters and their residuals. Next, the same formulas of Type 7 variables used in M3 were applied to the residuals. Finally, the Wold decomposition was determined using the estimates of parameters of seasonal ARIMA models. The first five coefficients of the MA weights (i.e., ) were denoted as Type 8 variables.

Figure 7.

Summary of steps of method M4: red lines, Type 7 variables of M3; green lines, Type 8 variables; solid lines, process; dashed line, results.

Apart from the same functions used for M3, this method employed ARMAtoMA (stats). One function was created for reducing a polynomial with AR and SAR components to a polynomial with just AR component. Likewise, another function converted a polynomial with MA and SMA components to one with MA component.

3.3.6. Determination of Number of Classes and Class per Sensor Using PCA and K-Means Algorithm

All classification variables calculated as described above for each sensor were arranged as a matrix called ’total classification dataset’ (TCD) with 27 rows (sensors) by 671 columns corresponding to the classification variables from the four methods. The total number of variables was 88, 296, 143 and 139 from M1, M2, M3 and M4, respectively. The multivariate analysis of the TCD matrix would allow the identification of different microclimates in the archaeological museum. It was checked that the statistical distribution of some classification variables was strongly skewed, which recommends to apply a data pretreatment prior to the multivariate analysis. For those variables with a strongly skewed distribution, different standard (simple) Box–Cox transformations [62] were applied with the goal of finding a simple transformation leading to a normal distribution. In particular, the Box–Cox transformations were used on those classification variables with a Fisher coefficient of kurtosis [63] or with a Fisher–Pearson standardized moment coefficient of skewness [63] outside the interval from −2.0 to 2.0. Before applying the Box–Cox transformations, an absolute value function was used for variables with a negative skewness that fulfilled one of the aforementioned conditions. The skewness statistic was computed for each variable in order to check the asymmetry of the probability distribution. The kurtosis parameter indicates which variables were heavy-tailed or light-tailed, relative to a normal distribution. Moreover, the estimates of kurtosis were useful measures for identifying outliers in the classification variables. The functions kurtosis and skewness (PerformanceAnalytics) [38] were used to compute the coefficients of kurtosis and skewness, while boxcoxfit (geoR) [64] was employed to apply different Box–Cox transformations. The function prcomp (stats) was used to carry out PCA.

Those values of a given classification variable that clearly departed from a straight line on the normal probability plot were removed and regarded as missing data. These were estimated using the NIPALS algorithm [65] implemented in the mixOmics package [31], which is able to cope with this drawback and returns accurate results [66]. After the data normalization, all variables were mean-centered and scaled to unit variance, which is the common pretreatment in PCA. Next, PCA was carried out to reduce the dimensionality of the TCD matrix. Each observation (sensor) was projected onto the first few principal components to obtain lower-dimensional data, while preserving as much of the data variation as possible.

Given that the two first components maximize the variance of the projected observations (TCD), only two components were employed to run the k-means clustering. This method is a centroid-based algorithm that computes the distance between each sensor and the different centroids, one per cluster or class. The algorithm determines K clusters so that the intra-cluster variation is as small as possible. However, prior to applying this method, the number of clusters K has to be determined, which depends on the type of clustering and previous knowledge about the time series of T. For this purpose, different criteria can be used [39,67]. Such methods do not always agree exactly in their estimation of the optimal number of clusters, but they tend to narrow the range of possible values. The NbClust function of the NbClust package [39] incorporates 30 different indices for determining the number of clusters [67]. This function claims to use the best clustering scheme from the different results obtained, by varying all combinations of the number of clusters, distance measures and clustering methods. It allows the user to identify the value K in which more indices coincide, providing assurance that a good choice is being made.

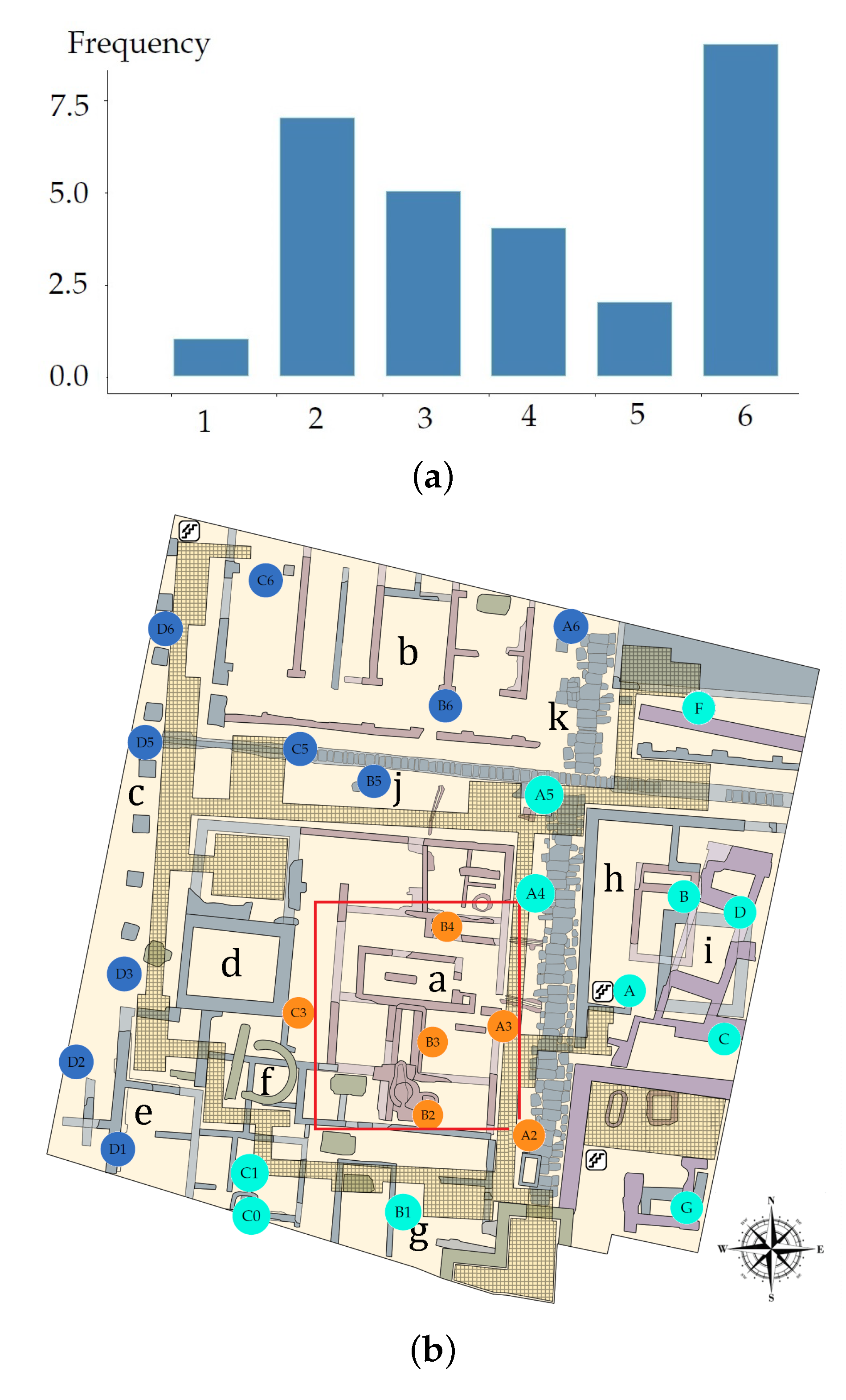

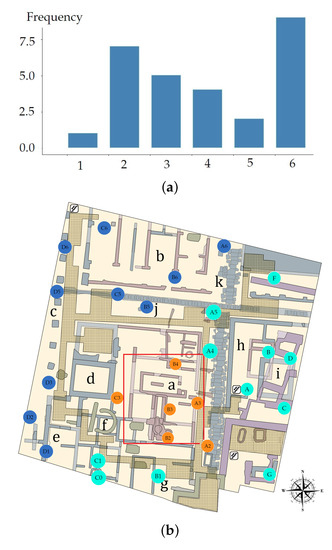

In this clustering method, the measure used to determine the internal variance of each cluster was the sum of the squared Euclidean distances between each sensor and each centroid. The distances were used to assign each sensor to a cluster. For this purpose, the k-means algorithm of Hartigan and Wong [68] was applied by means of the function kmeans (stats). It performs better than the algorithms proposed by MacQueen [69], Lloyd [70] and Forgy [71]. However, when the algorithm of Hartigan and Wong is carried out, it is often recommended to try several random starts. In the present study, 100 random starts were employed. This algorithm guarantees that, at each step, the total intra-variance of the clusters is reduced until reaching a local optimum. Results from the k-means algorithm depend on the initial random assignment. For this reason, the algorithm was run 100 times, each with a different initial assignment. The final result was the one leading to a classification with the lowest total variance value. By comparing the classification obtained with the position of sensors in the museum (Figure 8a), the three zones were denoted as North West (NW), South East (SE) and Skylight (Sk).

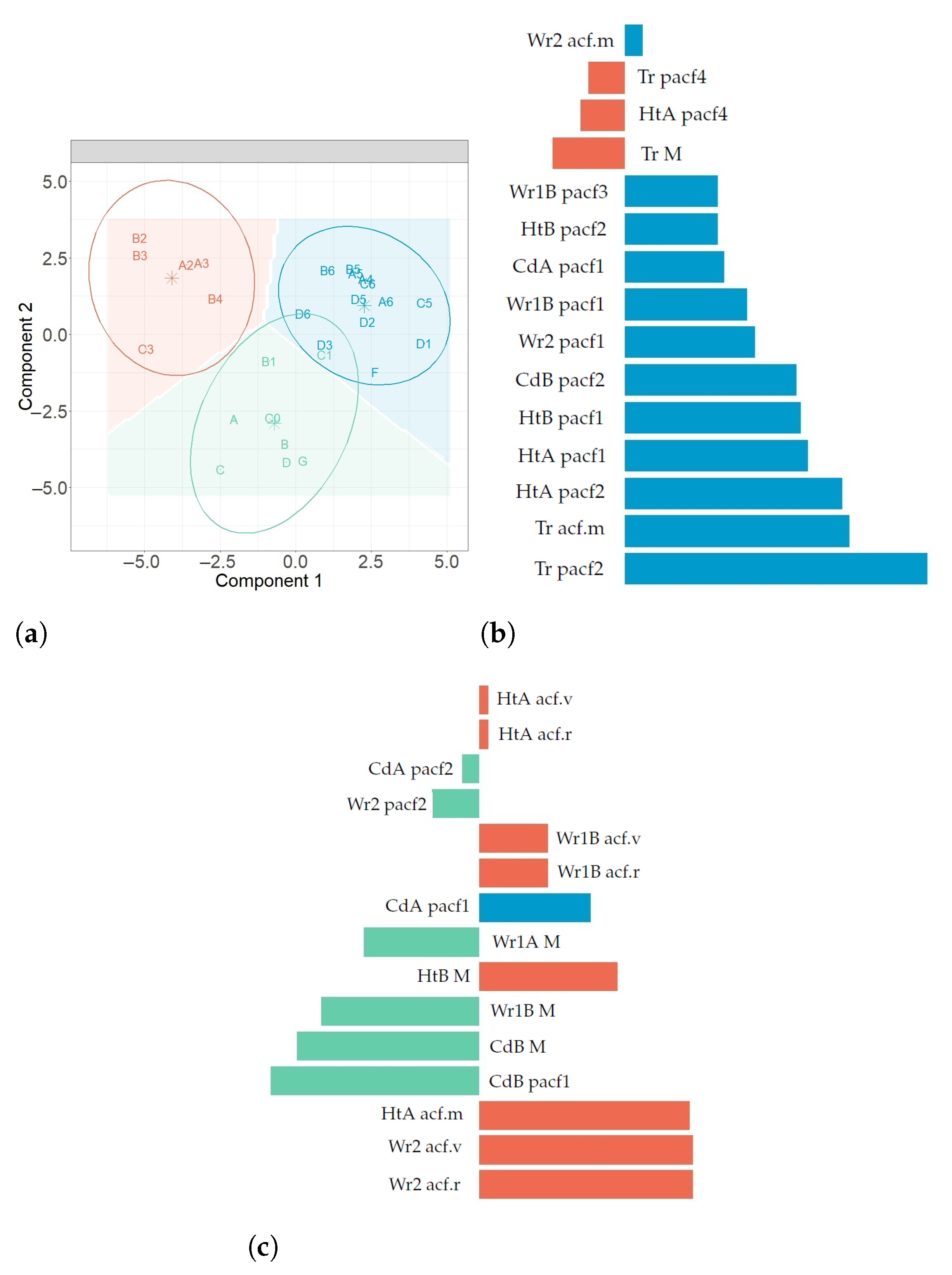

Figure 8.

Results associated with the k-means method. (a) Absolute frequency (number) of indices that indicates the best number of classes in the museum. For example, two classes are selected by seven indices. (b) Classification of sensors installed in the museum, according to the k-means method. Each color (blue, green and orange) corresponds to a different class.

3.3.7. Sensor Classification Using sPLS-DA

Partial Least Squares (PLS) regression [72] is a multivariate regression method which relates two data matrices (predictors and answer). PLS maximizes the covariance between latent components from these two datasets. A latent component is a linear combination of variables. The weight vectors used to calculate the linear combinations are called loading vectors.

Penalties such as Lasso and Ridge [73] have been applied to the weight vectors in PLS for variable selection in order to improve the interpretability when dealing with a large number of variables [74,75,76]. Chung and Keles [77] extended the sparse PLS [76] to classification problems (SPLSDA and SGPLS) and demonstrated that both SPLSDA and SGPLS improved classification accuracy compared to classical PLS [78,79,80]. Le Cao et al. [81] introduced a sparse version of the PLS algorithm for discrimination purposes (sPLS-Discriminant Analysis, sPLS-DA) which is an extension of the sPLS proposed by Lê Cao et al. [74,75]. They showed that sPLS-DA has very satisfying predictive performances and is able to select the most informative variables. Contrary to the two-stages approach (SPLSDA) proposed by Chung and Keles [77], sPLS-DA performs variable selection and classification in a single-step procedure. In order to classify the sensors and improve the interpretability of results, sPLS-DA was applied to the different classification datasets.

Since the original PLS algorithm proposed by Wold [72], many variants have arisen (e.g., PLS1, PLS2, PLS-A, PLS-SVD [82] and SIMPLS [83]), depending on how the regressor matrix () and response matrix () are deflated. Alternatives exist whether and are deflated separately or directly, using the cross product and the Singular Value Decomposition (SVD). A hybrid PLS with SVD is used in the version sPLS-DA [74]. For sPLS-DA, a regressor matrix is denoted as (, , or in this case), with dimension . The number of rows (sensors) is and the columns correspond to the classification variables p (, , or ). A response qualitative vector denoted as has length n and it indicates the class of each sensor, with values coded as 1 (for NW), 2 (SE) and 3 (Sk).

sPLS-DA was carried out using Lasso penalization of the loading vectors associated to [84] using a hybrid PLS with SVD decomposition [85]. The penalty function is included in the objective function of PLS-DA, which corresponds to PLS carried out using a response matrix with values of either 0 or 1, created with the values of response vector . Thus, this vector was converted into a dummy matrix with dimension , being the number of sensors and the number of sensor classes.

Regarding the optimization problem of sPLS-DA, in this case: is a matrix with p variables and n sensors, is a response vector (classes ) and is an indicator matrix, where , with . The sPLS-DA method modeled and as and , where and are matrices that contain the regression coefficients of and on the H latent components associated to , while and are random errors. Furthermore, each component of is a combination of selected variables, where , and each vector was computed sequentially as , where is the orthogonal projection of on subspace and is the solution the optimization problem according to Equation (3), subject to .

The optimization problem minimizes the Frobenius norm between the current cross product matrix () and the loading vectors ( and ), where and is the orthogonal projection of on subspace . Furthermore, , and , defined as , is the Lasso penalty function [75,81]. This optimization problem is solved iteratively based on the PLS algorithm [86]. The SVD decomposition of matrix is subsequently deflated for each iteration h. This matrix is computed as , where and are orthonormal matrices and is a diagonal matrix whose diagonal elements are called the singular values. During the deflation step of PLS, , because and are computed separately, and the new matrix is called . At each step, a new matrix is computed and decomposed by SVD [74]. Furthermore, the soft-thresholding function , with , was used in penalizing loading vectors to perform variable selection in regressor matrix, thus [74].

Rohart et al. [30] implemented an algorithm to solve the optimization problem in Equation (3), where the parameter needs to be tuned and the algorithm chooses among a finite set of values. It is possible to find a value for any null elements in a loading vector. These authors implemented the algorithm using the number of non-zero elements of the loading vector as input, which corresponds to the number of selected variables for each component. They implemented sPLS-DA in the R package mixOmics, which provides functions such as perf, tune.splsda and splsda, in order to determine the number of components and elements different to zero in the loading vector before running the final model.

In order to compare the performance of models constructed with a different number of components, 1000 training and test datasets were simulated and the sPLS-DA method (for a maximum number of 10 components) was tuned by three-fold cross-validation (CV) for . The perf function outputs the optimal number of components that achieve the best performance based on both types of classification error rate (CER): Balanced Error Rate (BER) and the Overall classification error rate (Overall). BER is the average proportion of wrongly classified sensors in each class, weighted by the number of sensors. In most cases, the results from sPLS-DA were better (or very similar) using Overall than when using BER. However, BER was preferred to Overall because it is less biased towards majority classes during the performance assessment of sPLS-DA. In this step, three different prediction distances were used, maximum, centroid and Mahalanobis [30], in order to determine the predicted class per sensor for each of the test datasets. For each prediction distance, both Overall and BER were computed.

The maximum number of components found in the present work was three () when BER was used instead of Overall. Furthermore, when sPLS-DA used classification variables from M2 and M3, two components led to the lowest BER values. Among the three prediction distances calculated (i.e., maximum, centroid and Mahalanobis), it was found that centroid performed better for the classification. Thus, this distance was used to determine the number of selected variables and to run the final model. Details of distances can be found in the Supplementary Materials of [30].

In order to compare the performance of diverse models with different penalties, 1000 training and test datasets were simulated and the sPLS-DA method was carried out by three-fold CV for . The performance was measured via BER, and it was assessed for each value of a grid (keepX) from Component 1 to H, one component at a time. The different grids of values of the number of variables were carefully chosen to achieve a trade-off between resolution and computational time. Firstly, a two coarse tuning grids were assessed before setting a finer grid. The algorithm used the same grids of keepX argument in tune.splsda function to tune each component.

Once the optimal parameters were chosen (i.e., number of components and number of variables to select), the final sPLS-DA model was run on the whole dataset . The performance of this model in terms of BER was estimated using repeated CV.

In summary, three-fold CV was carried out for a maximum of ten components, using the three distances and both types of CER. The optimal number of components was obtained by using the BER and centroid distance. Next, the optimal number of variables was identified by carrying out the second three-fold CV. It was run using BER, centroid distance and values of three grids with different number of variables. Next, when both optimal numbers were obtained, the final model was computed.

Regarding the most relevant variables for explaining the classification of sensors, there are many different criteria [86,87,88]. The first measure selected is the relative importance of each variable for each component and another is the accumulated importance of each variable from components. Both measures were employed in this research.

Lê Cao et al. [81] applied sPLS-DA and selected only those variables with a non-zero weight. The sparse loading vectors are orthogonal to each other, which leads to uniquely selected variables across all dimensions. Hence, one variable might be influential in one component, but not in the other. Considering the previous argument and that the maximum number of components was three (h = 1, 2, 3), Variable Importance in Projection () [86] was used to select the most important ones. It is defined using loading vectors and the correlations of all response variables, for each component. denotes the relative importance of variable for component h in the prediction model. Variables with are the most relevant for explaining the classification of sensors. was calculated using the vip function (mixOmics). Although the assumption of the sparse loading vectors being orthogonal was considered, in practice, some selected variables were common in two components. Then, a second measure of [88] was employed: denotes the overall importance of variable on all responses (one per class) cumulatively over all components. It is defined using the loading vectors and the sum of squares per component. Variables with are the most relevant for explaining the classification of sensors. The selected variables were ranked according to both types of VIPs, which are discussed below for each stage of the time series.

3.3.8. Sensor Classification Using Random Forest Algorithm

The RF algorithm [89] handles big datasets with high dimensionality. It consists of a large number of individual decision trees that were trained with a different sample of classification variables () generated by bootstrapping. The overall prediction from the algorithm was determined according to the predictions from individual decision trees. The class which receives most of the votes was selected as the prediction from each sensor. In addition, it can be used for identifying the most important variables.

An advantage of using the bootstrap resampling is that random forests have an Out-Of-Bag (OOB) sample that provides a reasonable approximation of the test error, which allows a built-in validation set that does not require an external data subset for validation. The following steps were carried out to obtain the prediction (output) from the algorithm, using the different classification data. Firstly, from a classification dataset, B random samples with replacements (bootstrap samples) were chosen, as well as one sample of 17 sensors. Next, from each bootstrap sample, a decision tree was grown. At each node, m variables out of total p were randomly selected without replacement. Each tree used an optimal number of variables that was determined by comparing the OOB classification error of a model, based on the number of predictors evaluated. Each node was divided using the variable that provided the best split according to the value of a variable importance measure (Gini index). Each tree grew to its optimal number of nodes. The optimal value of this hyper-parameter was obtained by comparing the OOB classification error of a model, based on the minimum size of the terminal nodes. From each bootstrap sample, a decision tree came up with a set of rules for classifying the sensors. Finally, the predicted class for each sensor was determined using those trees which excluded the sensor from its bootstrap sample. Each sensor was assigned to the class that received the majority of votes.

If the number of trees is high enough, the OOB classification error is roughly equivalent to the leave-one-out cross-validation error. Furthermore, RF does not produce overfitting problems when increasing the number of trees created in the process. According to previous arguments, 1500 trees were created for all cases to run the algorithm and the OOB classification error was used as an estimate of the test error. Prior to running the final RF algorithm, the optimal values of the number of predictors and the terminal nodes were determined (i.e., those corresponding to a stable OOB error).

In order to select the most important variables, the methodology proposed by Han et al. [90] was used, which is based on two indices: Mean Decrease Accuracy (MDA) and Mean Decrease in Gini (MDG). The combined information is denoted as MDAMDG. Some reasons for using the methodology were: (1) The OOB error usually gives fair estimations compared to the usual alternative test set error, even if it is considered to be a little bit optimistic. (2) The use of both indices is more robust than considering any individual one [90]. MDA corresponds to the average of the differences between OOB error before permuting the values of the variable and OOB error after permuting the values of the variable for all trees. Because a random forest is an ensemble of individual decision trees, the expected error rate called Gini impurity [91] is used to calculate MDG. For classification, the node impurity is measured by the Gini index, and the MDG index is based on this. The former is the sum of a variable’s total decrease in node impurity, weighted by the proportion of samples reaching that node in each individual decision tree in the random forest [92]. The higher is the MDG, the greater is the contribution of a given variable in the classification of sensors. The functions randomForest and importance [93] were used to carried out the RF algorithm.

4. Results and Discussion

The methodology employed consists of using sPLS-DA and RF in order to classify time series of T and to determine the optimal variables for discriminating the series. Both methods had input features calculated from different functions (e.g., sample ACF, sample PACF, spectral density and MR), as well as estimated parameters from the seasonal ARIMA model, Wold decomposition and the last level of smoothing components from the additive SH-W method. Additionally, other features were computed such as the mean, variance and maximum values of functions applied to residuals of the seasonal ARIMA or SH-W models (e.g., sample ACF, sample PACF, spectral density and statistics of the SW and KS normality test, among others). For sPLS-DA, centroid distance and BER were considered when running the final model. Two indicators of VIPs were used to rank the selected variables. The first one measures the relative importance of each relevant variable, per component in the prediction model, while the second indicator evaluates the overall importance of each variable over all components. Additionally, based on the results from sPLS-DA, a methodology was proposed to reduce the number of sensors required for a long-term microclimate monitoring. Regarding RF, the OOB classification error was used as an estimate of the test error and the parameters MDG and MDA were computed as indicators of the variable importance. Both sPLS-DA and RF methods were applied to select and compare the optimal variables that explain the classification of sensors according to values of T.

Before carrying out sPLS-DA and RF, the k-means algorithm with PCA was employed, in order to characterize the different zones in the museum, according to the indoor temperature. Once the three zones (NW, SE and Sk) were established, these classes were used as input for sPLS-DA and RF.

4.1. Identification of Structural Breaks in the Time Series

The supF and CUSUM tests were applied to study the stages. In Wr1, the former test revealed a structural break after the 682nd observation (20 November at 7:00 a.m., p-value = 0.01). In Cd, this test suggests another break after the 1981st observation (13 January at 10:00 a.m., p-value = 0.02). Finally, a structural break was also found in the Ht stage at the 6133rd observation (4 July at 10:00 a.m., p-value = 0.03). Accordingly, the stages Wr1, Cd and Ht were split in two parts (i.e., before and after the structural break). Thus, the following eight stages of T were considered: Wr1A (warm 1 before the structural break, n = 682), Wr1B (warm 1 after the break, n = 808), CdA (cold period before the break, n = 491), CdB (cold stage after the break, n = 1212), Tr (transition, n = 2303), HtA (hot stage before the break, n = 637), HtB (hot stage after the structural break, n = 1690) and Wr2 (warm 2, n = 903).

The CUSUM chart identified a significant shift at the same instances for the stages Wr1, Cd and Ht. The main reason for these structural breaks could have been sudden changes of T outside the museum or possibly modifications in the air conditioning and heating systems. January is usually the coldest month of the year in Valencia, while July and August are the hottest ones. It is reasonable to assume that the configuration of the air conditioning system was modified in these months, in order to maintain an appropriate microclimate inside the archaeological site.

According to the structural breaks, all time series were split into eight stages, and the four methods explained in the next sections were applied to each stage. This step is necessary to avoid possible problems with the properties of the estimated parameters of the models applied [94]. The four methods (M1–M4) were carried out separately for each stage of the 27 time series of or : Wr1A, Wr1B, CdA, CdB, Tr, HtA, HtB and Wr2. As an exception, in Method 2, apart from modeling each stage separately, the complete time series was also considered.

4.2. Calculation of Classification Variables—M1–M4

For Method 3, for the most successful seasonal ARIMA models, both tests (KS and SW) rejected the normality hypothesis of the errors in 100% of cases. Furthermore, all Q-Q normal score plots displayed that residuals were not falling close to the line at both extremes. ADF test suggests that the errors are stationary in all cases. According to the LBQ test, the errors are independent (lags from to ) as maximum in 77.00% of the 27 sensors (in stage Wr1A) and as minimum 0.0% (HtB). The LM test suggests the absence of Arch effects as maximum 92.52% (Wr1A) and as minimum 3.70% (Wr1B) (see Table 1).

For Method 4, for the most successful seasonal ARIMA models (see Table 2), both tests of normality rejected the hypothesis of normal distribution for the errors in 100% of models. The ADF test suggests that errors are stationary in all cases. The analysis of residuals of the different fitted models indicates that the condition of not autocorrelation (WN) is not fulfilled in all cases. In particular, according to the LBQ test, errors are autocorrelated (up to lag 24) at least in 25.93% of the models (for stage HtB) and the maximum was 92.59% (Wr1A). The LM test suggests absence of Arch effects, with a maximum of 96.30% (Wr1A) and a minimum of 3.70% (Tr) (see Table 1). In order to extract further information, the same features computed in M3 for the residuals (Type 7 variables) were also estimated in M4 and used as classification variables.

Table 2.

The most successful seasonal ARIMA model per sensor for different stages, with and .

The results of Methods 1–4 were arranged as matrices, denoted as (27 sensors × 88 variables), (27 sensors × 296 variables), (27 sensors × 143 variables) and (27 sensors × 139 variables), respectively. The regressor matrices to contained the following percentages of missing values: 5.28%, 5.66%, 3.55% and 8.61%, respectively.

4.3. Determination of Number of Classes and Class per Sensor Using PCA and K-Means Algorithm

The best option appeared to be (coincidence of 8 out of the 27 indices), followed by and (coincidences of 7 and 5, respectively), and then and (Figure 8a). According to previous research, there is a pronounced temperature gradient at the museum, particularly in summer, caused by the greenhouse effect of the skylight. Then, each cluster or class was expected to be related to the distance of each sensor from the skylight, among other factors, such as the influence of the weather conditions outdoors and the effect of the air conditioning system. Furthermore, due to the large size of the museum (2500 ) and the temperature gradient that exists at the entrance and below the skylight, it seems better to consider three zones instead of just two. Hence, was the number of clusters used for the k-means algorithm.

The k-means method classified sensors C0, C1, A4, A5 and F in the SE zone. However, by checking their position on the map of the museum (Figure 8b), these sensors could be regarded in the boundary between the NW and SE zones. Hence, it should be discussed whether such classification is appropriate, or if they should be regarded within the NW zone. In order to study this issue, two classifications were analyzed using sPLS-DA: (1) by considering A4, A5 and F in the NW zone; and (2) by locating C0 and C1 in the NW zone. For both cases, the rate of misclassified sensors was computed by means of sPLS-DA. The error rates for the classification from the k-means algorithm were: 0.25 (M1), 0.30 (M2), 0.29 (M3) and 0.42 (M4). For Case (1), the classification error rates were: 0.15 (M1), 0.19 (M2), 0.31 (M3) and 0.35 (M4). For Case (2), the error rates were: 0.21 (M1), 0.23 (M2), 0.30 (M3) and 0.41 (M4). It turns out that the lowest error rates were found for Case (1). Thus, sensors A4, A5 and F were considered as part of the NW zone for the next sections. Finally, 13 sensors were classified in the NW zone (A4, A5, A6, B5, B6, C5, C6, D1, D2, D3, D5, D6 and F), eight in the SE zone (A, B, B1, C, C0, C1, D and G) and six in the Sk zone (A2, A3, B2, B3, B4 and C3). The proposed classification of sensors is shown in Figure 1a, which depicts an association between T values and the three zones of the museum: NW, SE and Sk.

4.4. Sensor Classification Using sPLS-DA

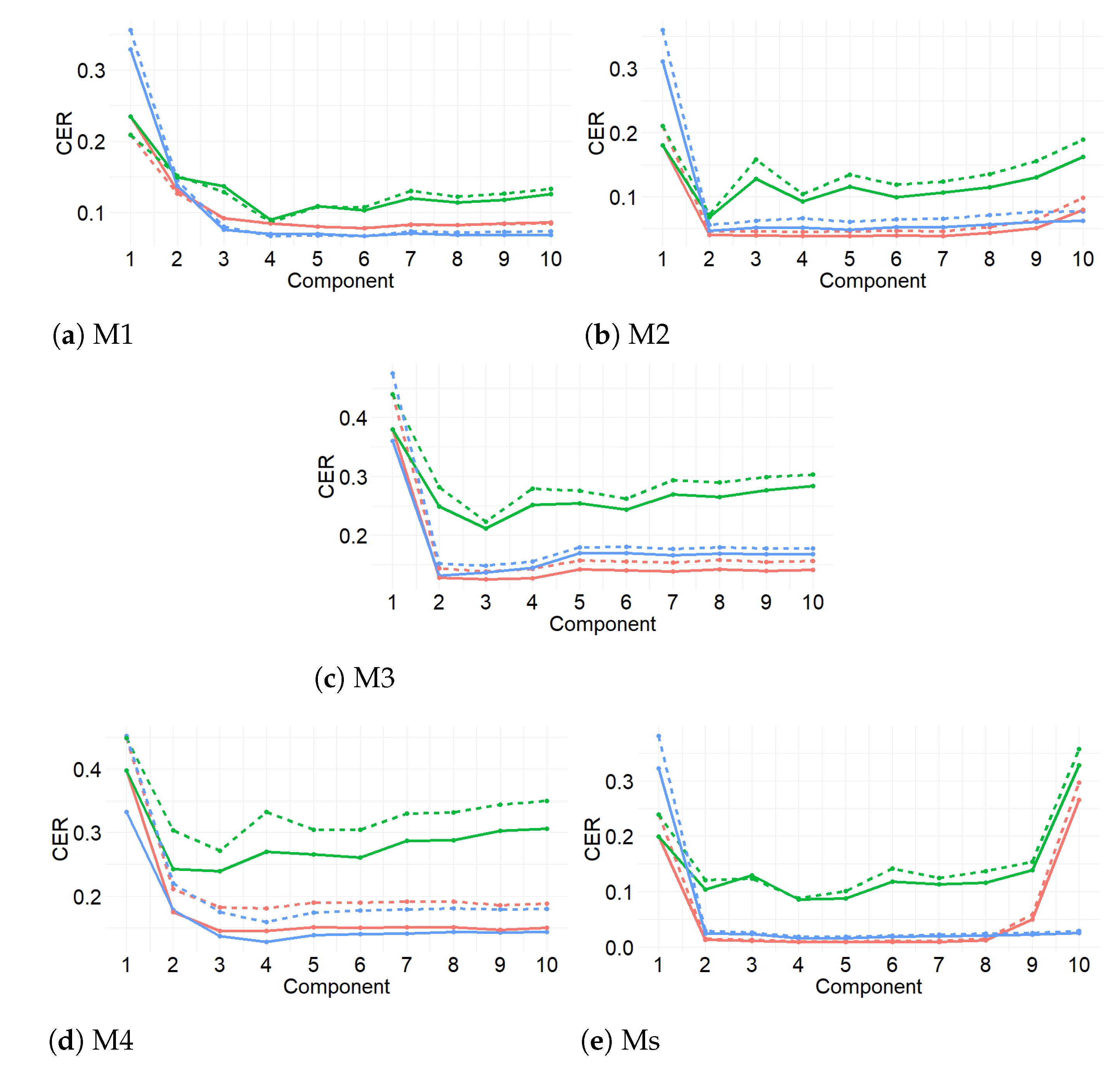

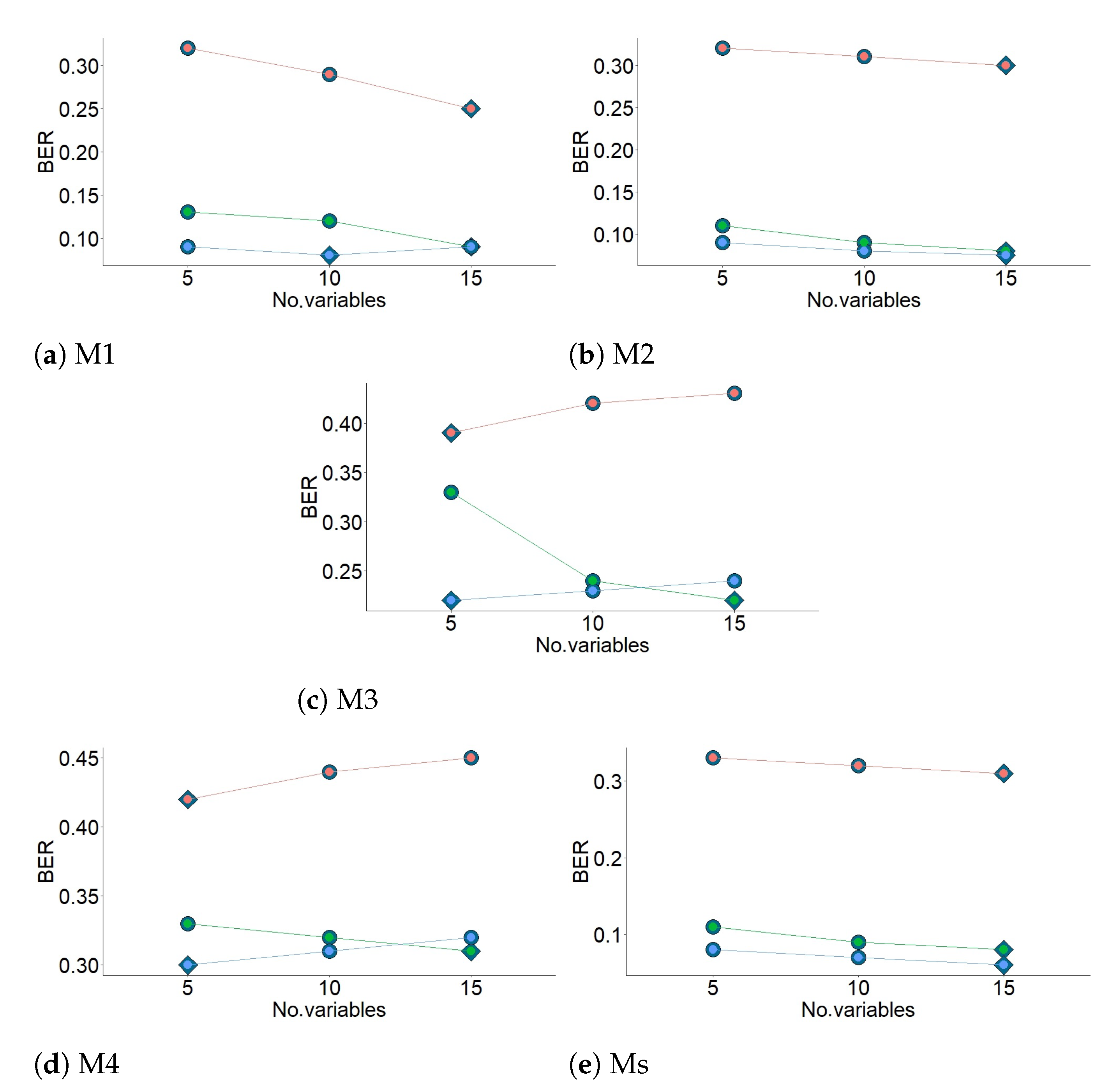

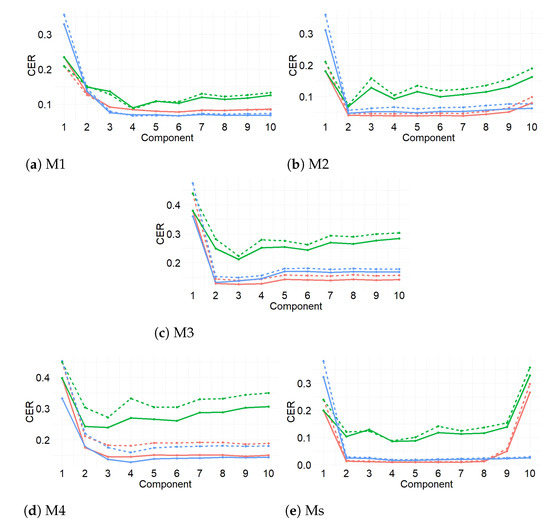

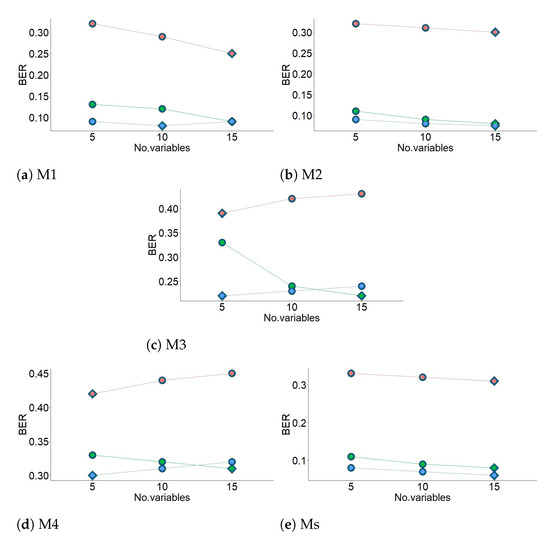

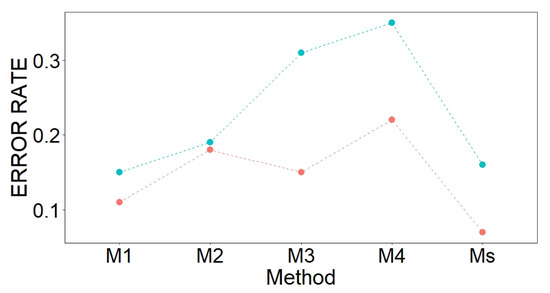

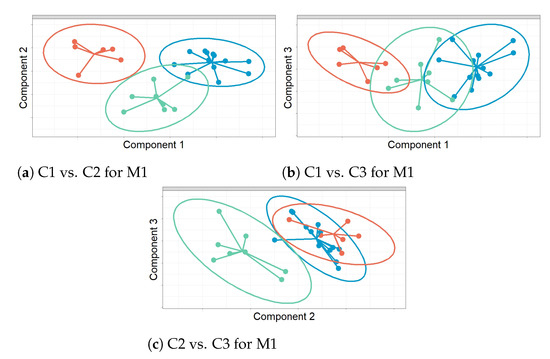

Figure 9 displays the results from the first three-fold CV for the four methods (M1–M4) and when using variables from all methods (Ms). According to the results, centroid distance performed the best for the first two components, in the case of M1, M4 and Ms. For M1 and Ms, Overall or BER performed the best for both classification error rates. For the other methods (M2, M3 and M4), Overall was the best. The centroid distance and BER from these results were selected as input in the next step of the method (see Figure 10). The values of BER and centroid distance suggested that three components are enough to classify the sensors. Figure 10 shows the results from the second three-fold CV for the final grid (5, 10 and 15 variables). The results suggest that the number of variables for the first component of each method were the following: 15 (M1 and M2), 5 (M3 and M4) and 15 for Ms. The information displayed in Figure 9 and Figure 10 (centroid distance, BER and number of variables per component) was used to apply the final model.

Figure 9.

Evaluation of the PLS-DA performance for the classification of sensors into three categories. Vertical axes indicate the classification error rate (CER) for each prediction distance as a function of the number of components (horizontal axis) for: M1 (a); M2 (b); M3 (c); M4 (d); and using the variables from all methods (e). Three types of prediction distances were considered: Mahalanobis (green lines), maximum (blue lines) and centroid (red lines). Two types of CER were computed: balanced error rate (dashed lines) and overall error rate (solid lines). PLS-DA was carried out using repeated three-fold CV 1000 times.

Figure 10.

Evaluation of the PLS-DA performance (considering three components) for the classification of sensors into three categories. Vertical axes indicate the Balance error rate (BER) per component (orange lines, Component 1; green lines, Component 2; and blue lines, Component 3). BER values were computed across all folds using 5, 10 or 15 variable (horizontal axes) for eachmethod: M1 (a);M2 (b);M3 (c);M4 (d); and all methods (Ms) (e). The three-fold CV technique was run 1000 times, using maximum distance prediction. Diamonds highlight the optimal number of variables per component.

The notation used in this study are the estimates of the following parameters: mean, range, median and variance of ACF values (acf.m, acf.r, acf.md and acf.v); PACF at lags 1–6 (pacf1–pacf6); mean of the time series (M); statistics of the KM test (kolg.t); statistics of the SW test (shap.t); maximum values of the periodogram (spec.mx); frequency of the maximum values of the periodogram (freq); variance of the residuals (res.v); SSE (sse); seasonal components (s1–s24); level (a); slope (b); coefficients of the Wold decomposition (psi1–psi5); the first AR term (ar1); the first MA term (ma1); the first SMA term (sma1); the first SAR term (sar1); and the 17th prediction of T, which was denoted as pred.17.

The most relevant variables per method are the estimates of the following parameters:

- ☐

- Selected variables from M1: PACF at lags 1–4, MR and parameters of the sample ACF (mean, range, median and mean) (Table 3 (a)).

- ☐

- Selected variables from M2: Level, slope, some seasonal components (5, 8, 10–12, 16–21 and 23), mean and median of sample ACF (residuals), SSE (residuals) and maximum of the periodogram (residuals) (Table 3 (b)).

- ☐

- Selected variables from M3: Parameters of MA, SAR and SMA of seasonal ARIMA models, PACF at lags 1–5 (residuals), mean and median of sample ACF (residuals), statistic of KM normality test (residuals) and frequency for the maximum of the periodogram (residuals) (Table 4 (a)).

- ☐

- Selected variables from M4: The first four Wold coefficients, mean and median of sample ACF (residuals), statistics of the SW and KM normality tests (residuals), PACF at first five lags (residuals), variance (residuals) and maximum of the periodogram (residuals) (see Table 4 (b)).

- ☐

- Selected variables when using the total set of variables from the four methods (some values are highlighted in bold and blue in Table 3 and Table 4): Slope (M2), some seasonal components (2, 3, 5, 8, 11, 12, 14, 16–18 and 21–23) (M2); SSE (M2); Wold Coefficient 1 (M4); mean (M1–M4), median (M2), range (M1 and M2) and variance (M1 and M2) of sample ACF values; and maximum of the periodogram (M2–M4).

Table 3.

Selected variables (V) per component (C) from sPLS-DA. Variables highlighted in bold and blue correspond to selected variables for Ms.

Table 3.

Selected variables (V) per component (C) from sPLS-DA. Variables highlighted in bold and blue correspond to selected variables for Ms.

| C1 | C2 | C3 | ||||

|---|---|---|---|---|---|---|

| Stage | V | Stage | V | Stage | V | |

| (a) For Method 1 | ||||||

| 1 | Tr | pacf2 | Wr2 | acf.r | CdA | pacf3 |

| 2 | Tr | acf.m | Wr2 | acf.v | Wr1A | pacf4 |

| 3 | HtA | pacf2 | HtA | acf.m | CdA | pacf4 |

| 4 | HtA | pacf1 | CdB | pacf1 | Wr1A | acf.r |

| 5 | HtB | pacf1 | CdB | M | Wr1A | acf.v |

| 6 | CdB | pacf2 | Wr1B | M | Wr1A | rMh |

| 7 | Wr2 | pacf1 | HtB | M | Wr1B | rMh |

| 8 | Wr1B | pacf1 | Wr1A | M | CdA | rMh |

| 9 | CdA | pacf1 | CdA | pacf1 | CdB | rMh |

| 10 | HtB | pacf2 | Wr1B | acf.r | Tr | rMh |

| 11 | Wr1B | pacf3 | Wr1B | acf.v | HtA | rMh |

| 12 | Tr | M | Wr2 | pacf2 | HtB | rMh |

| 13 | HtA | pacf4 | CdA | pacf2 | Wr2 | rMh |

| 14 | Tr | pacf4 | HtA | acf.r | ||

| 15 | Wr2 | acf.m | HtA | acf.v | ||

| (b) For Method 2 | ||||||

| 1 | HtA | sse | HtA | acf.m | ||

| 2 | Tr | sse | HtA | acf.md | ||

| 3 | Tr | s18 | HtA | s12 | ||

| 4 | Tr | b | HtA | s11 | ||

| 5 | Tr | s16 | HtA | acf.r | ||

| 6 | HtB | sse | CdA | acf.v | ||

| 7 | Tr | s17 | HtA | acf.v | ||

| 8 | HtB | s21 | HtA | s23 | ||

| 9 | Tr | spec.mx | CdA | s14 | ||

| 10 | Wr2 | s20 | CdA | s5 | ||

| 11 | HtB | acf.m | Tr | a | ||

| 12 | Wr1B | s12 | CdA | s10 | ||

| 13 | Wr2 | acf.md | HtA | s8 | ||

| 14 | HtB | s19 | HtA | s10 | ||

| 15 | HtB | s23 | Wr1A | acf.m | ||

Table 4.

Selected variables (V) per component (C) from sPLS-DA. Variables highlighted in bold and blue correspond to selected variables for Ms.

Table 4.

Selected variables (V) per component (C) from sPLS-DA. Variables highlighted in bold and blue correspond to selected variables for Ms.

| C1 | C2 | C3 | ||||

|---|---|---|---|---|---|---|

| Stage | V | Stage | V | Stage | V | |

| (a) For Method 3 | ||||||

| 1 | Tr | acf.m | CdA | acf.m | ||

| 2 | HtA | res.v | CdA | pacf4 | ||

| 3 | Tr | ma1 | CdA | pacf5 | ||

| 4 | HtB | ma1 | Wr2 | pacf4 | ||

| 5 | Tr | kolg.t | Tr | sar2 | ||

| 6 | CdB | pacf2 | ||||

| 7 | HtB | pacf1 | ||||

| 8 | Wr2 | sar2 | ||||

| 9 | CdA | sar1 | ||||

| 10 | HtA | sma1 | ||||

| 11 | HtA | pacf3 | ||||

| 12 | HtA | freq | ||||

| 13 | HtA | pacf1 | ||||

| 14 | HtB | acf.md | ||||

| 15 | Wr1A | pacf5 | ||||

| (b) For Method 4 | ||||||

| 1 | HtA | psi1 | HtA | pacf5 | Wr1B | psi1 |

| 2 | HtA | spec.mx | HtB | pacf1 | CdB | acf.m |

| 3 | HtA | res.v | Wr2 | acf.v | CdB | spec.mx |

| 4 | Tr | psi1 | HtA | shap.t | Wr2 | acf.m |

| 5 | Wr1A | acf.m | HtA | acf.m | Wr2 | shap.t |

| 6 | Wr2 | acf.r | ||||

| 7 | Tr | pacf1 | ||||

| 8 | Tr | acf.md | ||||

| 9 | Wr1B | psi4 | ||||

| 10 | CdA | pacf5 | ||||

| 11 | Tr | psi3 | ||||

| 12 | CdA | acf.md | ||||

| 13 | Wr2 | psi2 | ||||

| 14 | Tr | kolg.t | ||||

| 15 | Wr2 | kolg.t |

Based on the information in Table 3 and Table 4, the two most influential stages of the time series are highlighted in bold in Table 5 per component and method, or just one stage when it had a value greater than 50%. It can be deduced from the results in Table 5 that the two most important stages for classifying sensors were HtA and Tr, which is intuitively appealing because important temperature fluctuations occur in summer due to the greenhouse effect caused by the skylight.

Table 5.

Percentages of selected variables per stage of the time series for each component (C) and each method (M). Values were computed according to the information contained in Table 3 and Table 4 (e.g., the value 60.0% for C2 of M2 means that 9 out of the 15 selected variables correspond to HtA, according to Table 3 (b)). The two highest values of each column are highlighted in bold and blue, but only one is selected in case of a percentage > 50%.

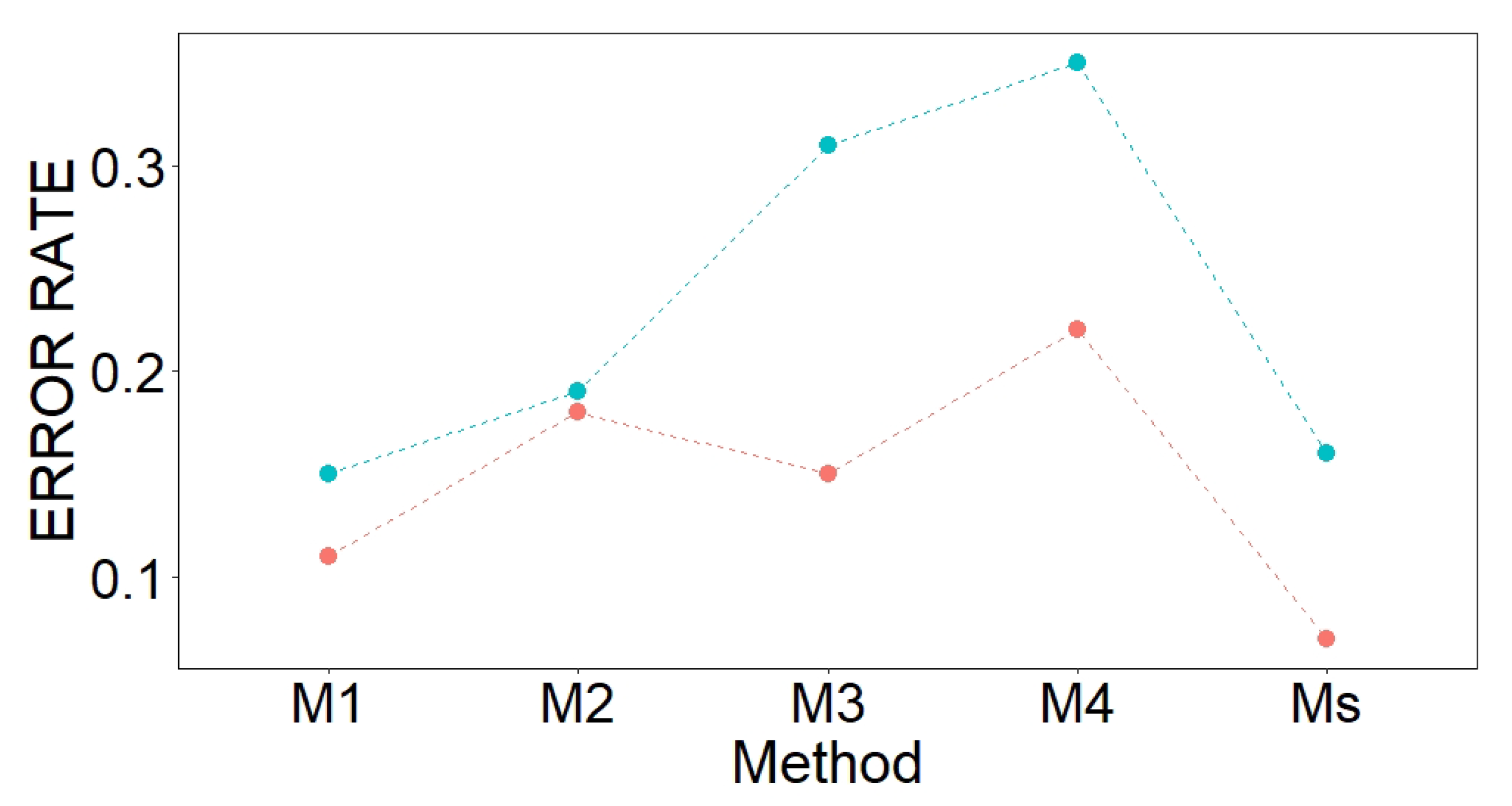

Figure 11 displays the estimations of the average BER over all components from the sPLS-DA. According to the results, M1 obtained the best performance, followed by M2.

Figure 11.

Error rates derived from the sPLS-DA and RF algorithms. Red points are OOB classification error rates per method, based on different sets of classification variables: M1, M2, M3, M4 and all variables (Ms). Blue points are mean values of BER for all the components, per method, for sPLS-DA.

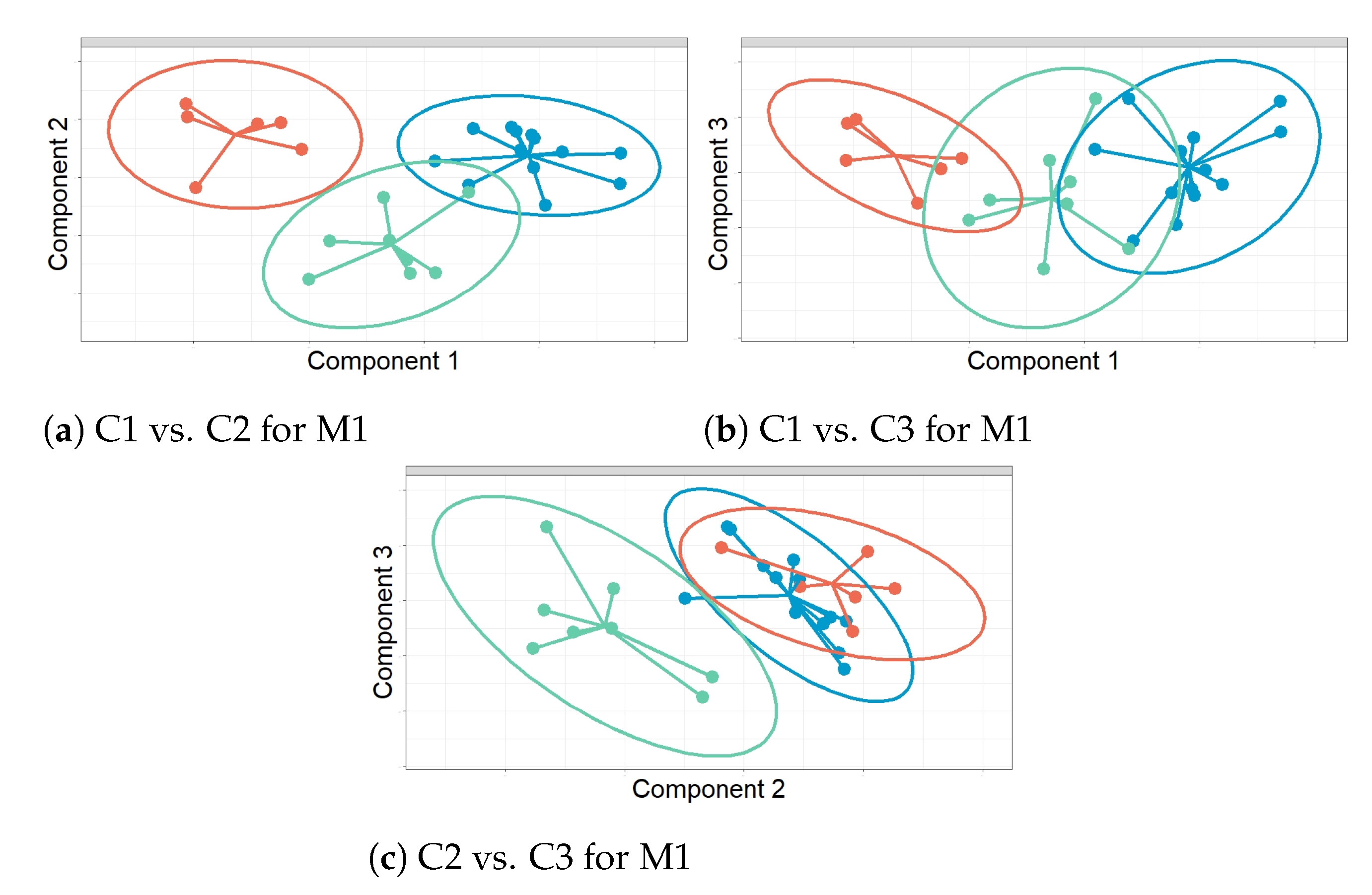

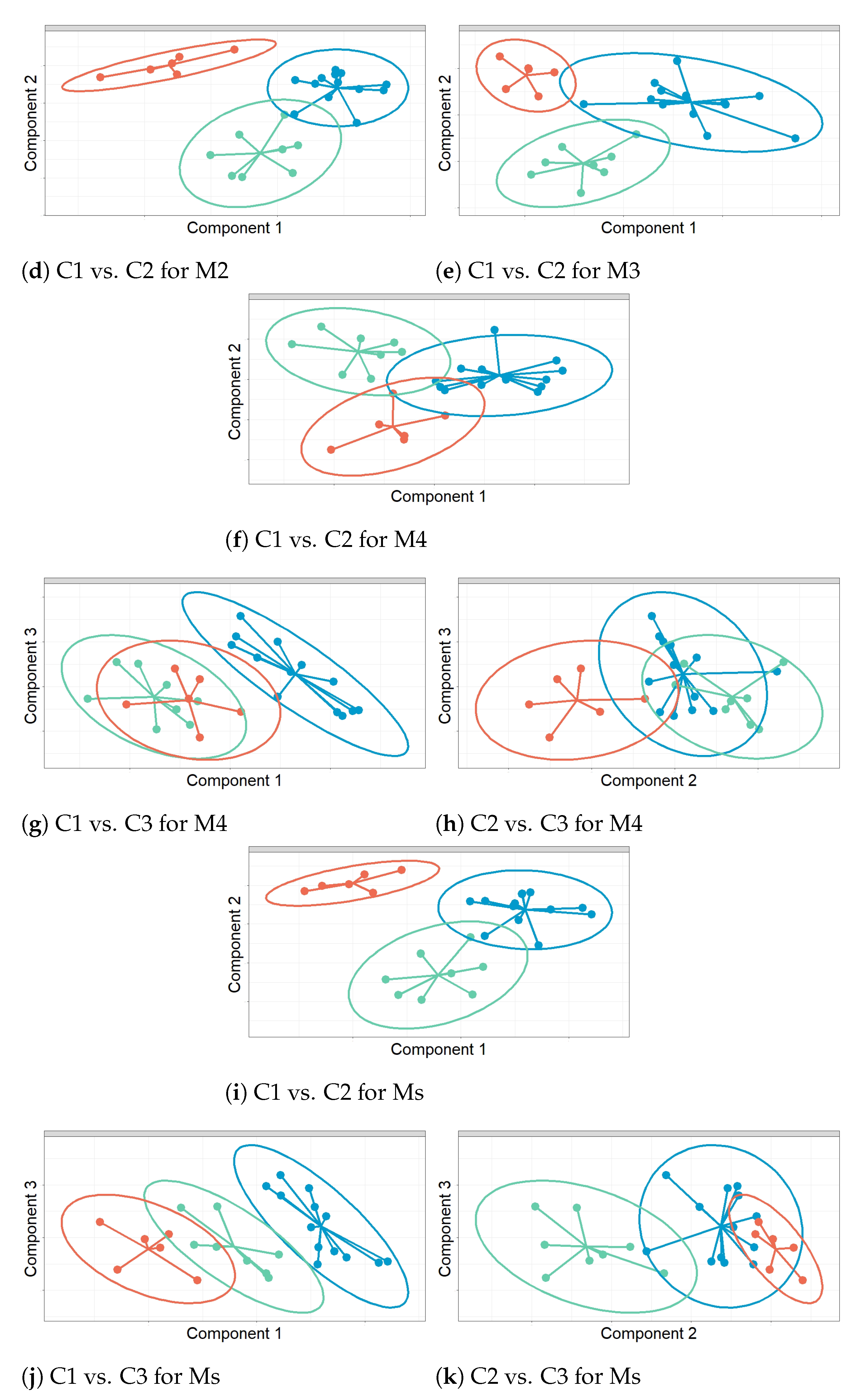

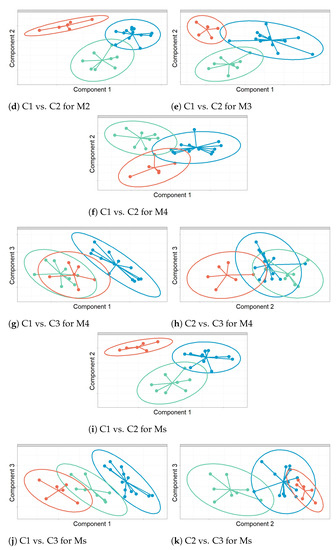

For Component 1 (C1) versus Component 2 (C2) from sPLS-DA: C1 displays a clear discrimination between sensors in the Sk class against NW and SE for both M1 and M2 (see Figure 12a,d). This fact is more clear for M2. For M2, C2 clearly separates Sk vs. SE, as well as Sk vs. NE (see Figure 12a). For M3, C1 discriminates Sk vs. NW satisfactorily, and C2 shows the same performance for this method (see Figure 12e). For M4, C1 properly separates SE vs. NW, while C2 discriminates between SE and Sk (see Figure 12d). When using all variables (Ms), C1 shows an adequate discrimination of Sk vs. NW and C2 between Sk vs. SE (see Figure 12i). For C1 vs. C3 from sPLS-DA: For M1, C1 clearly separates Sk against NW (see Figure 12b). For M4, C1 discriminates NW against the other classes, but Sk and SE appear overlaid (see Figure 12g). With C3, the classes could not be discriminated. For C2 vs. C3 from sPLS-DA: C2 properly separates SE against the other clusters in M1 and Ms, but the other classes appear overlaid (see Figure 12c,k). For M4, C2 shows a good discrimination of Sk against the other classes (see Figure 12h), but C3 did not yield any separation.

Figure 12.

Projection of sensors over the three relevant components (C1–C3) from sPLS-DA, per method (M1–M4) or when using all variables (Ms). Graphs show discrimination of the sensors, according to three classes: North Western (NW), South Eastern (SE) and Skylight (Sk). Color codes: NW sensors in blue, Sk in orange and SE in green. Each graph displays a confidence ellipse for each class (at a confidence level of 95%), in order to highlight the strength of the discrimination.

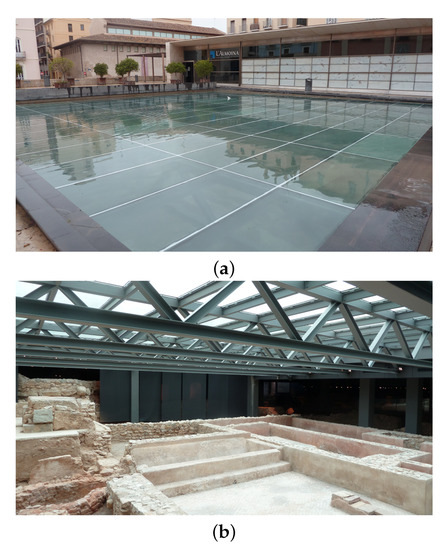

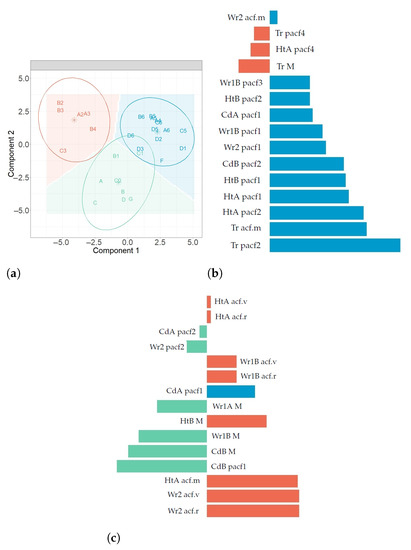

Figure 13 displays the results from sPLS-DA for M1. The scatter plot in Figure 13a shows the projection of sensors over Components 1 and 2. According to the three confidence ellipses (at a confidence level of 95%) and their centroids, each class can be characterized using the centroids. Furthermore, the bar plots in Figure 13b,c represent the loading weights of the selected variables for C1 and C2, respectively. A different color was used to indicate the class (SE, NW and Sk) in which the variable yields the maximum level of expression on average, which was computed to have an approximate assessment of the influence of variables in each class, per component. The selected variables that characterize each class were the following: for the NW class, the mean of values ACF and PACF at lags 1–3; for the SE zone, PACF at lags 1 and 2 and the mean of the time series; and for the Sk category, the mean, range and variance of ACF values and the mean of the time series.

Figure 13.

(a) Projection of sensors over components C1 and C2 from sPLS-DA for M1. The graph displays a good discrimination of the sensors according to the classes. They are color coded according to the zone where the sensor is located: NWin blue, SE in green and Sk in orange. The most important variables for (b) C1 (c) and C2, according to the absolute value of their coefficients, are ordered from bottom to top. The color corresponds to the zone in which the variable yields the highest mean component value.

4.5. Sensor Classification Using Random Forest Algorithm

Figure 11 displays the values of OOB classification error. The best results were achieved from Ms and the second best result from M1. Table 6 shows the selected variables in descending order, according to MDAMDG. The most relevant variables per method are the estimates of the following parameters:

- ☐

- Selected variables from M1: Mean, range and variance of the sample ACF values, the first four values of sample PACF, the maximum value of the periodogram and the mean.

- ☐

- Selected variables from M2: Level, slope and 18 seasonal components (2, 3, 7–12, 14 and 16–24) from sH-W method, SSE, maximum of the periodogram values, statistic of the KS normality test and 17th prediction of T.

- ☐

- Selected variables from M3: First term of the AR, MA and SMA components of the seasonal ARIMA models, sample PACF at lags 1, 2 and 5, mean and median of the sample ACF values, residual variance, maximum of the periodogram values and statistic of the KS normality test.

- ☐

- Selected variables from M4: Coefficients 1, 3, 4 and 5 from the Wold decomposition; the first five values of the sample PACF; the mean, median, range and variance of the sample ACF values; residual variance; maximum of the periodogram values of the residuals; and statistics of both the SH and KS normality tests.

- ☐