1. Introduction

Industrial automation had become quite mature by the 1970s, and since then many companies have gradually introduced automated production technology to assist their production lines [1]. Automating the production line has made the manufacturing process more efficient, data-driven, and unified. Automation technologies have heavily influenced many processes, from loading and unloading objects to sorting, assembly, and packaging [2].

In 2012, the German government proposed the concept of Industry 4.0 [3]. Since then, many companies have started to develop automation technologies. These range from upper-layer software and hardware providers, through middle-layer companies that store, analyze, and manage data and provide solutions, to lower-layer companies that apply these technologies in their factories [4]. In addition, smarter integrated sensing and control systems based on existing automation technologies have begun to evolve as part of the development of highly automated or even fully automated production models [5].

Modern automation technology is mainly based on a variety of algorithms and robot arm control systems [6]. Robotic arms offer high precision, fast motion, and versatile movement. In advanced applications, additional sensing modules, such as vision modules, distance sensors, or force sensors, can be integrated with the robotic arm to give robotic systems human-like senses [7].

Robot systems in general can “see” through various vision systems [8]. Vision helps robots detect and locate objects, which speeds up the process, improves processing accuracy, and expands the range of applications for automated systems. By the type of data obtained, vision systems can be roughly divided into 2D vision systems (RGB/mono cameras), which capture planar images [9], and 3D vision systems (RGB-D cameras), which capture depth images [10]. Each has its own advantages, disadvantages, and suitable application scenarios. In industrial manufacturing automation, 2D vision systems are commonly used because they provide high precision and are easy to install and use.

The robotic arm with a 2D vision camera is the most commonly used setup in today’s industrial automation applications. Pick and place [11] and random bin picking [12] are among the most frequent applications that combine an industrial camera with a manipulator. Several open-source and commercial toolboxes, such as OpenCV [13] and Halcon [14], are already available for vision systems. However, regardless of how mature the software support is, much of the hardware setup still requires human intervention. In particular, the camera must be calibrated before operation so that important parameters, such as the focal length and image center (intrinsic parameters), the extrinsic parameters, and the lens distortion [15,16], can be estimated and used by subsequent image processing algorithms. Camera calibration is a challenging and time-consuming process for operators unfamiliar with the characteristics of the cameras on the production line. It is a major issue that is not easily solved in the automation industry and complicates the introduction of production line automation. Traditional vision-based methods [17,18,19,20] require 3D fixtures corresponding to a reference coordinate system to calibrate a robot. These methods are time-consuming and inconvenient, and may not be feasible in some applications.

Depending on whether the camera is mounted on the robotic arm or installed in the working environment, the calibration is categorized as eye-in-hand (camera-in-hand) or eye-to-hand (stand-alone) calibration [21]. The purpose of hand–eye calibration is similar to that of robot tool center point (TCP) calibration, which obtains the homogeneous transformation matrix between the robot end-effector and the tool end-point [22]. However, TCP calibration can be performed by touching a fixed point with the tool from different positions, whereas hand–eye calibration cannot, because the actual image center cannot be used as a physical contact point. To obtain the hand–eye transformation matrix, vision systems therefore use other methods, such as the parametrization of a stochastic model [23] and dual-quaternion parameterization [24]. In self-calibration methods, the camera is rigidly attached to the robot end-effector [25]. A vision-based measurement device and a posture measuring device have been used in a system that captures robot position data to model manipulator stiffness [26] and estimate kinematic parameters [27,28,29]. These optimization techniques rely on the measured end-effector positions. However, they require offline calibration, which is a limitation. In such systems, accurate calibration of both the camera model and the robot kinematic model is required for accurate positioning. The camera calibration procedure required to achieve high accuracy is, therefore, time-consuming and expensive [30].

Positioning of the manufactured object is an important factor in industrial robot arm applications. If the object is not correctly positioned, it may cause assembly failure or damage the object. Consequently, the accuracy of object positioning often indirectly affects the processing accuracy of the automated system. Although numerous studies have been conducted to determine object position accurately using vision systems [31,32,33], to our knowledge no system has been reported that uses an offline programming platform to perform automated optical inspection (AOI) on a production line.

Table 1 compares the performance of the proposed system with that of existing vision-based position correction systems. The proposed system expedites the development of a vision-based object position correction module for a robot-guided inspection system, allowing the robot-guided inspection system to complete AOI tasks automatically on a production line regardless of the object’s position. In the proposed system, the AOI targets are automatically mapped onto a new object for the inspection task, whereas existing vision systems were developed to locate an object’s position but not for production line use. These systems require skilled operators, and integrating them with other systems is tedious. Furthermore, their user-defined robot target positions cannot be updated for inspection when the object’s position changes, which makes it more difficult to perform tasks on a production line. The proposed position correction system is capable of self-calibration and can update the object position and AOI targets automatically on the production line.

Here, we propose a novel approach to automating manufacturing systems for various applications that solves the object position error encountered on production lines. We developed an automated position correction module that locates an object’s position and adjusts the robot pose and position according to the detected displacement or rotation error. The proposed position correction module is based on automatic hand–eye calibration and the Perspective-n-Point (PnP) algorithm. The automatic hand–eye calibration is performed using a calibration board to reduce manual error, whereas the PnP algorithm calculates the object position error from artificial marker images. The position correction module identifies the object’s current position and then measures and adjusts the robot work points for a defined task. This module was integrated with the autonomous robot-guided inspection system to build a smart system that performs AOI tasks on the production line. The robot-guided inspection system, based on an offline programming (OLP) platform, was developed by integrating a 2D/3D vision module [34]. In addition, the position correction module maps the defined AOI target positions onto each new object, so they need not be redefined unless the targets themselves change. The effectiveness and robustness of the proposed system were demonstrated in two tests in which captured images were compared with sets of standard images. The system minimizes human effort and time to expedite AOI setup on the production line, thereby increasing productivity.

The remainder of this paper is organized as follows: in Section 2, we give an overview of the integration of the position correction system with the robot-guided inspection architecture; in Section 3, we provide an overview of the position correction system and introduce the proposed method; in Section 4, we detail the integration of the position correction module with the OLP platform and report the system performance; in Section 5, we present the conclusions.

2. System Overview

The robot-guided inspection system was designed and developed with the vision module by Amit et al. [

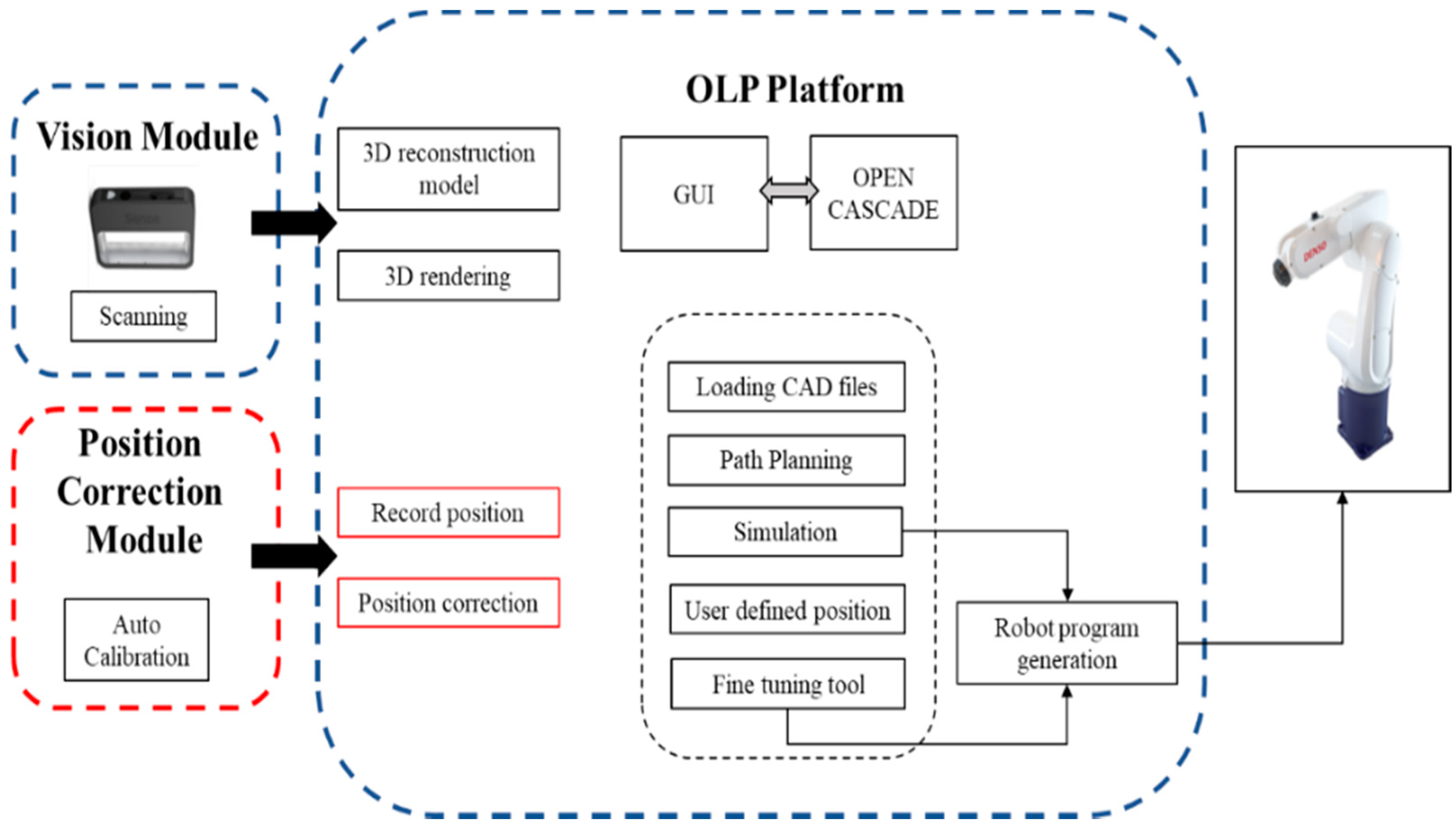

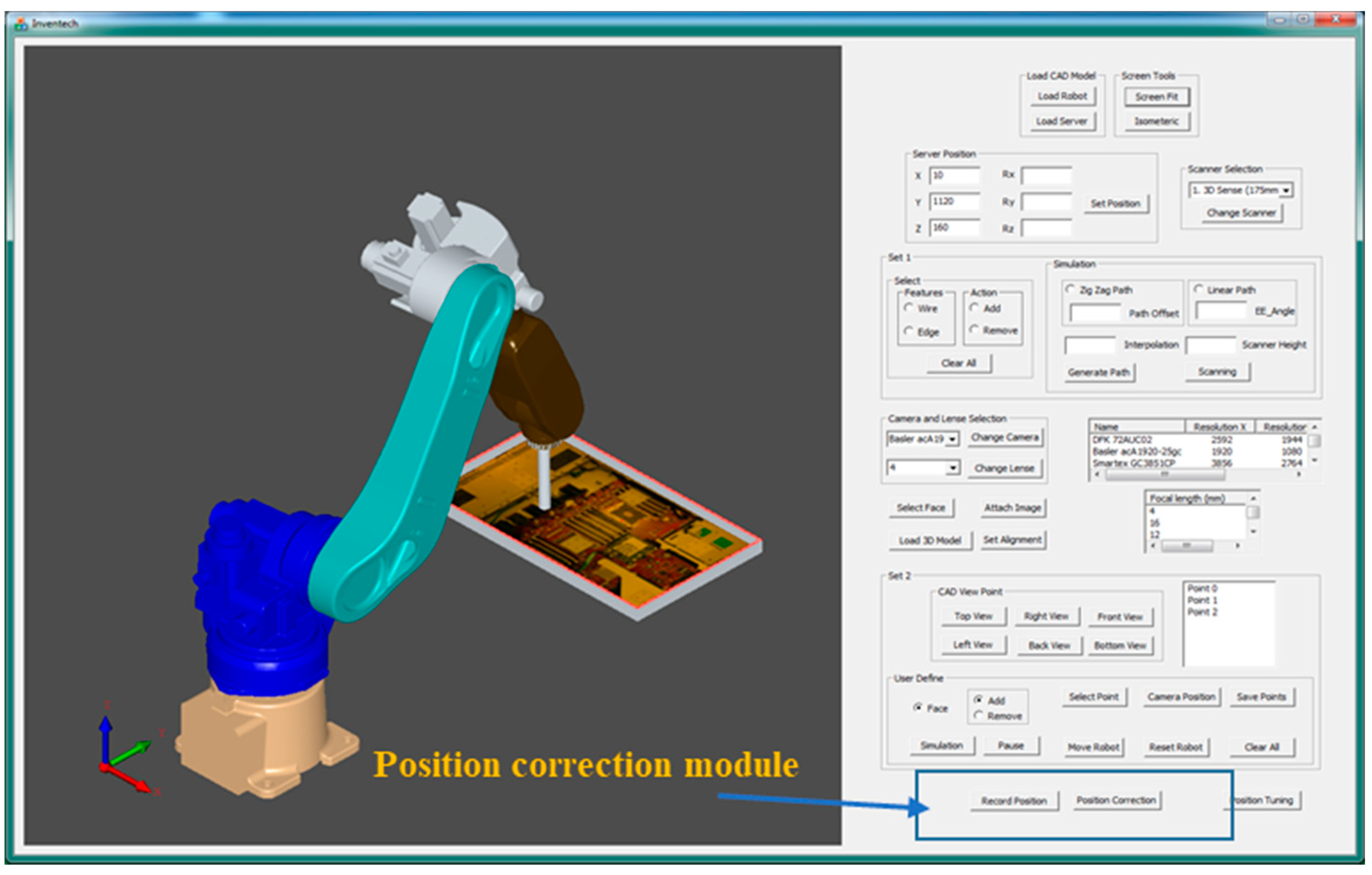

34]. The robot-guided inspection system, shown in the blue dashed boxes in

Figure 1, consists of an OLP platform and vision module. The OLP platform was designed and developed using OCC open source libraries to generate a robot trajectory for 3D scanning and to define AOI target positions using CAD information [

35]. The robot-guided inspection efficiently performs AOI planning tasks using only scanned data and does not require a physical object or an industrial manipulator. This developed system can also be used in different production lines based on robot-guided inspection. However, the developed system is not comprehensive enough to be used in an assembly line to perform reliable AOI tasks. Therefore, the robot-guided inspection system was integrated with the position correction module (red dashed boxes in

Figure 1) to resolve issues related to object displacement or rotation errors in a production line for AOI tasks.

Figure 1 presents a complete overview of the proposed system architecture, which includes the OLP platform, the vision module, and the position correction module. In this study, the primary objective of the position correction system was to calculate the rotation and translation of each new object on the production line using artificial markers on the object. Moreover, the proposed system was developed to minimize the complexity of hand–eye calibration and position correction on the production line. We also aimed to ensure that users of the integrated autonomous robot-guided system do not have to redefine the AOI target positions unless the targets change, which saves time and effort and increases productivity. The position correction method builds on a simple hand–eye calibration method.

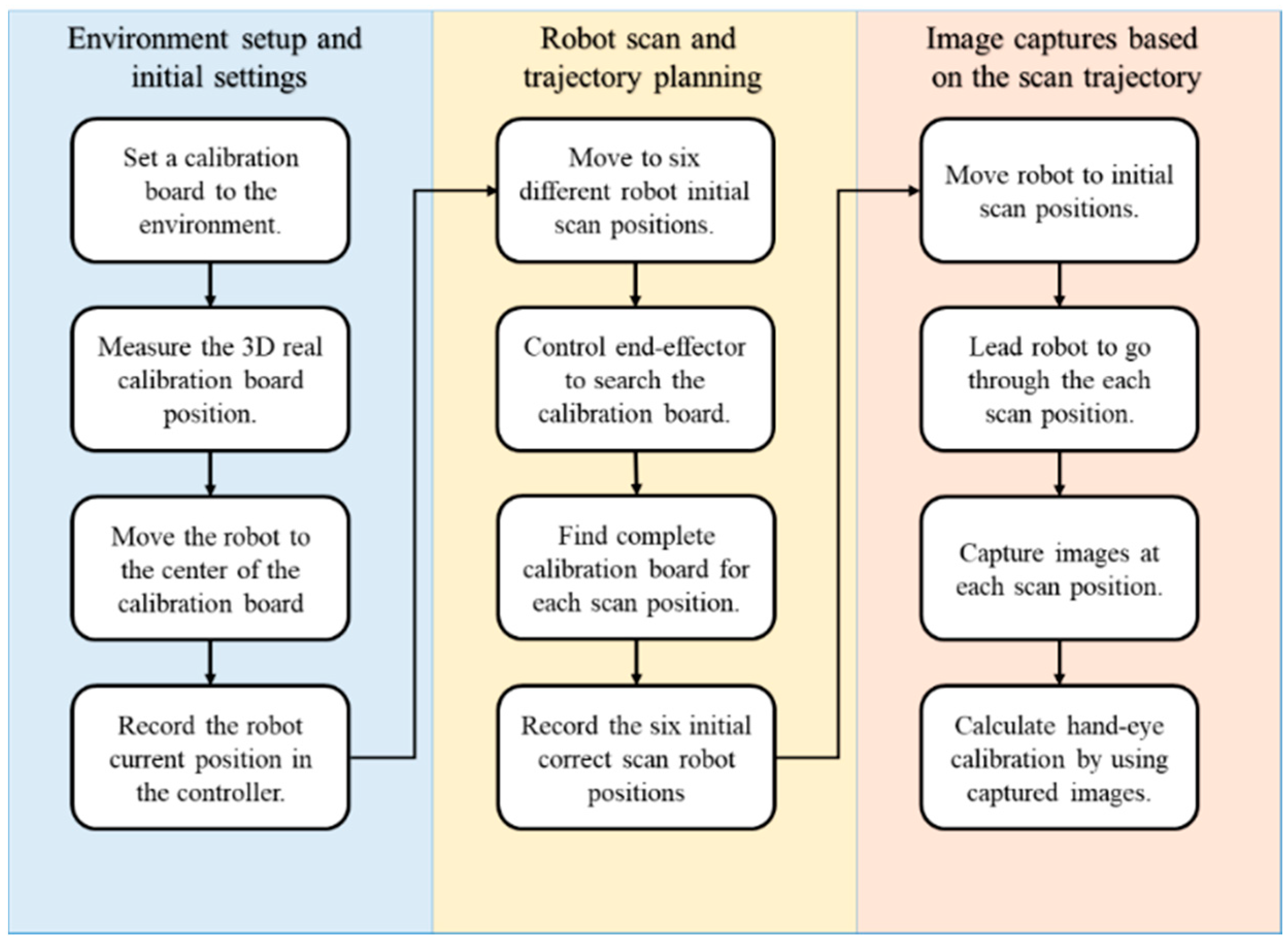

The flowchart in Figure 2 explains the automatic hand–eye calibration method, which is part of the position correction system. The calibration method is divided into three main stages: “environment setup and initial settings”, “robot scan and trajectory planning”, and “image capture based on the scan trajectory”. In the environment setup and initialization stage, the user prepares the environment for calibration by measuring the position of the calibration board in the workspace, guiding the arm so that the camera can see the calibration board, and providing a few other basic settings. The robot scan and trajectory planning stage records the optimal end-effector positions, and the image capture stage captures an image of the calibration board at each of these positions. The environment setup and initial settings stage is the only part of the system that requires manual operation (Figure 2). The proposed calibration method is used to initialize the position correction module, which measures and compensates for the position error before the AOI target positions are defined on new objects.

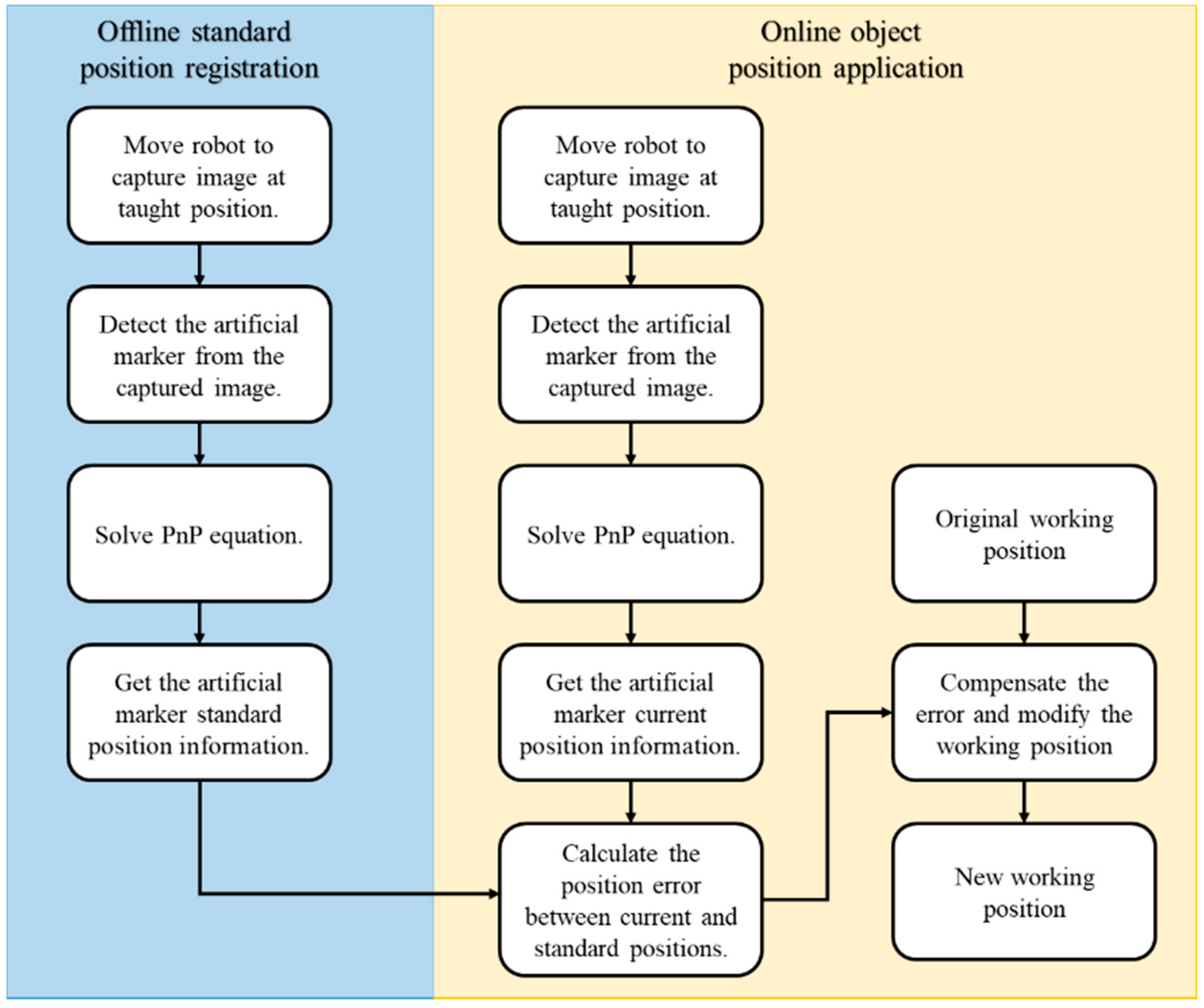

The flowchart of the proposed object correction methodology is presented in Figure 3 and is divided into an offline registration process and real-time online object positioning. In the offline process, the user specifies the robot position for artificial marker detection, designs the marker pattern, and defines the work points. In the online process, the system captures an image of the artificial marker at the specified position based on the user’s offline settings. The system then identifies the current object position and autonomously measures and adjusts the robot work points for the AOI inspection task. The position correction system is then integrated with the autonomous robot-guided optical inspection system to demonstrate the performance of the proposed system.

3. Overview of the Position Correction System

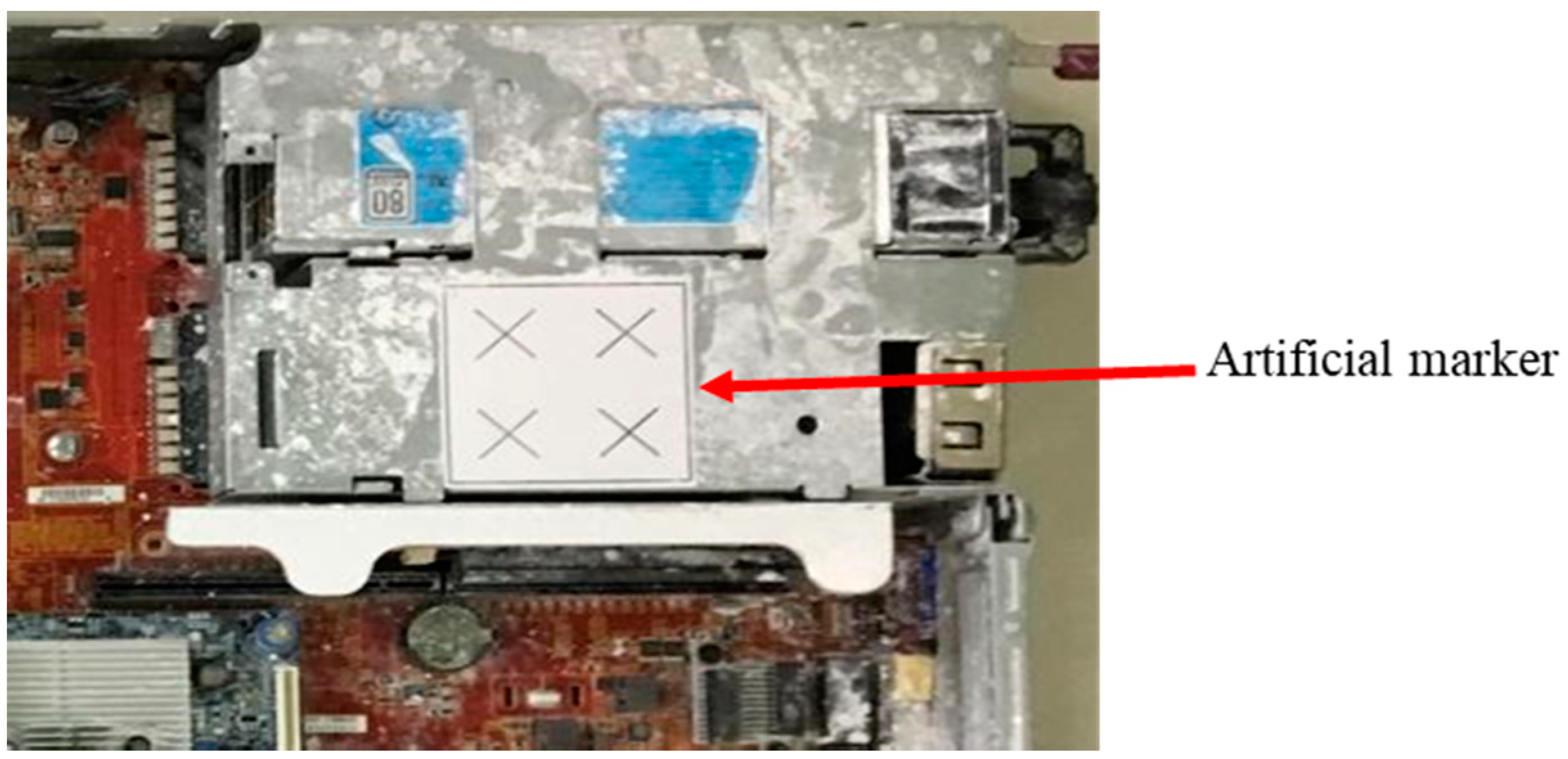

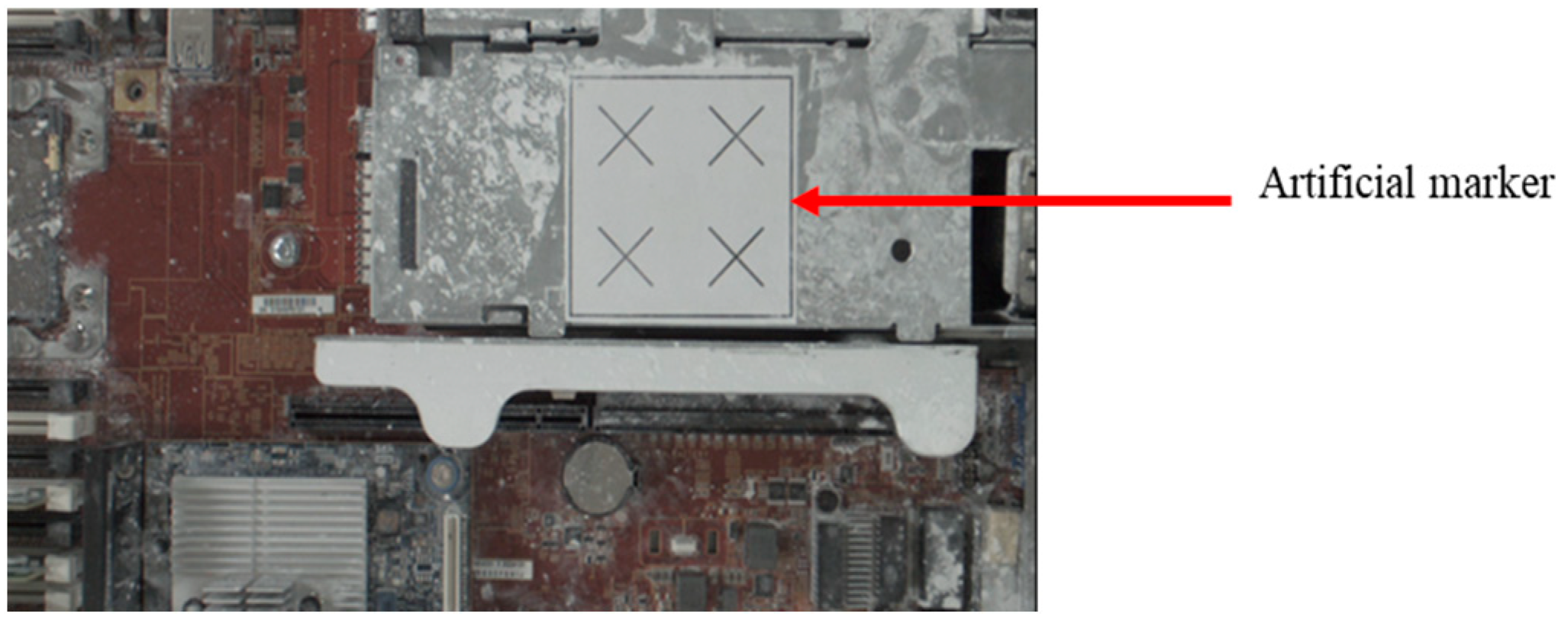

In this study, an image-based object position correction system was designed and developed. Object positioning has always been a key component of the automated manufacturing process. The proposed position correction system consists of a calibration methodology and a position correction methodology. The calibrated camera identifies the object position from a specific artificial marker on the object using PnP image processing [36,37], as shown in Figure 4. A transformation matrix $T$ is then obtained from the marker coordinate ($P_{\text{marker}}$) to the camera coordinate ($P_{\text{Camera}}$). The displacement error of each work point is determined from the difference between the object pose before and after displacement, as described by this transformation matrix. The system then compensates for the position error before defining the AOI target positions on the new object. The simulation results of the system were evaluated before it was integrated with the robot-guided inspection system. The system assists the OLP platform in performing robot-guided AOI applications that automatically inspect misplaced components of manufactured objects on a production line.

3.1. Automatic Robot Hand–Eye Calibration Methodology

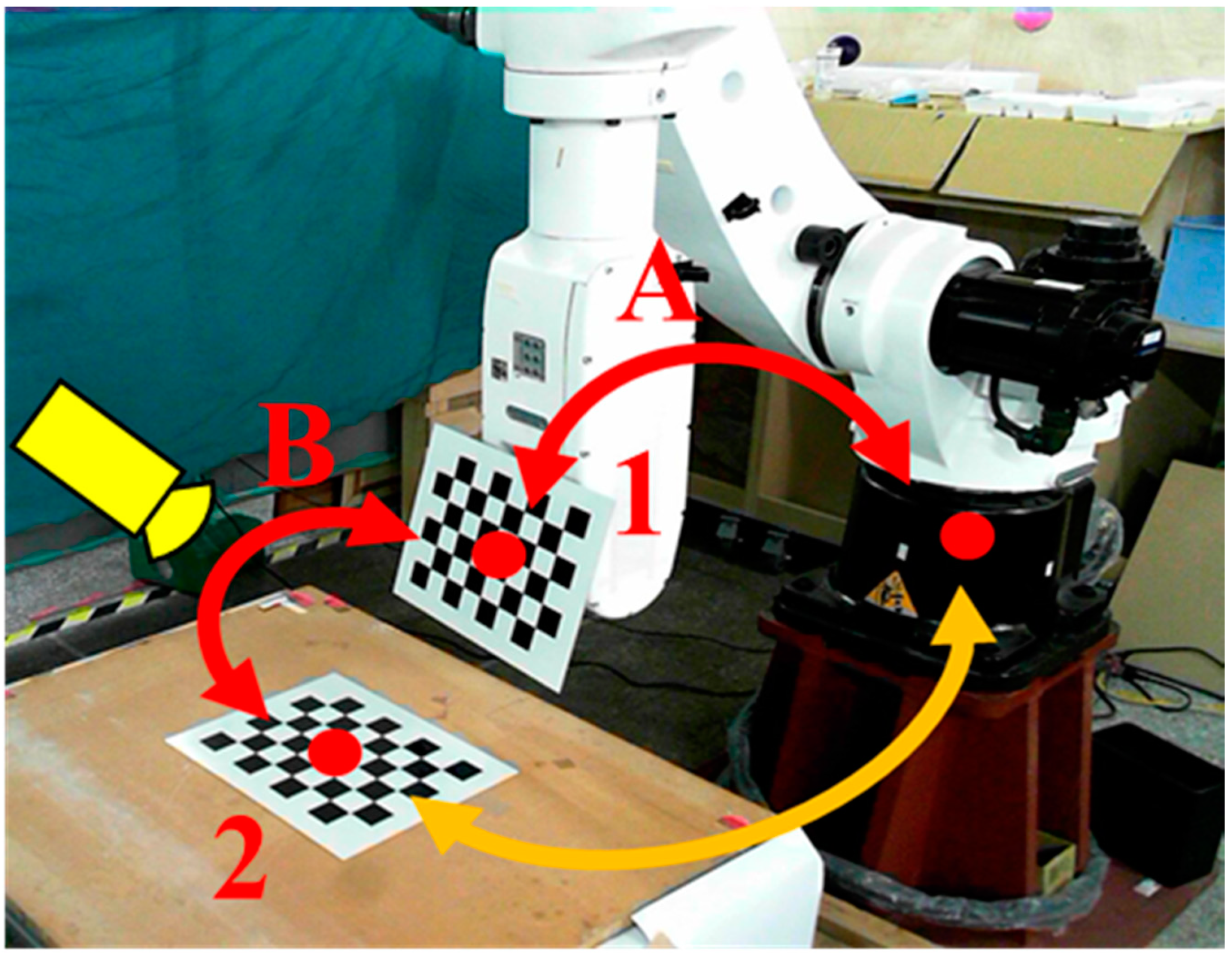

We evaluated the performance of the proposed automatic hand–eye calibration process shown in Figure 2. The first step of this process was to measure the position of the calibration board in the workspace. One calibration board (an 8 × 6 chessboard) was fixed on the robot arm and moved close to a second calibration board on the table [38,39], as shown in Figure 5. We then adjusted the robot arm and camera positions so that both calibration boards were visible simultaneously. Two transformation matrices, $A$ and $B$, were used to calculate the relationship between the calibration boards. Transformation matrix $A$ was obtained by recording the end-effector coordinates from the robot arm controller, and transformation matrix $B$ was calculated by solving the PnP problem on the camera image. The transformation matrix between the robot arm and calibration board 2 was then calculated from $A$ and $B$, as shown in Figure 5.
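The board-to-board step can be illustrated by chaining homogeneous transformations. The sketch below is a minimal pure-Python illustration under assumptions that the text does not state: `T_x_y` denotes the pose of frame y expressed in frame x, $A$ is the end-effector pose in the robot base frame, the pose of board 1 relative to the end-effector (`T_ee_b1`) is known from the mounting fixture, and $B$ (board 1 to board 2) is formed from the two PnP solutions in a single image. The helper names (`rot_z`, `make_T`, `matmul`, `inv_rigid`) and all numeric values are hypothetical; this is not the authors' implementation.

```python
import math

def rot_z(deg):
    """3x3 rotation matrix about the z-axis (angle in degrees)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def make_T(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and translation t."""
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def matmul(A, B):
    """Multiply two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def inv_rigid(T):
    """Invert a rigid transform using R^T and -R^T t."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    return make_T(Rt, [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)])

# Hypothetical example values (mm, degrees).
A = make_T(rot_z(90), [400.0, 0.0, 300.0])        # T_base_ee, from the robot controller
T_ee_b1 = make_T(rot_z(0), [0.0, 0.0, 50.0])      # board 1 on the end-effector (assumed known)
T_cam_b1 = make_T(rot_z(0), [0.0, 0.0, 200.0])    # board 1 seen by the camera (PnP)
T_cam_b2 = make_T(rot_z(0), [100.0, 0.0, 450.0])  # board 2 seen by the camera (PnP)

# B = T_b1_b2: board 2 expressed in the board 1 frame, from the two PnP results.
B = matmul(inv_rigid(T_cam_b1), T_cam_b2)

# Chain base -> end-effector -> board 1 -> board 2.
T_base_b2 = matmul(matmul(A, T_ee_b1), B)
print([round(T_base_b2[i][3], 3) for i in range(3)])
```

Because both boards are observed in the same image, the camera pose itself cancels out of $B$, which is why this step does not yet require the hand–eye result.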

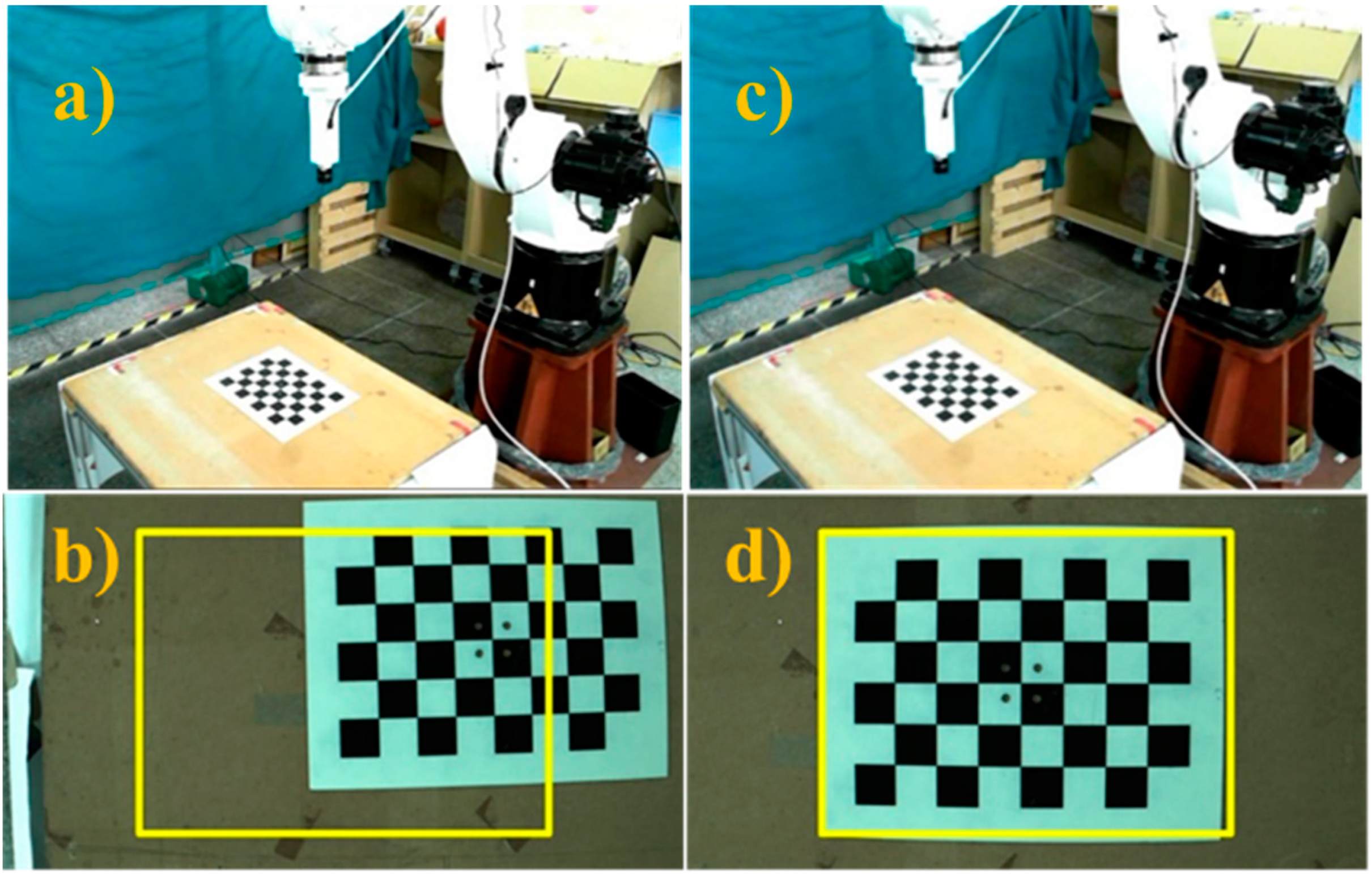

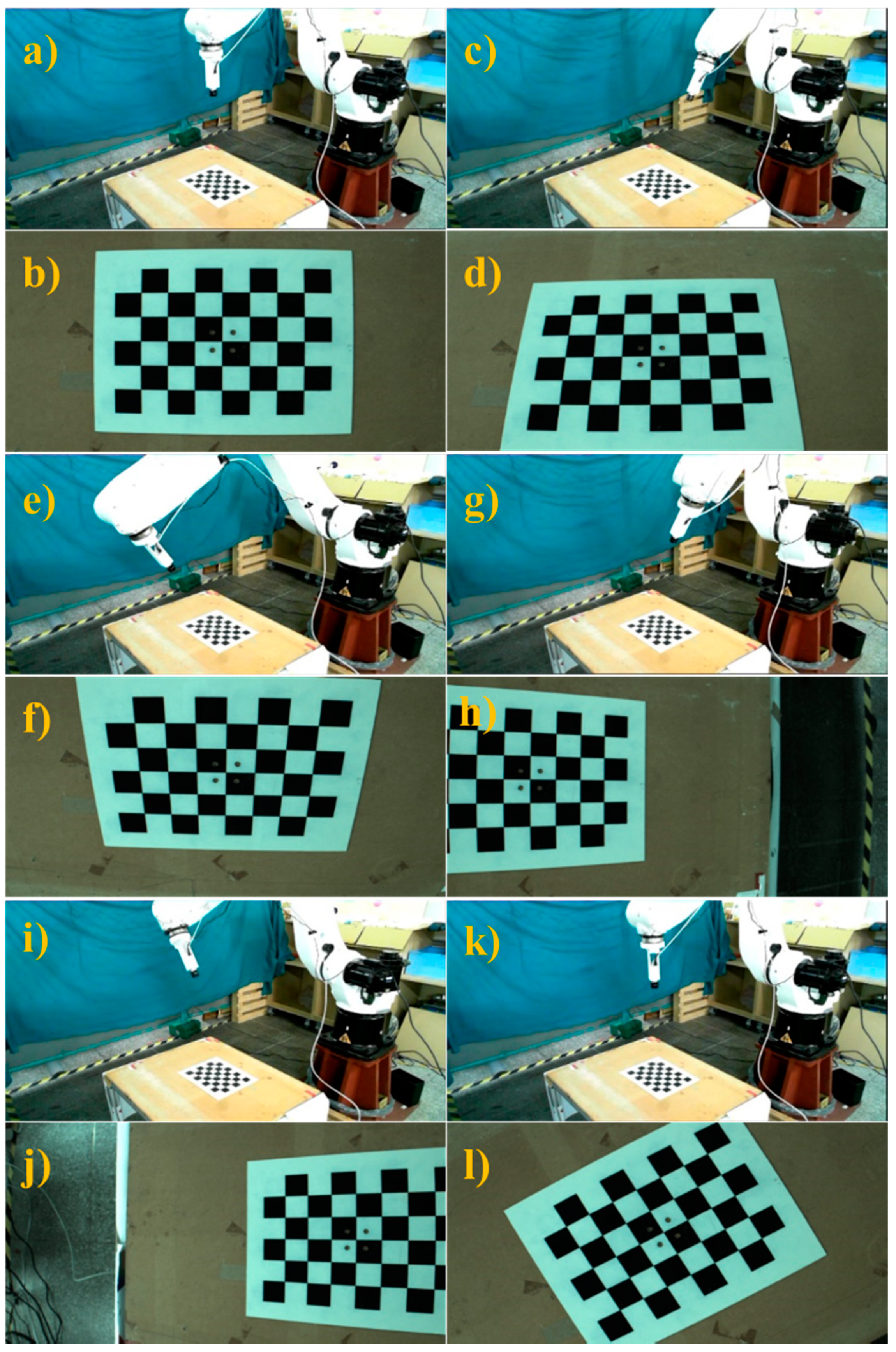

Once the transformation matrix was obtained, the initial position of the robot was adjusted to perform automatic hand–eye calibration, as shown in Figure 6. The proposed system enabled the robot to follow the hand–eye calibration trajectory and take pictures of the calibration board at different robot positions, as shown in Figure 7.
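The actual calibration positions are listed in Table 2 and are not reproduced here. As a rough illustration of how such a trajectory might be generated, the sketch below samples lateral offsets around the initial pose and tilts the camera back toward the board center so that the board stays in view while the viewing angle changes. The function name `calibration_poses`, the pose tuple layout, the height, the offsets, and the rotation sign conventions are all hypothetical assumptions.

```python
import math

def calibration_poses(height_mm, offsets_mm):
    """Hypothetical pose generator for hand-eye calibration images.

    Returns relative end-effector poses (dx, dy, dz, rx_deg, ry_deg) around the
    initial pose. Each lateral shift is paired with a tilt that turns the camera
    back toward the board center. The signs of rx/ry depend on the robot and tool
    frame conventions and are assumptions here.
    """
    poses = []
    for dx in offsets_mm:
        for dy in offsets_mm:
            rx = math.degrees(math.atan2(dy, height_mm))   # tilt about x for a y shift
            ry = math.degrees(math.atan2(-dx, height_mm))  # tilt about y for an x shift
            poses.append((dx, dy, 0.0, rx, ry))
    return poses

poses = calibration_poses(height_mm=300.0, offsets_mm=[-50.0, 0.0, 50.0])
for p in poses:
    print(tuple(round(v, 2) for v in p))
```

Varying the viewing angle, not just the position, matters because hand–eye solvers need rotational diversity between poses to constrain the camera mounting rotation.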

Table 2 presents each position of the robot end-effector relative to the robot arm’s initial position, and the hand–eye calibration results are shown in Table 3. Using these results, the transformation matrix between the robot arm end-effector and the camera connector was calculated and compared with the transformation matrix derived from the original connector design. The proposed calibration method had an error of around 2 mm on the $z$-axis and a larger error of nearly 9 mm on the $x$-axis. The hand–eye calibration results were nevertheless sufficiently close to the values of the connector design. Further analysis was performed to identify potential causes of calibration error in order to make further improvements. Possible error sources include the 3D-printed connector itself and the difficulty of estimating the connector center at which the camera is mounted. In addition, hand–eye calibration is itself a complex process, and the proposed approach uses the simple closed-loop relationship $AX = ZB$ for inference, so some error is to be expected. The calibration results obtained were used in the proposed position correction methodology to compute and compensate for the position error of a new object on a production line.
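The relationship $AX = ZB$ can be read under one common convention, which we assume here: $A_i$ is the end-effector pose in the robot base frame (from the controller), $X$ is the unknown camera pose in the end-effector frame, $Z$ is the calibration board pose in the base frame (from the first calibration stage), and $B_i$ is the camera pose in the board frame (the inverse of the PnP result). When $Z$ is already known, each image gives an estimate $X = A_i^{-1} Z B_i$. The sketch below is a simplified illustration rather than the authors' solver: it uses synthetic, noise-free data, averages only the translation part of $X$, omits rotation averaging, and compares the result with a hypothetical connector-design offset.

```python
import math

def rot_z(deg):
    """3x3 rotation matrix about the z-axis (angle in degrees)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def make_T(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and translation t."""
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def matmul(A, B):
    """Multiply two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def inv_rigid(T):
    """Invert a rigid transform using R^T and -R^T t."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    return make_T(Rt, [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)])

def estimate_X_translation(A_list, B_list, Z):
    """Average the translation of X = A_i^-1 Z B_i over all poses (rotation averaging omitted)."""
    ts = []
    for A, B in zip(A_list, B_list):
        X = matmul(matmul(inv_rigid(A), Z), B)
        ts.append([X[i][3] for i in range(3)])
    return [sum(t[i] for t in ts) / len(ts) for i in range(3)]

# Synthetic, hypothetical ground truth (mm, degrees).
X_true = make_T(rot_z(5), [30.0, 0.0, 80.0])  # camera pose in the end-effector frame
Z = make_T(rot_z(0), [500.0, 100.0, 0.0])     # board pose in the base frame
A_list = [make_T(rot_z(a), [450.0 + dx, 80.0, 400.0])
          for a, dx in [(0, 0.0), (15, 20.0), (-15, -20.0)]]
# Consistent measurements from A_i X = Z B_i, i.e. B_i = Z^-1 A_i X.
B_list = [matmul(matmul(inv_rigid(Z), A), X_true) for A in A_list]

t_est = estimate_X_translation(A_list, B_list, Z)
design_t = [28.0, 1.0, 79.0]  # hypothetical connector-design offset
per_axis_error = [t_est[i] - design_t[i] for i in range(3)]
print([round(v, 3) for v in t_est], [round(e, 3) for e in per_axis_error])
```

With real measurements each pose yields a slightly different $X$, so averaging (or a least-squares formulation) is what absorbs pose-to-pose noise.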

3.2. Object Position Correction Methodology

After obtaining the calibration results, the user must set the checkpoint position ($P_{\text{Check}}$) of the camera-equipped robot arm in the initial “template registration phase” so that the full artificial marker is captured in the image. The robot arm moves to $P_{\text{Check}}$, uses the camera to detect the artificial marker, and solves the PnP problem. The transformation matrix $T$ between the artificial marker coordinate ($P_{\text{marker}}$) and the camera coordinate ($P_{\text{Camera}}$) is thus obtained using Equation (1).

where $T$ is taken as the standard position ($T_S$) and serves as the reference sample for verifying and measuring changes in the object position.
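Equation (1) is not reproduced in this text. Under the usual assumption that $T$ maps marker coordinates to camera coordinates, $P_{\text{Camera}} = T\,P_{\text{marker}}$ in homogeneous form, a PnP solver estimates $T$ by making the pinhole projections of the known marker corners match their detected pixel positions. The sketch below shows that forward model and the reprojection error it minimizes. The intrinsics `fx`, `fy`, `cx`, `cy`, the 50 mm marker, and the function names are hypothetical, lens distortion is ignored, and in practice a library routine such as OpenCV's `solvePnP` performs the inverse (pixels to pose) step.

```python
import math

def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T to a 3D point p (P_Camera = T * P_marker)."""
    ph = [p[0], p[1], p[2], 1.0]
    return [sum(T[i][k] * ph[k] for k in range(4)) for i in range(3)]

def project(p_cam, fx, fy, cx, cy):
    """Ideal pinhole projection of a camera-frame point to pixel coordinates (no distortion)."""
    x, y, z = p_cam
    return (fx * x / z + cx, fy * y / z + cy)

def reprojection_rms(T, marker_pts, pixels, fx, fy, cx, cy):
    """RMS pixel distance between projected marker points and detected pixels.

    A PnP solver searches for the T that makes this quantity small.
    """
    sq = 0.0
    for p, (u_obs, v_obs) in zip(marker_pts, pixels):
        u, v = project(transform_point(T, p), fx, fy, cx, cy)
        sq += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(sq / len(marker_pts))

# Hypothetical setup: 50 mm square marker, 500 mm in front of the camera.
fx = fy = 1000.0
cx, cy = 640.0, 480.0
T = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 500.0],
     [0.0, 0.0, 0.0, 1.0]]
corners = [(-25.0, -25.0, 0.0), (25.0, -25.0, 0.0), (25.0, 25.0, 0.0), (-25.0, 25.0, 0.0)]
detected = [project(transform_point(T, c), fx, fy, cx, cy) for c in corners]
print(detected)
print(reprojection_rms(T, corners, detected, fx, fy, cx, cy))
```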

Once the standard position has been obtained, the system enters the “error compensation phase”, which compensates for the object’s position error during various manufacturing applications. In practice, AOI, machining, and assembly applications involve several different work points. The positions of these work points are recorded and collectively denoted $P$. If the object shifts during the process, the robot arm moves to the checkpoint position ($P_{\text{Check}}$), detects the marker, solves the PnP problem, and obtains the new marker position ($P_{\text{marker}}$). The system then calculates a new transformation matrix $T_N$ between the new marker coordinate $P_{\text{marker}}$ and the camera coordinate $P_{\text{Camera}}$.

The offset transformation matrix $T_D$, given in Equation (2), describes the displacement and rotation of the artificial marker and is calculated from the standard transformation matrix $T_S$ and the new transformation matrix $T_N$.
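Equation (2) is not reproduced in this text. One common way to form the offset from two marker poses observed from the same checkpoint camera pose, assumed in the sketch below, is $T_D = T_N T_S^{-1}$, which expresses the marker's motion in the camera frame so that $T_D T_S = T_N$; the alternative $T_S^{-1} T_N$ expresses the same motion in the original marker frame. Before it can move the robot, a camera-frame offset would still have to be mapped into the robot frame using the hand–eye result. The helper names and example poses are hypothetical.

```python
import math

def rot_z(deg):
    """3x3 rotation matrix about the z-axis (angle in degrees)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def make_T(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and translation t."""
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def matmul(A, B):
    """Multiply two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def inv_rigid(T):
    """Invert a rigid transform using R^T and -R^T t."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    return make_T(Rt, [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)])

def offset_transform(T_S, T_N):
    """Offset T_D such that T_D @ T_S == T_N (marker motion expressed in the camera frame)."""
    return matmul(T_N, inv_rigid(T_S))

T_S = make_T(rot_z(0), [0.0, 0.0, 500.0])     # standard marker pose in the camera frame
T_N = make_T(rot_z(10), [20.0, -5.0, 500.0])  # marker pose after the object moved
T_D = offset_transform(T_S, T_N)

angle_deg = math.degrees(math.atan2(T_D[1][0], T_D[0][0]))  # rotation about the camera z-axis
shift = [T_D[i][3] for i in range(3)]
print(round(angle_deg, 3), [round(v, 3) for v in shift])
```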

The rotation and translation errors used to define the new work points $P_{\text{New}}$ were calculated from the offset transformation matrix, the artificial marker center point offset ($S$), and the robot arm’s original coordinates.

First, the work point $P$ is translated, together with the artificial marker center point, back to the origin of the robot arm coordinate system, as shown in Equation (3):

The work point $P$ is then rotated according to the artificial marker rotation, as shown in Equation (4):

The work point $P$ is then translated back to the original artificial marker coordinate, as shown in Equation (5):

Using Equation (6), a new work point ($P_{\text{New}}$) is defined for the AOI task after error compensation:

In summary, the translation and rotation errors are computed once the object positioning system recognizes and evaluates the artificial marker in the actual application. Using these errors, the position correction system adjusts the work points and generates new work point coordinates for the AOI inspection tasks.
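Equations (3) to (6) are not reproduced in this text, but the described sequence (shift to the marker center, rotate, shift back, then apply the remaining offset) amounts to a rotation about the marker center. A minimal sketch, assuming that all quantities are already expressed in the robot base frame (for example via the hand–eye result), that $S$ is the marker center, that $R_D$ is the rotation part of $T_D$, and that $d$ is the displacement of the marker center, is $P_{\text{New}} = R_D (P - S) + S + d$. Only work point positions are corrected here; the tool orientation at each work point would also need to be rotated by $R_D$. Function names and values are hypothetical.

```python
import math

def rot_z(deg):
    """3x3 rotation matrix about the z-axis (angle in degrees)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def mat_vec(R, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]

def correct_work_point(P, S, R_D, d):
    """Rotate work point P about marker center S by R_D, then shift by marker displacement d."""
    p = [P[i] - S[i] for i in range(3)]     # translate so the marker center is at the origin
    p = mat_vec(R_D, p)                      # rotate by the marker rotation
    p = [p[i] + S[i] for i in range(3)]     # translate back to the marker center
    return [p[i] + d[i] for i in range(3)]  # apply the marker-center displacement

S = [50.0, 0.0, 0.0]   # marker center in the robot base frame (hypothetical)
R_D = rot_z(90)        # marker rotation (hypothetical)
d = [10.0, 0.0, 0.0]   # marker-center displacement (hypothetical)
work_points = [[100.0, 0.0, 0.0], [50.0, 20.0, 30.0]]
new_points = [correct_work_point(P, S, R_D, d) for P in work_points]
print([[round(v, 3) for v in p] for p in new_points])
```

Rotating about the marker center rather than the robot origin keeps points near the marker close to their measured displacement, so a small rotation estimate error is not amplified by the distance between the object and the robot base.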

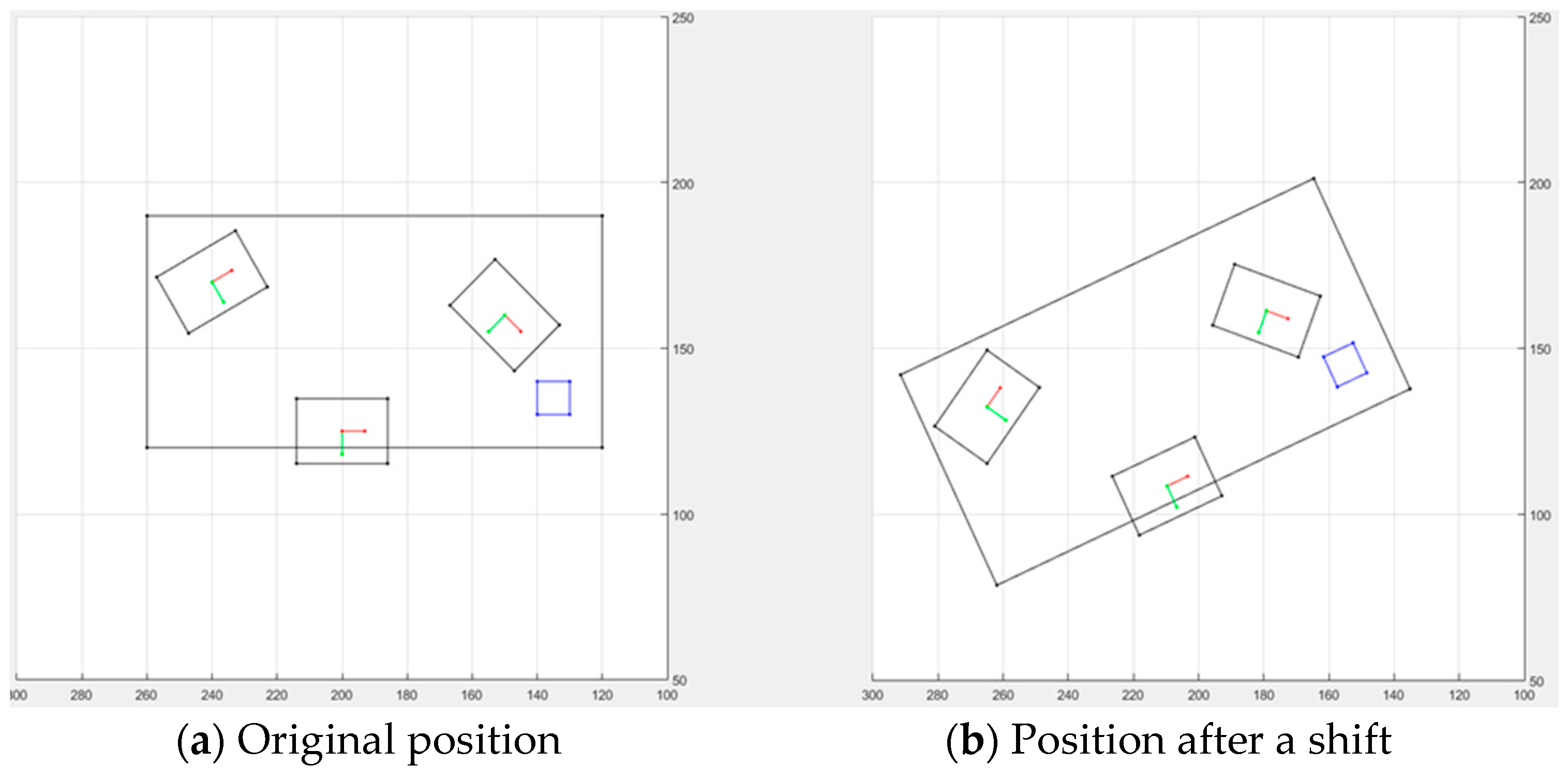

The simulation shown in Figure 8 was generated in MATLAB based on the proposed position correction system. In the simulation, the computer server board (object) was moved to an unknown position, after which the position correction system identified the AOI camera target point based on the artificial marker. Because the simulation excludes errors caused by the hardware devices, the position correction system recovered the AOI target point and object position with high accuracy. The system found the position error and compensated for it to calculate the robot’s new AOI target point, irrespective of the object position. Thus, the object position correction system effectively used the automatic hand–eye calibration method and simple artificial markers to detect the object’s current position relative to its position before shifting.

Section 4 discusses the integration and implementation of the proposed method in the AOI application, as well as the experimental results.

4. Integration of Position Correction Module with OLP Platform

The position correction system we developed was integrated with the autonomous robot-guided optical inspection system to build a smart system for the production line. Prior to performing position correction, a path for real-time scanning and the target positions for AOI tasks were generated and visualized graphically in the OLP platform, as shown in Figure 9 [34]. The generated robot program was then sent to the HIWIN-620 industrial robot to capture AOI images, which were compared with virtual images. However, this system alone still could not reliably perform AOI tasks on a production line. Therefore, the robot-guided inspection system was integrated with the position correction module to resolve issues related to object displacement or rotation errors in a production line for AOI tasks.

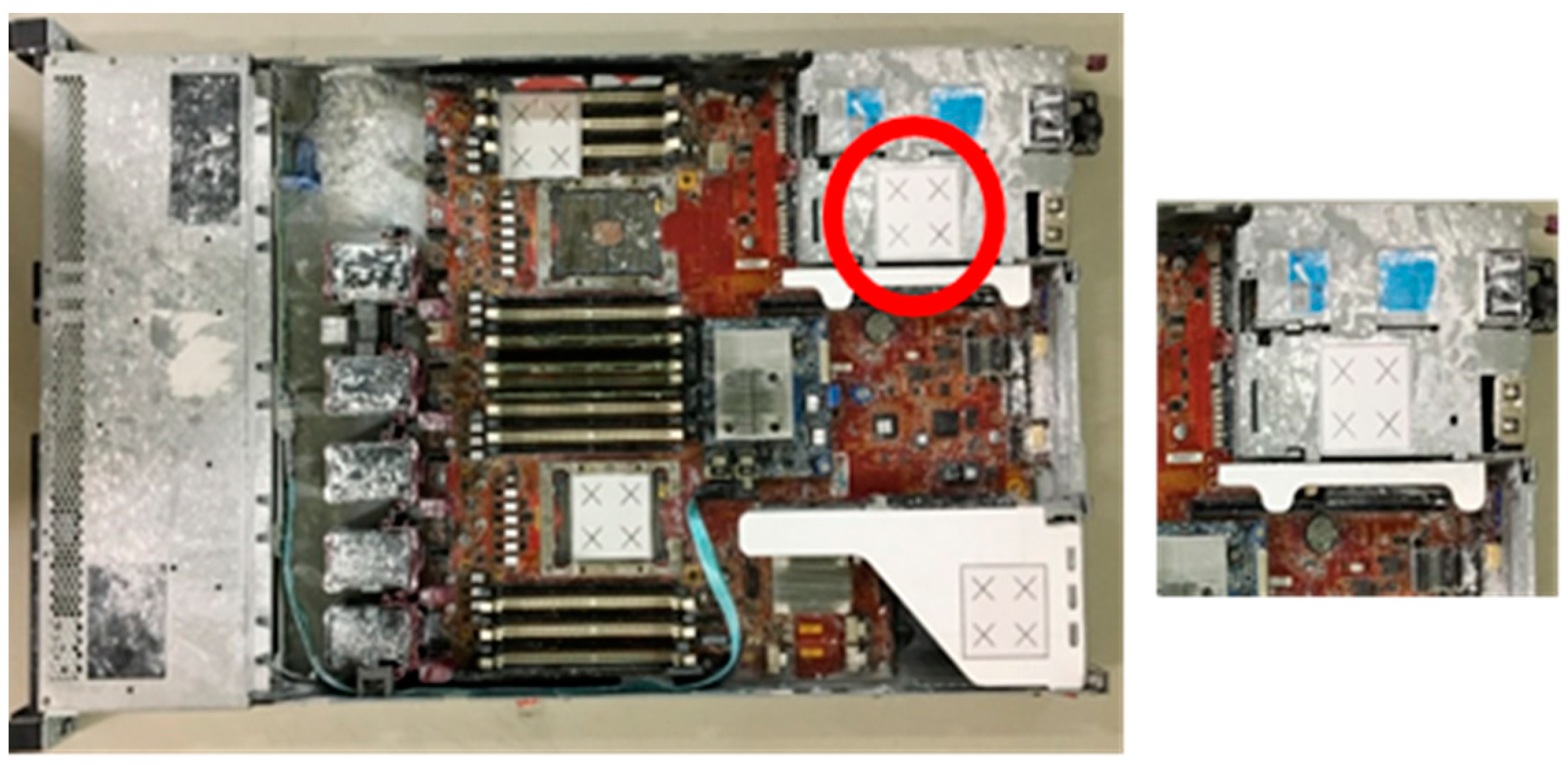

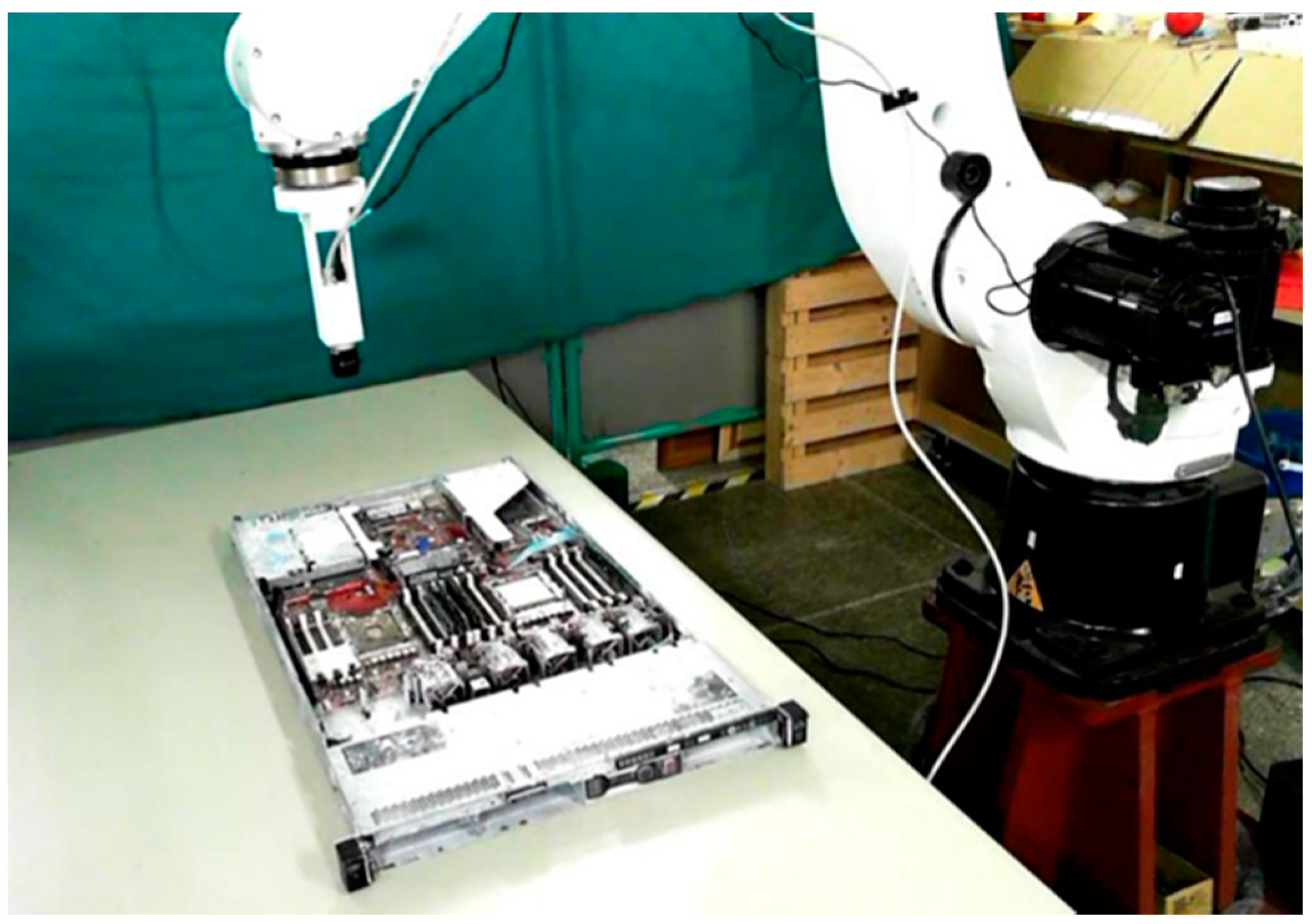

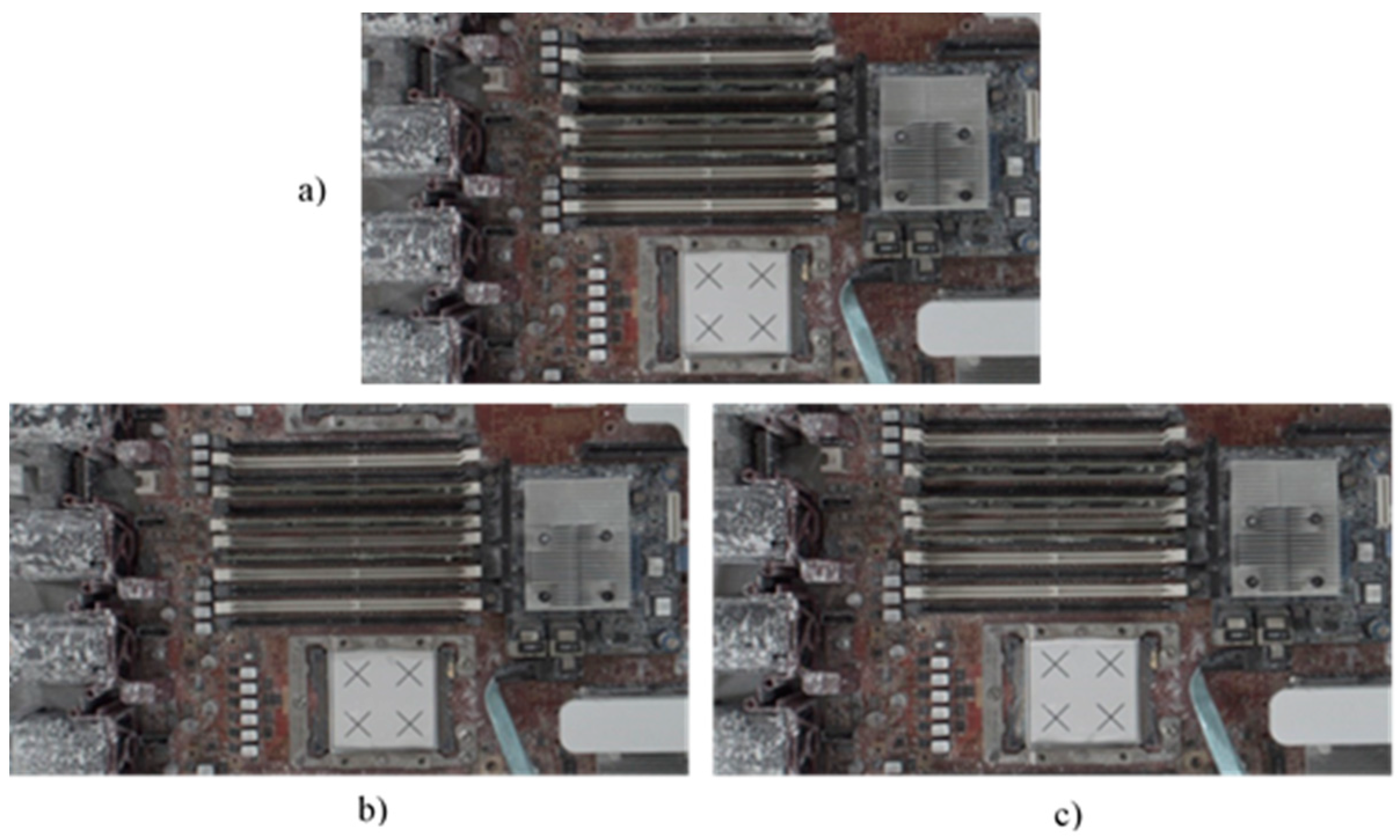

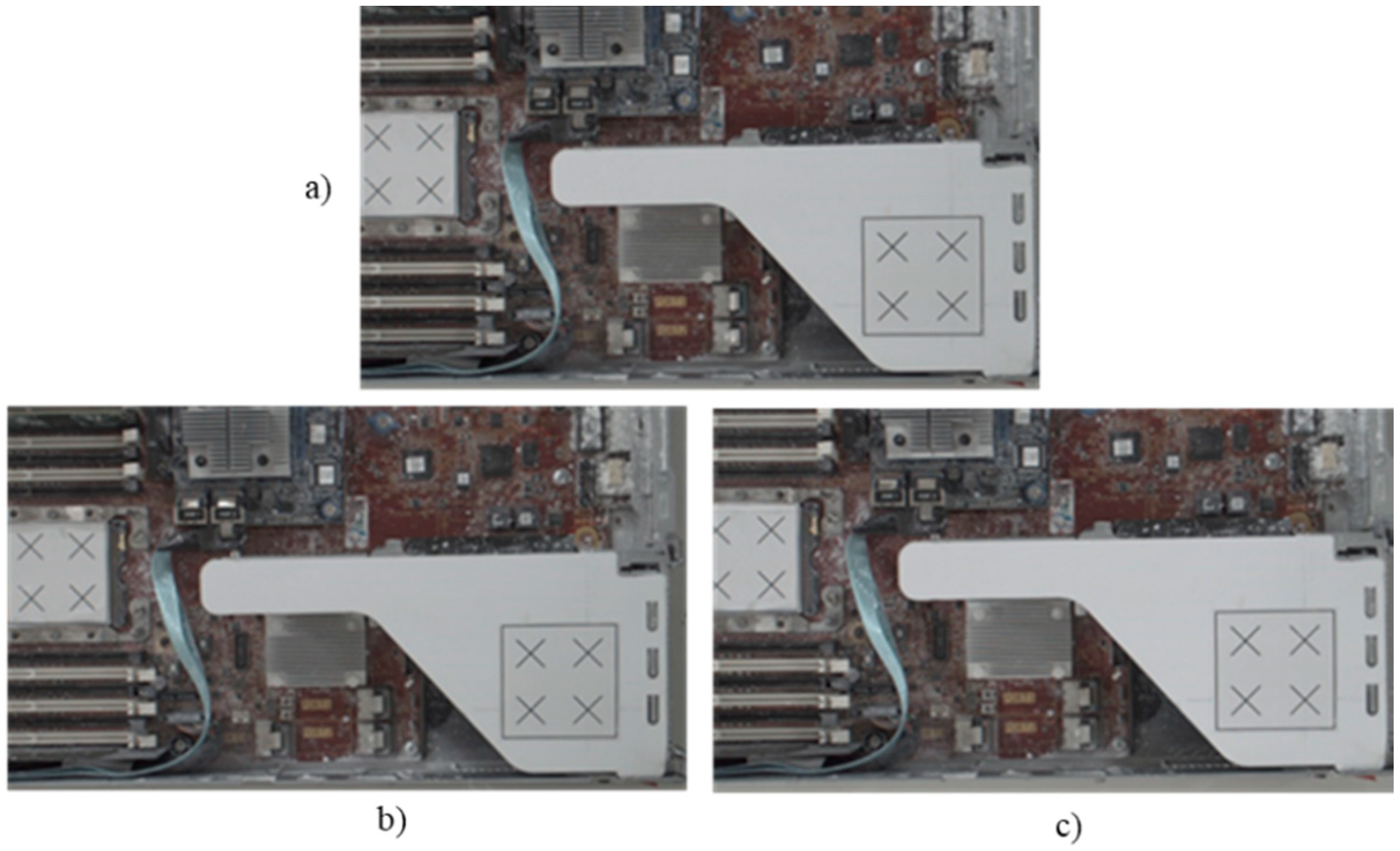

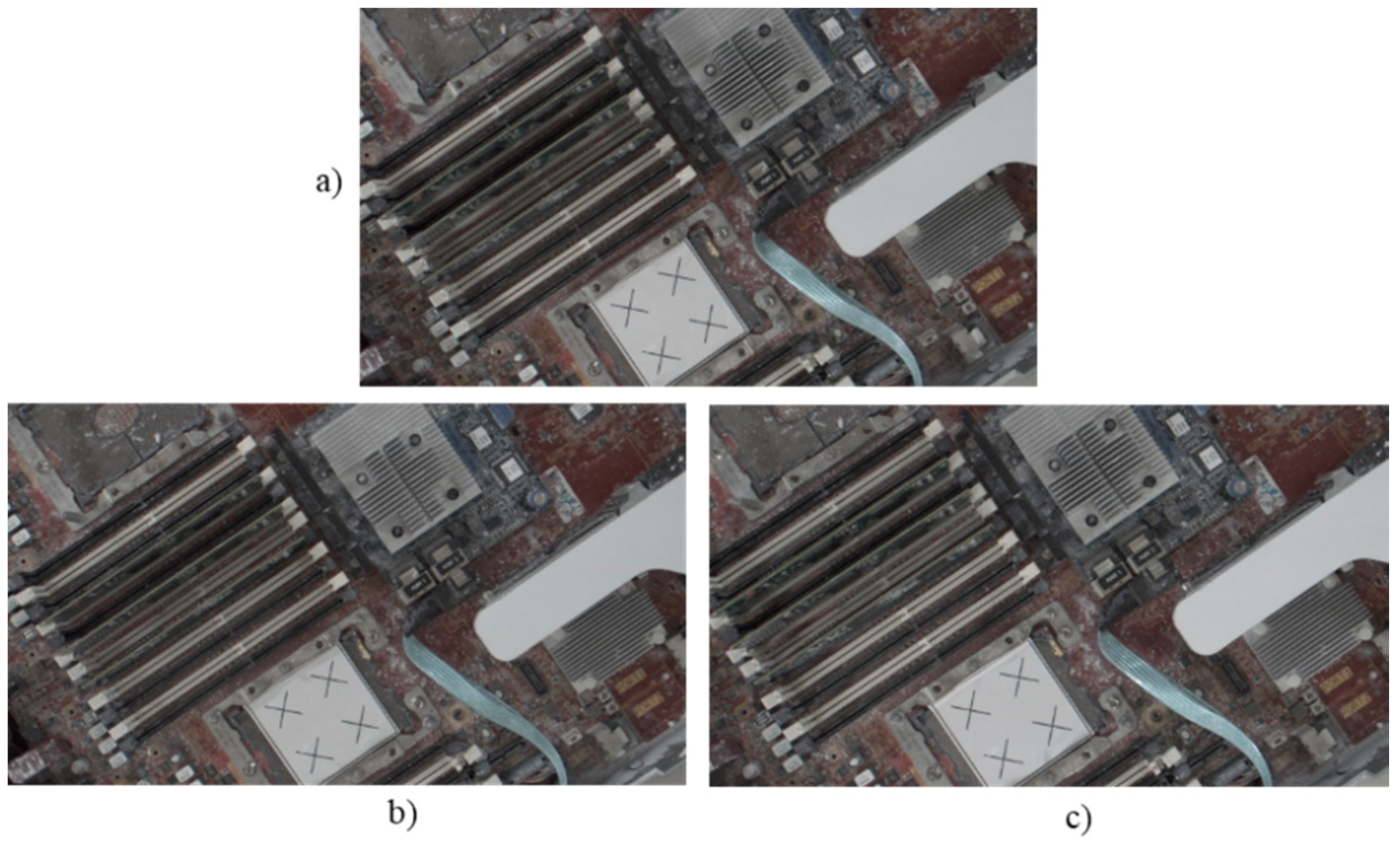

Here, we report and discuss experiments performed using the proposed position correction module for robot-guided inspection. On a production line, the robot arm uses the object position correction module to perform AOI operations autonomously. To execute an AOI inspection, the robot arm captures images of the target object from various angles. If the object shifts, the proposed system detects the shift and adjusts the robot’s AOI image capture positions. Once the positions have been corrected, the system captures the defined AOI target images. The object used in this experiment was a large computer server with four artificial markers, as shown in Figure 10.

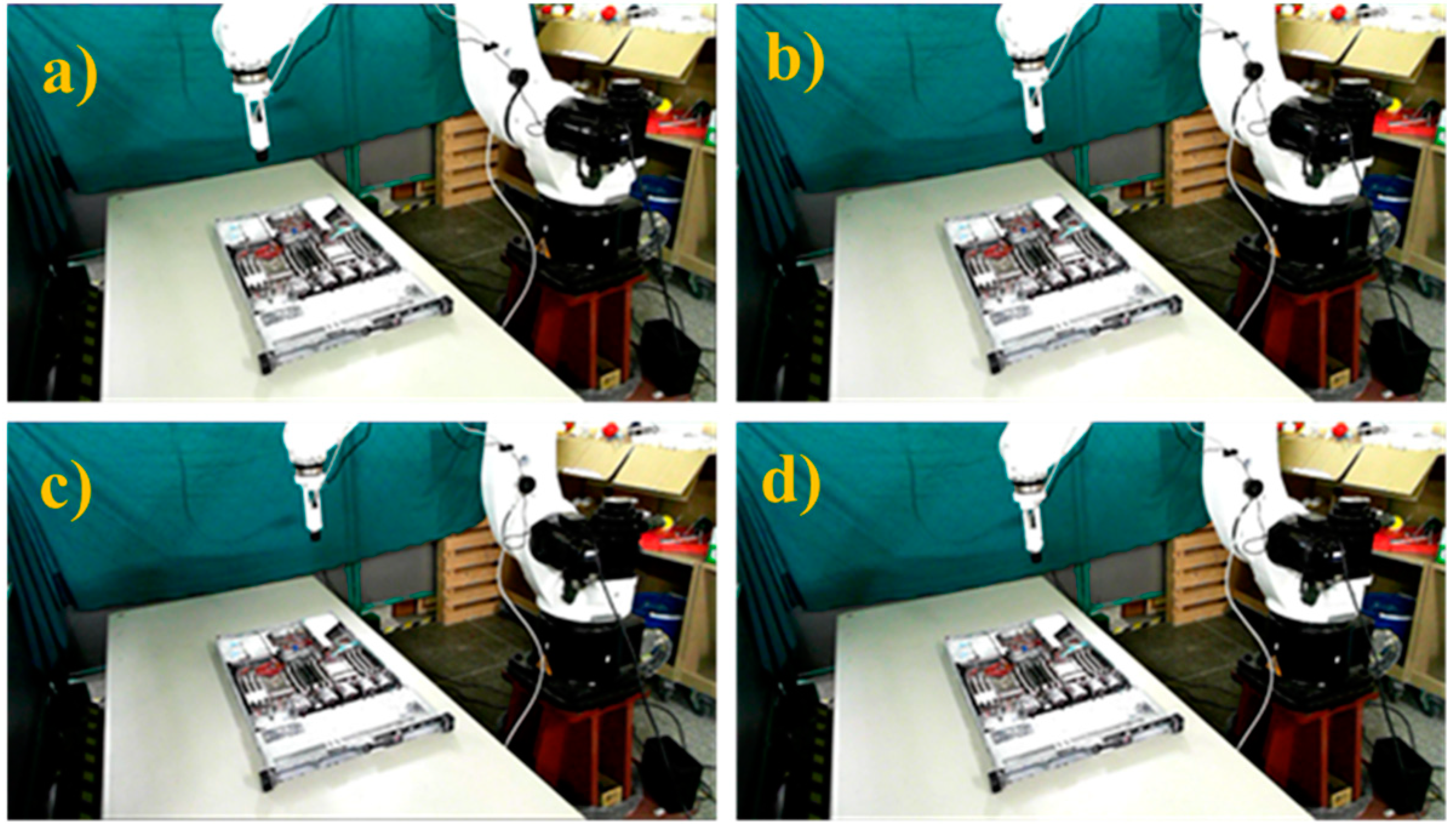

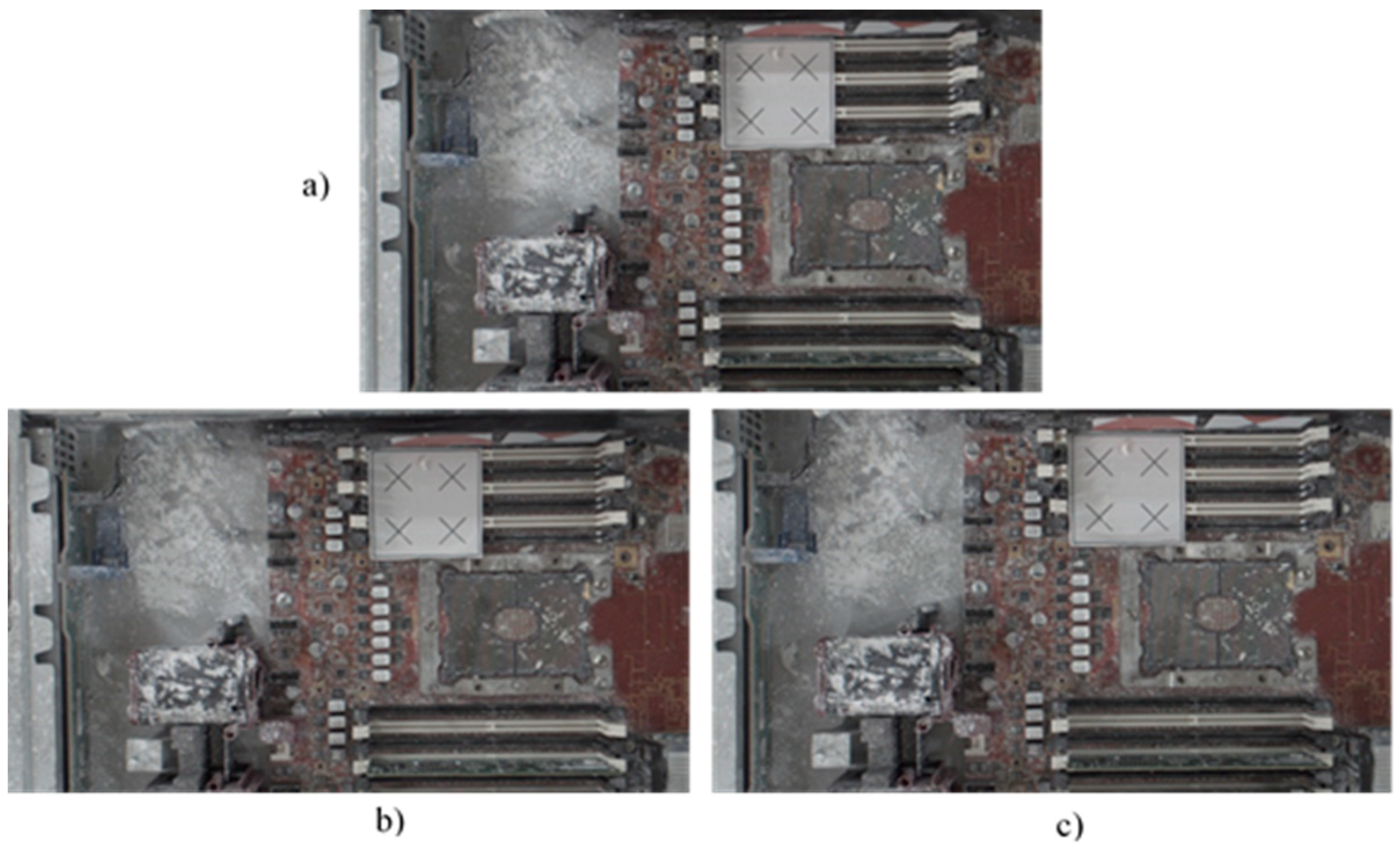

During system execution, we first manually guided the robot arm to capture an image of the positioning marker, as shown in Figure 11. This sample image of the positioning marker, shown in Figure 12, served as the reference for the position correction system, and we recorded the positioning marker position and its transformation matrix relative to the camera.

Once the sample image of the positioning marker was captured, the robot’s target positions were selected for the AOI inspection task before any displacement or rotation. In the experiment, four different robot shooting positions were selected at different heights and angles, as shown in

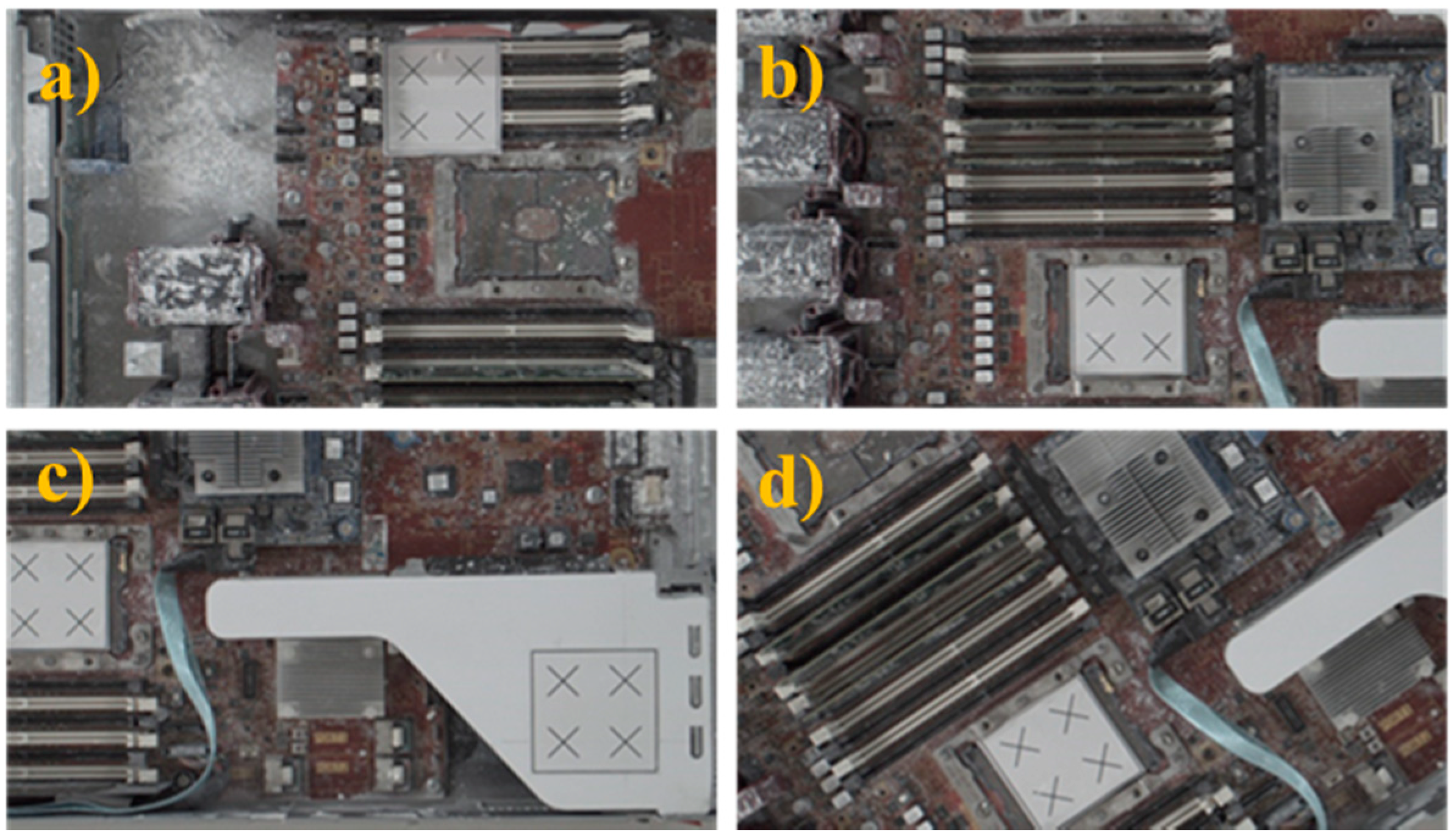

Figure 13, and the images captured at these positions are shown in

Figure 14. These were the target points used to perform the position correction procedure.

After this preparatory procedure, the system had all of the parameters and specification data required to perform object image repositioning.

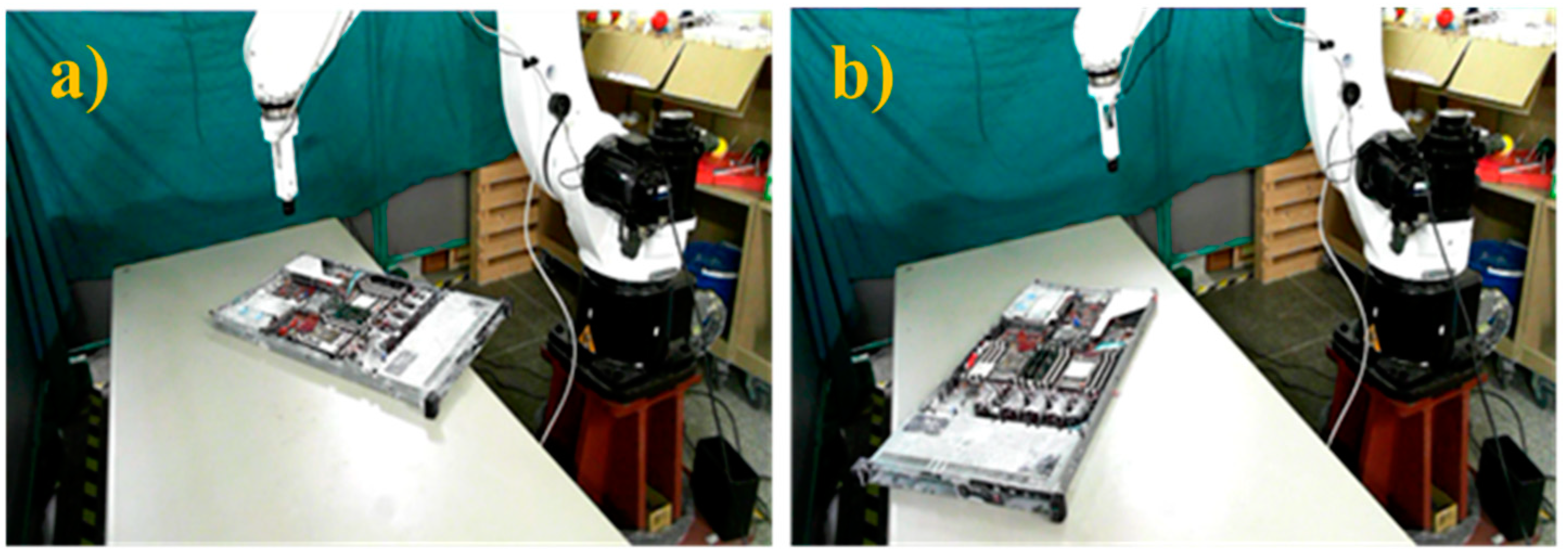

Figure 14 shows the original standard images without displacement. Two experiments with random manual displacement were conducted to evaluate the performance of the proposed position correction module, as shown in Figure 15. After the manual displacement, the proposed position correction module calculated the new AOI positions at which to capture images, as shown in Figure 16, Figure 17, Figure 18 and Figure 19. These images were then compared with the standard images shown in Figure 14.

In test 1, the object was moved by 49.1 mm, with a final average residual error of 5.15 mm, and the proposed system compensated for 91.5% of the position error. In test 2, the object was moved by 50.6 mm, with a final average residual error of 3.80 mm, and the proposed system compensated for 92.5% of the position error. For both random object positions, new images were captured and compared with the standard images; the captured images differed only slightly from the standard images. These results demonstrate that the proposed system compensates for most of the error caused by object displacement.
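The text does not state how the compensation percentage is computed. Assuming it is the fraction of the original displacement removed by the correction, $1 - e_{\text{residual}} / e_{\text{displacement}}$, the test 2 figure can be reproduced as follows; the function name is hypothetical.

```python
def compensation_ratio(displacement_mm, residual_mm):
    """Fraction of the original displacement removed by the correction (assumed definition)."""
    if displacement_mm <= 0:
        raise ValueError("displacement must be positive")
    return 1.0 - residual_mm / displacement_mm

# Test 2 values from the text: 50.6 mm displacement, 3.80 mm residual error.
pct = 100.0 * compensation_ratio(50.6, 3.80)
print(f"{pct:.1f}%")  # prints "92.5%"
```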

Table 4 presents the error analysis of the object position correction system: the new images have a distance error (norm) of 10–40 pixels compared with the standard images (Figure 20), with an average of 21.09 pixels. The errors measured in pixels were then converted to mm (Table 5). The distance error was between 2 and 6 mm (Figure 21), and the mean error was 3.97 mm. The efficiency of the proposed method could be improved by positioning the camera accurately, replacing the 3D-printed camera holder, and minimizing the calibration error, which may improve the system accuracy by up to 95.3%. The developed system resolved the issues associated with object translation or rotation errors on the production line. Integrating the position correction module with the robot-guided inspection system enhanced its ability to perform user-defined AOI tasks autonomously on a production line.
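The pixel distance error and its conversion to millimetres can be sketched as follows. The sketch assumes that the error is the Euclidean norm between corresponding feature points in the new and standard images, and that the conversion uses the pinhole approximation $\Delta_{\text{mm}} \approx \Delta_{\text{px}} \cdot Z / f$, with working distance $Z$ and focal length $f$ in pixels. The text does not state its conversion method, and the function names, coordinates, working distance, and focal length below are hypothetical. Because $Z$ differs between camera positions at different heights, the same pixel error can correspond to different millimetre errors.

```python
import math

def pixel_error(p_new, p_std):
    """Euclidean distance (norm) in pixels between corresponding image points."""
    return math.hypot(p_new[0] - p_std[0], p_new[1] - p_std[1])

def pixels_to_mm(err_px, working_distance_mm, focal_px):
    """Pinhole approximation: object-plane size = pixel size * distance / focal length."""
    return err_px * working_distance_mm / focal_px

err_px = pixel_error((112.0, 116.0), (100.0, 100.0))
err_mm = pixels_to_mm(err_px, working_distance_mm=400.0, focal_px=2000.0)
print(err_px, err_mm)  # prints "20.0 4.0"
```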