Optical Aberration Calibration and Correction of Photographic System Based on Wavefront Coding

Abstract

1. Introduction

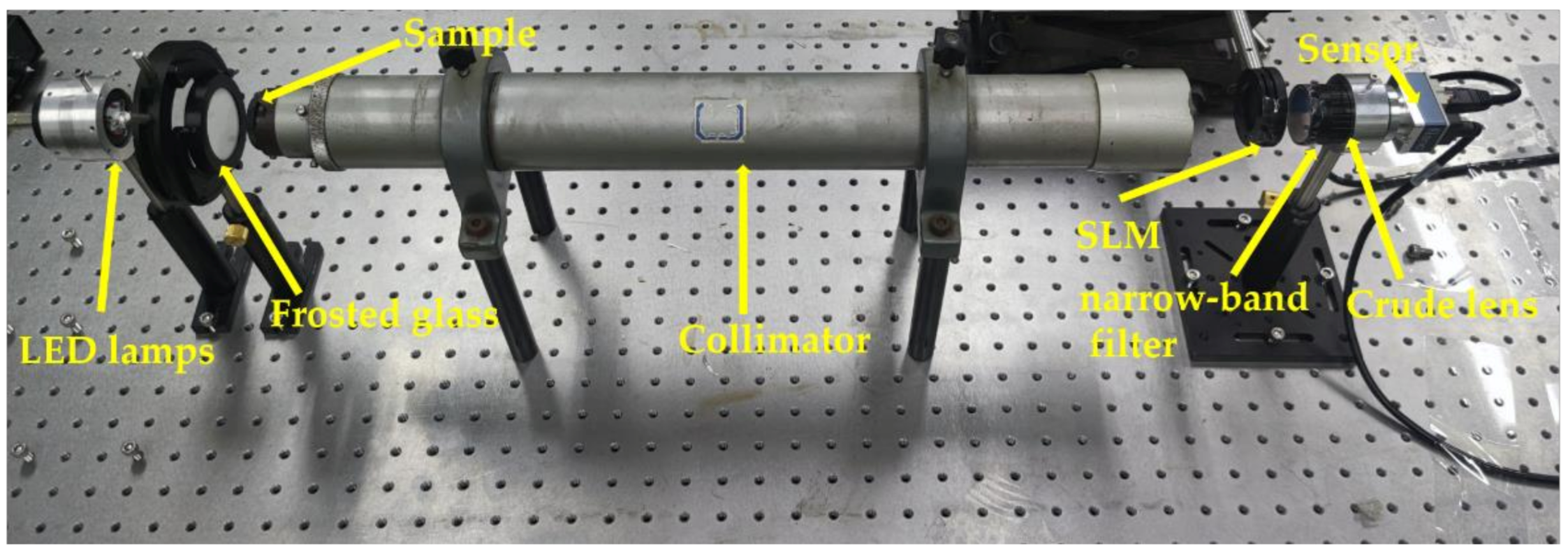

2. Materials and Methods

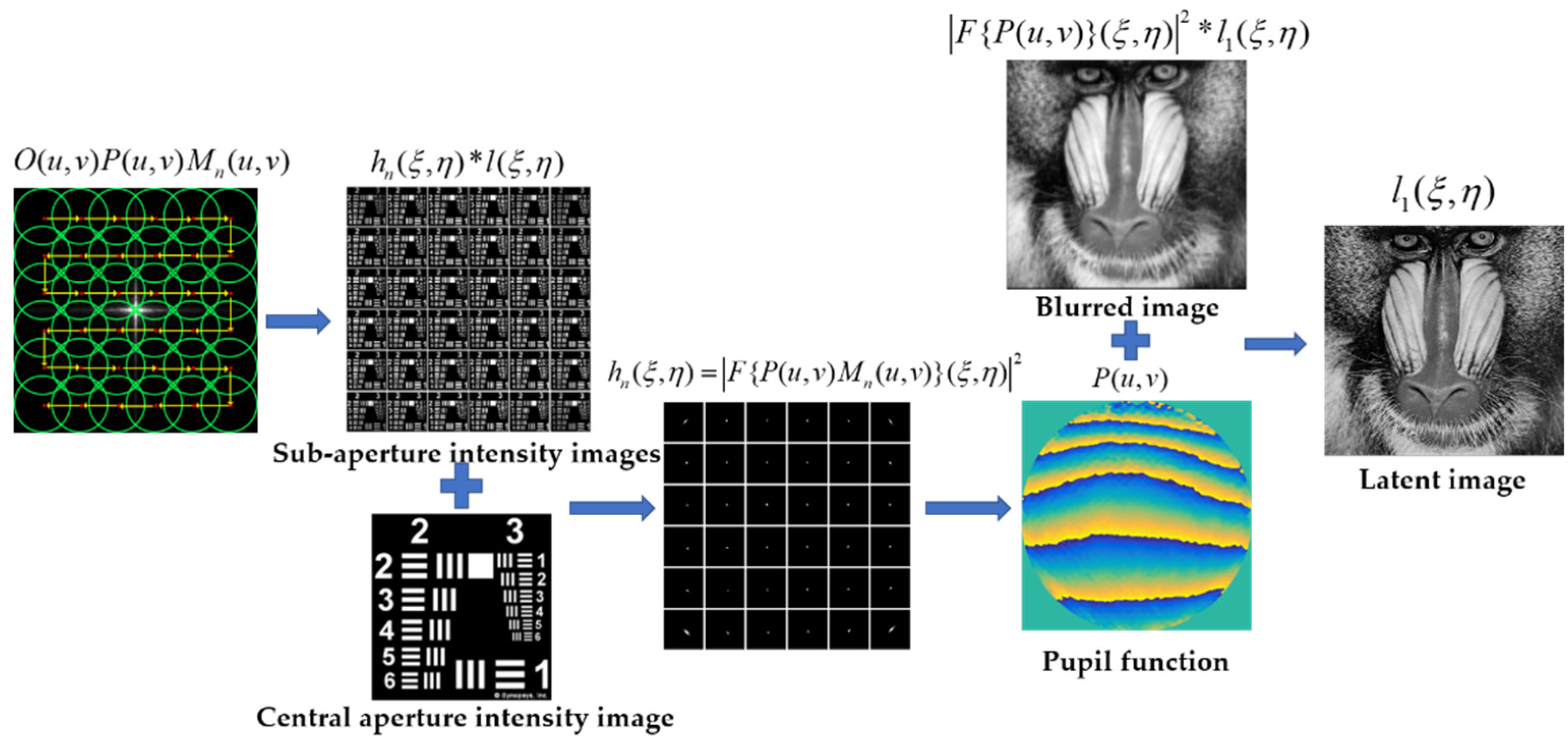

2.1. Local Aberration Recovery

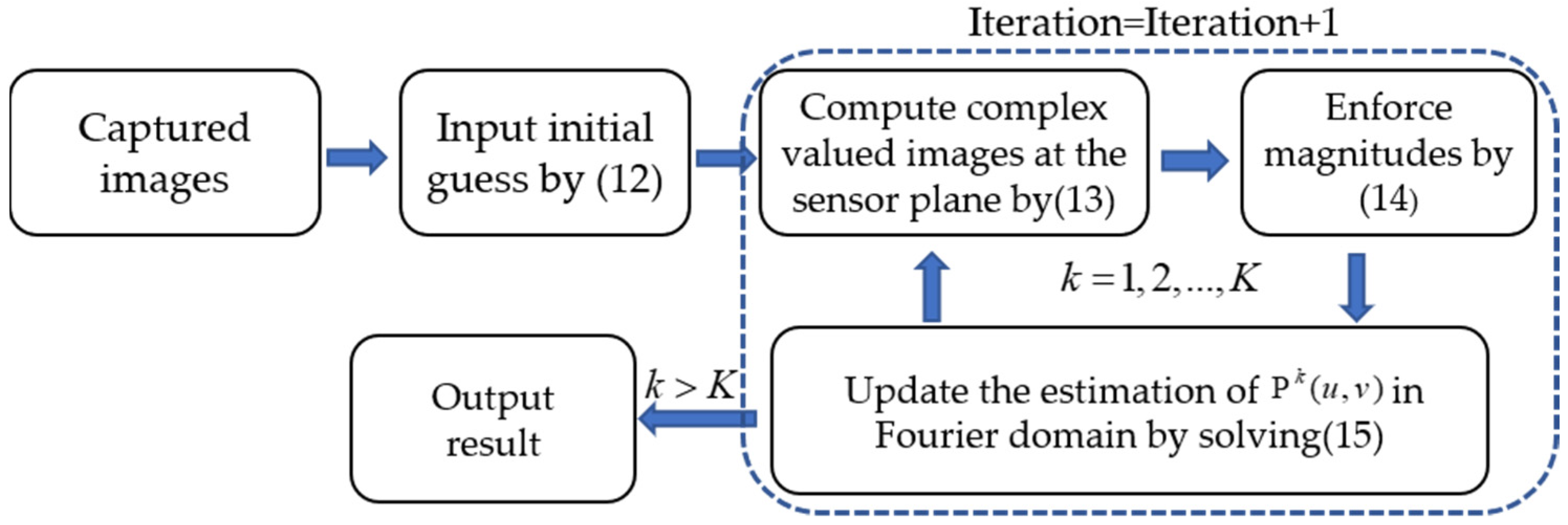

2.2. Pupil Function Reconstruction

2.3. Image Deconvolution

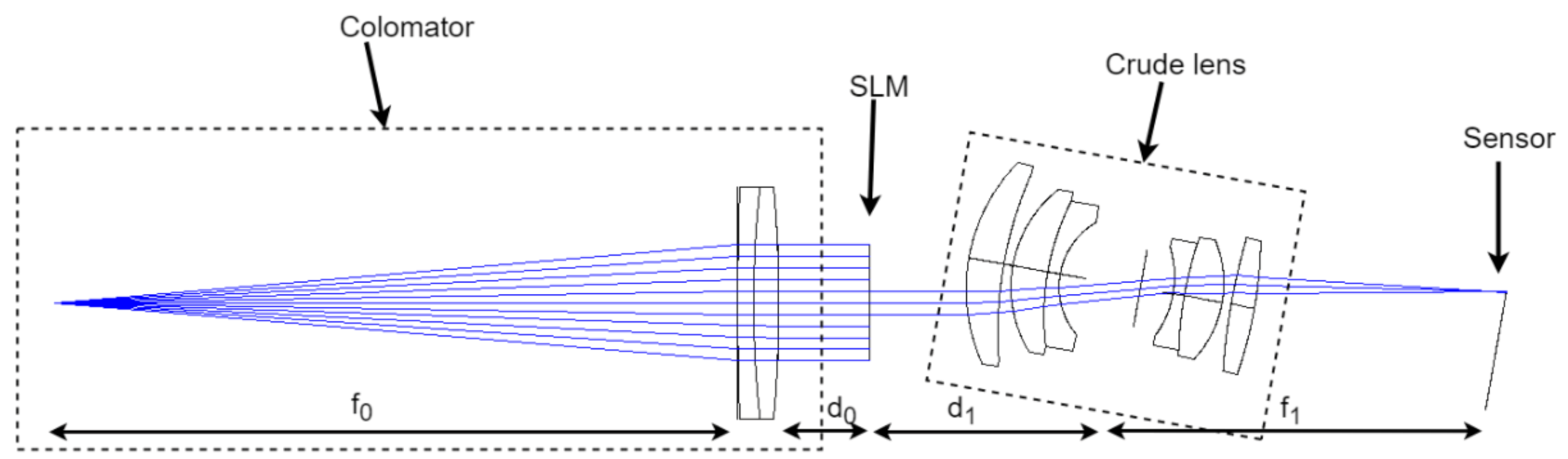

3. Experiments and Results

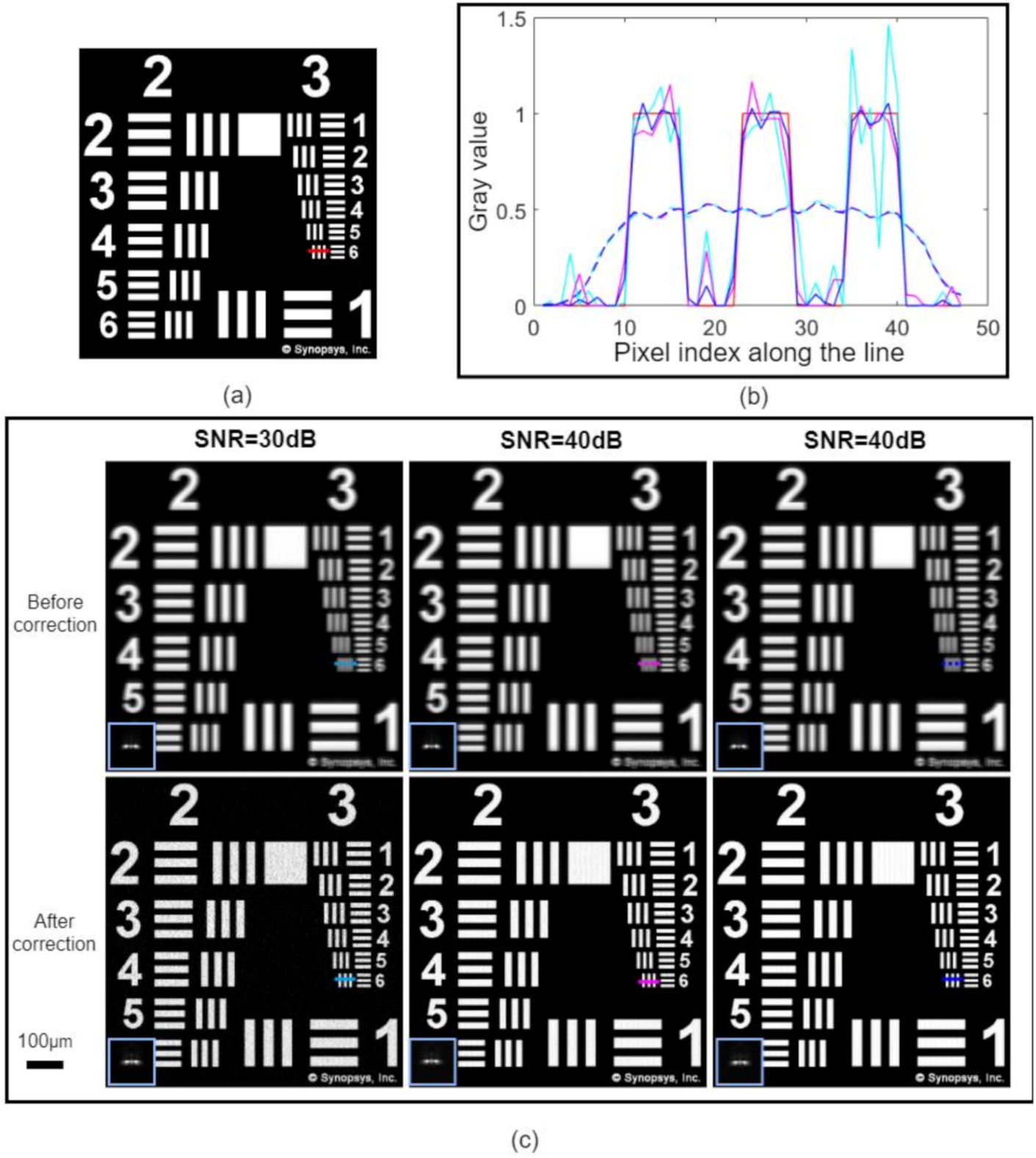

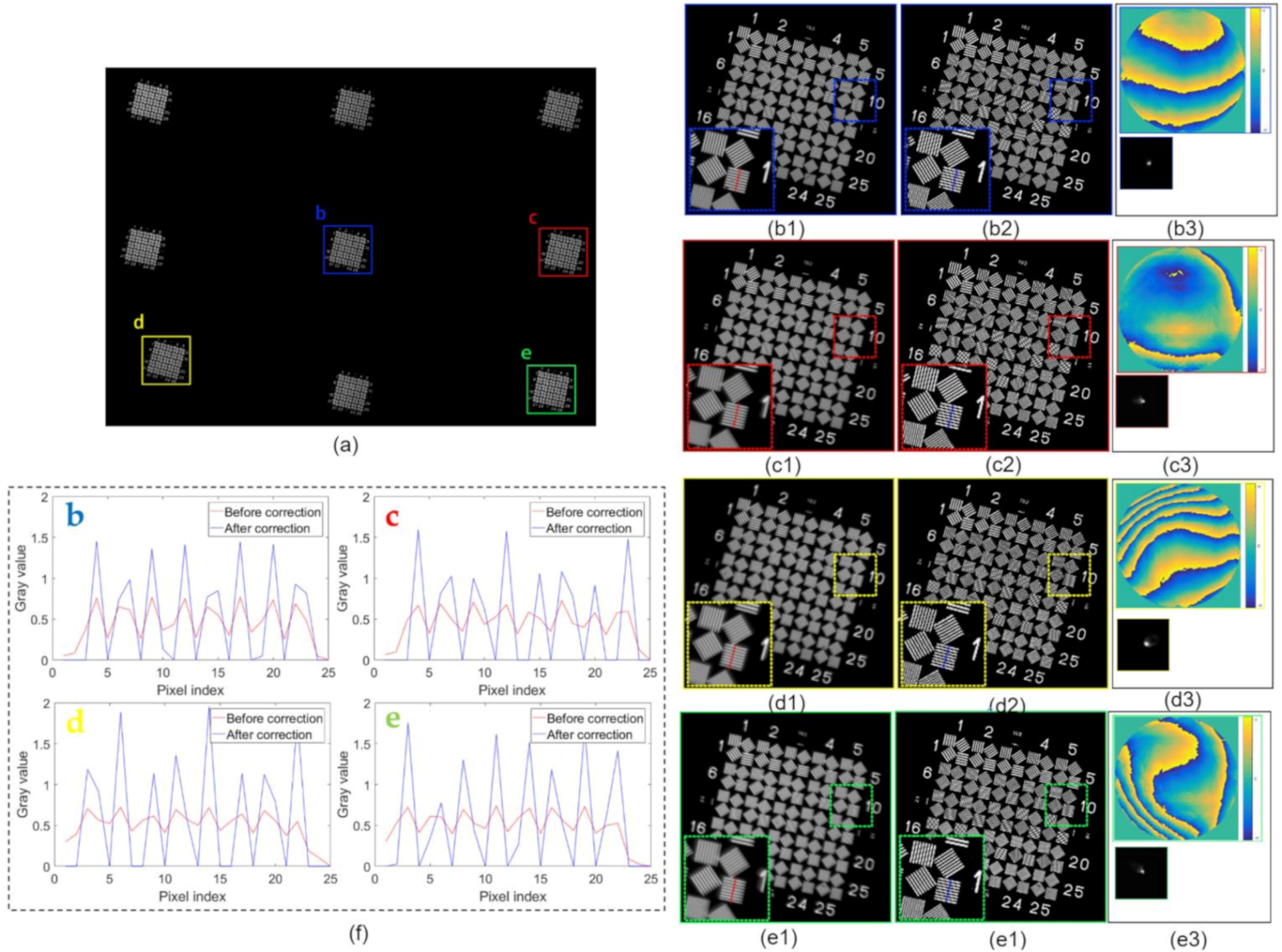

3.1. Simulated Data Experiments

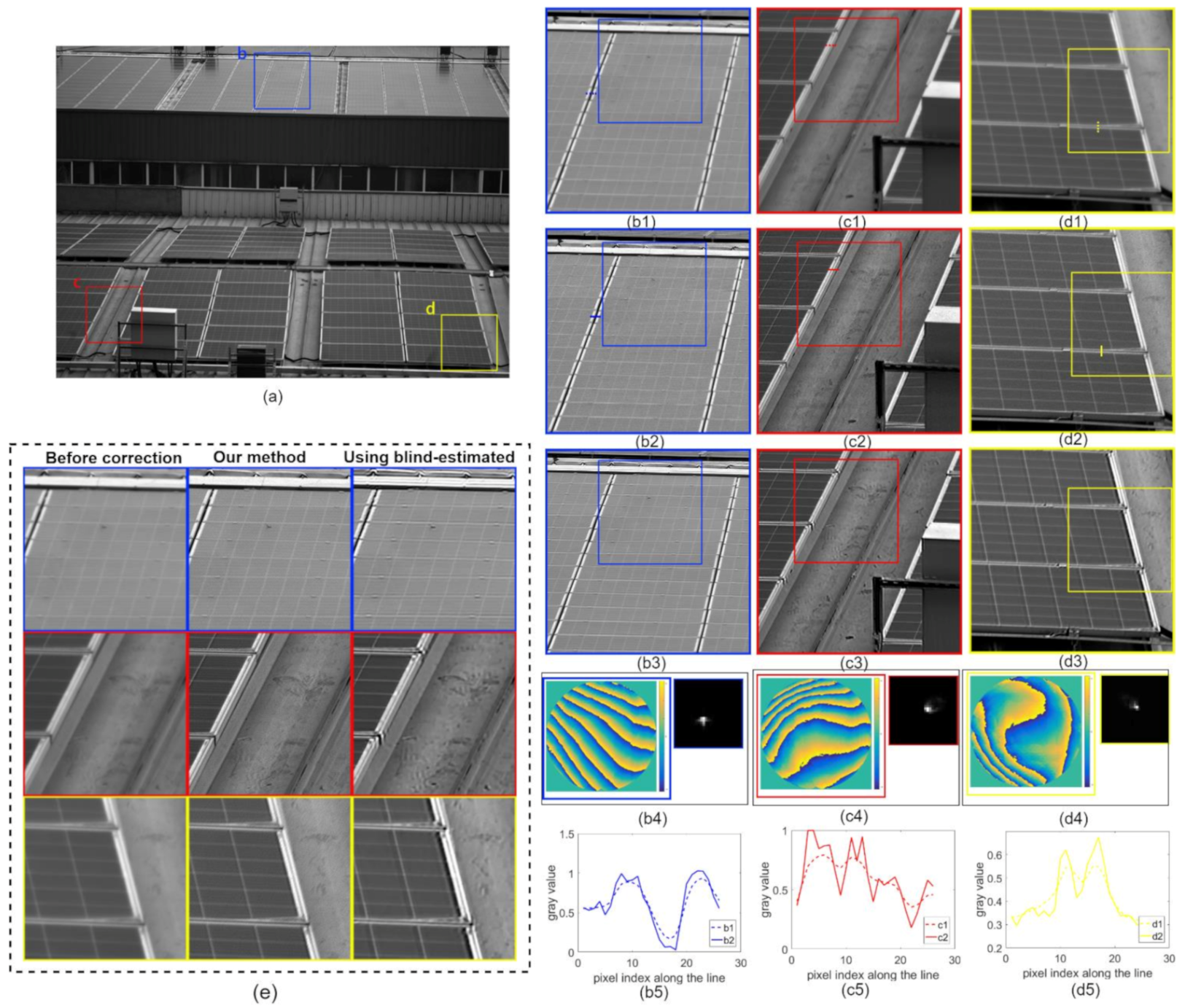

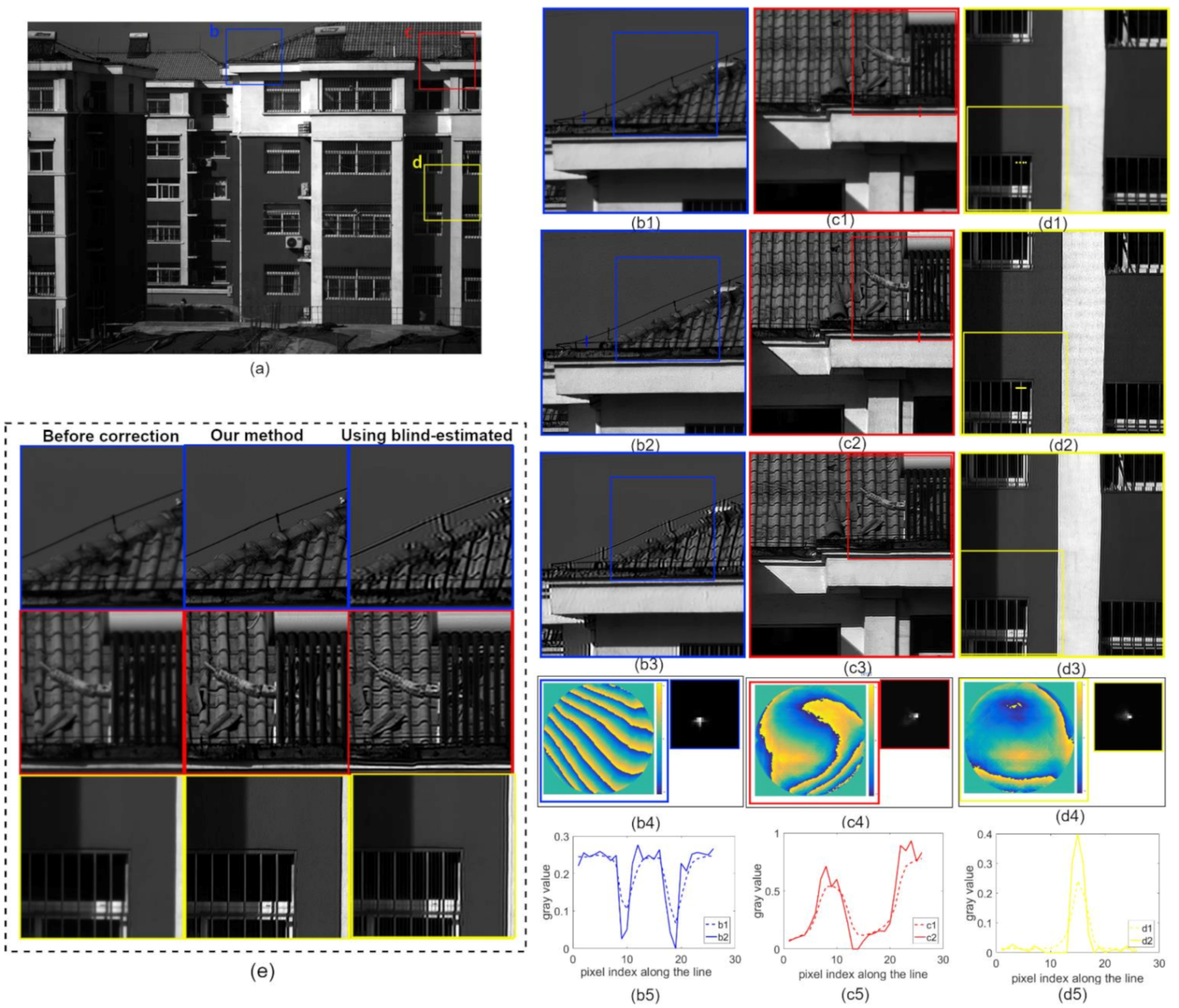

3.2. Real Data Experiments

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Index | Images | SNR = 30 dB | SNR = 40 dB | SNR = 50 dB |
|---|---|---|---|---|
| PSNR | Before correction | 15.5182 | 15.5333 | 15.5348 |
| PSNR | After correction | 17.6292 | 25.8131 | 29.6136 |
| SSIM | Before correction | 0.5558 | 0.6056 | 0.6110 |
| SSIM | After correction | 0.5621 | 0.7932 | 0.9164 |

| Index | Images | b | c | d | e |
|---|---|---|---|---|---|
| CV | Before correction | 1.5536 | 1.3910 | 1.3207 | 1.3313 |
| CV | After correction | 2.2419 | 2.2611 | 2.3022 | 2.1944 |
| MRD | Before correction | 1.2810 | 1.2101 | 1.1657 | 1.1679 |
| MRD | After correction | 1.5678 | 1.5711 | 1.5651 | 1.5513 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yao, C.; Shen, Y. Optical Aberration Calibration and Correction of Photographic System Based on Wavefront Coding. Sensors 2021, 21, 4011. https://doi.org/10.3390/s21124011
